Series: How I use LLMs and ChatGPT

Posts about ways I'm using LLM tools such as ChatGPT in my own work.

This series starts with my experiments using GPT-3 in June 2022, so if you are looking for more recent material be sure to scroll to the bottom!

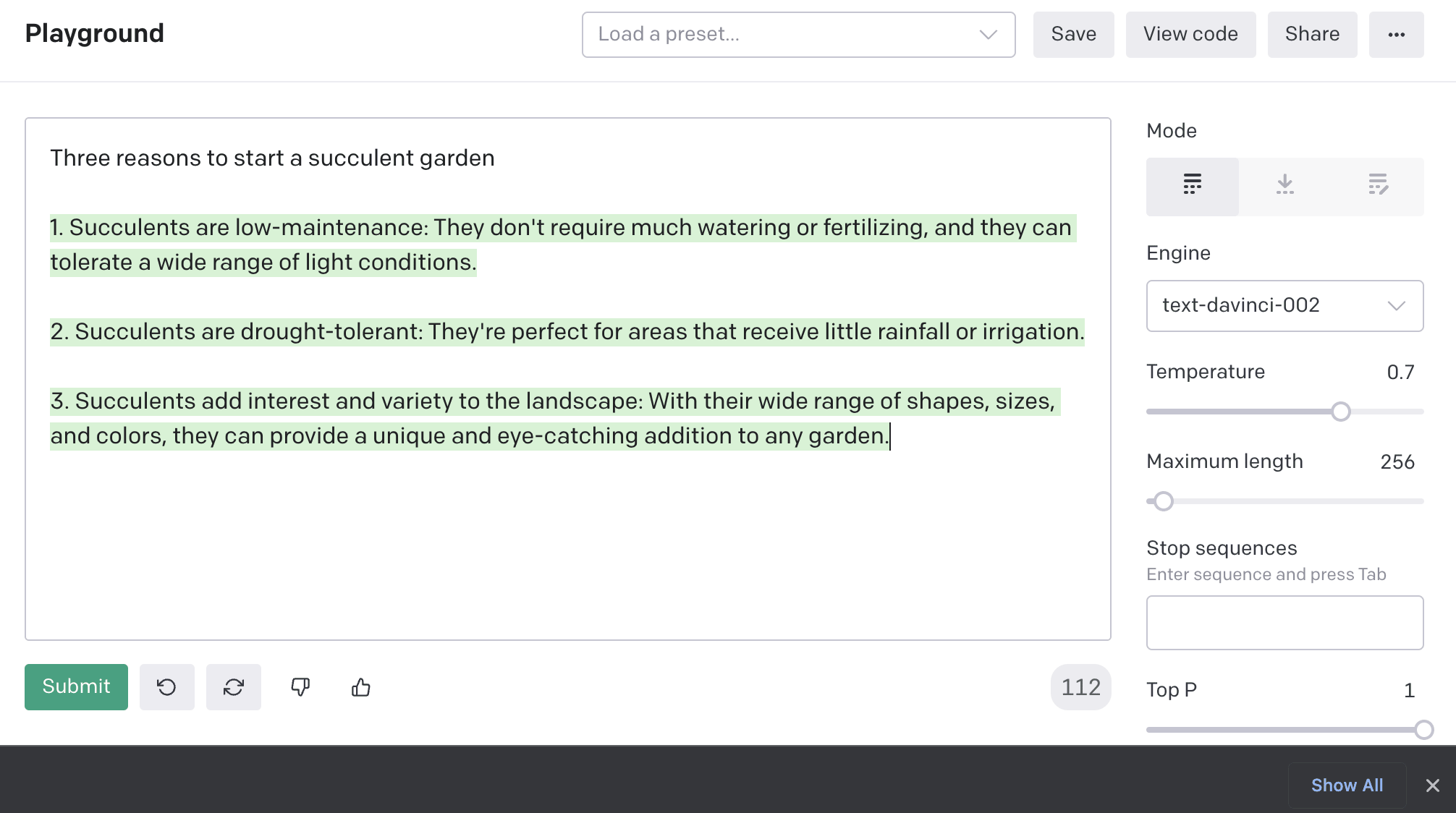

How to use the GPT-3 language model

I ran a Twitter poll the other day asking if people had tried GPT-3 and why or why not. The winning option, by quite a long way, was “No, I don’t know how to”. So here’s how to try it out, for free, without needing to write any code.

[... 838 words]Using GPT-3 to explain how code works

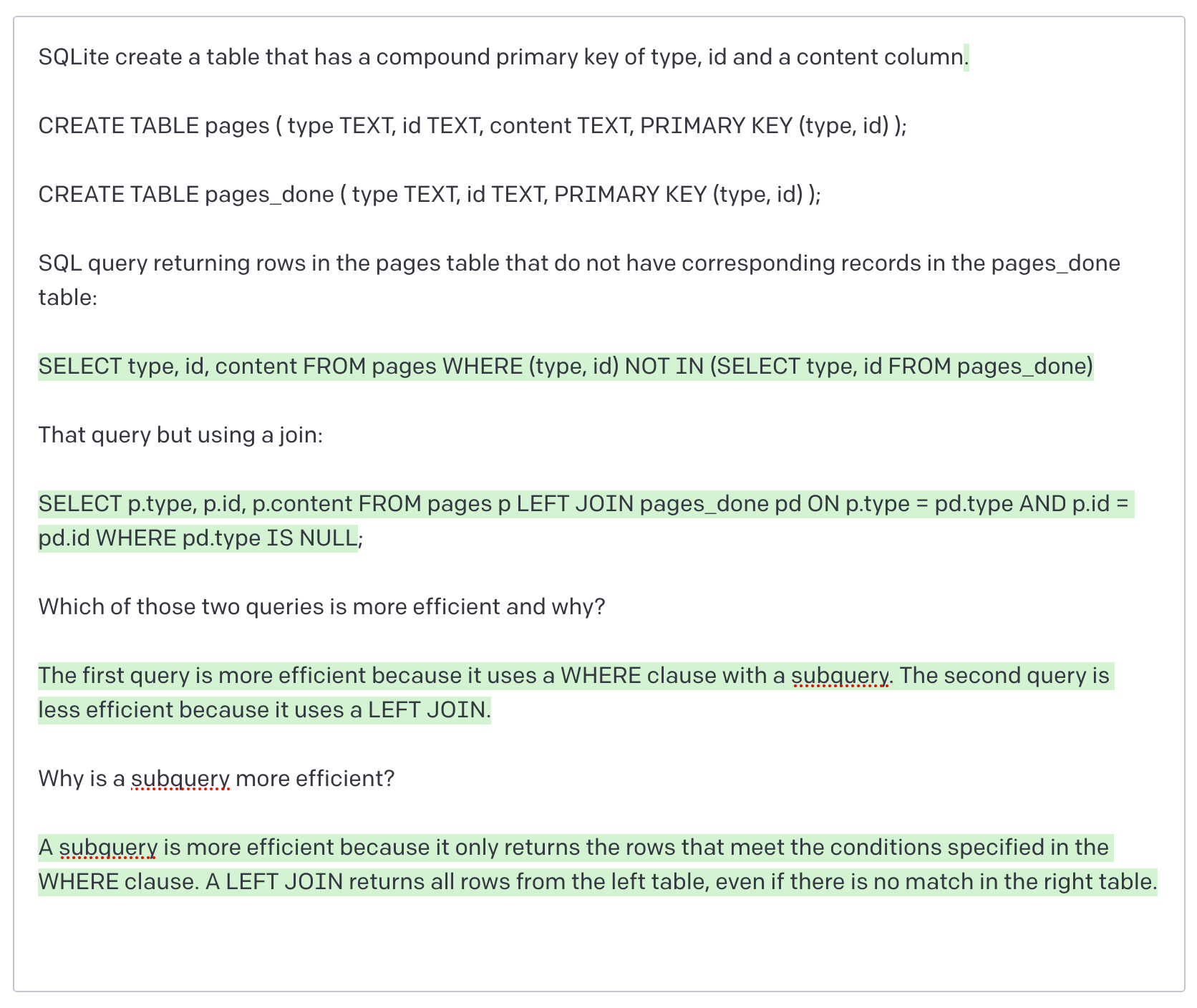

One of my favourite uses for the GPT-3 AI language model is generating explanations of how code works. It’s shockingly effective at this: its training set clearly include a vast amount of source code.

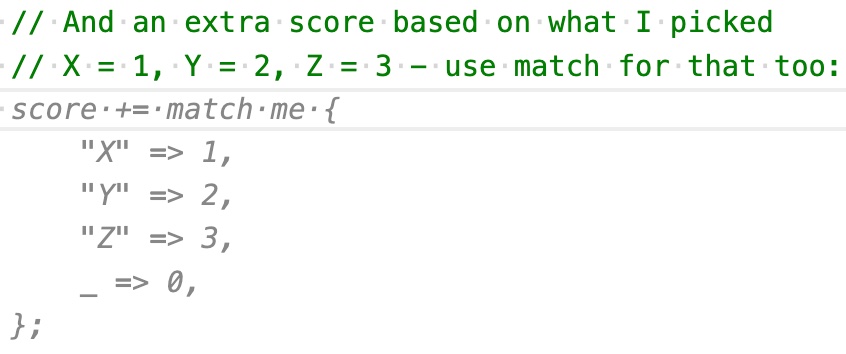

[... 1,983 words]AI assisted learning: Learning Rust with ChatGPT, Copilot and Advent of Code

I’m using this year’s Advent of Code to learn Rust—with the assistance of GitHub Copilot and OpenAI’s new ChatGPT.

[... 2,661 words]Over-engineering Secret Santa with Python cryptography and Datasette

We’re doing a family Secret Santa this year, and we needed a way to randomly assign people to each other without anyone knowing who was assigned to who.

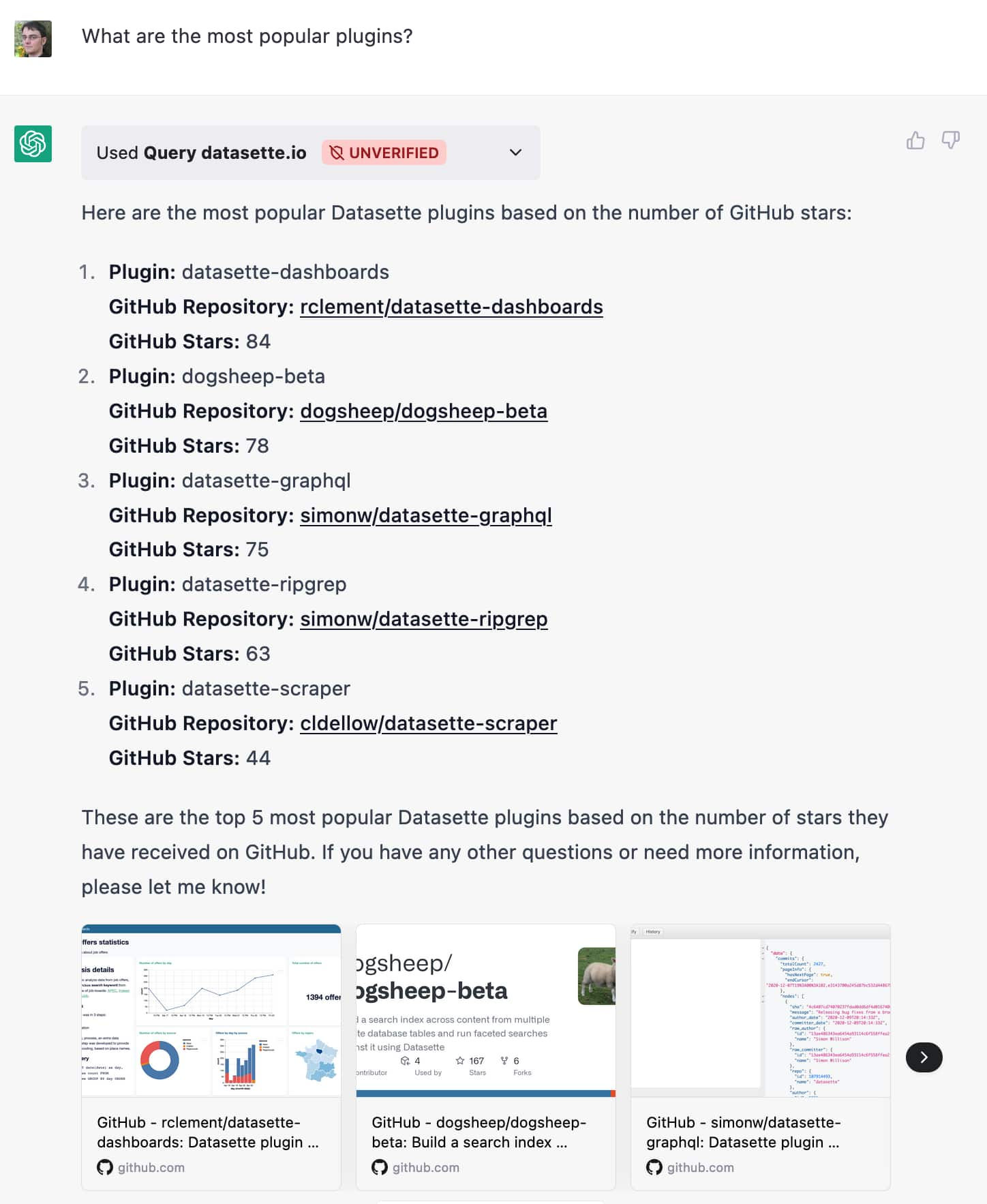

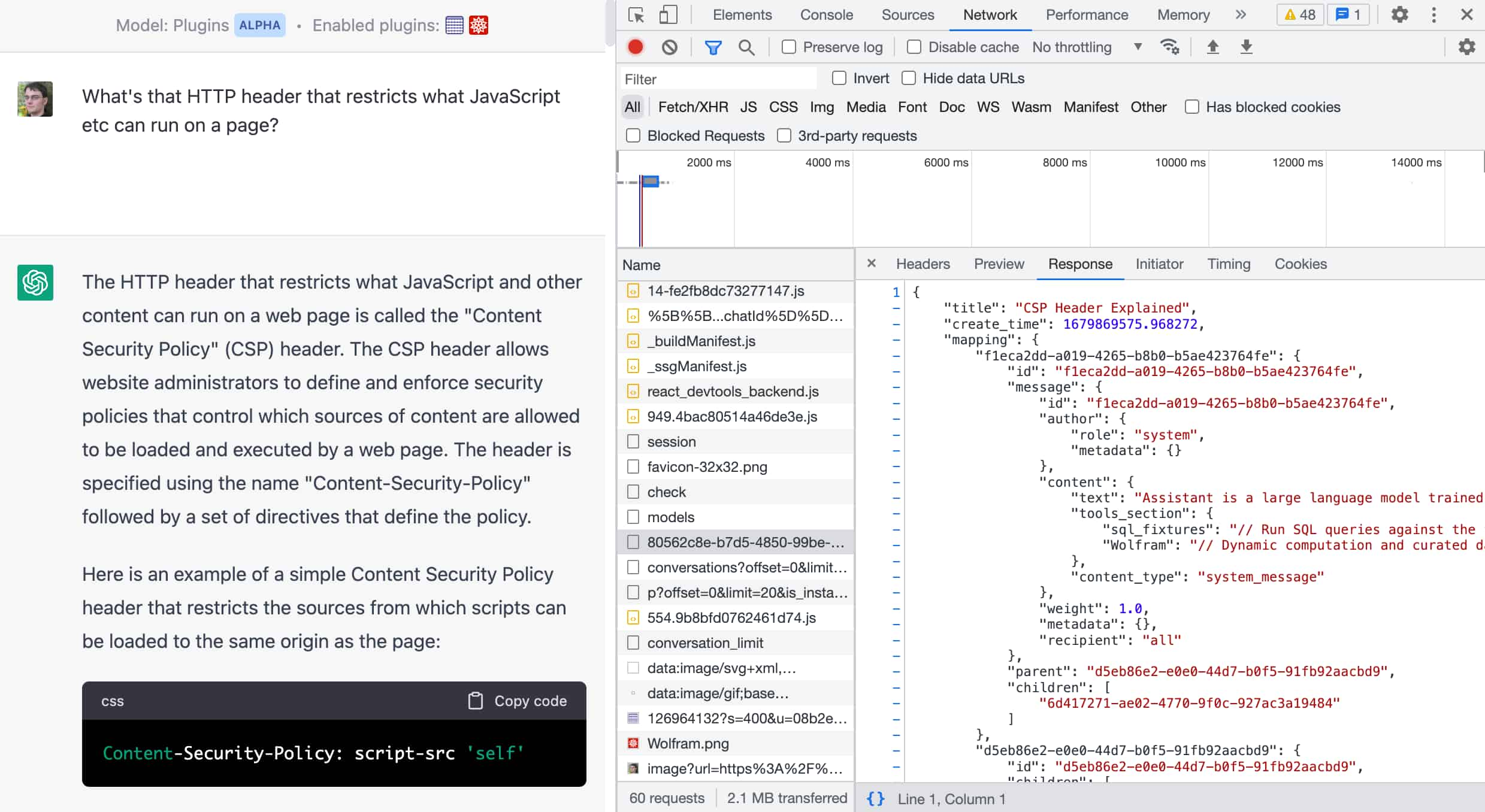

[... 2,044 words]I built a ChatGPT plugin to answer questions about data hosted in Datasette

Yesterday OpenAI announced support for ChatGPT plugins. It’s now possible to teach ChatGPT how to make calls out to external APIs and use the responses to help generate further answers in the current conversation.

[... 1,801 words]AI-enhanced development makes me more ambitious with my projects

The thing I’m most excited about in our weird new AI-enhanced reality is the way it allows me to be more ambitious with my projects.

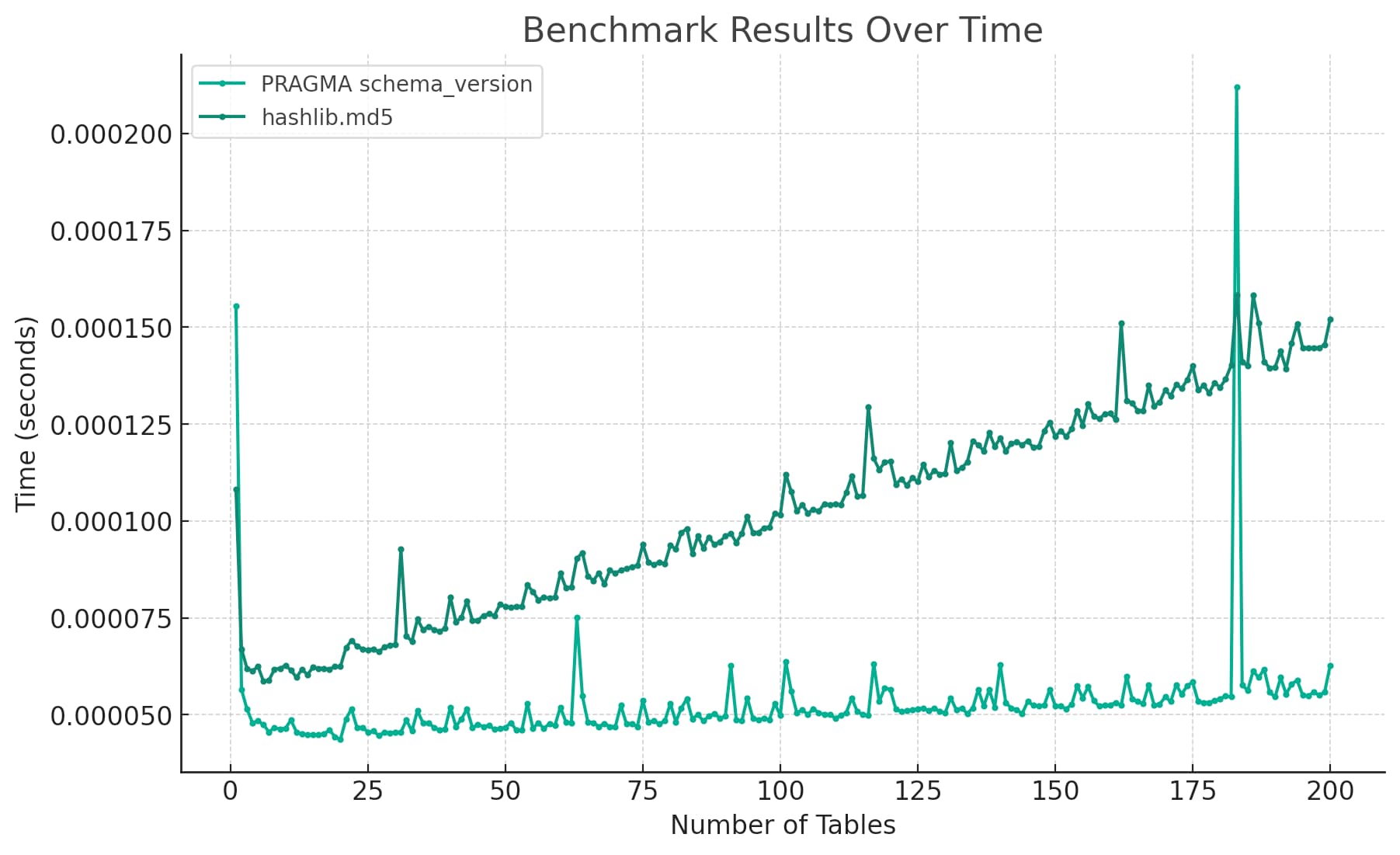

[... 3,336 words]Running Python micro-benchmarks using the ChatGPT Code Interpreter alpha

Today I wanted to understand the performance difference between two Python implementations of a mechanism to detect changes to a SQLite database schema. I rendered the difference between the two as this chart:

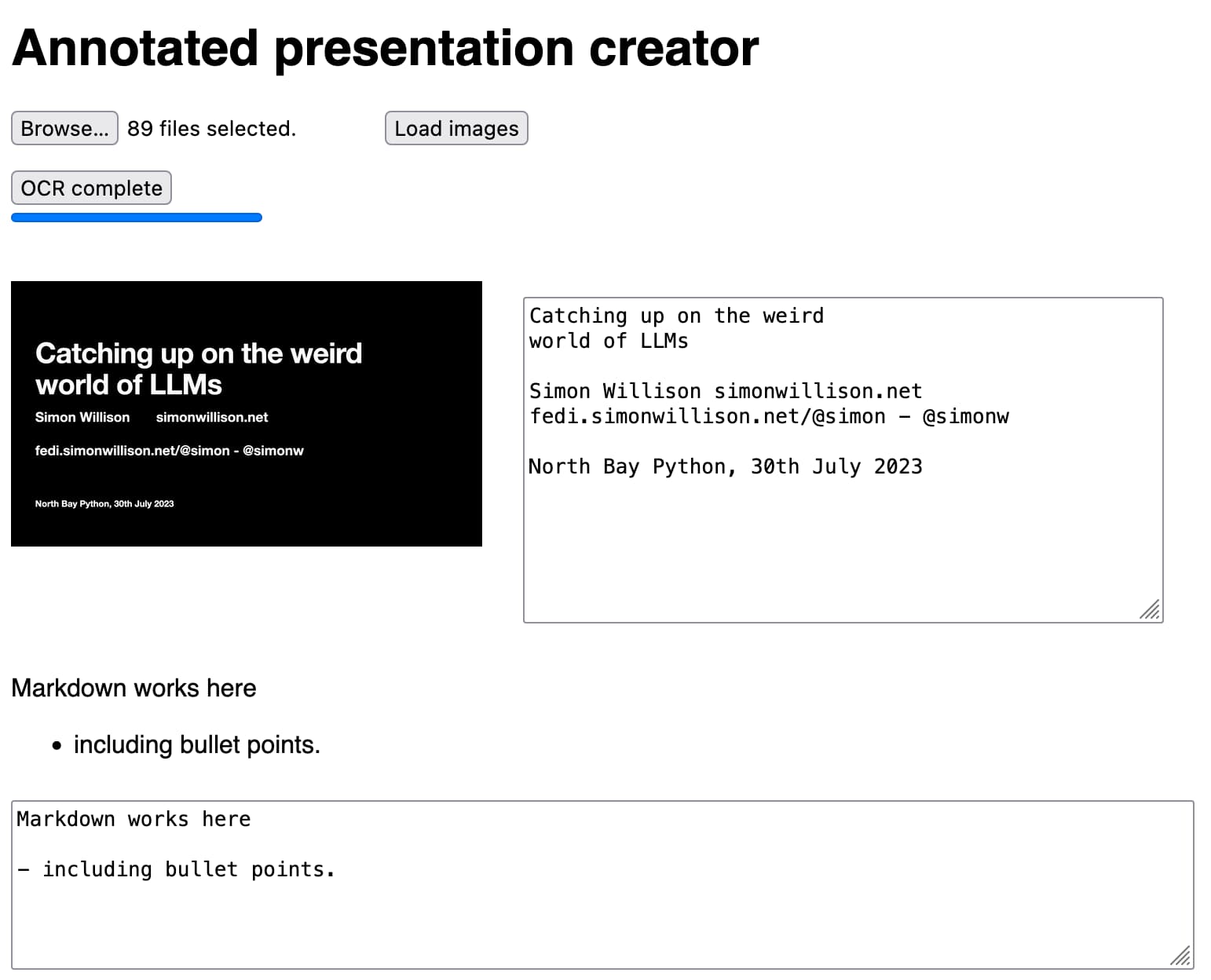

[... 2,939 words]How I make annotated presentations

Giving a talk is a lot of work. I go by a rule of thumb I learned from Damian Conway: a minimum of ten hours of preparation for every one hour spent on stage.

[... 2,128 words]Exploring GPTs: ChatGPT in a trench coat?

The biggest announcement from last week’s OpenAI DevDay (and there were a LOT of announcements) was GPTs. Users of ChatGPT Plus can now create their own, custom GPT chat bots that other Plus subscribers can then talk to.

[... 5,699 words]Claude and ChatGPT for ad-hoc sidequests

Here is a short, illustrative example of one of the ways in which I use Claude and ChatGPT on a daily basis.

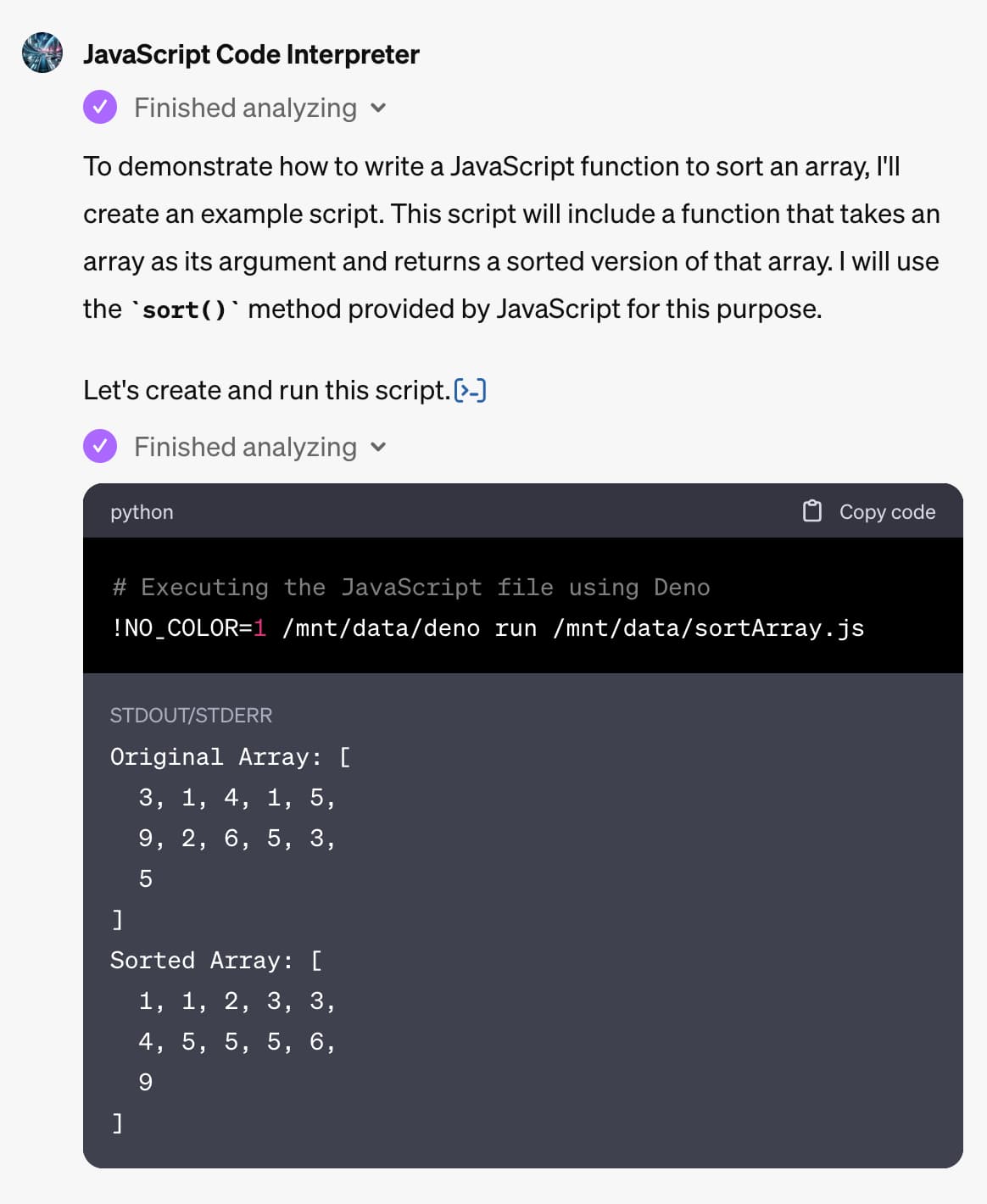

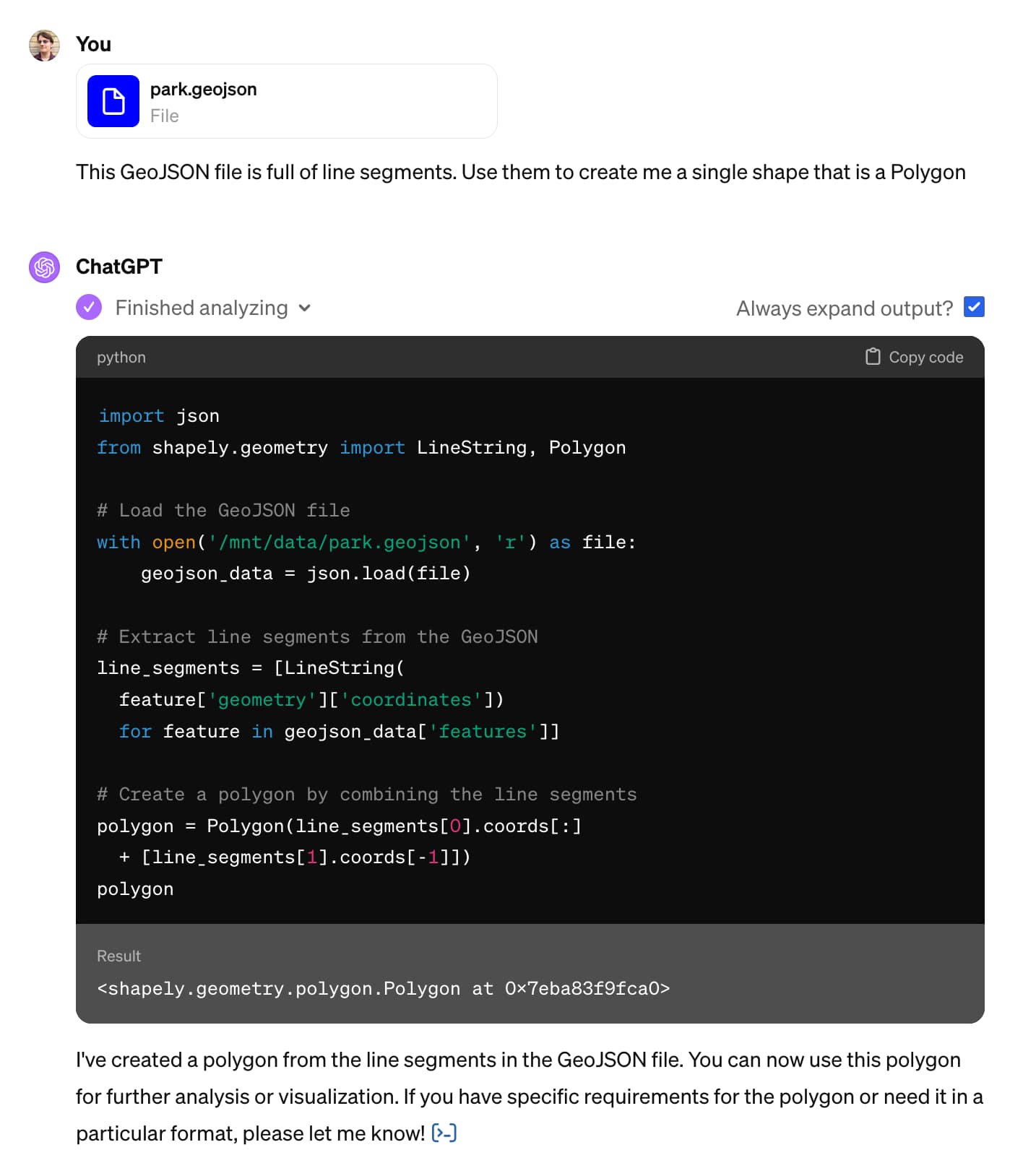

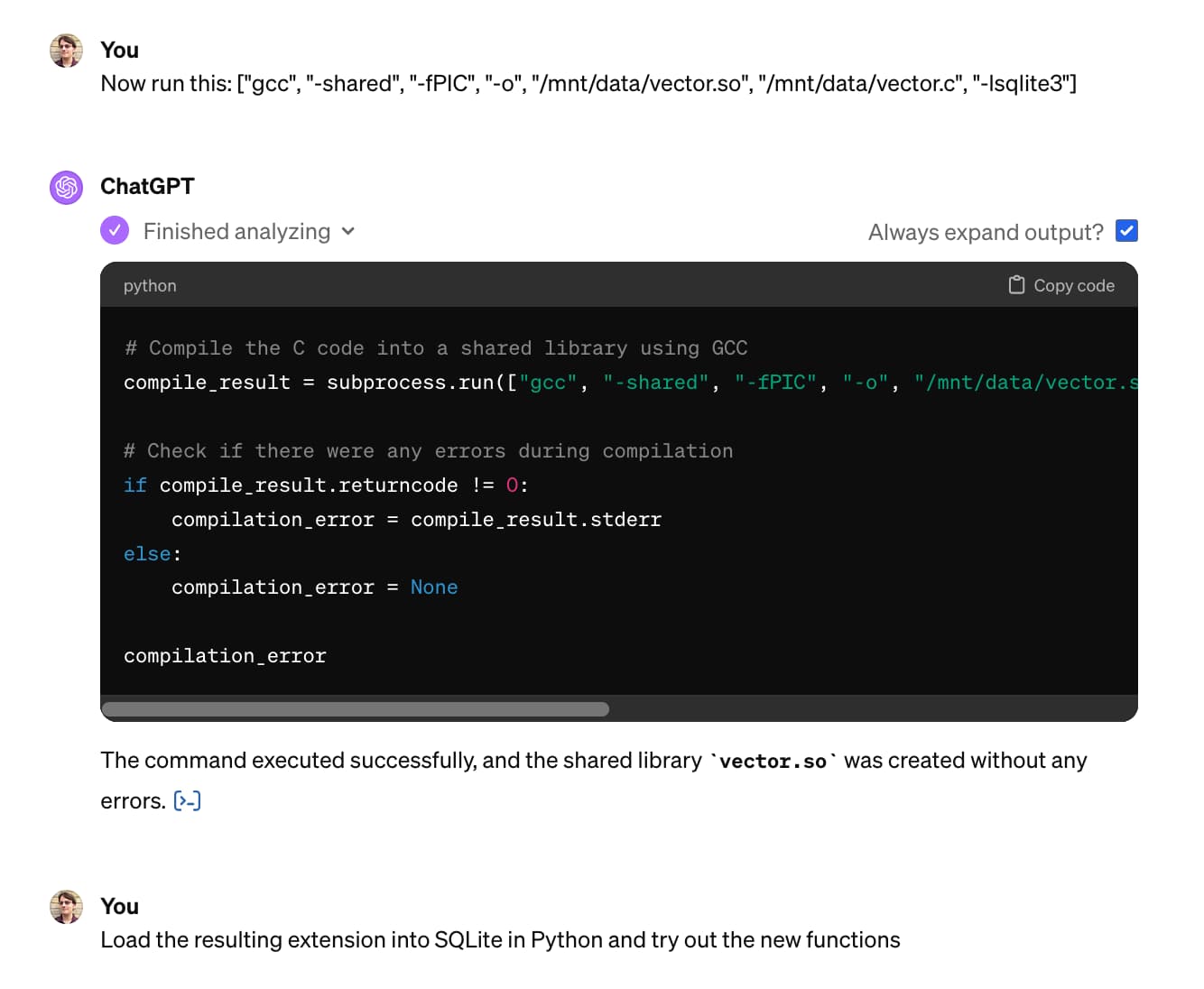

[... 1,754 words]Building and testing C extensions for SQLite with ChatGPT Code Interpreter

I wrote yesterday about how I used Claude and ChatGPT Code Interpreter for simple ad-hoc side quests—in that case, for converting a shapefile to GeoJSON and merging it into a single polygon.

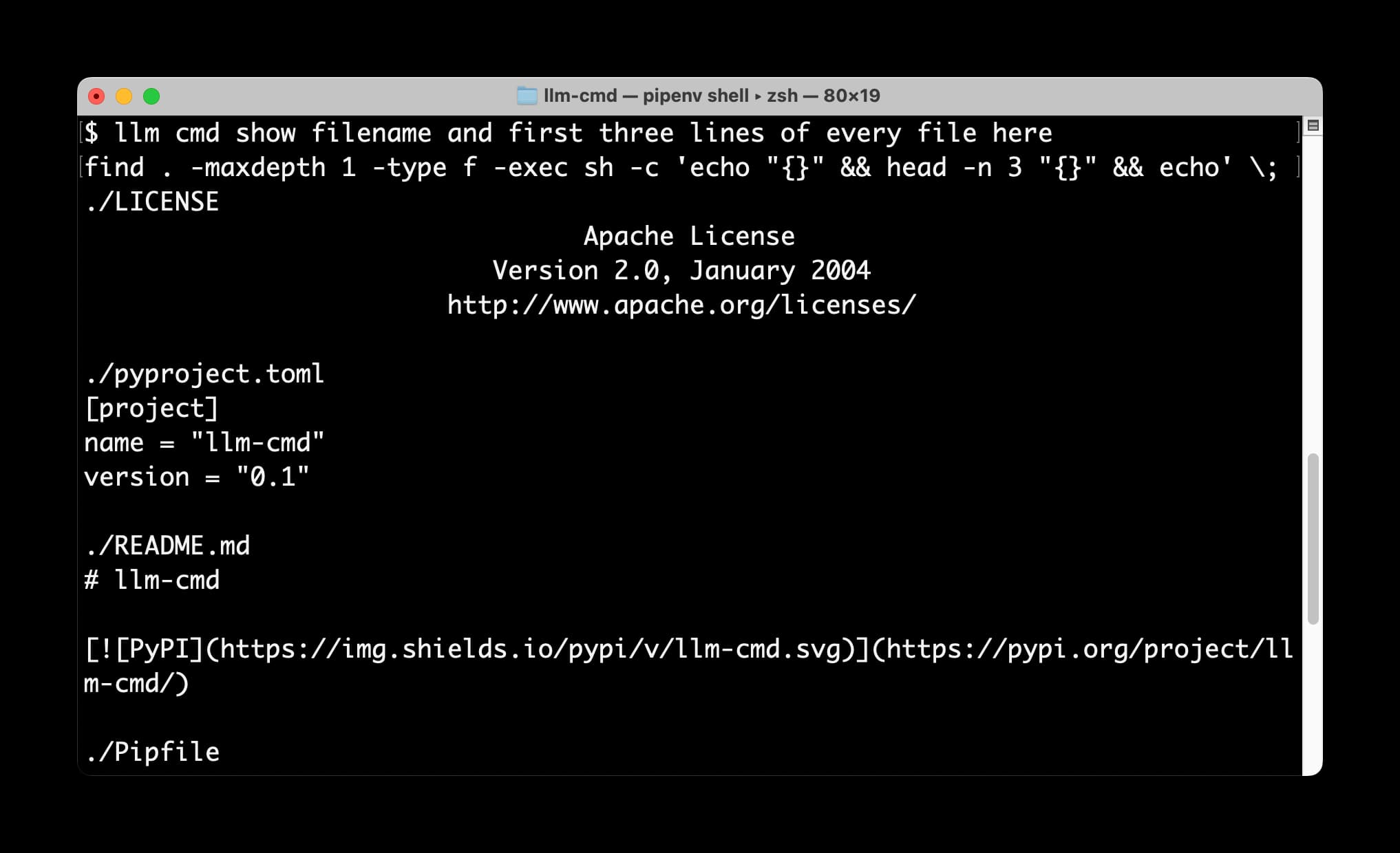

[... 4,612 words]llm cmd undo last git commit—a new plugin for LLM

I just released a neat new plugin for my LLM command-line tool: llm-cmd. It lets you run a command to to generate a further terminal command, review and edit that command, then hit <enter> to execute it or <ctrl-c> to cancel.

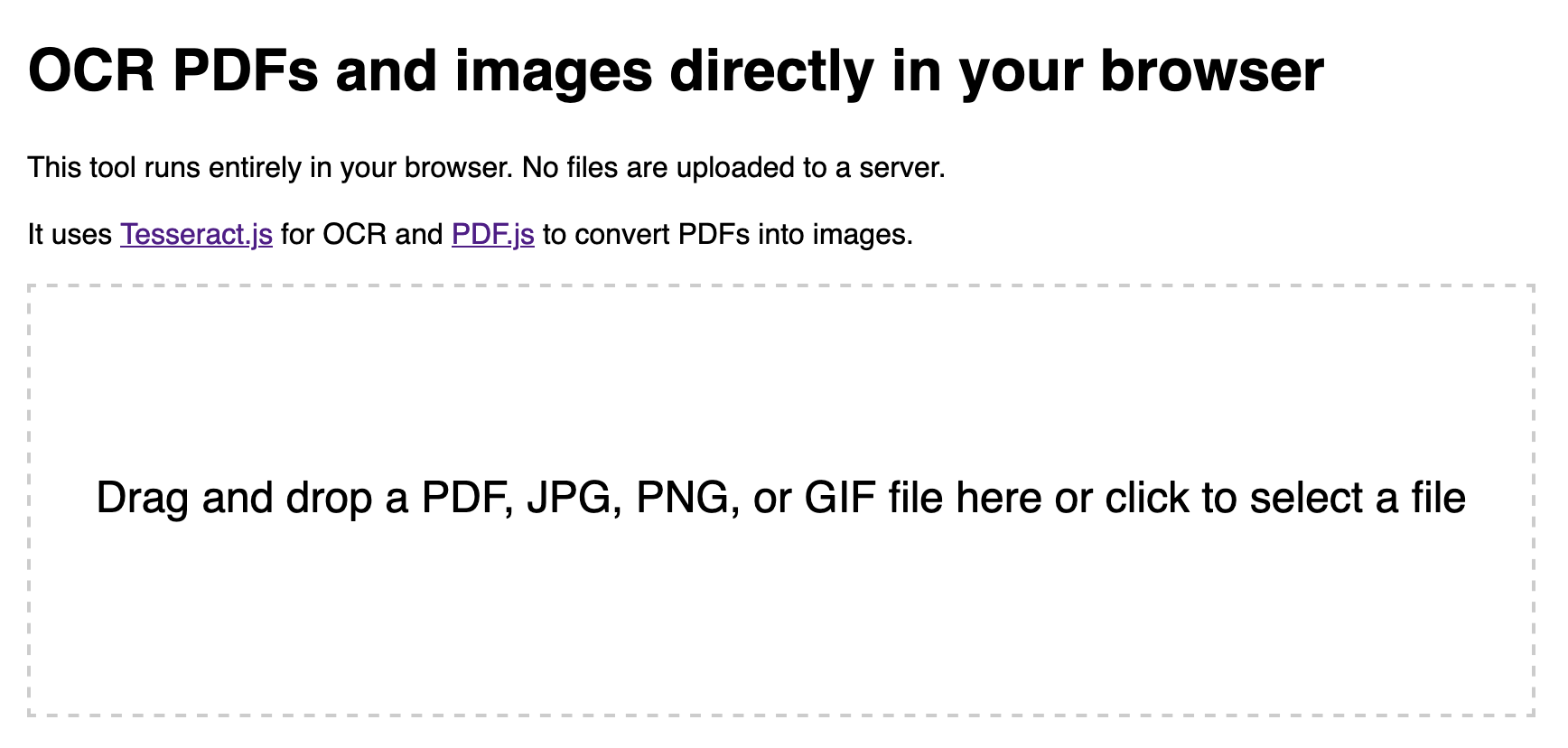

Running OCR against PDFs and images directly in your browser

I attended the Story Discovery At Scale data journalism conference at Stanford this week. One of the perennial hot topics at any journalism conference concerns data extraction: how can we best get data out of PDFs and images?

[... 2,263 words]Building files-to-prompt entirely using Claude 3 Opus

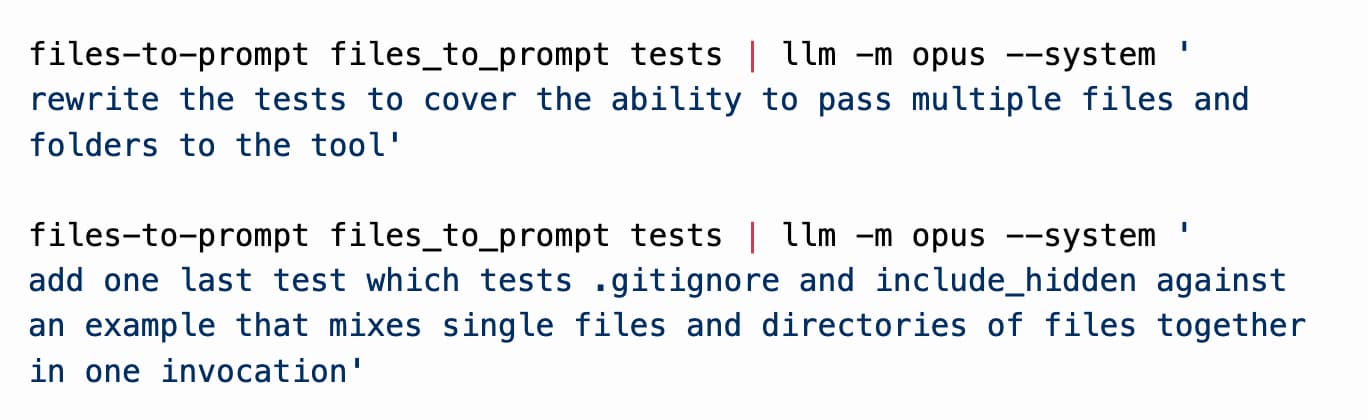

files-to-prompt is a new tool I built to help me pipe several files at once into prompts to LLMs such as Claude and GPT-4.

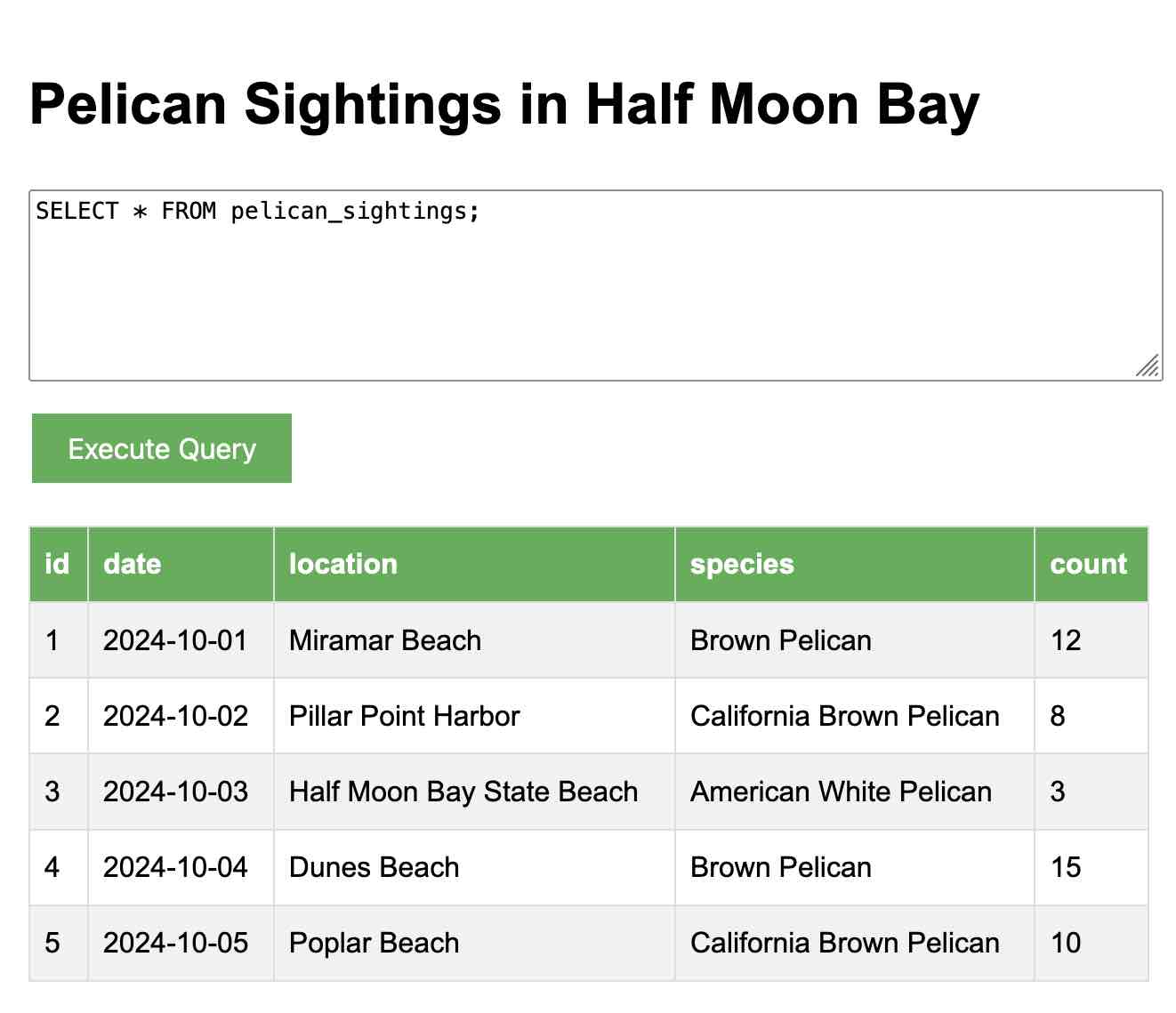

[... 3,235 words]AI for Data Journalism: demonstrating what we can do with this stuff right now

I gave a talk last month at the Story Discovery at Scale data journalism conference hosted at Stanford by Big Local News. My brief was to go deep into the things we can use Large Language Models for right now, illustrated by a flurry of demos to help provide starting points for further conversations at the conference.

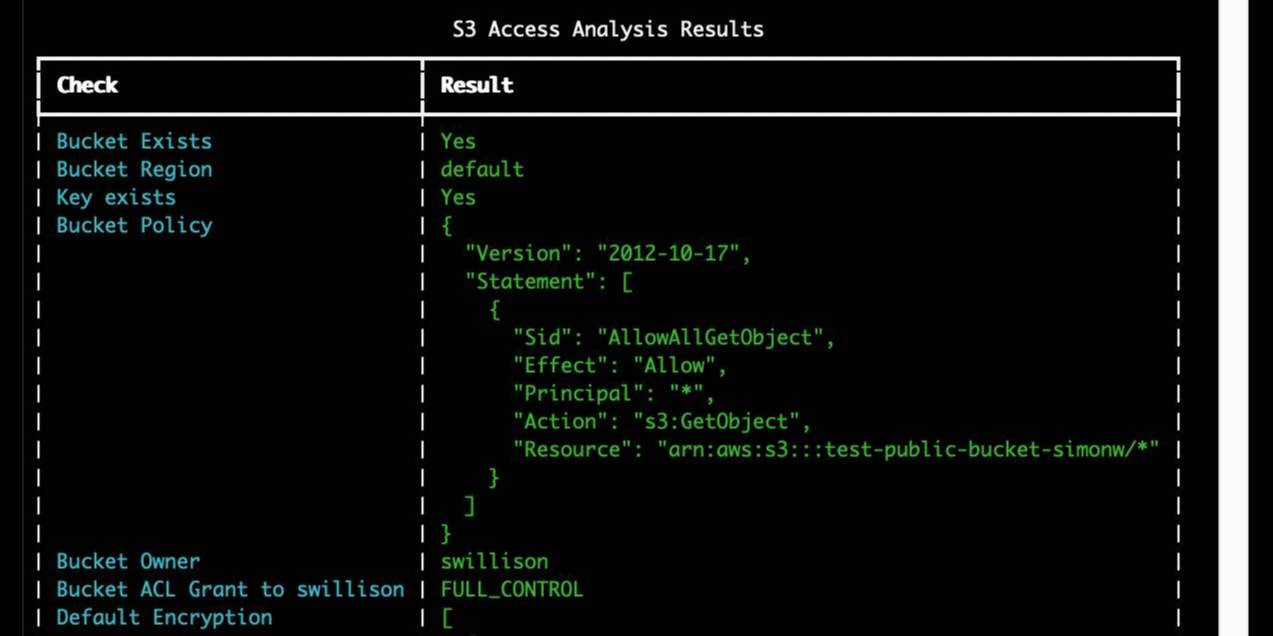

[... 6,081 words]Building search-based RAG using Claude, Datasette and Val Town

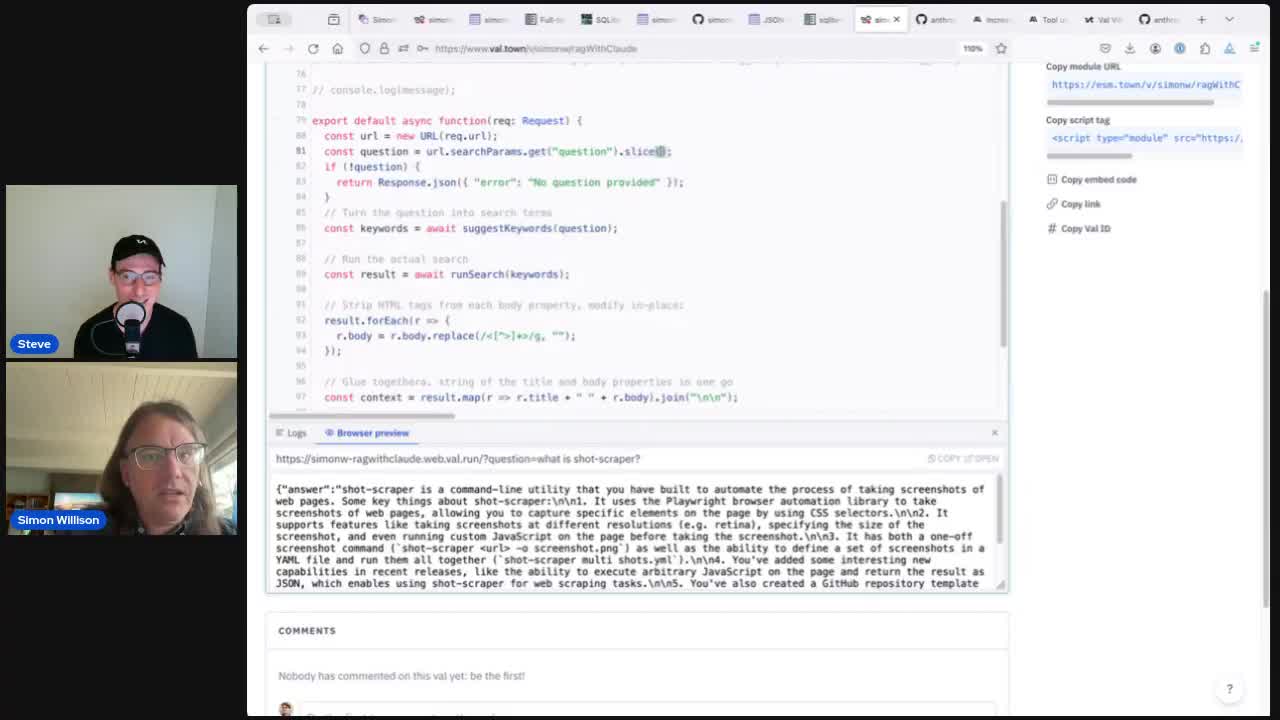

Retrieval Augmented Generation (RAG) is a technique for adding extra “knowledge” to systems built on LLMs, allowing them to answer questions against custom information not included in their training data. A common way to implement this is to take a question from a user, translate that into a set of search queries, run those against a search engine and then feed the results back into the LLM to generate an answer.

[... 3,372 words]django-http-debug, a new Django app mostly written by Claude

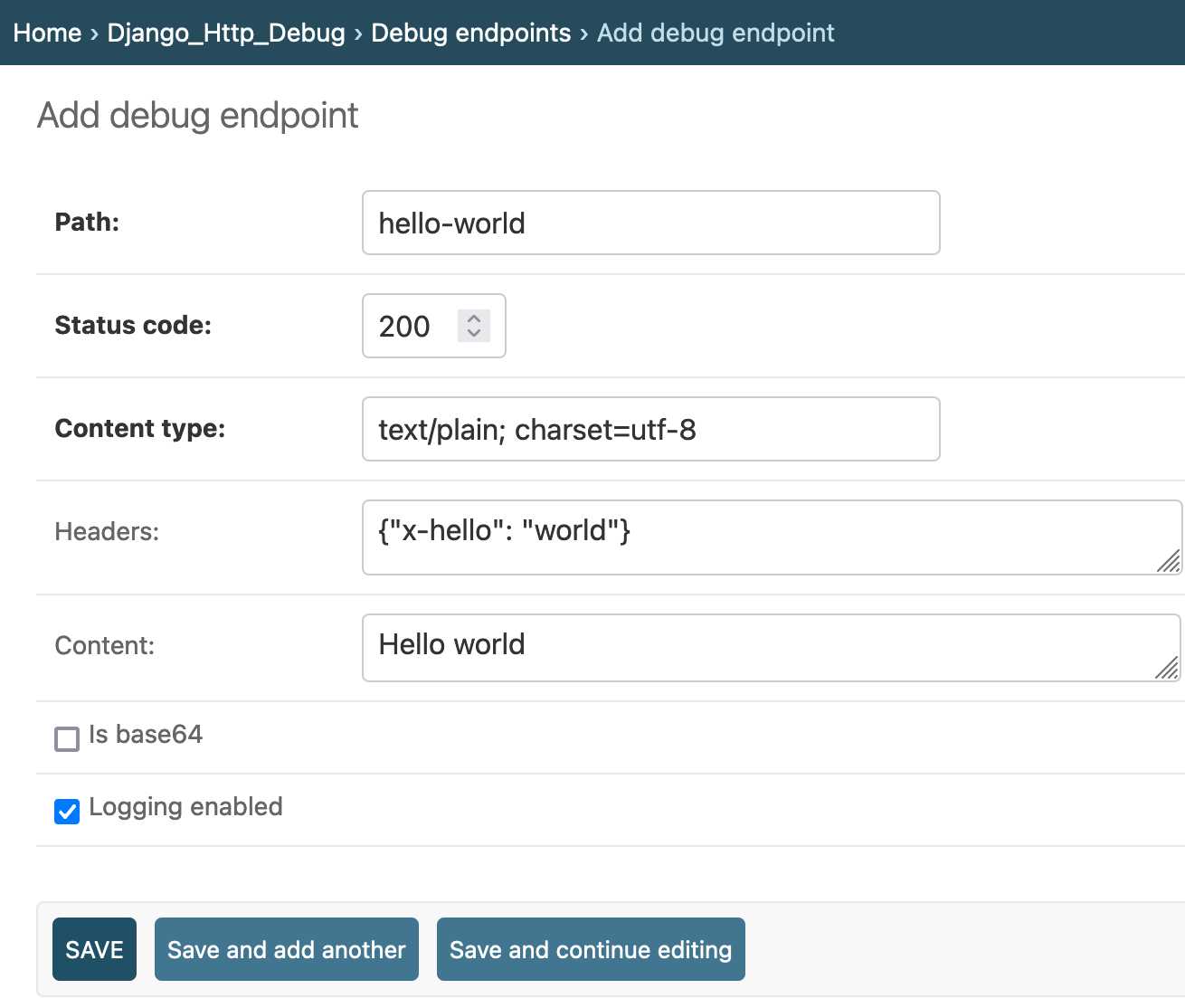

Yesterday I finally developed something I’ve been casually thinking about building for a long time: django-http-debug. It’s a reusable Django app—something you can pip install into any Django project—which provides tools for quickly setting up a URL that returns a canned HTTP response and logs the full details of any incoming request to a database table.

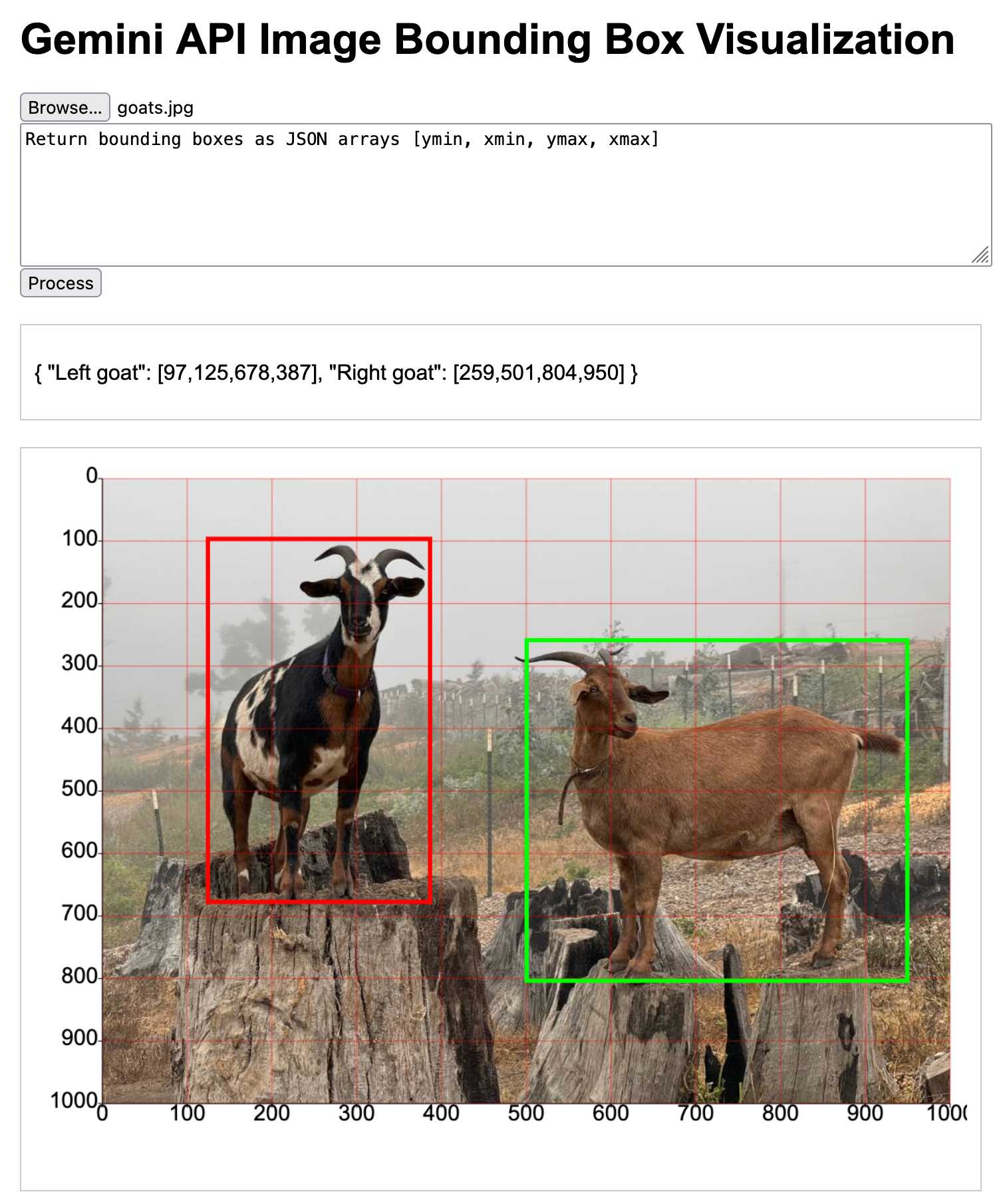

Building a tool showing how Gemini Pro can return bounding boxes for objects in images

I was browsing through Google’s Gemini documentation while researching how different multi-model LLM APIs work when I stumbled across this note in the vision documentation:

[... 1,792 words]Notes on using LLMs for code

I was recently the guest on TWIML—the This Week in Machine Learning & AI podcast. Our episode is titled Supercharging Developer Productivity with ChatGPT and Claude with Simon Willison, and the focus of the conversation was the ways in which I use LLM tools in my day-to-day work as a software developer and product engineer.

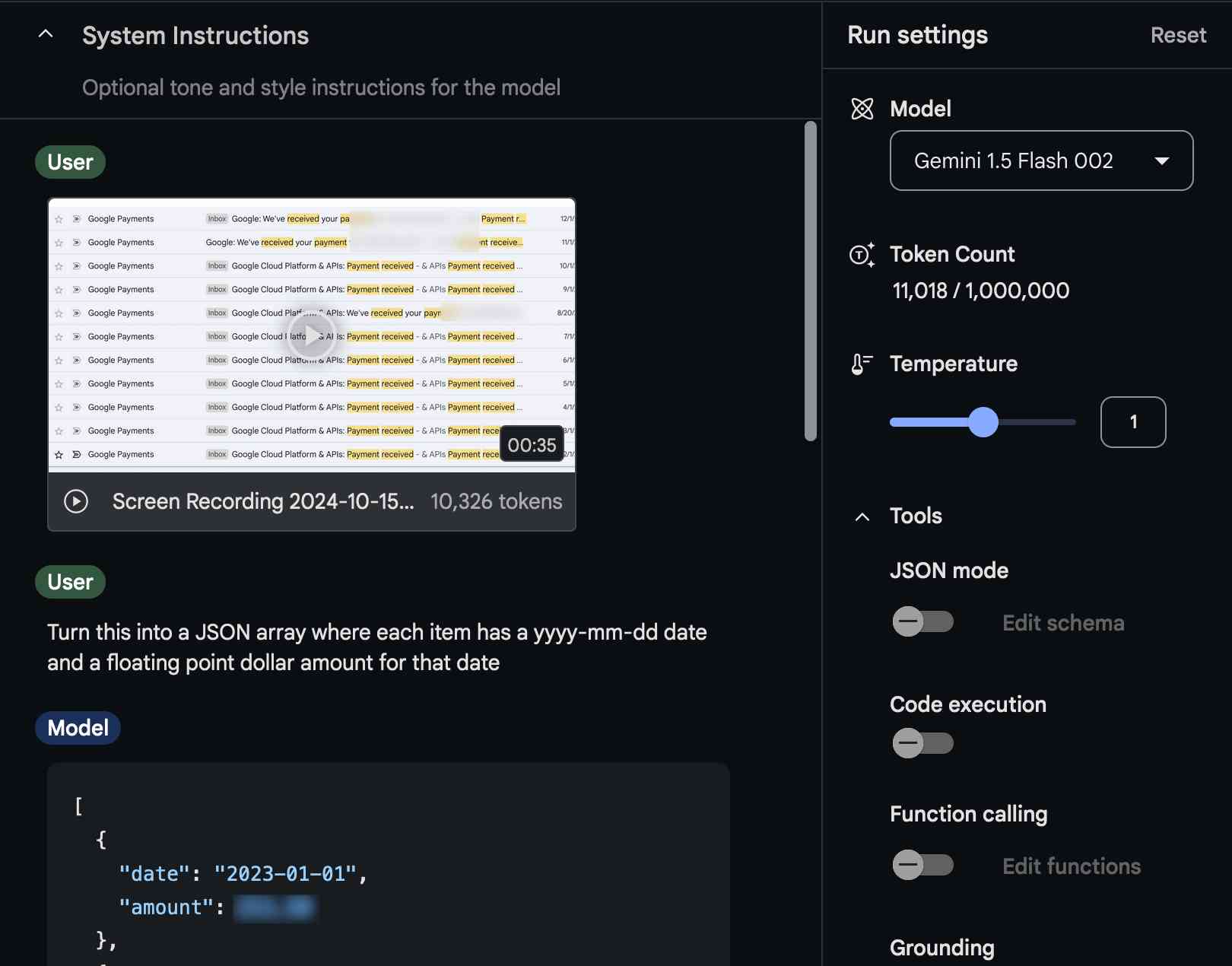

[... 861 words]Video scraping: extracting JSON data from a 35 second screen capture for less than 1/10th of a cent

The other day I found myself needing to add up some numeric values that were scattered across twelve different emails.

[... 1,294 words]Everything I built with Claude Artifacts this week

I’m a huge fan of Claude’s Artifacts feature, which lets you prompt Claude to create an interactive Single Page App (using HTML, CSS and JavaScript) and then view the result directly in the Claude interface, iterating on it further with the bot and then, if you like, copying out the resulting code.

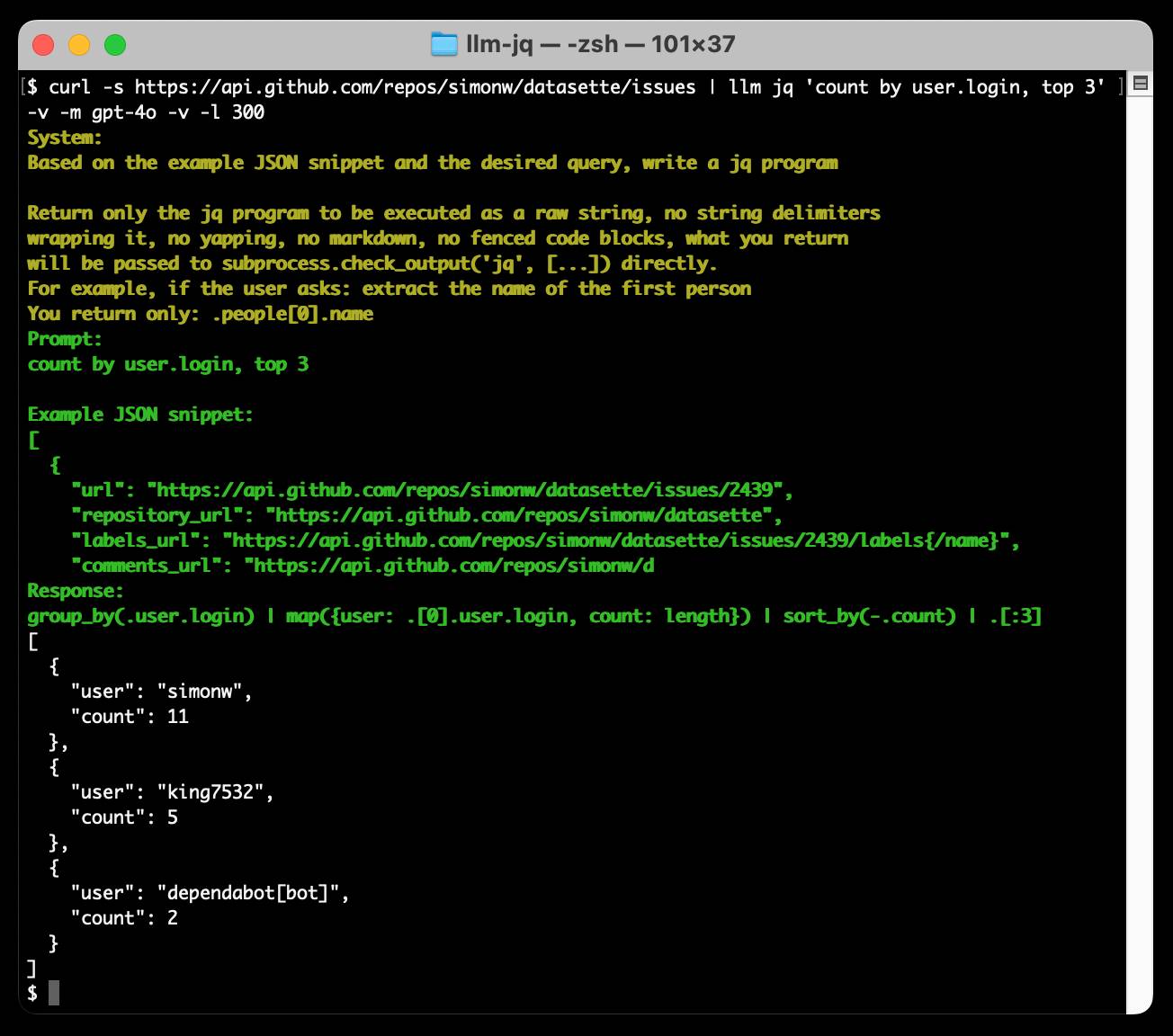

[... 2,273 words]Run a prompt to generate and execute jq programs using llm-jq

llm-jq is a brand new plugin for LLM which lets you pipe JSON directly into the llm jq command along with a human-language description of how you’d like to manipulate that JSON and have a jq program generated and executed for you on the fly.

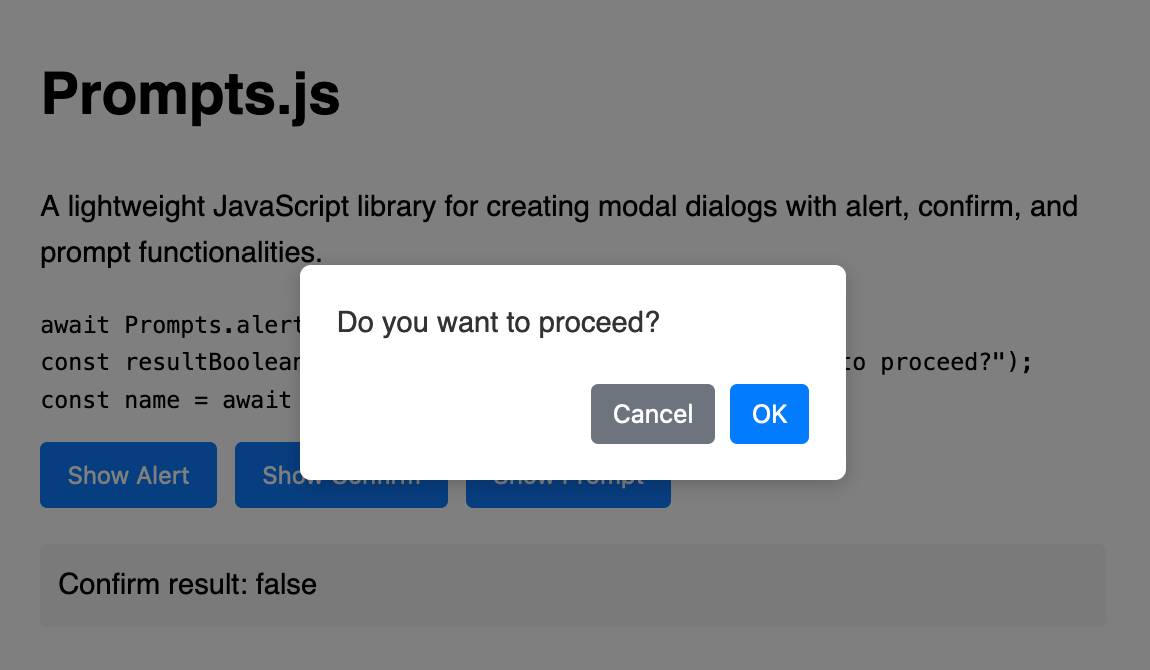

Prompts.js

I’ve been putting the new o1 model from OpenAI through its paces, in particular for code. I’m very impressed—it feels like it’s giving me a similar code quality to Claude 3.5 Sonnet, at least for Python and JavaScript and Bash... but it’s returning output noticeably faster.

[... 1,119 words]Building Python tools with a one-shot prompt using uv run and Claude Projects

I’ve written a lot about how I’ve been using Claude to build one-shot HTML+JavaScript applications via Claude Artifacts. I recently started using a similar pattern to create one-shot Python utilities, using a custom Claude Project combined with the dependency management capabilities of uv.

[... 899 words]Here’s how I use LLMs to help me write code

Online discussions about using Large Language Models to help write code inevitably produce comments from developers who’s experiences have been disappointing. They often ask what they’re doing wrong—how come some people are reporting such great results when their own experiments have proved lacking?

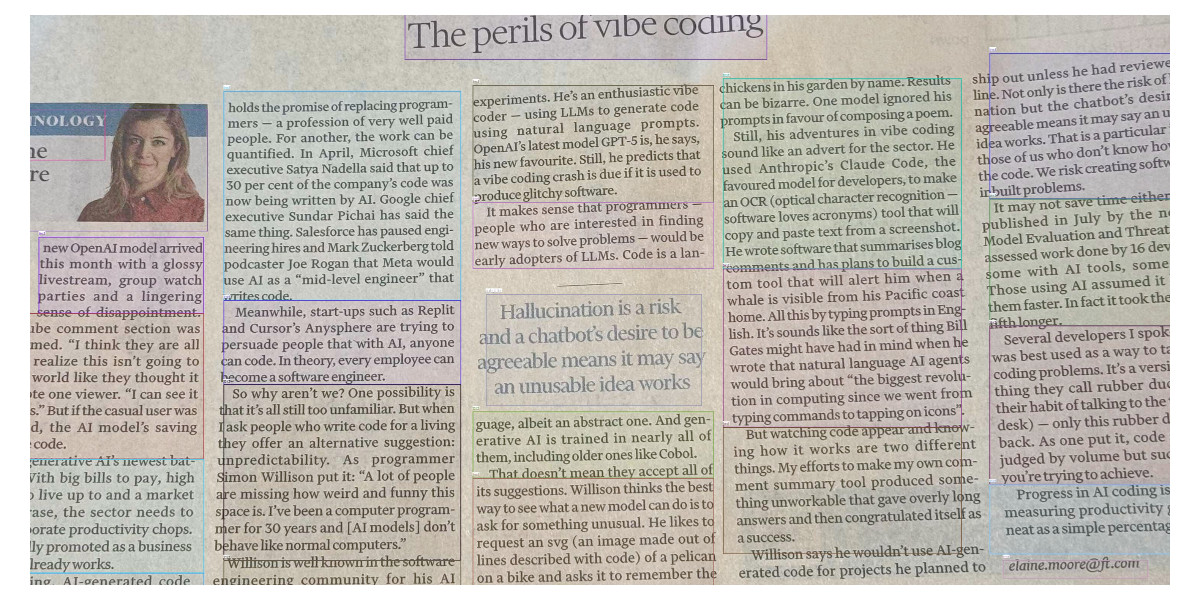

[... 5,178 words]Not all AI-assisted programming is vibe coding (but vibe coding rocks)

Vibe coding is having a moment. The term was coined by Andrej Karpathy just a few weeks ago (on February 6th) and has since been featured in the New York Times, Ars Technica, the Guardian and countless online discussions.

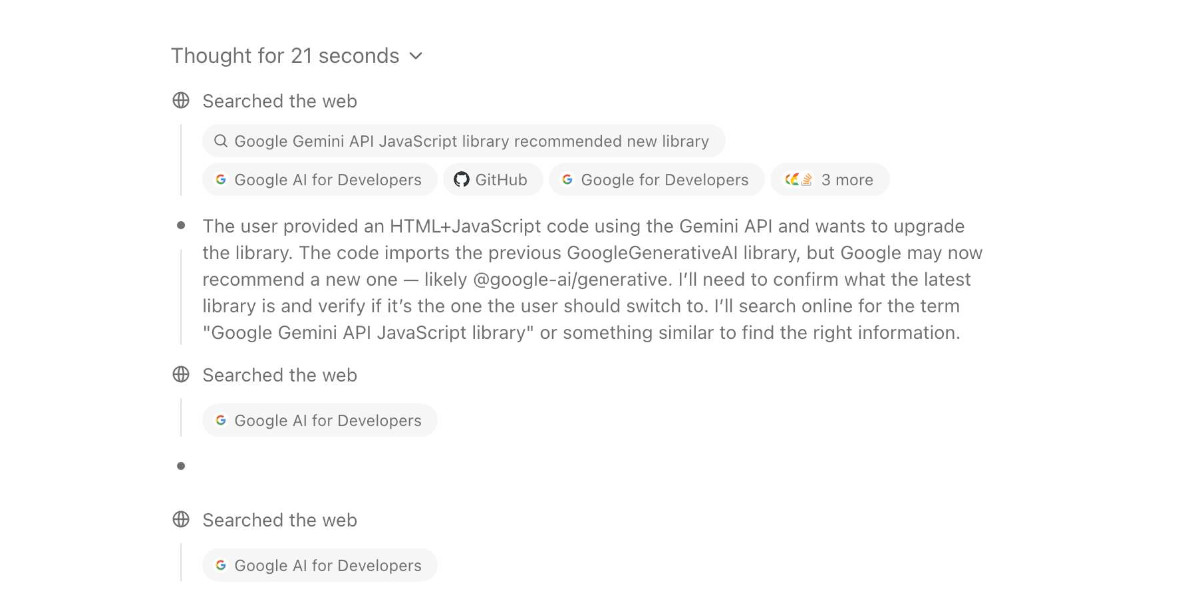

[... 1,486 words]AI assisted search-based research actually works now

For the past two and a half years the feature I’ve most wanted from LLMs is the ability to take on search-based research tasks on my behalf. We saw the first glimpses of this back in early 2023, with Perplexity (first launched December 2022, first prompt leak in January 2023) and then the GPT-4 powered Microsoft Bing (which launched/cratered spectacularly in February 2023). Since then a whole bunch of people have taken a swing at this problem, most notably Google Gemini and ChatGPT Search.

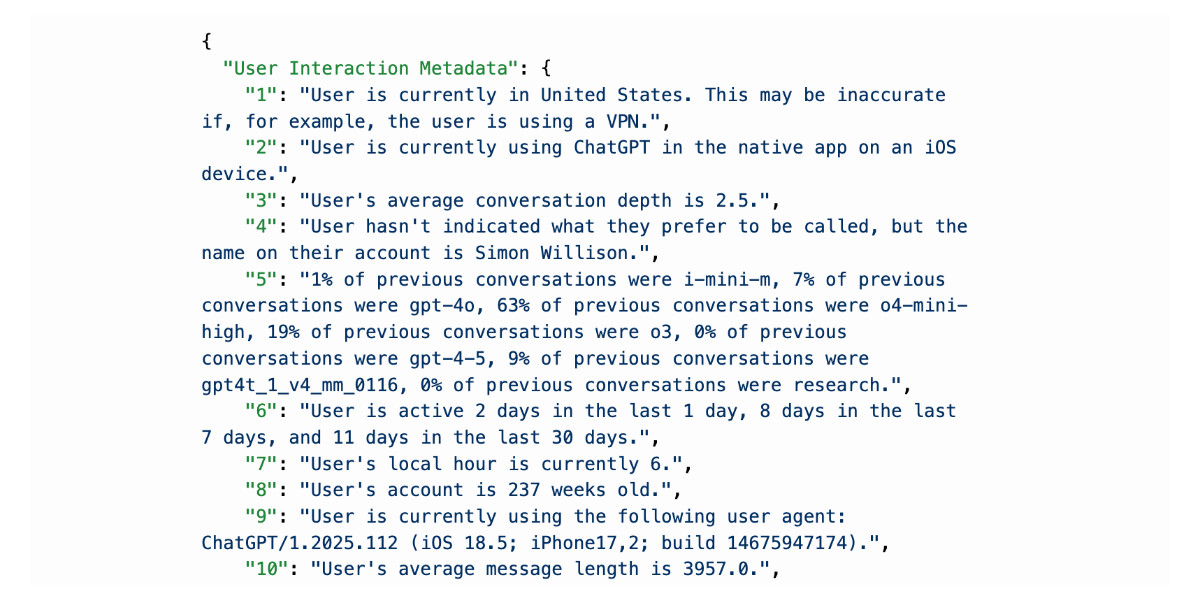

[... 1,618 words]I really don’t like ChatGPT’s new memory dossier

Last month ChatGPT got a major upgrade. As far as I can tell the closest to an official announcement was this tweet from @OpenAI:

[... 2,535 words]Tips on prompting ChatGPT for UK technology secretary Peter Kyle

Back in March New Scientist reported on a successful Freedom of Information request they had filed requesting UK Secretary of State for Science, Innovation and Technology Peter Kyle’s ChatGPT logs:

[... 1,189 words]Designing agentic loops

Coding agents like Anthropic’s Claude Code and OpenAI’s Codex CLI represent a genuine step change in how useful LLMs can be for producing working code. These agents can now directly exercise the code they are writing, correct errors, dig through existing implementation details, and even run experiments to find effective code solutions to problems.

[... 1,667 words]Embracing the parallel coding agent lifestyle

For a while now I’ve been hearing from engineers who run multiple coding agents at once—firing up several Claude Code or Codex CLI instances at the same time, sometimes in the same repo, sometimes against multiple checkouts or git worktrees.

[... 1,275 words]Vibe engineering

I feel like vibe coding is pretty well established now as covering the fast, loose and irresponsible way of building software with AI—entirely prompt-driven, and with no attention paid to how the code actually works. This leaves us with a terminology gap: what should we call the other end of the spectrum, where seasoned professionals accelerate their work with LLMs while staying proudly and confidently accountable for the software they produce?

[... 1,347 words]Claude can write complete Datasette plugins now

This isn’t necessarily surprising, but it’s worth noting anyway. Claude Sonnet 4.5 is capable of building a full Datasette plugin now.

[... 1,296 words]Getting DeepSeek-OCR working on an NVIDIA Spark via brute force using Claude Code

DeepSeek released a new model yesterday: DeepSeek-OCR, a 6.6GB model fine-tuned specifically for OCR. They released it as model weights that run using PyTorch and CUDA. I got it running on the NVIDIA Spark by having Claude Code effectively brute force the challenge of getting it working on that particular hardware.

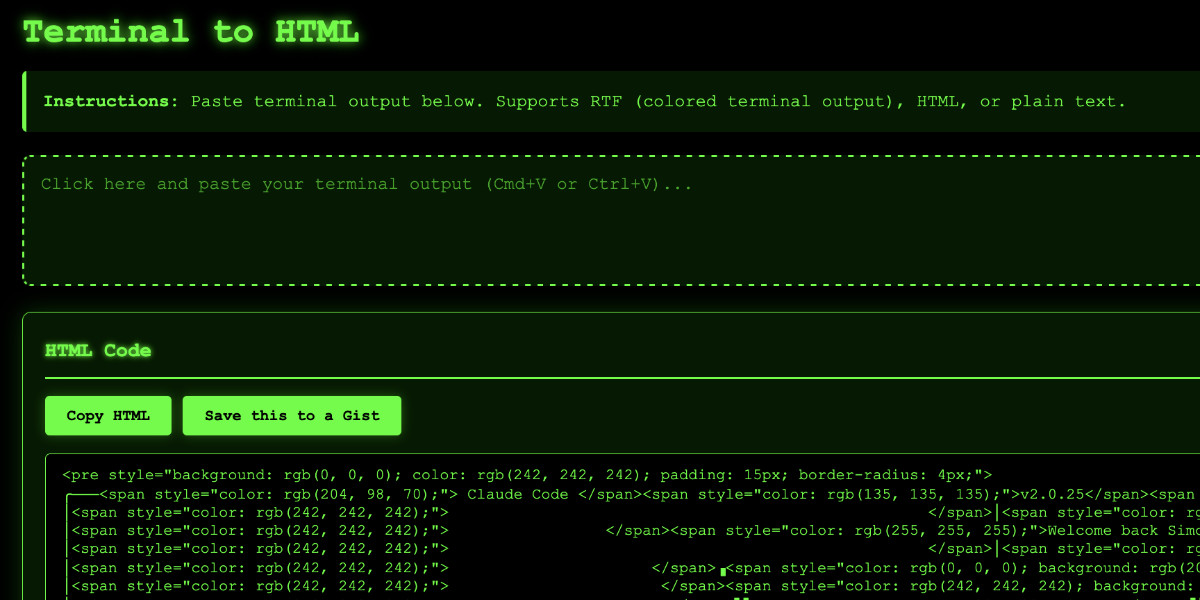

[... 1,971 words]Video: Building a tool to copy-paste share terminal sessions using Claude Code for web

This afternoon I was manually converting a terminal session into a shared HTML file for the umpteenth time when I decided to reduce the friction by building a custom tool for it—and on the spur of the moment I fired up Descript to record the process. The result is this new 11 minute YouTube video showing my workflow for vibe-coding simple tools from start to finish.

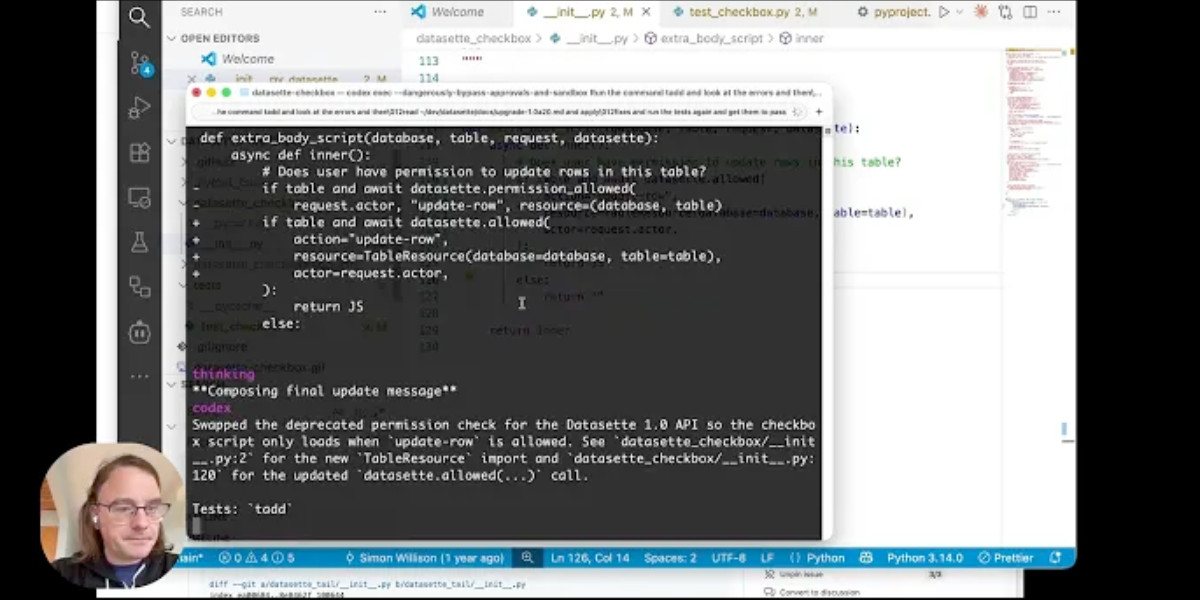

[... 1,338 words]Video + notes on upgrading a Datasette plugin for the latest 1.0 alpha, with help from uv and OpenAI Codex CLI

I’m upgrading various plugins for compatibility with the new Datasette 1.0a20 alpha release and I decided to record a video of the process. This post accompanies that video with detailed additional notes.

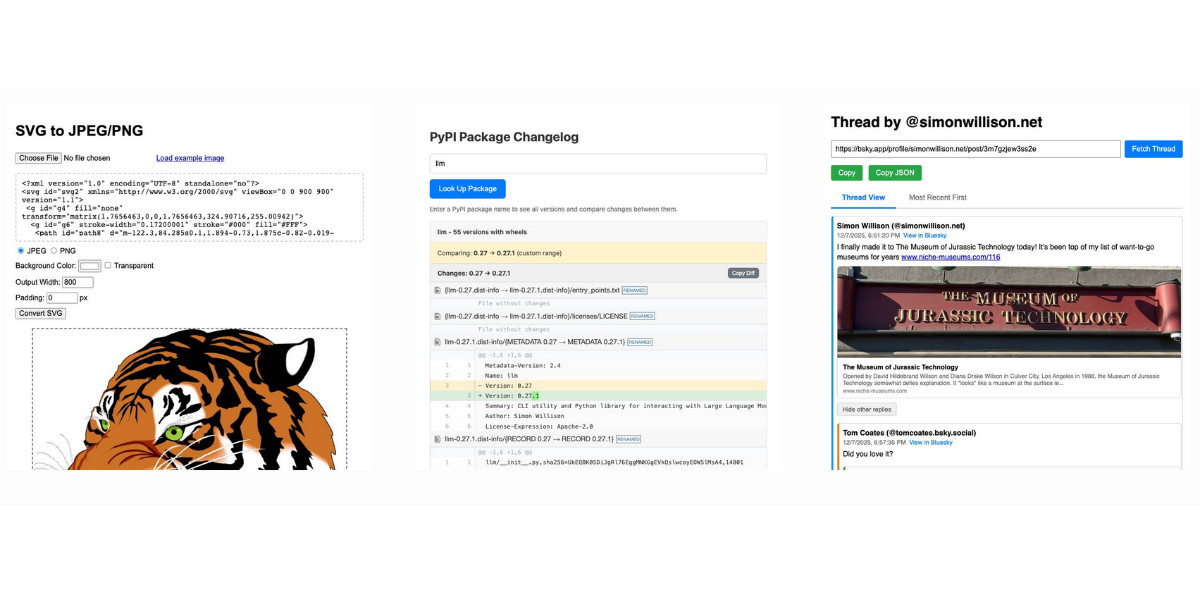

[... 1,094 words]Useful patterns for building HTML tools

I’ve started using the term HTML tools to refer to HTML applications that I’ve been building which combine HTML, JavaScript, and CSS in a single file and use them to provide useful functionality. I have built over 150 of these in the past two years, almost all of them written by LLMs. This article presents a collection of useful patterns I’ve discovered along the way.

[... 4,231 words]I ported JustHTML from Python to JavaScript with Codex CLI and GPT-5.2 in 4.5 hours

I wrote about JustHTML yesterday—Emil Stenström’s project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

[... 1,818 words]Cooking with Claude

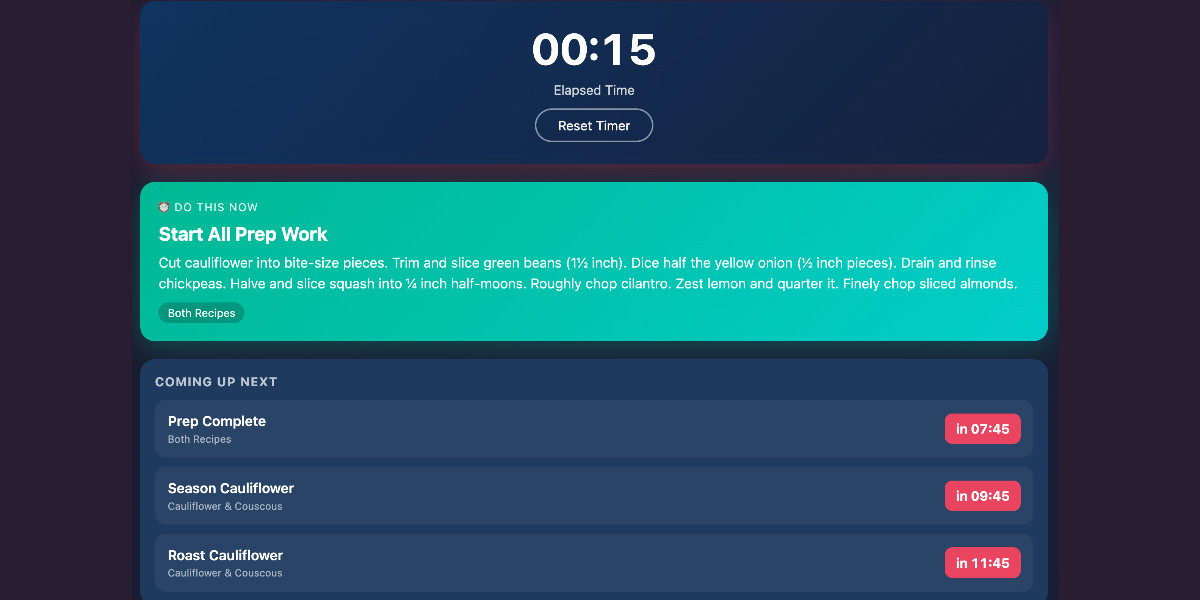

I’ve been having an absurd amount of fun recently using LLMs for cooking. I started out using them for basic recipes, but as I’ve grown more confident in their culinary abilities I’ve leaned into them for more advanced tasks. Today I tried something new: having Claude vibe-code up a custom application to help with the timing for a complicated meal preparation. It worked really well!

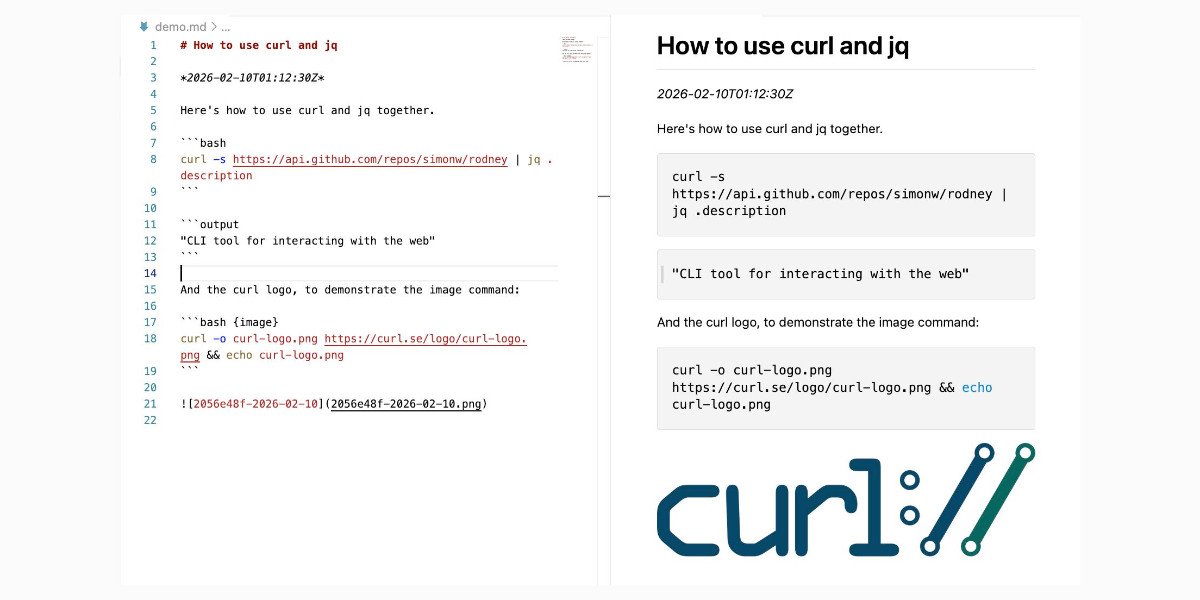

[... 1,313 words]Introducing Showboat and Rodney, so agents can demo what they’ve built

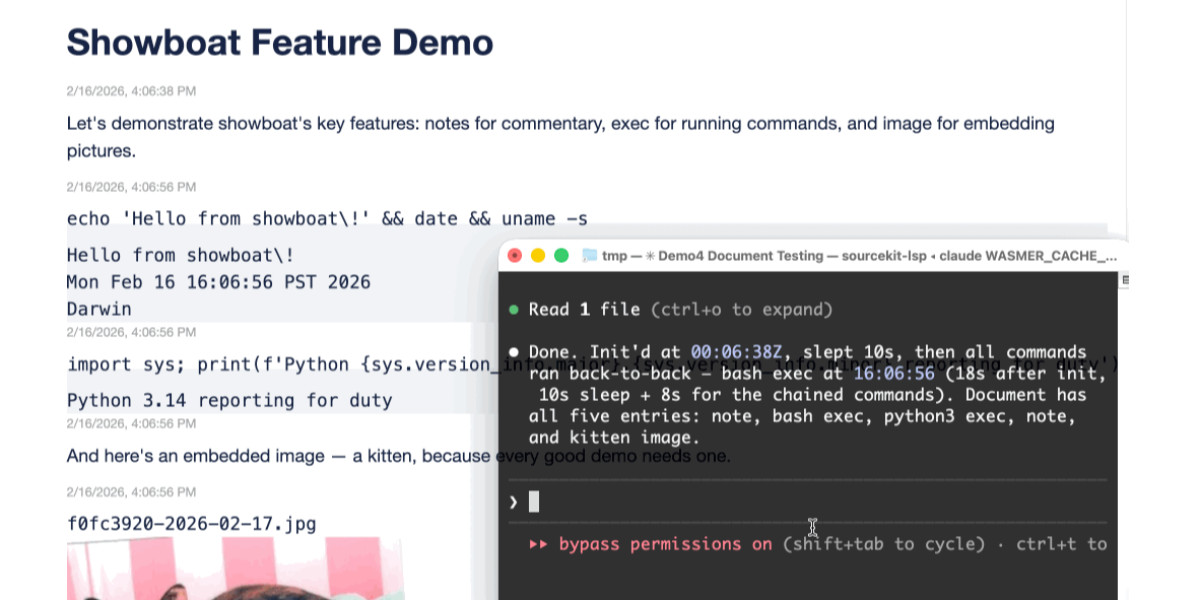

A key challenge working with coding agents is having them both test what they’ve built and demonstrate that software to you, their supervisor. This goes beyond automated tests—we need artifacts that show their progress and help us see exactly what the agent-produced software is able to do. I’ve just released two new tools aimed at this problem: Showboat and Rodney.

[... 2,023 words]Two new Showboat tools: Chartroom and datasette-showboat

I introduced Showboat a week ago—my CLI tool that helps coding agents create Markdown documents that demonstrate the code that they have created. I’ve been finding new ways to use it on a daily basis, and I’ve just released two new tools to help get the best out of the Showboat pattern. Chartroom is a CLI charting tool that works well with Showboat, and datasette-showboat lets Showboat’s new remote publishing feature incrementally push documents to a Datasette instance.

[... 1,756 words]