70 posts tagged “vision-llms”

LLMs that can also be used to interpret images and video, such as GPT-4o, Claude 3 and Gemini Pro.

2025

Introducing Gemma 3n: The developer guide. Extremely consequential new open weights model release from Google today:

Multimodal by design: Gemma 3n natively supports image, audio, video, and text inputs and text outputs.

Optimized for on-device: Engineered with a focus on efficiency, Gemma 3n models are available in two sizes based on effective parameters: E2B and E4B. While their raw parameter count is 5B and 8B respectively, architectural innovations allow them to run with a memory footprint comparable to traditional 2B and 4B models, operating with as little as 2GB (E2B) and 3GB (E4B) of memory.

This is very exciting: a 2B and 4B model optimized for end-user devices which accepts text, images and audio as inputs!

Gemma 3n is also the most comprehensive day one launch I've seen for any model: Google partnered with "AMD, Axolotl, Docker, Hugging Face, llama.cpp, LMStudio, MLX, NVIDIA, Ollama, RedHat, SGLang, Unsloth, and vLLM" so there are dozens of ways to try this out right now.

So far I've run two variants on my Mac laptop. Ollama offer a 7.5GB version (full tag gemma3n:e4b-it-q4_K_M0) of the 4B model, which I ran like this:

ollama pull gemma3n

llm install llm-ollama

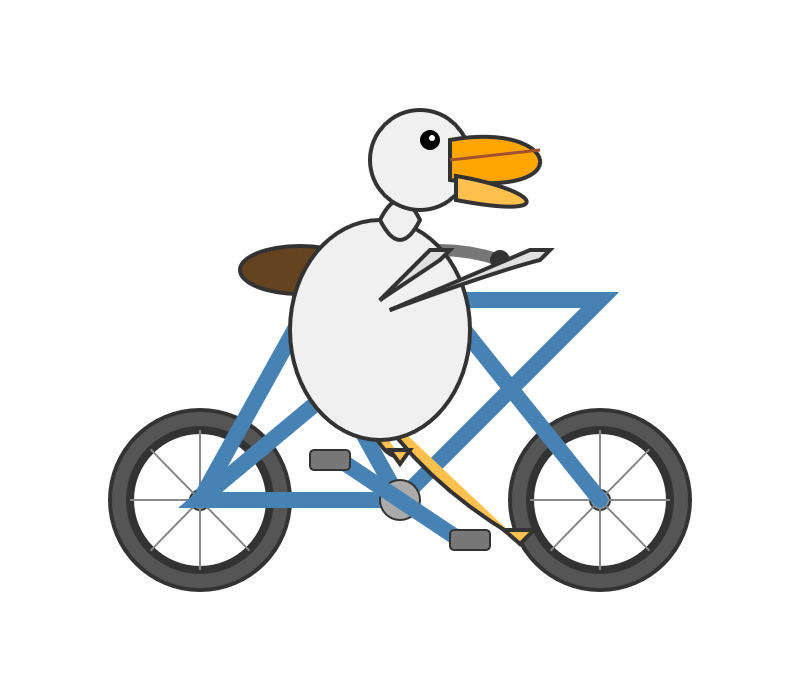

llm -m gemma3n:latest "Generate an SVG of a pelican riding a bicycle"

It drew me this:

The Ollama version doesn't appear to support image or audio input yet.

... but the mlx-vlm version does!

First I tried that on this WAV file like so (using a recipe adapted from Prince Canuma's video):

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 100 \

--temperature 0.7 \

--prompt "Transcribe the following speech segment in English:" \

--audio pelican-joke-request.wav

That downloaded a 15.74 GB bfloat16 version of the model and output the following correct transcription:

Tell me a joke about a pelican.

Then I had it draw me a pelican for good measure:

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 100 \

--temperature 0.7 \

--prompt "Generate an SVG of a pelican riding a bicycle"

I quite like this one:

It's interesting to see such a striking visual difference between those 7.5GB and 15GB model quantizations.

Finally, I had it describe the image it had just created:

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 1000 \

--prompt "Describe image" \

--image gemma3n-mlx-vlm.jpg

Here's what I got back - it thought this was a chemical diagram!

The image is a cartoon-style illustration depicting a molecular structure against a light blue background. The structure is composed of several differently colored and shaped elements connected by curved black lines.

Here's a breakdown of the elements:

- Large Black Circles: There are two large, solid black circles, likely representing larger molecules or components.

- Cream-Colored Oval: A prominent cream-colored oval shape is centrally located and connected to other elements. It has a smaller, darker cream-colored sphere attached to its left side.

- Red Oval: A smaller, solid red oval is positioned near one of the black circles and connected to the cream-colored oval.

- Black Lines: These lines act as bonds, connecting the various molecular components. They are curved and dynamic, suggesting movement or interaction.

- Triangular Shape: A small black triangle is attached to the smaller cream-colored sphere.

- Letter "I": The letter "I" appears twice, likely labeling specific parts of the molecule.

The overall impression is of a simplified representation of a biological molecule, possibly a protein or a complex organic compound. The use of different colors helps to distinguish the various components within the structure.

Mistral-Small 3.2. Released on Hugging Face a couple of hours ago. So far there aren't any quantizations to run it on a Mac, but I'm sure those will emerge pretty quickly.

This is a minor bump to Mistral Small 3.1, one of my favorite local models. I've been running Small 3.1 via Ollama where it's a 15GB download - these 24 billion parameter models are a great balance between capabilities and not using up all of the available RAM on my laptop. I expect Ollama will add 3.2 imminently.

According to Mistral:

Small-3.2 improves in the following categories:

Interestingly they recommend running it with a temperature of 0.15 - many models recommend a default of 0.7. They also provide a suggested system prompt which includes a note that "Your knowledge base was last updated on 2023-10-01".

It's not currently available via Mistral's API, or through any of the third-party LLM hosting vendors that I've checked, so I've not been able to run a prompt through the model myself yet.

Update: I downloaded one of the first GGUF quantizations to show up on Hugging Face, gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF by Gabriel Larson. I ran it using Ollama and llm-ollama like this:

ollama pull hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M

llm install llm-ollama

llm -m hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M \

"Generate an SVG of a pelican riding a bicycle"

This one is pretty good for a 15GB model!

Here's the full transcript - it doesn't quite work in the actual image but I was delighted to see the model attempt to add this detail:

<!-- Basket with fish -->

<rect x="250" y="190" width="25" height="15" rx="5" fill="#FFA500"/>

<circle cx="260" cy="200" r="3" fill="#FF4500"/> <!-- Fish -->

Here's what you get if you isolate just that part of the generated SVG:
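One way to isolate a fragment like that is to wrap it in its own `svg` root element so it renders as a standalone file. Here's a minimal Python sketch of that idea. The `wrap_fragment` helper and the `viewBox` values are assumptions chosen for illustration (a small margin around the rectangle's coordinates), not something taken from the model's transcript.

```python
import xml.etree.ElementTree as ET

# The fragment quoted from the model's SVG output.
FRAGMENT = """
<!-- Basket with fish -->
<rect x="250" y="190" width="25" height="15" rx="5" fill="#FFA500"/>
<circle cx="260" cy="200" r="3" fill="#FF4500"/> <!-- Fish -->
"""


def wrap_fragment(fragment: str, view_box: str) -> str:
    """Wrap SVG child elements in a standalone <svg> root (hypothetical helper)."""
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" viewBox="{view_box}">'
        f"{fragment}</svg>"
    )


# Assumed viewBox: a 10px margin around the 25x15 rect positioned at (250, 190).
svg = wrap_fragment(FRAGMENT, "240 180 45 35")

# Parsing confirms the wrapped document is well-formed XML.
# ElementTree drops comments by default, so only the shapes remain.
root = ET.fromstring(svg)
shapes = [child.tag.split("}")[1] for child in root]
print(shapes)
```

Saving the `svg` string to a file and opening it in a browser should show just the basket and the "fish" dot, which makes it easier to see why the detail gets lost in the full drawing.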

I had Mistral Small 3.2 describe the full image to me, since it's a vision-LLM:

llm -m hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M \

'describe image' \

-a https://static.simonwillison.net/static/2025/mistral-3.2-pelican.jpg

And it gave me the following:

The image depicts a cartoonish illustration of a duck that is being lifted off the ground by a hook. The duck appears to be in mid-air, with its wings spread slightly as if it's surprised or reacting to being picked up. The hook is attached to an arm or a mechanism and seems to be connected to a vehicle below—perhaps a truck or a platform with wheels. The background of the image is light blue, indicating an outdoor setting. Overall, the scene is whimsical and playful, possibly suggesting a humorous or unusual situation where the duck is being transported in this manner.

Update 2: It's now available as an official Ollama model:

ollama pull mistral-small3.2

LM Studio has a community quantization too: lmstudio-community/Mistral-Small-3.2-24B-Instruct-2506-GGUF.

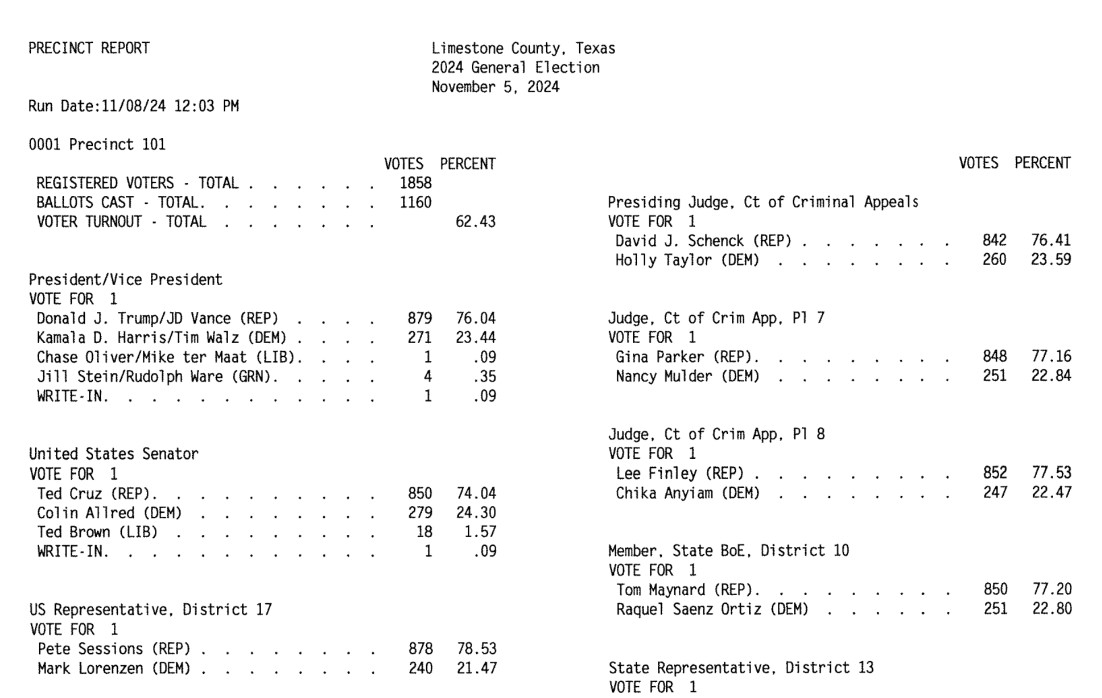

How OpenElections Uses LLMs (via) The OpenElections project collects detailed election data for the USA, all the way down to the precinct level. This is a surprisingly hard problem: while county and state-level results are widely available, precinct-level results are published in thousands of different ad-hoc ways and rarely aggregated once the election result has been announced.

A lot of those precinct results are published as image-filled PDFs.

Derek Willis has recently started leaning on Gemini to help parse those PDFs into CSV data:

For parsing image PDFs into CSV files, Google’s Gemini is my model of choice, for two main reasons. First, the results are usually very, very accurate (with a few caveats I’ll detail below), and second, Gemini’s large context window means it’s possible to work with PDF files that can be multiple MBs in size.

In this piece he shares the process and prompts for a real-world expert level data entry project, assisted by Gemini.

This example from Limestone County, Texas is a great illustration of how tricky this problem can get. Getting traditional OCR software to correctly interpret multi-column layouts like this always requires some level of manual intervention:

Derek's prompt against Gemini 2.5 Pro throws in an example, some special instructions and a note about the two column format:

Produce a CSV file from the attached PDF based on this example:

county,precinct,office,district,party,candidate,votes,absentee,early_voting,election_day

Limestone,Precinct 101,Registered Voters,,,,1858,,,

Limestone,Precinct 101,Ballots Cast,,,,1160,,,

Limestone,Precinct 101,President,,REP,Donald J. Trump,879,,,

Limestone,Precinct 101,President,,DEM,Kamala D. Harris,271,,,

Limestone,Precinct 101,President,,LIB,Chase Oliver,1,,,

Limestone,Precinct 101,President,,GRN,Jill Stein,4,,,

Limestone,Precinct 101,President,,,Write-ins,1,,,

Skip Write-ins with candidate names and rows with "Cast Votes", "Not Assigned", "Rejected write-in votes", "Unresolved write-in votes" or "Contest Totals". Do not extract any values that end in "%"

Use the following offices:

President/Vice President -> President

United States Senator -> U.S. Senate

US Representative -> U.S. House

State Senator -> State Senate

Quote all office and candidate values. The results are split into two columns on each page; parse the left column first and then the right column.

A spot-check and a few manual tweaks and the result against a 42 page PDF was exactly what was needed.
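A spot-check like that can be partly automated. The following is a rough sketch using Python's standard `csv` module, not Derek's actual process: it checks that the header matches the example in the prompt and that the candidate votes in each contest don't add up to more than the ballots cast in that precinct. The `spot_check` function and that ballots-cast rule are my own assumptions about what is worth checking.

```python
import csv
import io

EXPECTED_HEADER = [
    "county", "precinct", "office", "district", "party",
    "candidate", "votes", "absentee", "early_voting", "election_day",
]

# Sample rows copied from the example in the prompt.
SAMPLE = """county,precinct,office,district,party,candidate,votes,absentee,early_voting,election_day
Limestone,Precinct 101,Registered Voters,,,,1858,,,
Limestone,Precinct 101,Ballots Cast,,,,1160,,,
Limestone,Precinct 101,President,,REP,Donald J. Trump,879,,,
Limestone,Precinct 101,President,,DEM,Kamala D. Harris,271,,,
Limestone,Precinct 101,President,,LIB,Chase Oliver,1,,,
Limestone,Precinct 101,President,,GRN,Jill Stein,4,,,
Limestone,Precinct 101,President,,,Write-ins,1,,,
"""


def spot_check(csv_text):
    """Return a list of problems found in model-produced CSV (hypothetical helper)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != EXPECTED_HEADER:
        return [f"unexpected header: {reader.fieldnames}"]
    problems = []
    ballots = {}  # precinct -> ballots cast
    totals = {}   # (precinct, office) -> summed candidate votes
    for line_no, row in enumerate(reader, start=2):
        votes_text = row["votes"] or ""
        if not votes_text.isdigit():
            problems.append(f"line {line_no}: non-numeric votes {votes_text!r}")
            continue
        votes = int(votes_text)
        if row["office"] == "Ballots Cast":
            ballots[row["precinct"]] = votes
        elif row["office"] != "Registered Voters":
            key = (row["precinct"], row["office"])
            totals[key] = totals.get(key, 0) + votes
    # Assumed sanity rule: a contest cannot have more votes than ballots cast.
    for (precinct, office), total in totals.items():
        if precinct in ballots and total > ballots[precinct]:
            problems.append(f"{precinct} {office}: {total} votes exceeds ballots cast")
    return problems


print(spot_check(SAMPLE))
```

A check like this only catches structural mistakes and impossible totals. It can't tell you whether an individual number was misread, which is why comparing against official totals still matters.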

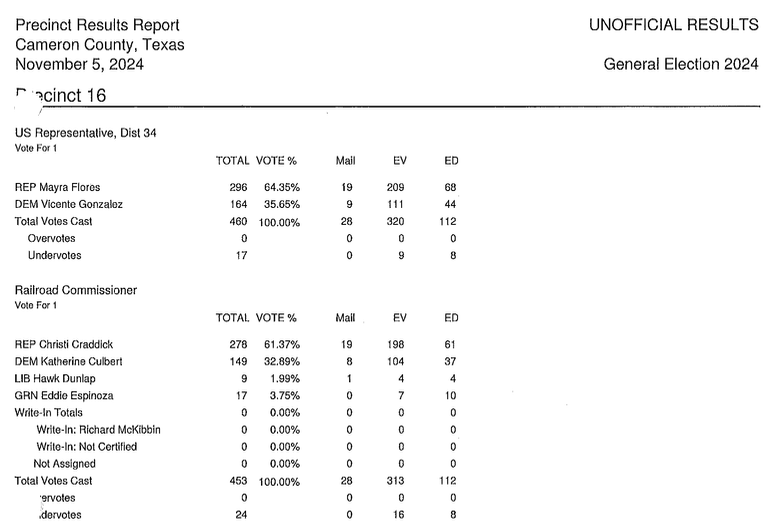

How about something harder? The results for Cameron County came as more than 600 pages and looked like this - note the hole-punch holes that obscure some of the text!

This file had to be split into chunks of 100 pages each, and the entire process still took a full hour of work - but the resulting table matched up with the official vote totals.
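The chunking step is simple to sketch. This illustrative Python generator works out which page ranges each 100-page chunk would cover. The 642-page total is a made-up number standing in for "more than 600 pages", and actually splitting the PDF would need a separate tool that isn't shown here.

```python
def page_chunks(total_pages, chunk_size=100):
    """Yield 1-based inclusive (first, last) page ranges covering the document."""
    for first in range(1, total_pages + 1, chunk_size):
        yield first, min(first + chunk_size - 1, total_pages)


# 642 is a hypothetical page count, not the real size of the Cameron County file.
for first, last in page_chunks(642):
    print(f"pages {first}-{last}")
```

The final chunk is simply whatever is left over, so the last range is shorter than the others.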

I love how realistic this example is. AI data entry like this isn't a silver bullet - there's still a bunch of work needed to verify the results and creative thinking needed to work through limitations - but it represents a very real improvement in how small teams can take on projects of this scale.

In the six weeks since we started working on Texas precinct results, we’ve been able to convert them for more than half of the state’s 254 counties, including many image PDFs like the ones on display here. That pace simply wouldn’t be possible with data entry or traditional OCR software.

qwen2.5vl in Ollama. Ollama announced a complete overhaul of their vision support the other day. Here's the first new model they've shipped since then - a packaged version of Qwen 2.5 VL which was first released on January 26th 2025. Here are my notes from that release.

I upgraded Ollama (it auto-updates so I just had to restart it from the tray icon) and ran this:

ollama pull qwen2.5vl

This downloaded a 6GB model file. I tried it out against my photo of Cleo rolling on the beach:

llm -a https://static.simonwillison.net/static/2025/cleo-sand.jpg \

'describe this image' -m qwen2.5vl

And got a pretty good result:

The image shows a dog lying on its back on a sandy beach. The dog appears to be a medium to large breed with a dark coat, possibly black or dark brown. It is wearing a red collar or harness around its chest. The dog's legs are spread out, and its belly is exposed, suggesting it might be rolling around or playing in the sand. The sand is light-colored and appears to be dry, with some small footprints and marks visible around the dog. The lighting in the image suggests it is taken during the daytime, with the sun casting a shadow of the dog to the left side of the image. The overall scene gives a relaxed and playful impression, typical of a dog enjoying time outdoors on a beach.

Qwen 2.5 VL has a strong reputation for OCR, so I tried it on my poster:

llm -a https://static.simonwillison.net/static/2025/poster.jpg \

'convert to markdown' -m qwen2.5vl

The result that came back:

It looks like the image you provided is a jumbled and distorted text, making it difficult to interpret. If you have a specific question or need help with a particular topic, please feel free to ask, and I'll do my best to assist you!

I'm not sure what went wrong here. My best guess is that the maximum resolution the model can handle is too small to make out the text, or maybe Ollama resized the image to the point of illegibility before handing it to the model?

Update: I think this may be a bug relating to URL handling in LLM/llm-ollama. I tried downloading the file first:

wget https://static.simonwillison.net/static/2025/poster.jpg

llm -m qwen2.5vl 'convert to markdown' -a poster.jpg

This time it did a lot better. The results weren't perfect though - it ended up stuck in a loop outputting the same code example dozens of times.

I tried with a different prompt - "extract text" - and it got confused by the three column layout, misread Datasette as "Datasetette" and missed some of the text. Here's that result.

These experiments used qwen2.5vl:7b (6GB) - I expect the results would be better with the larger qwen2.5vl:32b (21GB) and qwen2.5vl:72b (71GB) models.

Fred Jonsson reported a better result using the MLX model via LM Studio (~9GB model running in 8bit - I think that's mlx-community/Qwen2.5-VL-7B-Instruct-8bit). His full output is here - looks almost exactly right to me.

Building software on top of Large Language Models

I presented a three hour workshop at PyCon US yesterday titled Building software on top of Large Language Models. The goal of the workshop was to give participants everything they needed to get started writing code that makes use of LLMs.

[... 3,726 words]

Vision Language Models (Better, Faster, Stronger) (via) Extremely useful review of the last year in vision and multi-modal LLMs.

So much has happened! I'm particularly excited about the range of small open weight vision models that are now available. Models like gemma3-4b-it and Qwen2.5-VL-3B-Instruct produce very impressive results and run happily on mid-range consumer hardware.

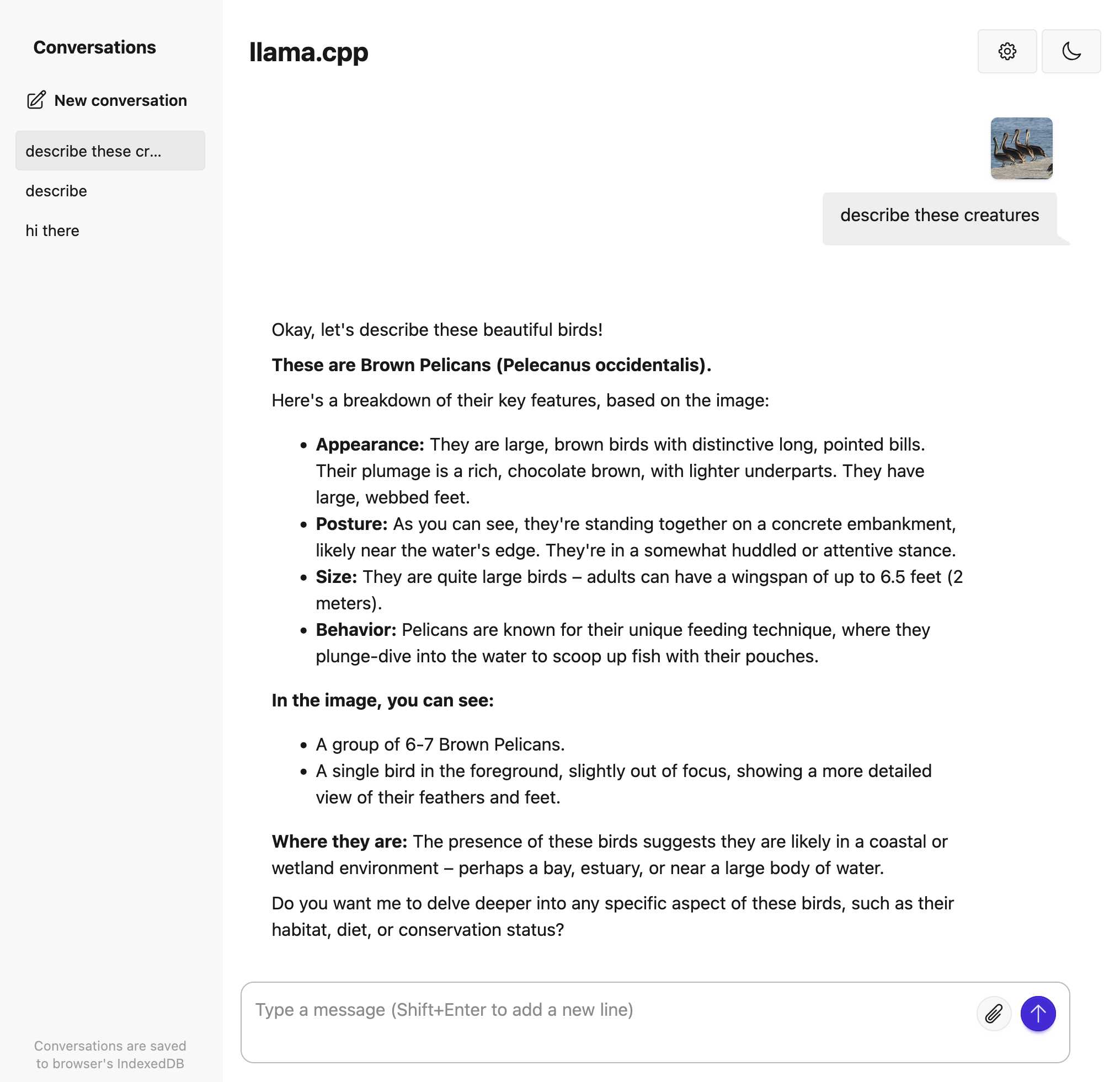

Trying out llama.cpp’s new vision support

This llama.cpp server vision support via libmtmd pull request, via Hacker News, was merged earlier today. The PR finally adds full support for vision models to the excellent llama.cpp project. It’s documented on this page, and the deeper technical details are covered here. Here are my notes on getting it working on a Mac.

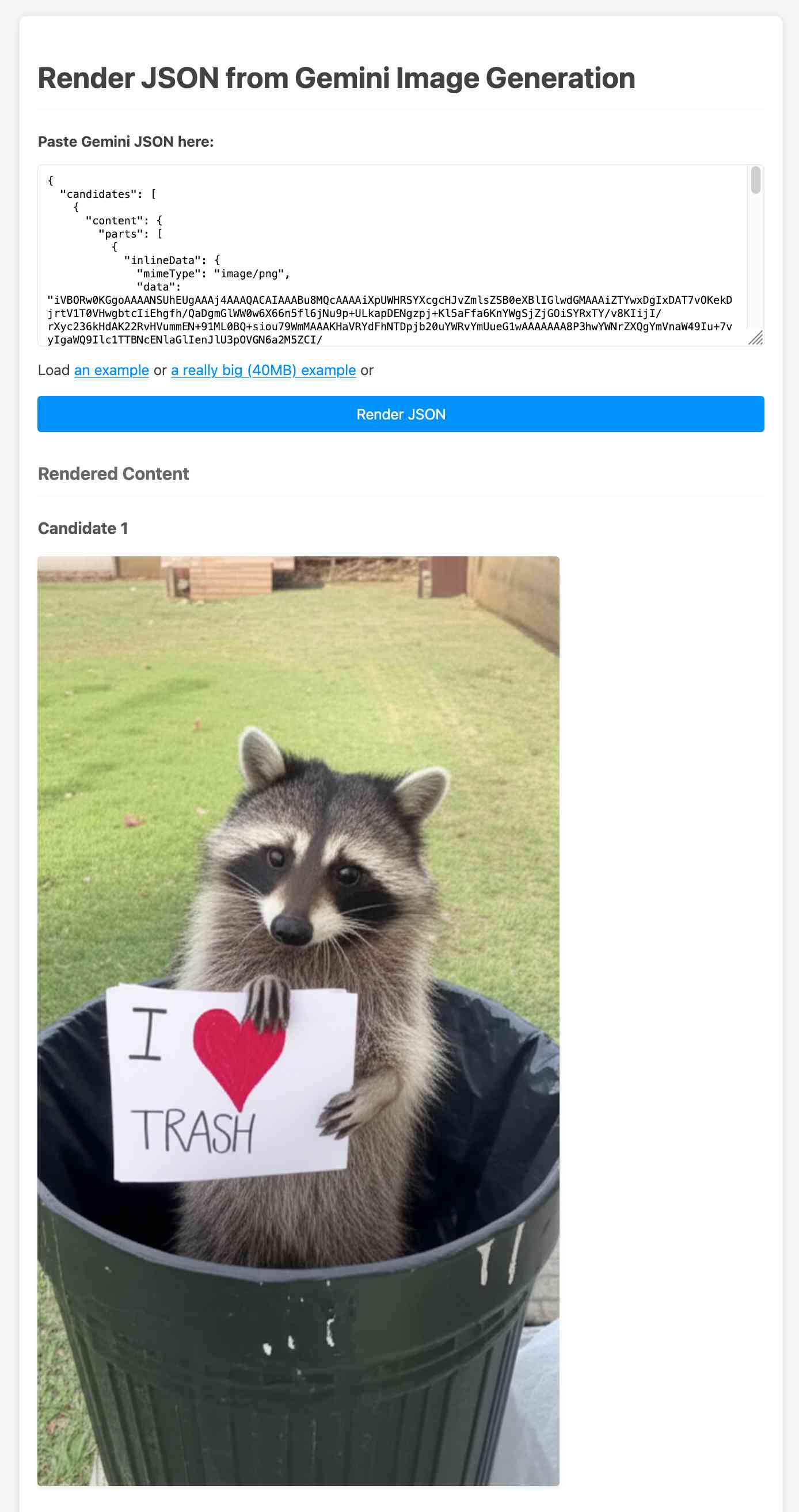

[... 1,693 words]

Create and edit images with Gemini 2.0 in preview (via) Gemini 2.0 Flash has had image generation capabilities for a while now, and they're now available via the paid Gemini API - at 3.9 cents per generated image.

According to the API documentation you need to use the new gemini-2.0-flash-preview-image-generation model ID and specify {"responseModalities":["TEXT","IMAGE"]} as part of your request.

Here's an example that calls the API using curl (and fetches a Gemini key from the llm keys get store):

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key=$(llm keys get gemini)" \

-H "Content-Type: application/json" \

-d '{"contents": [{"parts": [{"text": "Photo of a raccoon in a trash can with a paw-written sign that says I love trash"}]}], "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]}}' > /tmp/raccoon.json

Here's the response. I got Gemini 2.5 Pro to vibe-code me a new debug tool for visualizing that JSON. If you visit that tool and click the "Load an example" link you'll see the result of the raccoon image visualized:

The other prompt I tried was this one:

Provide a vegetarian recipe for butter chicken but with chickpeas not chicken and include many inline illustrations along the way

The result of that one was a 41MB JSON file(!) containing 28 images - which presumably cost over a dollar since images are 3.9 cents each.

Some of the illustrations it chose for that one were somewhat unexpected:

If you want to see that one you can click the "Load a really big example" link in the debug tool, then wait for your browser to fetch and render the full 41MB JSON file.
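If you'd rather pull the images out of a saved response yourself, here's a minimal Python sketch. It assumes the response JSON nests each image as base64 under `candidates` → `content` → `parts` → `inlineData`, with `mimeType` and `data` keys. Treat those key names as an assumption to check against your own file. `extract_images` is a hypothetical helper, and the example response is synthetic rather than real API output.

```python
import base64
import json


def extract_images(response):
    """Return (mime_type, raw_bytes) for each inline image in a response dict."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")  # assumed key name
            if inline:
                images.append((inline["mimeType"], base64.b64decode(inline["data"])))
    return images


# Tiny synthetic response standing in for a real saved file.
payload = base64.b64encode(b"not a real PNG").decode()
example = json.loads(
    '{"candidates": [{"content": {"parts": ['
    '{"text": "Here is your image"},'
    '{"inlineData": {"mimeType": "image/png", "data": "%s"}}'
    "]}}]}" % payload
)

for index, (mime_type, data) in enumerate(extract_images(example)):
    print(index, mime_type, len(data))
```

For a real response you would load the file with `json.load()` and write each `data` value to disk with a file extension matching its MIME type.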

The most interesting feature of Gemini (as with GPT-4o images) is the ability to accept images as inputs. I tried that out with this pelican photo like this:

cat > /tmp/request.json << EOF

{"contents": [{"parts": [

{"text": "Modify this photo to add an inappropriate hat"},

{"inline_data": {"mime_type": "image/jpeg", "data": "$(base64 -i pelican.jpg)"}}

]}], "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]}}

EOF

# Execute the curl command with the JSON file

curl -X POST \

'https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key='$(llm keys get gemini) \

-H 'Content-Type: application/json' \

-d @/tmp/request.json \

> /tmp/out.json

And now the pelican is wearing a hat:

Medium is the new large. New model release from Mistral - this time closed source/proprietary. Mistral Medium claims strong benchmark scores similar to GPT-4o and Claude 3.7 Sonnet, but is priced at $0.40/million input and $2/million output - about the same price as GPT 4.1 Mini. For comparison, GPT-4o is $2.50/$10 and Claude 3.7 Sonnet is $3/$15.

The model is a vision LLM, accepting both images and text.

More interesting than the price is the deployment model. Mistral Medium may not be open weights but it is very much available for self-hosting:

Mistral Medium 3 can also be deployed on any cloud, including self-hosted environments of four GPUs and above.

Mistral's other announcement today is Le Chat Enterprise. This is a suite of tools that can integrate with your company's internal data and provide "agents" (these look similar to Claude Projects or OpenAI GPTs), again with the option to self-host.

Is there a new open weights model coming soon? This note tucked away at the bottom of the Mistral Medium 3 announcement seems to hint at that:

With the launches of Mistral Small in March and Mistral Medium today, it's no secret that we're working on something 'large' over the next few weeks. With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we're excited to 'open' up what's to come :)

I released llm-mistral 0.12 adding support for the new model.

What’s the carbon footprint of using ChatGPT? Inspired by Andy Masley's cheat sheet (which I linked to last week) Hannah Ritchie explores some of the numbers herself.

Hannah is Head of Research at Our World in Data, a Senior Researcher at the University of Oxford (bio) and maintains a prolific newsletter on energy and sustainability so she has a lot more credibility in this area than Andy or myself!

My sense is that a lot of climate-conscious people feel guilty about using ChatGPT. In fact it goes further: I think many people judge others for using it, because of the perceived environmental impact. [...]

But after looking at the data on individual use of LLMs, I have stopped worrying about it and I think you should too.

The inevitable counter-argument to the idea that the impact of ChatGPT usage by an individual is negligible is that aggregate user demand is still the thing that drives these enormous investments in huge data centers and new energy sources to power them. Hannah acknowledges that:

I am not saying that AI energy demand, on aggregate, is not a problem. It is, even if it’s “just” of a similar magnitude to the other sectors that we need to electrify, such as cars, heating, or parts of industry. It’s just that individuals querying chatbots is a relatively small part of AI's total energy consumption. That’s how both of these facts can be true at the same time.

Meanwhile Arthur Clune runs the numbers on the potential energy impact of some much more severe usage patterns.

Developers burning through $100 of tokens per day (not impossible given some of the LLM-heavy development patterns that are beginning to emerge) could end the year with the equivalent of a short haul flight or 600 mile car journey.

In the panopticon scenario where all 10 million security cameras in the UK analyze video through a vision LLM at one frame per second Arthur estimates we would need to duplicate the total usage of Birmingham, UK - the output of a 1GW nuclear plant.
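To get a feel for the scale, here's a back-of-envelope Python sketch using only the figures quoted above. It is not Arthur's own calculation. It just divides the 1GW figure evenly across every frame, which assumes the whole plant output goes to vision LLM inference.

```python
cameras = 10_000_000        # security cameras in the UK, per the estimate
frames_per_second = 1       # per camera
plant_output_watts = 1e9    # 1GW

frames_per_day = cameras * frames_per_second * 24 * 60 * 60

# Watts are joules per second, so dividing by frames per second gives joules per frame.
joules_per_frame = plant_output_watts / (cameras * frames_per_second)

print(f"{frames_per_day:,} frames per day")
print(f"{joules_per_frame:.0f} joules per frame")
```

That works out to 864 billion frames a day, with an implied budget of around 100 joules for each one.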

Let's not build that panopticon!

Gemini 2.5 Pro Preview: even better coding performance. New Gemini 2.5 Pro "Google I/O edition" model, released a few weeks ahead of that annual developer conference.

They claim even better frontend coding performance, highlighting their #1 ranking on the WebDev Arena leaderboard, notable because it knocked Claude 3.7 Sonnet from that top spot. They also highlight "state-of-the-art video understanding" with an 84.8% score on the new-to-me VideoMME benchmark.

I rushed out a new release of llm-gemini adding support for the new gemini-2.5-pro-preview-05-06 model ID, but it turns out if I had read to the end of their post I should not have bothered:

For developers already using Gemini 2.5 Pro, this new version will not only improve coding performance but will also address key developer feedback including reducing errors in function calling and improving function calling trigger rates. The previous iteration (03-25) now points to the most recent version (05-06), so no action is required to use the improved model

I'm not a fan of this idea that a model ID with a clear date in it like gemini-2.5-pro-preview-03-25 can suddenly start pointing to a brand new model!

I used the new Gemini 2.5 Pro to summarize the conversation about itself on Hacker News using the latest version of my hn-summary.sh script:

hn-summary.sh 43906018 -m gemini-2.5-pro-preview-05-06

Here's what I got back - 30,408 input tokens, 8,535 output tokens and 3,980 thinking tokens for a total cost of 16.316 cents.

8,535 output tokens is a lot. My system prompt includes the instruction to "Go long" - this is the first time I've seen a model really take that to heart. For comparison, here's the result of a similar experiment against the previous version of Gemini 2.5 Pro two months ago.
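The cost figure can be reproduced with a few lines of Python. The prices here are an assumption on my part, since the post doesn't state them: $1.25 per million input tokens and $10 per million output tokens, with thinking tokens billed at the output rate. Those numbers do reproduce the 16.316 cents quoted above.

```python
# Assumed prices in dollars per million tokens (not stated in the post).
INPUT_PRICE = 1.25
OUTPUT_PRICE = 10.00  # assumed to apply to thinking tokens as well


def cost_in_cents(input_tokens, output_tokens, thinking_tokens):
    """Total cost in cents, billing thinking tokens at the output rate."""
    dollars = (
        input_tokens * INPUT_PRICE
        + (output_tokens + thinking_tokens) * OUTPUT_PRICE
    ) / 1_000_000
    return dollars * 100


print(round(cost_in_cents(30_408, 8_535, 3_980), 3))
```

The thinking tokens matter here: under these assumed prices they account for roughly a quarter of the bill even though they never appear in the output.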

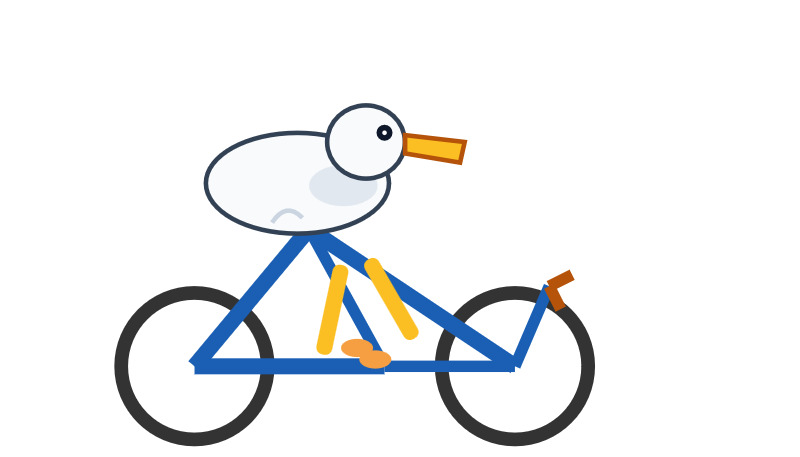

Update: The one time I forget to run my "Generate an SVG of a pelican riding a bicycle" test is the time that the model turns out to produce one of the best results I've seen yet!

Here's the transcript - 11 input tokens and 3,281 output tokens and 1,558 thinking tokens = 4.8404 cents.

I asked Gemini to describe that image:

llm -m gemini-2.5-pro-preview-05-06 \

-a https://static.simonwillison.net/static/2025/gemini-latest-pelican.jpg \

'describe image for alt text'

Here's what I got back. Gemini thought it had drawn a duck:

A cartoon illustration of a white duck with an orange beak riding a blue bicycle.

The duck has a large, oval white body and a smaller round head with a black dot eye. Its thin black wings act as arms, gripping the blue handlebars. One yellow-orange leg is visible, bent and pushing a grey pedal.

The bicycle has a blue frame with a distinctive cross-brace, a brown oval seat, and dark grey wheels with silver spokes. The entire image is set against a plain white background.

Feed a video to a vision LLM as a sequence of JPEG frames on the CLI (also LLM 0.25)

The new llm-video-frames plugin can turn a video file into a sequence of JPEG frames and feed them directly into a long context vision LLM such as GPT-4.1, even when that LLM doesn’t directly support video input. It depends on a plugin feature I added to LLM 0.25, which I released last night.

[... 1,600 words]

Qwen2.5 Omni: See, Hear, Talk, Write, Do It All! I'm not sure how I missed this one at the time, but last month (March 27th) Qwen released their first multi-modal model that can handle audio and video in addition to text and images - and that has audio output as a core model feature.

We propose Thinker-Talker architecture, an end-to-end multimodal model designed to perceive diverse modalities, including text, images, audio, and video, while simultaneously generating text and natural speech responses in a streaming manner. We propose a novel position embedding, named TMRoPE (Time-aligned Multimodal RoPE), to synchronize the timestamps of video inputs with audio.

Here's the Qwen2.5-Omni Technical Report PDF.

As far as I can tell nobody has an easy path to getting it working on a Mac yet (the closest report I saw was this comment on Hugging Face).

This release is notable because, while there's a pretty solid collection of open weight vision LLMs now, multi-modal models that go beyond that are still very rare. Like most of Qwen's recent models, Qwen2.5 Omni is released under an Apache 2.0 license.

Qwen 3 is expected to release within the next 24 hours or so. @jianxliao captured a screenshot of their Hugging Face collection, which they accidentally revealed before withdrawing it again. It suggests the new model will be available in 0.6B / 1.7B / 4B / 8B / 30B sizes. I'm particularly excited to try the 30B one - 22-30B has established itself as my favorite size range for running models on my 64GB M2 as it often delivers exceptional results while still leaving me enough memory to run other applications at the same time.

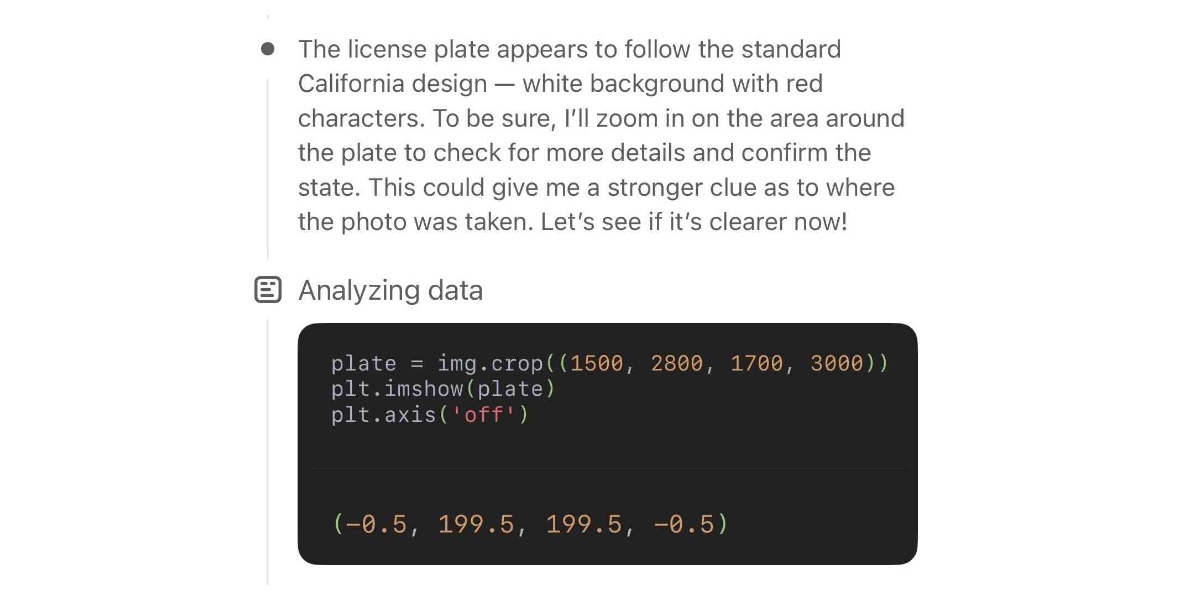

o3 Beats a Master-Level Geoguessr Player—Even with Fake EXIF Data. Sam Patterson (previously) puts his GeoGuessr ELO of 1188 (just short of the top champions division) to good use, exploring o3's ability to guess the location from a photo in a much more thorough way than my own experiment.

Over five rounds o3 narrowly beat him, guessing better than Sam in only 2/5 but with a higher score due to closer guesses in the ones that o3 won.

Even more interestingly, Sam experimented with feeding images with fake EXIF GPS locations to see if o3 (when reminded to use Python to read those tags) would fall for the trick. It spotted the ruse:

Those coordinates put you in suburban Bangkok, Thailand—obviously nowhere near the Andean coffee-zone scene in the photo. So either the file is a re-encoded Street View frame with spoofed/default metadata, or the camera that captured the screenshot had stale GPS information.

We've been seeing if the latest versions of LLMs are any better at geolocating and chronolocating images, and they've improved dramatically since we last tested them in 2023. [...]

Before anyone worries about it taking our job, I see it more as the difference between a hand whisk and an electric whisk, just the same job done quicker, and either way you've got to check if your peaks are stiff at the end of it.

— Eliot Higgins, Bellingcat

Watching o3 guess a photo’s location is surreal, dystopian and wildly entertaining

Watching OpenAI’s new o3 model guess where a photo was taken is one of those moments where decades of science fiction suddenly come to life. It’s a cross between the Enhance Button and Omniscient Database TV Tropes.

[... 1,582 words]

Image segmentation using Gemini 2.5

Max Woolf pointed out this new feature of the Gemini 2.5 series (here’s my coverage of 2.5 Pro and 2.5 Flash) in a comment on Hacker News:

[... 1,428 words]

I work for OpenAI. [...] o4-mini is actually a considerably better vision model than o3, despite the benchmarks. Similar to how o3-mini-high was a much better coding model than o1. I would recommend using o4-mini-high over o3 for any task involving vision.

— James Betker, OpenAI

GPT-4.1: Three new million token input models from OpenAI, including their cheapest model yet

OpenAI introduced three new models this morning: GPT-4.1, GPT-4.1 mini and GPT-4.1 nano. These are API-only models right now, not available through the ChatGPT interface (though you can try them out in OpenAI’s API playground). All three models can handle 1,047,576 tokens of input and 32,768 tokens of output, and all three have a May 31, 2024 cut-off date (their previous models were mostly September 2023).

[... 1,124 words]

Mistral Small 3.1 on Ollama. Mistral Small 3.1 (previously) is now available through Ollama, providing an easy way to run this multi-modal (vision) model on a Mac (and other platforms, though I haven't tried those myself).

I had to upgrade Ollama to the most recent version to get it to work - prior to that I got an Error: unable to load model message. Upgrades can be accessed through the Ollama macOS system tray icon.

I fetched the 15GB model by running:

ollama pull mistral-small3.1

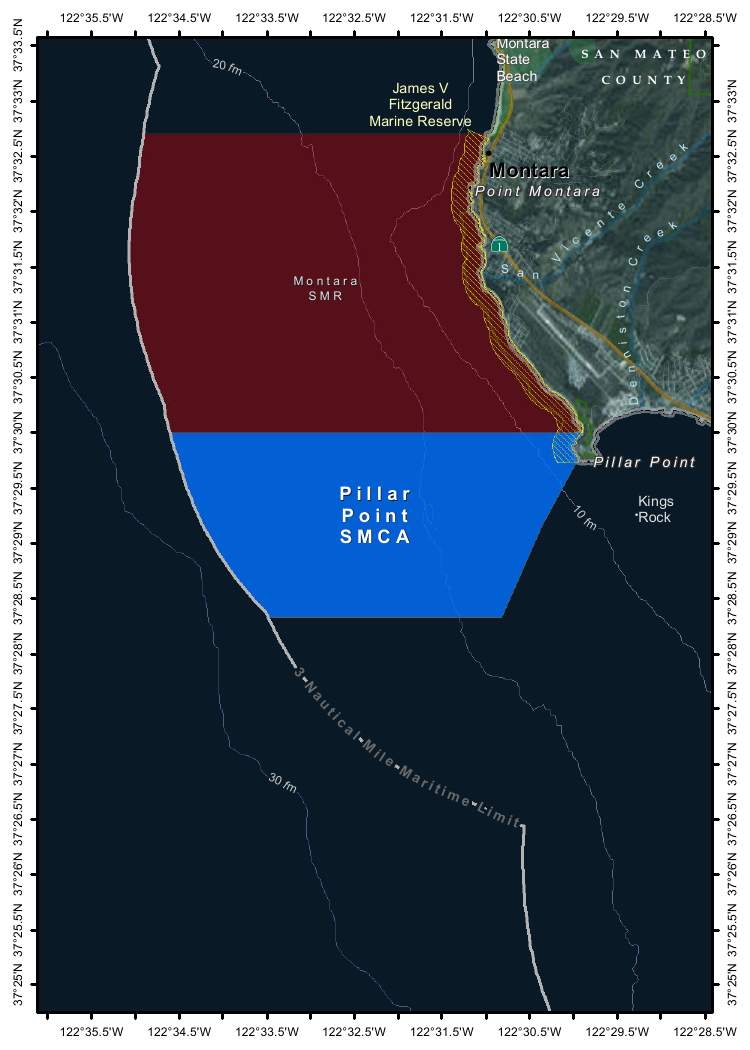

Then used llm-ollama to run prompts through it, including one to describe this image:

llm install llm-ollama

llm -m mistral-small3.1 'describe this image' -a https://static.simonwillison.net/static/2025/Mpaboundrycdfw-1.png

Here's the output. It's good, though not quite as impressive as the description I got from the slightly larger Qwen2.5-VL-32B.

I also tried it on a scanned (private) PDF of hand-written text with very good results, though it did misread one of the hand-written numbers.

Initial impressions of Llama 4

Dropping a model release as significant as Llama 4 on a weekend is plain unfair! So far the best place to learn about the new model family is this post on the Meta AI blog. They’ve released two new models today: Llama 4 Maverick is a 400B model (128 experts, 17B active parameters), text and image input with a 1 million token context length. Llama 4 Scout is 109B total parameters (16 experts, 17B active), also multi-modal and with a claimed 10 million token context length—an industry first.

[... 1,468 words]

Putting Gemini 2.5 Pro through its paces

There’s a new release from Google Gemini this morning: the first in the Gemini 2.5 series. Google call it “a thinking model, designed to tackle increasingly complex problems”. It’s already sat at the top of the LM Arena leaderboard, and from initial impressions looks like it may deserve that top spot.

[... 2,400 words]

Qwen2.5-VL-32B: Smarter and Lighter. The second big open weight LLM release from China today - the first being DeepSeek v3-0324.

Qwen's previous vision model was Qwen2.5 VL, released in January in 3B, 7B and 72B sizes.

Today's Apache 2.0 licensed release is a 32B model, which is quickly becoming my personal favourite model size - large enough to have GPT-4-class capabilities, but small enough that on my 64GB Mac there's still enough RAM for me to run other memory-hungry applications like Firefox and VS Code.

Qwen claim that the new model (when compared to their previous 2.5 VL family) can "align more closely with human preferences", is better at "mathematical reasoning" and provides "enhanced accuracy and detailed analysis in tasks such as image parsing, content recognition, and visual logic deduction".

They also offer some presumably carefully selected benchmark results showing it out-performing Gemma 3-27B, Mistral Small 3.1 24B and GPT-4o-0513 (there have been two more recent GPT-4o releases since that one, 2024-08-06 and 2024-11-20).

As usual, Prince Canuma had MLX versions of the models live within hours of the release, in 4 bit, 6 bit, 8 bit, and bf16 variants.

I ran the 4bit version (an 18GB model download) using uv and Prince's mlx-vlm like this:

uv run --with 'numpy<2' --with mlx-vlm \

python -m mlx_vlm.generate \

--model mlx-community/Qwen2.5-VL-32B-Instruct-4bit \

--max-tokens 1000 \

--temperature 0.0 \

--prompt "Describe this image." \

--image Mpaboundrycdfw-1.png

Here's the image:

And here's the resulting description, which I found to be very impressive:

This image appears to be a map highlighting specific areas along the coastline, likely in California, based on the geographical features and place names. Here is a detailed description of the image:

Geographic Features:

- The map shows a section of the coastline, with land on the right and the sea on the left.

- The land area includes parts of SanMateo County, as noted in the top-right corner.

- The coastline features several named locations, including:

- Point Montara

- Pillar Point

- Kings Rock

- VicenteCreek

- DennistonCreek

- State Beach

Highlighted Areas:

- The map contains two distinct areas marked with different colors:

  - Red Area (Top Section)

    - Labeled as "MONTARA" and "MONTARA M R" (possibly referring to a "Marine Reserves" or similar protected area).

    - This area is marked with a dark red color and extends from the coastline inland.

  - Blue Area (Bottom Section)

    - Labeled as "PILLAR" and "PILLAR P O I N T" along with "SMCA" (Special Management Area).

    - This area is marked with a blue color and is located closer to the coastline, including the area around "Pillar Point."

Depth Contours:

- The map includes depth markings in the sea, indicating the bathymetrical features:

  - 20 fathom (fm) line is marked in the upper-left part of the sea.

  - 10 fathom (fm) line is marked closer to the coastline.

  - 30 fathom (fm) line is marked further out to sea.

Other Features:

- State Beach: Marked near the top-right, indicating a protected recreational area.

- Kings Rock: A prominent feature near the coastline, likely a rocky outcropping.

- Creeks: The map shows several creeks, including VicenteCreek and DennistonCreek, which flow into the sea.

Protected Areas:

- The map highlights specific protected areas:

  - Marine Reserves:

    - "MONTARA M R" (Marine Reserves) in red.

  - Special Management Area (SMCA)

    - "PILLAR P O I N T" in blue, indicating a Special Management Area.

Grid and Coordinates:

- The map includes a grid with latitude and longitude markings:

  - Latitude ranges from approximately 37°25'N to 37°35'N.

  - Longitude ranges from approximately 122°22.5'W to 122°35.5'W.

Topography:

- The land area shows topographic features, including elevations and vegetation, with green areas indicating higher elevations or vegetated land.

Other Labels:

- "SMR": Likely stands for "State Managed Reserves."

- "SMCA": Likely stands for "Special Management Control Area."

In summary, this map highlights specific protected areas along the coastline, including a red "Marine Reserves" area and a blue "Special Management Area" near "Pillar Point." The map also includes depth markings, geographical features, and place names, providing a detailed view of the region's natural and protected areas.

It included the following runtime statistics:

Prompt: 1051 tokens, 111.985 tokens-per-sec

Generation: 760 tokens, 17.328 tokens-per-sec

Peak memory: 21.110 GB
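Those throughput figures translate into wall-clock time with a little arithmetic. The following is a minimal sketch, assuming each tokens-per-sec value is an average over its whole phase and ignoring model load time (which the statistics above do not report):

```python
# Figures copied from the mlx-vlm runtime statistics above.
prompt_tokens, prompt_tps = 1051, 111.985
generation_tokens, generation_tps = 760, 17.328


def seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time spent on one phase, given its token count and average throughput."""
    return tokens / tokens_per_sec


prompt_seconds = seconds(prompt_tokens, prompt_tps)
generation_seconds = seconds(generation_tokens, generation_tps)

print(f"Prompt processing: {prompt_seconds:.1f}s")
print(f"Generation:        {generation_seconds:.1f}s")
print(f"Total:             {prompt_seconds + generation_seconds:.1f}s")
```

Under those assumptions the prompt (mostly image tokens) takes roughly 9 seconds to process and the description roughly 44 seconds to generate.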

Mistral Small 3.1. Mistral Small 3 came out in January and was a notable, genuinely excellent local model that used an Apache 2.0 license.

Mistral Small 3.1 offers a significant improvement: it's multi-modal (images) and has an increased 128,000 token context length, while still "fitting within a single RTX 4090 or a 32GB RAM MacBook once quantized" (according to their model card). Mistral's own benchmarks show it outperforming Gemma 3 and GPT-4o Mini, but I haven't seen confirmation from external benchmarks.
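The "once quantized" caveat is easy to sanity-check. This is a rough sketch that counts only the bytes needed for the weights of a 24B parameter model; it deliberately ignores quantization metadata, the vision encoder, the KV cache and activations, so real memory use will be higher:

```python
def weight_gigabytes(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return parameters * bits_per_weight / 8 / 1e9


PARAMETERS = 24e9  # Mistral Small 3.1 is a 24B model

for label, bits in [("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: ~{weight_gigabytes(PARAMETERS, bits):.0f} GB")
```

By this estimate the unquantized bf16 weights (about 48 GB) would not fit in 32GB of RAM, while 8-bit (about 24 GB) is tight and 4-bit (about 12 GB) leaves room to spare.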

Despite their mention of a 32GB MacBook I haven't actually seen any quantized GGUF or MLX releases yet, which is a little surprising since they partnered with Ollama on launch day for their previous Mistral Small 3. I expect we'll see various quantized models released by the community shortly.

Update 20th March 2025: I've now run the text version on my laptop using mlx-community/Mistral-Small-3.1-Text-24B-Instruct-2503-8bit and llm-mlx:

llm mlx download-model mlx-community/Mistral-Small-3.1-Text-24B-Instruct-2503-8bit -a mistral-small-3.1

llm chat -m mistral-small-3.1

The model can be accessed via Mistral's La Plateforme API, which means you can use it via my llm-mistral plugin.

Here's the model describing my photo of two pelicans in flight:

llm install llm-mistral

# Run this if you have previously installed the plugin:

llm mistral refresh

llm -m mistral/mistral-small-2503 'describe' \
  -a https://static.simonwillison.net/static/2025/two-pelicans.jpg

The image depicts two brown pelicans in flight against a clear blue sky. Pelicans are large water birds known for their long bills and large throat pouches, which they use for catching fish. The birds in the image have long, pointed wings and are soaring gracefully. Their bodies are streamlined, and their heads and necks are elongated. The pelicans appear to be in mid-flight, possibly gliding or searching for food. The clear blue sky in the background provides a stark contrast, highlighting the birds' silhouettes and making them stand out prominently.

I added Mistral's API prices to my tools.simonwillison.net/llm-prices pricing calculator by pasting screenshots of Mistral's pricing tables into Claude.

Notes on Google’s Gemma 3

Google’s Gemma team released an impressive new model today (under their not-open-source Gemma license). Gemma 3 comes in four sizes—1B, 4B, 12B, and 27B—and while 1B is text-only the larger three models are all multi-modal for vision:

[... 804 words]

What’s new in the world of LLMs, for NICAR 2025

I presented two sessions at the NICAR 2025 data journalism conference this year. The first was this one based on my review of LLMs in 2024, extended by several months to cover everything that’s happened in 2025 so far. The second was a workshop on Cutting-edge web scraping techniques, which I’ve written up separately.

[... 2,797 words]

Mistral OCR (via) New closed-source specialist OCR model by Mistral - you can feed it images or a PDF and it produces Markdown with optional embedded images.

It's available via their API, or it's "available to self-host on a selective basis" for people with stringent privacy requirements who are willing to talk to their sales team.

I decided to try out their API, so I copied and pasted example code from their notebook into my custom Claude project and told it:

Turn this into a CLI app, depends on mistralai - it should take a file path and an optional API key defauling to env vironment called MISTRAL_API_KEY

After some further iteration / vibe coding I got to something that worked, which I then tidied up and shared as mistral_ocr.py.

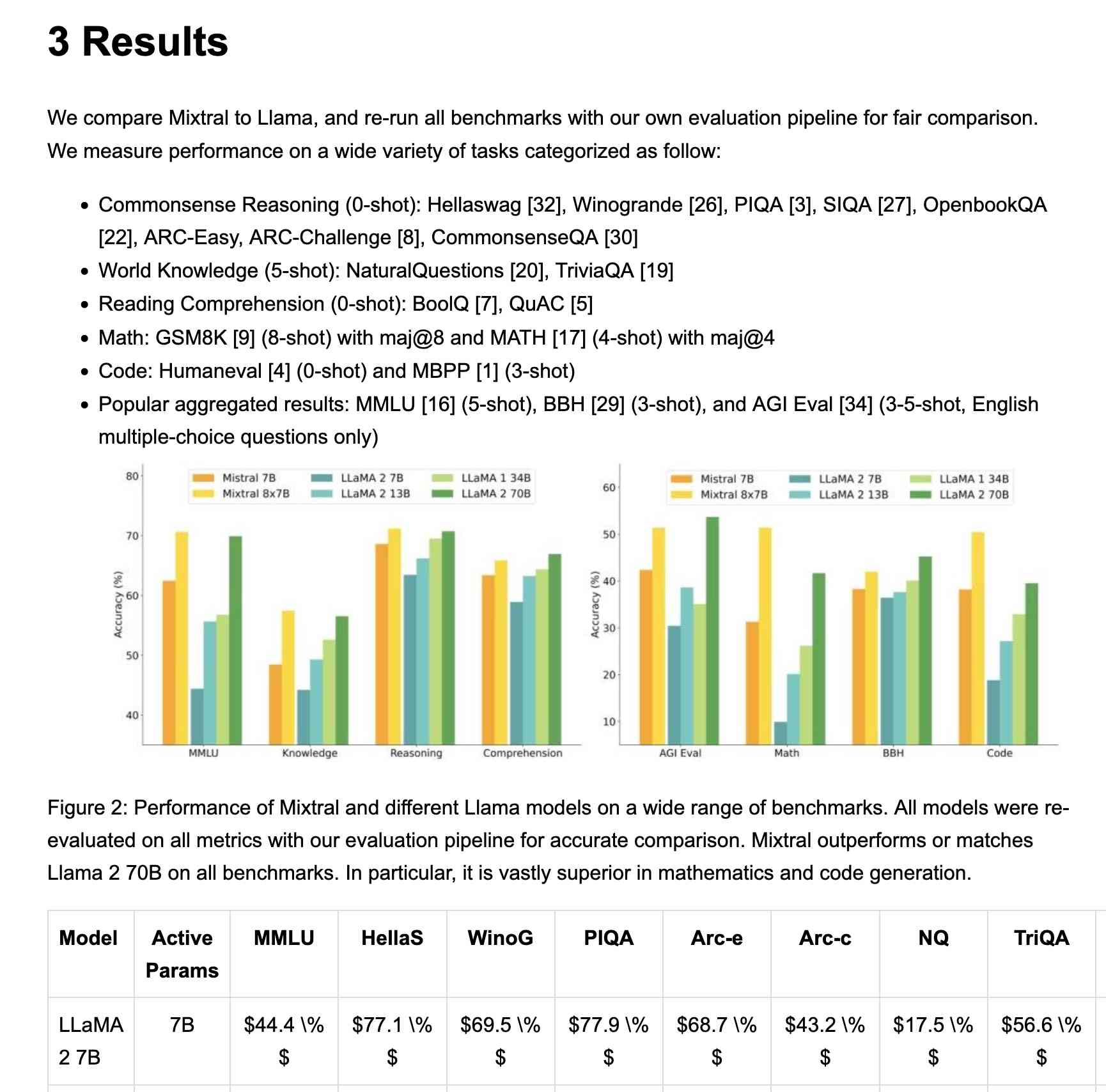

You can try it out like this:

export MISTRAL_API_KEY='...'

uv run http://tools.simonwillison.net/python/mistral_ocr.py \
  mixtral.pdf --html --inline-images > mixtral.html

I fed in the Mixtral paper as a PDF. The API returns Markdown, but my --html option renders that Markdown as HTML and the --inline-images option takes any images and inlines them as base64 URIs (inspired by monolith). The result is mixtral.html, a 972KB HTML file with images and text bundled together.
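Inlining an image as a base64 URI is a small amount of code. The following is an illustrative sketch of the general technique using only the standard library, not the code from mistral_ocr.py; the `to_data_uri` helper and the `figure-1.png` filename are hypothetical:

```python
import base64
import mimetypes


def to_data_uri(image_bytes: bytes, filename: str) -> str:
    """Encode image bytes as a data: URI suitable for an <img src="...">."""
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None:
        # Fall back to a generic type when the extension is unknown.
        mime_type = "application/octet-stream"
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


# Stand-in bytes (just the PNG signature); a real call would pass the
# full image data returned by the OCR API or read from disk.
uri = to_data_uri(b"\x89PNG\r\n\x1a\n", "figure-1.png")
print(f'<img src="{uri}">')
```

Base64 encoding makes the data about a third larger than the original bytes, which is the price paid for a single self-contained HTML file.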

This did a pretty great job!

My script renders Markdown tables but I haven't figured out how to render inline Markdown MathML yet. I ran the command a second time and requested Markdown output (the default) like this:

uv run http://tools.simonwillison.net/python/mistral_ocr.py \
  mixtral.pdf > mixtral.md

Here's that Markdown rendered as a Gist - there are a few MathML glitches so clearly the Mistral OCR MathML dialect and the GitHub Flavored Markdown dialect don't quite line up.

My tool can also output raw JSON as an alternative to Markdown or HTML - full details in the documentation.

The Mistral API is priced at roughly 1000 pages per dollar, with a 50% discount for batch usage.
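To put that pricing in context, here is a small estimate based only on the two figures quoted above (roughly 1000 pages per dollar, 50% off for batch usage). The `ocr_cost` helper is hypothetical, and actual bills will depend on Mistral's current price list:

```python
PAGES_PER_DOLLAR = 1000  # "roughly 1000 pages per dollar"
BATCH_DISCOUNT = 0.5     # 50% discount for batch usage


def ocr_cost(pages: int, batch: bool = False) -> float:
    """Estimated cost in dollars for OCRing the given number of pages."""
    cost = pages / PAGES_PER_DOLLAR
    if batch:
        cost *= 1 - BATCH_DISCOUNT
    return cost


for pages in (100, 10_000, 1_000_000):
    print(
        f"{pages:>9,} pages: ${ocr_cost(pages):,.2f} standard, "
        f"${ocr_cost(pages, batch=True):,.2f} batch"
    )
```

At these rates a million pages comes to about $1,000, or about $500 through the batch API.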

The big question with LLM-based OCR is always how well it copes with accidental instructions in the text (can you safely OCR a document full of prompting examples?) and how well it handles text it can't read.

Mistral's Sophia Yang says it "should be robust" against following instructions in the text, and invited people to try and find counter-examples.

Alexander Doria noted that Mistral OCR can hallucinate text when faced with handwriting that it cannot understand.

llm-ollama 0.9.0.

This release of the llm-ollama plugin adds support for schemas, thanks to a PR by Adam Compton.

Ollama provides very robust support for this pattern thanks to their structured outputs feature, which works across all of the models that they support by intercepting the logic that outputs the next token and restricting it to only tokens that would be valid in the context of the provided schema.
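The token-restriction idea can be shown with a toy example. This is a deliberately simplified sketch, not Ollama's implementation: the vocabulary, the hand-written grammar and the `fake_model_scores` function are all made up for illustration, whereas a real system derives the set of allowed tokens from the JSON schema and applies it to the model's actual logits.

```python
import math

VOCAB = ["{", "}", '"count"', ":", "1", "2", "two", "pelicans"]

# A tiny hand-written grammar for objects shaped like {"count": <digit>}.
# Entry N is the set of tokens allowed as the Nth token of the output.
ALLOWED_AT_STEP = [
    {"{"},
    {'"count"'},
    {":"},
    {"1", "2"},
    {"}"},
]


def fake_model_scores(prefix: list[str]) -> dict[str, float]:
    """Stand-in for a real model: it prefers chatty tokens over valid JSON."""
    preferences = {"pelicans": 3.0, "two": 2.5, "2": 2.0, "1": 1.0}
    return {token: preferences.get(token, 0.0) for token in VOCAB}


def constrained_decode() -> str:
    output: list[str] = []
    for allowed in ALLOWED_AT_STEP:
        scores = fake_model_scores(output)
        # Mask every token the grammar forbids at this position, then
        # greedily pick the best-scoring token that remains.
        masked = {
            token: (score if token in allowed else -math.inf)
            for token, score in scores.items()
        }
        output.append(max(masked, key=masked.get))
    return "".join(output)


unconstrained_scores = fake_model_scores([])
print(max(unconstrained_scores, key=unconstrained_scores.get))  # pelicans
print(constrained_decode())  # {"count":2}
```

Left to itself the toy model would start with "pelicans"; with the mask applied it can only ever produce output that matches the grammar, while still choosing its preferred option ("2" over "1") wherever the grammar allows a choice.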

With Ollama and llm-ollama installed you can even run structured schemas against vision prompts for local models. Here's one against Ollama's llama3.2-vision:

llm -m llama3.2-vision:latest \
  'describe images' \
  --schema 'species,description,count int' \
  -a https://static.simonwillison.net/static/2025/two-pelicans.jpg

I got back this:

{
  "species": "Pelicans",
  "description": "The image features a striking brown pelican with its distinctive orange beak, characterized by its large size and impressive wingspan.",
  "count": 1
}

(Actually a bit disappointing, as there are two pelicans and their beaks are brown.)
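The concise `'species,description,count int'` string is shorthand for a JSON schema. The sketch below is an illustrative re-implementation of that expansion, not LLM's own parser: it assumes comma-separated fields, an optional type after each name, and a default type of string, and it ignores any other features the real syntax may support.

```python
import json

# Assumed mapping from short type names to JSON schema types.
TYPE_NAMES = {"str": "string", "int": "integer", "float": "number", "bool": "boolean"}


def expand_schema(concise: str) -> dict:
    """Expand 'name type, name type' shorthand into a JSON schema dict."""
    properties = {}
    for field in concise.split(","):
        parts = field.split()
        name = parts[0]
        type_name = parts[1] if len(parts) > 1 else "str"
        properties[name] = {"type": TYPE_NAMES[type_name]}
    return {
        "type": "object",
        "properties": properties,
        "required": list(properties),
    }


schema = expand_schema("species,description,count int")
print(json.dumps(schema, indent=2))

# The response above, with the long description abbreviated for this example.
response = {"species": "Pelicans", "description": "...", "count": 1}
assert set(response) == set(schema["required"])
assert isinstance(response["count"], int)
```

The response satisfies the schema even though the count is wrong: a schema constrains the shape of the output, not its accuracy.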

olmOCR (via) New from Ai2 - olmOCR is "an open-source tool designed for high-throughput conversion of PDFs and other documents into plain text while preserving natural reading order".

At its core is allenai/olmOCR-7B-0225-preview, a Qwen2-VL-7B-Instruct variant trained on ~250,000 pages of diverse PDF content (both scanned and text-based) that were labelled using GPT-4o and made available as the olmOCR-mix-0225 dataset.

The olmocr Python library can run the model on any "recent NVIDIA GPU". I haven't managed to run it on my own Mac yet - there are GGUFs out there but it's not clear to me how to run vision prompts through them - but Ai2 offer an online demo which can handle up to ten pages for free.

Given the right hardware this looks like a very inexpensive way to run large scale document conversion projects:

We carefully optimized our inference pipeline for large-scale batch processing using SGLang, enabling olmOCR to convert one million PDF pages for just $190 - about 1/32nd the cost of using GPT-4o APIs.
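Those two numbers imply a few others. A quick back-of-envelope calculation, using only the figures in the quote and treating "about 1/32nd" as an exact ratio:

```python
OLMOCR_DOLLARS_PER_MILLION_PAGES = 190  # from the quote above
GPT4O_COST_MULTIPLIER = 32              # olmOCR is "about 1/32nd the cost"

cost_per_page = OLMOCR_DOLLARS_PER_MILLION_PAGES / 1_000_000
pages_per_dollar = 1_000_000 / OLMOCR_DOLLARS_PER_MILLION_PAGES
implied_gpt4o_cost = OLMOCR_DOLLARS_PER_MILLION_PAGES * GPT4O_COST_MULTIPLIER

print(f"olmOCR cost per page:          ${cost_per_page:.5f}")
print(f"olmOCR pages per dollar:       {pages_per_dollar:,.0f}")
print(f"Implied GPT-4o cost (1M pages): ${implied_gpt4o_cost:,}")
```

That works out to a little over 5,000 pages per dollar, and an implied GPT-4o bill of around $6,000 for the same million pages.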

The most interesting idea from the technical report (PDF) is something they call "document anchoring":

Document anchoring extracts coordinates of salient elements in each page (e.g., text blocks and images) and injects them alongside raw text extracted from the PDF binary file. [...]

Document anchoring processes PDF document pages via the PyPDF library to extract a representation of the page’s structure from the underlying PDF. All of the text blocks and images in the page are extracted, including position information. Starting with the most relevant text blocks and images, these are sampled and added to the prompt of the VLM, up to a defined maximum character limit. This extra information is then available to the model when processing the document.

![Left side shows a green-header interface with coordinates like [150x220]√3x−1+(1+x)², [150x180]Section 6, [150x50]Lorem ipsum dolor sit amet, [150x70]consectetur adipiscing elit, sed do, [150x90]eiusmod tempor incididunt ut, [150x110]labore et dolore magna aliqua, [100x280]Table 1, followed by grid coordinates with A, B, C, AA, BB, CC, AAA, BBB, CCC values. Right side shows the rendered document with equation, text and table.](https://static.simonwillison.net/static/2025/olmocr-document-anchoring.jpg)
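The prompt-building half of document anchoring can be sketched in a few lines. This is a simplified illustration, not olmOCR's actual code: the `Element` class and `build_anchor_text` function are hypothetical, the elements are assumed to be already extracted and ordered by relevance, and the `[XxY]text` line format is borrowed from the illustration above.

```python
from dataclasses import dataclass


@dataclass
class Element:
    x: int
    y: int
    text: str  # extracted text for a block, or a placeholder for an image


def build_anchor_text(elements: list[Element], max_chars: int) -> str:
    """Join position-tagged elements until the character budget is used up."""
    lines = []
    used = 0
    for element in elements:
        line = f"[{element.x}x{element.y}]{element.text}"
        # Budget one extra character per line for the newline separator.
        if used + len(line) + 1 > max_chars:
            break
        lines.append(line)
        used += len(line) + 1
    return "\n".join(lines)


elements = [
    Element(150, 180, "Section 6"),
    Element(150, 50, "Lorem ipsum dolor sit amet,"),
    Element(100, 280, "Table 1"),
]

print(build_anchor_text(elements, max_chars=60))
```

With a budget of 60 characters only the first two elements fit, so "Table 1" is dropped. The resulting text would then be placed in the prompt alongside the rendered page image.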

The one limitation of olmOCR at the moment is that it doesn't appear to do anything with diagrams, figures or illustrations. Vision models are actually very good at interpreting these now, so my ideal OCR solution would include detailed automated descriptions of this kind of content in the resulting text.

Update: Jonathan Soma figured out how to run it on a Mac using LM Studio and the olmocr Python package.

Llama 4 is making great progress in training. Llama 4 mini is done with pre-training and our reasoning models and larger model are looking good too. Our goal with Llama 3 was to make open source competitive with closed models, and our goal for Llama 4 is to lead. Llama 4 will be natively multimodal -- it's an omni-model -- and it will have agentic capabilities, so it's going to be novel and it's going to unlock a lot of new use cases.

— Mark Zuckerberg, on Meta's quarterly earnings report