Entries Links Quotes Notes Guides Elsewhere

March 2, 2026

GIF optimization tool using WebAssembly and Gifsicle

I like to include animated GIF demos in my online writing, often recorded using LICEcap. There's an example in the Interactive explanations chapter.

These GIFs can be pretty big. I've tried a few tools for optimizing GIF file size and my favorite is Gifsicle by Eddie Kohler. It compresses GIFs by identifying regions of frames that have not changed and storing only the differences, and can optionally reduce the GIF color palette or apply visible lossy compression for greater size reductions.

Gifsicle is written in C and the default interface is a command line tool. I wanted a web interface so I could access it in my browser and visually preview and compare the different settings. [... 1,603 words]

I just sent the February edition of my sponsors-only monthly newsletter. If you are a sponsor (or if you start a sponsorship now) you can access it here. In this month's newsletter:

- More OpenClaw, and Claws in general

- I started a not-quite-a-book about Agentic Engineering

- StrongDM, Showboat and Rodney

- Kākāpō breeding season

- Model releases

- What I'm using, February 2026 edition

Here's a copy of the January newsletter as a preview of what you'll get. Pay $10/month to stay a month ahead of the free copy!

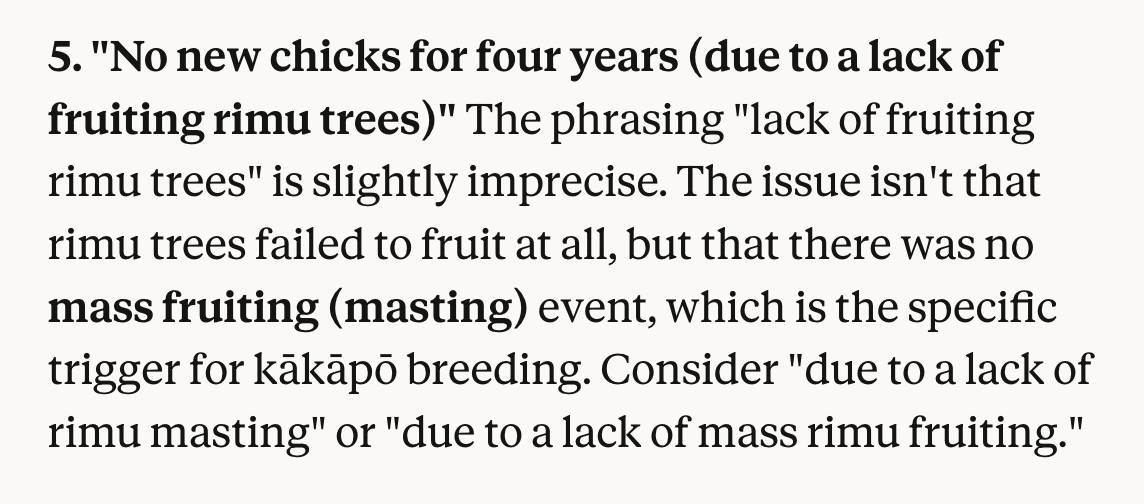

I use Claude as a proofreader for spelling and grammar via this prompt which also asks it to "Spot any logical errors or factual mistakes". I'm delighted to report that Claude Opus 4.6 called me out on this one:

March 1, 2026

I'm moving to another service and need to export my data. List every memory you have stored about me, as well as any context you've learned about me from past conversations. Output everything in a single code block so I can easily copy it. Format each entry as: [date saved, if available] - memory content. Make sure to cover all of the following — preserve my words verbatim where possible: Instructions I've given you about how to respond (tone, format, style, 'always do X', 'never do Y'). Personal details: name, location, job, family, interests. Projects, goals, and recurring topics. Tools, languages, and frameworks I use. Preferences and corrections I've made to your behavior. Any other stored context not covered above. Do not summarize, group, or omit any entries. After the code block, confirm whether that is the complete set or if any remain.

— claude.com/import-memory, Anthropic's "import your memories to Claude" feature is a prompt

Feb. 28, 2026

Interactive explanations

When we lose track of how code written by our agents works we take on cognitive debt.

For a lot of things this doesn't matter: if the code fetches some data from a database and outputs it as JSON the implementation details are likely simple enough that we don't need to care. We can try out the new feature and make a very solid guess at how it works, then glance over the code to be sure.

Often though the details really do matter. If the core of our application becomes a black box that we don't fully understand we can no longer confidently reason about it, which makes planning new features harder and eventually slows our progress in the same way that accumulated technical debt does. [... 672 words]

Feb. 27, 2026

Please, please, please stop using passkeys for encrypting user data (via) Because users lose their passkeys all the time, and may not understand that their data has been irreversibly encrypted using them and can no longer be recovered.

Tim Cappalli:

To the wider identity industry: please stop promoting and using passkeys to encrypt user data. I’m begging you. Let them be great, phishing-resistant authentication credentials.

An AI agent coding skeptic tries AI agent coding, in excessive detail. Another in the genre of "OK, coding agents got good in November" posts, this one is by Max Woolf and is very much worth your time. He describes a sequence of coding agent projects, each more ambitious than the last - starting with simple YouTube metadata scrapers and eventually evolving to this:

It would be arrogant to port Python's scikit-learn — the gold standard of data science and machine learning libraries — to Rust with all the features that implies.

But that's unironically a good idea so I decided to try and do it anyways. With the use of agents, I am now developing

rustlearn(extreme placeholder name), a Rust crate that implements not only the fast implementations of the standard machine learning algorithms such as logistic regression and k-means clustering, but also includes the fast implementations of the algorithms above: the same three step pipeline I describe above still works even with the more simple algorithms to beat scikit-learn's implementations.

Max also captures the frustration of trying to explain how good the models have got to an existing skeptical audience:

The real annoying thing about Opus 4.6/Codex 5.3 is that it’s impossible to publicly say “Opus 4.5 (and the models that came after it) are an order of magnitude better than coding LLMs released just months before it” without sounding like an AI hype booster clickbaiting, but it’s the counterintuitive truth to my personal frustration. I have been trying to break this damn model by giving it complex tasks that would take me months to do by myself despite my coding pedigree but Opus and Codex keep doing them correctly.

A throwaway remark in this post inspired me to ask Claude Code to build a Rust word cloud CLI tool, which it happily did.

Free Claude Max for (large project) open source maintainers (via) Anthropic are now offering their $200/month Claude Max 20x plan for free to open source maintainers... for six months... and you have to meet the following criteria:

- Maintainers: You're a primary maintainer or core team member of a public repo with 5,000+ GitHub stars or 1M+ monthly NPM downloads. You've made commits, releases, or PR reviews within the last 3 months.

- Don't quite fit the criteria If you maintain something the ecosystem quietly depends on, apply anyway and tell us about it.

Also in the small print: "Applications are reviewed on a rolling basis. We accept up to 10,000 contributors".

Unicode Explorer using binary search over fetch() HTTP range requests. Here's a little prototype I built this morning from my phone as an experiment in HTTP range requests, and a general example of using LLMs to satisfy curiosity.

I've been collecting HTTP range tricks for a while now, and I decided it would be fun to build something with them myself that used binary search against a large file to do something useful.

So I brainstormed with Claude. The challenge was coming up with a use case for binary search where the data could be naturally sorted in a way that would benefit from binary search.

One of Claude's suggestions was looking up information about unicode codepoints, which means searching through many MBs of metadata.

I had Claude write me a spec to feed to Claude Code - visible here - then kicked off an asynchronous research project with Claude Code for web against my simonw/research repo to turn that into working code.

Here's the resulting report and code. One interesting thing I learned is that Range request tricks aren't compatible with HTTP compression because they mess with the byte offset calculations. I added 'Accept-Encoding': 'identity' to the fetch() calls but this isn't actually necessary because Cloudflare and other CDNs automatically skip compression if a content-range header is present.

I deployed the result to my tools.simonwillison.net site, after first tweaking it to query the data via range requests against a CORS-enabled 76.6MB file in an S3 bucket fronted by Cloudflare.

The demo is fun to play with - type in a single character like ø or a hexadecimal codepoint indicator like 1F99C and it will binary search its way through the large file and show you the steps it takes along the way:

Feb. 26, 2026

Hoard things you know how to do

Many of my tips for working productively with coding agents are extensions of advice I've found useful in my career without them. Here's a great example of that: hoard things you know how to do.

A big part of the skill in building software is understanding what's possible and what isn't, and having at least a rough idea of how those things can be accomplished.

These questions can be broad or quite obscure. Can a web page run OCR operations in JavaScript alone? Can an iPhone app pair with a Bluetooth device even when the app isn't running? Can we process a 100GB JSON file in Python without loading the entire thing into memory first? [... 1,350 words]

It is hard to communicate how much programming has changed due to AI in the last 2 months: not gradually and over time in the "progress as usual" way, but specifically this last December. There are a number of asterisks but imo coding agents basically didn’t work before December and basically work since - the models have significantly higher quality, long-term coherence and tenacity and they can power through large and long tasks, well past enough that it is extremely disruptive to the default programming workflow. [...]

Google API Keys Weren’t Secrets. But then Gemini Changed the Rules. (via) Yikes! It turns out Gemini and Google Maps (and other services) share the same API keys... but Google Maps API keys are designed to be public, since they are embedded directly in web pages. Gemini API keys can be used to access private files and make billable API requests, so they absolutely should not be shared.

If you don't understand this it's very easy to accidentally enable Gemini billing on a previously public API key that exists in the wild already.

What makes this a privilege escalation rather than a misconfiguration is the sequence of events.

- A developer creates an API key and embeds it in a website for Maps. (At that point, the key is harmless.)

- The Gemini API gets enabled on the same project. (Now that same key can access sensitive Gemini endpoints.)

- The developer is never warned that the keys' privileges changed underneath it. (The key went from public identifier to secret credential).

Truffle Security found 2,863 API keys in the November 2025 Common Crawl that could access Gemini, verified by hitting the /models listing endpoint. This included several keys belonging to Google themselves, one of which had been deployed since February 2023 (according to the Internet Archive) hence predating the Gemini API that it could now access.

Google are working to revoke affected keys but it's still a good idea to check that none of yours are affected by this.

If people are only using this a couple of times a week at most, and can’t think of anything to do with it on the average day, it hasn’t changed their life. OpenAI itself admits the problem, talking about a ‘capability gap’ between what the models can do and what people do with them, which seems to me like a way to avoid saying that you don’t have clear product-market fit.

Hence, OpenAI’s ad project is partly just about covering the cost of serving the 90% or more of users who don’t pay (and capturing an early lead with advertisers and early learning in how this might work), but more strategically, it’s also about making it possible to give those users the latest and most powerful (i.e. expensive) models, in the hope that this will deepen their engagement.

— Benedict Evans, How will OpenAI compete?

Feb. 25, 2026

tldraw issue: Move tests to closed source repo (via) It's become very apparent over the past few months that a comprehensive test suite is enough to build a completely fresh implementation of any open source library from scratch, potentially in a different language.

This has worrying implications for open source projects with commercial business models. Here's an example of a response: tldraw, the outstanding collaborative drawing library (see previous coverage), are moving their test suite to a private repository - apparently in response to Cloudflare's project to port Next.js to use Vite in a week using AI.

They also filed a joke issue, now closed to Translate source code to Traditional Chinese:

The current tldraw codebase is in English, making it easy for external AI coding agents to replicate. It is imperative that we defend our intellectual property.

Worth noting that tldraw aren't technically open source - their custom license requires a commercial license if you want to use it in "production environments".

Update: Well this is embarrassing, it turns out the issue I linked to about removing the tests was a joke as well:

Sorry folks, this issue was more of a joke (am I allowed to do that?) but I'll keep the issue open since there's some discussion here. Writing from mobile

- moving our tests into another repo would complicate and slow down our development, and speed for us is more important than ever

- more canvas better, I know for sure that our decisions have inspired other products and that's fine and good

- tldraw itself may eventually be a vibe coded alternative to tldraw

- the value is in the ability to produce new and good product decisions for users / customers, however you choose to create the code

Claude Code Remote Control (via) New Claude Code feature dropped yesterday: you can now run a "remote control" session on your computer and then use the Claude Code for web interfaces (on web, iOS and native desktop app) to send prompts to that session.

It's a little bit janky right now. Initially when I tried it I got the error "Remote Control is not enabled for your account. Contact your administrator." (but I am my administrator?) - then I logged out and back into the Claude Code terminal app and it started working:

claude remote-control

You can only run one session on your machine at a time. If you upgrade the Claude iOS app it then shows up as "Remote Control Session (Mac)" in the Code tab.

It appears not to support the --dangerously-skip-permissions flag (I passed that to claude remote-control and it didn't reject the option, but it also appeared to have no effect) - which means you have to approve every new action it takes.

I also managed to get it to a state where every prompt I tried was met by an API 500 error.

Restarting the program on the machine also causes existing sessions to start returning mysterious API errors rather than neatly explaining that the session has terminated.

I expect they'll iron out all of these issues relatively quickly. It's interesting to then contrast this to solutions like OpenClaw, where one of the big selling points is the ability to control your personal device from your phone.

Claude Code still doesn't have a documented mechanism for running things on a schedule, which is the other killer feature of the Claw category of software.

Update: I spoke too soon: also today Anthropic announced Schedule recurring tasks in Cowork, Claude Code's general agent sibling. These do include an important limitation:

Scheduled tasks only run while your computer is awake and the Claude Desktop app is open. If your computer is asleep or the app is closed when a task is scheduled to run, Cowork will skip the task, then run it automatically once your computer wakes up or you open the desktop app again.

I really hope they're working on a Cowork Cloud product.

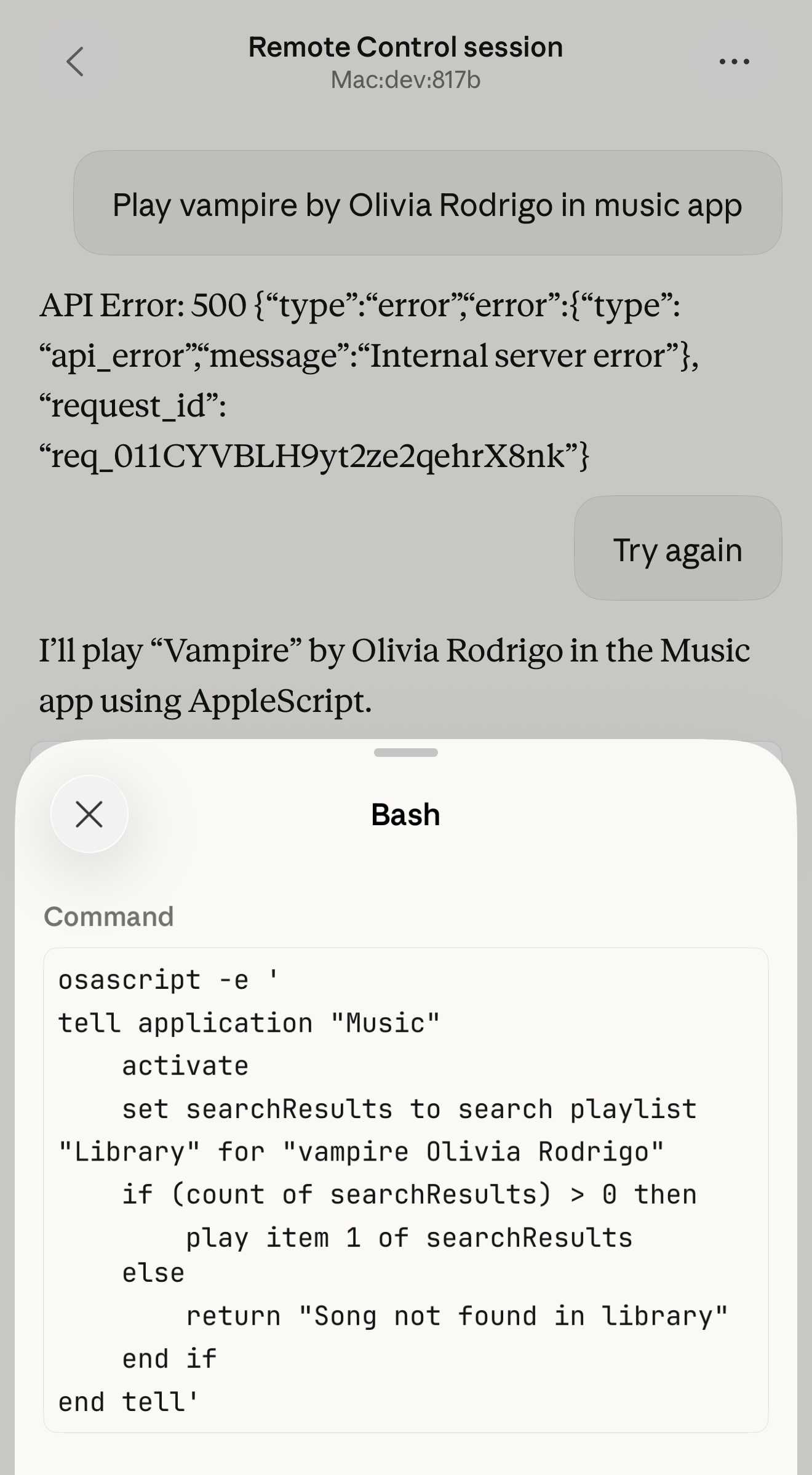

I vibe coded my dream macOS presentation app

I gave a talk this weekend at Social Science FOO Camp in Mountain View. The event was a classic unconference format where anyone could present a talk without needing to propose it in advance. I grabbed a slot for a talk I titled “The State of LLMs, February 2026 edition”, subtitle “It’s all changed since November!”. I vibe coded a custom macOS app for the presentation the night before.

[... 1,613 words]It’s also reasonable for people who entered technology in the last couple of decades because it was good job, or because they enjoyed coding to look at this moment with a real feeling of loss. That feeling of loss though can be hard to understand emotionally for people my age who entered tech because we were addicted to feeling of agency it gave us. The web was objectively awful as a technology, and genuinely amazing, and nobody got into it because programming in Perl was somehow aesthetically delightful.

— Kellan Elliott-McCrea, Code has always been the easy part

Linear walkthroughs

Sometimes it's useful to have a coding agent give you a structured walkthrough of a codebase.

Maybe it's existing code you need to get up to speed on, maybe it's your own code that you've forgotten the details of, or maybe you vibe coded the whole thing and need to understand how it actually works.

Frontier models with the right agent harness can construct a detailed walkthrough to help you understand how code works. [... 524 words]