15 items tagged “gemini”

2024

Chrome Prompt Playground.

Google Chrome Canary is currently shipping an experimental on-device LLM, in the form of Gemini Nano. You can access it via the new window.ai API, after first enabling the "Prompt API for Gemini Nano" experiment in chrome://flags (and then waiting an indeterminate amount of time for the ~1.7GB model file to download - I eventually spotted it in ~/Library/Application Support/Google/Chrome Canary/OptGuideOnDeviceModel).

I got Claude 3.5 Sonnet to build me this playground interface for experimenting with the model. You can execute prompts, stream the responses and all previous prompts and responses are stored in localStorage.

Here's the full Sonnet transcript, and the final source code for the app.

The best documentation I've found for the new API is is explainers-by-googlers/prompt-api on GitHub.

PDF to Podcast (via) At first glance this project by Stephan Fitzpatrick is a cute demo of a terrible sounding idea... but then I tried it out and the results are weirdly effective. You can listen to a fake podcast version of the transformers paper, or upload your own PDF (with your own OpenAI API key) to make your own.

It's open source (Apache 2) so I had a poke around in the code. It gets a lot done with a single 180 line Python script.

When I'm exploring code like this I always jump straight to the prompt - it's quite long, and starts like this:

Your task is to take the input text provided and turn it into an engaging, informative podcast dialogue. The input text may be messy or unstructured, as it could come from a variety of sources like PDFs or web pages. Don't worry about the formatting issues or any irrelevant information; your goal is to extract the key points and interesting facts that could be discussed in a podcast. [...]

So I grabbed a copy of it and pasted in my blog entry about WWDC, which produced this result when I ran it through Gemini Flash using llm-gemini:

cat prompt.txt | llm -m gemini-1.5-flash-latest

Then I piped the result through my ospeak CLI tool for running text-to-speech with the OpenAI TTS models (after truncating to 690 tokens with ttok because it turned out to be slightly too long for the API to handle):

llm logs --response | ttok -t 690 | ospeak -s -o wwdc-auto-podcast.mp3

And here's the result (3.9MB 3m14s MP3).

It's not as good as the PDF-to-Podcast version because Stephan has some really clever code that uses different TTS voices for each of the characters in the transcript, but it's still a surprisingly fun way of repurposing text from my blog. I enjoyed listening to it while I was cooking dinner.

Contrast [Apple Intelligence] to what OpenAI is trying to accomplish with its GPT models, or Google with Gemini, or Anthropic with Claude: those large language models are trying to incorporate all of the available public knowledge to know everything; it’s a dramatically larger and more difficult problem space, which is why they get stuff wrong. There is also a lot of stuff that they don’t know because that information is locked away — like all of the information on an iPhone.

Understand errors and warnings better with Gemini (via) As part of Google's Gemini-in-everything strategy, Chrome DevTools now includes an opt-in feature for passing error messages in the JavaScript console to Gemini for an explanation, via a lightbulb icon.

Amusingly, this documentation page includes a warning about prompt injection:

Many of LLM applications are susceptible to a form of abuse known as prompt injection. This feature is no different. It is possible to trick the LLM into accepting instructions that are not intended by the developers.

They include a screenshot of a harmless example, but I'd be interested in hearing if anyone has a theoretical attack that could actually cause real damage here.

Context caching for Google Gemini (via) Another new Gemini feature announced today. Long context models enable answering questions against large chunks of text, but the price of those long prompts can be prohibitive—$3.50/million for Gemini Pro 1.5 up to 128,000 tokens and $7/million beyond that.

Context caching offers a price optimization, where the long prefix prompt can be reused between requests, halving the cost per prompt but at an additional cost of $4.50 / 1 million tokens per hour to keep that context cache warm.

Given that hourly extra charge this isn’t a default optimization for all cases, but certain high traffic applications might be able to save quite a bit on their longer prompt systems.

It will be interesting to see if other vendors such as OpenAI and Anthropic offer a similar optimization in the future.

llm-gemini 0.1a4.

A new release of my llm-gemini plugin adding support for the Gemini 1.5 Flash model that was revealed this morning at Google I/O.

I'm excited about this new model because of its low price. Flash is $0.35 per 1 million tokens for prompts up to 128K token and $0.70 per 1 million tokens for longer prompts - up to a million tokens now and potentially two million at some point in the future. That's 1/10th of the price of Gemini Pro 1.5, cheaper than GPT 3.5 ($0.50/million) and only a little more expensive than Claude 3 Haiku ($0.25/million).

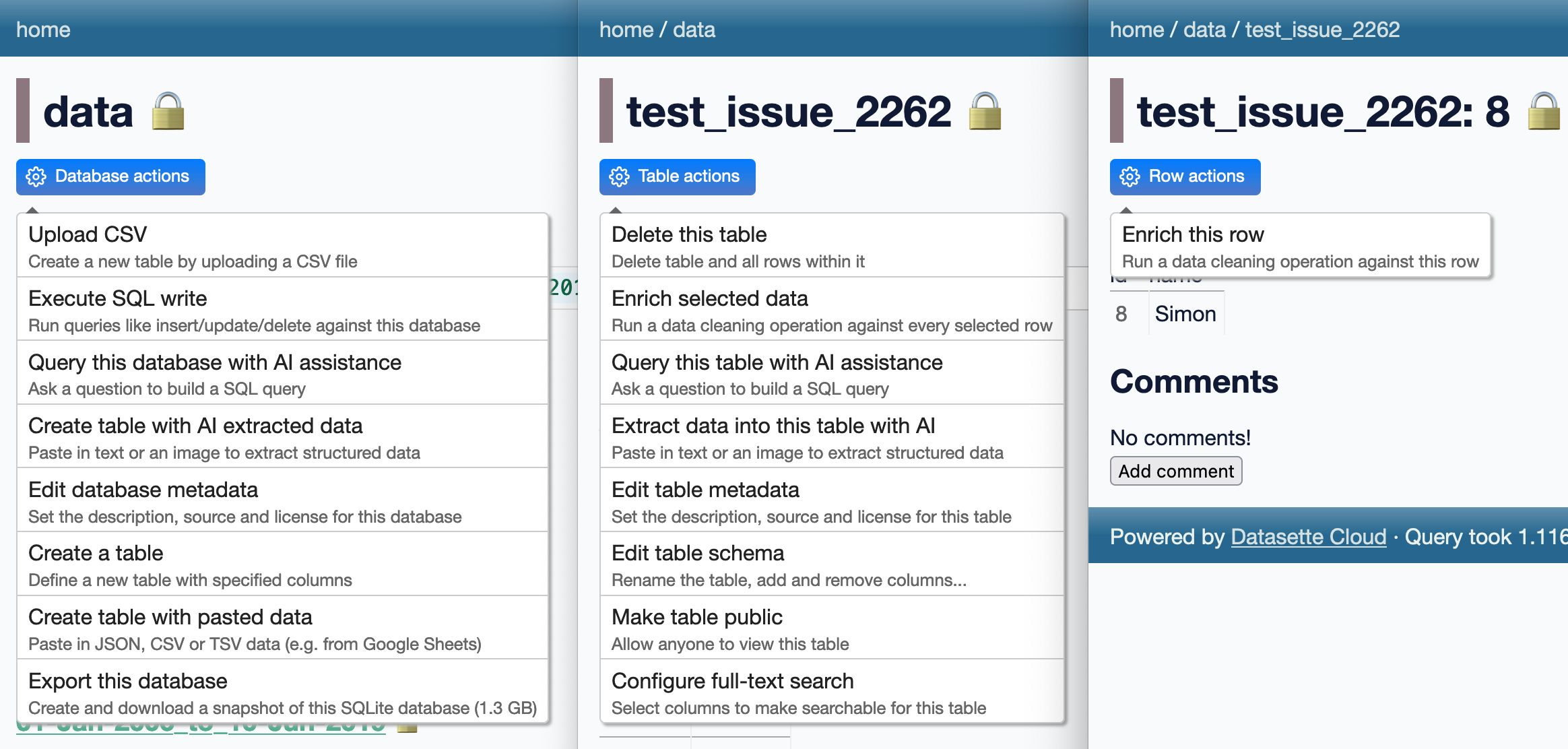

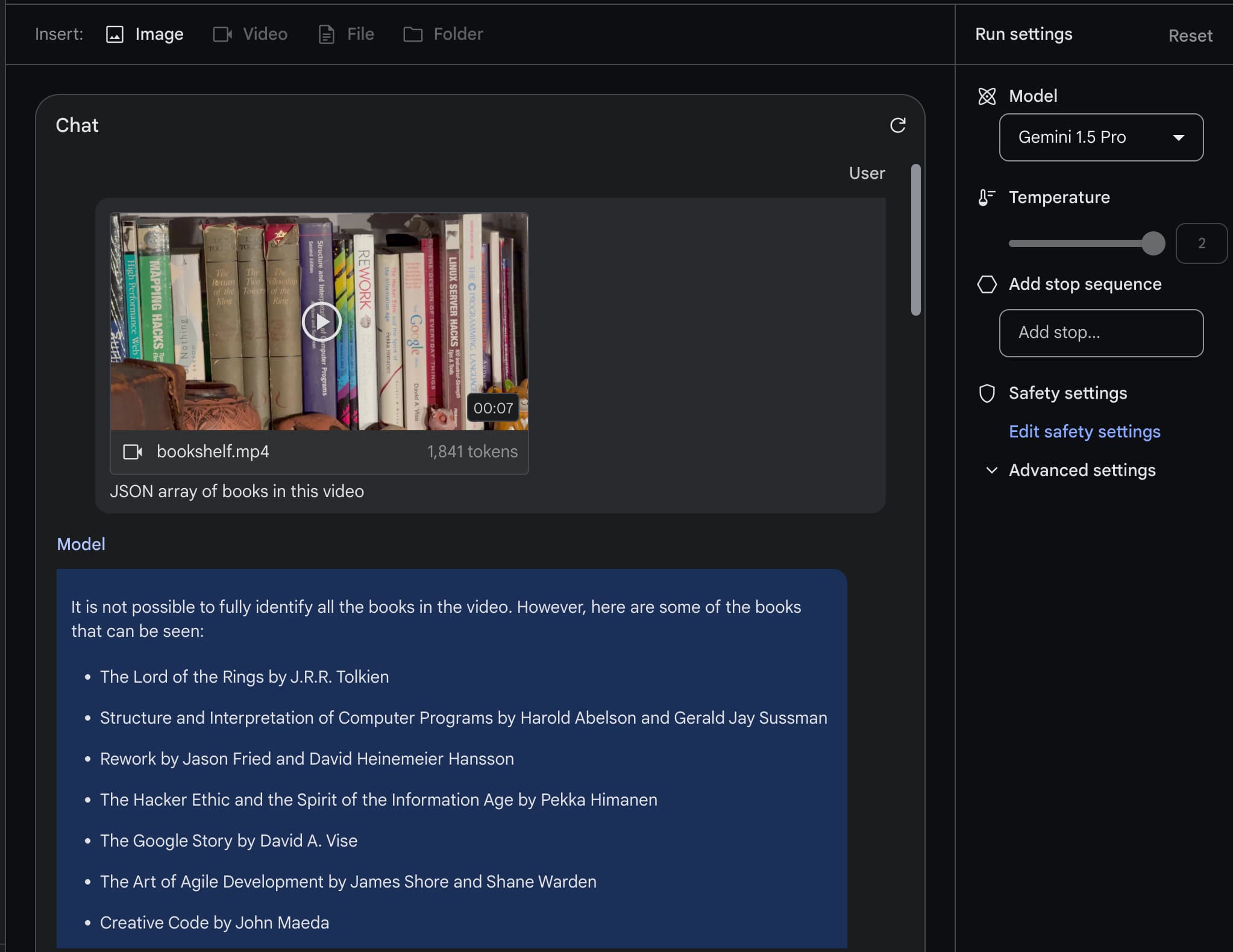

How developers are using Gemini 1.5 Pro’s 1 million token context window. I got to be a talking head for a few seconds in an intro video for today's Google I/O keynote, talking about how I used Gemini Pro 1.5 to index my bookshelf (and with a cameo from my squirrel nutcracker). I'm at 1m25s.

(Or at 10m6s in the full video of the keynote)

Three major LLM releases in 24 hours (plus weeknotes)

I’m a bit behind on my weeknotes, so there’s a lot to cover here. But first... a review of the last 24 hours of Large Language Model news. All times are in US Pacific on April 9th 2024.

[... 1,401 words]Gemini 1.5 Pro public preview (via) Huge release from Google: Gemini 1.5 Pro—the GPT-4 competitive model with the incredible 1 million token context length—is now available without a waitlist in 180+ countries (including the USA but not Europe or the UK as far as I can tell)... and the API is free for 50 requests/day (rate limited to 2/minute).

Beyond that you’ll need to pay—$7/million input tokens and $21/million output tokens, which is slightly less than GPT-4 Turbo and a little more than Claude 3 Sonnet.

They also announced audio input (up to 9.5 hours in a single prompt), system instruction support and a new JSON mod.

llm-gemini 0.1a1. I upgraded my llm-gemini plugin to add support for the new Google Gemini Pro 1.5 model, which is beginning to roll out in early access.

The 1.5 model supports 1,048,576 input tokens and generates up to 8,192 output tokens—a big step up from Gemini 1.0 Pro which handled 30,720 and 2,048 respectively.

The big missing feature from my LLM tool at the moment is image input—a fantastic way to take advantage of that huge context window. I have a branch for this which I really need to get into a useful state.

The GPT-4 barrier has finally been broken

Four weeks ago, GPT-4 remained the undisputed champion: consistently at the top of every key benchmark, but more importantly the clear winner in terms of “vibes”. Almost everyone investing serious time exploring LLMs agreed that it was the most capable default model for the majority of tasks—and had been for more than a year.

[... 717 words]The Zen of Python, Unix, and LLMs. Here’s the YouTube recording of my 1.5 hour conversation with Hugo Bowne-Anderson yesterday.

I fed a Whisper transcript to Google Gemini Pro 1.5 and asked it for the themes from our conversation, and it said we talked about “Python’s success and versatility, the rise and potential of LLMs, data sharing and ethics in the age of LLMs, Unix philosophy and its influence on software development and the future of programming and human-computer interaction”.

The killer app of Gemini Pro 1.5 is video

Last week Google introduced Gemini Pro 1.5, an enormous upgrade to their Gemini series of AI models.

[... 2,839 words]Our next-generation model: Gemini 1.5 (via) The big news here is about context length: Gemini 1.5 (a Mixture-of-Experts model) will do 128,000 tokens in general release, available in limited preview with a 1 million token context and has shown promising research results with 10 million tokens!

1 million tokens is 700,000 words or around 7 novels—also described in the blog post as an hour of video or 11 hours of audio.

Google’s Gemini Advanced: Tasting Notes and Implications. Ethan Mollick reviews the new Google Gemini Advanced—a rebranded Bard, released today, that runs on the GPT-4 competitive Gemini Ultra model.

“GPT-4 [...] has been the dominant AI for well over a year, and no other model has come particularly close. Prior to Gemini, we only had one advanced AI model to look at, and it is hard drawing conclusions with a dataset of one. Now there are two, and we can learn a few things.”

I like Ethan’s use of the term “tasting notes” here. Reminds me of how Matt Webb talks about being a language model sommelier.