21 items tagged “scraping”

2024

For the last few years, Meta has had a team of attorneys dedicated to policing unauthorized forms of scraping and data collection on Meta platforms. The decision not to further pursue these claims seems as close to waving the white flag as you can get against these kinds of companies. But why? [...]

In short, I think Meta cares more about access to large volumes of data and AI than it does about outsiders scraping their public data now. My hunch is that they know that any success in anti-scraping cases can be thrown back at them in their own attempts to build AI training databases and LLMs. And they care more about the latter than the former.

2023

scrapeghost (via) Scraping is a really interesting application for large language model tools like GPT3. James Turk’s scrapeghost is a very neatly designed entrant into this space—it’s a Python library and CLI tool that can be pointed at any URL and given a roughly defined schema (using a neat mini schema language) which will then use GPT3 to scrape the page and try to return the results in the supplied format.

I expect GPT-4 will have a LOT of applications in web scraping

The increased 32,000 token limit will be large enough to send it the full DOM of most pages, serialized to HTML - then ask questions to extract data

Or... take a screenshot and use the GPT4 image input mode to ask questions about the visually rendered page instead!

Might need to dust off all of those old semantic web dreams, because the world's information is rapidly becoming fully machine readable

— Me

datasette-scraper walkthrough on YouTube (via) datasette-scraper is Colin Dellow’s new plugin that turns Datasette into a powerful web scraping tool, with a web UI based on plugin-driven customizations to the Datasette interface. It’s really impressive, and this ten minute demo shows quite how much it is capable of: it can crawl sitemaps and fetch pages, caching them (using zstandard with optional custom dictionaries for extra compression) to speed up subsequent crawls... and you can add your own plugins to extract structured data from crawled pages and save it to a separate SQLite table!

2022

curl-impersonate (via) “A special build of curl that can impersonate the four major browsers: Chrome, Edge, Safari & Firefox. curl-impersonate is able to perform TLS and HTTP handshakes that are identical to that of a real browser.”

I hadn’t realized that it’s become increasingly common for sites to use fingerprinting of TLS and HTTP handshakes to block crawlers. curl-impersonate attempts to impersonate browsers much more accurately, using tricks like compiling with Firefox’s nss TLS library and Chrome’s BoringSSL.

Web Scraping via Javascript Runtime Heap Snapshots (via) This is an absolutely brilliant scraping trick. Adrian Cooney figured out a way to use Puppeteer and the Chrome DevTools protocol to take a heap snapshot of all of the JavaScript running on a web page, then recursively crawl through the heap looking for any JavaScript objects that have a specified selection of properties. This allows him to scrape data from arbitrarily complex client-side web applications. He built a JavaScript library and command line tool that implements the pattern.
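The matching step (walk everything, keep objects that have a chosen set of properties) can be sketched in a few lines. This is a minimal illustration on plain nested Python data, not Adrian's library and not a real V8 heap snapshot; the `find_objects` name and the sample data are made up for the example.

```python
from typing import Any, Iterator


def find_objects(node: Any, required_keys: set) -> Iterator[dict]:
    """Yield every dict nested anywhere inside node that has all required_keys."""
    if isinstance(node, dict):
        if required_keys.issubset(node.keys()):
            yield node
        # Keep descending: matching objects can contain further matches.
        for value in node.values():
            yield from find_objects(value, required_keys)
    elif isinstance(node, (list, tuple)):
        for item in node:
            yield from find_objects(item, required_keys)


# Hypothetical application state, standing in for objects found in a heap.
state = {
    "page": {
        "items": [{"id": 1, "title": "A", "x": 0}, {"id": 2}],
        "meta": {"id": 3, "title": "B"},
    }
}

matches = list(find_objects(state, {"id", "title"}))
```

A real heap is a graph with cycles, so an actual implementation would also need to track which nodes it has already visited; this sketch assumes tree-shaped data.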

Scraping web pages from the command line with shot-scraper

I’ve added a powerful new capability to my shot-scraper command line browser automation tool: you can now use it to load a web page in a headless browser, execute JavaScript to extract information and return that information back to the terminal as JSON.

[... 1,276 words]

shot-scraper: automated screenshots for documentation, built on Playwright

shot-scraper is a new tool that I’ve built to help automate the process of keeping screenshots up-to-date in my documentation. It also doubles as a scraping tool—hence the name—which I picked as a complement to my git scraping and help scraping techniques.

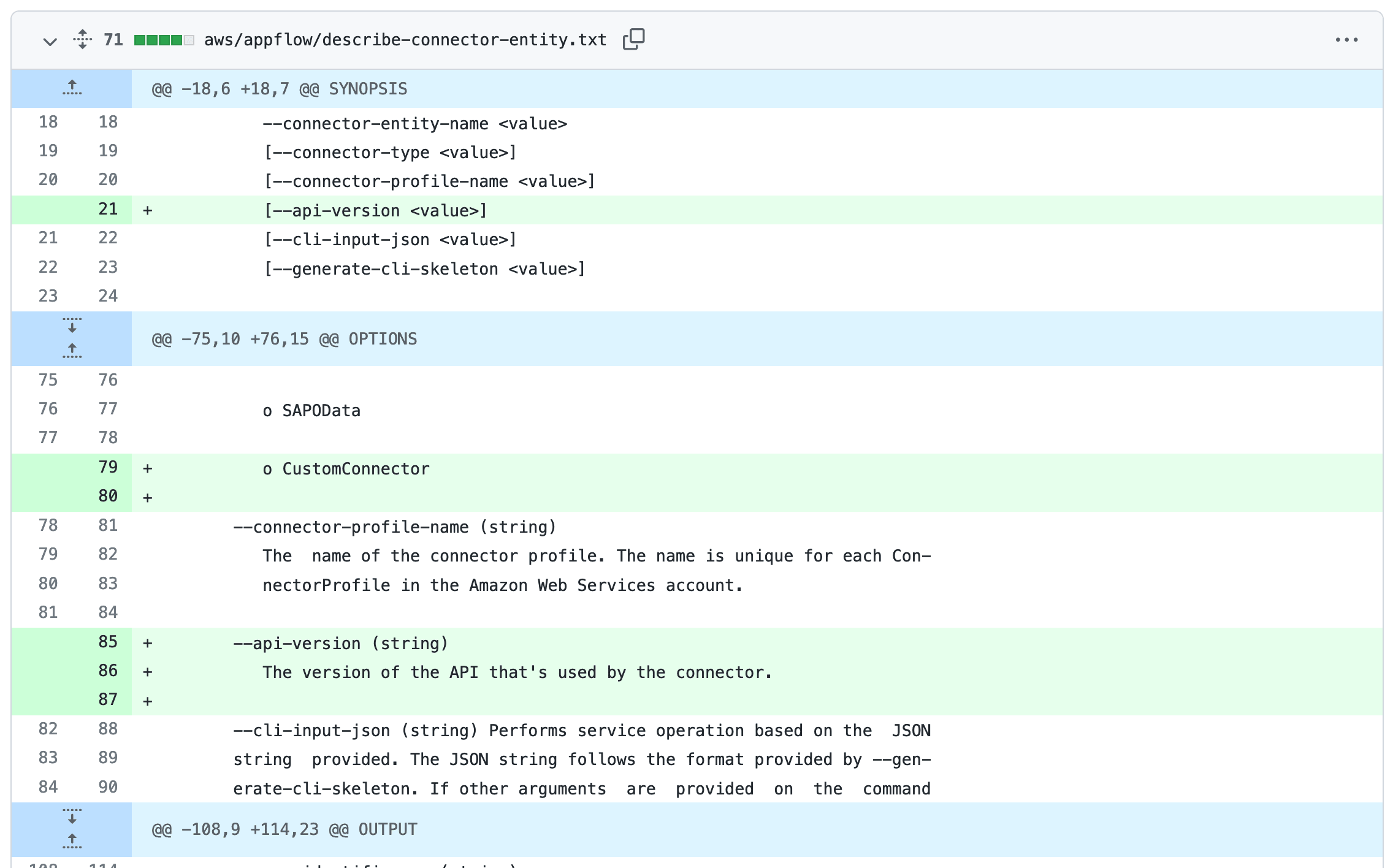

[... 1,802 words]

Help scraping: track changes to CLI tools by recording their --help using Git

I’ve been experimenting with a new variant of Git scraping this week which I’m calling Help scraping. The key idea is to track changes made to CLI tools over time by recording the output of their --help commands in a Git repository.
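The recording half of that idea might look something like this. It's a rough sketch, not the script from the post: `record_help` is a hypothetical helper, and it assumes the tool accepts a `--help` flag and that the output file lives inside a Git repository you commit separately.

```python
import subprocess
import sys
from pathlib import Path


def record_help(command: list, out_path: Path) -> str:
    """Run `command --help` and save whatever it prints to out_path."""
    result = subprocess.run(
        command + ["--help"],
        capture_output=True,
        text=True,
        check=False,  # some tools exit non-zero after printing help
    )
    # Some tools print help to stderr, so keep both streams.
    text = result.stdout + result.stderr
    out_path.write_text(text, encoding="utf-8")
    return text


# Example: record the help text of the Python interpreter running this script.
help_text = record_help([sys.executable], Path("python-help.txt"))
```

Committing the resulting file after each run means `git diff` and `git log` show exactly when an option was added, renamed or removed.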

2021

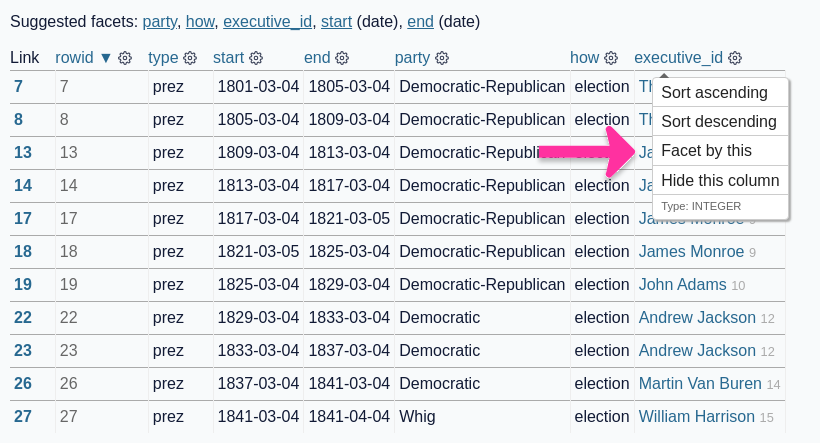

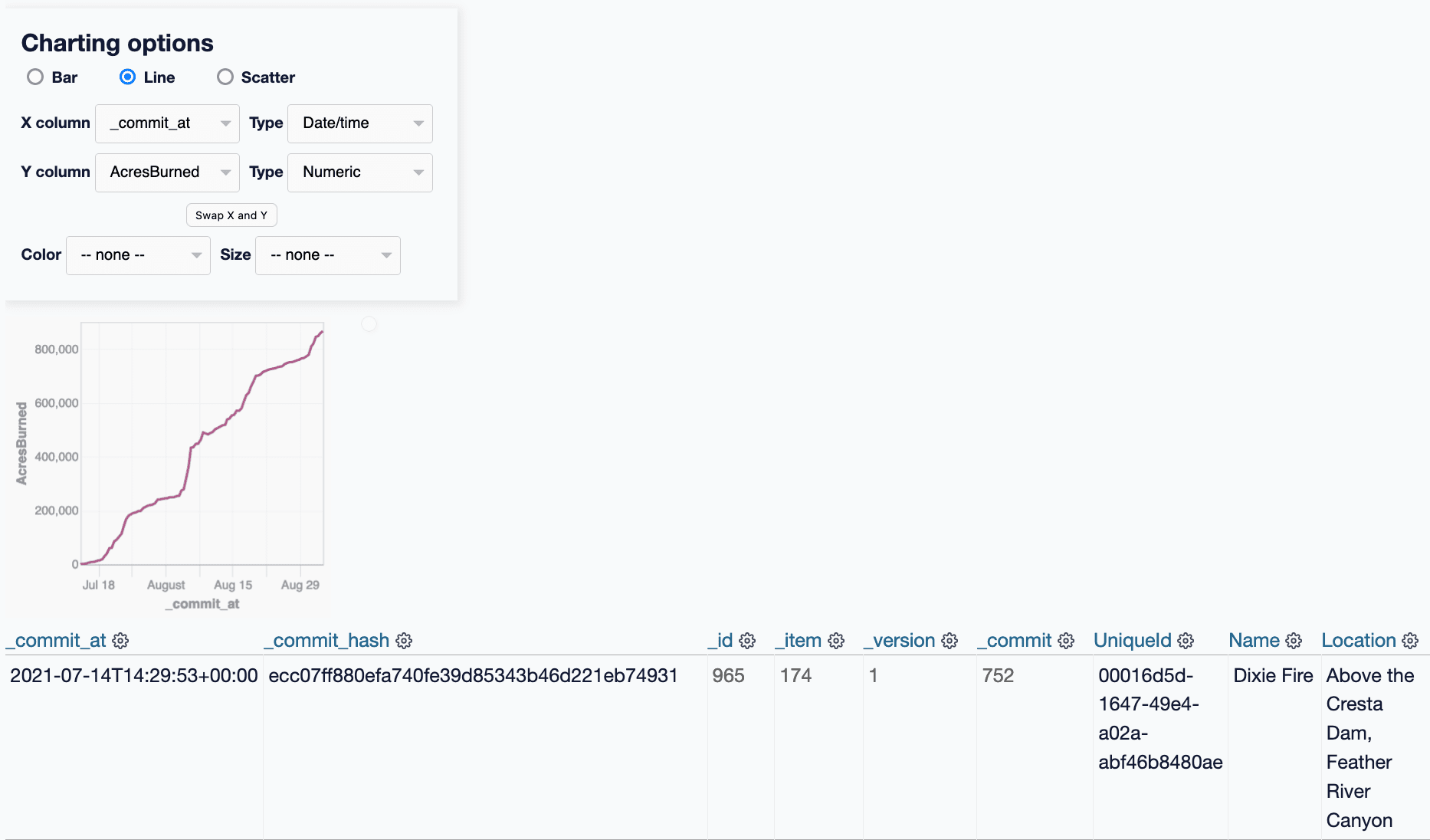

git-history: a tool for analyzing scraped data collected using Git and SQLite

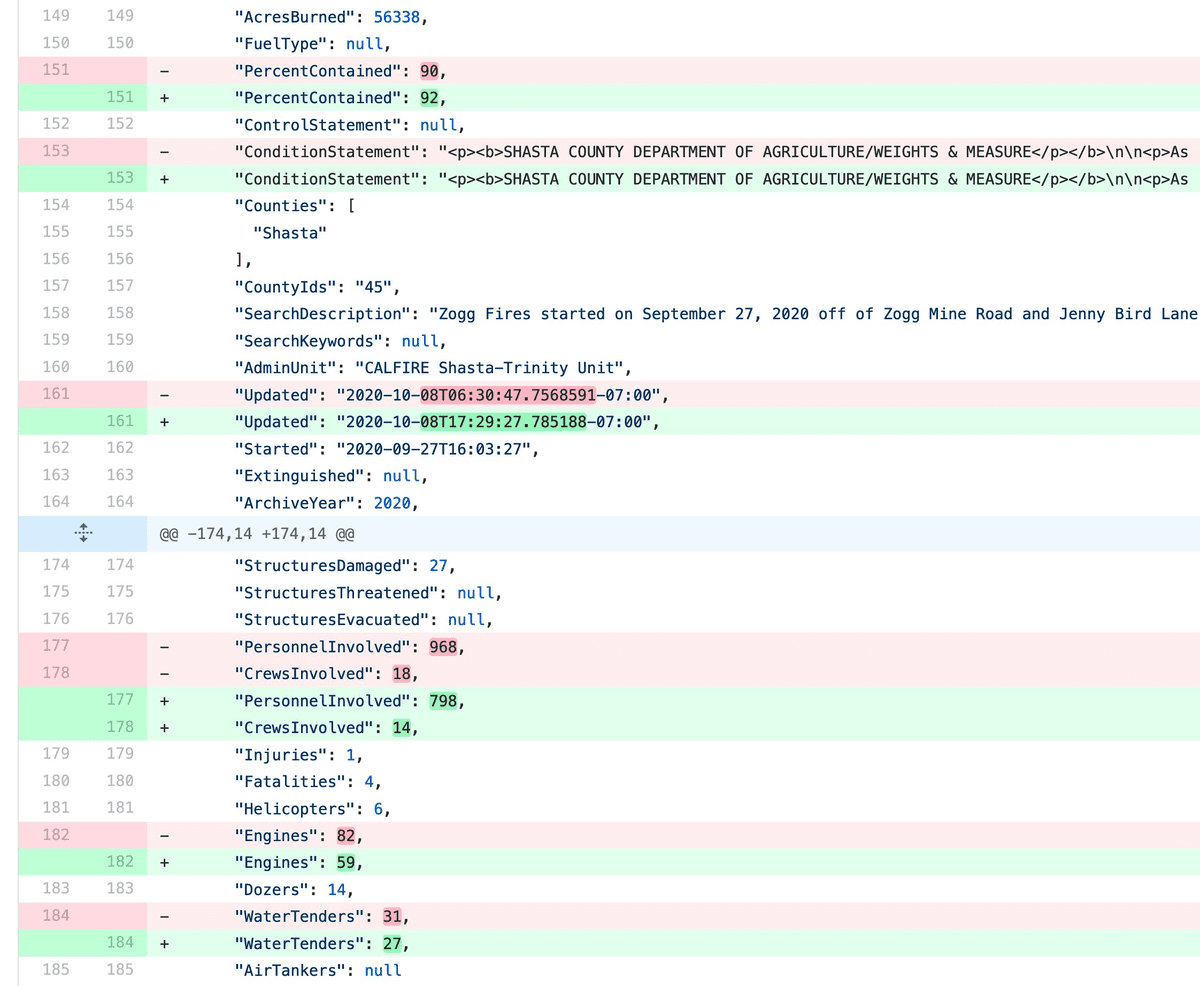

I described Git scraping last year: a technique for writing scrapers where you periodically snapshot a source of data to a Git repository in order to record changes to that source over time.
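A minimal sketch of that snapshot-then-commit loop, assuming the scraped data has already been fetched as text. The function names are hypothetical, and `commit_snapshot` assumes `git` is installed and `repo_dir` is an existing repository.

```python
import subprocess
from pathlib import Path


def write_snapshot(path: Path, content: str) -> bool:
    """Write content to path; return True only if the file actually changed."""
    if path.exists() and path.read_text(encoding="utf-8") == content:
        return False
    path.write_text(content, encoding="utf-8")
    return True


def commit_snapshot(repo_dir: Path, filename: str, message: str) -> None:
    """Stage and commit one file inside an existing Git repository."""
    subprocess.run(["git", "add", filename], cwd=repo_dir, check=True)
    subprocess.run(["git", "commit", "-m", message], cwd=repo_dir, check=True)
```

Run on a schedule, you would call `write_snapshot()` with the latest data and only call `commit_snapshot()` when it returns `True`, so the commit history records one entry per real change to the source.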

[... 2,002 words]

Git scraping, the five minute lightning talk

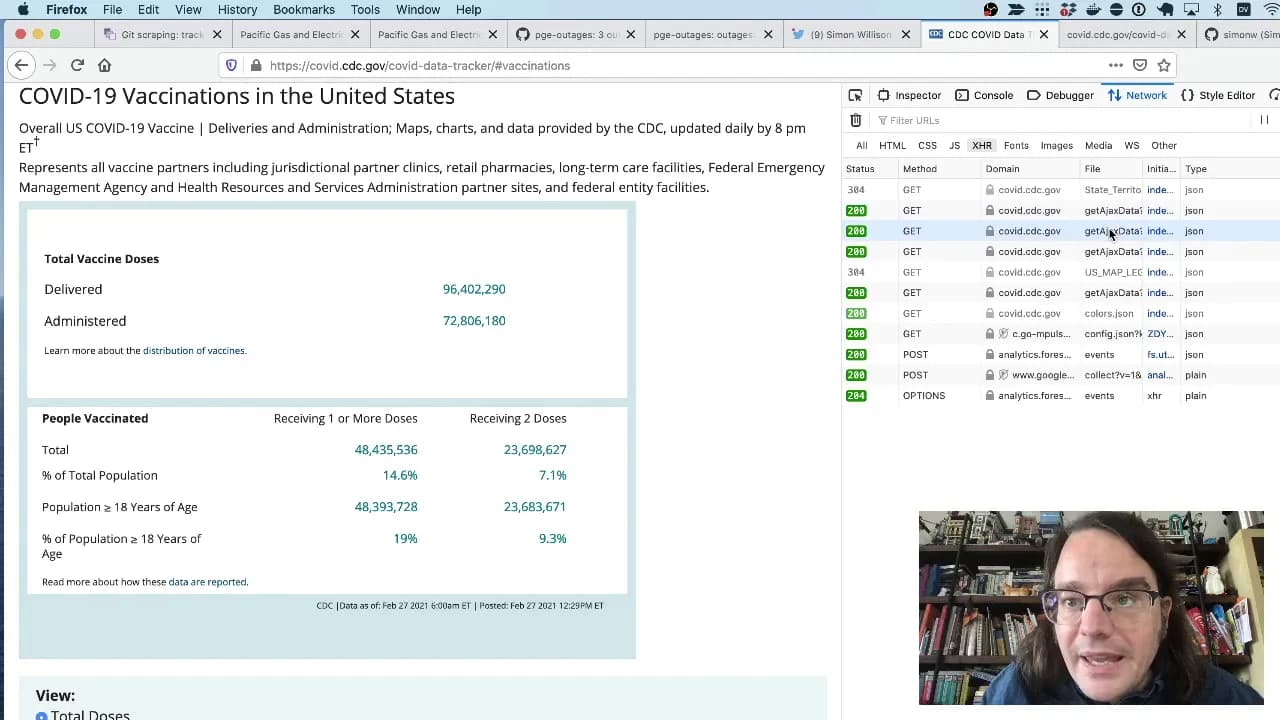

I prepared a lightning talk about Git scraping for the NICAR 2021 data journalism conference. In the talk I explain the idea of running scheduled scrapers in GitHub Actions, show some examples and then live code a new scraper for the CDC’s vaccination data using the GitHub web interface. Here’s the video.

[... 289 words]

2020

selenium-wire. Really useful scraping tool: enhances the Python Selenium bindings to run against a proxy which then allows Python scraping code to look at captured requests—great for if a site you are working with triggers Ajax requests and you want to extract data from the raw JSON that came back.

Weeknotes: evernote-to-sqlite, Datasette Weekly, scrapers, csv-diff, sqlite-utils

This week I built evernote-to-sqlite (see Building an Evernote to SQLite exporter), launched the Datasette Weekly newsletter, worked on some scrapers and pushed out some small improvements to several other projects.

Git scraping: track changes over time by scraping to a Git repository

Git scraping is the name I’ve given a scraping technique that I’ve been experimenting with for a few years now. It’s really effective, and more people should use it.

[... 963 words]

2019

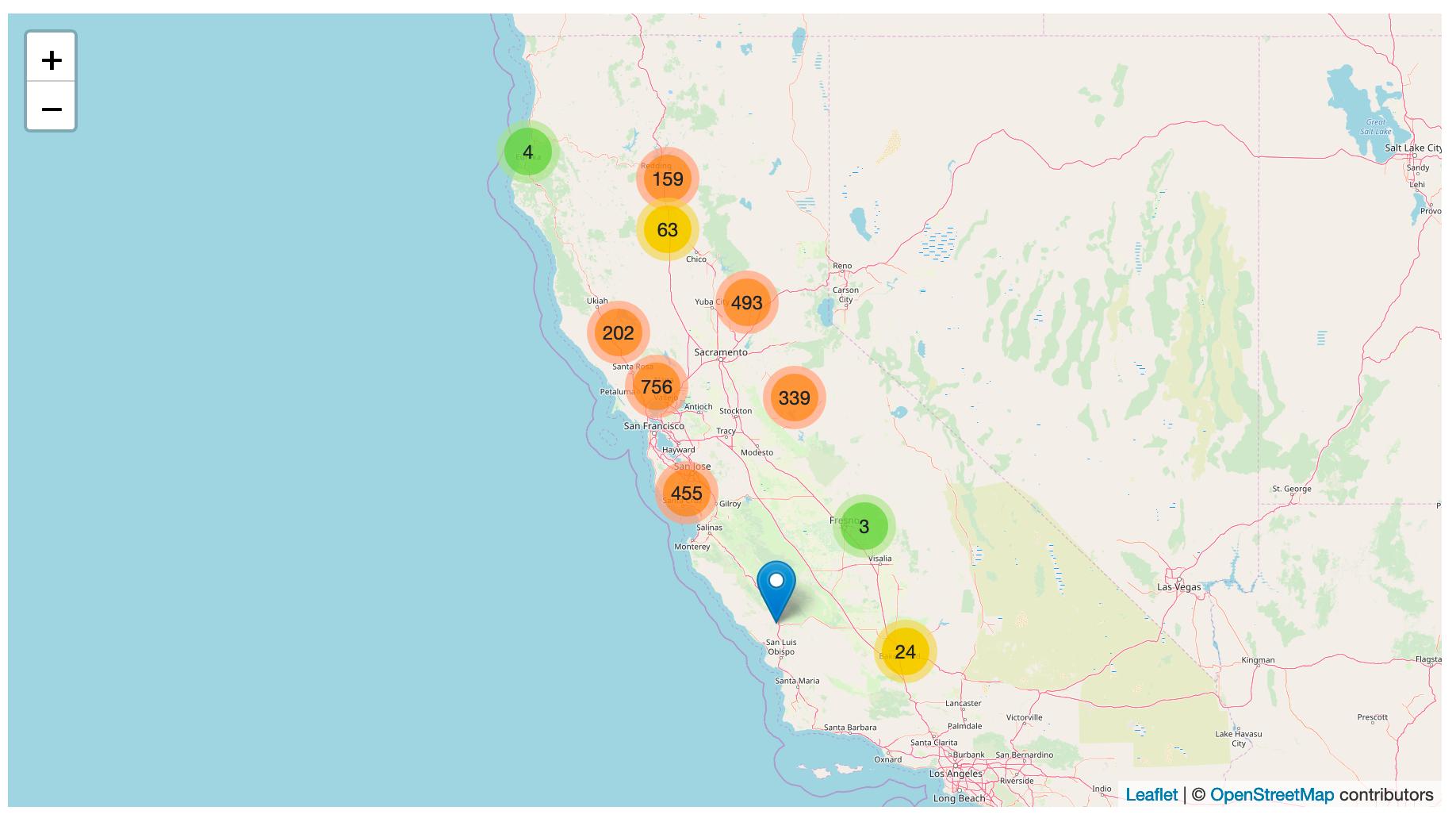

Tracking PG&E outages by scraping to a git repo

PG&E have cut off power to several million people in northern California, supposedly as a precaution against wildfires.

[... 868 words]

2018

scrapely. Neat twist on a screen scraping library: this one lets you “train it” by feeding it examples of URLs paired with a dictionary of the data you would like to have extracted from that URL, then uses an instance-based learning algorithm to run against new URLs. Slightly confusing name since it’s maintained by the scrapy team but is a totally independent project from the scrapy web crawling framework.

sqlitebiter. Similar to my csvs-to-sqlite tool, but sqlitebiter handles “CSV/Excel/HTML/JSON/LTSV/Markdown/SQLite/SSV/TSV/Google-Sheets”. Most interestingly, it works against HTML pages: run “sqlitebiter -v url 'https://en.wikipedia.org/wiki/Comparison_of_firewalls'” and it will scrape that Wikipedia page and create a SQLite table for each of the HTML tables it finds there.
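To make the "one SQLite table per HTML table" idea concrete, here's a standard-library sketch. It is not how sqlitebiter is implemented: `TableParser`, `html_tables_to_sqlite` and the `table_N` naming are all invented for this example, and it assumes flat (non-nested) tables whose first row holds unique column names.

```python
import sqlite3
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect each <table> as a list of rows, each row a list of cell strings."""

    def __init__(self):
        super().__init__()
        self.tables = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def html_tables_to_sqlite(html, conn):
    """Create table_1, table_2, ... from the HTML tables; return how many."""
    parser = TableParser()
    parser.feed(html)
    created = 0
    for rows in parser.tables:
        if not rows or not rows[0]:
            continue  # skip tables without a usable header row
        header, body = rows[0], rows[1:]
        created += 1
        name = f"table_{created}"
        columns = ", ".join(
            '"{}" TEXT'.format(col.replace('"', '""')) for col in header
        )
        conn.execute(f'CREATE TABLE "{name}" ({columns})')
        placeholders = ", ".join("?" for _ in header)
        # Only keep rows whose cell count matches the header.
        conn.executemany(
            f'INSERT INTO "{name}" VALUES ({placeholders})',
            [row for row in body if len(row) == len(header)],
        )
    conn.commit()
    return created
```

Everything is stored as `TEXT` here; a real tool would also need type detection and handling for merged cells.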

kennethreitz/requests-html: HTML Parsing for Humans™ (via) Neat and tiny wrapper around requests, lxml and html2text that provides a Kenneth Reitz grade API design for intuitively fetching and scraping web pages. The inclusion of html2text means you can use a CSS selector to select a specific HTML element and then convert that to the equivalent markdown in a one-liner.

2017

Using “import refs” to iteratively import data into Django

I’ve been writing a few scripts to backfill my blog with content I originally posted elsewhere. So far I’ve imported answers I posted on Quora (background), answers I posted on Ask MetaFilter and content I recovered from the Internet Archive.

[... 559 words]

2008

YQL—converting the web to JSON with mock SQL. YQL just got a whole lot more interesting to me—I had no idea they were exposing an HTML and RSS scraping tool over a JSONP API in addition to all of the Yahoo! web service methods.

Data Scraping Wikipedia with Google Spreadsheets. I hadn’t played with =importHTML in Google spreadsheets, which lets you suck in data from an HTML table or list somewhere on the web. This tutorial takes it further, bringing Wikipedia, Yahoo! Pipes and KML into the mix.