52 items tagged “s3”

2024

Leader Election With S3 Conditional Writes (via) Amazon S3 added support for conditional writes last week, so you can now write a key to S3 with a reliable failure if someone else has already created it.

This is a big deal. It reminds me of the time in 2020 when S3 added read-after-write consistency, an astonishing piece of distributed systems engineering.

Gunnar Morling demonstrates how this can be used to implement a distributed leader election system. The core flow looks like this:

- Scan an S3 bucket for files matching `lock_*`, like `lock_0000000001.json`. If the highest number contains `{"expired": false}` then that is the leader.
- If the highest lock has expired, attempt to become the leader yourself: increment that lock ID and then attempt to create `lock_0000000002.json` with a PUT request that includes the new `If-None-Match: *` header, setting the file content to `{"expired": false}`.
- If that succeeds, you are the leader! If not then someone else beat you to it.
- To resign from leadership, update the file with `{"expired": true}`.

There's a bit more to it than that - Gunnar also describes how to implement lock validity timeouts such that a crashed leader doesn't leave the system leaderless.
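To make the core flow concrete, here's a minimal Python simulation of it. It does not talk to S3: `ConditionalStore` is a hypothetical in-memory stand-in whose `put_if_absent()` mimics a PUT with `If-None-Match: *` by failing if the key already exists. The lock naming follows Gunnar's `lock_0000000001.json` pattern, and the lock validity timeouts he describes are deliberately left out.

```python
import json
import threading


class PreconditionFailed(Exception):
    """Raised when a conditional create finds the key already present (like S3's HTTP 412)."""


class ConditionalStore:
    """Hypothetical in-memory stand-in for a bucket that supports conditional writes."""

    def __init__(self):
        self._objects = {}
        self._lock = threading.Lock()

    def list_keys(self, prefix):
        with self._lock:
            return sorted(k for k in self._objects if k.startswith(prefix))

    def get(self, key):
        with self._lock:
            return self._objects[key]

    def put(self, key, body):
        # Unconditional overwrite, used when resigning.
        with self._lock:
            self._objects[key] = body

    def put_if_absent(self, key, body):
        # Mimics PUT with "If-None-Match: *": only succeeds if the key is new.
        with self._lock:
            if key in self._objects:
                raise PreconditionFailed(key)
            self._objects[key] = body


def lock_key(n):
    # Zero-padded so lexicographic order matches numeric order.
    return f"lock_{n:010d}.json"


def try_become_leader(store):
    """Return the lock key we now hold, or None if someone else is the leader."""
    keys = store.list_keys("lock_")
    if keys:
        latest = keys[-1]
        if not json.loads(store.get(latest))["expired"]:
            return None  # a live leader already exists
        next_id = int(latest[len("lock_"):-len(".json")]) + 1
    else:
        next_id = 1
    candidate = lock_key(next_id)
    try:
        store.put_if_absent(candidate, json.dumps({"expired": False}))
    except PreconditionFailed:
        return None  # another node created this lock first
    return candidate


def resign(store, key):
    store.put(key, json.dumps({"expired": True}))
```

The conditional create is what makes this safe: if two nodes both see an expired `lock_0000000001.json` and race to create `lock_0000000002.json`, only one `put_if_absent()` can succeed.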

After giving it a lot of thought, we made the decision to discontinue new access to a small number of services, including AWS CodeCommit.

While we are no longer onboarding new customers to these services, there are no plans to change the features or experience you get today, including keeping them secure and reliable. [...]

The services I'm referring to are: S3 Select, CloudSearch, Cloud9, SimpleDB, Forecast, Data Pipeline, and CodeCommit.

How an empty S3 bucket can make your AWS bill explode (via) Maciej Pocwierz accidentally created an S3 bucket with a name that was already used as a placeholder value in a widely used piece of software. They saw 100 million PUT requests to their new bucket in a single day, racking up a big bill since AWS charges $5/million PUTs.

It turns out AWS charge that same amount for PUTs that result in a 403 authentication error, a policy that extends even to "requester pays" buckets!

So, if you know someone's S3 bucket name you can DDoS their AWS bill just by flooding them with meaningless unauthenticated PUT requests.
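The headline arithmetic, using only the figures above ($5 per million PUTs, 100 million requests in a single day). This is a back-of-the-envelope sketch that ignores storage, data transfer and any regional pricing differences, so a real bill could differ.

```python
def put_request_cost(requests, dollars_per_million=5.0):
    """Dollar cost of `requests` PUT requests at a flat per-million price."""
    return requests / 1_000_000 * dollars_per_million


# 100 million PUTs in one day at the $5/million rate quoted above.
one_day = put_request_cost(100_000_000)
print(f"${one_day:,.2f}")  # prints $500.00
```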

AWS support refunded Maciej's bill as an exception here, but I'd like to see them reconsider this broken policy entirely.

Update from Jeff Barr:

We agree that customers should not have to pay for unauthorized requests that they did not initiate. We’ll have more to share on exactly how we’ll help prevent these charges shortly.

s3-credentials 0.16.

I spent entirely too long this evening trying to figure out why files in my new supposedly public S3 bucket were unavailable to view. It turns out these days you need to set a PublicAccessBlockConfiguration of {"BlockPublicAcls": false, "IgnorePublicAcls": false, "BlockPublicPolicy": false, "RestrictPublicBuckets": false}.

The s3-credentials --create-bucket --public option now does that for you. I also added an s3-credentials debug-bucket name-of-bucket command to help figure out why a bucket isn't working as expected.
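For anyone doing this by hand, the same configuration can be applied with boto3's `put_public_access_block()` method. This is a sketch, not how s3-credentials itself implements it: `disable_public_access_block` is a hypothetical helper name, and it takes the S3 client as a parameter so it can be exercised without AWS credentials.

```python
# The four settings described above, all set to False for a public bucket.
PUBLIC_ACCESS_BLOCK_OFF = {
    "BlockPublicAcls": False,
    "IgnorePublicAcls": False,
    "BlockPublicPolicy": False,
    "RestrictPublicBuckets": False,
}


def disable_public_access_block(s3_client, bucket):
    """Hypothetical helper: turn off all four public access block settings on `bucket`."""
    return s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK_OFF,
    )
```

Usage would look something like `disable_public_access_block(boto3.client("s3"), "name-of-bucket")`. You still need a bucket policy that actually grants public read access; this only stops the account-level safeguards from overriding it.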

S3 is files, but not a filesystem (via) Cal Paterson helps some concepts click into place for me: S3 imitates a file system but has a number of critical missing features, the most important of which is the lack of partial updates. Any time you want to modify even a few bytes in a file you have to upload and overwrite the entire thing. Almost every database system is dependent on partial updates to function, which is why there are so few databases that can use S3 directly as a backend storage mechanism.
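To illustrate what the missing partial update means in practice, here's a sketch of "change a few bytes" against S3 using boto3's `get_object()` and `put_object()`: download the whole object, splice the change in memory, then upload the whole thing again. `patch_object` is a hypothetical helper; note that nothing here stops a concurrent writer from overwriting the object between the read and the write.

```python
def patch_object(s3_client, bucket, key, offset, new_bytes):
    """Overwrite len(new_bytes) bytes at `offset` by rewriting the entire object."""
    # 1. There is no partial write, so fetch every byte of the object...
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    if offset + len(new_bytes) > len(body):
        raise ValueError("patch extends past the end of the object")
    # 2. ...splice the change in memory...
    patched = body[:offset] + new_bytes + body[offset + len(new_bytes):]
    # 3. ...and upload all of it again, replacing the old object.
    s3_client.put_object(Bucket=bucket, Key=key, Body=patched)
    return len(patched)
```

A database page write of a few kilobytes inside a multi-gigabyte file would turn into a multi-gigabyte download and upload, which is why this pattern doesn't work as a storage backend.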

Slashing Data Transfer Costs in AWS by 99% (via) Brilliant trick by Daniel Kleinstein. If you have data in two availability zones in the same AWS region, transferring a TB between them will cost you $10 in ingress and $10 in egress at the inter-zone rates charged by AWS.

But... transferring data to an S3 bucket in that same region is free (aside from S3 storage costs). And buckets are available with free transfer to all availability zones in their region, which means that TB of data can be transferred between availability zones for mere cents of S3 storage costs provided you delete the data as soon as it’s transferred.
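A rough comparison of the two routes for 1 TB. The $10-each-way figure comes from the post; the S3 Standard storage price of $0.023 per GB-month and the 730-hour month are my own assumptions for illustration, as is hourly proration. Request charges are ignored.

```python
GB_PER_TB = 1000  # decimal units: $10 per TB each way works out to $0.01/GB


def direct_inter_az_cost(tb, per_gb_each_way=0.01):
    """Cross-AZ transfer is billed twice: egress from one zone, ingress to the other."""
    return tb * GB_PER_TB * per_gb_each_way * 2


def via_s3_cost(tb, hours_stored, per_gb_month=0.023, hours_per_month=730):
    """Upload to and download from a same-region bucket is free, so the only charge
    modelled here is storage, prorated by the hour (assumed price)."""
    return tb * GB_PER_TB * per_gb_month * hours_stored / hours_per_month


direct = direct_inter_az_cost(1)
via_s3 = via_s3_cost(1, hours_stored=1)
print(f"direct: ${direct:.2f}, via S3: ${via_s3:.4f}, saving: {1 - via_s3 / direct:.1%}")
```

Under those assumptions, parking the terabyte in S3 for an hour costs around three cents against $20 for the direct transfer, which is where the "99%" comes from.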

2023

How ima.ge.cx works (via) ima.ge.cx is Aidan Steele’s web tool for browsing the contents of Docker images hosted on Docker Hub. The architecture is really interesting: it’s a set of AWS Lambda functions, written in Go, that fetch metadata about the images using Step Functions and then cache it in DynamoDB and S3. It uses S3 Select to serve directory listings from newline-delimited JSON in S3 without retrieving the whole file.
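Here's a sketch of the S3 Select part using boto3's `select_object_content()`. This is Python rather than Aidan's Go code, and the object layout is hypothetical: one JSON record per file, each with a `parent` field, stored as newline-delimited JSON. (S3 Select has since been closed to new customers, as noted in the 2024 entry above.)

```python
import json


def list_directory(s3_client, bucket, key, parent):
    """Fetch only the records whose (hypothetical) "parent" field equals `parent`.

    Assumes `key` holds newline-delimited JSON, one record per file, and that
    `parent` is a trusted value containing no single quotes.
    """
    response = s3_client.select_object_content(
        Bucket=bucket,
        Key=key,
        ExpressionType="SQL",
        Expression=f"SELECT * FROM s3object s WHERE s.parent = '{parent}'",
        # "LINES" tells S3 Select the input is one JSON document per line.
        InputSerialization={"JSON": {"Type": "LINES"}},
        OutputSerialization={"JSON": {"RecordDelimiter": "\n"}},
    )
    # The payload is an event stream; matching rows arrive in "Records" events
    # and may be split across chunk boundaries, so join before parsing.
    chunks = [event["Records"]["Payload"] for event in response["Payload"] if "Records" in event]
    text = b"".join(chunks).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]
```

The filtering happens inside S3, so only the matching lines cross the network instead of the whole index file.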

2022

Litestream backups for Datasette Cloud (and weeknotes)

My main focus this week has been adding robust backups to the forthcoming Datasette Cloud.

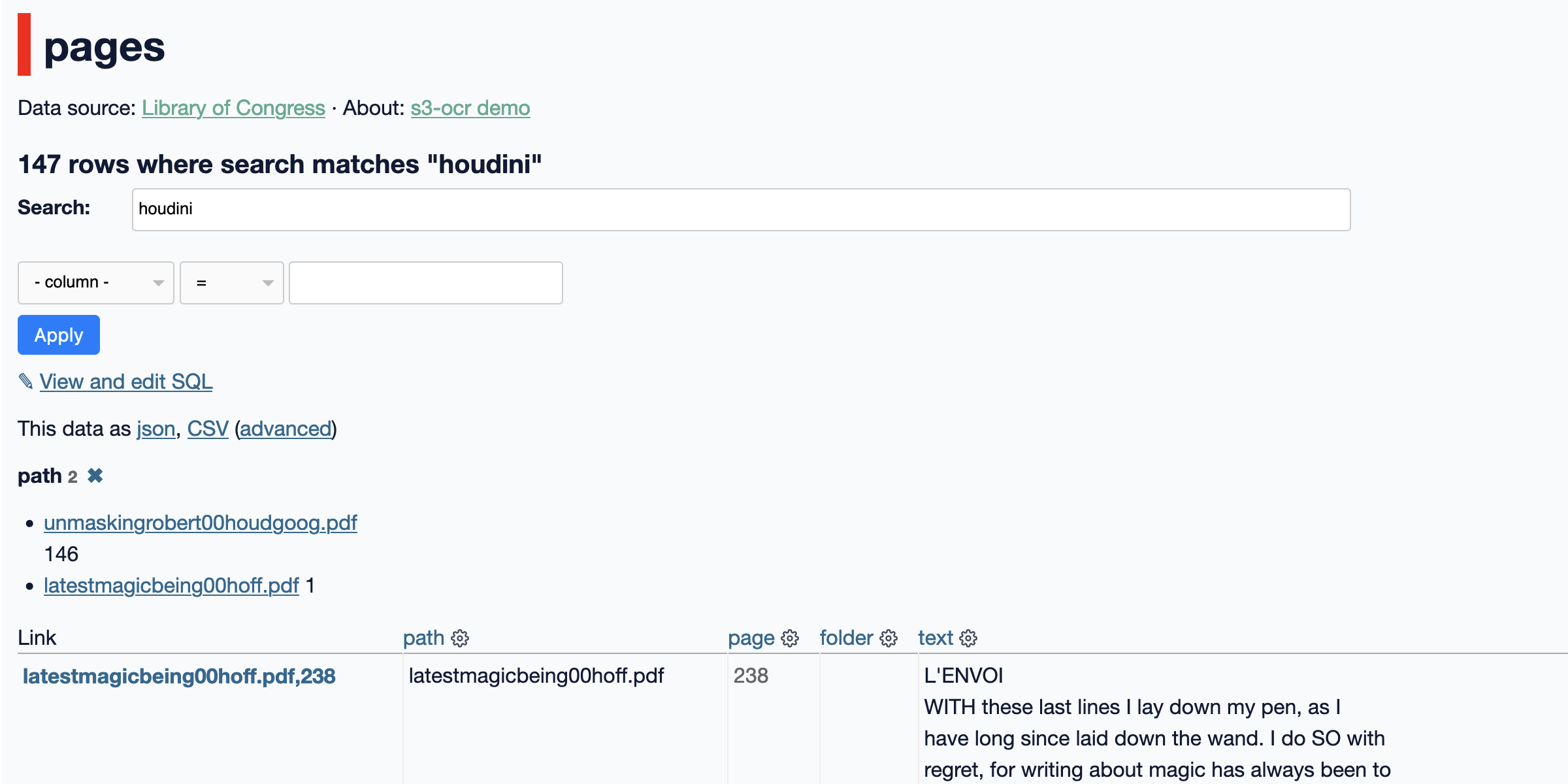

[... 1,604 words]

s3-ocr: Extract text from PDF files stored in an S3 bucket

I’ve released s3-ocr, a new tool that runs Amazon’s Textract OCR text extraction against PDF files in an S3 bucket, then writes the resulting text out to a SQLite database with full-text search configured so you can run searches against the extracted data.

[... 1,493 words]

2021

s3-credentials 0.8. The latest release of my s3-credentials CLI tool for creating S3 buckets with credentials to access them (with read-write, read-only or write-only policies) adds a new --public option for creating buckets that allow public access, such that anyone who knows a filename can download a file. The s3-credentials put-object command also now sets the appropriate Content-Type header on the uploaded object.
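One plausible way to set that header, sketched with Python's standard `mimetypes` module and boto3's `put_object()`. This isn't necessarily how s3-credentials does it; `content_type_for` and `put_file` are hypothetical helper names.

```python
import mimetypes


def content_type_for(key):
    """Guess a Content-Type from the key's extension, falling back to a generic binary type."""
    content_type, _encoding = mimetypes.guess_type(key)
    return content_type or "application/octet-stream"


def put_file(s3_client, bucket, key, data):
    """Upload `data` with an explicit ContentType so browsers know how to display it."""
    return s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=data,
        ContentType=content_type_for(key),
    )
```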

Weeknotes: git-history, created for a Git scraping workshop

My main project this week was a 90 minute workshop I delivered about Git scraping at Coda.Br 2021, a Brazilian data journalism conference, on Friday. This inspired the creation of a brand new tool, git-history, plus smaller improvements to a range of other projects.

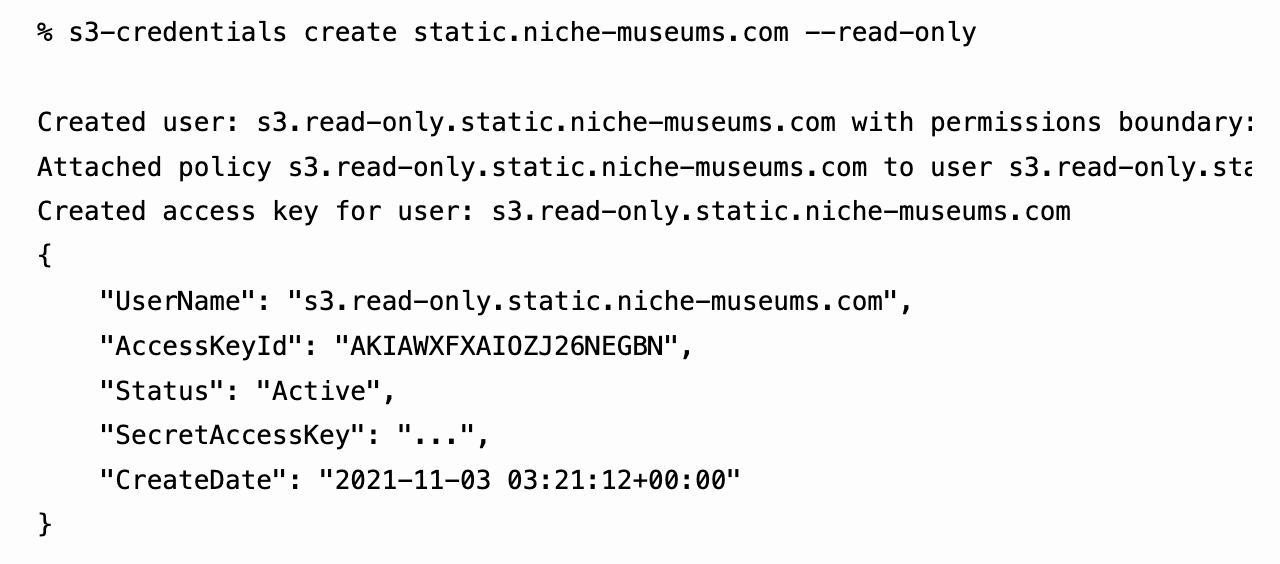

[... 1,239 words]

s3-credentials: a tool for creating credentials for S3 buckets

I’ve built a command-line tool called s3-credentials to solve a problem that’s been frustrating me for ages: how to quickly and easily create AWS credentials (an access key and secret key) that have permission to read or write from just a single S3 bucket.
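The key ingredient is an IAM policy scoped to a single bucket. Here's an illustrative Python sketch of building one; it is not the exact policy s3-credentials generates, and the action lists are a minimal assumption (real tools often grant more, such as multipart upload actions).

```python
import json


def bucket_policy(bucket, mode="read-write"):
    """Build an IAM policy document limited to a single bucket (illustrative only)."""
    read = ["s3:GetObject"]
    write = ["s3:PutObject", "s3:DeleteObject"]
    modes = {"read-only": read, "write-only": write, "read-write": read + write}
    if mode not in modes:
        raise ValueError(f"unknown mode: {mode}")
    statements = [
        {
            "Effect": "Allow",
            "Action": modes[mode],
            # Object-level actions apply to keys inside the bucket.
            "Resource": [f"arn:aws:s3:::{bucket}/*"],
        }
    ]
    if mode != "write-only":
        # Listing keys is a bucket-level action, so it targets the bucket ARN itself.
        statements.append(
            {"Effect": "Allow", "Action": ["s3:ListBucket"], "Resource": [f"arn:aws:s3:::{bucket}"]}
        )
    return json.dumps({"Version": "2012-10-17", "Statement": statements}, indent=2)
```

Attaching a policy like this to a dedicated IAM user gives you an access key and secret key that can't touch any other bucket in the account.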

[... 1,618 words]

Abusing Terraform to Upload Static Websites to S3 (via) I found this really interesting. Terraform is infrastructure as code software which mostly handles creating and updating infrastructure resources, so it’s a poor fit for uploading files to S3 and setting the correct Content-Type headers for them. But... in figuring out how to do that, this article taught me a ton about how Terraform works. I wonder if that’s a useful general pattern? Get a tool to do something that it’s poorly designed to handle and see how much you learn about that tool along the way.

Folks think s3 is static assets hosting but really it's a consistent and highly available key value store with first class blob support

logpaste (via) Useful example of how to use the Litestream SQLite replication tool in a Dockerized application: S3 credentials are passed to the container on startup, it then attempts to restore the SQLite database from S3 and starts a Litestream process in the same container to periodically synchronize changes back up to the S3 bucket.

2019

athena-sqlite (via) Amazon Athena is the AWS tool for querying data stored in S3 (as CSV, JSON or Apache Parquet files) using SQL. It’s an interesting way of building a very cheap data warehouse on top of S3 without having to run any additional services. Athena recently added a query federation SDK which lets you define additional custom data sources using Lambda functions. Damon Cortesi used this to write a custom connector for SQLite, which lets you run queries against data stored in SQLite files that you have uploaded to S3. You can then run joins between that data and other Athena sources.

Client-side instrumentation for under $1 per month. No servers necessary. (via) Rolling your own analytics used to be too complex and expensive to be worth the effort. Thanks to cloud technologies like CloudFront, Athena, S3 and Lambda you can now inexpensively implement client-side analytics (via requests to a tracking pixel) that stores detailed logs on S3, then use Amazon Athena to run queries against those logs ($5/TB scanned) to get detailed reporting. This post also introduced me to Snowplow, an open source JavaScript analytics script (released by a commercial analytics platform) which looks very neat; it’s based on piwik.js, the tracker from the open-source Piwik analytics tool.

2018

Django Bakery (via) “A set of helpers for baking your Django site out as flat files”. Released by the LA Times Data Desk, who use it for a large number of projects from election results to data journalism interactives. Statically publishing these projects to S3 lets them handle huge traffic spikes at a very low cost.

s3monkey: A Python library that allows you to interact with Amazon S3 Buckets as if they are your local filesystem. (via) A particularly devious hack by Kenneth Reitz—provides a context manager within which various Python filesystem APIs such as open() and os.listdir() are monkeypatched to operate against an S3 bucket instead. Kenneth built it to make it easier to work with files from apps running on Heroku. Under the hood it uses pyfakefs, a filesystem mocking library originally released by Google.
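The core trick is a context manager that swaps out a built-in and always restores it. Here's a minimal sketch of that idea. It is not s3monkey's code: instead of pyfakefs and a real bucket it patches `builtins.open` directly and serves files from an in-memory dict, which stands in for S3 here, and it only supports reading.

```python
import builtins
import contextlib
import io


@contextlib.contextmanager
def patched_open(fake_files):
    """Temporarily route open() for paths in `fake_files` (path -> str) to in-memory data.

    `fake_files` stands in for an S3 bucket; any other path falls through to the real open().
    """
    real_open = builtins.open

    def fake_open(path, mode="r", *args, **kwargs):
        if path in fake_files:
            if any(flag in mode for flag in "wax+"):
                raise NotImplementedError("this sketch only supports reading")
            data = fake_files[path]
            return io.BytesIO(data.encode("utf-8")) if "b" in mode else io.StringIO(data)
        return real_open(path, mode, *args, **kwargs)

    builtins.open = fake_open
    try:
        yield
    finally:
        builtins.open = real_open  # restore even if the body raises
```

Code inside `with patched_open({...}):` can call plain `open()` without knowing where the bytes come from, which is the property that makes this kind of hack handy on platforms like Heroku with no persistent local disk.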

2013

For a Django application, deployed on Heroku, what are my options for storing user-uploaded media files?

S3 is really a no-brainer for this, it’s extremely inexpensive, very easy to integrate with and unbelievably reliable. It’s so cheap that it will be practically free for testing purposes (expect to spend pennies a month on it).

[... 88 words]

2011

The excess capacity story is a myth. It was never a matter of selling excess capacity, actually within 2 months after launch AWS would have already burned through the excess Amazon.com capacity. Amazon Web Services was always considered a business by itself, with the expectation that it could even grow as big as the Amazon.com retail operation.

2010

Amazon S3: Versioning Proposal. The us-west-1 S3 bucket region now optionally supports versioning—once enabled on a bucket, all previous versions of keys will be preserved.

2009

OpenStreetMap Rendering Database. Amazon have added an OpenStreetMap snapshot as a public data set, thanks to some smart prompting by Jeremy Dunck.

App Engine outage postmortem. Interesting peek behind the scenes. The primary cause of the error was a bug in a GFS (Google File System) Master server caused by a MapReduce process sending a malformed filehandle, reminiscent of the error which took down S3 last year.

AWS Import/Export: Ship Us That Disk! Andrew Tanenbaum said “Never underestimate the bandwidth of a station wagon full of tapes hurtling down the highway”, and now you can ship your storage device direct to Amazon and have them load the data into an S3 bucket for you.

aws—simple access to Amazon EC2 and S3. The best command line client I’ve found for EC2 and S3. “aws put --progress my-bucket-name/large-file.tar.gz large-file.tar.gz” is particularly useful for uploading large files to S3. Written in Perl (with no dependencies), shelling out to curl to do the heavy lifting.

Amazon Elastic MapReduce (via) Hadoop as a service. Basically a web-based GUI around Hadoop; you could roll this yourself on EC2 but for a small markup on regular EC2 prices you get to avoid the extra work setting everything up. Data processing scripts can be written in Java, Ruby, Perl, Python, PHP, R, or C++ and are loaded into S3 before firing off the job.

Oscars 2009: the interactive results | guardian.co.uk. My latest project for the Guardian, put together on very short notice. Updates live as the results are announced, and allows Twitter users to vote on their favourite for each category by sending a specially formatted message to @guardianfilm—jQuery and Ajax polling against S3 under the hood.

2008

How Tarsnap uses Amazon Web Services (via) Useful case study, including some thoughts on SimpleDB.

Amazon SimpleDB a complete flop? Terry asks if anyone is actually using SimpleDB (related Google searches indicate not, and I’ve personally not heard of anyone using it despite plenty of usage of S3 and EC2). One factor might be that lock-in to EC2 and S3 is pretty small, but if you rely on SimpleDB you’ll need to rewrite your entire application to escape.