November 2024

94 posts: 9 entries, 65 links, 19 quotes, 1 note

Nov. 12, 2024

Qwen2.5-Coder-32B is an LLM that can code well that runs on my Mac

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

[... 697 words]

Ars Live: Our first encounter with manipulative AI (via) I'm participating in a live conversation with Benj Edwards on 19th November reminiscing over that incredible time back in February last year when Bing went feral.

Nov. 13, 2024

django-plugin-django-debug-toolbar (via) Tom Viner built a plugin for my DJP Django plugin system that configures the excellent django-debug-toolbar debugging tool.

You can see everything it sets up for you in this Python code: it configures installed apps, URL patterns and middleware and sets the INTERNAL_IPS and DEBUG settings.

Here are Tom's running notes as he created the plugin.
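For context, here is a rough sketch of the manual django-debug-toolbar setup that a plugin like this takes off your hands. It follows django-debug-toolbar's documented installation steps and is not the plugin's actual code: the enable_debug_toolbar helper and the plain-dict stand-in for Django settings are hypothetical, used only to keep the example self-contained.

```python
def enable_debug_toolbar(settings: dict) -> dict:
    """Return a copy of a settings dict with django-debug-toolbar switched on.

    Hypothetical helper for illustration; a real project would edit
    settings.py (or let the plugin do it) instead.
    """
    updated = dict(settings)
    # The toolbar is only shown when DEBUG is on and the client IP is internal.
    updated["DEBUG"] = True
    updated["INTERNAL_IPS"] = ["127.0.0.1"]
    # Register the app so its templates and static files are found.
    updated["INSTALLED_APPS"] = list(settings.get("INSTALLED_APPS", [])) + [
        "debug_toolbar"
    ]
    # The toolbar middleware should sit as early as possible in the list.
    updated["MIDDLEWARE"] = [
        "debug_toolbar.middleware.DebugToolbarMiddleware"
    ] + list(settings.get("MIDDLEWARE", []))
    return updated


# The URL patterns also need an entry along the lines of:
#   path("__debug__/", include("debug_toolbar.urls"))
example = enable_debug_toolbar(
    {"INSTALLED_APPS": ["django.contrib.admin"], "MIDDLEWARE": []}
)
print(example["INSTALLED_APPS"])
```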

Ollama: Llama 3.2 Vision. Ollama released version 0.4 last week with support for Meta's first Llama vision model, Llama 3.2.

If you have Ollama installed you can fetch the 11B model (7.9 GB) like this:

ollama pull llama3.2-vision

Or the larger 90B model (55GB download, likely needs ~88GB of RAM) like this:

ollama pull llama3.2-vision:90b

I was delighted to learn that Sukhbinder Singh had already contributed support for LLM attachments to Sergey Alexandrov's llm-ollama plugin, which means the following works once you've pulled the models:

llm install --upgrade llm-ollama

llm -m llama3.2-vision:latest 'describe' \

-a https://static.simonwillison.net/static/2024/pelican.jpg

This image features a brown pelican standing on rocks, facing the camera and positioned to the left of center. The bird's long beak is a light brown color with a darker tip, while its white neck is adorned with gray feathers that continue down to its body. Its legs are also gray.

In the background, out-of-focus boats and water are visible, providing context for the pelican's environment.

That's not a bad description of this image, especially for a 7.9GB model that runs happily on my MacBook Pro.

This tutorial exists because of a particular quirk of mine: I love to write tutorials about things as I learn them. This is the backstory of TRPL, of which an ancient draft was "Rust for Rubyists." You only get to look at a problem as a beginner once, and so I think writing this stuff down is interesting. It also helps me clarify what I'm learning to myself.

— Steve Klabnik, Steve's Jujutsu Tutorial

Nov. 14, 2024

Releasing the largest multilingual open pretraining dataset (via) Common Corpus is a new "open and permissible licensed text dataset, comprising over 2 trillion tokens (2,003,039,184,047 tokens)" released by French AI Lab PleIAs.

This appears to be the largest available corpus of openly licensed training data:

- 926,541,096,243 tokens of public domain books, newspapers, and Wikisource content

- 387,965,738,992 tokens of government financial and legal documents

- 334,658,896,533 tokens of open source code from GitHub

- 221,798,136,564 tokens of academic content from open science repositories

- 132,075,315,715 tokens from Wikipedia, YouTube Commons, StackExchange and other permissively licensed web sources

It's majority English but has significant portions in French and German, and some representation for Latin, Dutch, Italian, Polish, Greek and Portuguese.

I can't wait to try some LLMs trained exclusively on this data. Maybe we will finally get a GPT-4 class model that isn't trained on unlicensed copyrighted data.

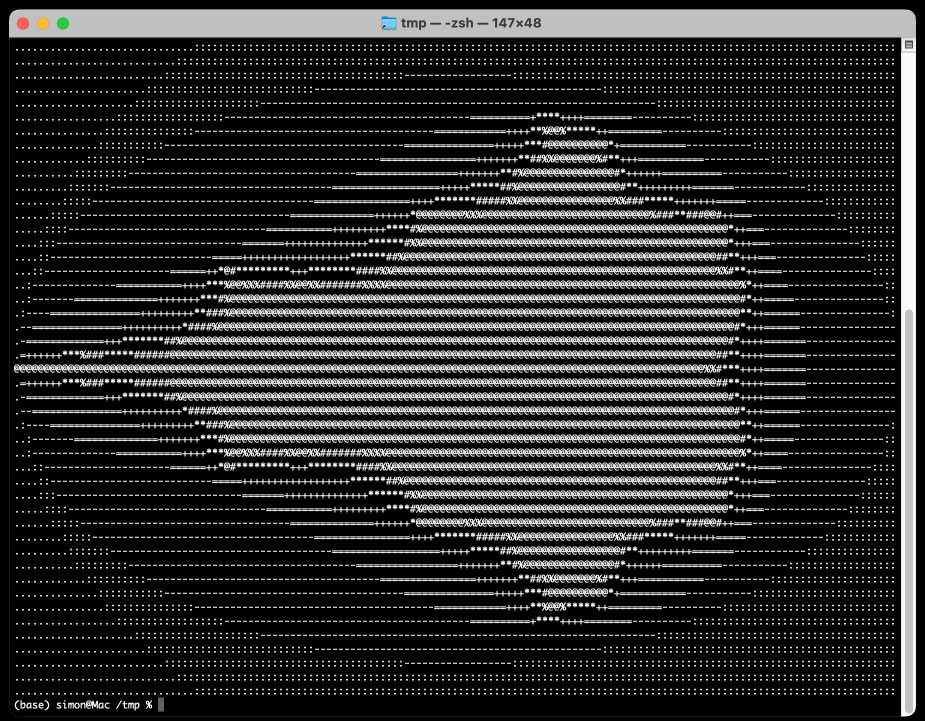

QuickTime video script to capture frames and bounding boxes. An update to an older TIL. I'm working on the write-up for my DjangoCon US talk on plugins and I found myself wanting to capture individual frames from the video in two formats: a full frame capture, and another that captured just the portion of the screen shared from my laptop.

I have a script for the former, so I got Claude to update my script to add support for one or more --box options, like this:

capture-bbox.sh ../output.mp4 --box '31,17,100,87' --box '0,0,50,50'

Open output.mp4 in QuickTime Player, run that script and then every time you hit a key in the terminal app it will capture three JPEGs from the current position in QuickTime Player - one for the whole screen and one each for the specified bounding box regions.

Those bounding box regions are percentages of the width and height of the image. I also got Claude to build me this interactive tool on top of cropperjs to help figure out those boxes:

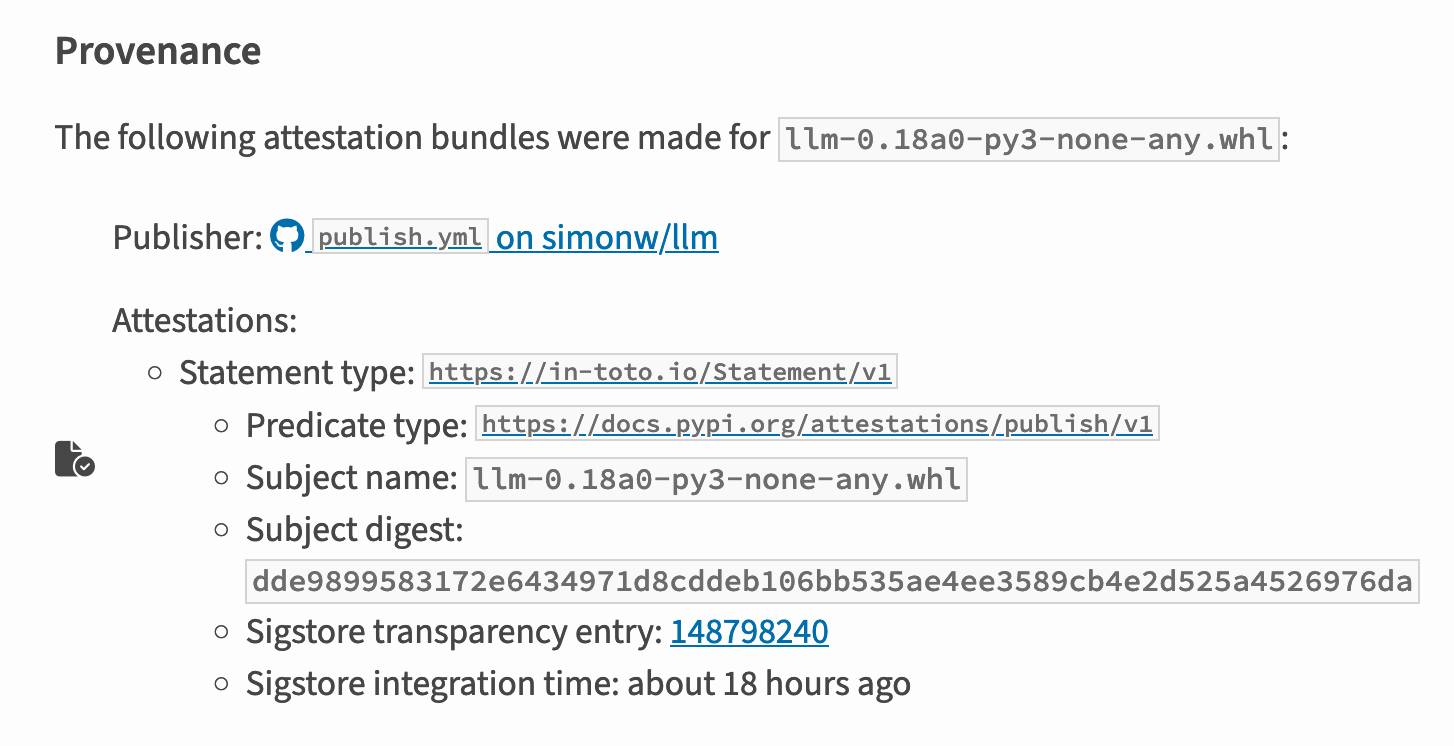

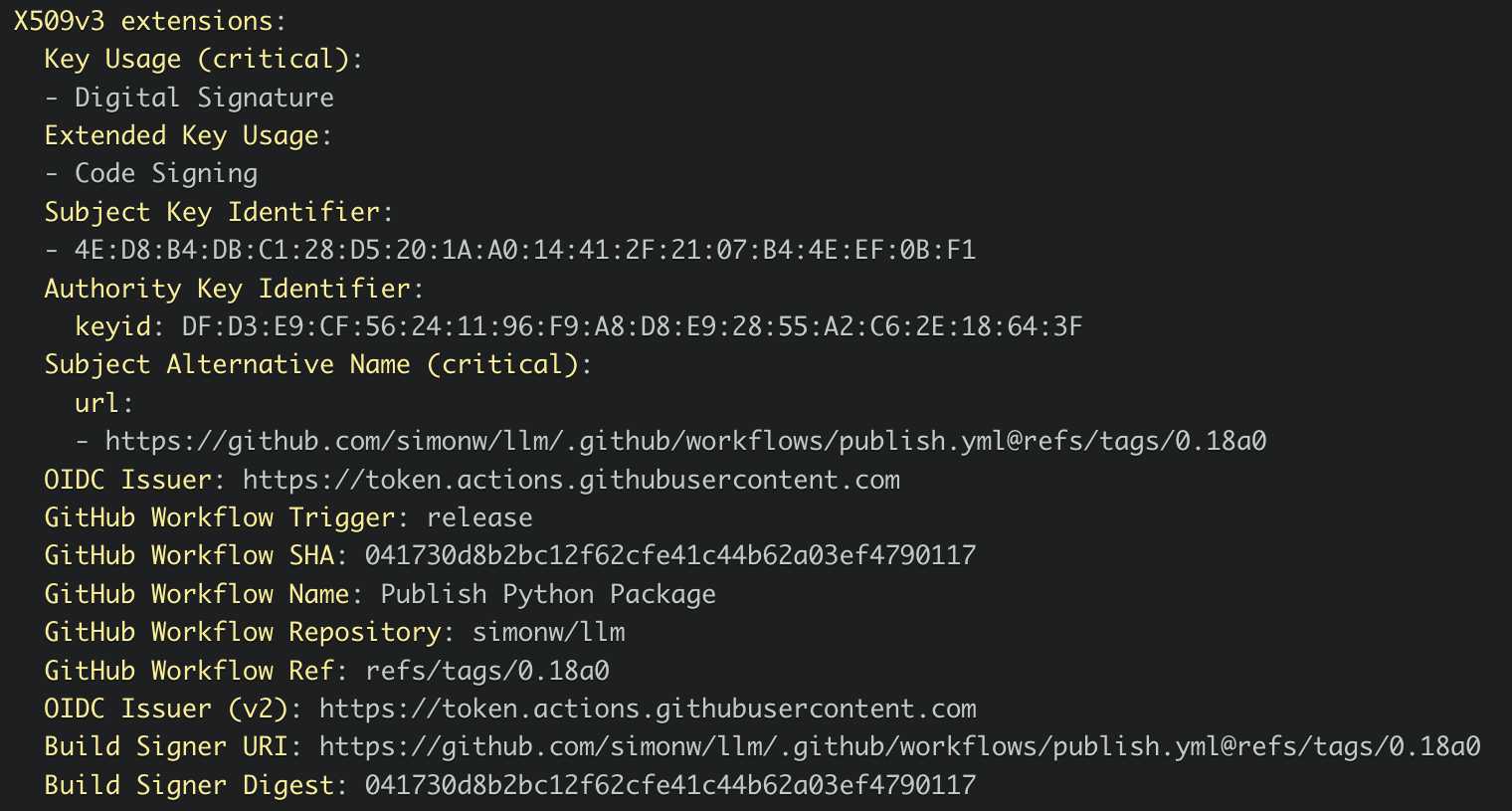
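To make the percentage-based --box values concrete, here is a minimal Python sketch of the conversion to pixel coordinates. It assumes the four numbers are left, top, right and bottom edges expressed as percentages of the frame (an assumption, though '31,17,100,87' would not fit a frame if read as x, y, width, height). The box_to_pixels name is hypothetical and this is not the code from the script itself.

```python
def box_to_pixels(box: str, width: int, height: int) -> tuple[int, int, int, int]:
    """Convert a 'left,top,right,bottom' percentage box into pixel edges.

    Assumes all four values are percentages of the full frame size.
    """
    left, top, right, bottom = (float(part) for part in box.split(","))
    return (
        round(width * left / 100),
        round(height * top / 100),
        round(width * right / 100),
        round(height * bottom / 100),
    )


# The top-left quarter of a 1920x1080 frame.
print(box_to_pixels("0,0,50,50", 1920, 1080))  # (0, 0, 960, 540)
```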

PyPI now supports digital attestations (via) Dustin Ingram:

PyPI package maintainers can now publish signed digital attestations when publishing, in order to further increase trust in the supply-chain security of their projects. Additionally, a new API is available for consumers and installers to verify published attestations.

This has been in the works for a while, and is another component of PyPI's approach to supply chain security for Python packaging - see PEP 740 – Index support for digital attestations for all of the underlying details.

A key problem this solves is cryptographically linking packages published on PyPI to the exact source code that was used to build those packages. In the absence of this feature there are no guarantees that the .tar.gz or .whl file you download from PyPI hasn't been tampered with (to add malware, for example) in a way that's not visible in the published source code.

These new attestations provide a mechanism for proving that a known, trustworthy build system was used to generate and publish the package, starting with its source code on GitHub.

The good news is that if you're using the PyPI Trusted Publishers mechanism in GitHub Actions to publish packages, you're already using this new system. I wrote about that system in January: Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions - and hundreds of my own PyPI packages are already using that system, thanks to my various cookiecutter templates.

Trail of Bits helped build this feature, and provide extra background about it on their own blog in Attestations: A new generation of signatures on PyPI:

As of October 29, attestations are the default for anyone using Trusted Publishing via the PyPA publishing action for GitHub. That means roughly 20,000 packages can now attest to their provenance by default, with no changes needed.

They also built Are we PEP 740 yet? (key implementation here) to track the rollout of attestations across the 360 most downloaded packages from PyPI. It works by hitting URLs such as https://pypi.org/simple/pydantic/ with an Accept: application/vnd.pypi.simple.v1+json header - here's the JSON that returns.
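A minimal sketch of that kind of check in Python, using only the standard library. The URL and Accept header come from the description above; the assumption that each entry in the JSON "files" list carries a "provenance" key when attestations exist is my reading of PEP 740, and the function names are hypothetical. This is not the tracker's actual implementation.

```python
import json
import urllib.request


def simple_api_request(package: str) -> urllib.request.Request:
    """Build a request for the JSON form of PyPI's simple index for a package."""
    return urllib.request.Request(
        f"https://pypi.org/simple/{package}/",
        headers={"Accept": "application/vnd.pypi.simple.v1+json"},
    )


def files_with_provenance(index: dict) -> list[str]:
    """List filenames whose entry has a non-empty 'provenance' value.

    Assumes the PEP 740 'provenance' key; files without it are skipped.
    """
    return [
        entry["filename"]
        for entry in index.get("files", [])
        if entry.get("provenance")
    ]


def fetch_index(package: str) -> dict:
    """Fetch and parse the index (performs a network request)."""
    with urllib.request.urlopen(simple_api_request(package)) as response:
        return json.load(response)
```

Calling files_with_provenance(fetch_index("pydantic")) would then return the release files that advertise provenance, if any.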

I published an alpha package using Trusted Publishers last night and the files for that release are showing the new provenance information already:

Which links to this Sigstore log entry with more details, including the Git hash that was used to build the package:

Sigstore is a transparency log maintained by Open Source Security Foundation (OpenSSF), a sub-project of the Linux Foundation.

Anthropic declined to comment, but referred Bloomberg News to a five-hour podcast featuring Chief Executive Officer Dario Amodei that was released Monday.

"People call them scaling laws. That's a misnomer," he said on the podcast. "They're not laws of the universe. They're empirical regularities. I am going to bet in favor of them continuing, but I'm not certain of that."

[...]

An Anthropic spokesperson said the language about Opus was removed from the website as part of a marketing decision to only show available and benchmarked models. Asked whether Opus 3.5 would still be coming out this year, the spokesperson pointed to Amodei’s podcast remarks. In the interview, the CEO said Anthropic still plans to release the model but repeatedly declined to commit to a timetable.

— OpenAI, Google and Anthropic Are Struggling to Build More Advanced AI, Rachel Metz, Shirin Ghaffary, Dina Bass, and Julia Love for Bloomberg

OpenAI Public Bug Bounty. Reading this investigation of the security boundaries of OpenAI's Code Interpreter environment helped me realize that the rules for OpenAI's public bug bounty inadvertently double as the missing details for a whole bunch of different aspects of their platform.

This description of Code Interpreter is significantly more useful than their official documentation!

Code execution from within our sandboxed Python code interpreter is out of scope. (This is an intended product feature.) When the model executes Python code it does so within a sandbox. If you think you've gotten RCE outside the sandbox, you must include the output of uname -a. A result like the following indicates that you are inside the sandbox -- specifically note the 2016 kernel version:

Linux 9d23de67-3784-48f6-b935-4d224ed8f555 4.4.0 #1 SMP Sun Jan 10 15:06:54 PST 2016 x86_64 x86_64 x86_64 GNU/Linux

Inside the sandbox you would also see sandbox as the output of whoami, and as the only user in the output of ps.

Nov. 15, 2024

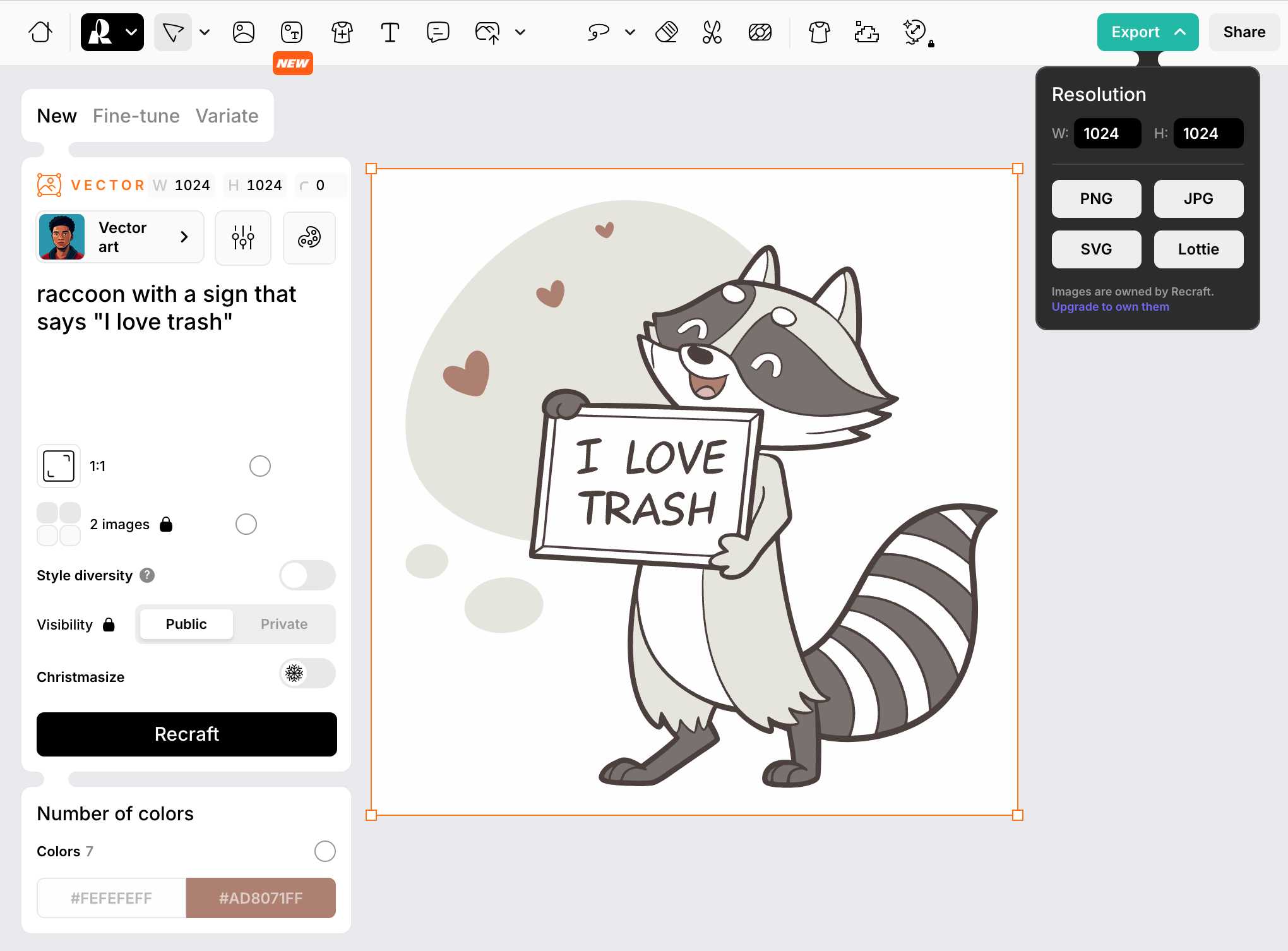

Recraft V3. Recraft are a generative AI design tool startup based out of London who released their v3 model a few weeks ago. It's currently sat at the top of the Artificial Analysis Image Arena Leaderboard, beating Midjourney and Flux 1.1 pro.

The thing that impressed me is that it can generate both raster and vector graphics... and the vector graphics can be exported as SVG!

Here's what I got for raccoon with a sign that says "I love trash" - SVG here.

That's an editable SVG - when I open it up in Pixelmator I can select and modify the individual paths and shapes:


They also have an API. I spent $1 on 1000 credits and then spent 80 credits (8 cents) making this SVG of a pelican riding a bicycle, using my API key stored in 1Password:

export RECRAFT_API_TOKEN="$(

op item get recraft.ai --fields label=password \

--format json | jq .value -r)"

curl https://external.api.recraft.ai/v1/images/generations \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $RECRAFT_API_TOKEN" \

-d '{

"prompt": "california brown pelican riding a bicycle",

"style": "vector_illustration",

"model": "recraftv3"

}'

Voting opens for Oxford Word of the Year 2024 (via) One of the options is slop!

slop (n.): Art, writing, or other content generated using artificial intelligence, shared and distributed online in an indiscriminate or intrusive way, and characterized as being of low quality, inauthentic, or inaccurate.

Update 1st December: Slop lost to Brain rot

Nov. 16, 2024

NuExtract 1.5. Structured extraction - where an LLM helps turn unstructured text (or image content) into structured data - remains one of the most directly useful applications of LLMs.

NuExtract is a family of small models directly trained for this purpose (though text only at the moment) and released under the MIT license.

It comes in a variety of shapes and sizes:

- NuExtract-v1.5 is a 3.8B parameter model fine-tuned on Phi-3.5-mini instruct. You can try this one out in this playground.

- NuExtract-tiny-v1.5 is 494M parameters, fine-tuned on Qwen2.5-0.5B.

- NuExtract-1.5-smol is 1.7B parameters, fine-tuned on SmolLM2-1.7B.

All three models were fine-tuned on NuMind's "private high-quality dataset". It's interesting to see a model family that uses one fine-tuning set against three completely different base models.

Useful tip from Steffen Röcker:

Make sure to use it with low temperature, I've uploaded NuExtract-tiny-v1.5 to Ollama and set it to 0. With the Ollama default of 0.7 it started repeating the input text. It works really well despite being so smol.
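If you call Ollama over its local HTTP API instead of baking the setting into the model, the temperature can be passed per request. The sketch below builds (but does not send) such a request using Ollama's /api/generate endpoint and its options field. The model tag and prompt are placeholders, since the tag the model was uploaded under and NuExtract's prompt template are not given here, and build_generate_request is a hypothetical helper name.

```python
import json
import urllib.request


def build_generate_request(
    model: str, prompt: str, temperature: float = 0.0
) -> urllib.request.Request:
    """Build a POST request for a local Ollama server with an explicit temperature."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,
        # Per-request override of the model's default sampling temperature.
        "options": {"temperature": temperature},
    }
    return urllib.request.Request(
        "http://localhost:11434/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "nuextract-placeholder" is a stand-in tag, not a real model name.
request = build_generate_request("nuextract-placeholder", "<extraction prompt here>")
print(json.loads(request.data)["options"])
```

Passing the request to urllib.request.urlopen would send it to a running Ollama server.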

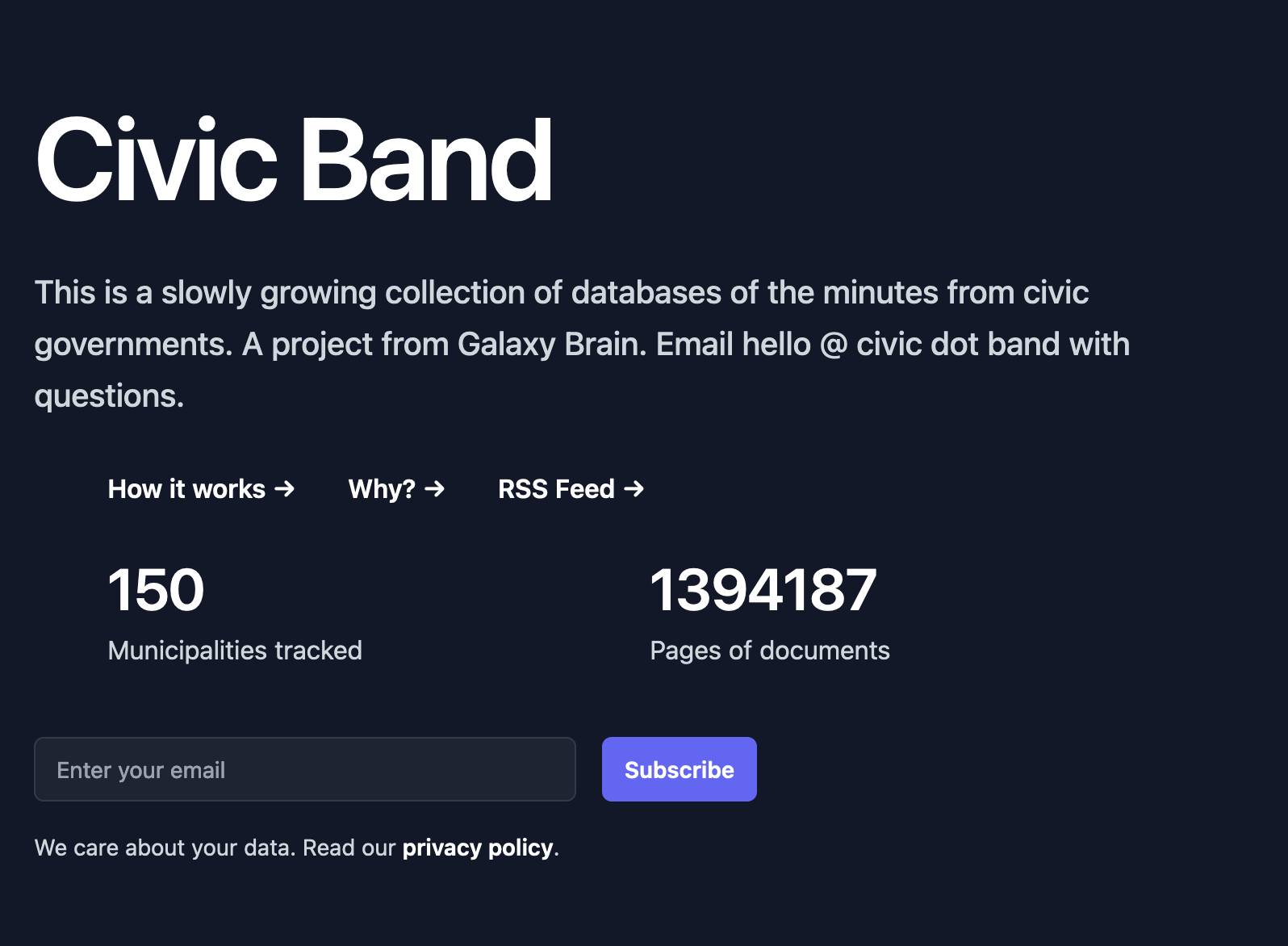

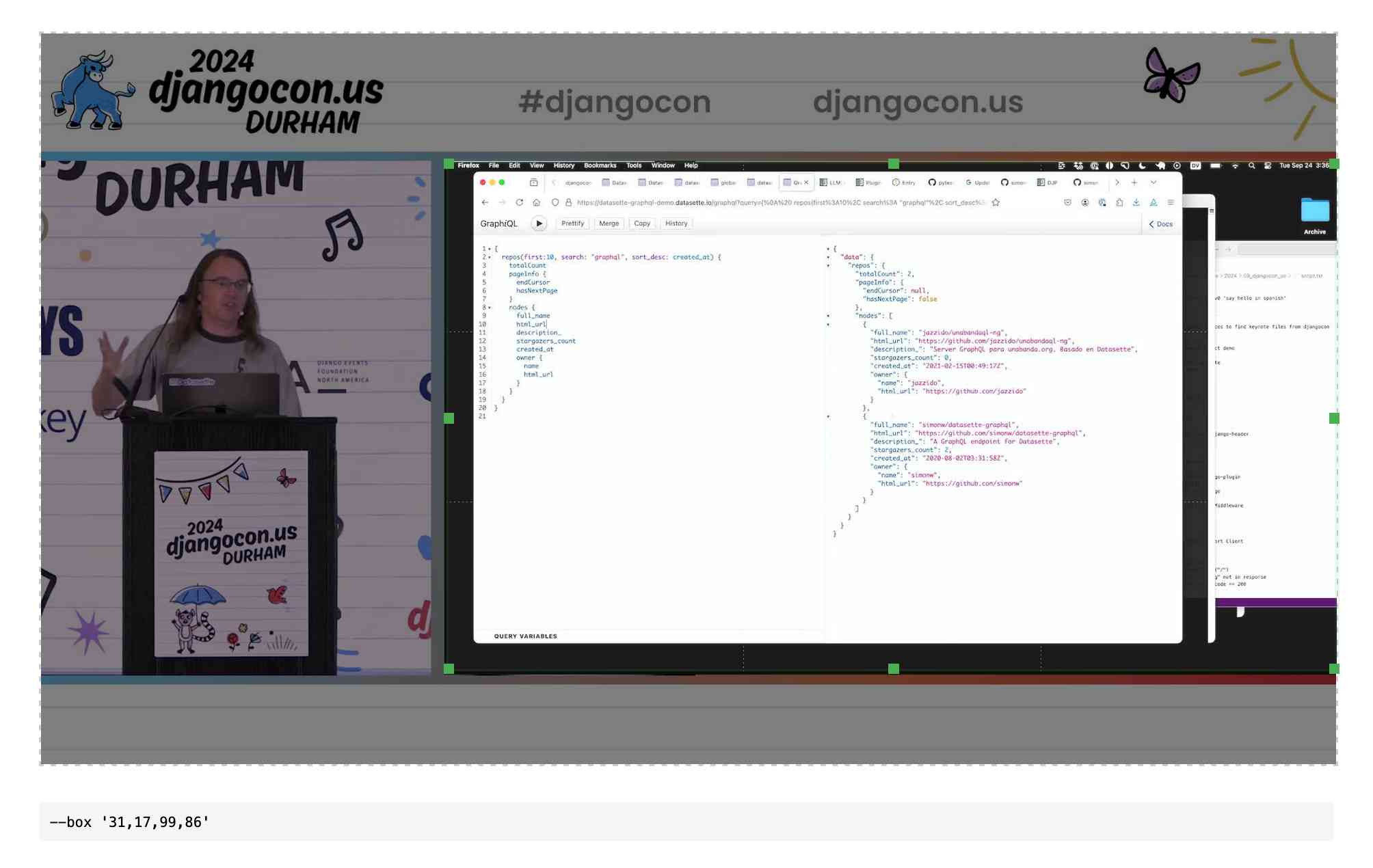

Project: Civic Band—scraping and searching PDF meeting minutes from hundreds of municipalities

I interviewed Philip James about Civic Band, his “slowly growing collection of databases of the minutes from civic governments”. Philip demonstrated the site and talked through his pipeline for scraping and indexing meeting minutes from many different local government authorities around the USA.

[... 762 words]

Nov. 17, 2024

LLM 0.18. New release of LLM. The big new feature is asynchronous model support - you can now use supported models in async Python code like this:

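import asyncio

import llm

async def main():

    model = llm.get_async_model("gpt-4o")

    # Stream chunks of the response as they arrive

    async for chunk in model.prompt(

        "Five surprising names for a pet pelican"

    ):

        print(chunk, end="", flush=True)

asyncio.run(main())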

Also new in this release: support for sending audio attachments to OpenAI's gpt-4o-audio-preview model.

Nov. 18, 2024

llm-gemini 0.4. New release of my llm-gemini plugin, adding support for asynchronous models (see LLM 0.18), plus the new gemini-exp-1114 model (currently at the top of the Chatbot Arena) and a -o json_object 1 option to force JSON output.

I also released llm-claude-3 0.9 which adds asynchronous support for the Claude family of models.

The main innovation here is just using more data. Specifically, Qwen2.5 Coder is a continuation of an earlier Qwen 2.5 model. The original Qwen 2.5 model was trained on 18 trillion tokens spread across a variety of languages and tasks (e.g, writing, programming, question answering). Qwen 2.5-Coder sees them train this model on an additional 5.5 trillion tokens of data. This means Qwen has been trained on a total of ~23T tokens of data – for perspective, Facebook’s LLaMa3 models were trained on about 15T tokens. I think this means Qwen is the largest publicly disclosed number of tokens dumped into a single language model (so far).

Qwen: Extending the Context Length to 1M Tokens (via) The new Qwen2.5-Turbo boasts a million token context window (up from 128,000 for Qwen 2.5) and faster performance:

Using sparse attention mechanisms, we successfully reduced the time to first token for processing a context of 1M tokens from 4.9 minutes to 68 seconds, achieving a 4.3x speedup.

The benchmarks they've published look impressive, including a 100% score on the 1M-token passkey retrieval task (not the first model to achieve this).

There's a catch: unlike previous models in the Qwen 2.5 series, this one doesn't appear to have been released as open weights. It's available exclusively via their (inexpensive) paid API, for which it looks like you may need a +86 Chinese phone number.

Pixtral Large (via) New today from Mistral:

Today we announce Pixtral Large, a 124B open-weights multimodal model built on top of Mistral Large 2. Pixtral Large is the second model in our multimodal family and demonstrates frontier-level image understanding.

The weights are out on Hugging Face (over 200GB to download, and you'll need a hefty GPU rig to run them). The license is free for academic research but you'll need to pay for commercial usage.

The new Pixtral Large model is available through their API, as models called pixtral-large-2411 and pixtral-large-latest.

Here's how to run it using LLM and the llm-mistral plugin:

llm install -U llm-mistral

llm keys set mistral

# paste in API key

llm mistral refresh

llm -m mistral/pixtral-large-latest describe -a https://static.simonwillison.net/static/2024/pelicans.jpg

The image shows a large group of birds, specifically pelicans, congregated together on a rocky area near a body of water. These pelicans are densely packed together, some looking directly at the camera while others are engaging in various activities such as preening or resting. Pelicans are known for their large bills with a distinctive pouch, which they use for catching fish. The rocky terrain and the proximity to water suggest this could be a coastal area or an island where pelicans commonly gather in large numbers. The scene reflects a common natural behavior of these birds, often seen in their nesting or feeding grounds.

Update: I released llm-mistral 0.8 which adds async model support for the full Mistral line, plus a new llm -m mistral-large shortcut alias for the Mistral Large model.

Nov. 19, 2024

Security means securing people where they are (via) William Woodruff is an Engineering Director at Trail of Bits who worked on the recent PyPI digital attestations project.

That feature is based around open standards but launched with an implementation against GitHub, which resulted in push back (and even some conspiracy theories) that PyPI were deliberately favoring GitHub over other platforms.

William argues here for pragmatism over ideology:

Being serious about security at scale means meeting users where they are. In practice, this means deciding how to divide a limited pool of engineering resources such that the largest demographic of users benefits from a security initiative. This results in a fundamental bias towards institutional and pre-existing services, since the average user belongs to these institutional services and does not personally particularly care about security. Participants in open source can and should work to counteract this institutional bias, but doing so as a matter of ideological purity undermines our shared security interests.

Preview: Gemini API Additional Terms of Service. Google sent out an email last week linking to this preview of upcoming changes to the Gemini API terms. Key paragraph from that email:

To maintain a safe and responsible environment for all users, we're enhancing our abuse monitoring practices for Google AI Studio and Gemini API. Starting December 13, 2024, Gemini API will log prompts and responses for Paid Services, as described in the terms. These logs are only retained for a limited time (55 days) and are used solely to detect abuse and for required legal or regulatory disclosures. These logs are not used for model training. Logging for abuse monitoring is standard practice across the global AI industry. You can preview the updated Gemini API Additional Terms of Service, effective December 13, 2024.

That "for required legal or regulatory disclosures" piece makes it sound like somebody could subpoena Google to gain access to your logged Gemini API calls.

It's not clear to me if this is a change from their current policy though, other than the number of days of log retention increasing from 30 to 55 (and I'm having trouble finding that 30 day number written down anywhere.)

That same email also announced the deprecation of the older Gemini 1.0 Pro model:

Gemini 1.0 Pro will be discontinued on February 15, 2025.

Notes from Bing Chat—Our First Encounter With Manipulative AI

I participated in an Ars Live conversation with Benj Edwards of Ars Technica today, talking about that wild period of LLM history last year when Microsoft launched Bing Chat and it instantly started misbehaving, gaslighting and defaming people.

[... 438 words]

Understanding the BM25 full text search algorithm (via) Evan Schwartz provides a deep dive explanation of how the classic BM25 search relevance scoring function works, including a very useful breakdown of the mathematics it uses.
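As a rough illustration of the scoring function being discussed, here is a compact BM25 sketch in Python. It uses the common textbook formulation with the "+1 inside the log" IDF variant, whitespace tokenization and default parameters k1=1.2 and b=0.75. All of those are my choices for the example and are not taken from Evan's article.

```python
import math
from collections import Counter


def bm25_scores(query: str, documents: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Score each document against the query using a basic BM25 formula."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(doc) for doc in tokenized) / n_docs

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for doc in tokenized:
        doc_freq.update(set(doc))

    scores = []
    for doc in tokenized:
        term_freq = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            # Rare terms get a higher inverse document frequency weight.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Term frequency saturates via k1; b controls length normalization.
            denominator = term_freq[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / denominator
        scores.append(score)
    return scores


docs = [
    "the pelican rides a bicycle",
    "a raccoon with a sign",
    "pelican pelican pelican",
]
print(bm25_scores("pelican", docs))
```

The second document scores zero because it never mentions the query term, and the third outranks the first because the term appears more often in a shorter document.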

Using uv with PyTorch (via) PyTorch is a notoriously tricky piece of Python software to install, due to the need to provide separate wheels for different combinations of Python version and GPU accelerator (e.g. different CUDA versions).

uv now has dedicated documentation for PyTorch which I'm finding really useful - it clearly explains the challenge and then shows exactly how to configure a pyproject.toml such that uv knows which version of each package it should install from where.

OpenStreetMap vector tiles demo (via) Long-time OpenStreetMap developer Paul Norman has been working on adding vector tile support to OpenStreetMap for quite a while. Paul recently announced that vector.openstreetmap.org is now serving vector tiles (in Mapbox Vector Tiles (MVT) format) - here's his interactive demo for seeing what they look like.

Nov. 20, 2024

Bluesky WebSocket Firehose. Very quick (10 seconds of Claude hacking) prototype of a web page that attaches to the public Bluesky WebSocket firehose and displays the results directly in your browser.

Here's the code - there's very little to it, it's basically opening a connection to wss://jetstream2.us-east.bsky.network/subscribe?wantedCollections=app.bsky.feed.post and logging out the results to a <textarea readonly> element.

Bluesky's Jetstream isn't their main atproto firehose - that's a more complicated protocol involving CBOR data and CAR files. Jetstream is a new Go proxy (source code here) that provides a subset of that firehose over WebSocket.

Jetstream was built by Bluesky developer Jaz, initially as a side-project, in response to the surge of traffic they received back in September when Brazil banned Twitter. See Jetstream: Shrinking the AT Proto Firehose by >99% for their description of the project when it first launched.
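For anyone wanting to try the same endpoint outside the browser, here is a small Python sketch of the two pieces that don't depend on a WebSocket library: building the subscription URL and pulling the post text out of one message. The message shape (a "commit" object containing a "record" with a "text" field) is an assumption about Jetstream's JSON output, and both function names are hypothetical.

```python
import json
from urllib.parse import urlencode

JETSTREAM_BASE = "wss://jetstream2.us-east.bsky.network/subscribe"


def jetstream_url(collections: list[str]) -> str:
    """Build a subscribe URL with one wantedCollections parameter per collection."""
    query = urlencode([("wantedCollections", name) for name in collections])
    return f"{JETSTREAM_BASE}?{query}"


def post_text(raw_message: str):
    """Return the post text from one JSON message, or None if there isn't any.

    Assumes the event looks like {"commit": {"record": {"text": ...}}}.
    """
    event = json.loads(raw_message)
    record = event.get("commit", {}).get("record", {})
    return record.get("text")


print(jetstream_url(["app.bsky.feed.post"]))
```

Connecting to that URL with any WebSocket client and passing each received message through post_text would give a stream of post text.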

The API scene growing around Bluesky is really exciting right now. Twitter's API is so expensive it may as well not exist, and Mastodon's community have pushed back against many potential uses of the Mastodon API as incompatible with that community's value system.

Hacking on Bluesky feels reminiscent of the massive diversity of innovation we saw around Twitter back in the late 2000s and early 2010s.

Here's a much more fun Bluesky demo by Theo Sanderson: firehose3d.theo.io (source code here) which displays the firehose from that same WebSocket endpoint in the style of a Windows XP screensaver.

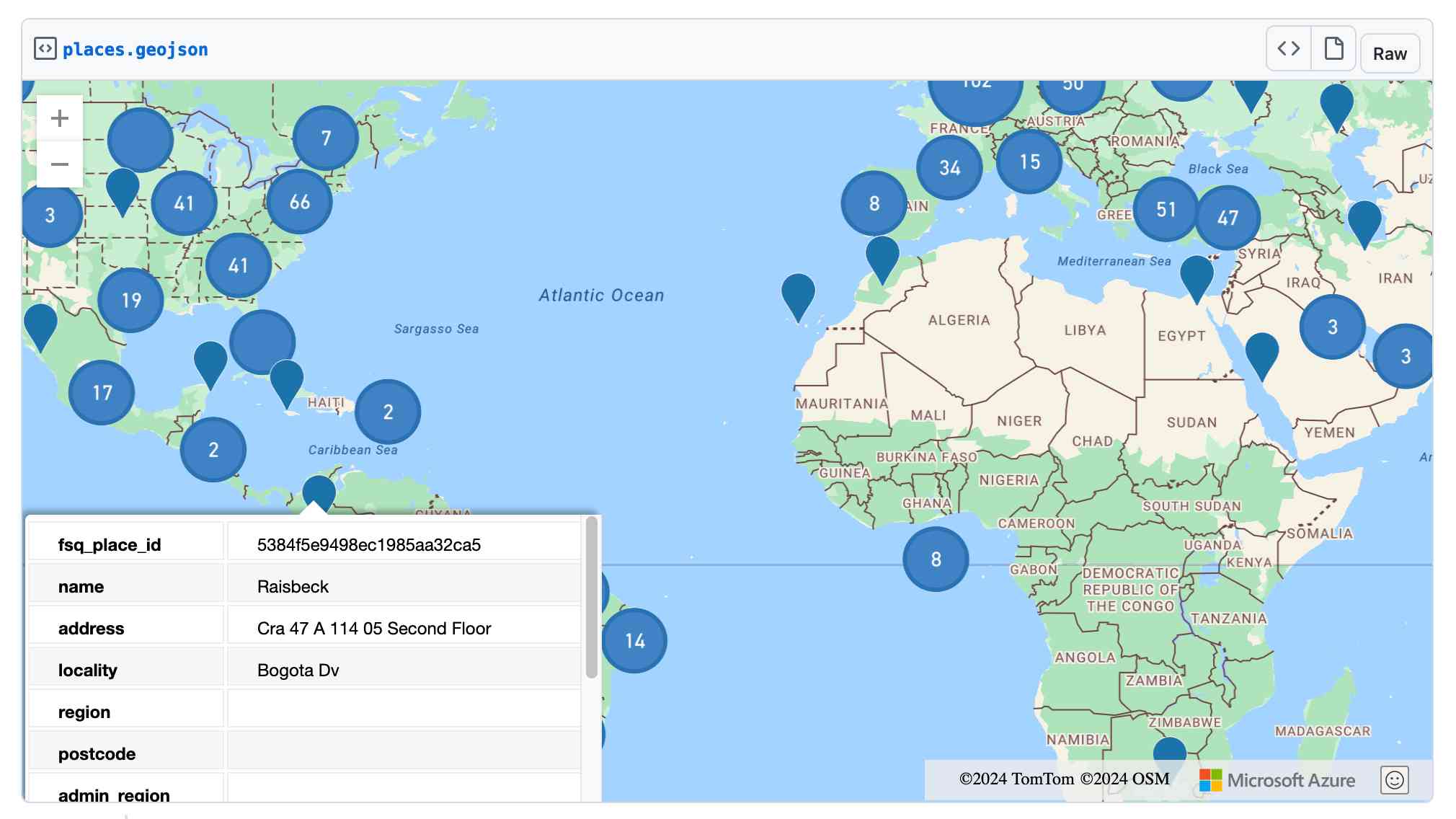

Foursquare Open Source Places: A new foundational dataset for the geospatial community (via) I did not expect this!

[...] we are announcing today the general availability of a foundational open data set, Foursquare Open Source Places ("FSQ OS Places"). This base layer of 100mm+ global places of interest ("POI") includes 22 core attributes (see schema here) that will be updated monthly and available for commercial use under the Apache 2.0 license framework.

The data is available as Parquet files hosted on Amazon S3.

Here's how to list the available files:

aws s3 ls s3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/

I got back places-00000.snappy.parquet through places-00024.snappy.parquet, each file around 455MB for a total of 10.6GB of data.

I ran duckdb and then used DuckDB's ability to remotely query Parquet on S3 to explore the data a bit more without downloading it to my laptop first:

select count(*) from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-00000.snappy.parquet';

This got back 4,180,424 - that number is similar for each file, suggesting around 104,000,000 records total.
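The back-of-envelope estimate, written out in Python (it assumes every one of the 25 files holds about as many rows as the first):

```python
rows_in_first_file = 4_180_424
file_count = 25  # places-00000 through places-00024

# Assume each file holds roughly the same number of rows as the first.
estimated_total = rows_in_first_file * file_count
print(f"{estimated_total:,}")  # 104,510,600
```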

Update: DuckDB can use wildcards in S3 paths (thanks, Paul) so this query provides an exact count:

select count(*) from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-*.snappy.parquet';

That returned 104,511,073 - and Activity Monitor on my Mac confirmed that DuckDB only needed to fetch 1.2MB of data to answer that query.

I ran this query to retrieve 1,000 places from that first file as newline-delimited JSON:

copy (

select * from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-00000.snappy.parquet'

limit 1000

) to '/tmp/places.json';

Here's that places.json file, and here it is imported into Datasette Lite.

Finally, I got ChatGPT Code Interpreter to convert that file to GeoJSON and pasted the result into this Gist, giving me a map of those thousand places (because Gists automatically render GeoJSON):

Nov. 21, 2024

When we started working on what became NotebookLM in the summer of 2022, we could fit about 1,500 words in the context window. Now we can fit up to 1.5 million words. (And using various other tricks, effectively fit 25 million words.) The emergence of long context models is, I believe, the single most unappreciated AI development of the past two years, at least among the general public. It radically transforms the utility of these models in terms of actual, practical applications.

TextSynth Server (via) I'd missed this: Fabrice Bellard (yes, that Fabrice Bellard) has a project called TextSynth Server which he describes like this:

ts_server is a web server proposing a REST API to large language models. They can be used for example for text completion, question answering, classification, chat, translation, image generation, ...

It has the following characteristics:

- All is included in a single binary. Very few external dependencies (Python is not needed) so installation is easy.

- Supports many Transformer variants (GPT-J, GPT-NeoX, GPT-Neo, OPT, Fairseq GPT, M2M100, CodeGen, GPT2, T5, RWKV, LLAMA, Falcon, MPT, Llama 3.2, Mistral, Mixtral, Qwen2, Phi3, Whisper) and Stable Diffusion.

- [...]

Unlike many of his other notable projects (such as FFmpeg, QEMU, QuickJS) this isn't open source - in fact it's not even source available. Instead, you can download compiled binaries for Linux or Windows that are available for non-commercial use only.

Commercial terms are available, or you can visit textsynth.com and pre-pay for API credits which can then be used with the hosted REST API there.

This is not a new project: the earliest evidence I could find of it was this July 2019 page in the Internet Archive, which said:

Text Synth is build using the GPT-2 language model released by OpenAI. [...] This implementation is original because instead of using a GPU, it runs using only 4 cores of a Xeon E5-2640 v3 CPU at 2.60GHz. With a single user, it generates 40 words per second. It is programmed in plain C using the LibNC library.

How some of the world’s most brilliant computer scientists got password policies so wrong (via) Stuart Schechter blames Robert Morris and Ken Thompson for the dire state of passwords today:

The story of why password rules were recommended and enforced without scientific evidence since their invention in 1979 is a story of brilliant people, at the very top of their field, whose well-intentioned recommendations led to decades of ignorance.

As Stuart describes it, their first mistake was inventing password policies (the ones about having at least one special character in a password) without testing that these would genuinely help the average user create a more secure password. Their second mistake was introducing one-way password hashing, which made the terrible password choices of users invisible to administrators of these systems!

As a result of Morris and Thompson’s recommendations, and those who believed their assumptions without evidence, it was not until well into the 21st century that the scientific community learned just how ineffective password policies were. This period of ignorance finally came to an end, in part, because hackers started stealing password databases from large websites and publishing them.

Stuart suggests using public-private key cryptography for passwords instead, which would allow passwords to be stored securely while still letting researchers who hold the private key analyze them. He notes that this is a tough proposal to pitch today:

Alas, to my knowledge, nobody has ever used this approach, because after Morris and Thompson’s paper storing passwords in any form that can be reversed became taboo.