Sunday, 24th November 2024

Whatever you think of capitalism, the evidence is overwhelming: Social networks with a single proprietor have trouble with long-term survival, and those that do survive have trouble with user-experience quality: see Enshittification.

The evidence is also perfectly clear that it doesn’t have to be this way. The original social network, email, is now into its sixth decade of vigorous life. It ain’t perfect but it is essential, and not in any serious danger.

The single crucial difference between email and all those other networks — maybe the only significant difference — is that nobody owns or controls it.

— Tim Bray, Why Not Bluesky

Is async Django ready for prime time? (via) Jonathan Adly reports on his experience using Django to build ColiVara, a hosted RAG API that uses ColQwen2 visual embeddings, inspired by the ColPali paper.

In a breach of Betteridge's law of headlines, the answer to the question posed by this headline is “yes”.

We believe async Django is ready for production. In theory, there should be no performance loss when using async Django instead of FastAPI for the same tasks.

The ColiVara application is itself open source, and you can see how it makes use of Django’s relatively new asynchronous ORM features in the api/views.py module.

I also picked up a useful trick from their Dockerfile: if you want uv in a container you can install it with this one-liner:

COPY --from=ghcr.io/astral-sh/uv:latest /uv /bin/uv

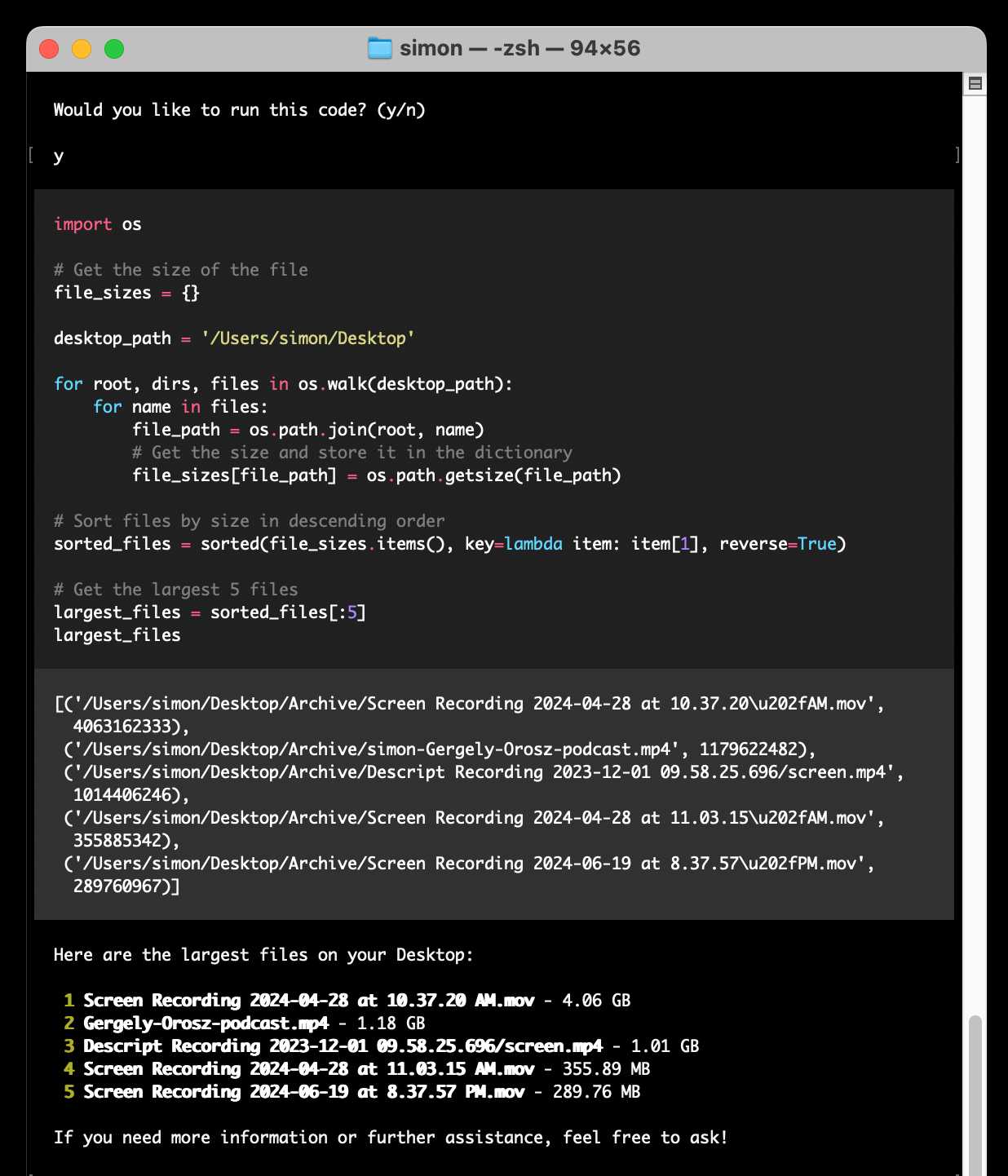

open-interpreter (via) This "natural language interface for computers" open source ChatGPT Code Interpreter alternative has been around for a while, but today I finally got around to trying it out.

Here's how I ran it (without first installing anything) using uv:

uvx --from open-interpreter interpreter

The default mode asks you for an OpenAI API key so it can use gpt-4o - there are a multitude of other options, including the ability to use local models with interpreter --local.

It runs in your terminal and works by generating Python code to help answer your questions, asking your permission to run it and then executing it directly on your computer.
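Here's a minimal sketch of that confirm-then-execute loop. It is not open-interpreter's actual implementation: the `confirm_and_run` name, the prompt wording and the injectable `ask` parameter are all assumptions made for illustration.

```python
import contextlib
import io


def confirm_and_run(code, ask=input):
    """Show generated code, ask for approval, and only then execute it.

    Returns the captured stdout, or None if the user declined.
    `ask` is injectable so the prompt can be replaced in tests.
    """
    print("Proposed code:\n" + code)
    answer = ask("Run this code? (y/n) ").strip().lower()
    if answer != "y":
        return None

    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        # exec() runs with the full privileges of the current process.
        # Nothing here is sandboxed: the human review is the only safeguard.
        exec(code, {"__name__": "__generated__"})
    return buffer.getvalue()
```

Calling `confirm_and_run("print(1 + 1)")` would print the code, wait for a "y", and return whatever the code printed. The point of the sketch is how thin the protection is: everything depends on the person reading the code before approving it.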

I pasted in an API key and then prompted it with this:

find largest files on my desktop

Here's the full transcript.

Since code is run directly on your machine there are all sorts of ways things could go wrong if you don't carefully review the generated code before hitting "y". The team have an experimental safe mode in development which works by scanning generated code with semgrep. I'm not convinced by that approach: I think executing code in a sandbox would be a much more robust solution here, but sandboxing Python is still a very difficult problem.

They do at least have an experimental Docker integration.

follow_theirs.py. Hamel Husain wrote this Python script on top of the atproto Python library for interacting with Bluesky, which lets you specify another user and then follows every account that user is following.

I forked it and added two improvements: inline PEP 723 dependencies, and calls to input() and getpass.getpass() that interactively ask for the credentials needed to run the script.

This means you can run my version using uv run like this:

uv run https://gist.githubusercontent.com/simonw/848a3b91169a789bc084a459aa7ecf83/raw/397ad07c8be0601eaf272d9d5ab7675c7fd3c0cf/follow_theirs.py
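The interactive prompts use nothing beyond the standard library. Here's a rough sketch of the input() plus getpass.getpass() pattern; the function name, the prompt wording and the exact set of values collected are assumptions for illustration, not copied from the script itself.

```python
import getpass


def prompt_credentials(ask=input, ask_secret=getpass.getpass):
    """Collect the values a follow-everyone script would plausibly need.

    input() echoes what is typed, so it is used for the handles.
    getpass.getpass() hides what is typed, so it is used for the password.
    Both are injectable so the function can be exercised without a terminal.
    """
    handle = ask("Your Bluesky handle: ").strip()
    password = ask_secret("Your password: ")
    target = ask("Handle of the account whose follows to copy: ").strip()
    return handle, password, target


# Typical use inside a script:
#     handle, password, target = prompt_credentials()
```

Prompting at runtime keeps credentials out of the script and out of shell history, which matters more than usual when the script is being fetched from a URL.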

I really like this pattern of being able to create standalone Python scripts with dependencies that can be run from a URL as a one-liner. Here's the comment section at the top of the script that makes it work:

# /// script
# dependencies = [
#   "atproto"
# ]
# ///

Often, you are told to do this by treating AI like an intern. In retrospect, however, I think that this particular analogy ends up making people use AI in very constrained ways. To put it bluntly, any recent frontier model (by which I mean Claude 3.5, ChatGPT-4o, Grok 2, Llama 3.1, or Gemini Pro 1.5) is likely much better than any intern you would hire, but also weirder.

Instead, let me propose a new analogy: treat AI like an infinitely patient new coworker who forgets everything you tell them each new conversation, one that comes highly recommended but whose actual abilities are not that clear.