445 posts tagged “sqlite”

SQLite is the world's most widely deployed database engine.

2026

cysqlite—a new sqlite driver

(via)

Charles Leifer has been maintaining pysqlite3 - a fork of the Python standard library's sqlite3 module that makes it much easier to run upgraded SQLite versions - since 2018.

He's been working on a ground-up Cython rewrite called cysqlite for almost as long, but it's finally at a stage where it's ready for people to try out.

The biggest change from the sqlite3 module involves transactions. Charles explains his discomfort with the sqlite3 implementation at length - that library provides two different variants neither of which exactly match the autocommit mechanism in SQLite itself.

I'm particularly excited about the support for custom virtual tables, a feature I'd love to see in sqlite3 itself.

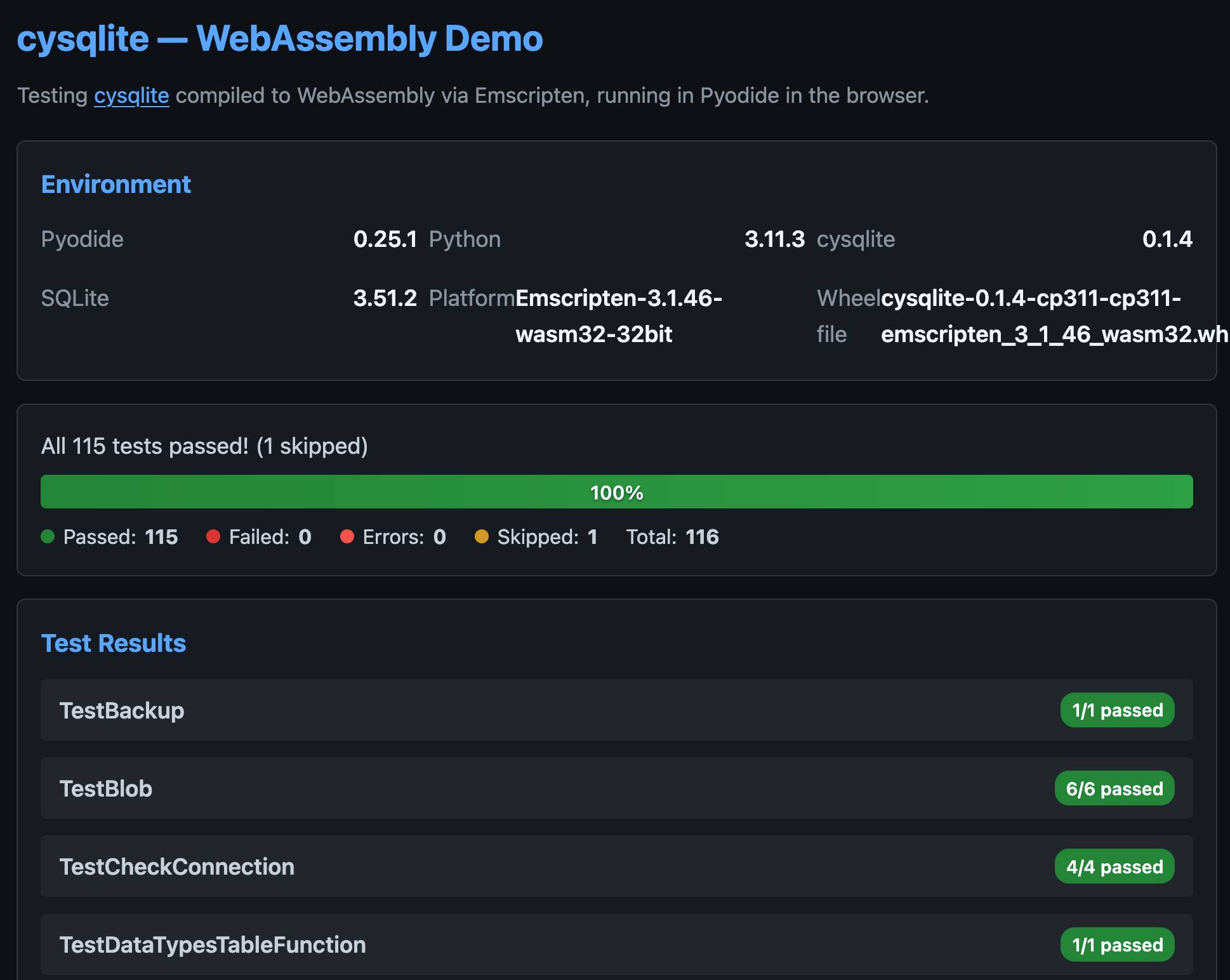

cysqlite provides a Python extension compiled from C, which means it normally wouldn't be available in Pyodide. I set Claude Code on it (here's the prompt) and it built me cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl, a 688KB wheel file with a WASM build of the library that can be loaded into Pyodide like this:

import micropip await micropip.install( "https://simonw.github.io/research/cysqlite-wasm-wheel/cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl" ) import cysqlite print(cysqlite.connect(":memory:").execute( "select sqlite_version()" ).fetchone())

(I also learned that wheels like this have to be built for the emscripten version used by that edition of Pyodide - my experimental wheel loads in Pyodide 0.25.1 but fails in 0.27.5 with a Wheel was built with Emscripten v3.1.46 but Pyodide was built with Emscripten v3.1.58 error.)

You can try my wheel in this new Pyodide REPL i had Claude build as a mobile-friendly alternative to Pyodide's own hosted console.

I also had Claude build this demo page that executes the original test suite in the browser and displays the results:

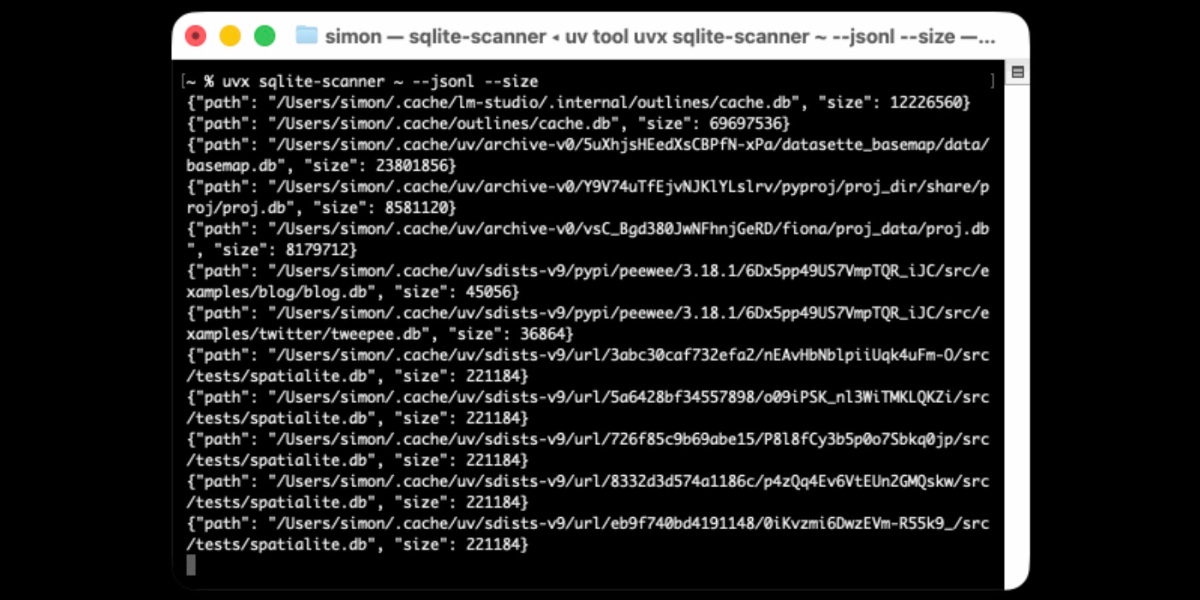

Distributing Go binaries like sqlite-scanner through PyPI using go-to-wheel

I’ve been exploring Go for building small, fast and self-contained binary applications recently. I’m enjoying how there’s generally one obvious way to do things and the resulting code is boring and readable—and something that LLMs are very competent at writing. The one catch is distribution, but it turns out publishing Go binaries to PyPI means any Go binary can be just a uvx package-name call away.

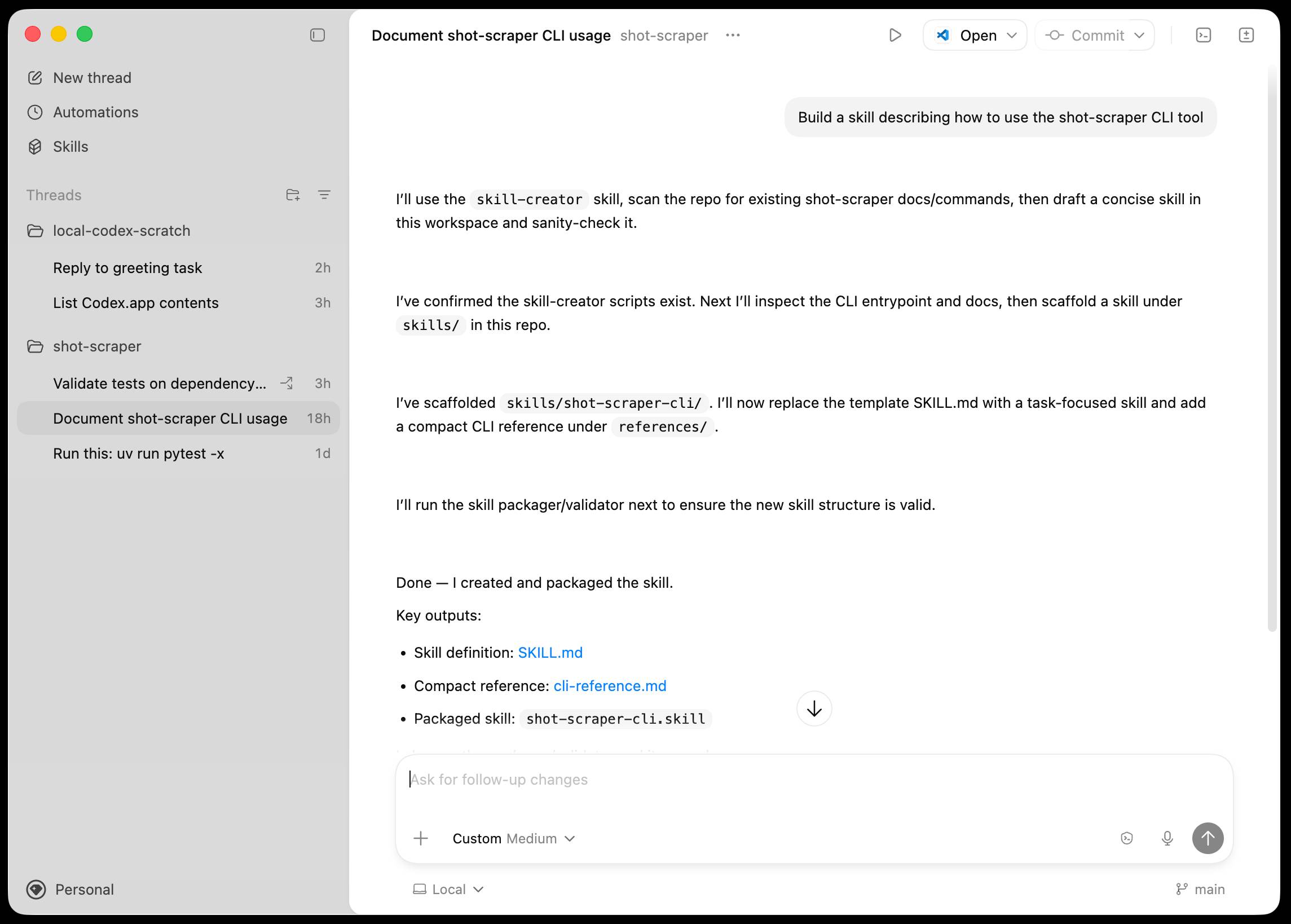

Introducing the Codex app. OpenAI just released a new macOS app for their Codex coding agent. I've had a few days of preview access - it's a solid app that provides a nice UI over the capabilities of the Codex CLI agent and adds some interesting new features, most notably first-class support for Skills, and Automations for running scheduled tasks.

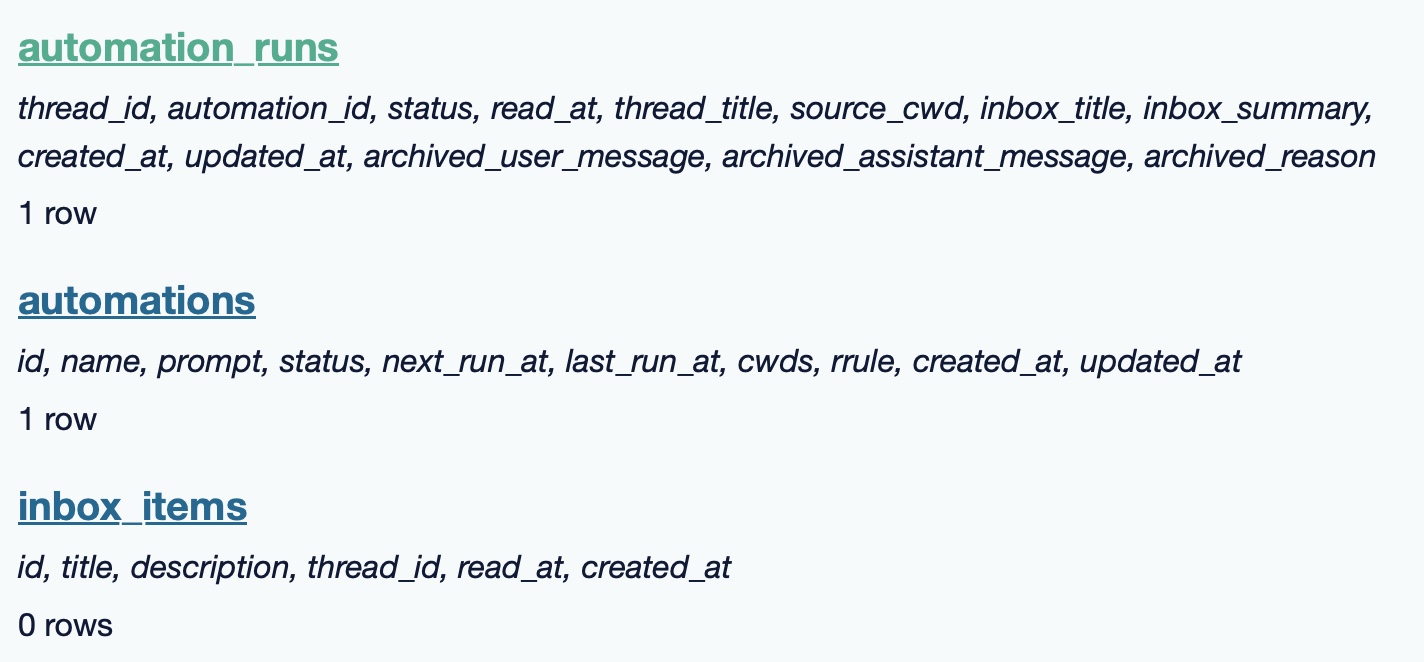

The app is built with Electron and Node.js. Automations track their state in a SQLite database - here's what that looks like if you explore it with uvx datasette ~/.codex/sqlite/codex-dev.db:

Here’s an interactive copy of that database in Datasette Lite.

The announcement gives us a hint at some usage numbers for Codex overall - the holiday spike is notable:

Since the launch of GPT‑5.2-Codex in mid-December, overall Codex usage has doubled, and in the past month, more than a million developers have used Codex.

Automations are currently restricted in that they can only run when your laptop is powered on. OpenAI promise that cloud-based automations are coming soon, which will resolve this limitation.

They chose Electron so they could target other operating systems in the future, with Windows “coming very soon”. OpenAI’s Alexander Embiricos noted on the Hacker News thread that:

it's taking us some time to get really solid sandboxing working on Windows, where there are fewer OS-level primitives for it.

Like Claude Code, Codex is really a general agent harness disguised as a tool for programmers. OpenAI acknowledge that here:

Codex is built on a simple premise: everything is controlled by code. The better an agent is at reasoning about and producing code, the more capable it becomes across all forms of technical and knowledge work. [...] We’ve focused on making Codex the best coding agent, which has also laid the foundation for it to become a strong agent for a broad range of knowledge work tasks that extend beyond writing code.

Claude Code had to rebrand to Cowork to better cover the general knowledge work case. OpenAI can probably get away with keeping the Codex name for both.

OpenAI have made Codex available to free and Go plans for "a limited time" (update: Sam Altman says two months) during which they are also doubling the rate limits for paying users.

The Design & Implementation of Sprites (via) I wrote about Sprites last week. Here's Thomas Ptacek from Fly with the insider details on how they work under the hood.

I like this framing of them as "disposable computers":

Sprites are ball-point disposable computers. Whatever mark you mean to make, we’ve rigged it so you’re never more than a second or two away from having a Sprite to do it with.

I've noticed that new Fly Machines can take a while (up to around a minute) to provision. Sprites solve that by keeping warm pools of unused machines in multiple regions, which is enabled by them all using the same container:

Now, today, under the hood, Sprites are still Fly Machines. But they all run from a standard container. Every physical worker knows exactly what container the next Sprite is going to start with, so it’s easy for us to keep pools of “empty” Sprites standing by. The result: a Sprite create doesn’t have any heavy lifting to do; it’s basically just doing the stuff we do when we start a Fly Machine.

The most interesting detail is how the persistence layer works. Sprites only charge you for data you have written that differs from the base image and provide ~300ms checkpointing and restores - it turns out that's power by a custom filesystem on top of S3-compatible storage coordinated by Litestream-replicated local SQLite metadata:

We still exploit NVMe, but not as the root of storage. Instead, it’s a read-through cache for a blob on object storage. S3-compatible object stores are the most trustworthy storage technology we have. I can feel my blood pressure dropping just typing the words “Sprites are backed by object storage.” [...]

The Sprite storage stack is organized around the JuiceFS model (in fact, we currently use a very hacked-up JuiceFS, with a rewritten SQLite metadata backend). It works by splitting storage into data (“chunks”) and metadata (a map of where the “chunks” are). Data chunks live on object stores; metadata lives in fast local storage. In our case, that metadata store is kept durable with Litestream. Nothing depends on local storage.

The most popular blogs of Hacker News in 2025 (via) Michael Lynch maintains HN Popularity Contest, a site that tracks personal blogs on Hacker News and scores them based on how well they perform on that platform.

The engine behind the project is the domain-meta.csv CSV on GiHub, a hand-curated list of known personal blogs with author and bio and tag metadata, which Michael uses to separate out personal blog posts from other types of content.

I came top of the rankings in 2023, 2024 and 2025 but I'm listed in third place for all time behind Paul Graham and Brian Krebs.

I dug around in the browser inspector and was delighted to find that the data powering the site is served with open CORS headers, which means you can easily explore it with external services like Datasette Lite.

Here's a convoluted window function query Claude Opus 4.5 wrote for me which, for a given domain, shows where that domain ranked for each year since it first appeared in the dataset:

with yearly_scores as ( select domain, strftime('%Y', date) as year, sum(score) as total_score, count(distinct date) as days_mentioned from "hn-data" group by domain, strftime('%Y', date) ), ranked as ( select domain, year, total_score, days_mentioned, rank() over (partition by year order by total_score desc) as rank from yearly_scores ) select r.year, r.total_score, r.rank, r.days_mentioned from ranked r where r.domain = :domain and r.year >= ( select min(strftime('%Y', date)) from "hn-data" where domain = :domain ) order by r.year desc

(I just noticed that the last and r.year >= ( clause isn't actually needed here.)

My simonwillison.net results show me ranked 3rd in 2022, 30th in 2021 and 85th back in 2007 - though I expect there are many personal blogs from that year which haven't yet been manually added to Michael's list.

Also useful is that every domain gets its own CORS-enabled CSV file with details of the actual Hacker News submitted from that domain, e.g. https://hn-popularity.cdn.refactoringenglish.com/domains/simonwillison.net.csv. Here's that one in Datasette Lite.

2025

But once we got that and got this aviation grade testing in place, the number of bugs just dropped to a trickle. Now we still do have bugs but the aviation grade testing allows us to move fast, which is important because in this business you either move fast or you're disrupted. So, we're able to make major changes to the structure of the code that we deliver and be confident that we're not breaking things because we had these intense tests. Probably half the time we spend is actually writing new tests, we're constantly writing new tests. And over the 17-year history, we have amassed a huge suite of tests which we run constantly.

Other database engines don't do this; don't have this level of testing. But they're still high quality, I mean, I noticed in particular, PostgreSQL is a very high-quality database engine, they don't have many bugs. I went to the PostgreSQL and ask them “how do you prevent the bugs”? We talked about this for a while. What I came away with was they've got a very elaborate peer review process, and if they've got code that has worked for 10 years they just don't mess with it, leave it alone, it works. Whereas we change our code fearlessly, and we have a much smaller team and we don't have the peer review process.

— D. Richard Hipp, ACM SIGMOD Record, June 2019 (PDF)

Copyright Release for Contributions To SQLite. D. Richard Hipp called me out for spreading misinformation on Hacker News that SQLite refuses outside contributions:

No, Simon, we don't "refuse". We are just very selective and there is a lot of paperwork involved to confirm the contribution is in the public domain and does not contaminate the SQLite core with licensed code.

I deeply regret this error! I'm linking to the copyright release document here - it looks like SQLite's public domain nature makes this kind of clause extremely important:

[...] To the best of my knowledge and belief, the changes and enhancements that I have contributed to SQLite are either originally written by me or are derived from prior works which I have verified are also in the public domain and are not subject to claims of copyright by other parties.

Out of curiosity I decided to see how many people have contributed to SQLite outside of the core team of Richard, Dan and Joe. I ran that query using Fossil, SQLite's own SQLite-based version control system, like this:

brew install fossil

fossil clone https://www.sqlite.org/src sqlite.fossil

fossil sql -R sqlite.fossil "

SELECT user, COUNT(*) as commits

FROM event WHERE type='ci'

GROUP BY user ORDER BY commits DESC

"

I got back 38 rows, though I think danielk1977 and dan may be duplicates.

Update: The SQLite team have clarified this on their SQLite is Public Domain page. It used to read "In order to keep SQLite completely free and unencumbered by copyright, the project does not accept patches." - it now reads:

In order to keep SQLite completely free and unencumbered by copyright, the project does not accept patches from random people on the internet. There is a process to get a patch accepted, but that process is involved and for smaller changes is not normally worth the effort.

Under the hood of Canada Spends with Brendan Samek

I talked to Brendan Samek about Canada Spends, a project from Build Canada that makes Canadian government financial data accessible and explorable using a combination of Datasette, a neat custom frontend, Ruby ingestion scripts, sqlite-utils and pieces of LLM-powered PDF extraction.

[... 561 words]sqlite-utils 3.39.

I got a report of a bug in sqlite-utils concerning plugin installation - if you installed the package using uv tool install further attempts to install plugins with sqlite-utils install X would fail, because uv doesn't bundle pip by default. I had the same bug with Datasette a while ago, turns out I forgot to apply the fix to sqlite-utils.

Since I was pushing a new dot-release I decided to integrate some of the non-breaking changes from the 4.0 alpha I released last night.

I tried to have Claude Code do the backporting for me:

create a new branch called 3.x starting with the 3.38 tag, then consult https://github.com/simonw/sqlite-utils/issues/688 and cherry-pick the commits it lists in the second comment, then review each of the links in the first comment and cherry-pick those as well. After each cherry-pick run the command "just test" to confirm the tests pass and fix them if they don't. Look through the commit history on main since the 3.38 tag to help you with this task.

This worked reasonably well - here's the terminal transcript. It successfully argued me out of two of the larger changes which would have added more complexity than I want in a small dot-release like this.

I still had to do a bunch of manual work to get everything up to scratch, which I carried out in this PR - including adding comments there and then telling Claude Code:

Apply changes from the review on this PR https://github.com/simonw/sqlite-utils/pull/689

Here's the transcript from that.

The release is now out with the following release notes:

- Fixed a bug with

sqlite-utils installwhen the tool had been installed usinguv. (#687)- The

--functionsargument now optionally accepts a path to a Python file as an alternative to a string full of code, and can be specified multiple times - see Defining custom SQL functions. (#659)sqlite-utilsnow requires on Python 3.10 or higher.

sqlite-utils 4.0a1 has several (minor) backwards incompatible changes

I released a new alpha version of sqlite-utils last night—the 128th release of that package since I started building it back in 2018.

[... 1,049 words]How I automate my Substack newsletter with content from my blog

I sent out my weekly-ish Substack newsletter this morning and took the opportunity to record a YouTube video demonstrating my process and describing the different components that make it work. There’s a lot of digital duct tape involved, taking the content from Django+Heroku+PostgreSQL to GitHub Actions to SQLite+Datasette+Fly.io to JavaScript+Observable and finally to Substack.

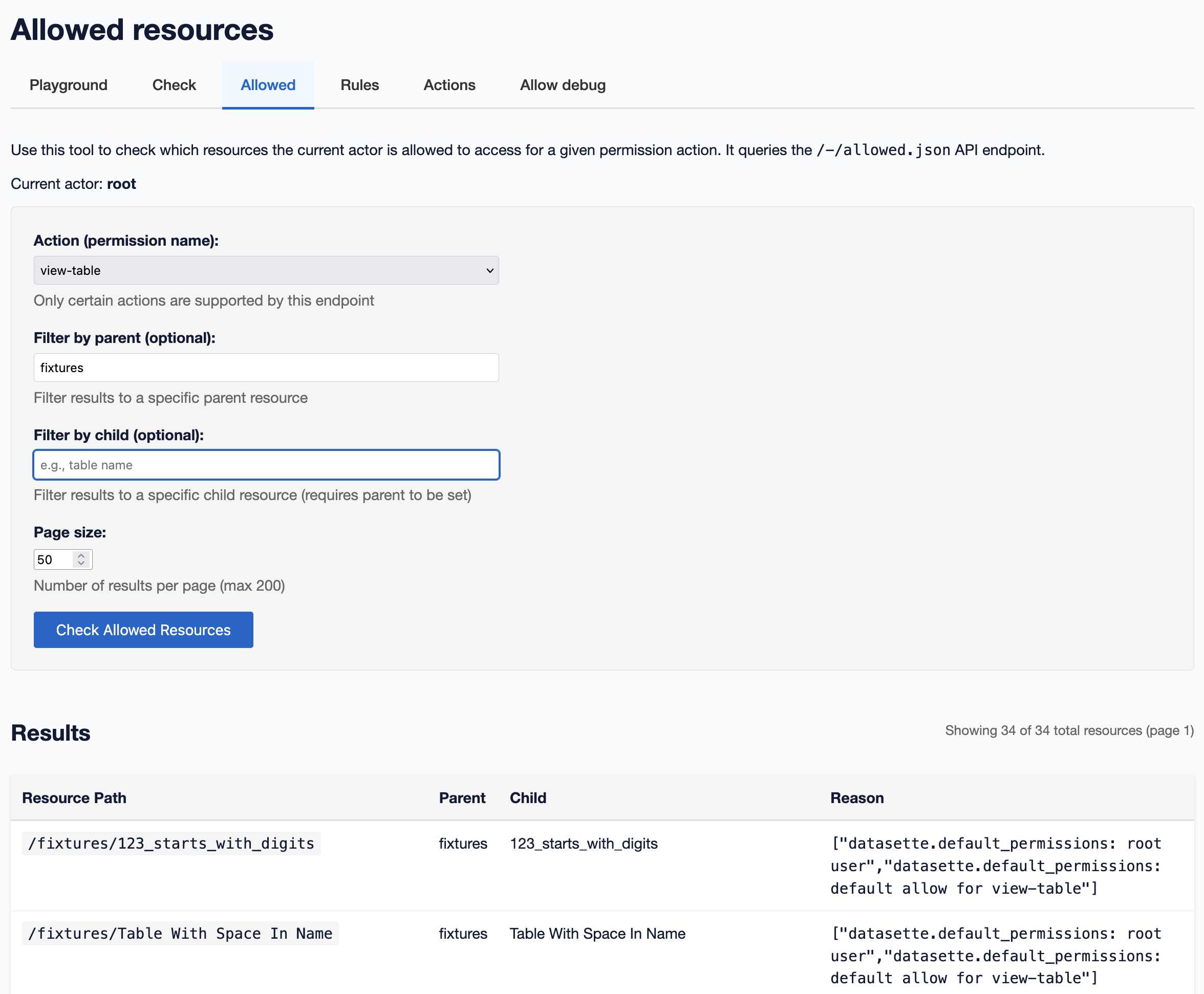

[... 1,345 words]A new SQL-powered permissions system in Datasette 1.0a20

Datasette 1.0a20 is out with the biggest breaking API change on the road to 1.0, improving how Datasette’s permissions system works by migrating permission logic to SQL running in SQLite. This release involved 163 commits, with 10,660 additions and 1,825 deletions, most of which was written with the help of Claude Code.

[... 2,750 words]Litestream v0.5.0 is Here (via) I've been running Litestream to backup SQLite databases in production for a couple of years now without incident. The new version has been a long time coming - Ben Johnson took a detour into the FUSE-based LiteFS before deciding that the single binary Litestream approach is more popular - and Litestream 0.5 just landed with this very detailed blog posts describing the improved architecture.

SQLite stores data in pages - 4096 (by default) byte blocks of data. Litestream replicates modified pages to a backup location - usually object storage like S3.

Most SQLite tables have an auto-incrementing primary key, which is used to decide which page the row's data should be stored in. This means sequential inserts to a small table are sent to the same page, which caused previous Litestream to replicate many slightly different copies of that page block in succession.

The new LTX format - borrowed from LiteFS - addresses that by adding compaction, which Ben describes as follows:

We can use LTX compaction to compress a bunch of LTX files into a single file with no duplicated pages. And Litestream now uses this capability to create a hierarchy of compactions:

- at Level 1, we compact all the changes in a 30-second time window

- at Level 2, all the Level 1 files in a 5-minute window

- at Level 3, all the Level 2’s over an hour.

Net result: we can restore a SQLite database to any point in time, using only a dozen or so files on average.

I'm most looking forward to trying out the feature that isn't quite landed yet: read-replicas, implemented using a SQLite VFS extension:

The next major feature we’re building out is a Litestream VFS for read replicas. This will let you instantly spin up a copy of the database and immediately read pages from S3 while the rest of the database is hydrating in the background.

After struggling for years trying to figure out why people think [Cloudflare] Durable Objects are complicated, I'm increasingly convinced that it's just that they sound complicated.

Feels like we can solve 90% of it by renaming

DurableObjecttoStatefulWorker?It's just a worker that has state. And because it has state, it also has to have a name, so that you can route to the specific worker that has the state you care about. There may be a sqlite database attached, there may be a container attached. Those are just part of the state.

Serving 200 million requests per day with a cgi-bin (via) Jake Gold tests how well 90s-era CGI works today, using a Go + SQLite CGI program running on a 16-thread AMD 3700X.

Using CGI on modest hardware, it’s possible to serve 2400+ requests per second or 200M+ requests per day.

I got my start in web development with CGI back in the late 1990s - I was a huge fan of NewsPro, which was effectively a weblog system before anyone knew what a weblog was.

CGI works by starting, executing and terminating a process for every incoming request. The nascent web community quickly learned that this was a bad idea, and invented technologies like PHP and FastCGI to help avoid that extra overhead and keep code resident in-memory instead.

This lesson ended up baked into my brain, and I spent the next twenty years convinced that you should never execute a full process as part of serving a web page.

Of course, computers in those two decades got a lot faster. I finally overcame that twenty-year core belief in 2020, when I built datasette-ripgrep, a Datasette plugin that shells out to the lightning fast ripgrep CLI tool (written in Rust) to execute searches. It worked great!

As was pointed out on Hacker News, part of CGI's problem back then was that we were writing web scripts in languages like Perl, Python and Java which had not been designed for lightning fast startup speeds. Using Go and Rust today helps make CGI-style requests a whole lot more effective.

Jake notes that CGI-style request handling is actually a great way to take advantage of multiple CPU cores:

These days, we have servers with 384 CPU threads. Even a small VM can have 16 CPUs. The CPUs and memory are much faster as well.

Most importantly, CGI programs, because they run as separate processes, are excellent at taking advantage of many CPUs!

Maybe we should start coding web applications like it's 1998, albeit with Go and Rust!

To clarify, I don't think most people should do this. I just think it's interesting that it's not as bad an idea as it was ~25 years ago.

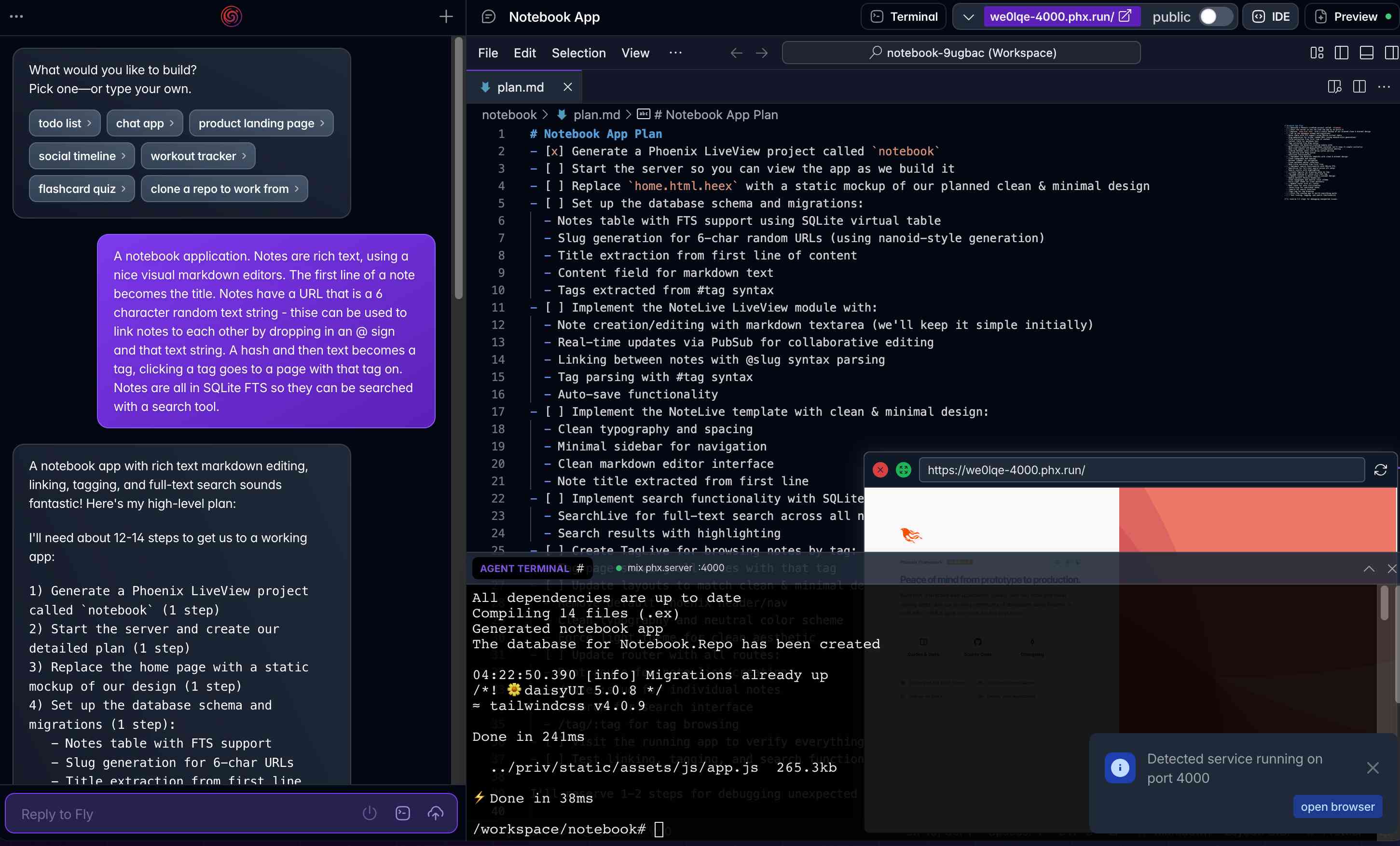

Phoenix.new is Fly’s entry into the prompt-driven app development space

Here’s a fascinating new entrant into the AI-assisted-programming / coding-agents space by Fly.io, introduced on their blog in Phoenix.new – The Remote AI Runtime for Phoenix: describe an app in a prompt, get a full Phoenix application, backed by SQLite and running on Fly’s hosting platform. The official Phoenix.new YouTube launch video is a good way to get a sense for what this does.

[... 1,361 words]TIL: SQLite triggers. I've been doing some work with SQLite triggers recently while working on sqlite-chronicle, and I decided I needed a single reference to exactly which triggers are executed for which SQLite actions and what data is available within those triggers.

I wrote this triggers.py script to output as much information about triggers as possible, then wired it into a TIL article using Cog. The Cog-powered source code for the TIL article can be seen here.

sqlite-utils 4.0a0. New alpha release of sqlite-utils, my Python library and CLI tool for manipulating SQLite databases.

It's the first 4.0 alpha because there's a (minor) backwards-incompatible change: I've upgraded the .upsert() and .upsert_all() methods to use SQLIte's UPSERT mechanism, INSERT INTO ... ON CONFLICT DO UPDATE. Details in this issue.

That feature was added to SQLite in version 3.24.0, released 2018-06-04. I'm pretty cautious about my SQLite version support since the underlying library can be difficult to upgrade, depending on your platform and operating system.

I'm going to leave the new alpha to bake for a little while before pushing a stable release. Since this is a major version bump I'm going to take the opportunity to see if there are any other minor API warts that I can clean up at the same time.

SQLite CREATE TABLE: The DEFAULT clause. If your SQLite create table statement includes a line like this:

CREATE TABLE alerts (

-- ...

alert_created_at text default current_timestamp

)

current_timestamp will be replaced with a UTC timestamp in the format 2025-05-08 22:19:33. You can also use current_time for HH:MM:SS and current_date for YYYY-MM-DD, again using UTC.

Posting this here because I hadn't previously noticed that this defaults to UTC, which is a useful detail. It's also a strong vote in favor of YYYY-MM-DD HH:MM:SS as a string format for use with SQLite, which doesn't otherwise provide a formal datetime type.

SQLite File Format Viewer (via) Neat browser-based visual interface for exploring the structure of a SQLite database file, built by Visal In using React and a custom parser implemented in TypeScript.

Stevens: a hackable AI assistant using a single SQLite table and a handful of cron jobs. Geoffrey Litt reports on Stevens, a shared digital assistant he put together for his family using SQLite and scheduled tasks running on Val Town.

The design is refreshingly simple considering how much it can do. Everything works around a single memories table. A memory has text, tags, creation metadata and an optional date for things like calendar entries and weather reports.

Everything else is handled by scheduled jobs to popular weather information and events from Google Calendar, a Telegram integration offering a chat UI and a neat system where USPS postal email delivery notifications are run through Val's own email handling mechanism to trigger a Claude prompt to add those as memories too.

Here's the full code on Val Town, including the daily briefing prompt that incorporates most of the personality of the bot.

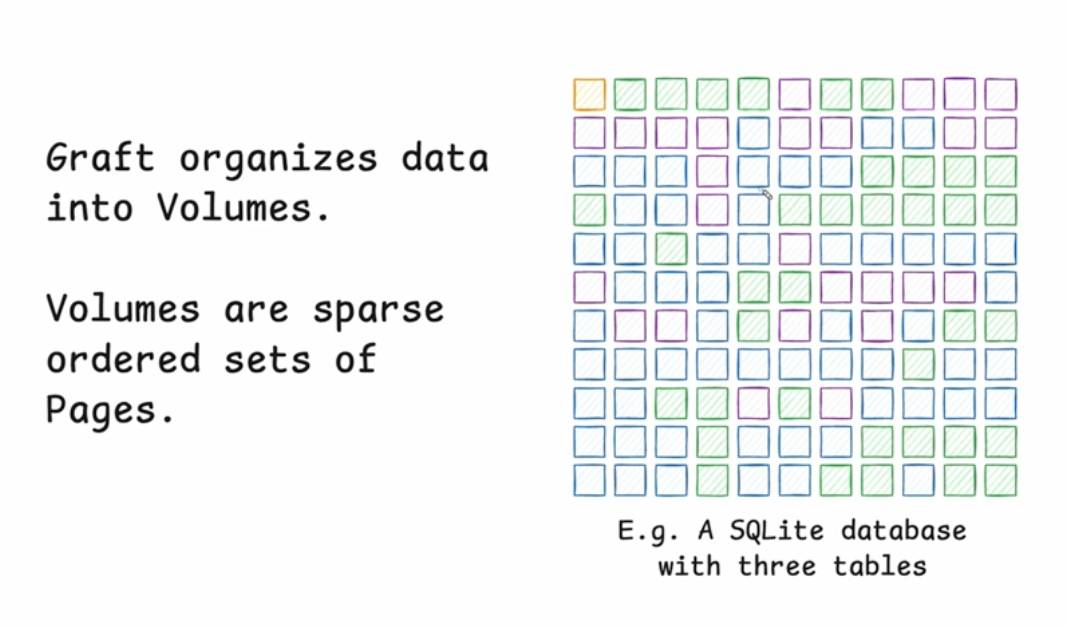

Stop syncing everything. In which Carl Sverre announces Graft, a fascinating new open source Rust data synchronization engine he's been working on for the past year.

Carl's recent talk at the Vancouver Systems meetup explains Graft in detail, including this slide which helped everything click into place for me:

Graft manages a volume, which is a collection of pages (currently at a fixed 4KB size). A full history of that volume is maintained using snapshots. Clients can read and write from particular snapshot versions for particular pages, and are constantly updated on which of those pages have changed (while not needing to synchronize the actual changed data until they need it).

This is a great fit for B-tree databases like SQLite.

The Graft project includes a SQLite VFS extension that implements multi-leader read-write replication on top of a Graft volume. You can see a demo of that running at 36m15s in the video, or consult the libgraft extension documentation and try it yourself.

The section at the end on What can you build with Graft? has some very useful illustrative examples:

Offline-first apps: Note-taking, task management, or CRUD apps that operate partially offline. Graft takes care of syncing, allowing the application to forget the network even exists. When combined with a conflict handler, Graft can also enable multiplayer on top of arbitrary data.

Cross-platform data: Eliminate vendor lock-in and allow your users to seamlessly access their data across mobile platforms, devices, and the web. Graft is architected to be embedded anywhere

Stateless read replicas: Due to Graft's unique approach to replication, a database replica can be spun up with no local state, retrieve the latest snapshot metadata, and immediately start running queries. No need to download all the data and replay the log.

Replicate anything: Graft is just focused on consistent page replication. It doesn't care about what's inside those pages. So go crazy! Use Graft to sync AI models, Parquet or Lance files, Geospatial tilesets, or just photos of your cats. The sky's the limit with Graft.

What to do about SQLITE_BUSY errors despite setting a timeout

(via)

Bert Hubert takes on the challenge of explaining SQLite's single biggest footgun: in WAL mode you may see SQLITE_BUSY errors even when you have a generous timeout set if a transaction attempts to obtain a write lock after initially running at least one SELECT. The fix is to use BEGIN IMMEDIATE if you know your transaction is going to make a write.

Bert provides the clearest explanation I've seen yet of why this is necessary:

When the transaction on the left wanted to upgrade itself to a read-write transaction, SQLite could not allow this since the transaction on the right might already have made changes that the transaction on the left had not yet seen.

This in turn means that if left and right transactions would commit sequentially, the result would not necessarily be what would have happened if all statements had been executed sequentially within the same transaction.

I've written about this a few times before, so I just started a sqlite-busy tag to collect my notes together on a single page.

files-to-prompt 0.5.

My files-to-prompt tool (originally built using Claude 3 Opus back in April) had been accumulating a bunch of issues and PRs - I finally got around to spending some time with it and pushed a fresh release:

- New

-n/--line-numbersflag for including line numbers in the output. Thanks, Dan Clayton. #38- Fix for utf-8 handling on Windows. Thanks, David Jarman. #36

--ignorepatterns are now matched against directory names as well as file names, unless you pass the new--ignore-files-onlyflag. Thanks, Nick Powell. #30

I use this tool myself on an almost daily basis - it's fantastic for quickly answering questions about code. Recently I've been plugging it into Gemini 2.0 with its 2 million token context length, running recipes like this one:

git clone https://github.com/bytecodealliance/componentize-py

cd componentize-py

files-to-prompt . -c | llm -m gemini-2.0-pro-exp-02-05 \

-s 'How does this work? Does it include a python compiler or AST trick of some sort?'

I ran that question against the bytecodealliance/componentize-py repo - which provides a tool for turning Python code into compiled WASM - and got this really useful answer.

Here's another example. I decided to have o3-mini review how Datasette handles concurrent SQLite connections from async Python code - so I ran this:

git clone https://github.com/simonw/datasette

cd datasette/datasette

files-to-prompt database.py utils/__init__.py -c | \

llm -m o3-mini -o reasoning_effort high \

-s 'Output in markdown a detailed analysis of how this code handles the challenge of running SQLite queries from a Python asyncio application. Explain how it works in the first section, then explore the pros and cons of this design. In a final section propose alternative mechanisms that might work better.'

Here's the result. It did an extremely good job of explaining how my code works - despite being fed just the Python and none of the other documentation. Then it made some solid recommendations for potential alternatives.

I added a couple of follow-up questions (using llm -c) which resulted in a full working prototype of an alternative threadpool mechanism, plus some benchmarks.

One final example: I decided to see if there were any undocumented features in Litestream, so I checked out the repo and ran a prompt against just the .go files in that project:

git clone https://github.com/benbjohnson/litestream

cd litestream

files-to-prompt . -e go -c | llm -m o3-mini \

-s 'Write extensive user documentation for this project in markdown'

Once again, o3-mini provided a really impressively detailed set of unofficial documentation derived purely from reading the source.

sqlite-s3vfs (via) Neat open source project on the GitHub organisation for the UK government's Department for Business and Trade: a "Python virtual filesystem for SQLite to read from and write to S3."

I tried out their usage example by running it in a Python REPL with all of the dependencies

uv run --python 3.13 --with apsw --with sqlite-s3vfs --with boto3 python

It worked as advertised. When I listed my S3 bucket I found it had created two files - one called demo.sqlite/0000000000 and another called demo.sqlite/0000000001, both 4096 bytes because each one represented a SQLite page.

The implementation is just 200 lines of Python, implementing a new SQLite Virtual Filesystem on top of apsw.VFS.

The README includes this warning:

No locking is performed, so client code must ensure that writes do not overlap with other writes or reads. If multiple writes happen at the same time, the database will probably become corrupt and data be lost.

I wonder if the conditional writes feature added to S3 back in November could be used to protect against that happening. Tricky as there are multiple files involved, but maybe it (or a trick like this one) could be used to implement some kind of exclusive lock between multiple processes?

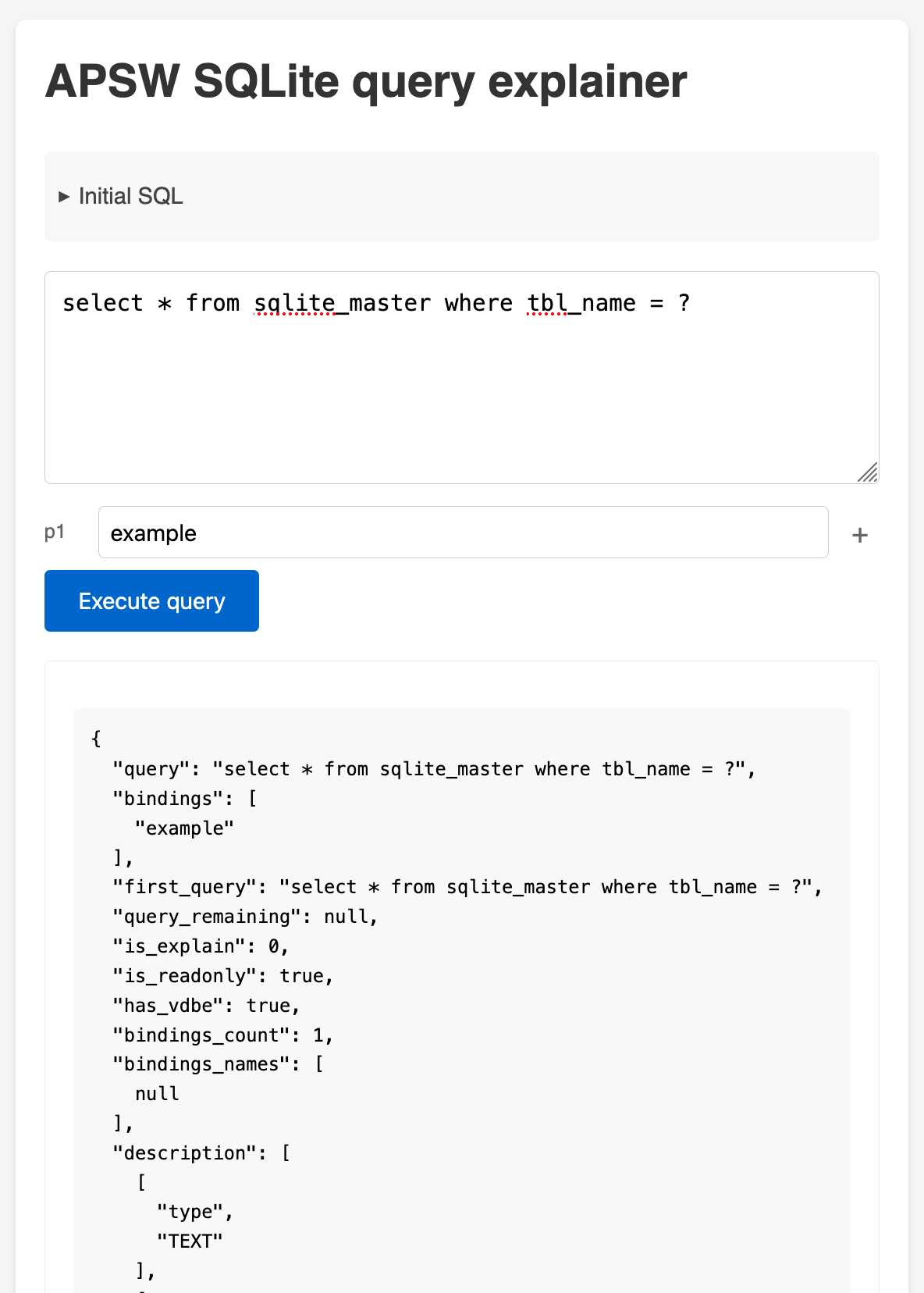

APSW SQLite query explainer. Today I found out about APSW's (Another Python SQLite Wrapper, in constant development since 2004) apsw.ext.query_info() function, which takes a SQL query and returns a very detailed set of information about that query - all without executing it.

It actually solves a bunch of problems I've wanted to address in Datasette - like taking an arbitrary query and figuring out how many parameters (?) it takes and which tables and columns are represented in the result.

I tried it out in my console (uv run --with apsw python) and it seemed to work really well. Then I remembered that the Pyodide project includes WebAssembly builds of a number of Python C extensions and was delighted to find apsw on that list.

... so I got Claude to build me a web interface for trying out the function, using Pyodide to run a user's query in Python in their browser via WebAssembly.

Claude didn't quite get it in one shot - I had to feed it the URL to a more recent Pyodide and it got stuck in a bug loop which I fixed by pasting the code into a fresh session.

sqlite-page-explorer (via) Outstanding tool by Luke Rissacher for understanding the SQLite file format. Download the application (built using redbean and Cosmopolitan, so the same binary runs on Windows, Mac and Linux) and point it at a SQLite database to get a local web application with an interface for exploring how the file is structured.

Here's it running against the datasette.io/content database that runs the official Datasette website: