63 items tagged “webassembly”

2024

No More Blue Fridays (via) Brendan Gregg: "In the future, computers will not crash due to bad software updates, even those updates that involve kernel code. In the future, these updates will push eBPF code."

New-to-me things I picked up from this:

- eBPF - a technology I had thought was unique to the a Linux kernel - is coming Windows!

- A useful mental model to have for eBPF is that it provides a WebAssembly-style sandbox for kernel code.

- eBPF doesn't stand for "extended Berkeley Packet Filter" any more - that name greatly understates its capabilities and has been retired. More on that in the eBPF FAQ.

- From this Hacker News thread eBPF programs can be analyzed before running despite the halting problem because eBPF only allows verifiably-halting programs to run.

marimo.app. The Marimo reactive notebook (previously) - a Python notebook that's effectively a cross between Jupyter and Observable - now also has a version that runs entirely in your browser using WebAssembly and Pyodide. Here's the documentation.

llama.ttf (via) llama.ttf is "a font file which is also a large language model and an inference engine for that model".

You can see it kick into action at 8m28s in this video, where creator Søren Fuglede Jørgensen types "Once upon a time" followed by dozens of exclamation marks, and those exclamation marks then switch out to render a continuation of the story. But... when they paste the code out of the editor again it shows as the original exclamation marks were preserved - the LLM output was presented only in the way they were rendered.

The key trick here is that the font renderer library HarfBuzz (used by Firefox, Chrome, Android, GNOME and more) added a new WebAssembly extension in version 8.0 last year, which is powerful enough to run a full LLM based on the tinyllama-15M model - which fits in a 60MB font file.

(Here's a related demo from Valdemar Erk showing Tetris running in a WASM font, at 22m56s in this video.)

The source code for llama.ttf is available on GitHub.

Experimenting with local alt text generation in Firefox Nightly (via) The PDF editor in Firefox (confession: I did not know Firefox ships with a PDF editor) is getting an experimental feature that can help suggest alt text for images for the human editor to then adapt and improve on.

This is a great application of AI, made all the more interesting here because Firefox will run a local model on-device for this, using a custom trained model they describe as "our 182M parameters model using a Distilled version of GPT-2 alongside a Vision Transformer (ViT) image encoder".

The model uses WebAssembly with ONNX running in Transfomers.js, and will be downloaded the first time the feature is put to use.

Pyodide 0.26 Release (via) PyOdide provides Python packaged for browser WebAssembly alongside an ecosystem of additional tools and libraries to help Python and JavaScript work together.

The latest release bumps the Python version up to 3.12, and also adds support for pygame-ce, allowing games written using pygame to run directly in the browser.

The PyOdide community also just landed a 14-month-long PR adding support to cibuildwheel, which should make it easier to ship binary wheels targeting PyOdide.

experimental-phi3-webgpu (via) Run Microsoft’s excellent Phi-3 model directly in your browser, using WebGPU so didn’t work in Firefox for me, just in Chrome.

It fetches around 2.1GB of data into the browser cache on first run, but then gave me decent quality responses to my prompts running at an impressive 21 tokens a second (M2, 64GB).

I think Phi-3 is the highest quality model of this size, so it’s a really good fit for running in a browser like this.

Figma’s journey to TypeScript: Compiling away our custom programming language (via) I love a good migration story. Figma had their own custom language that compiled to JavaScript, called Skew. As WebAssembly support in browsers emerged and improved the need for Skew’s performance optimizations reduced, and TypeScript’s maturity and popularity convinced them to switch.

Rather than doing a stop-the-world rewrite they built a transpiler from Skew to TypeScript, enabling a multi-year migration without preventing their product teams from continuing to make progress on new features.

Introducing Enhance WASM (via) “Backend agnostic server-side rendering (SSR) for Web Components”—fascinating new project from Brian LeRoux and Begin.

The idea here is to provide server-side rendering of Web Components using WebAssembly that can run on any platform that is supported within the Extism WASM ecosystem.

The key is the enhance-ssr.wasm bundle, a 4.1MB WebAssembly version of the enhance-ssr JavaScript library, compiled using the Extism JavaScript PDK (Plugin Development Kit) which itself bundles a WebAssembly version of QuickJS.

Bringing Python to Workers using Pyodide and WebAssembly (via) Cloudflare Workers is Cloudflare’s serverless hosting tool for deploying server-side functions to edge locations in their CDN.

They just released Python support, accompanied by an extremely thorough technical explanation of how they got that to work. The details are fascinating.

Workers runs on V8 isolates, and the new Python support was implemented using Pyodide (CPython compiled to WebAssembly) running inside V8.

Getting this to work performantly and ergonomically took a huge amount of work.

There are too many details in here to effectively summarize, but my favorite detail is this one:

“We scan the Worker’s code for import statements, execute them, and then take a snapshot of the Worker’s WebAssembly linear memory. Effectively, we perform the expensive work of importing packages at deploy time, rather than at runtime.”

The Dropflow Playground (via) Dropflow is a “CSS layout engine” written in TypeScript and taking advantage of the HarfBuzz text shaping engine (used by Chrome, Android, Firefox and more) compiled to WebAssembly to implement glyph layout.

This linked demo is fascinating: on the left hand side you can edit HTML with inline styles, and the right hand side then updates live to show that content rendered by Dropflow in a canvas element.

Why would you want this? It lets you generate images and PDFs with excellent performance using your existing knowledge HTML and CSS. It’s also just really cool!

PGlite (via) PostgreSQL compiled for WebAssembly and turned into a very neat JavaScript library. Previous attempts at running PostgreSQL in WASM have worked by bundling a full Linux virtual machine—PGlite just bundles a compiled PostgreSQL itself, which brings the size down to an impressive 3.7MB gzipped.

The original WWW proposal is a Word for Macintosh 4.0 file from 1990, can we open it? (via) In which John Graham-Cumming attempts to open the original WWW proposal by Tim Berners-Lee, a 68,608 bytes Microsoft Word for Macintosh 4.0 file.

Microsoft Word and Apple Pages fail. OpenOffice gets the text but not the formatting. LibreOffice gets the diagrams too, but the best results come from the Infinite Mac WebAssembly emulator.

urllib3 2.2.0. Highlighted feature: “urllib3 now works in the browser”—the core urllib3 library now includes code that can integrate with Pyodide, using the browser’s fetch() or XMLHttpRequest APIs to make HTTP requests (to CORS-enabled endpoints).

ColBERT query-passage scoring interpretability (via) Neat interactive visualization tool for understanding what the ColBERT embedding model does—this works by loading around 50MB of model files directly into your browser and running them with WebAssembly.

NYT Flash-based visualizations work again. The New York Times are using the open source Ruffle Flash emulator—built using Rust, compiled to WebAssembly—to get their old archived data visualization interactives working again.

container2wasm (via) “Converts a container to WASM with emulation by Bochs (for x86_64 containers) and TinyEMU (for riscv64 containers)”—effectively letting you take a Docker container and turn it into a WebAssembly blob that can then run in any WebAssembly host environment, including the browser.

Run “c2w ubuntu:22.04 out.wasm” to output a WASM binary for the Ubuntu 22:04 container from Docker Hub, then “wasmtime out.wasm uname -a” to run a command.

Even better, check out the live browser demos linked fro the README, which let you do things like run a Python interpreter in a Docker container directly in your browser.

2023

The WebAssembly Go Playground (via) Jeff Lindsay has a full Go 1.21.1 compiler running entirely in the browser.

Perplexity: interactive LLM visualization (via) I linked to a video of Linus Lee’s GPT visualization tool the other day. Today he’s released a new version of it that people can actually play with: it runs entirely in a browser, powered by a 120MB version of the GPT-2 ONNX model loaded using the brilliant Transformers.js JavaScript library.

Wikipedia search-by-vibes through millions of pages offline (via) Really cool demo by Lee Butterman, who built embeddings of 2 million Wikipedia pages and figured out how to serve them directly to the browser, where they are used to implement “vibes based” similarity search returning results in 250ms. Lots of interesting details about how he pulled this off, using Arrow as the file format and ONNX to run the model in the browser.

WebLLM supports Llama 2 70B now. The WebLLM project from MLC uses WebGPU to run large language models entirely in the browser. They recently added support for Llama 2, including Llama 2 70B, the largest and most powerful model in that family.

To my astonishment, this worked! I used a M2 Mac with 64GB of RAM and Chrome Canary and it downloaded many GBs of data... but it worked, and spat out tokens at a slow but respectable rate of 3.25 tokens/second.

Building a Signal Analyzer with Modern Web Tech (via) Casey Primozic’s detailed write-up of his project to build a spectrogram and oscilloscope using cutting-edge modern web technology: Web Workers, Web Audio, SharedArrayBuffer, Atomics.waitAsync, OffscreenCanvas, WebAssembly SIMD and more. His conclusion: “Web developers now have all the tools they need to build native-or-better quality apps on the web.”

Web LLM runs the vicuna-7b Large Language Model entirely in your browser, and it’s very impressive

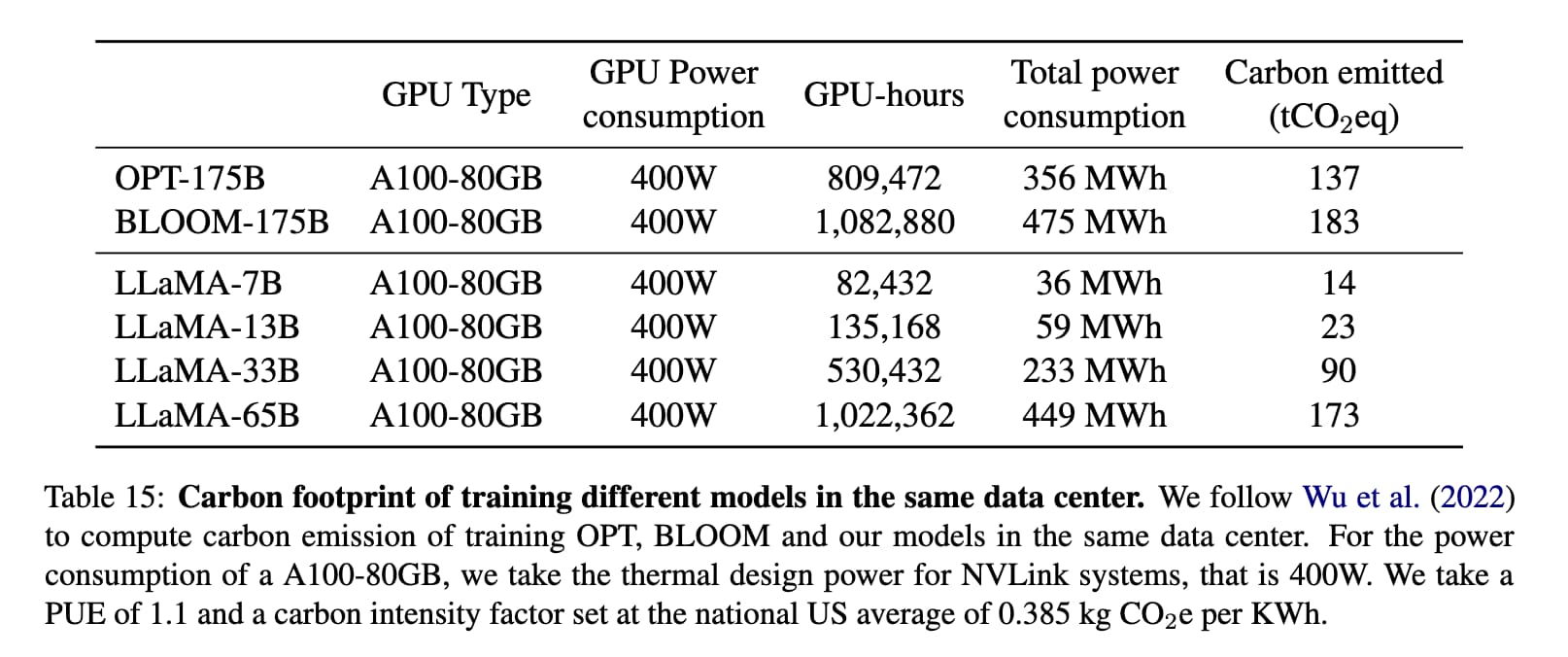

A month ago I asked Could you train a ChatGPT-beating model for $85,000 and run it in a browser?. $85,000 was a hypothetical training cost for LLaMA 7B plus Stanford Alpaca. “Run it in a browser” was based on the fact that Web Stable Diffusion runs a 1.9GB Stable Diffusion model in a browser, so maybe it’s not such a big leap to run a small Large Language Model there as well.

[... 2,276 words]AI photo sorter (via) Really interesting implementation of machine learning photo classification by Alexander Visheratin. This tool lets you select as many photos as you like from your own machine, then provides a web interface for classifying them into labels that you provide. It loads a 102MB quantized CLIP model and executes it in the browser using WebAssembly. Once classified, a “Generate script” button produces a copyable list of shell commands for moving your images into corresponding folders on your own machine. Your photos never get uploaded to a server—everything happens directly in your browser.

Could you train a ChatGPT-beating model for $85,000 and run it in a browser?

I think it’s now possible to train a large language model with similar functionality to GPT-3 for $85,000. And I think we might soon be able to run the resulting model entirely in the browser, and give it capabilities that leapfrog it ahead of ChatGPT.

[... 1,751 words]Web Stable Diffusion (via) I just ran the full Stable Diffusion image generation model entirely in my browser, and used it to generate an image (of two raccoons eating pie in the woods, see “via” link). I had to use Google Chrome Canary since this depends on WebGPU which still isn’t fully rolled out, but it worked perfectly.

Weeknotes: A bunch of things I learned this week, plus datasette-explain

The Datasette table view refactor, JSON redesign and ?_extra= continues this week, mainly in this ongoing pull request and this tracking issue.

PocketPy. PocketPy is “a lightweight(~5000 LOC) Python interpreter for game engines”. It’s implemented as a single C++ header which provides an impressive subset of the Python language: functions, dictionaries, lists, strings and basic classes too. There’s also a browser demo that loads a 766.66 KB pypocket.wasm file (240.72 KB compressed) and uses it to power a basic terminal interface. I tried and failed to get that pypocket.wasm file working from wasmer/wasmtime/wasm3—it should make a really neat lightweight language to run in a WebAssembly sandbox.

Python Sandbox in Web Assembly (via) Jim Kring responded to my questions on Mastodon about running Python in a WASM sandbox by building this repo, which demonstrates using wasmer-python to run a build of Python 3.6 compiled to WebAssembly, complete with protected access to a sandbox directory.

2022

talk.wasm (via) “Talk with an Artificial Intelligence in your browser”. Absolutely stunning demo which loads the Whisper speech recognition model (75MB) and a GPT-2 model (240MB) and executes them both in your browser via WebAssembly, then uses the Web Speech API to talk back to you. The result is a full speak-with-an-AI interface running entirely client-side. GPT-2 sadly mostly generates gibberish but the fact that this works at all is pretty astonishing.

Microsoft Flight Simulator: WebAssembly (via) This is such a smart application of WebAssembly: it can now be used to write extensions for Microsoft Flight Simulator, which means you can run code from untrusted sources safely in a sandbox. I’m really looking forward to more of this kind of usage—I love the idea of finally having a robust sandbox for running things like plugins.