1,230 posts tagged “python”

The Python programming language.

2024

An LLM TDD loop (via) Super neat demo by David Winterbottom, who wrapped my LLM and files-to-prompt tools in a short Bash script that can be fed a file full of Python unit tests and an empty implementation file and will then iterate on that file in a loop until the tests pass.

jefftriplett/django-startproject

(via)

Django's django-admin startproject and startapp commands include a --template option which can be used to specify an alternative template for generating the initial code.

Jeff Triplett actively maintains his own template for new projects, which includes the pattern that I personally prefer of keeping settings and URLs in a config/ folder. It also configures the development environment to run using Docker Compose.

The latest update adds support for Python 3.13, Django 5.1 and uv. It's neat how you can get started without even installing Django using uv run like this:

uv run --with=django django-admin startproject \

--extension=ini,py,toml,yaml,yml \

--template=https://github.com/jefftriplett/django-startproject/archive/main.zip \

example_project

Perks of Being a Python Core Developer

(via)

Mariatta Wijaya provides a detailed breakdown of the exact capabilities and privileges that are granted to Python core developers - including commit access to the Python main, the ability to write or sponsor PEPs, the ability to vote on new core developers and for the steering council election and financial support from the PSF for travel expenses related to PyCon and core development sprints.

Not to be under-estimated is that you also gain respect:

Everyone’s always looking for ways to stand out in resumes, right? So do I. I’ve been an engineer for longer than I’ve been a core developer, and I do notice that having the extra title like open source maintainer and public speaker really make a difference. As a woman, as someone with foreign last name that nobody knows how to pronounce, as someone who looks foreign, and speaks in a foreign accent, having these extra “credentials” helped me be seen as more or less equal compared to other people.

Python 3.13’s best new features (via) Trey Hunner highlights some Python 3.13 usability improvements I had missed, mainly around the new REPL.

Pasting a block of code like a class or function that includes blank lines no longer breaks in the REPL - particularly useful if you frequently have LLMs write code for you to try out.

Hitting F2 in the REPL toggles "history mode" which gives you your Python code without the REPL's >>> and ... prefixes - great for copying code back out again.

Creating a virtual environment with python3.13 -m venv .venv now adds a .venv/.gitignore file containing * so you don't need to explicitly ignore that directory. I just checked and it looks like uv venv implements the same trick.

And my favourite:

Historically, any line in the Python debugger prompt that started with a PDB command would usually trigger the PDB command, instead of PDB interpreting the line as Python code. [...]

But now, if the command looks like Python code,

pdbwill run it as Python code!

Which means I can finally call list(iterable) in my pdb seesions, where previously I've had to use [i for i in iterable] instead.

(Tip from Trey: !list(iterable) and [*iterable] are good alternatives for pre-Python 3.13.)

Trey's post is also available as a YouTube video.

Free Threaded Python With Asyncio.

Jamie Chang expanded my free-threaded Python experiment from a few months ago to explore the interaction between Python's asyncio and the new GIL-free build of Python 3.13.

The results look really promising. Jamie says:

Generally when it comes to Asyncio, the discussion around it is always about the performance or lack there of. Whilst peroformance is certain important, the ability to reason about concurrency is the biggest benefit. [...]

Depending on your familiarity with AsyncIO, it might actually be the simplest way to start a thread.

This code for running a Python function in a thread really is very pleasant to look at:

result = await asyncio.to_thread(some_function, *args, **kwargs)

Jamie also demonstrates asyncio.TaskGroup, which makes it easy to execute a whole bunch of threads and wait for them all to finish:

async with TaskGroup() as tg:

for _ in range(args.tasks):

tg.create_task(to_thread(cpu_bound_task, args.size))

otterwiki (via) It's been a while since I've seen a new-ish Wiki implementation, and this one by Ralph Thesen is really nice. It's written in Python (Flask + SQLAlchemy + mistune for Markdown + GitPython) and keeps all of the actual wiki content as Markdown files in a local Git repository.

The installation instructions are a little in-depth as they assume a production installation with Docker or systemd - I figured out this recipe for trying it locally using uv:

git clone https://github.com/redimp/otterwiki.git

cd otterwiki

mkdir -p app-data/repository

git init app-data/repository

echo "REPOSITORY='${PWD}/app-data/repository'" >> settings.cfg

echo "SQLALCHEMY_DATABASE_URI='sqlite:///${PWD}/app-data/db.sqlite'" >> settings.cfg

echo "SECRET_KEY='$(echo $RANDOM | md5sum | head -c 16)'" >> settings.cfg

export OTTERWIKI_SETTINGS=$PWD/settings.cfg

uv run --with gunicorn gunicorn --bind 127.0.0.1:8080 otterwiki.server:app

What’s New In Python 3.13. It's Python 3.13 release day today. The big signature features are a better REPL with improved error messages, an option to run Python without the GIL and the beginnings of the new JIT. Here are some of the smaller highlights I spotted while perusing the release notes.

iOS and Android are both now Tier 3 supported platforms, thanks to the efforts of Russell Keith-Magee and the Beeware project. Tier 3 means "must have a reliable buildbot" but "failures on these platforms do not block a release". This is still a really big deal for Python as a mobile development platform.

There's a whole bunch of smaller stuff relevant to SQLite.

Python's dbm module has long provided a disk-backed key-value store against multiple different backends. 3.13 introduces a new backend based on SQLite, and makes it the default.

>>> import dbm

>>> db = dbm.open("/tmp/hi", "c")

>>> db["hi"] = 1The "c" option means "Open database for reading and writing, creating it if it doesn’t exist".

After running the above, /tmp/hi was a SQLite database containing the following data:

sqlite3 /tmp/hi .dump

PRAGMA foreign_keys=OFF;

BEGIN TRANSACTION;

CREATE TABLE Dict (

key BLOB UNIQUE NOT NULL,

value BLOB NOT NULL

);

INSERT INTO Dict VALUES(X'6869',X'31');

COMMIT;

The dbm.open() function can detect which type of storage is being referenced. I found the implementation for that in the whichdb(filename) function.

I was hopeful that this change would mean Python 3.13 deployments would be guaranteed to ship with a more recent SQLite... but it turns out 3.15.2 is from November 2016 so still quite old:

SQLite 3.15.2 or newer is required to build the

sqlite3extension module. (Contributed by Erlend Aasland in gh-105875.)

The conn.iterdump() SQLite method now accepts an optional filter= keyword argument taking a LIKE pattern for the tables that you want to dump. I found the implementation for that here.

And one last change which caught my eye because I could imagine having code that might need to be updated to reflect the new behaviour:

pathlib.Path.glob()andrglob()now return both files and directories if a pattern that ends with "**" is given, rather than directories only. Add a trailing slash to keep the previous behavior and only match directories.

With the release of Python 3.13, Python 3.8 is officially end-of-life. Łukasz Langa:

If you're still a user of Python 3.8, I don't blame you, it's a lovely version. But it's time to move on to newer, greater things. Whether it's typing generics in built-in collections, pattern matching,

except*, low-impact monitoring, or a new pink REPL, I'm sure you'll find your favorite new feature in one of the versions we still support. So upgrade today!

Datasette 0.65. Python 3.13 was released today, which broke compatibility with the Datasette 0.x series due to an issue with an underlying dependency. I've fixed that problem by vendoring and fixing the dependency and the new 0.65 release works on Python 3.13 (but drops support for Python 3.8, which is EOL this month). Datasette 1.0a16 added support for Python 3.13 last month.

UV with GitHub Actions to run an RSS to README project.

Jeff Triplett demonstrates a very neat pattern for using uv to run Python scripts with their dependencies inside of GitHub Actions. First, add uv to the workflow using the setup-uv action:

- uses: astral-sh/setup-uv@v3

with:

enable-cache: true

cache-dependency-glob: "*.py"

This enables the caching feature, which stores uv's own cache of downloads from PyPI between runs. The cache-dependency-glob key ensures that this cache will be invalidated if any .py file in the repository is updated.

Now you can run Python scripts using steps that look like this:

- run: uv run fetch-rss.py

If that Python script begins with some dependency definitions (PEP 723) they will be automatically installed by uv run on the first run and reused from the cache in the future. From the start of fetch-rss.py:

# /// script

# requires-python = ">=3.11"

# dependencies = [

# "feedparser",

# "typer",

# ]

# ///

uv will download the required Python version and cache that as well.

marimo v0.9.0 with mo.ui.chat. The latest release of the Marimo Python reactive notebook project includes a neat new feature: you can now easily embed a custom chat interface directly inside of your notebook.

Marimo co-founder Myles Scolnick posted this intriguing demo on Twitter, demonstrating a chat interface to my LLM library “in only 3 lines of code”:

import marimo as mo import llm model = llm.get_model() conversation = model.conversation() mo.ui.chat(lambda messages: conversation.prompt(messages[-1].content))

I tried that out today - here’s the result:

![Screenshot of a Marimo notebook editor, with lines of code and an embedded chat interface. Top: import marimo as mo and import llm. Middle: Chat messages - User: Hi there, Three jokes about pelicans. AI: Hello! How can I assist you today?, Sure! Here are three pelican jokes for you: 1. Why do pelicans always carry a suitcase? Because they have a lot of baggage to handle! 2. What do you call a pelican that can sing? A tune-ican! 3. Why did the pelican break up with his girlfriend? She said he always had his head in the clouds and never winged it! Hope these made you smile! Bottom code: model = llm.get_model(), conversation = model.conversation(), mo.ui.chat(lambda messages:, conversation.prompt(messages[-1].content))](https://static.simonwillison.net/static/2024/marimo-pelican-jokes.jpg)

marimo.ui.chat() takes a function which is passed a list of Marimo chat messages (representing the current state of that widget) and returns a string - or other type of renderable object - to add as the next message in the chat. This makes it trivial to hook in any custom chat mechanism you like.

Marimo also ship their own built-in chat handlers for OpenAI, Anthropic and Google Gemini which you can use like this:

mo.ui.chat( mo.ai.llm.anthropic( "claude-3-5-sonnet-20240620", system_message="You are a helpful assistant.", api_key="sk-ant-...", ), show_configuration_controls=True )

Conflating Overture Places Using DuckDB, Ollama, Embeddings, and More.

Drew Breunig's detailed tutorial on "conflation" - combining different geospatial data sources by de-duplicating address strings such as RESTAURANT LOS ARCOS,3359 FOOTHILL BLVD,OAKLAND,94601 and LOS ARCOS TAQUERIA,3359 FOOTHILL BLVD,OAKLAND,94601.

Drew uses an entirely offline stack based around Python, DuckDB and Ollama and finds that a combination of H3 geospatial tiles and mxbai-embed-large embeddings (though other embedding models should work equally well) gets really good results.

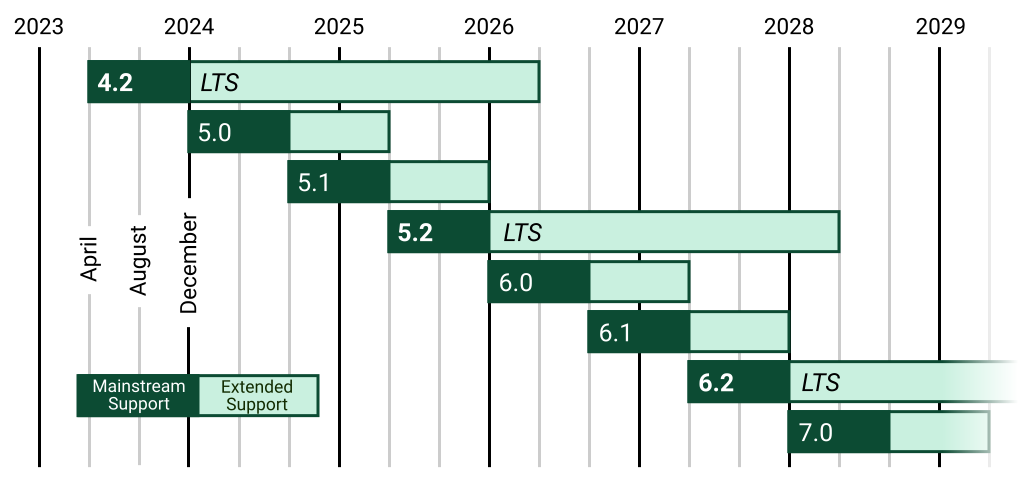

mlx-vlm (via) The MLX ecosystem of libraries for running machine learning models on Apple Silicon continues to expand. Prince Canuma is actively developing this library for running vision models such as Qwen-2 VL and Pixtral and LLaVA using Python running on a Mac.

I used uv to run it against this image with this shell one-liner:

uv run --with mlx-vlm \

python -m mlx_vlm.generate \

--model Qwen/Qwen2-VL-2B-Instruct \

--max-tokens 1000 \

--temp 0.0 \

--image https://static.simonwillison.net/static/2024/django-roadmap.png \

--prompt "Describe image in detail, include all text"

The --image option works equally well with a URL or a path to a local file on disk.

This first downloaded 4.1GB to my ~/.cache/huggingface/hub/models--Qwen--Qwen2-VL-2B-Instruct folder and then output this result, which starts:

The image is a horizontal timeline chart that represents the release dates of various software versions. The timeline is divided into years from 2023 to 2029, with each year represented by a vertical line. The chart includes a legend at the bottom, which distinguishes between different types of software versions.

Legend

Mainstream Support:

- 4.2 (2023)

- 5.0 (2024)

- 5.1 (2025)

- 5.2 (2026)

- 6.0 (2027) [...]

Ensuring a block is overridden in a Django template (via) Neat Django trick by Tom Carrick: implement a Django template tag that raises a custom exception, then you can use this pattern in your templates:

{% block title %}{% ensure_overridden %}{% endblock %}

To ensure you don't accidentally extend a base template but forget to fill out a critical block.

Themes from DjangoCon US 2024

I just arrived home from a trip to Durham, North Carolina for DjangoCon US 2024. I’ve already written about my talk where I announced a new plugin system for Django; here are my notes on some of the other themes that resonated with me during the conference.

[... 1,470 words]nanodjango. Richard Terry demonstrated this in a lightning talk at DjangoCon US today. It's the latest in a long line of attempts to get Django to work with a single file (I had a go at this problem 15 years ago with djng) but this one is really compelling.

I tried nanodjango out just now and it works exactly as advertised. First install it like this:

pip install nanodjango

Create a counter.py file:

from django.db import models from nanodjango import Django app = Django() @app.admin # Registers with the Django admin class CountLog(models.Model): timestamp = models.DateTimeField(auto_now_add=True) @app.route("/") def count(request): CountLog.objects.create() return f"<p>Number of page loads: {CountLog.objects.count()}</p>"

Then run it like this (it will run migrations and create a superuser as part of that first run):

nanodjango run counter.py

That's it! This gave me a fully configured Django application with models, migrations, the Django Admin configured and a bunch of other goodies such as Django Ninja for API endpoints.

Here's the full documentation.

simonw/docs cookiecutter template. Over the last few years I’ve settled on the combination of Sphinx, the Furo theme and the myst-parser extension (enabling Markdown in place of reStructuredText) as my documentation toolkit of choice, maintained in GitHub and hosted using ReadTheDocs.

My LLM and shot-scraper projects are two examples of that stack in action.

Today I wanted to spin up a new documentation site so I finally took the time to construct a cookiecutter template for my preferred configuration. You can use it like this:

pipx install cookiecutter

cookiecutter gh:simonw/docs

Or with uv:

uv tool run cookiecutter gh:simonw/docs

Answer a few questions:

[1/3] project (): shot-scraper

[2/3] author (): Simon Willison

[3/3] docs_directory (docs):

And it creates a docs/ directory ready for you to start editing docs:

cd docs

pip install -r requirements.txt

make livehtml

Jiter (via) One of the challenges in dealing with LLM streaming APIs is the need to parse partial JSON - until the stream has ended you won't have a complete valid JSON object, but you may want to display components of that JSON as they become available.

I've solved this previously using the ijson streaming JSON library, see my previous TIL.

Today I found out about Jiter, a new option from the team behind Pydantic. It's written in Rust and extracted from pydantic-core, so the Python wrapper for it can be installed using:

pip install jiter

You can feed it an incomplete JSON bytes object and use partial_mode="on" to parse the valid subset:

import jiter partial_json = b'{"name": "John", "age": 30, "city": "New Yor' jiter.from_json(partial_json, partial_mode="on") # {'name': 'John', 'age': 30}

Or use partial_mode="trailing-strings" to include incomplete string fields too:

jiter.from_json(partial_json, partial_mode="trailing-strings") # {'name': 'John', 'age': 30, 'city': 'New Yor'}

The current README was a little thin, so I submiitted a PR with some extra examples. I got some help from files-to-prompt and Claude 3.5 Sonnet):

cd crates/jiter-python/ && files-to-prompt -c README.md tests | llm -m claude-3.5-sonnet --system 'write a new README with comprehensive documentation'

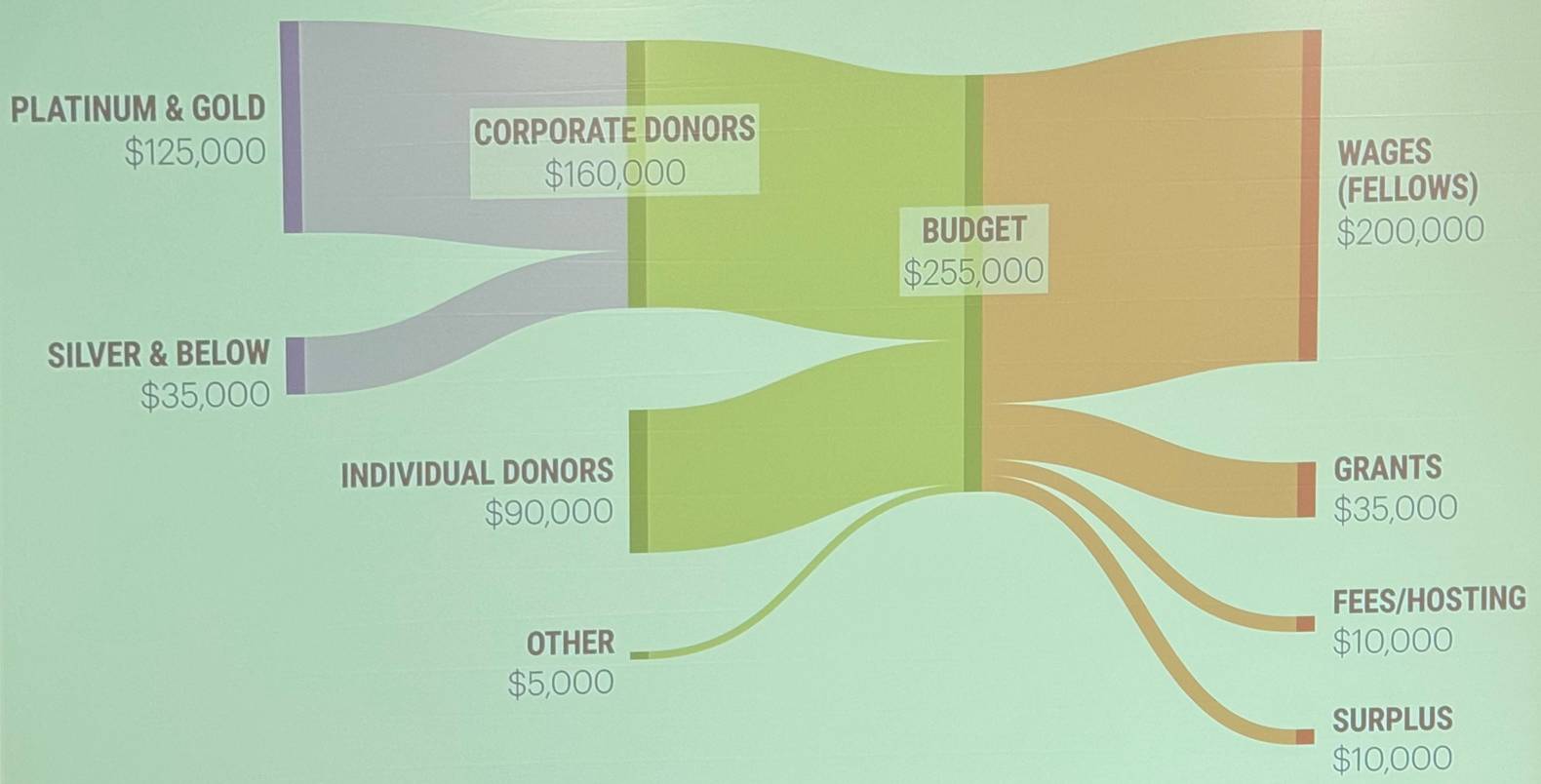

Things I’ve learned serving on the board of the Python Software Foundation

Two years ago I was elected to the board of directors for the Python Software Foundation—the PSF. I recently returned from the annual PSF board retreat (this one was in Lisbon, Portugal) and this feels like a good opportunity to write up some of the things I’ve learned along the way.

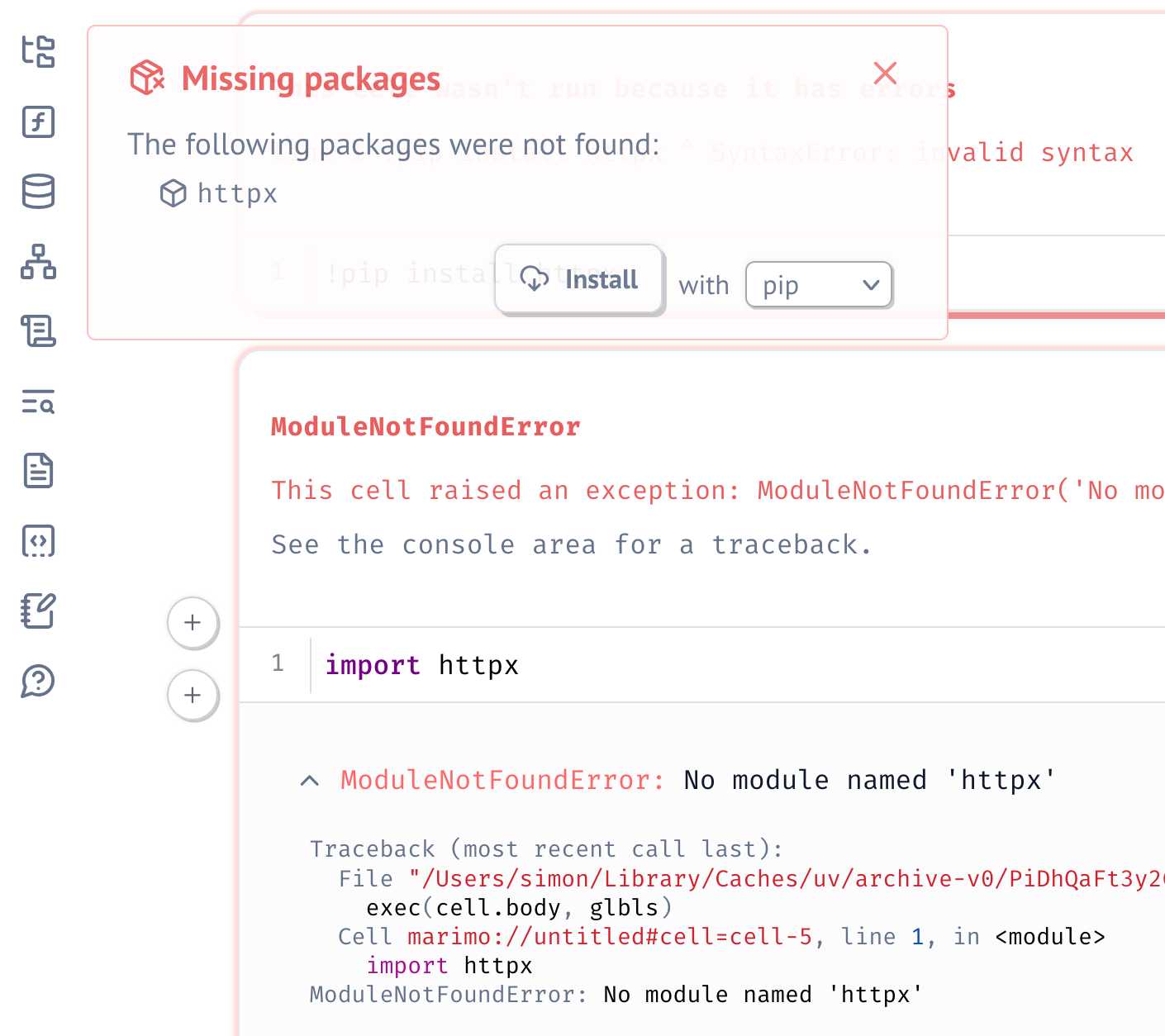

[... 2,702 words]Serializing package requirements in marimo notebooks. The latest release of Marimo - a reactive alternative to Jupyter notebooks - has a very neat new feature enabled by its integration with uv:

One of marimo’s goals is to make notebooks reproducible, down to the packages used in them. To that end, it’s now possible to create marimo notebooks that have their package requirements serialized into them as a top-level comment.

This takes advantage of the PEP 723 inline metadata mechanism, where a code comment at the top of a Python file can list package dependencies (and their versions).

I tried this out by installing marimo using uv:

uv tool install --python=3.12 marimo

Then grabbing one of their example notebooks:

wget 'https://raw.githubusercontent.com/marimo-team/spotlights/main/001-anywidget/tldraw_colorpicker.py'

And running it in a fresh dependency sandbox like this:

marimo run --sandbox tldraw_colorpicker.py

Also neat is that when editing a notebook using marimo edit:

marimo edit --sandbox notebook.py

Just importing a missing package is enough for Marimo to prompt to add that to the dependencies - at which point it automatically adds that package to the comment at the top of the file:

UV — I am (somewhat) sold

(via)

Oliver Andrich's detailed notes on adopting uv. Oliver has some pretty specific requirements:

I need to have various Python versions installed locally to test my work and my personal projects. Ranging from Python 3.8 to 3.13. [...] I also require decent dependency management in my projects that goes beyond manually editing a

pyproject.tomlfile. Likewise, I am way too accustomed topoetry add .... And I run a number of Python-based tools --- djhtml, poetry, ipython, llm, mkdocs, pre-commit, tox, ...

He's braver than I am!

I started by removing all Python installations, pyenv, pipx and Homebrew from my machine. Rendering me unable to do my work.

Here's a neat trick: first install a specific Python version with uv like this:

uv python install 3.11

Then create an alias to run it like this:

alias python3.11 'uv run --python=3.11 python3'

And install standalone tools with optional extra dependencies like this (a replacement for pipx and pipx inject):

uv tool install --python=3.12 --with mkdocs-material mkdocs

Oliver also links to Anže Pečar's handy guide on using UV with Django.

uv under discussion on Mastodon. Jacob Kaplan-Moss kicked off this fascinating conversation about uv on Mastodon recently. It's worth reading the whole thing, which includes input from a whole range of influential Python community members such as Jeff Triplett, Glyph Lefkowitz, Russell Keith-Magee, Seth Michael Larson, Hynek Schlawack, James Bennett and others. (Mastodon is a pretty great place for keeping up with the Python community these days.)

The key theme of the conversation is that, while uv represents a huge set of potential improvements to the Python ecosystem, it comes with additional risks due its attachment to a VC-backed company - and its reliance on Rust rather than Python.

Here are a few comments that stood out to me.

As enthusiastic as I am about the direction uv is going, I haven't adopted them anywhere - because I want very much to understand Astral’s intended business model before I hook my wagon to their tools. It's definitely not clear to me how they're going to stay liquid once the VC money runs out. They could get me onboard in a hot second if they published a "This is what we're planning to charge for" blog post.

As much as I hate VC, [...] FOSS projects flame out all the time too. If Frost loses interest, there’s no PDM anymore. Same for Ofek and Hatch(ling).

I fully expect Astral to flame out and us having to fork/take over—it’s the circle of FOSS. To me uv looks like a genius sting to trick VCs into paying to fix packaging. We’ll be better off either way.

Even in the best case, Rust is more expensive and difficult to maintain, not to mention "non-native" to the average customer here. [...] And the difficulty with VC money here is that it can burn out all the other projects in the ecosystem simultaneously, creating a risk of monoculture, where previously, I think we can say that "monoculture" was the least of Python's packaging concerns.

I don’t think y’all quite grok what uv makes so special due to your seniority. The speed is really cool, but the reason Rust is elemental is that it’s one compiled blob that can be used to bootstrap and maintain a Python development. A blob that will never break because someone upgraded Homebrew, ran pip install or any other creative way people found to fuck up their installations. Python has shown to be a terrible tech to maintain Python.

Just dropping in here to say that corporate capture of the Python ecosystem is the #1 keeps-me-up-at-night subject in my community work, so I watch Astral with interest, even if I'm not yet too worried.

I'm reminded of this note from Armin Ronacher, who created Rye and later donated it to uv maintainers Astral:

However having seen the code and what uv is doing, even in the worst possible future this is a very forkable and maintainable thing. I believe that even in case Astral shuts down or were to do something incredibly dodgy licensing wise, the community would be better off than before uv existed.

I'm currently inclined to agree with Armin and Hynek: while the risk of corporate capture for a crucial aspect of the Python packaging and onboarding ecosystem is a legitimate concern, the amount of progress that has been made here in a relatively short time combined with the open license and quality of the underlying code keeps me optimistic that uv will be a net positive for Python overall.

Update: uv creator Charlie Marsh joined the conversation:

I don't want to charge people money to use our tools, and I don't want to create an incentive structure whereby our open source offerings are competing with any commercial offerings (which is what you see with a lost of hosted-open-source-SaaS business models).

What I want to do is build software that vertically integrates with our open source tools, and sell that software to companies that are already using Ruff, uv, etc. Alternatives to things that companies already pay for today.

An example of what this might look like (we may not do this, but it's helpful to have a concrete example of the strategy) would be something like an enterprise-focused private package registry. A lot of big companies use uv. We spend time talking to them. They all spend money on private package registries, and have issues with them. We could build a private registry that integrates well with uv, and sell it to those companies. [...]

But the core of what I want to do is this: build great tools, hopefully people like them, hopefully they grow, hopefully companies adopt them; then sell software to those companies that represents the natural next thing they need when building with Python. Hopefully we can build something better than the alternatives by playing well with our OSS, and hopefully we are the natural choice if they're already using our OSS.

Docker images using uv’s python (via) Michael Kennedy interviewed uv/Ruff lead Charlie Marsh on his Talk Python podcast, and was inspired to try uv with Talk Python's own infrastructure, a single 8 CPU server running 17 Docker containers (status page here).

The key line they're now using is this:

RUN uv venv --python 3.12.5 /venv

Which downloads the uv selected standalone Python binary for Python 3.12.5 and creates a virtual environment for it at /venv all in one go.

Python Developers Survey 2023 Results (via) The seventh annual Python survey is out. Here are the things that caught my eye or that I found surprising:

25% of survey respondents had been programming in Python for less than a year, and 33% had less than a year of professional experience.

37% of Python developers reported contributing to open-source projects last year - a new question for the survey. This is delightfully high!

6% of users are still using Python 2. The survey notes:

Almost half of Python 2 holdouts are under 21 years old and a third are students. Perhaps courses are still using Python 2?

In web frameworks, Flask and Django neck and neck at 33% each, but FastAPI is a close third at 29%! Starlette is at 6%, but that's an under-count because it's the basis for FastAPI.

The most popular library in "other framework and libraries" was BeautifulSoup with 31%, then Pillow 28%, then OpenCV-Python at 22% (wow!) and Pydantic at 22%. Tkinter had 17%. These numbers are all a surprise to me.

pytest scores 52% for unit testing, unittest from the standard library just 25%. I'm glad to see pytest so widely used, it's my favourite testing tool across any programming language.

The top cloud providers are AWS, then Google Cloud Platform, then Azure... but PythonAnywhere (11%) took fourth place just ahead of DigitalOcean (10%). And Alibaba Cloud is a new entrant in sixth place (after Heroku) with 4%. Heroku's ending of its free plan dropped them from 14% in 2021 to 7% now.

Linux and Windows equal at 55%, macOS is at 29%. This was one of many multiple-choice questions that could add up to more than 100%.

In databases, SQLite usage was trending down - 38% in 2021 to 34% for 2023, but still in second place behind PostgreSQL, stable at 43%.

The survey incorporates quotes from different Python experts responding to the numbers, it's worth reading through the whole thing.

Why I Still Use Python Virtual Environments in Docker (via) Hynek Schlawack argues for using virtual environments even when running Python applications in a Docker container. This argument was most convincing to me:

I'm responsible for dozens of services, so I appreciate the consistency of knowing that everything I'm deploying is in

/app, and if it's a Python application, I know it's a virtual environment, and if I run/app/bin/python, I get the virtual environment's Python with my application ready to be imported and run.

Also:

It’s good to use the same tools and primitives in development and in production.

Also worth a look: Hynek's guide to Production-ready Docker Containers with uv, an actively maintained guide that aims to reflect ongoing changes made to uv itself.

Anatomy of a Textual User Interface. Will McGugan used Textual and my LLM Python library to build a delightful TUI for talking to a simulation of Mother, the AI from the Aliens movies:

The entire implementation is just 77 lines of code. It includes PEP 723 inline dependency information:

# /// script # requires-python = ">=3.12" # dependencies = [ # "llm", # "textual", # ] # ///

Which means you can run it in a dedicated environment with the correct dependencies installed using uv run like this:

wget 'https://gist.githubusercontent.com/willmcgugan/648a537c9d47dafa59cb8ece281d8c2c/raw/7aa575c389b31eb041ae7a909f2349a96ffe2a48/mother.py'

export OPENAI_API_KEY='sk-...'

uv run mother.pyI found the send_prompt() method particularly interesting. Textual uses asyncio for its event loop, but LLM currently only supports synchronous execution and can block for several seconds while retrieving a prompt.

Will used the Textual @work(thread=True) decorator, documented here, to run that operation in a thread:

@work(thread=True) def send_prompt(self, prompt: str, response: Response) -> None: response_content = "" llm_response = self.model.prompt(prompt, system=SYSTEM) for chunk in llm_response: response_content += chunk self.call_from_thread(response.update, response_content)

Looping through the response like that and calling self.call_from_thread(response.update, response_content) with an accumulated string is all it takes to implement streaming responses in the Textual UI, and that Response object sublasses textual.widgets.Markdown so any Markdown is rendered using Rich.

uvtrick (via) This "fun party trick" by Vincent D. Warmerdam is absolutely brilliant and a little horrifying. The following code:

from uvtrick import Env def uses_rich(): from rich import print print("hi :vampire:") Env("rich", python="3.12").run(uses_rich)

Executes that uses_rich() function in a fresh virtual environment managed by uv, running the specified Python version (3.12) and ensuring the rich package is available - even if it's not installed in the current environment.

It's taking advantage of the fact that uv is so fast that the overhead of getting this to work is low enough for it to be worth at least playing with the idea.

The real magic is in how uvtrick works. It's only 127 lines of code with some truly devious trickery going on.

That Env.run() method:

- Creates a temporary directory

- Pickles the

argsandkwargsand saves them topickled_inputs.pickle - Uses

inspect.getsource()to retrieve the source code of the function passed torun() - Writes that to a

pytemp.pyfile, along with a generatedif __name__ == "__main__":block that calls the function with the pickled inputs and saves its output to another pickle file calledtmp.pickle

Having created the temporary Python file it executes the program using a command something like this:

uv run --with rich --python 3.12 --quiet pytemp.pyIt reads the output from tmp.pickle and returns it to the caller!

Anthropic’s Prompt Engineering Interactive Tutorial (via) Anthropic continue their trend of offering the best documentation of any of the leading LLM vendors. This tutorial is delivered as a set of Jupyter notebooks - I used it as an excuse to try uvx like this:

git clone https://github.com/anthropics/courses

uvx --from jupyter-core jupyter notebook coursesThis installed a working Jupyter system, started the server and launched my browser within a few seconds.

The first few chapters are pretty basic, demonstrating simple prompts run through the Anthropic API. I used %pip install anthropic instead of !pip install anthropic to make sure the package was installed in the correct virtual environment, then filed an issue and a PR.

One new-to-me trick: in the first chapter the tutorial suggests running this:

API_KEY = "your_api_key_here" %store API_KEY

This stashes your Anthropic API key in the IPython store. In subsequent notebooks you can restore the API_KEY variable like this:

%store -r API_KEY

I poked around and on macOS those variables are stored in files of the same name in ~/.ipython/profile_default/db/autorestore.

Chapter 4: Separating Data and Instructions included some interesting notes on Claude's support for content wrapped in XML-tag-style delimiters:

Note: While Claude can recognize and work with a wide range of separators and delimeters, we recommend that you use specifically XML tags as separators for Claude, as Claude was trained specifically to recognize XML tags as a prompt organizing mechanism. Outside of function calling, there are no special sauce XML tags that Claude has been trained on that you should use to maximally boost your performance. We have purposefully made Claude very malleable and customizable this way.

Plus this note on the importance of avoiding typos, with a nod back to the problem of sandbagging where models match their intelligence and tone to that of their prompts:

This is an important lesson about prompting: small details matter! It's always worth it to scrub your prompts for typos and grammatical errors. Claude is sensitive to patterns (in its early years, before finetuning, it was a raw text-prediction tool), and it's more likely to make mistakes when you make mistakes, smarter when you sound smart, sillier when you sound silly, and so on.

Chapter 5: Formatting Output and Speaking for Claude includes notes on one of Claude's most interesting features: prefill, where you can tell it how to start its response:

client.messages.create( model="claude-3-haiku-20240307", max_tokens=100, messages=[ {"role": "user", "content": "JSON facts about cats"}, {"role": "assistant", "content": "{"} ] )

Things start to get really interesting in Chapter 6: Precognition (Thinking Step by Step), which suggests using XML tags to help the model consider different arguments prior to generating a final answer:

Is this review sentiment positive or negative? First, write the best arguments for each side in <positive-argument> and <negative-argument> XML tags, then answer.

The tags make it easy to strip out the "thinking out loud" portions of the response.

It also warns about Claude's sensitivity to ordering. If you give Claude two options (e.g. for sentiment analysis):

In most situations (but not all, confusingly enough), Claude is more likely to choose the second of two options, possibly because in its training data from the web, second options were more likely to be correct.

This effect can be reduced using the thinking out loud / brainstorming prompting techniques.

A related tip is proposed in Chapter 8: Avoiding Hallucinations:

How do we fix this? Well, a great way to reduce hallucinations on long documents is to make Claude gather evidence first.

In this case, we tell Claude to first extract relevant quotes, then base its answer on those quotes. Telling Claude to do so here makes it correctly notice that the quote does not answer the question.

I really like the example prompt they provide here, for answering complex questions against a long document:

<question>What was Matterport's subscriber base on the precise date of May 31, 2020?</question>

Please read the below document. Then, in <scratchpad> tags, pull the most relevant quote from the document and consider whether it answers the user's question or whether it lacks sufficient detail. Then write a brief numerical answer in <answer> tags.

light-the-torchis a small utility that wrapspipto ease the installation process for PyTorch distributions liketorch,torchvision,torchaudio, and so on as well as third-party packages that depend on them. It auto-detects compatible CUDA versions from the local setup and installs the correct PyTorch binaries without user interference.

Use it like this:

pip install light-the-torch

ltt install torchIt works by wrapping and patching pip.

There is an elephant in the room which is that Astral is a VC funded company. What does that mean for the future of these tools? Here is my take on this: for the community having someone pour money into it can create some challenges. For the PSF and the core Python project this is something that should be considered. However having seen the code and what uv is doing, even in the worst possible future this is a very forkable and maintainable thing. I believe that even in case Astral shuts down or were to do something incredibly dodgy licensing wise, the community would be better off than before uv existed.

#!/usr/bin/env -S uv run (via) This is a really neat pattern. Start your Python script like this:

#!/usr/bin/env -S uv run

# /// script

# requires-python = ">=3.12"

# dependencies = [

# "flask==3.*",

# ]

# ///

import flask

# ...

And now if you chmod 755 it you can run it on any machine with the uv binary installed like this: ./app.py - and it will automatically create its own isolated environment and run itself with the correct installed dependencies and even the correctly installed Python version.

All of that from putting uv run in the shebang line!

Code from this PR by David Laban.