63 posts tagged “github-actions”

GitHub's Actions tool for repository automation.

2026

Vouch. Mitchell Hashimoto's new system to help address the deluge of worthless AI-generated PRs faced by open source projects now that the friction involved in contributing has dropped so low.

The idea is simple: Unvouched users can't contribute to your projects. Very bad users can be explicitly "denounced", effectively blocked. Users are vouched or denounced by contributors via GitHub issue or discussion comments or via the CLI.

Integration into GitHub is as simple as adopting the published GitHub actions. Done. Additionally, the system itself is generic to forges and not tied to GitHub in any way.

Who and how someone is vouched or denounced is up to the project. I'm not the value police for the world. Decide for yourself what works for your project and your community.

2025

simonw/actions-latest.

Today in extremely niche projects, I got fed up of Claude Code creating GitHub Actions workflows for me that used stale actions: actions/setup-python@v4 when the latest is actions/setup-python@v6 for example.

I couldn't find a good single place listing those latest versions, so I had Claude Code for web (via my phone, I'm out on errands) build a Git scraper to publish those versions in one place:

https://simonw.github.io/actions-latest/versions.txt

Tell your coding agent of choice to fetch that any time it wants to write a new GitHub Actions workflow.

(I may well bake this into a Skill.)

Here's the first and second transcript I used to build this, shared using my claude-code-transcripts tool (which just gained a search feature.)
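As a rough sketch of how a script (or an agent) might consume a file like that: the snippet below assumes each line has the form `owner/repo@version`, which is an assumption about the file format rather than something confirmed here. The `parse_versions` and `upgrade_workflow` helpers are hypothetical names, and the sample data is inlined instead of fetched.

```python
import re

# Inlined sample standing in for versions.txt. The one-entry-per-line
# "owner/repo@version" layout is an assumption about the real file.
SAMPLE_VERSIONS = """\
actions/setup-python@v6
example-org/example-action@v2
"""


def parse_versions(text: str) -> dict[str, str]:
    """Map 'owner/repo' to the version listed for it."""
    latest = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "@" not in line:
            continue
        name, _, version = line.partition("@")
        latest[name] = version
    return latest


def upgrade_workflow(workflow: str, latest: dict[str, str]) -> str:
    """Rewrite 'uses: owner/repo@old' references to the listed version."""

    def replace(match: re.Match) -> str:
        name = match.group("name")
        # Actions missing from the list keep their current version.
        version = latest.get(name, match.group("version"))
        return f"{match.group('prefix')}{name}@{version}"

    pattern = r"(?P<prefix>uses:\s*)(?P<name>[\w.-]+/[\w.-]+)@(?P<version>[\w.-]+)"
    return re.sub(pattern, replace, workflow)


latest = parse_versions(SAMPLE_VERSIONS)
print(upgrade_workflow("- uses: actions/setup-python@v4", latest))
```

Actions that are not in the list are left untouched, so the rewrite is safe to run over a whole workflow file.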

uv-init-demos.

uv has a useful uv init command for setting up new Python projects, but it comes with a bunch of different options like --app and --package and --lib and I wasn't sure how they differed.

So I created this GitHub repository which demonstrates all of those options, generated using this update-projects.sh script (thanks, Claude) which will run on a schedule via GitHub Actions to capture any changes made by future releases of uv.

How I automate my Substack newsletter with content from my blog

I sent out my weekly-ish Substack newsletter this morning and took the opportunity to record a YouTube video demonstrating my process and describing the different components that make it work. There’s a lot of digital duct tape involved, taking the content from Django+Heroku+PostgreSQL to GitHub Actions to SQLite+Datasette+Fly.io to JavaScript+Observable and finally to Substack.

[... 1,345 words]

Python 3.14. This year's major Python version, Python 3.14, just made its first stable release!

As usual the what's new in Python 3.14 document is the best place to get familiar with the new release:

The biggest changes include template string literals, deferred evaluation of annotations, and support for subinterpreters in the standard library.

The library changes include significantly improved capabilities for introspection in asyncio, support for Zstandard via a new compression.zstd module, syntax highlighting in the REPL, as well as the usual deprecations and removals, and improvements in user-friendliness and correctness.

Subinterpreters look particularly interesting as a way to use multiple CPU cores to run Python code despite the continued existence of the GIL. If you're feeling brave and your dependencies cooperate you can also use the free-threaded build of Python 3.14 - now officially supported - to skip the GIL entirely.

A new major Python release means an older release hits the end of its support lifecycle - in this case that's Python 3.9. If you maintain open source libraries that target every supported Python version (as I do) this means features introduced in Python 3.10 can now be depended on! What's new in Python 3.10 lists those - I'm most excited by structural pattern matching (the match/case statement) and the union type operator, allowing int | float | None as a type annotation in place of Optional[Union[int, float]].

If you use uv you can grab a copy of 3.14 using:

uv self update

uv python upgrade 3.14

uvx python@3.14

Or for free-threaded Python 3.14:

uvx python@3.14t

The uv team wrote about their Python 3.14 highlights in their announcement of Python 3.14's availability via uv.

The GitHub Actions setup-python action includes Python 3.14 now too, so the following YAML snippet will run tests on all currently supported versions:

strategy:
  matrix:
    python-version: ["3.10", "3.11", "3.12", "3.13", "3.14"]
steps:
- uses: actions/setup-python@v6
  with:
    python-version: ${{ matrix.python-version }}

Full example here for one of my many Datasette plugin repos.

Using Claude Code to build a GitHub Actions workflow. I wanted to add a small feature to one of my GitHub repos - an automatically updated README index listing other files in the repo - so I decided to use Descript to record my process using Claude Code. Here's a 7 minute video showing what I did.

I've been wanting to start producing more video content for a while - this felt like a good low-stakes opportunity to put in some reps.

Continuous AI. GitHub Next have coined the term "Continuous AI" to describe "all uses of automated AI to support software collaboration on any platform". It's intended as an echo of Continuous Integration and Continuous Deployment:

We've chosen the term "Continuous AI” to align with the established concept of Continuous Integration/Continuous Deployment (CI/CD). Just as CI/CD transformed software development by automating integration and deployment, Continuous AI covers the ways in which AI can be used to automate and enhance collaboration workflows.

“Continuous AI” is not a term GitHub owns, nor a technology GitHub builds: it's a term we use to focus our minds, and which we're introducing to the industry. This means Continuous AI is an open-ended set of activities, workloads, examples, recipes, technologies and capabilities; a category, rather than any single tool.

I was thrilled to bits to see LLM get a mention as a tool that can be used to implement some of these patterns inside of GitHub Actions:

You can also use the llm framework in combination with the llm-github-models extension to create LLM-powered GitHub Actions which use GitHub Models using Unix shell scripting.

The GitHub Next team have started maintaining an Awesome Continuous AI list with links to projects that fit under this new umbrella term.

I'm particularly interested in the idea of having CI jobs (I guess CAI jobs?) that check proposed changes to see if there's documentation that needs to be updated and that might have been missed - a much more powerful variant of my documentation unit tests pattern.

llm-github-models 0.15. Anthony Shaw's llm-github-models plugin just got an upgrade: it now supports LLM 0.26 tool use for a subset of the models hosted on the GitHub Models API, contributed by Caleb Brose.

The neat thing about this GitHub Models plugin is that it picks up an API key from your GITHUB_TOKEN - and if you're running LLM within a GitHub Actions worker the API key provided by the worker should be enough to start executing prompts!

I tried it out against Cohere Command A via GitHub Models like this (transcript here):

llm install llm-github-models

llm keys set github

# Paste key here

llm -m github/cohere-command-a -T llm_time 'What time is it?' --td

We now have seven LLM plugins that provide tool support, covering OpenAI, Anthropic, Gemini, Mistral, Ollama, llama-server and now GitHub Models.

simonw/ollama-models-atom-feed. I set up a GitHub Actions + GitHub Pages Atom feed of scraped recent models data from the Ollama latest models page - Ollama remains one of the easiest ways to run models on a laptop so a new model release from them is worth hearing about.

I built the scraper by pasting example HTML into Claude and asking for a Python script to convert it to Atom - here's the script we wrote together.

Update 25th March 2025: The first version of this included all 160+ models in a single feed. I've upgraded the script to output two feeds - the original atom.xml one and a new atom-recent-20.xml feed containing just the most recent 20 items.

I modified the script using Google's new Gemini 2.5 Pro model, like this:

cat to_atom.py | llm -m gemini-2.5-pro-exp-03-25 \
  -s 'rewrite this script so that instead of outputting Atom to stdout it saves two files, one called atom.xml with everything and another called atom-recent-20.xml with just the most recent 20 items - remove the output option entirely'

Here's the full transcript.
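The two-feed idea can be sketched roughly as follows. This is not the actual to_atom.py script: the `build_feed` and `build_both_feeds` helpers, the feed titles and the entry fields are all illustrative assumptions, and a fully valid Atom feed would also need feed-level `id` and `updated` elements.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def build_feed(title: str, entries: list[dict]) -> str:
    """Serialize entries (dicts with id, title, updated) as a trimmed Atom feed."""
    ET.register_namespace("", ATOM_NS)
    feed = ET.Element(f"{{{ATOM_NS}}}feed")
    ET.SubElement(feed, f"{{{ATOM_NS}}}title").text = title
    for item in entries:
        entry = ET.SubElement(feed, f"{{{ATOM_NS}}}entry")
        for field in ("id", "title", "updated"):
            ET.SubElement(entry, f"{{{ATOM_NS}}}{field}").text = item[field]
    return ET.tostring(feed, encoding="unicode")


def build_both_feeds(entries: list[dict], recent: int = 20) -> dict[str, str]:
    """Return feed XML keyed by filename: everything, plus the newest items only."""
    # ISO 8601 timestamps in the same timezone sort correctly as strings.
    newest_first = sorted(entries, key=lambda e: e["updated"], reverse=True)
    return {
        "atom.xml": build_feed("All models", newest_first),
        "atom-recent-20.xml": build_feed("Recent models", newest_first[:recent]),
    }


# Hypothetical sample data: 25 entries, one per day.
sample = [
    {"id": f"model-{day}", "title": f"Model {day}", "updated": f"2025-03-{day:02d}T00:00:00Z"}
    for day in range(1, 26)
]
feeds = build_both_feeds(sample)
print({name: xml.count("<entry>") for name, xml in feeds.items()})
```

Writing the result to disk is then a loop over `feeds.items()` calling `pathlib.Path(name).write_text(xml)`.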

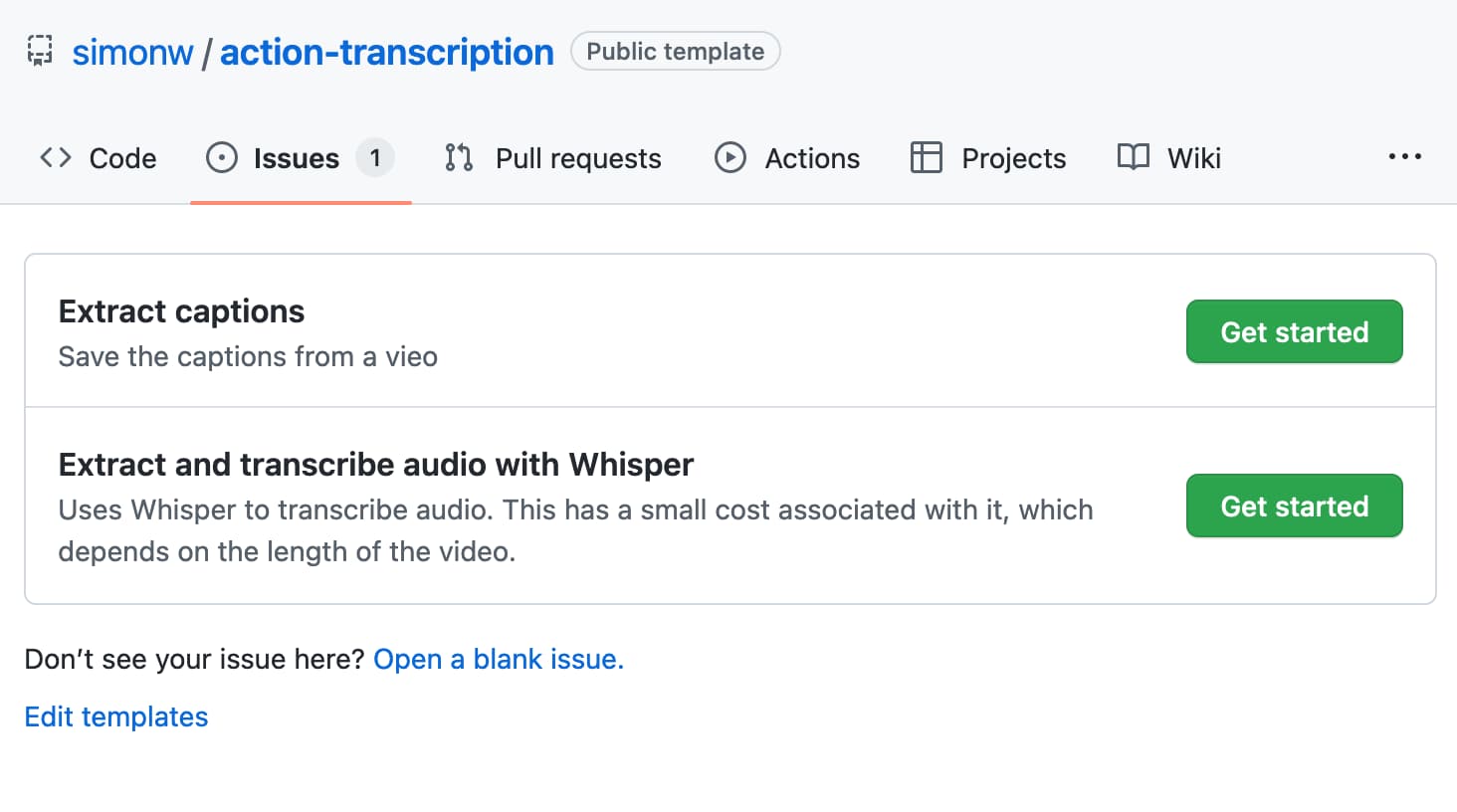

Building and deploying a custom site using GitHub Actions and GitHub Pages. I figured out a minimal example of how to use GitHub Actions to run custom scripts to build a website and then publish that static site to GitHub Pages. I turned the example into a template repository, which should make getting started for a new project extremely quick.

I've needed this for various projects over the years, but today I finally put these notes together while setting up a system for scraping the iNaturalist API for recent sightings of the California Brown Pelican and converting those into an Atom feed that I can subscribe to in NetNewsWire:

I got Claude to write me the script that converts the scraped JSON to Atom.

Update: I just found out iNaturalist have their own atom feeds! Here's their own feed of recent Pelican observations.

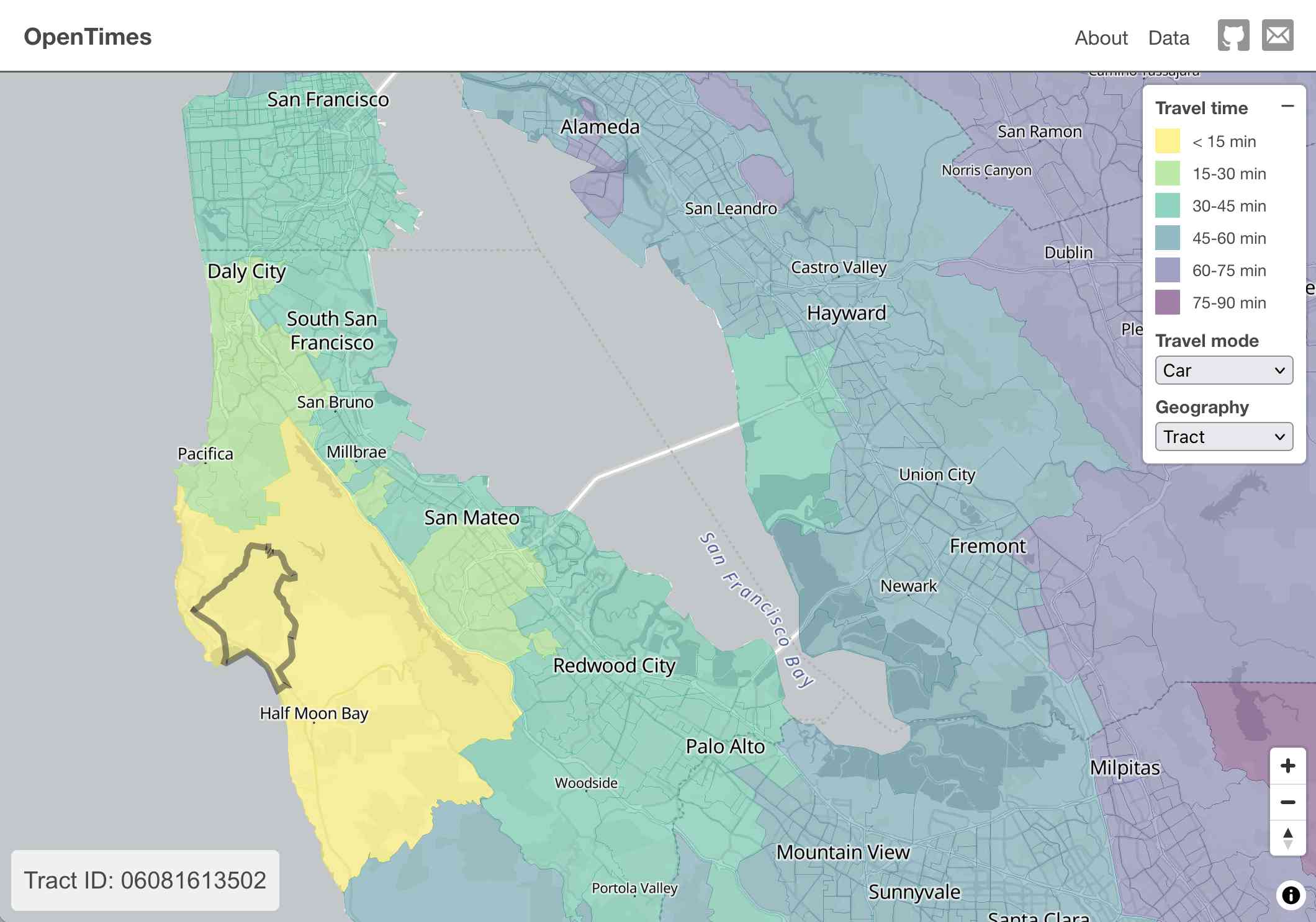

OpenTimes (via) Spectacular new open geospatial project by Dan Snow:

OpenTimes is a database of pre-computed, point-to-point travel times between United States Census geographies. It lets you download bulk travel time data for free and with no limits.

Here's what I get for travel times by car from El Granada, California:

The technical details are fascinating:

- The entire OpenTimes backend is just static Parquet files on Cloudflare's R2. There's no RDBMS or running service, just files and a CDN. The whole thing costs about $10/month to host and costs nothing to serve. In my opinion, this is a great way to serve infrequently updated, large public datasets at low cost (as long as you partition the files correctly).

Sure enough, R2 pricing charges "based on the total volume of data stored" - $0.015 / GB-month for standard storage, then $0.36 / million requests for "Class B" operations which include reads. They charge nothing for outbound bandwidth.

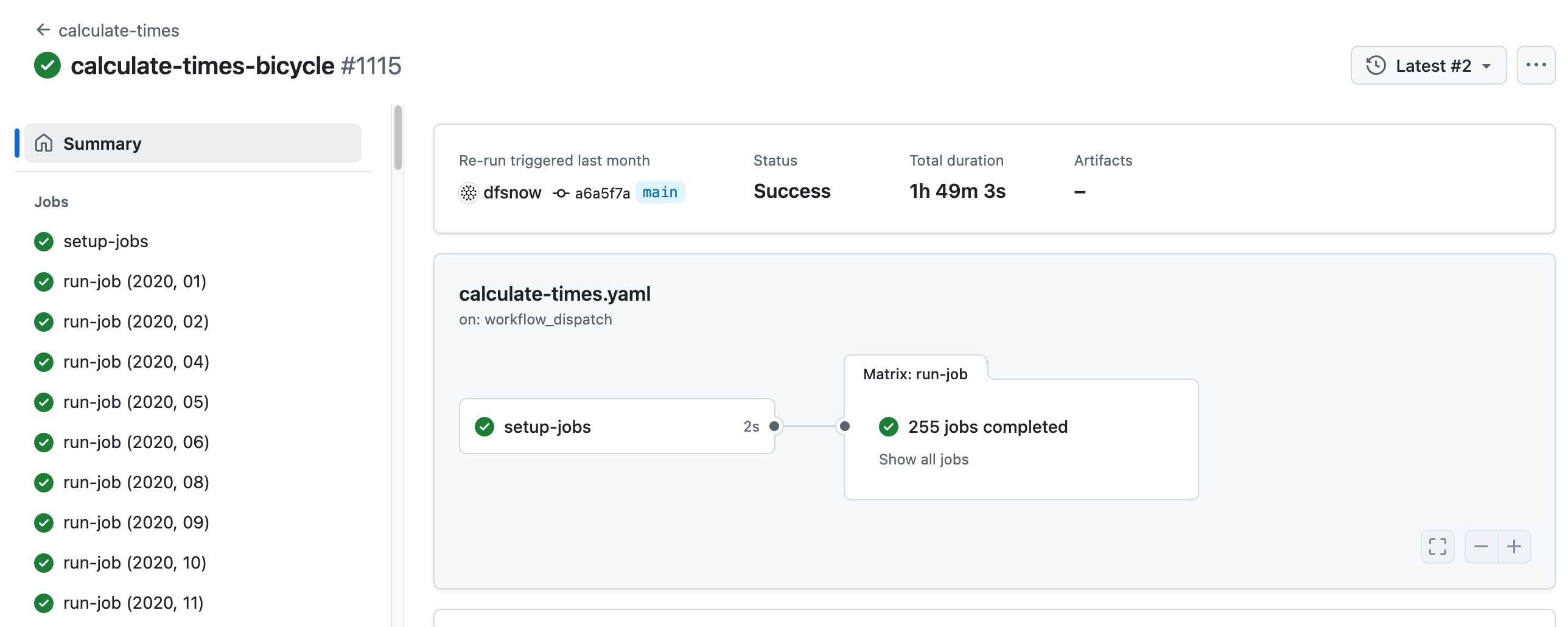

- All travel times were calculated by pre-building the inputs (OSM, OSRM networks) and then distributing the compute over hundreds of GitHub Actions jobs. This worked shockingly well for this specific workload (and was also completely free).

Here's a GitHub Actions run of the calculate-times.yaml workflow which uses a matrix to run 255 jobs!

Relevant YAML:

matrix:
  year: ${{ fromJSON(needs.setup-jobs.outputs.years) }}
  state: ${{ fromJSON(needs.setup-jobs.outputs.states) }}

Where those JSON files were created by the previous step, which reads in the year and state values from this params.yaml file.

- The query layer uses a single DuckDB database file with views that point to static Parquet files via HTTP. This lets you query a table with hundreds of billions of records after downloading just the ~5MB pointer file.

This is a really creative use of DuckDB's feature that lets you run queries against large data from a laptop using HTTP range queries to avoid downloading the whole thing.

The README shows how to use that from R and Python - I got this working in the duckdb client (brew install duckdb):

INSTALL httpfs;

LOAD httpfs;

ATTACH 'https://data.opentimes.org/databases/0.0.1.duckdb' AS opentimes;

SELECT origin_id, destination_id, duration_sec

FROM opentimes.public.times

WHERE version = '0.0.1'

AND mode = 'car'

AND year = '2024'

AND geography = 'tract'

AND state = '17'

AND origin_id LIKE '17031%' limit 10;

In answer to a question about adding public transit times Dan said:

In the next year or so maybe. The biggest obstacles to adding public transit are:

- Collecting all the necessary scheduling data (e.g. GTFS feeds) for every transit system in the county. Not insurmountable since there are services that do this currently.

- Finding a routing engine that can compute nation-scale travel time matrices quickly. Currently, the two fastest open-source engines I've tried (OSRM and Valhalla) don't support public transit for matrix calculations and the engines that do support public transit (R5, OpenTripPlanner, etc.) are too slow.

GTFS is a popular CSV-based format for sharing transit schedules - here's an official list of available feed directories.

This whole project feels to me like a great example of the baked data architectural pattern in action.

Here’s how I use LLMs to help me write code

Online discussions about using Large Language Models to help write code inevitably produce comments from developers whose experiences have been disappointing. They often ask what they’re doing wrong - how come some people are reporting such great results when their own experiments have proved lacking?

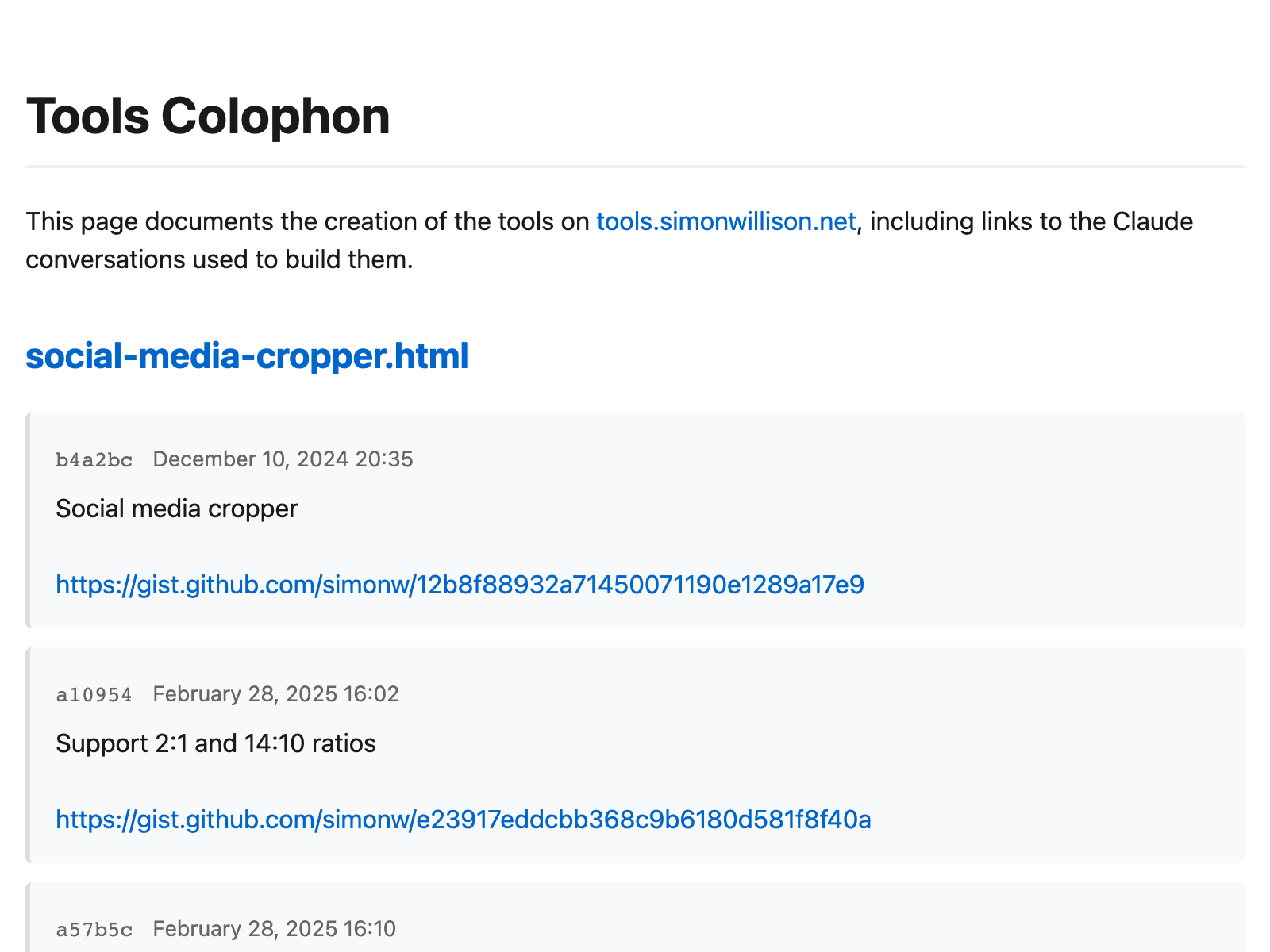

[... 5,178 words]

simonw/git-scraper-template. I built this new GitHub template repository in preparation for a workshop I'm giving at NICAR (the data journalism conference) next week on Cutting-edge web scraping techniques.

One of the topics I'll be covering is Git scraping - creating a GitHub repository that uses scheduled GitHub Actions workflows to grab copies of websites and data feeds and store their changes over time using Git.

This template repository is designed to be the fastest possible way to get started with a new Git scraper: simply create a new repository from the template and paste the URL you want to scrape into the description field and the repository will be initialized with a custom script that scrapes and stores that URL.

It's modeled after my earlier shot-scraper-template tool which I described in detail in Instantly create a GitHub repository to take screenshots of a web page.

The new git-scraper-template repo took some help from Claude to figure out. It uses a custom script to download the provided URL and derive a filename to use based on the URL and the content type, detected using file --mime-type -b "$file_path" against the downloaded file.

It also detects if the downloaded content is JSON and, if it is, pretty-prints it using jq - I find this is a quick way to generate much more useful diffs when the content changes.
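For illustration, here is a Python analogue of those two steps. The template itself uses a shell script with `file --mime-type` and jq, so this is only a sketch of the same idea: the `EXTENSIONS` mapping and the `derive_filename` and `normalize` helpers are hypothetical.

```python
import json
from urllib.parse import urlparse

# Small illustrative mapping from detected content type to file extension.
EXTENSIONS = {
    "application/json": ".json",
    "text/html": ".html",
    "text/plain": ".txt",
}


def derive_filename(url: str, mime_type: str) -> str:
    """Build a filename from the last URL path segment plus a type-based extension."""
    path = urlparse(url).path.rstrip("/")
    stem = path.rsplit("/", 1)[-1] or "index"
    extension = EXTENSIONS.get(mime_type, "")
    if extension and stem.endswith(extension):
        return stem
    return stem + extension


def normalize(content: bytes) -> bytes:
    """Pretty-print JSON objects and arrays; return anything else unchanged."""
    try:
        parsed = json.loads(content)
    except (ValueError, UnicodeDecodeError):
        return content
    if not isinstance(parsed, (dict, list)):
        return content
    # One key per line means a changed value shows up as a one-line diff.
    return (json.dumps(parsed, indent=2) + "\n").encode("utf-8")


print(derive_filename("https://example.com/data/feed", "application/json"))
print(normalize(b'{"a":1}').decode("utf-8"))
```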

Using a Tailscale exit node with GitHub Actions. New TIL. I started running a git scraper against doge.gov to track changes made to that website over time. The DOGE site runs behind Cloudflare which was blocking requests from the GitHub Actions IP range, but I figured out how to run a Tailscale exit node on my Apple TV and use that to proxy my shot-scraper requests.

The scraper is running in simonw/scrape-doge-gov. It uses the new shot-scraper har command I added in shot-scraper 1.6 (and improved in shot-scraper 1.7).

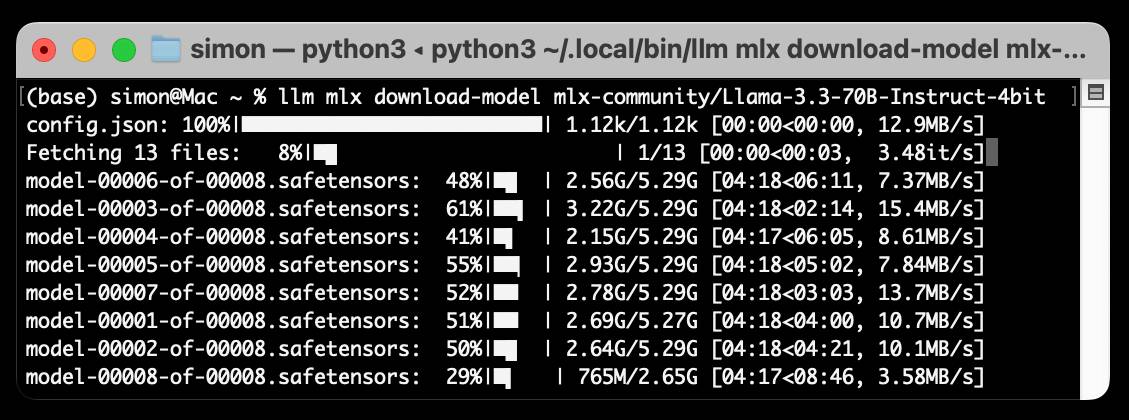

Run LLMs on macOS using llm-mlx and Apple’s MLX framework

llm-mlx is a brand new plugin for my LLM Python Library and CLI utility which builds on top of Apple’s excellent MLX array framework library and mlx-lm package. If you’re a terminal user or Python developer with a Mac this may be the new easiest way to start exploring local Large Language Models.

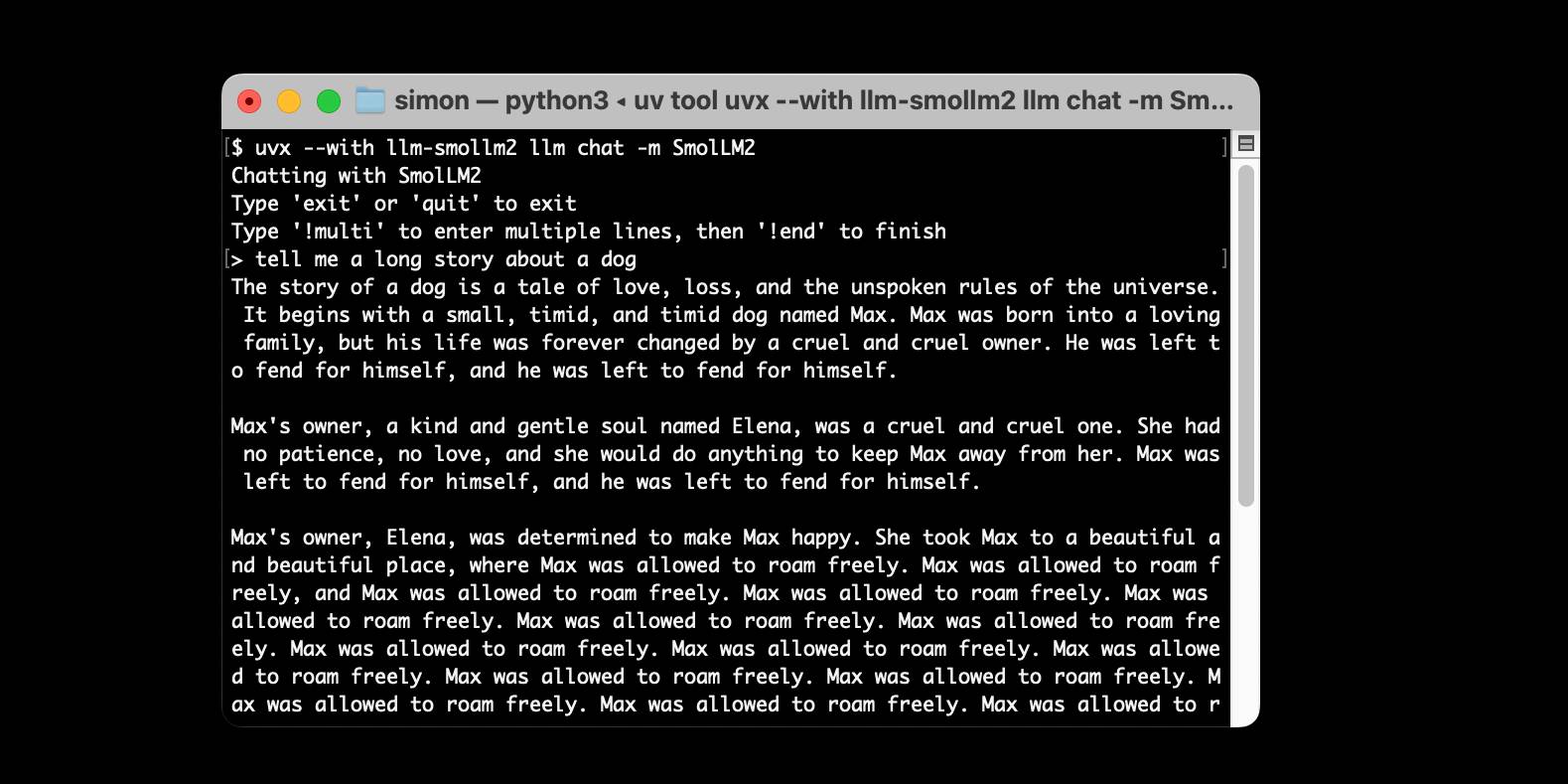

[... 1,524 words]

Using pip to install a Large Language Model that’s under 100MB

I just released llm-smollm2, a new plugin for LLM that bundles a quantized copy of the SmolLM2-135M-Instruct LLM inside of the Python package.

[... 1,553 words]

2024

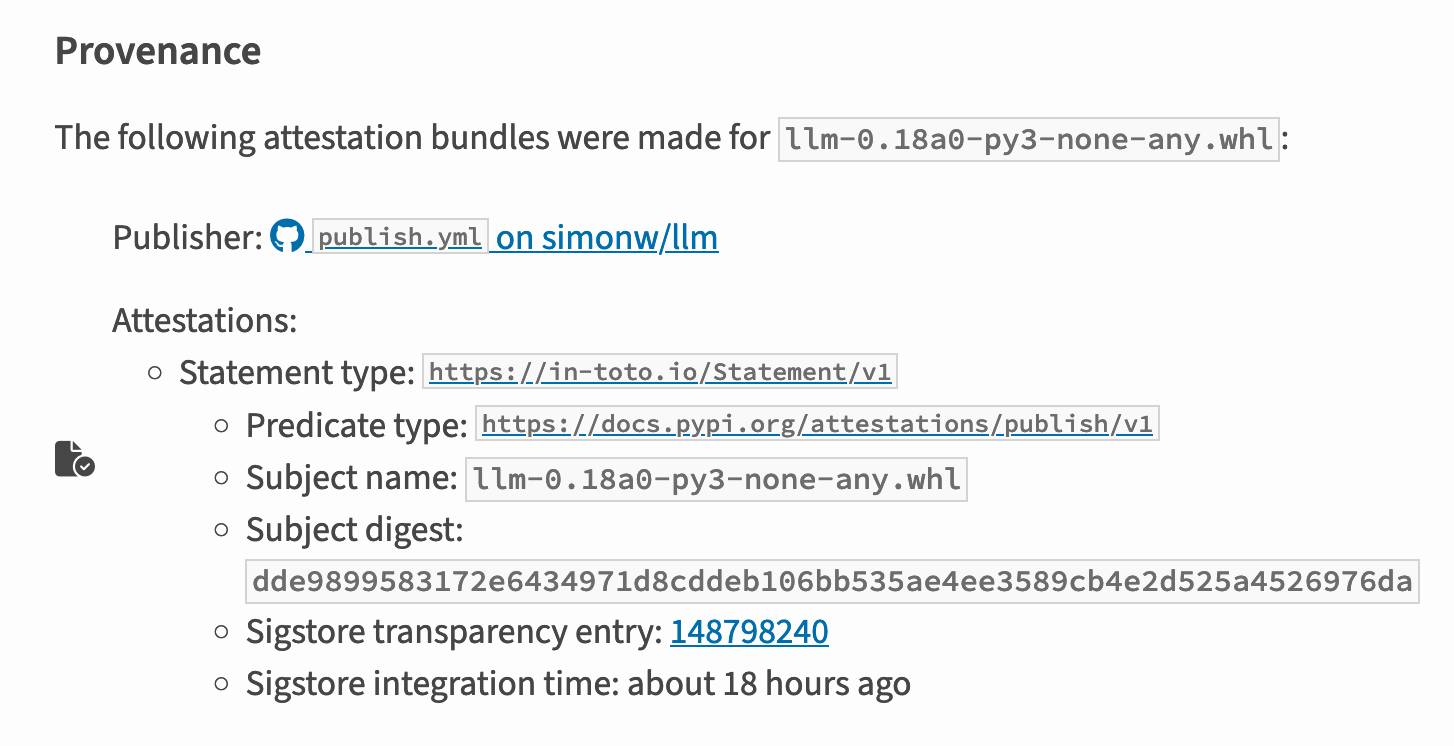

PyPI now supports digital attestations (via) Dustin Ingram:

PyPI package maintainers can now publish signed digital attestations when publishing, in order to further increase trust in the supply-chain security of their projects. Additionally, a new API is available for consumers and installers to verify published attestations.

This has been in the works for a while, and is another component of PyPI's approach to supply chain security for Python packaging - see PEP 740 – Index support for digital attestations for all of the underlying details.

A key problem this solves is cryptographically linking packages published on PyPI to the exact source code that was used to build those packages. In the absence of this feature there are no guarantees that the .tar.gz or .whl file you download from PyPI hasn't been tampered with (to add malware, for example) in a way that's not visible in the published source code.

These new attestations provide a mechanism for proving that a known, trustworthy build system was used to generate and publish the package, starting with its source code on GitHub.

The good news is that if you're using the PyPI Trusted Publishers mechanism in GitHub Actions to publish packages, you're already using this new system. I wrote about that system in January: Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions - and hundreds of my own PyPI packages are already using that system, thanks to my various cookiecutter templates.

Trail of Bits helped build this feature, and provide extra background about it on their own blog in Attestations: A new generation of signatures on PyPI:

As of October 29, attestations are the default for anyone using Trusted Publishing via the PyPA publishing action for GitHub. That means roughly 20,000 packages can now attest to their provenance by default, with no changes needed.

They also built Are we PEP 740 yet? (key implementation here) to track the rollout of attestations across the 360 most downloaded packages from PyPI. It works by hitting URLs such as https://pypi.org/simple/pydantic/ with an Accept: application/vnd.pypi.simple.v1+json header - here's the JSON that returns.
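A minimal sketch of that kind of request using only the standard library. The helper names are hypothetical, and the check for a `provenance` key on each file entry is my reading of PEP 740 rather than a copy of how Are we PEP 740 yet? works.

```python
import json
import urllib.request

ACCEPT = "application/vnd.pypi.simple.v1+json"


def build_request(package: str) -> urllib.request.Request:
    """Prepare a request for the JSON form of the simple index page."""
    return urllib.request.Request(
        f"https://pypi.org/simple/{package}/",
        headers={"Accept": ACCEPT},
    )


def files_with_provenance(index: dict) -> list[str]:
    """List filenames whose entry has a non-empty 'provenance' value (assumed key)."""
    return [f["filename"] for f in index.get("files", []) if f.get("provenance")]


def fetch_index(package: str) -> dict:
    """Perform the HTTP request; needs network access, so it is not called here."""
    with urllib.request.urlopen(build_request(package)) as response:
        return json.load(response)


# Hypothetical response fragment, used in place of a live request.
sample_index = {
    "files": [
        {"filename": "demo-1.0.tar.gz", "provenance": "https://example.com/provenance.json"},
        {"filename": "demo-0.9.tar.gz", "provenance": None},
    ]
}
print(files_with_provenance(sample_index))
```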

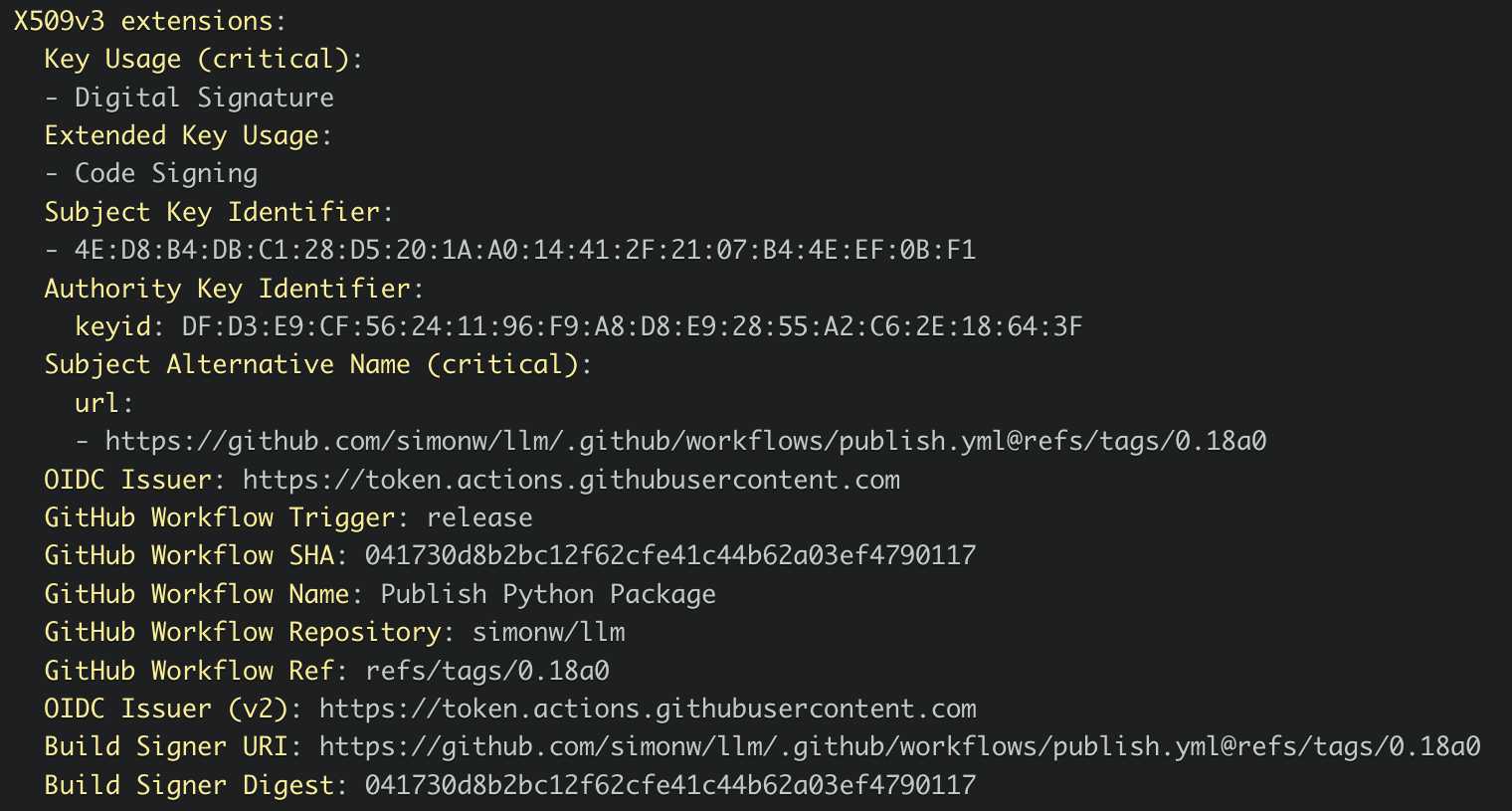

I published an alpha package using Trusted Publishers last night and the files for that release are showing the new provenance information already:

Which links to this Sigstore log entry with more details, including the Git hash that was used to build the package:

Sigstore is a transparency log maintained by the Open Source Security Foundation (OpenSSF), a sub-project of the Linux Foundation.

Generating Descriptive Weather Reports with LLMs. Drew Breunig produces the first example I've seen in the wild of the new LLM attachments Python API. Drew's Downtown San Francisco Weather Vibes project combines output from a JSON weather API with the latest image from a webcam pointed at downtown San Francisco to produce a weather report "with a style somewhere between Jack Kerouac and J. Peterman".

Here's the Python code that constructs and executes the prompt. The code runs in GitHub Actions.

UV with GitHub Actions to run an RSS to README project.

Jeff Triplett demonstrates a very neat pattern for using uv to run Python scripts with their dependencies inside of GitHub Actions. First, add uv to the workflow using the setup-uv action:

- uses: astral-sh/setup-uv@v3
  with:
    enable-cache: true
    cache-dependency-glob: "*.py"

This enables the caching feature, which stores uv's own cache of downloads from PyPI between runs. The cache-dependency-glob key ensures that this cache will be invalidated if any .py file in the repository is updated.

Now you can run Python scripts using steps that look like this:

- run: uv run fetch-rss.py

If that Python script begins with some dependency definitions (PEP 723) they will be automatically installed by uv run on the first run and reused from the cache in the future. From the start of fetch-rss.py:

# /// script
# requires-python = ">=3.11"
# dependencies = [
#   "feedparser",
#   "typer",
# ]
# ///

uv will download the required Python version and cache that as well.

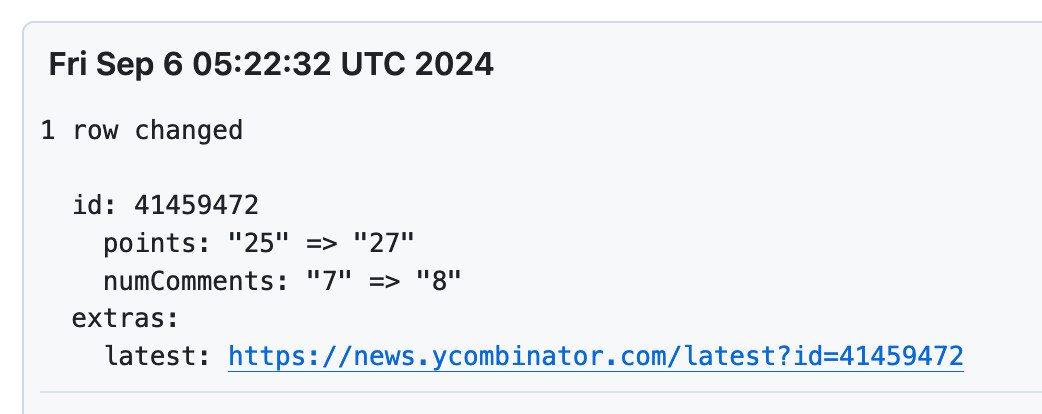

New improved commit messages for scrape-hacker-news-by-domain. My simonw/scrape-hacker-news-by-domain repo has a very specific purpose. Once an hour it scrapes the Hacker News /from?site=simonwillison.net page (and the equivalent for datasette.io) using my shot-scraper tool and stashes the parsed links, scores and comment counts in JSON files in that repo.

It does this mainly so I can subscribe to GitHub's Atom feed of the commit log - visit simonw/scrape-hacker-news-by-domain/commits/main and add .atom to the URL to get that.

NetNewsWire will inform me within about an hour if any of my content has made it to Hacker News, and the repo will track the score and comment count for me over time. I wrote more about how this works in Scraping web pages from the command line with shot-scraper back in March 2022.

Prior to the latest improvement, the commit messages themselves were pretty uninformative. The message had the date, and to actually see which Hacker News post it was referring to, I had to click through to the commit and look at the diff.

I built my csv-diff tool a while back to help address this problem: it can produce a slightly more human-readable version of a diff between two CSV or JSON files, ideally suited for including in a commit message attached to a git scraping repo like this one.

I got that working, but there was still room for improvement. I recently learned that any Hacker News thread has an undocumented URL at /latest?id=x which displays the most recently added comments at the top.

I wanted that in my commit messages, so I could quickly click a link to see the most recent comments on a thread.

So... I added one more feature to csv-diff: a new --extra option lets you specify a Python format string to be used to add extra fields to the displayed difference.

My GitHub Actions workflow now runs this command:

csv-diff simonwillison-net.json simonwillison-net-new.json \
  --key id --format json \
  --extra latest 'https://news.ycombinator.com/latest?id={id}' \
  >> /tmp/commit.txt

This generates the diff between the two versions, using the id property in the JSON to tie records together. It adds a latest field linking to that URL.
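The core of the idea, applying a Python format string to each record to derive an extra field, can be sketched like this. It is not csv-diff's implementation and only covers added rows, where the real tool also reports removed and changed ones. The `added_with_extras` helper and the sample records are hypothetical.

```python
def added_with_extras(
    old: list[dict], new: list[dict], key: str, extras: dict[str, str]
) -> list[dict]:
    """Return records present in `new` but not `old`, each with formatted extra fields."""
    seen = {row[key] for row in old}
    added = []
    for row in new:
        if row[key] in seen:
            continue
        enriched = dict(row)
        for name, template in extras.items():
            # "{id}" in the template is filled in from the row's own "id" value.
            enriched[name] = template.format(**row)
        added.append(enriched)
    return added


old_rows = [{"id": 1, "title": "Older post"}]
new_rows = [{"id": 1, "title": "Older post"}, {"id": 2, "title": "Newer post"}]
extras = {"latest": "https://news.ycombinator.com/latest?id={id}"}
print(added_with_extras(old_rows, new_rows, "id", extras))
```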

The commits now look like this:

GitHub Actions: Faster Python runs with cached virtual environments (via) Adam Johnson shares his improved pattern for caching Python environments in GitHub Actions.

I've been using the pattern where you add cache: pip to the actions/setup-python block, but it has two disadvantages: if the tests fail the cache won't be saved at the end, and it still spends time installing the packages despite not needing to download them fresh since the wheels are in the cache.

Adam's pattern works differently: he caches the entire .venv/ folder between runs, avoiding the overhead of installing all of those packages. He also wraps the block that installs the packages between explicit actions/cache/restore and actions/cache/save steps to avoid the case where failed tests skip the cache persistence.

qrank (via) Interesting and very niche project by Colin Dellow.

Wikidata has pages for huge numbers of concepts, people, places and things.

One of the many pieces of data they publish is QRank—“ranking Wikidata entities by aggregating page views on Wikipedia, Wikispecies, Wikibooks, Wikiquote, and other Wikimedia projects”. Every item gets a score and these scores can be used to answer questions like “which island nations get the most interest across Wikipedia”—potentially useful for things like deciding which labels to display on a highly compressed map of the world.

QRank is published as a gzipped CSV file.

Colin’s hikeratlas/qrank GitHub repository runs weekly, fetches the latest qrank.csv.gz file and loads it into a SQLite database using SQLite’s “.import” mechanism. Then it publishes the resulting SQLite database as an asset attached to the “latest” GitHub release on that repo—currently a 307MB file.

The database itself has just a single table mapping the Wikidata ID (a primary key integer) to the latest QRank—another integer. You’d need your own set of data with Wikidata IDs to join against this to do anything useful.
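A rough Python equivalent of that load step, for illustration only, since the repository itself uses SQLite's `.import`. The `Entity` and `QRank` column names, the `Q` prefix on the IDs, the table name and the sample values are all assumptions made for this sketch.

```python
import csv
import gzip
import io
import sqlite3


def load_qrank(gz_bytes: bytes, db: sqlite3.Connection) -> int:
    """Load a gzipped CSV of entity/rank pairs into a two-column integer table."""
    db.execute("CREATE TABLE IF NOT EXISTS qrank (id INTEGER PRIMARY KEY, qrank INTEGER)")
    text = gzip.decompress(gz_bytes).decode("utf-8")
    reader = csv.DictReader(io.StringIO(text))
    # Assumed layout: "Q866,100" becomes the integer pair (866, 100).
    rows = ((int(r["Entity"].lstrip("Q")), int(r["QRank"])) for r in reader)
    with db:  # commit once after all rows are inserted
        db.executemany("INSERT OR REPLACE INTO qrank VALUES (?, ?)", rows)
    return db.execute("SELECT COUNT(*) FROM qrank").fetchone()[0]


# Tiny made-up sample in place of the real download.
sample = gzip.compress(b"Entity,QRank\nQ866,100\nQ5,50\n")
db = sqlite3.connect(":memory:")
print(load_qrank(sample, db))
```

For a file of the real size it would be better to stream it with `gzip.open` than to decompress everything in memory as this sketch does.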

I’d never thought of using GitHub Releases for this kind of thing. I think it’s a really interesting pattern.

GitHub Actions: Introducing the new M1 macOS runner available to open source! Set “runs-on: macos-14” to run a GitHub Actions workflow on an ARM M1 runner with 7GB of RAM. I have been looking forward to this for ages: it should make it much easier to build releases of both Electron apps and Python binary wheels for Apple Silicon.

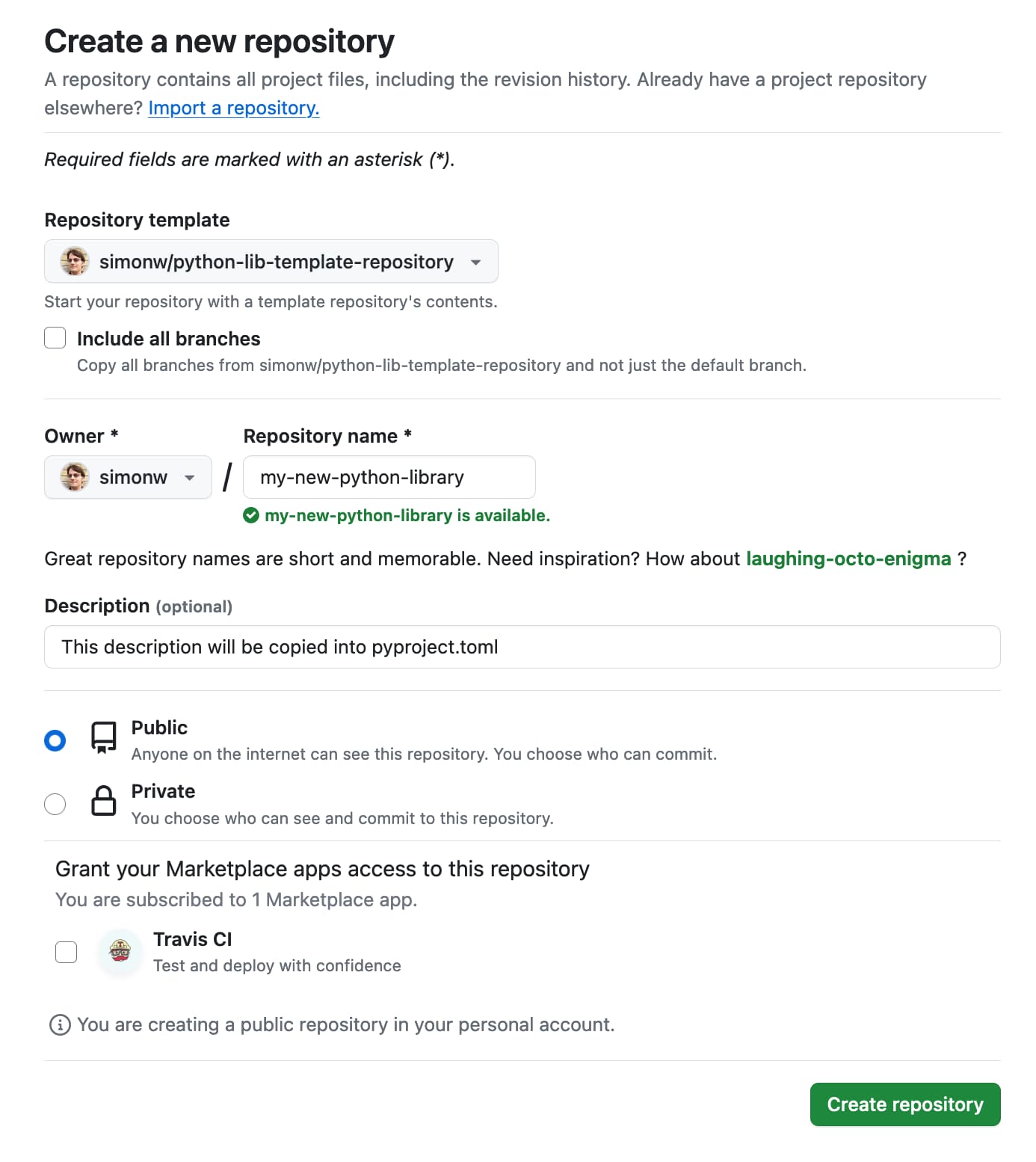

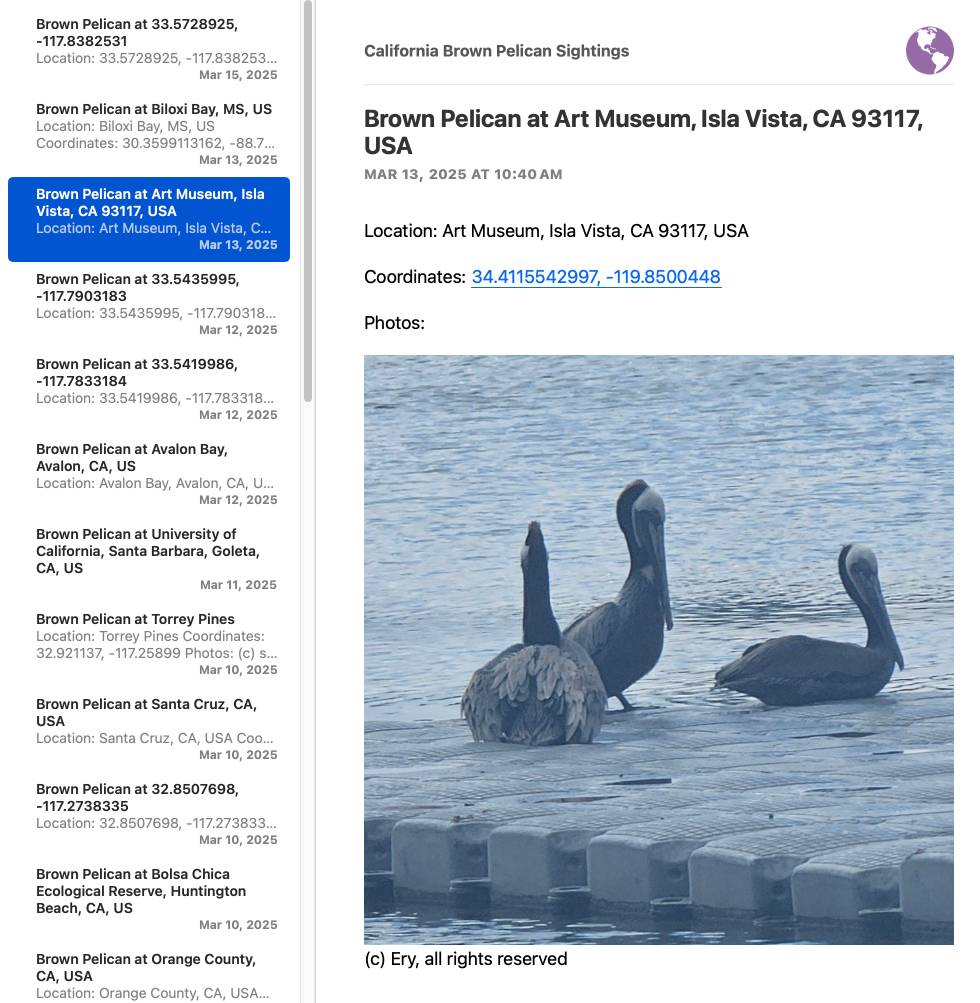

Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions

I use cookiecutter to start almost all of my Python projects. It helps me quickly generate a skeleton of a project with my preferred directory structure and configured tools.

[... 686 words]

2022

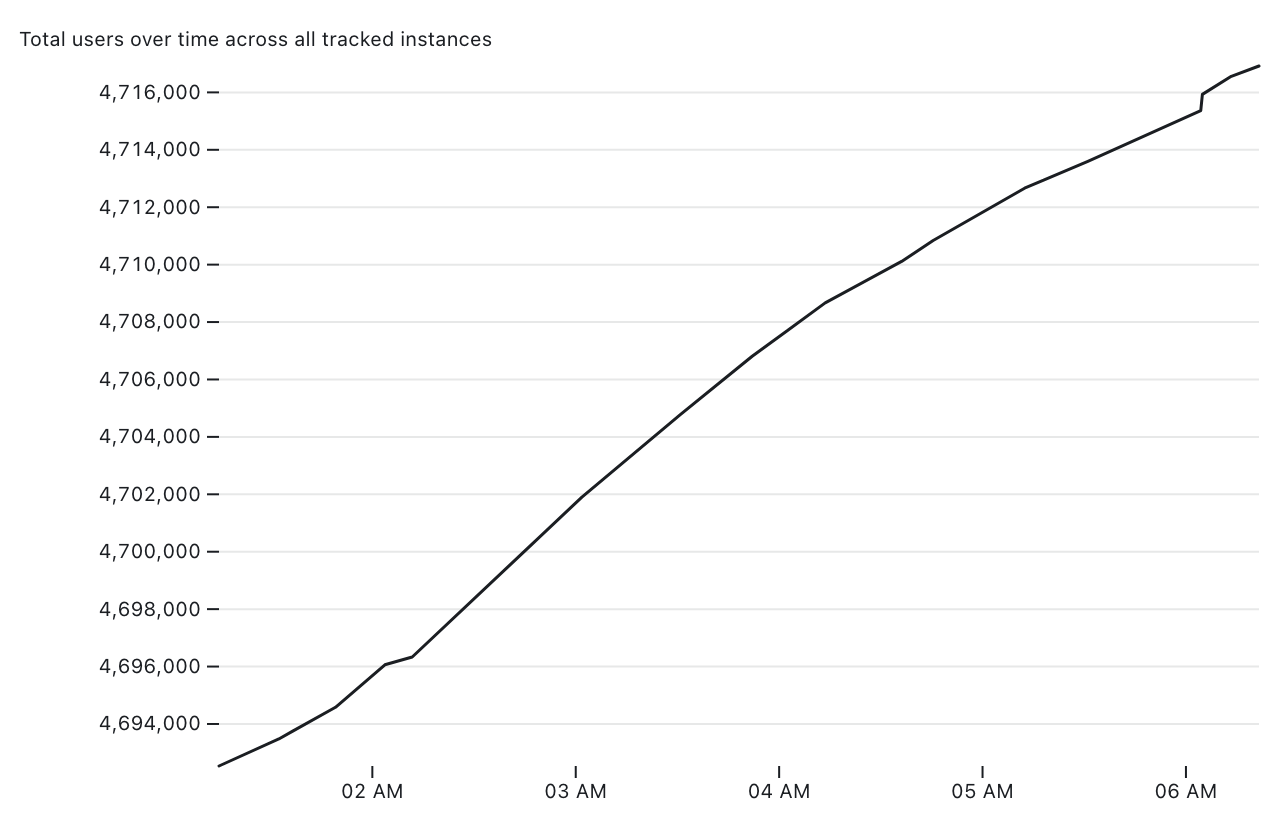

Tracking Mastodon user numbers over time with a bucket of tricks

Mastodon is definitely having a moment. User growth is skyrocketing as more and more people migrate over from Twitter.

[... 1,534 words]

Leveraging ’shot-scraper’ and creating image diffs. Üllar Seerme has a neat recipe for using shot-scraper and ImageMagick to create differential animations showing how a scraped web page has visually changed.

How to create a Python package in 2022 (via) Fantastic tutorial on modern Python packaging by Rodrigo Girão Serrão. I’ve been meaning to figure out Poetry for a while now and this gave me exactly the information I needed to start figuring it out. Great coverage of GitHub Actions, Tox and pre-commit as well.

Automating screenshots for the Datasette documentation using shot-scraper

I released shot-scraper back in March as a tool for keeping screenshots in documentation up-to-date.

[... 1,810 words]

Software engineering practices

Gergely Orosz started a Twitter conversation asking about recommended “software engineering practices” for development teams.

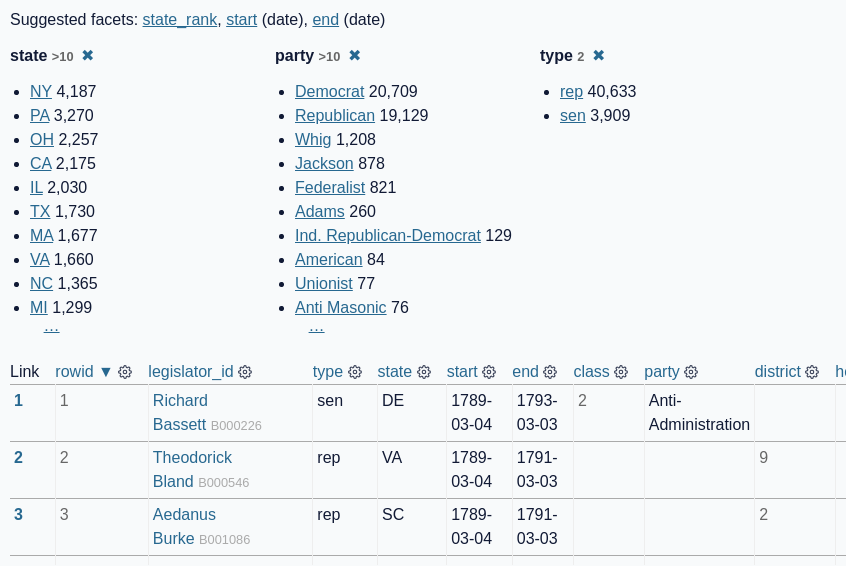

[... 1,557 words]

A tool to run caption extraction against online videos using Whisper and GitHub Issues/Actions

I released a new project this weekend, built during the Bellingcat Hackathon (I came second!) It’s called Action Transcription and it’s a tool for capturing captions and transcripts from online videos.

[... 1,362 words]