Automatically opening issues when tracked file content changes

28th April 2022

I figured out a GitHub Actions pattern to keep track of a file published somewhere on the internet and automatically open a new repository issue any time the contents of that file change.

Extracting GZipMiddleware from Starlette

Here’s why I needed to solve this problem.

I want to add gzip support to my Datasette open source project. Datasette builds on the Python ASGI standard, and Starlette provides an extremely well tested, robust GZipMiddleware class that adds gzip support to any ASGI application. As with everything else in Starlette, it’s really good code.

The problem is, I don’t want to add the whole of Starlette as a dependency. I’m trying to keep Datasette’s core as small as possible, so I’m very careful about new dependencies. Starlette itself is actually very light (and only has a tiny number of dependencies of its own) but I still don’t want the whole thing just for that one class.

So I decided to extract the GZipMiddleware class into a separate Python package, under the same BSD license as Starlette itself.

The result is my new asgi-gzip package, now available on PyPI.

What if Starlette fixes a bug?

The problem with extracting code like this is that Starlette is a very effectively maintained package. What if they make improvements or fix bugs in the GZipMiddleware class? How can I make sure to apply those same fixes to my extracted copy?

As I thought about this challenge, I realized I had most of the solution already.

Git scraping is the name I’ve given to the trick of running a periodic scraper that writes to a git repository in order to track changes to data over time.

It may seem redundant to do this against a file that already lives in version control elsewhere, but in addition to tracking changes, Git scraping can offer a cheap and easy way to add automation that triggers when a change is detected.
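The change-detection step at the heart of that pattern is small. Here is a minimal sketch in plain Node.js, assuming the snapshot is an ordinary file on disk. The function name `recordSnapshot` is hypothetical, and a real Git scraper would lean on `git diff` and a commit rather than comparing strings directly.

```javascript
const fs = require("fs");

// Hypothetical helper: store `content` at `snapshotPath` and report whether
// it differs from the snapshot saved by the previous run.
// A missing snapshot (the very first run) counts as a change.
function recordSnapshot(snapshotPath, content) {
  const previous = fs.existsSync(snapshotPath)
    ? fs.readFileSync(snapshotPath, "utf8")
    : null;
  const changed = previous !== content;
  if (changed) {
    // Only rewrite the file when something actually changed
    fs.writeFileSync(snapshotPath, content);
  }
  return changed;
}
```

Anything that should happen "when a change is detected" (an alert, an issue, a commit) can then hang off the boolean this returns.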

I need an actionable alert any time the Starlette code changes so I can review the change and apply a fix to my own library, if necessary.

Since I already run all of my projects out of GitHub issues, automatically opening an issue against the asgi-gzip repository would be ideal.

My track.yml workflow does exactly that: it implements the Git scraping pattern against the gzip.py module in Starlette, and files an issue any time it detects changes to that file.

Starlette haven’t made any changes to that file since I started tracking it, so I created a test repo to try this out.

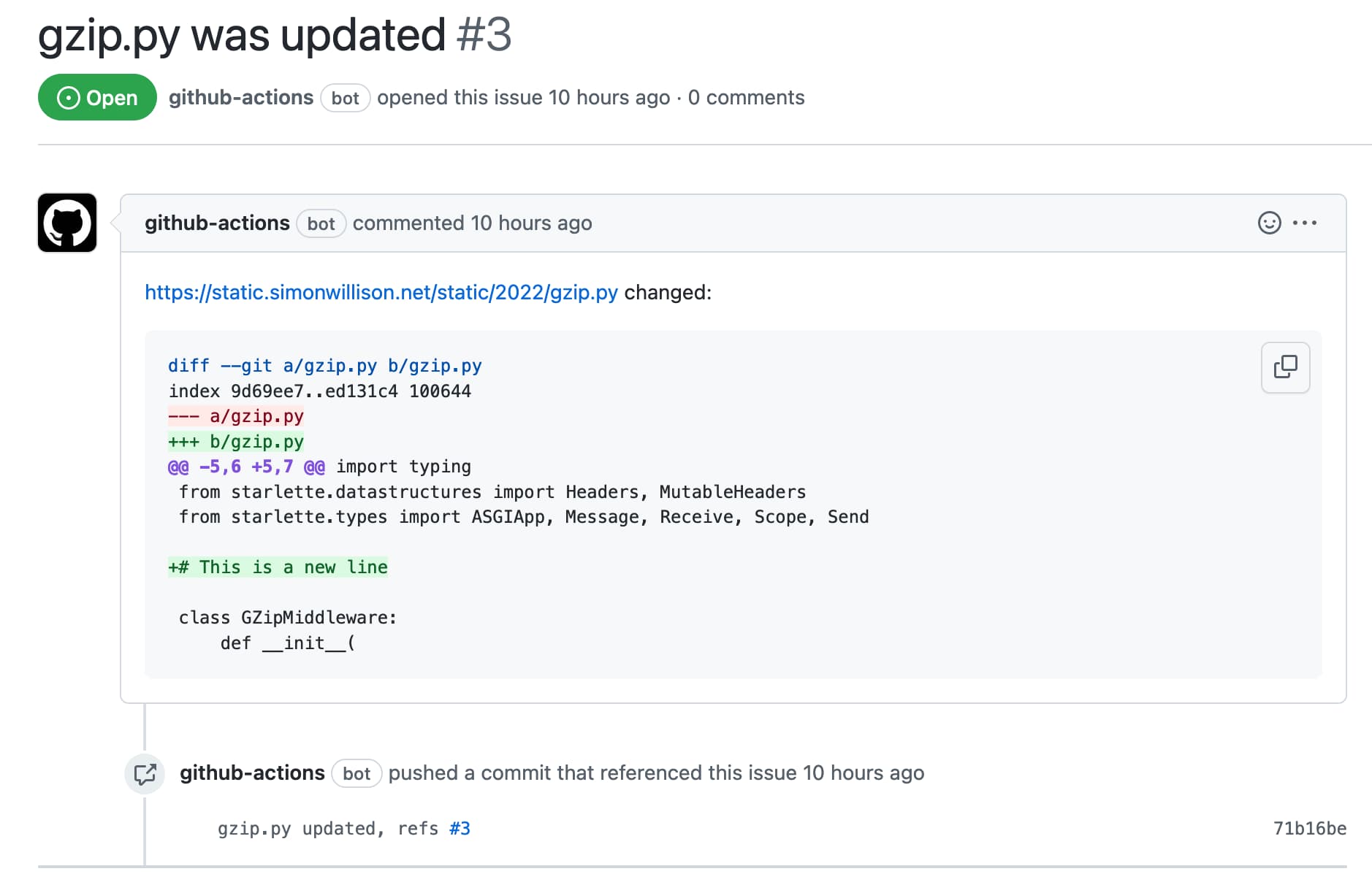

Here’s one of the example issues. I decided to include the visual diff in the issue description and have a link to it from the underlying commit as well.

How it works

The implementation is contained entirely in this track.yml workflow. I designed it to live in a single file so that it’s easy to copy, paste and adapt for other projects.

It uses actions/github-script, which makes it easy to do things like file new issues using JavaScript.

Here’s a heavily annotated copy:

    name: Track the Starlette version of this

    # Run on repo pushes, and if a user clicks the "run this action" button,
    # and on a schedule at 5:21am UTC every day
    on:
      push:
      workflow_dispatch:
      schedule:
        - cron: '21 5 * * *'

    # Without this block I got this error when the action ran:
    # HttpError: Resource not accessible by integration
    permissions:
      # Allow the action to create issues
      issues: write
      # Allow the action to commit back to the repository
      contents: write

    jobs:
      check:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          - uses: actions/github-script@v6
            # Using env: here to demonstrate how an action like this can
            # be adjusted to take dynamic inputs
            env:
              URL: https://raw.githubusercontent.com/encode/starlette/master/starlette/middleware/gzip.py
              FILE_NAME: tracking/gzip.py
            with:
              script: |
                const { URL, FILE_NAME } = process.env;
                // promisify pattern for getting an await version of child_process.exec
                const util = require("util");
                // Used exec_ here because 'exec' variable name is already used:
                const exec_ = util.promisify(require("child_process").exec);
                // Use curl to download the file
                await exec_(`curl -o ${FILE_NAME} ${URL}`);
                // Use 'git diff' to detect if the file has changed since last time
                const { stdout } = await exec_(`git diff ${FILE_NAME}`);
                if (stdout) {
                  // There was a diff to that file
                  const title = `${FILE_NAME} was updated`;
                  const body =
                    `${URL} changed:` +
                    "\n\n```diff\n" +
                    stdout +
                    "\n```\n\n" +
                    "Close this issue once those changes have been integrated here";
                  const issue = await github.rest.issues.create({
                    owner: context.repo.owner,
                    repo: context.repo.repo,
                    title: title,
                    body: body,
                  });
                  const issueNumber = issue.data.number;
                  // Now commit and reference that issue number, so the commit shows up
                  // listed at the bottom of the issue page
                  const commitMessage = `${FILE_NAME} updated, refs #${issueNumber}`;
                  // https://til.simonwillison.net/github-actions/commit-if-file-changed
                  await exec_(`git config user.name "Automated"`);
                  await exec_(`git config user.email "actions@users.noreply.github.com"`);
                  await exec_(`git add -A`);
                  await exec_(`git commit -m "${commitMessage}" || exit 0`);
                  await exec_(`git pull --rebase`);
                  await exec_(`git push`);
                }

In the asgi-gzip repository I keep the fetched gzip.py file in a tracking/ directory. This directory isn’t included in the Python package that gets uploaded to PyPI; it’s there only so that my code can track changes to it over time.
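One thing to watch when adapting the script: the workflow interpolates `FILE_NAME` and the commit message into shell command strings, so a value containing quotes or spaces could break the command. A possible variation, sketched here as an assumption rather than what the workflow does, passes arguments as an array through `child_process.execFile`, which does not invoke a shell. The names `execFile_`, `gitCommitArgs` and `commitChange` are hypothetical.

```javascript
const util = require("util");
// Promisified execFile: like exec_, but takes an argument array and skips the shell
const execFile_ = util.promisify(require("child_process").execFile);

// Hypothetical helper: build the argument list for the tracking commit.
// The message matches the format used in the workflow above.
function gitCommitArgs(fileName, issueNumber) {
  return ["commit", "-m", `${fileName} updated, refs #${issueNumber}`];
}

// Hypothetical helper: stage everything and commit without shell quoting.
// Unlike the `|| exit 0` in the workflow, this rejects if there is nothing
// to commit, so it should only run after a diff has been detected.
async function commitChange(fileName, issueNumber) {
  await execFile_("git", ["add", "-A"]);
  await execFile_("git", gitCommitArgs(fileName, issueNumber));
}
```

Because each argument reaches git exactly as written, a message such as `tracking/gzip.py updated, refs #4` needs no escaping.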

More interesting applications

I built this to solve my "tell me when Starlette update their gzip.py file" problem, but clearly this pattern has much more interesting uses.

You could point this at any web page to get a new GitHub issue opened when that page content changes. Subscribe to notifications for that repository and you get a robust, shared mechanism for alerts, plus an issue system where you can post additional comments and close the issue once someone has reviewed the change.
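If you track several pages in one repository, each needs its own `FILE_NAME`. One way to derive it from the URL is sketched below. The helper `trackingPathFor` and its layout (`tracking/<host>/<path>`) are assumptions for illustration, not part of the workflow, and query strings are ignored.

```javascript
// Hypothetical helper: map a page URL to a file path inside the repository.
function trackingPathFor(pageUrl, baseDir = "tracking") {
  const { hostname, pathname } = new URL(pageUrl);
  // Directory-style URLs get an index.html so every page maps to a file
  const path = pathname.endsWith("/") ? pathname + "index.html" : pathname;
  return `${baseDir}/${hostname}${path}`;
}

// Example: the Starlette file tracked in this post
console.log(
  trackingPathFor(
    "https://raw.githubusercontent.com/encode/starlette/master/starlette/middleware/gzip.py"
  )
);
```

The nested directories have to exist before `curl -o` can write into them; curl's `--create-dirs` option is one way to handle that.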

There’s a lot of potential here for solving all kinds of interesting problems. And it doesn’t cost anything either: GitHub Actions (somehow) remains completely free for public repositories!

Update: October 13th 2022

Almost six months after writing about this... it triggered for the first time!

Here’s the issue that the script opened: #4: tracking/gzip.py was updated.

I applied the improvement (Marcelo Trylesinski and Kai Klingenberg updated Starlette’s code to avoid gzipping if the response already had a Content-Encoding header) and released version 0.2 of the package.
