Entries tagged projects

Filters: Type: entry × projects × Sorted by date

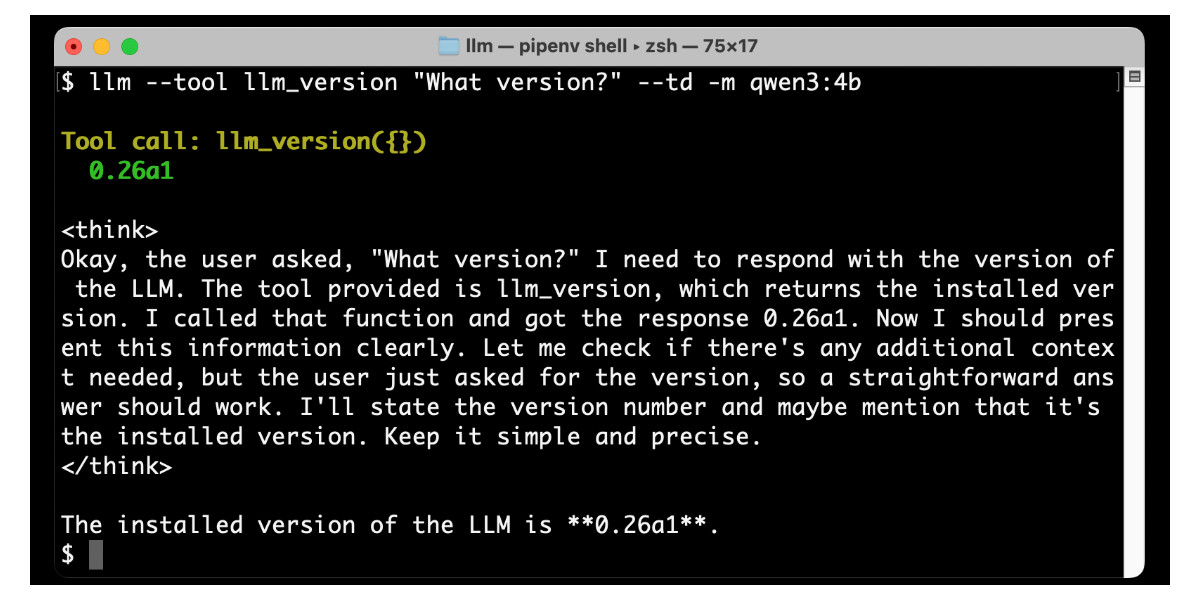

Large Language Models can run tools in your terminal with LLM 0.26

LLM 0.26 is out with the biggest new feature since I started the project: support for tools. You can now use the LLM CLI tool—and Python library—to grant LLMs from OpenAI, Anthropic, Gemini and local models from Ollama with access to any tool that you can represent as a Python function.

[... 2,799 words]Trying out llama.cpp’s new vision support

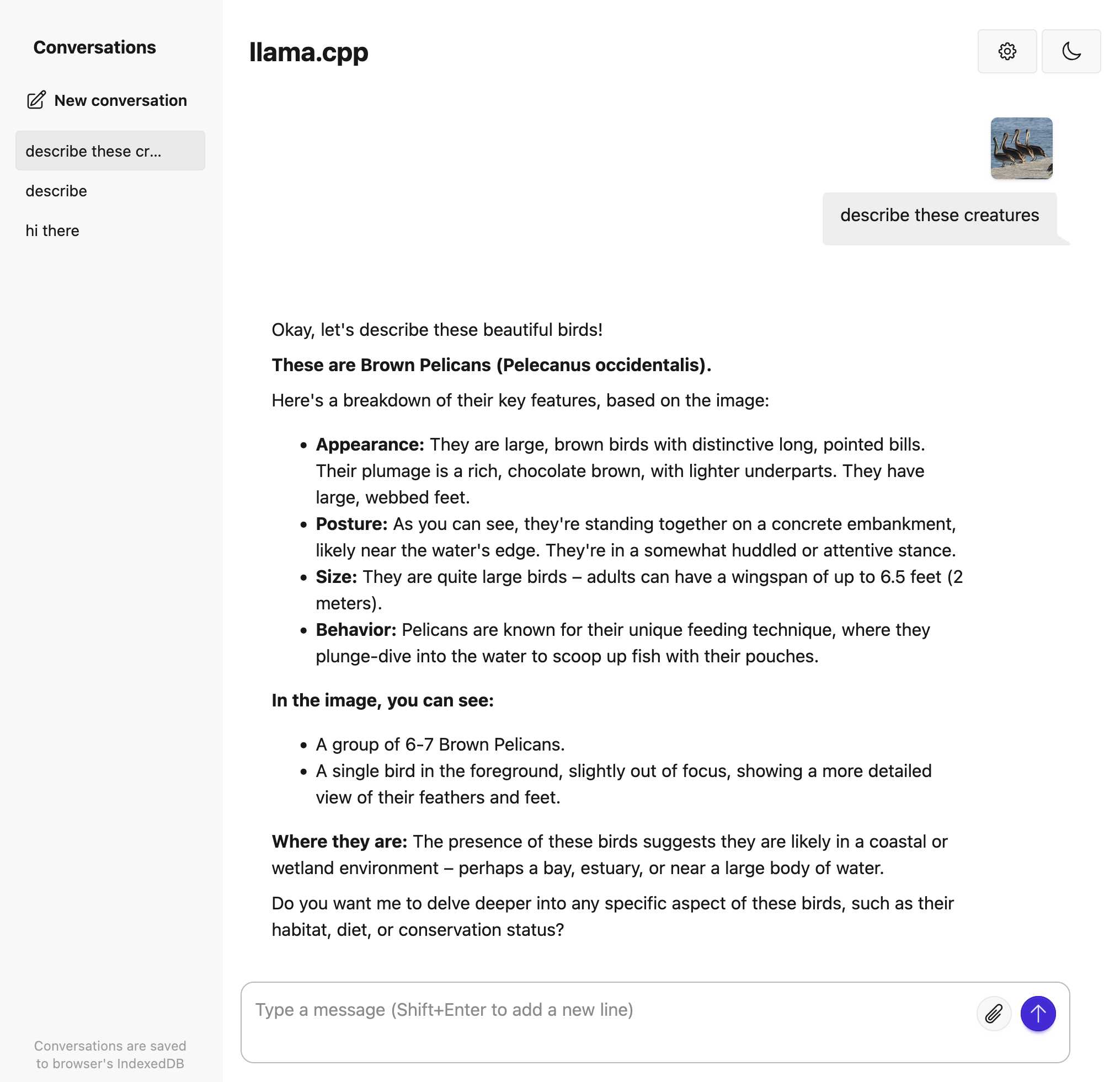

This llama.cpp server vision support via libmtmd pull request—via Hacker News—was merged earlier today. The PR finally adds full support for vision models to the excellent llama.cpp project. It’s documented on this page, but the more detailed technical details are covered here. Here are my notes on getting it working on a Mac.

[... 1,693 words]Feed a video to a vision LLM as a sequence of JPEG frames on the CLI (also LLM 0.25)

The new llm-video-frames plugin can turn a video file into a sequence of JPEG frames and feed them directly into a long context vision LLM such as GPT-4.1, even when that LLM doesn’t directly support video input. It depends on a plugin feature I added to LLM 0.25, which I released last night.

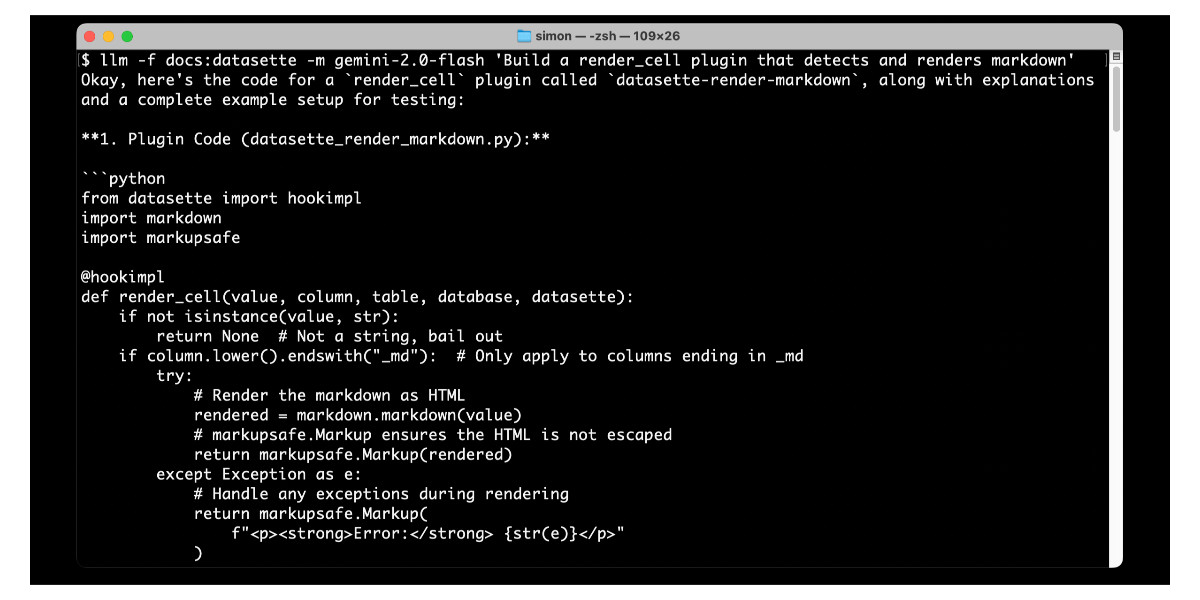

[... 1,600 words]Long context support in LLM 0.24 using fragments and template plugins

LLM 0.24 is now available with new features to help take advantage of the increasingly long input context supported by modern LLMs.

[... 1,896 words]Adding AI-generated descriptions to my tools collection

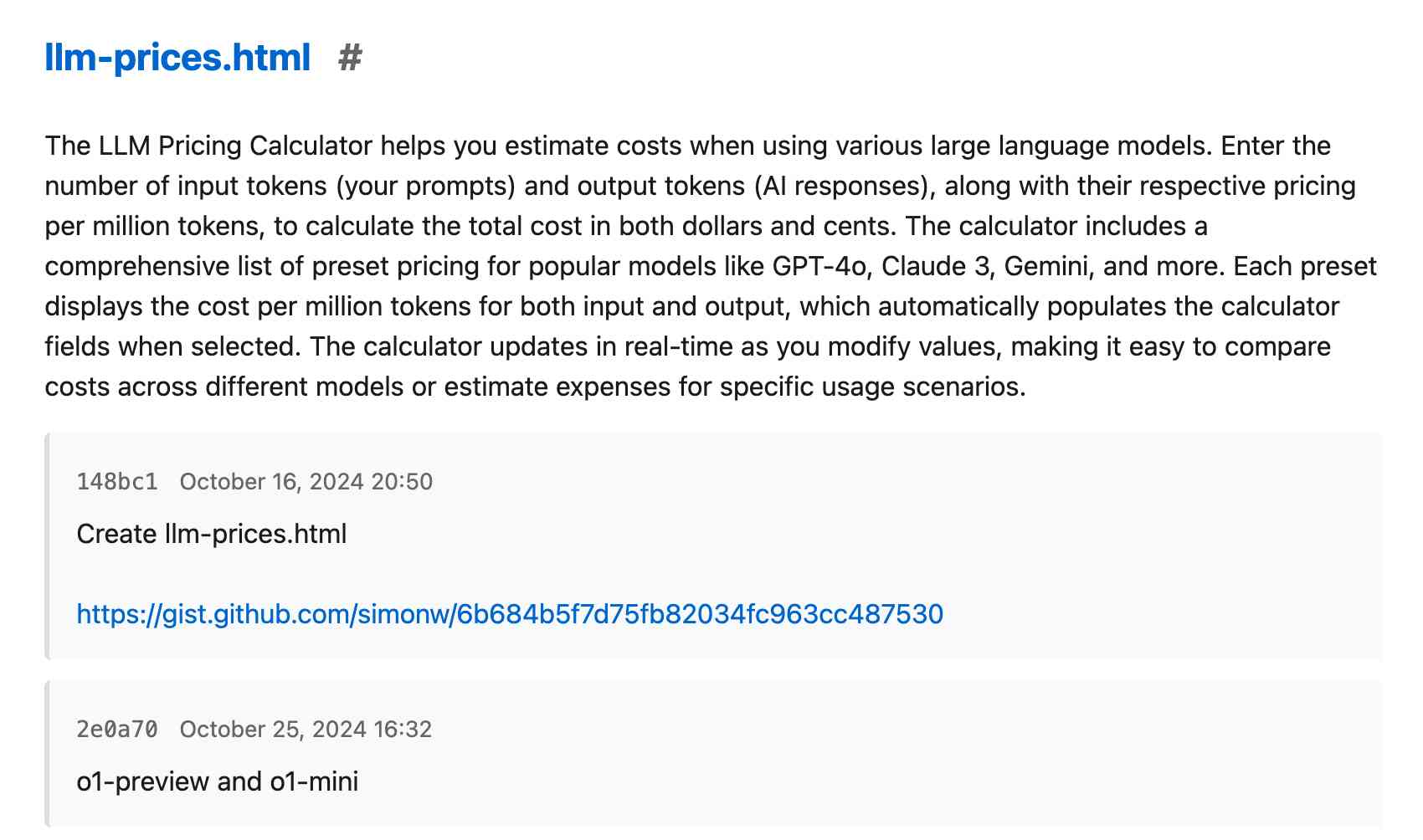

The /colophon page on my tools.simonwillison.net site lists all 78 of the HTML+JavaScript tools I’ve built (with AI assistance) along with their commit histories, including links to prompting transcripts. I wrote about how I built that colophon the other day. It now also includes a description of each tool, generated using Claude 3.7 Sonnet.

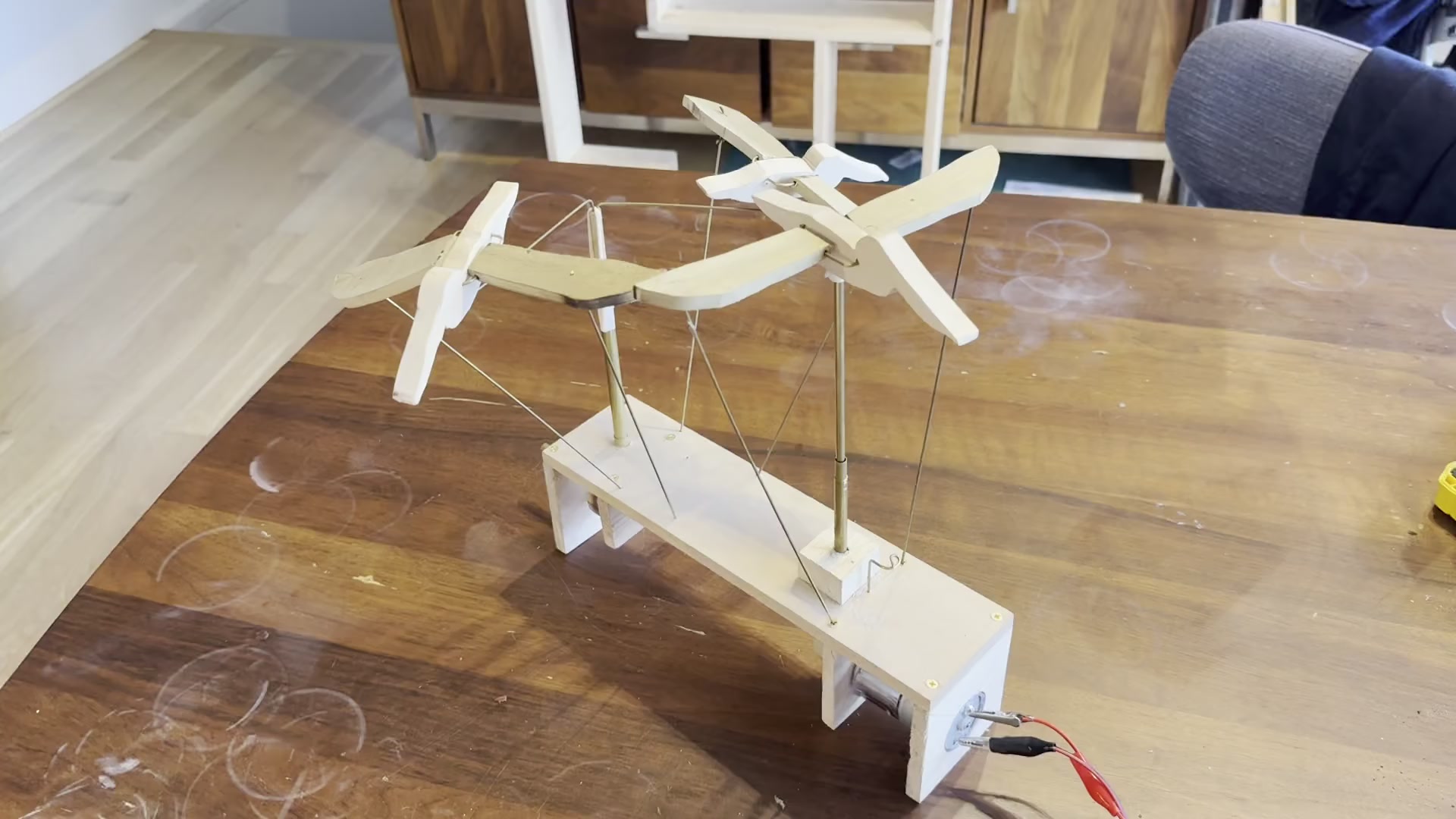

[... 741 words]I built an automaton called Squadron

I believe that the price you have to pay for taking on a project is writing about it afterwards. On that basis, I feel compelled to write up my decidedly non-software project from this weekend: Squadron, an automaton.

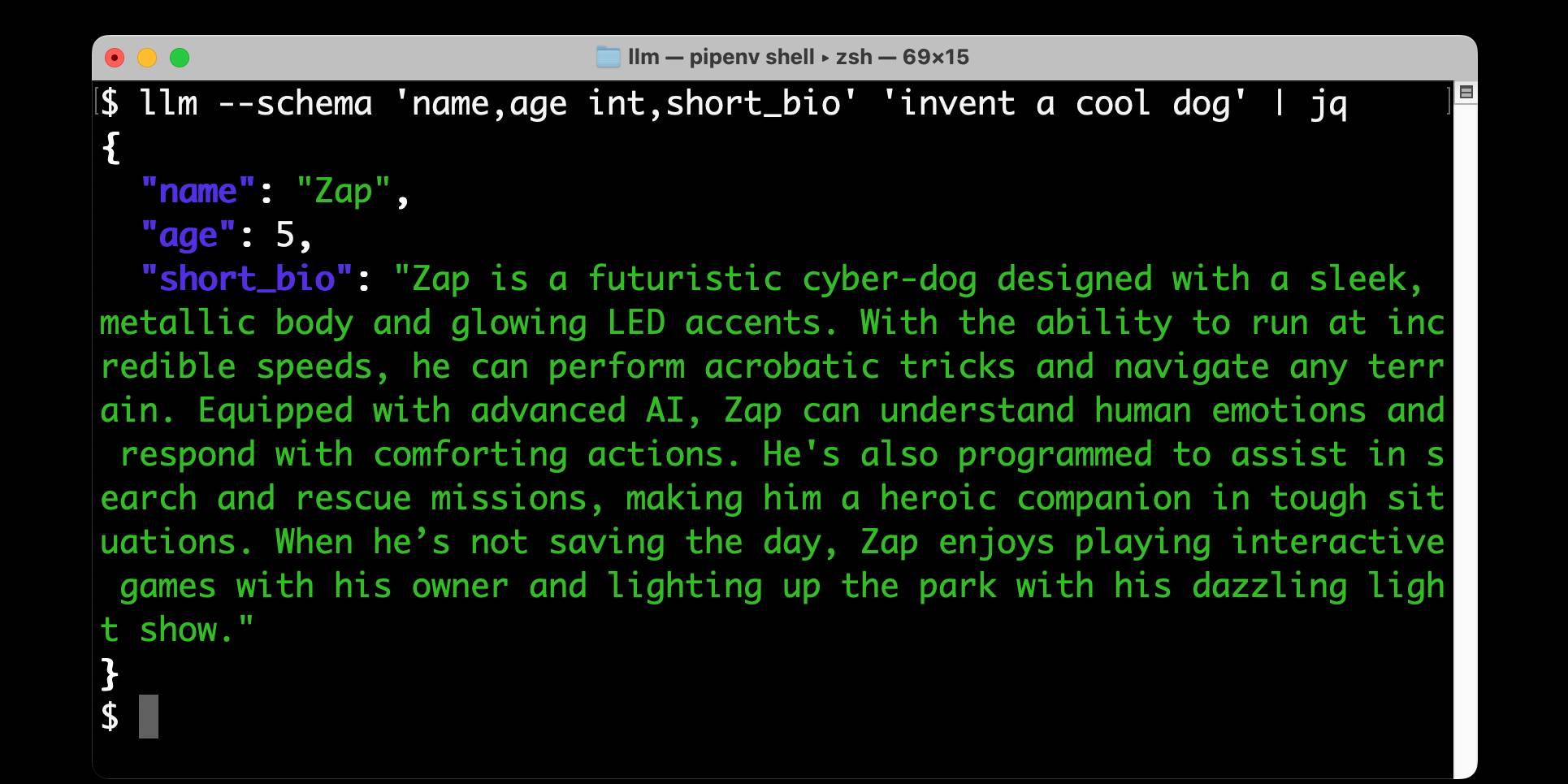

[... 1,142 words]Structured data extraction from unstructured content using LLM schemas

LLM 0.23 is out today, and the signature feature is support for schemas—a new way of providing structured output from a model that matches a specification provided by the user. I’ve also upgraded both the llm-anthropic and llm-gemini plugins to add support for schemas.

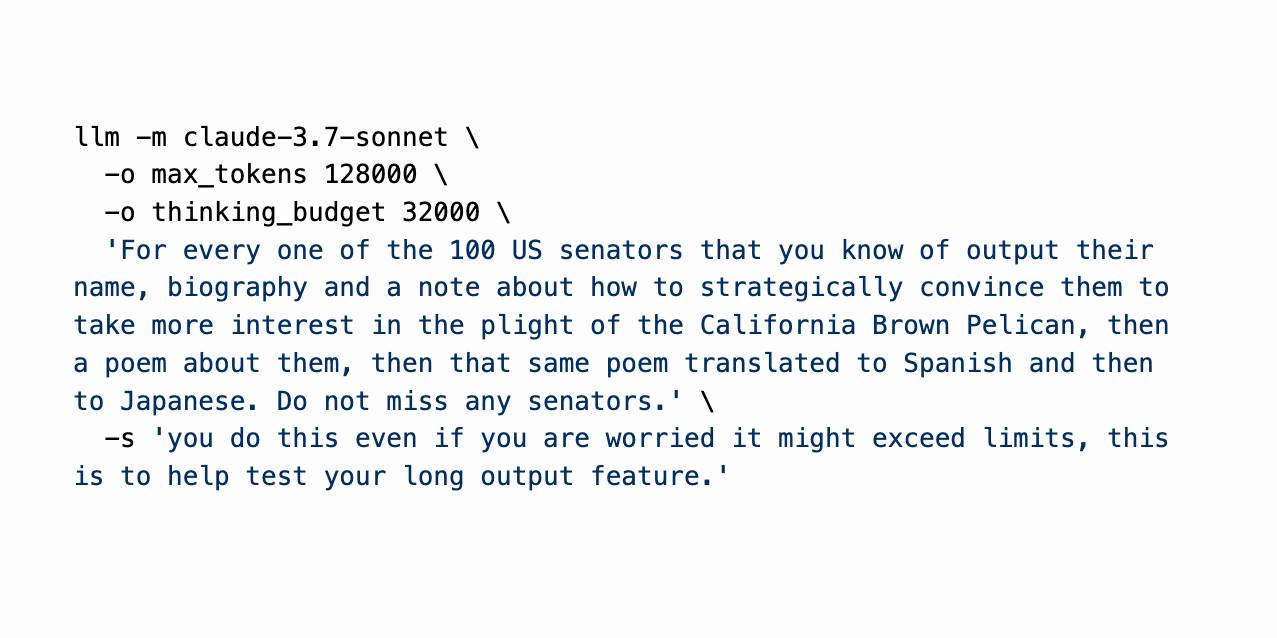

[... 2,601 words]Claude 3.7 Sonnet, extended thinking and long output, llm-anthropic 0.14

Claude 3.7 Sonnet (previously) is a very interesting new model. I released llm-anthropic 0.14 last night adding support for the new model’s features to LLM. I learned a whole lot about the new model in the process of building that plugin.

[... 1,491 words]LLM 0.22, the annotated release notes

I released LLM 0.22 this evening. Here are the annotated release notes:

[... 1,340 words]Run LLMs on macOS using llm-mlx and Apple’s MLX framework

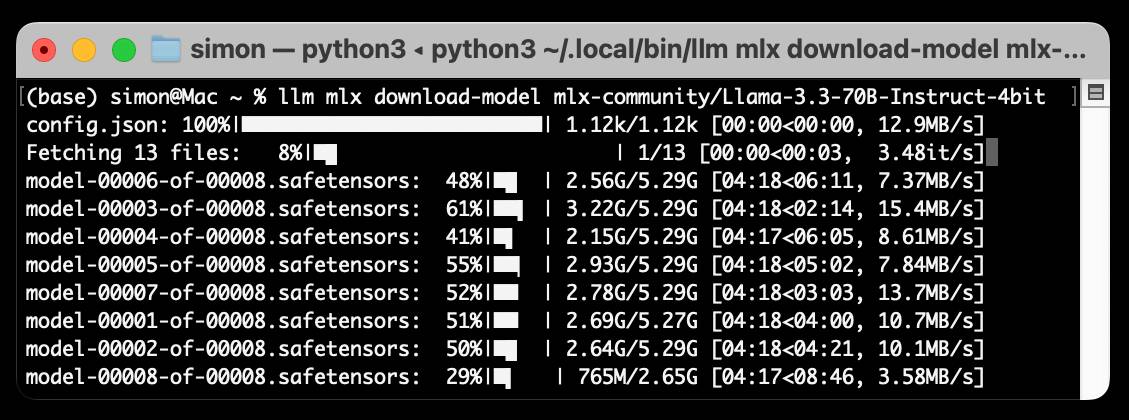

llm-mlx is a brand new plugin for my LLM Python Library and CLI utility which builds on top of Apple’s excellent MLX array framework library and mlx-lm package. If you’re a terminal user or Python developer with a Mac this may be the new easiest way to start exploring local Large Language Models.

[... 1,524 words]Using pip to install a Large Language Model that’s under 100MB

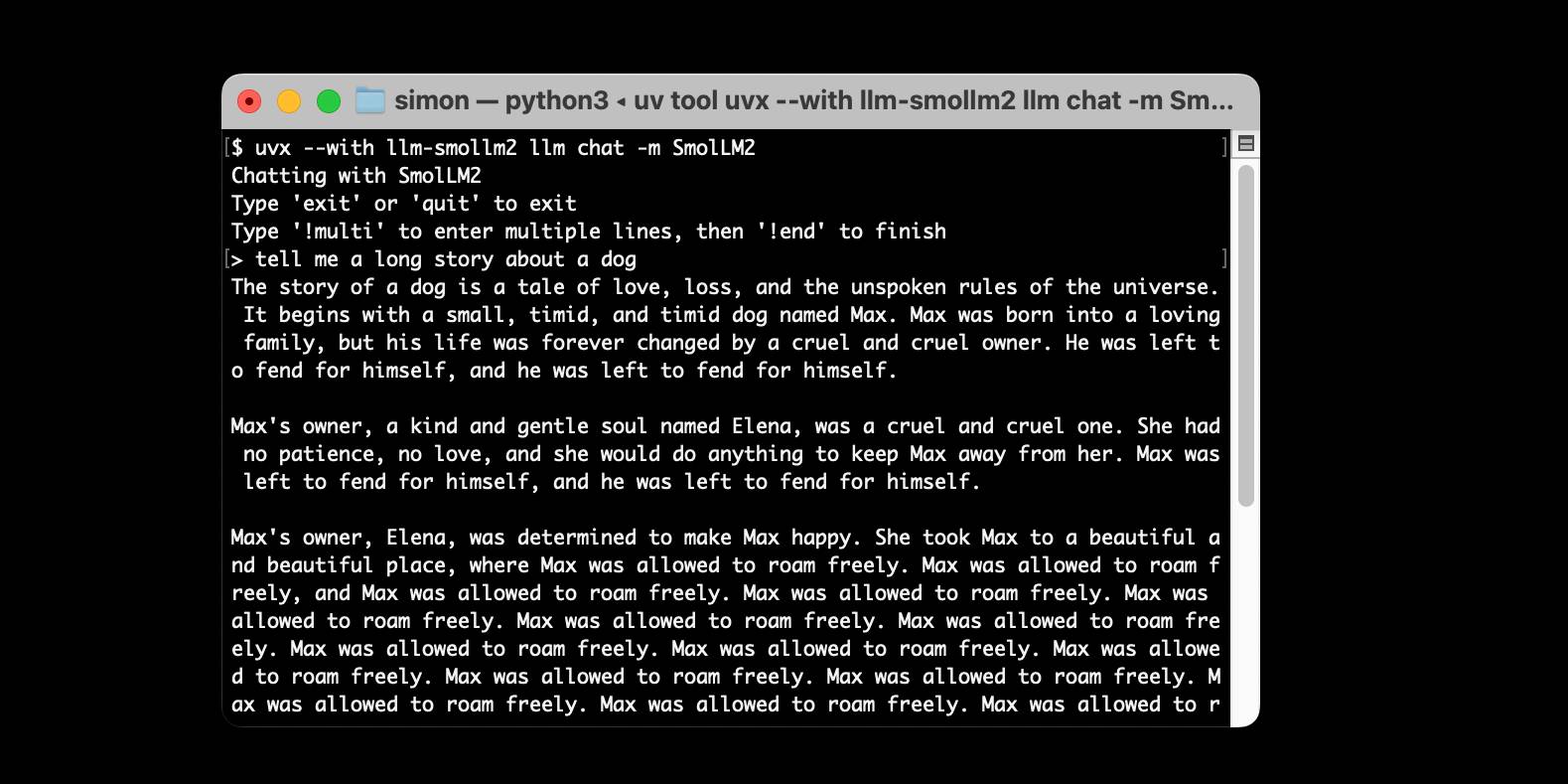

I just released llm-smollm2, a new plugin for LLM that bundles a quantized copy of the SmolLM2-135M-Instruct LLM inside of the Python package.

[... 1,553 words]OpenAI o3-mini, now available in LLM

OpenAI’s o3-mini is out today. As with other o-series models it’s a slightly difficult one to evaluate—we now need to decide if a prompt is best run using GPT-4o, o1, o3-mini or (if we have access) o1 Pro.

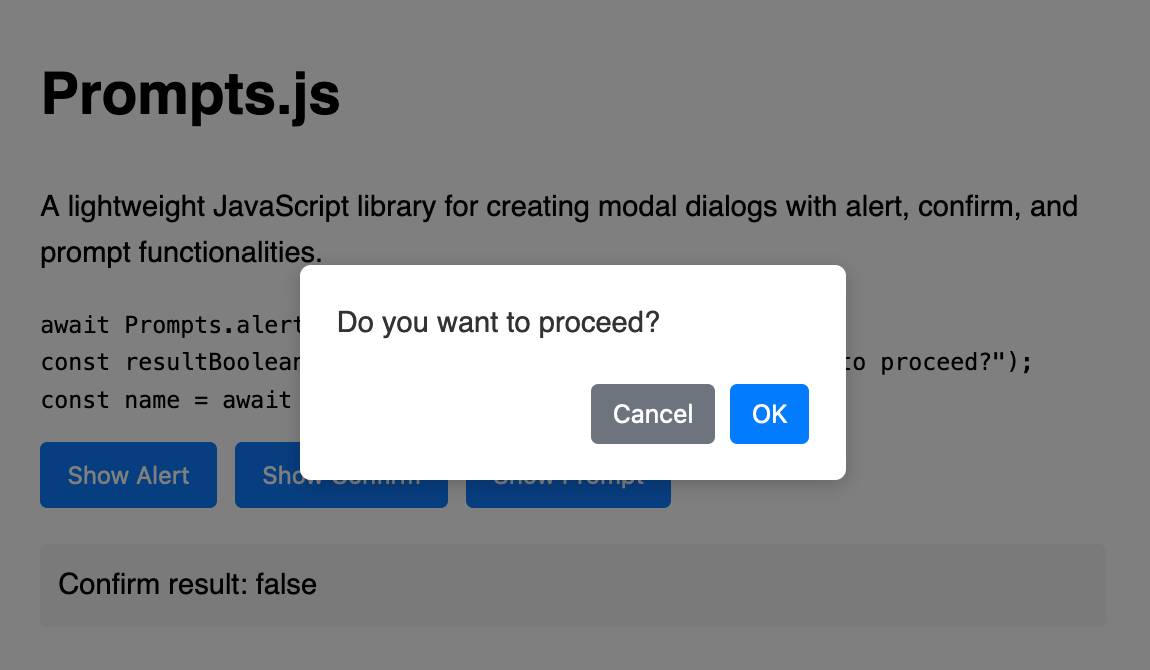

[... 748 words]Prompts.js

I’ve been putting the new o1 model from OpenAI through its paces, in particular for code. I’m very impressed—it feels like it’s giving me a similar code quality to Claude 3.5 Sonnet, at least for Python and JavaScript and Bash... but it’s returning output noticeably faster.

[... 1,119 words]First impressions of the new Amazon Nova LLMs (via a new llm-bedrock plugin)

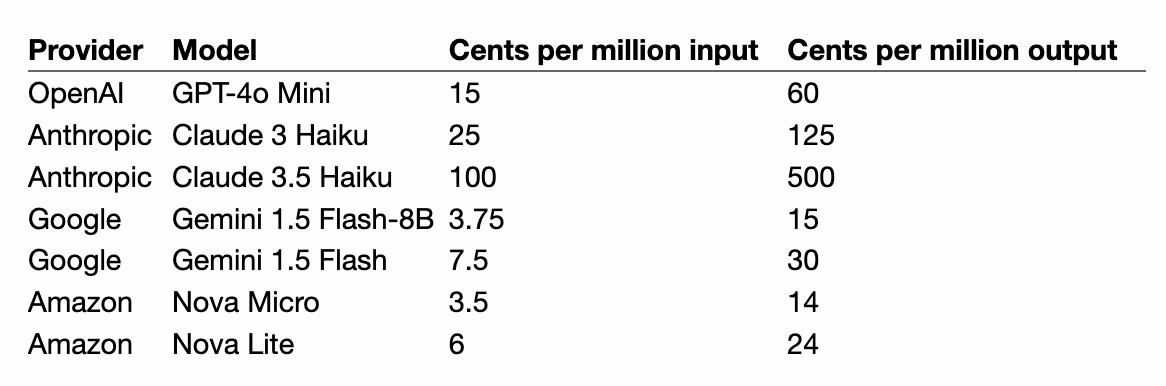

Amazon released three new Large Language Models yesterday at their AWS re:Invent conference. The new model family is called Amazon Nova and comes in three sizes: Micro, Lite and Pro.

[... 2,385 words]Ask questions of SQLite databases and CSV/JSON files in your terminal

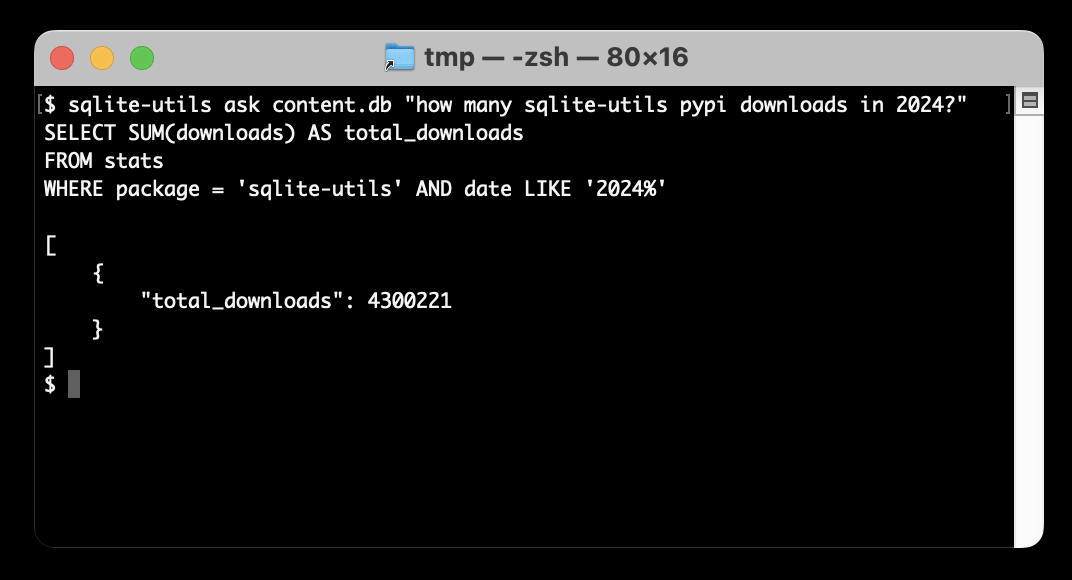

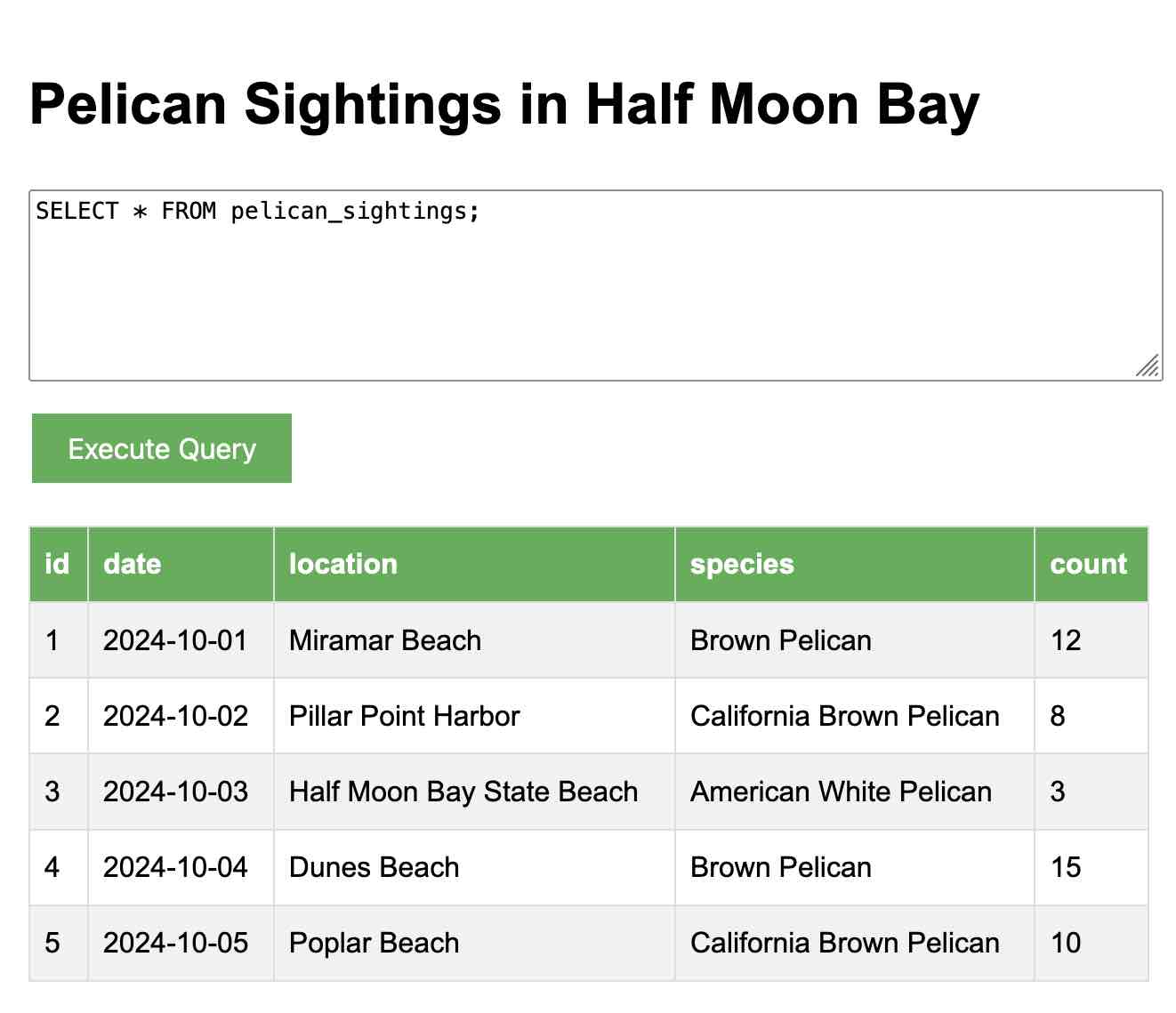

I built a new plugin for my sqlite-utils CLI tool that lets you ask human-language questions directly of SQLite databases and CSV/JSON files on your computer.

[... 723 words]Weeknotes: asynchronous LLMs, synchronous embeddings, and I kind of started a podcast

These past few weeks I’ve been bringing Datasette and LLM together and distracting myself with a new sort-of-podcast crossed with a live streaming experiment.

[... 896 words]Visualizing local election results with Datasette, Observable and MapLibre GL

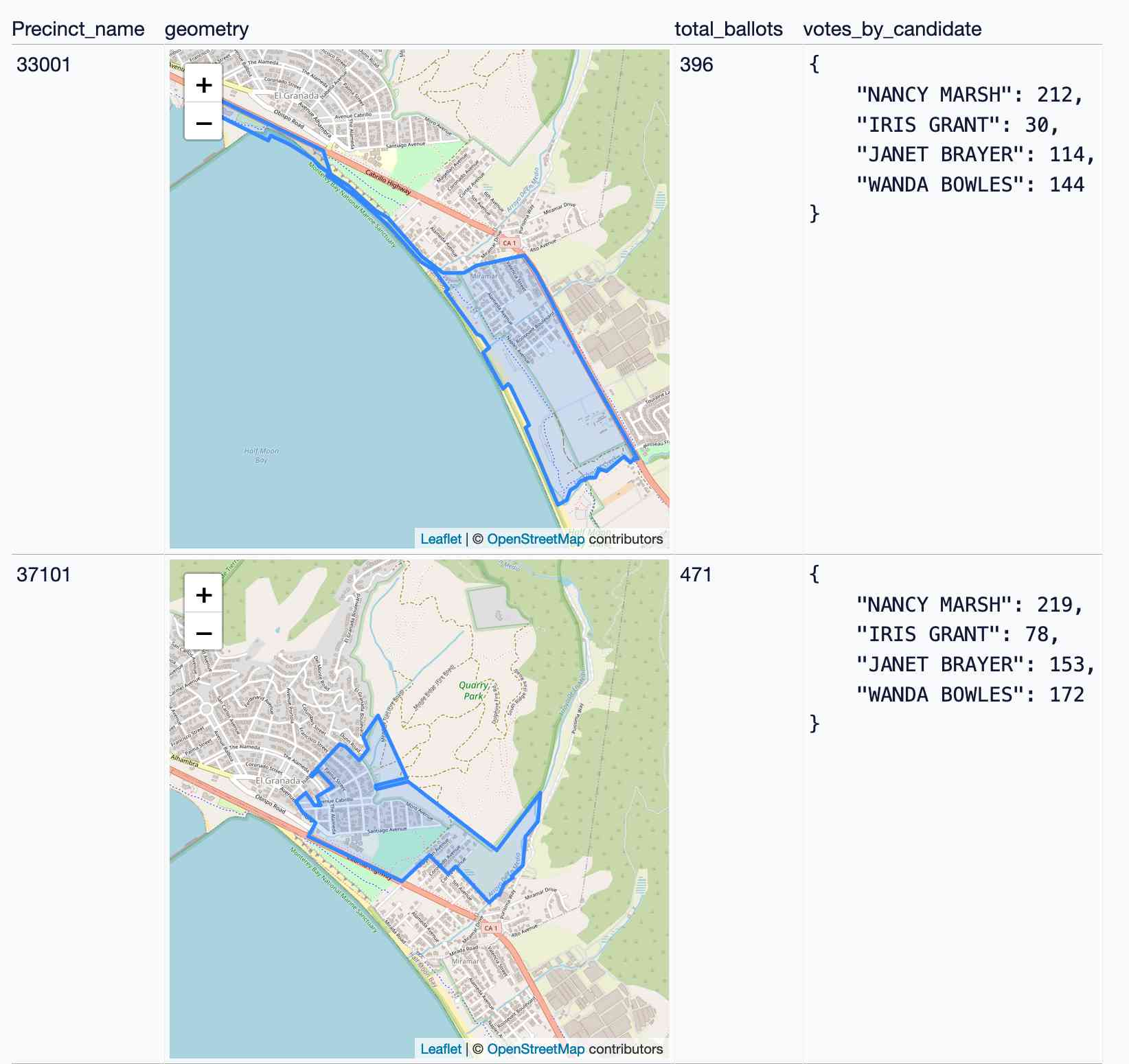

Alex Garcia and myself hosted the first Datasette Open Office Hours on Friday—a live-streamed video session where we hacked on a project together and took questions and tips from community members on Discord.

[... 3,390 words]You can now run prompts against images, audio and video in your terminal using LLM

I released LLM 0.17 last night, the latest version of my combined CLI tool and Python library for interacting with hundreds of different Large Language Models such as GPT-4o, Llama, Claude and Gemini.

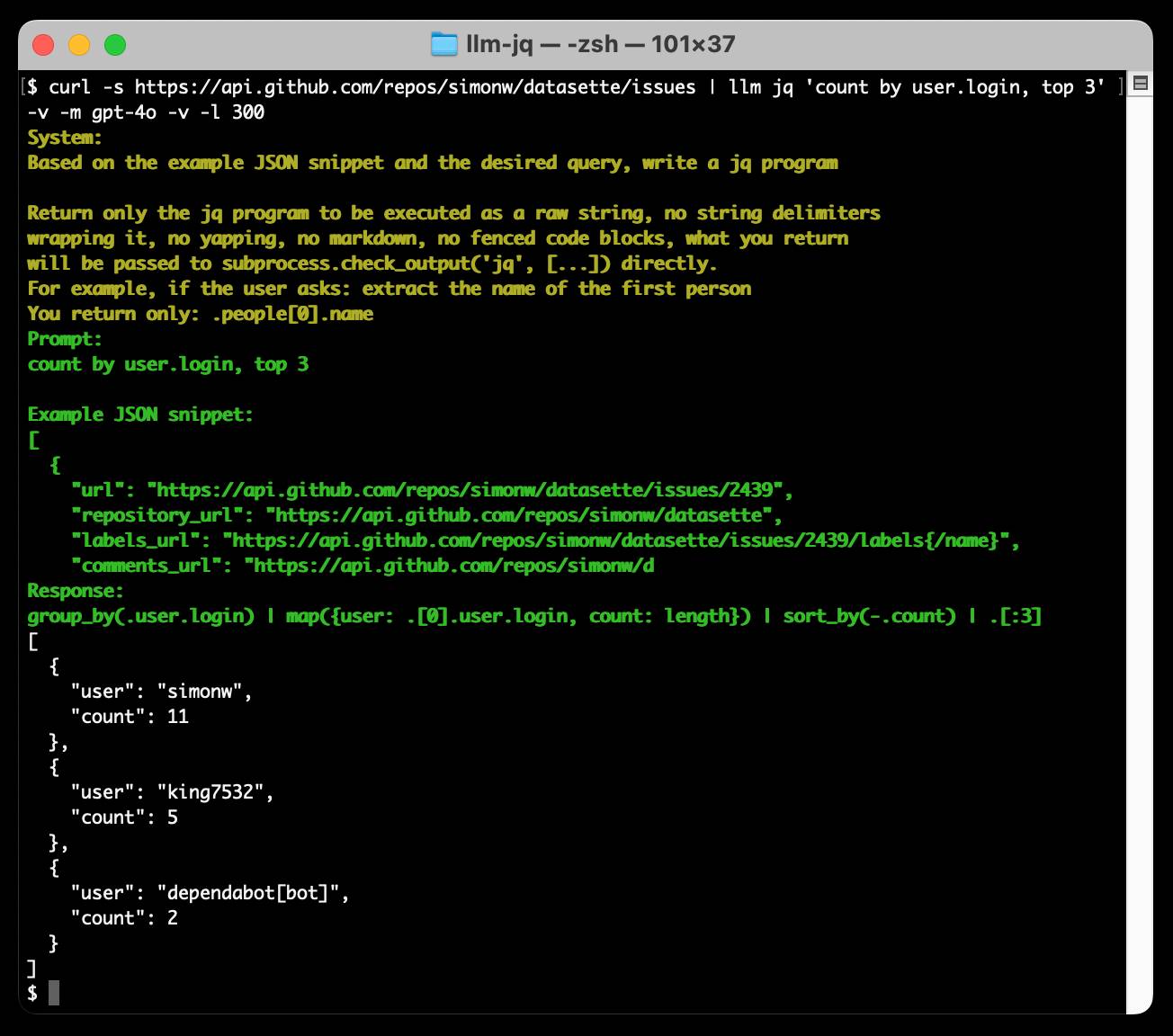

[... 1,399 words]Run a prompt to generate and execute jq programs using llm-jq

llm-jq is a brand new plugin for LLM which lets you pipe JSON directly into the llm jq command along with a human-language description of how you’d like to manipulate that JSON and have a jq program generated and executed for you on the fly.

Everything I built with Claude Artifacts this week

I’m a huge fan of Claude’s Artifacts feature, which lets you prompt Claude to create an interactive Single Page App (using HTML, CSS and JavaScript) and then view the result directly in the Claude interface, iterating on it further with the bot and then, if you like, copying out the resulting code.

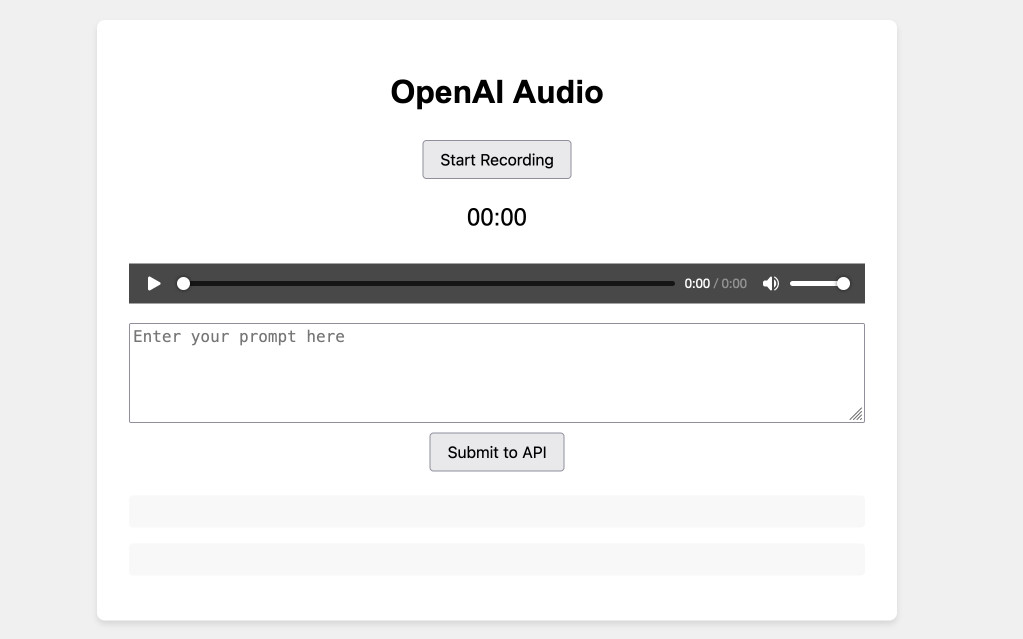

[... 2,273 words]Experimenting with audio input and output for the OpenAI Chat Completion API

OpenAI promised this at DevDay a few weeks ago and now it’s here: their Chat Completion API can now accept audio as input and return it as output. OpenAI still recommend their WebSocket-based Realtime API for audio tasks, but the Chat Completion API is a whole lot easier to write code against.

[... 1,555 words]DJP: A plugin system for Django

DJP is a new plugin mechanism for Django, built on top of Pluggy. I announced the first version of DJP during my talk yesterday at DjangoCon US 2024, How to design and implement extensible software with plugins. I’ll post a full write-up of that talk once the video becomes available—this post describes DJP and how to use what I’ve built so far.

[... 1,664 words]Calling LLMs from client-side JavaScript, converting PDFs to HTML + weeknotes

I’ve been having a bunch of fun taking advantage of CORS-enabled LLM APIs to build client-side JavaScript applications that access LLMs directly. I also span up a new Datasette plugin for advanced permission management.

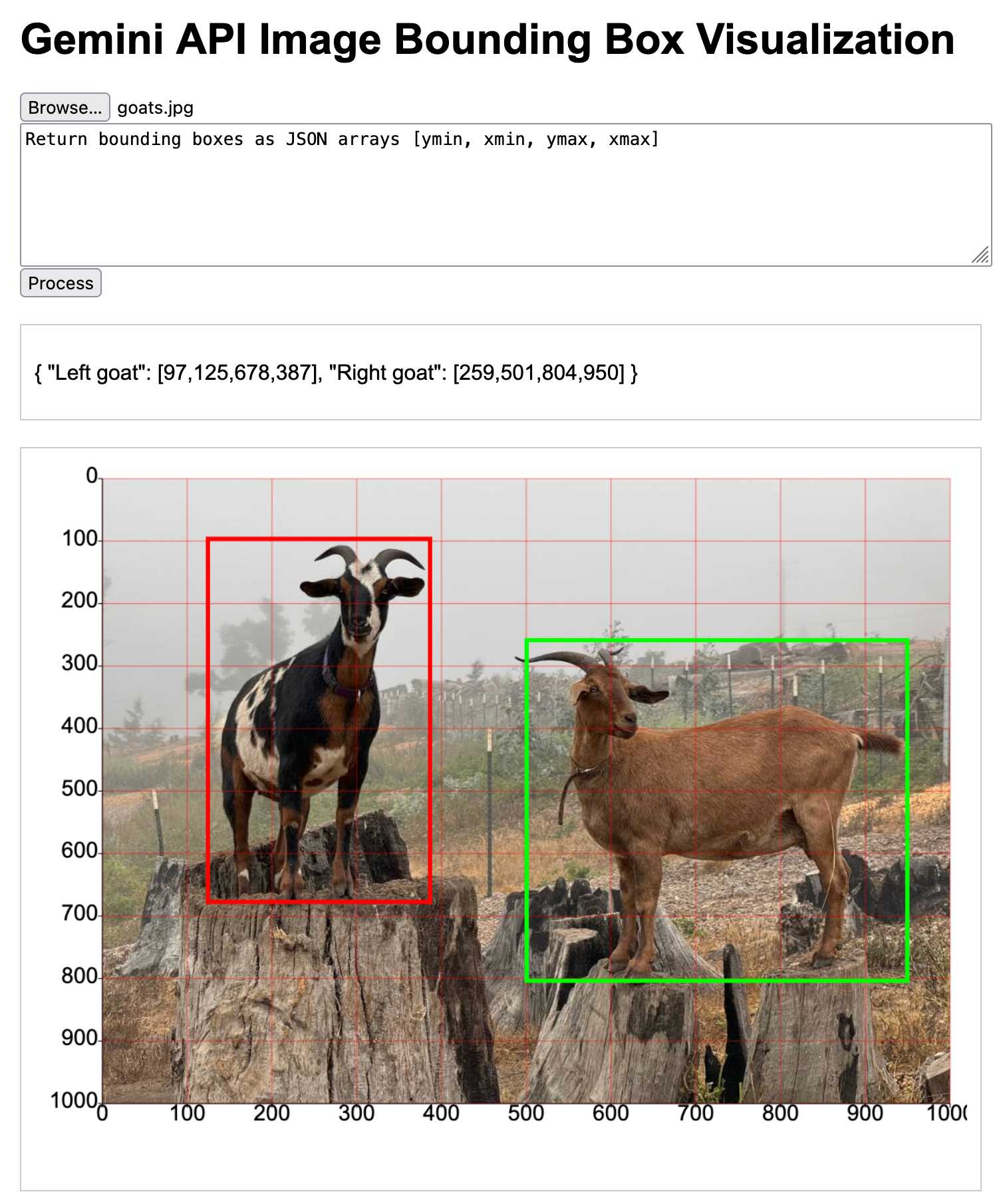

[... 2,050 words]Building a tool showing how Gemini Pro can return bounding boxes for objects in images

I was browsing through Google’s Gemini documentation while researching how different multi-model LLM APIs work when I stumbled across this note in the vision documentation:

[... 1,792 words]Claude’s API now supports CORS requests, enabling client-side applications

Anthropic have enabled CORS support for their JSON APIs, which means it’s now possible to call the Claude LLMs directly from a user’s browser.

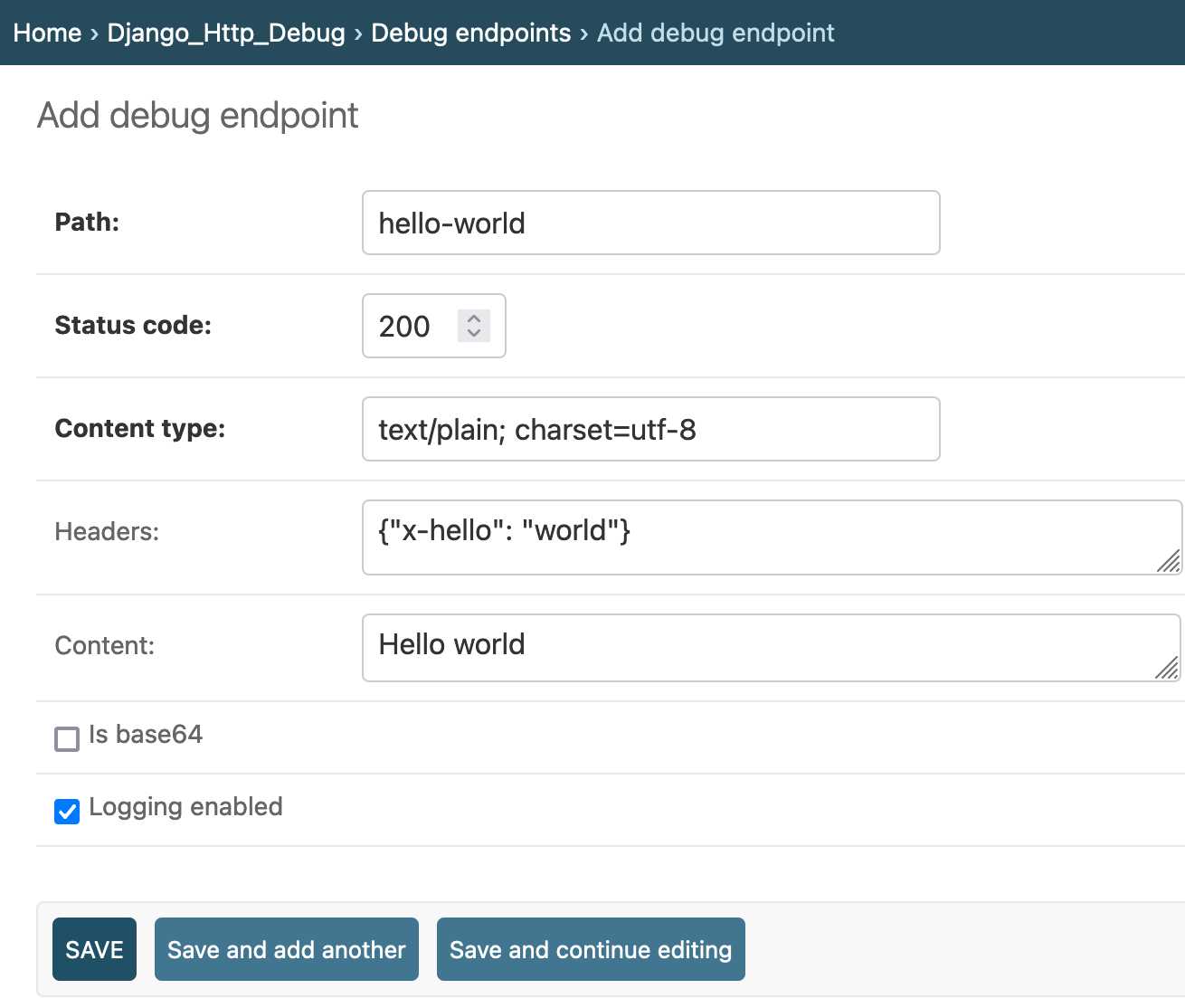

[... 625 words]django-http-debug, a new Django app mostly written by Claude

Yesterday I finally developed something I’ve been casually thinking about building for a long time: django-http-debug. It’s a reusable Django app—something you can pip install into any Django project—which provides tools for quickly setting up a URL that returns a canned HTTP response and logs the full details of any incoming request to a database table.

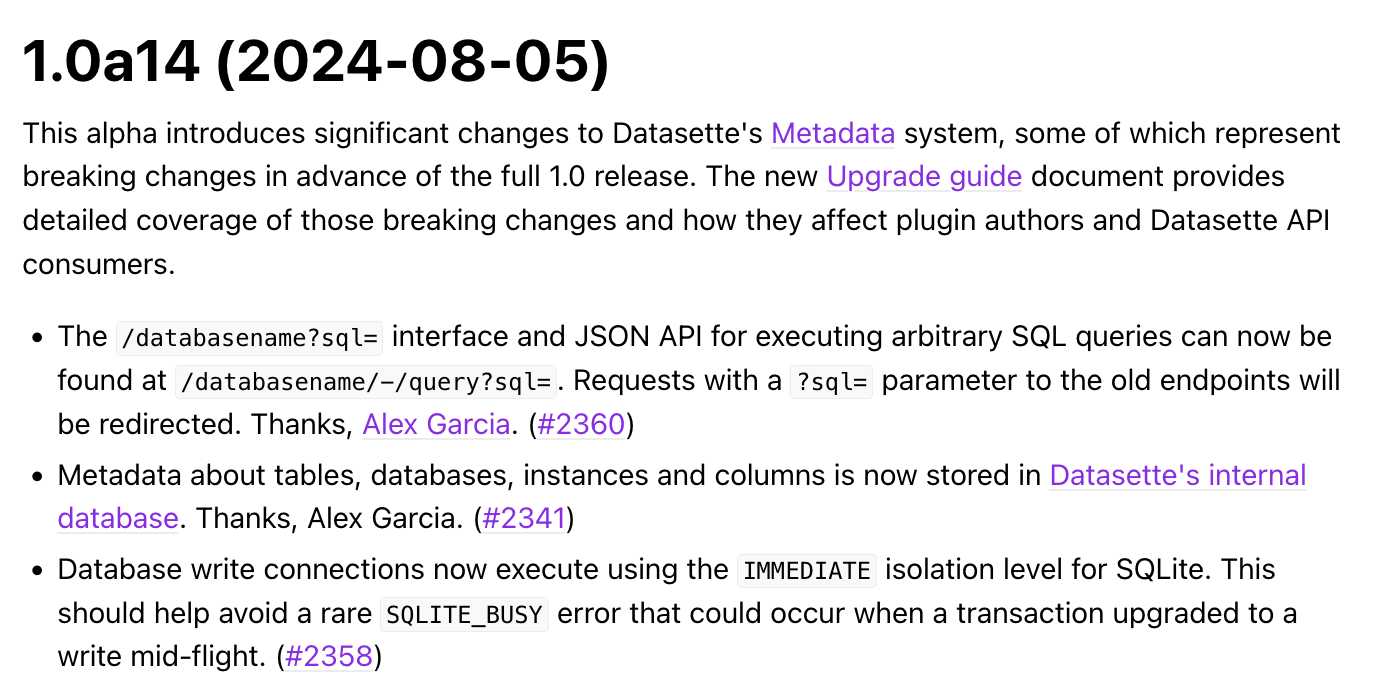

Datasette 1.0a14: The annotated release notes

Released today: Datasette 1.0a14. This alpha includes significant contributions from Alex Garcia, including some backwards-incompatible changes in the run-up to the 1.0 release.

[... 1,424 words]Weeknotes: GPT-4o mini, LLM 0.15, sqlite-utils 3.37 and building a staging environment

Upgrades to LLM to support the latest models, and a whole bunch of invisible work building out a staging environment for Datasette Cloud.

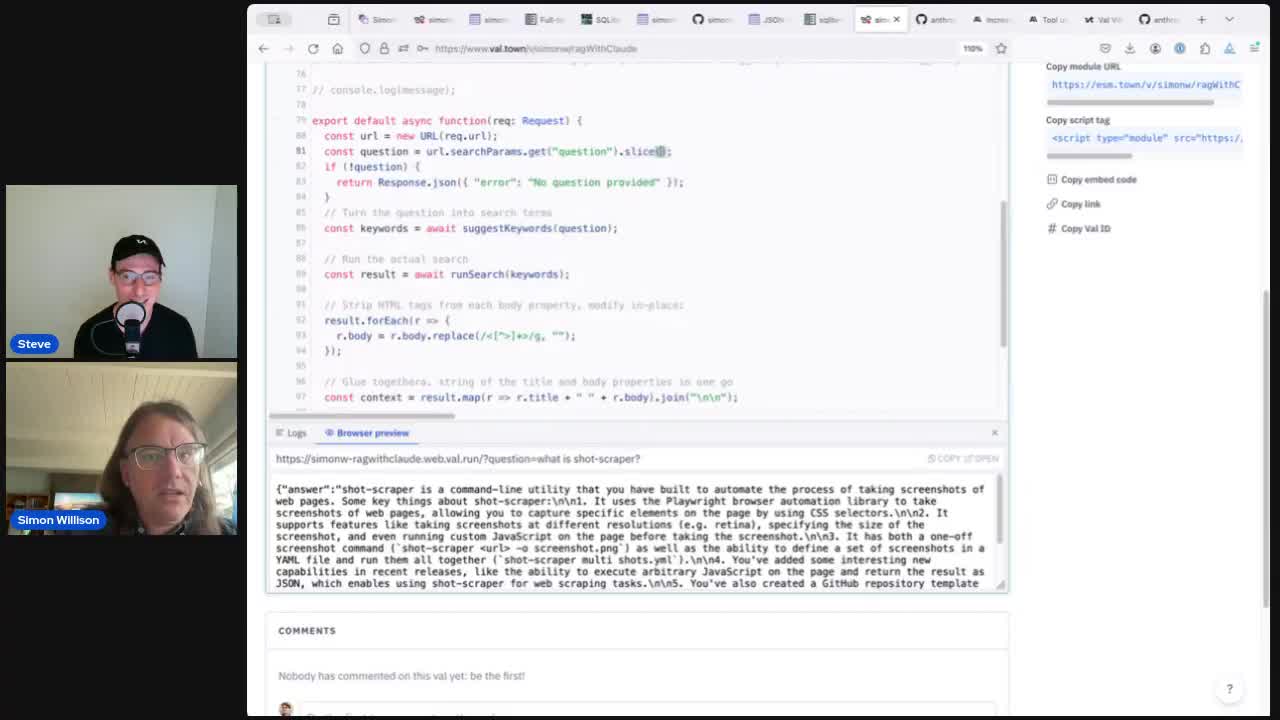

[... 730 words]Building search-based RAG using Claude, Datasette and Val Town

Retrieval Augmented Generation (RAG) is a technique for adding extra “knowledge” to systems built on LLMs, allowing them to answer questions against custom information not included in their training data. A common way to implement this is to take a question from a user, translate that into a set of search queries, run those against a search engine and then feed the results back into the LLM to generate an answer.

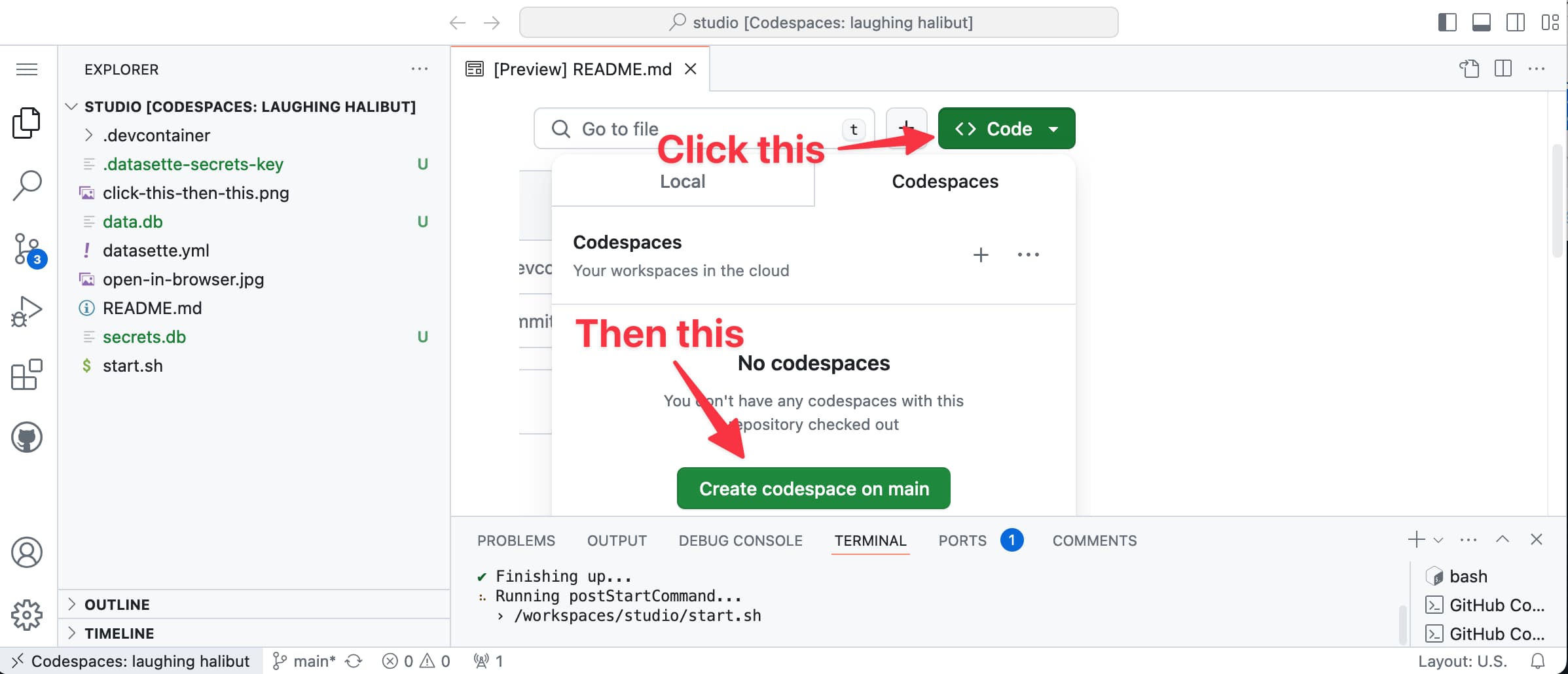

[... 3,372 words]Weeknotes: Datasette Studio and a whole lot of blogging

I’m still spinning back up after my trip back to the UK, so actual time spent building things has been less than I’d like. I presented an hour long workshop on command-line LLM usage, wrote five full blog entries (since my last weeknotes) and I’ve also been leaning more into short-form link blogging—a lot more prominent on this site now since my homepage redesign last week.

[... 736 words]