742 posts tagged “javascript”

2026

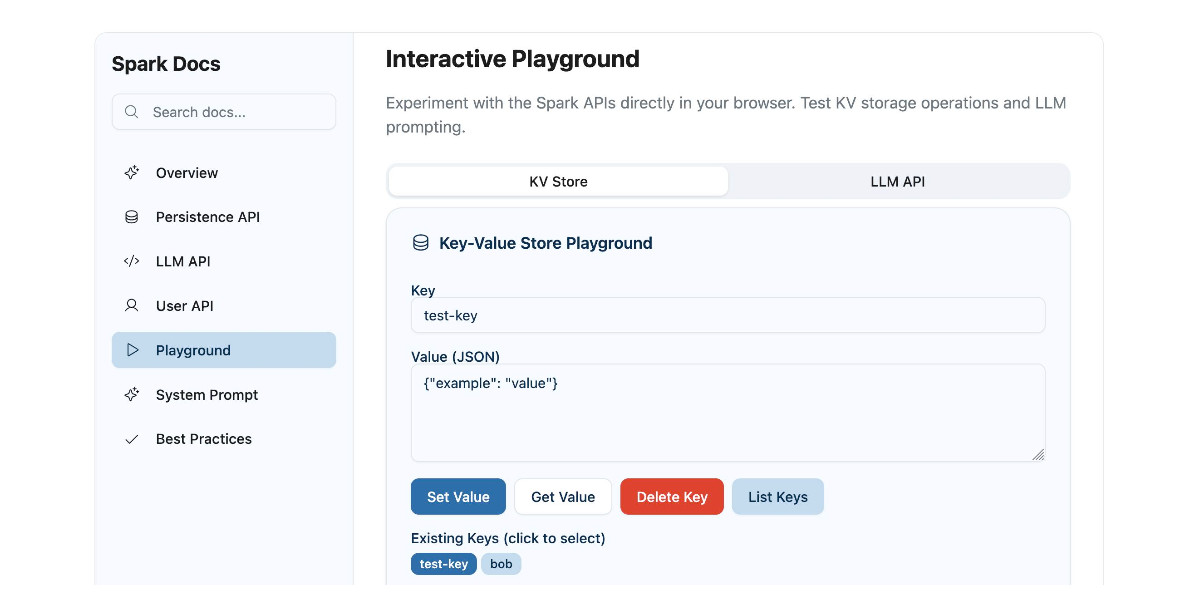

Gwtar: a static efficient single-file HTML format (via) Fascinating new project from Gwern Branwen and Said Achmiz that targets the challenge of combining large numbers of assets into a single archived HTML file without that file being inconvenient to view in a browser.

The key trick it uses is to fire window.stop() early in the page to prevent the browser from downloading the whole thing, then follow that call with inline uncompressed tar content.

It can then make HTTP range requests to fetch content from that tar data on-demand when it is needed by the page.

The JavaScript that has already loaded rewrites asset URLs to point to https://localhost/ purely so that they will fail to load. Then it uses a PerformanceObserver to catch those attempted loads:

let perfObserver = new PerformanceObserver((entryList, observer) => {

resourceURLStringsHandler(entryList.getEntries().map(entry => entry.name));

});

perfObserver.observe({ entryTypes: [ "resource" ] });

That resourceURLStringsHandler callback finds the resource if it is already loaded or fetches it with an HTTP range request otherwise and then inserts the resource in the right place using a blob: URL.
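Here's a minimal sketch of the range-request-plus-blob: URL idea, not Gwtar's actual code. The helper names are mine, and it assumes you already know the byte offset, length and MIME type of an asset inside the archive file (Gwtar's real bookkeeping may well differ):

```javascript
// Build an HTTP Range header value for `length` bytes starting at `offset`.
// Byte ranges are inclusive at both ends, hence the "- 1".
function rangeHeader(offset, length) {
  return `bytes=${offset}-${offset + length - 1}`;
}

// Hypothetical helper: fetch one asset out of a larger archive file and
// return a blob: URL that can be assigned to an img.src or similar.
async function assetBlobURL(archiveURL, offset, length, mimeType) {
  const response = await fetch(archiveURL, {
    headers: { Range: rangeHeader(offset, length) },
  });
  // A server that honours the range replies with 206 Partial Content.
  if (response.status !== 206) {
    throw new Error(`Expected 206 Partial Content, got ${response.status}`);
  }
  const bytes = await response.arrayBuffer();
  return URL.createObjectURL(new Blob([bytes], { type: mimeType }));
}
```

This only works if the server hosting the file supports range requests, which fits with the format not working when opened directly from disk.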

Here's what the window.stop() portion of the document looks like if you view the source:

Amusingly for an archive format it doesn't actually work if you open the file directly on your own computer. Here's what you see if you try to do that:

You are seeing this message, instead of the page you should be seeing, because gwtar files cannot be opened locally (due to web browser security restrictions).

To open this page on your computer, use the following shell command:

perl -ne'print $_ if $x; $x=1 if /<!-- GWTAR END/' < foo.gwtar.html | tar --extract

Then open the file foo.html in any web browser.

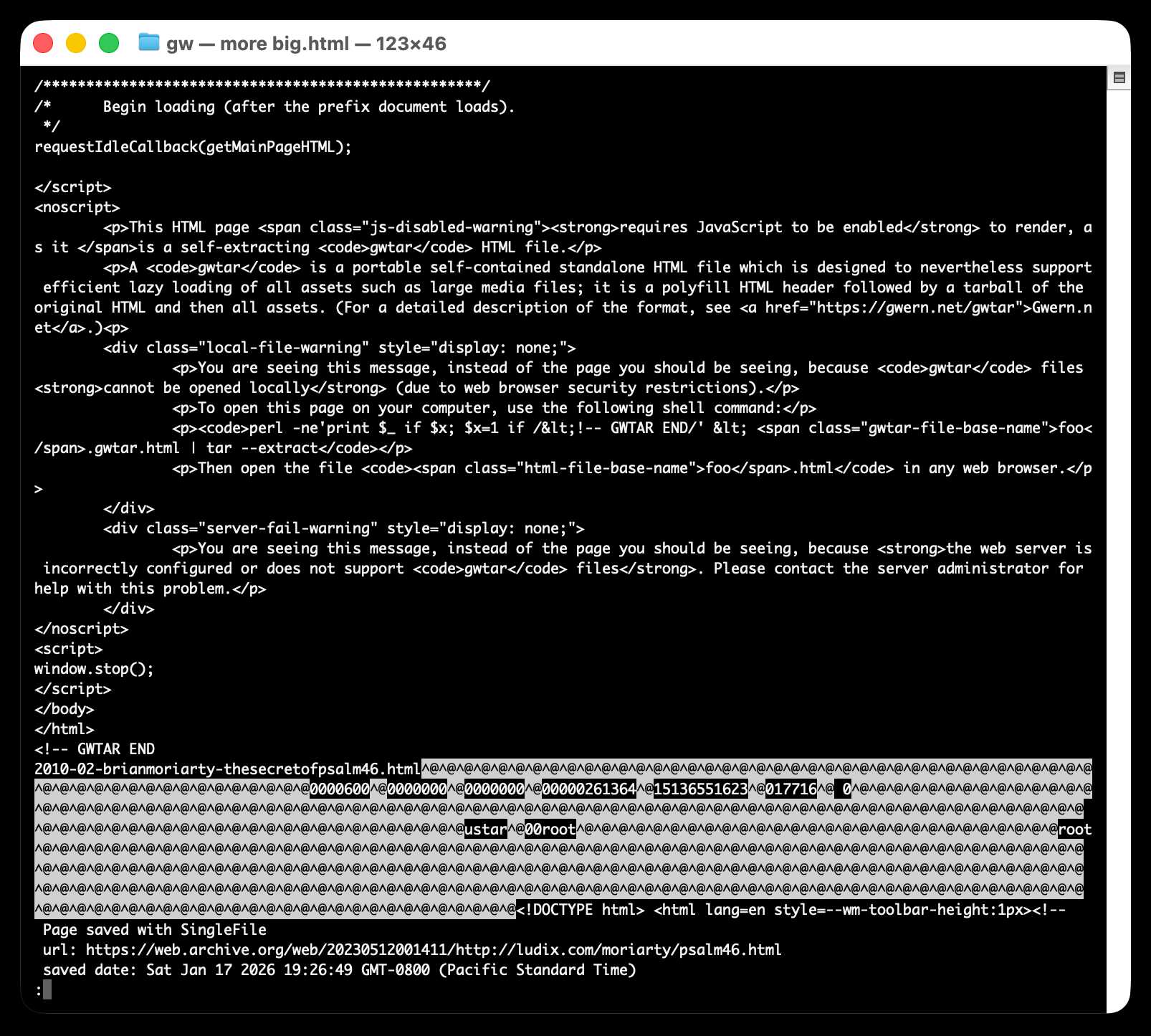

Launching Interop 2026. Jake Archibald reports on Interop 2026, the initiative between Apple, Google, Igalia, Microsoft, and Mozilla to collaborate on ensuring a targeted set of web platform features reach cross-browser parity over the course of the year.

I hadn't realized how influential and successful the Interop series has been. It started back in 2021 as Compat 2021 before being rebranded to Interop in 2022.

The dashboards for each year can be seen here, and they demonstrate how wildly effective the program has been: 2021, 2022, 2023, 2024, 2025, 2026.

Here's the progress chart for 2025, which shows every browser vendor racing towards a 95%+ score by the end of the year:

The feature I'm most excited about in 2026 is Cross-document View Transitions, building on the successful 2025 target of Same-Document View Transitions. This will provide fancy SPA-style transitions between pages on websites with no JavaScript at all.

As a keen WebAssembly tinkerer I'm also intrigued by this one:

JavaScript Promise Integration for Wasm allows WebAssembly to asynchronously 'suspend', waiting on the result of an external promise. This simplifies the compilation of languages like C/C++ which expect APIs to run synchronously.

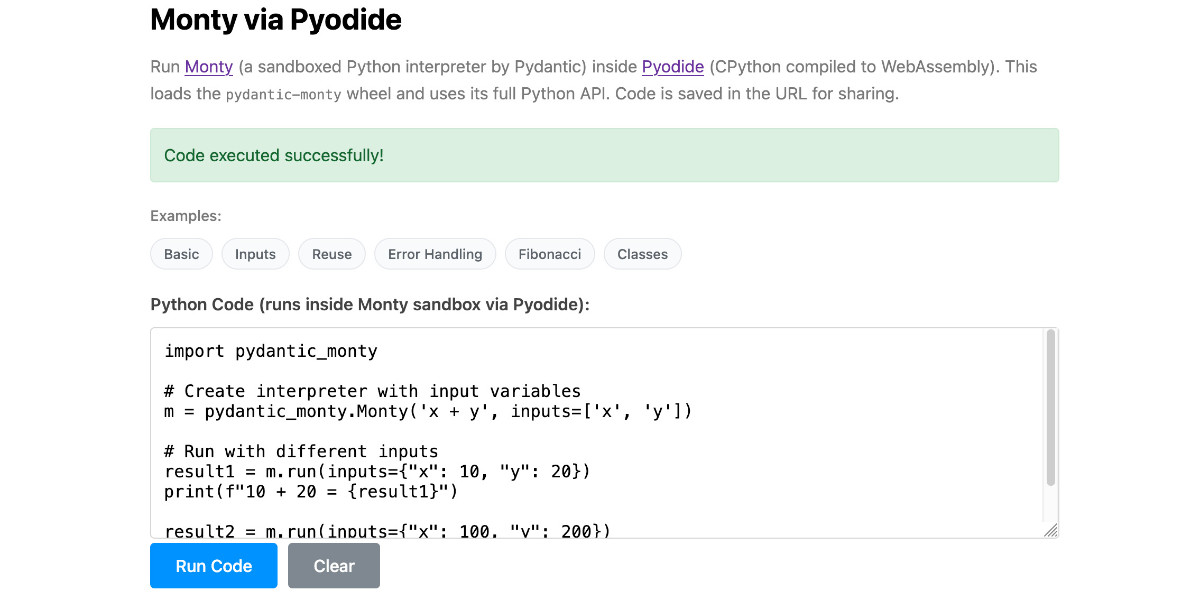

Running Pydantic’s Monty Rust sandboxed Python subset in WebAssembly

There’s a jargon-filled headline for you! Everyone’s building sandboxes for running untrusted code right now, and Pydantic’s latest attempt, Monty, provides a custom Python-like language (a subset of Python) in Rust and makes it available as both a Rust library and a Python package. I got it working in WebAssembly, providing a sandbox-in-a-sandbox.

[... 854 words]

Adding dynamic features to an aggressively cached website

My blog uses aggressive caching: it sits behind Cloudflare with a 15 minute cache header, which guarantees it can survive even the largest traffic spike to any given page. I’ve recently added a couple of dynamic features that work in spite of that full-page caching. Here’s how those work.

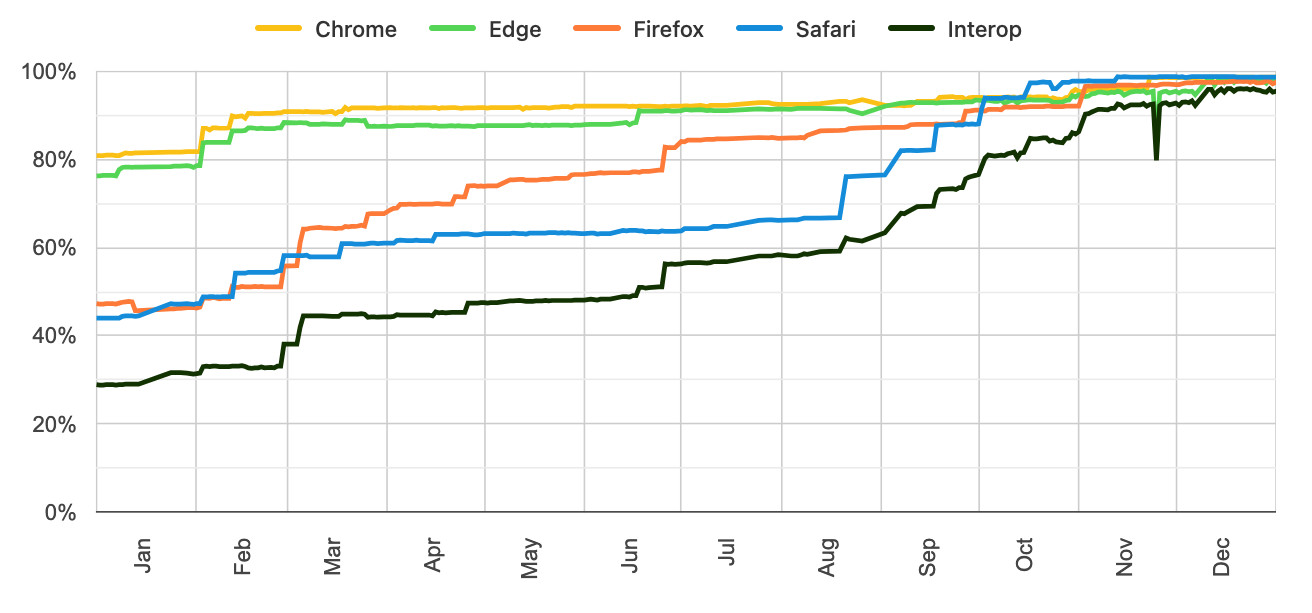

[... 1,145 words]

the browser is the sandbox. Paul Kinlan is a web platform developer advocate at Google and recently turned his attention to coding agents. He quickly identified the importance of a robust sandbox for agents to operate in and put together these detailed notes on how the web browser can help:

This got me thinking about the browser. Over the last 30 years, we have built a sandbox specifically designed to run incredibly hostile, untrusted code from anywhere on the web, the instant a user taps a URL. [...]

Could you build something like Cowork in the browser? Maybe. To find out, I built a demo called Co-do that tests this hypothesis. In this post I want to discuss the research I've done to see how far we can get, and determine if the browser's ability to run untrusted code is useful (and good enough) for enabling software to do more for us directly on our computer.

Paul then describes how the three key aspects of a sandbox - filesystem, network access and safe code execution - can be handled by browser technologies: the File System Access API (still Chrome-only as far as I can tell), CSP headers with <iframe sandbox> and WebAssembly in Web Workers.

Co-do is a very interesting demo that illustrates all of these ideas in a single application:

You select a folder full of files, configure an LLM provider and set an API key. Co-do then uses CSP-approved API calls to interact with that provider and provides a chat interface with tools for interacting with those files. It does indeed feel similar to Claude Cowork but without running a multi-GB local container to provide the sandbox.

My biggest complaint about <iframe sandbox> remains how thinly documented it is, especially across different browsers. Paul's post has all sorts of useful details on that which I've not encountered elsewhere, including a complex double-iframe technique to help apply network rules to the inner of the two frames.

Thanks to this post I also learned about the <input type="file" webkitdirectory> tag which turns out to work on Firefox, Safari and Chrome and allows a browser read-only access to a full directory of files at once. I had Claude knock up a webkitdirectory demo to try it out and I'll certainly be using it for projects in the future.

Introducing gisthost.github.io

I am a huge fan of gistpreview.github.io, the site by Leon Huang that lets you append ?GIST_id to see a browser-rendered version of an HTML page that you have saved to a Gist. The last commit was ten years ago and I needed a couple of small changes so I’ve forked it and deployed an updated version at gisthost.github.io.

2025

textarea.my on GitHub (via) Anton Medvedev built textarea.my, which he describes as:

A minimalist text editor that lives entirely in your browser and stores everything in the URL hash.

It's ~160 lines of HTML, CSS and JavaScript and it's worth reading the whole thing. I picked up a bunch of neat tricks from this!

- <article contenteditable="plaintext-only"> - I did not know about the plaintext-only value, supported across all the modern browsers.

- It uses new CompressionStream('deflate-raw') to compress the editor state so it can fit in a shorter fragment URL.

- It has a neat custom save option which triggers if you hit ((e.metaKey || e.ctrlKey) && e.key === 's') - on browsers that support it (mainly Chrome variants) this uses window.showSaveFilePicker(), other browsers get a straight download - in both cases generated using URL.createObjectURL(new Blob([html], {type: 'text/html'}))
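The CompressionStream trick is worth a closer look. This is a rough sketch of how text could be squeezed into a URL fragment and read back again. The base64url encoding step and all of the function names are my assumptions; I haven't checked how textarea.my encodes its compressed bytes:

```javascript
// Encode bytes as URL-safe base64 (assumed encoding, see note above).
function toBase64Url(bytes) {
  let binary = '';
  for (const byte of bytes) binary += String.fromCharCode(byte);
  return btoa(binary).replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '');
}

// Reverse of toBase64Url: restore the standard alphabet and padding first.
function fromBase64Url(text) {
  const base64 = text.replace(/-/g, '+').replace(/_/g, '/');
  const padded = base64.padEnd(Math.ceil(base64.length / 4) * 4, '=');
  return Uint8Array.from(atob(padded), (ch) => ch.charCodeAt(0));
}

// Compress a string with raw DEFLATE and return a fragment-friendly string.
async function compressToFragment(text) {
  const stream = new Blob([text]).stream()
    .pipeThrough(new CompressionStream('deflate-raw'));
  const buffer = await new Response(stream).arrayBuffer();
  return toBase64Url(new Uint8Array(buffer));
}

// Turn a fragment produced by compressToFragment back into the original text.
async function decompressFromFragment(fragment) {
  const stream = new Blob([fromBase64Url(fragment)]).stream()
    .pipeThrough(new DecompressionStream('deflate-raw'));
  return new Response(stream).text();
}
```

In a page you might then do something like location.hash = await compressToFragment(editorText), and call decompressFromFragment(location.hash.slice(1)) on load.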

The debounce() function it uses deserves a special note:

function debounce(ms, fn) {

let timer;

return (...args) => {

clearTimeout(timer);

timer = setTimeout(() => fn(...args), ms);

};

}

That's really elegant. The goal of debounce(ms, fn) is to take a function and a timeout (e.g. 100ms) and ensure that the function only runs once calls to it have stopped arriving for that long.

This one works using a closure variable timer to capture the setTimeout timer ID. On subsequent calls that timer is cancelled and a new one is created - so if you call the function five times in quick succession it will execute just once, 100ms after the last of that sequence of calls.
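Here's a small demonstration of that five-calls-in-a-row behaviour. The debounce function is the one quoted above; the calls array and the record name are just scaffolding I added for illustration:

```javascript
function debounce(ms, fn) {
  let timer;
  return (...args) => {
    clearTimeout(timer);
    timer = setTimeout(() => fn(...args), ms);
  };
}

const calls = [];
const record = debounce(100, (value) => calls.push(value));

// Five calls in quick succession: each one cancels the previous timer.
for (let i = 1; i <= 5; i++) {
  record(i);
}

// Nothing has run yet at this point. Roughly 100ms from now the wrapped
// function runs exactly once, with the arguments from the final call.
setTimeout(() => console.log(calls), 150); // logs an array containing only 5
```

Note that the earlier arguments (1 through 4) are simply discarded, which is usually what you want for things like saving editor state.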

If this [MicroQuickJS] had been available in 2010, Redis scripting would have been JavaScript and not Lua. Lua was chosen based on the implementation requirements, not on the language ones... (small, fast, ANSI-C). I appreciate certain ideas in Lua, and people love it, but I was never able to like Lua, because it departs from a more Algol-like syntax and semantics without good reasons, for my taste. This creates friction for newcomers. I love friction when it opens new useful ideas and abstractions that are worth it, if you learn SmallTalk or FORTH and for some time you are lost, it's part of how the languages are different. But I think for Lua this is not true enough: it feels like it departs from what people know without good reasons.

— Salvatore Sanfilippo, Hacker News comment on MicroQuickJS

MicroQuickJS. New project from programming legend Fabrice Bellard, of ffmpeg and QEMU and QuickJS and so much more fame:

MicroQuickJS (aka. MQuickJS) is a Javascript engine targetted at embedded systems. It compiles and runs Javascript programs with as low as 10 kB of RAM. The whole engine requires about 100 kB of ROM (ARM Thumb-2 code) including the C library. The speed is comparable to QuickJS.

It supports a subset of full JavaScript, though it looks like a rich and full-featured subset to me.

One of my ongoing interests is sandboxing: mechanisms for executing untrusted code - from end users or generated by LLMs - in an environment that restricts memory usage and applies a strict time limit and restricts file or network access. Could MicroQuickJS be useful in that context?

I fired up Claude Code for web (on my iPhone) and kicked off an asynchronous research project to explore that question:

My full prompt is here. It started like this:

Clone https://github.com/bellard/mquickjs to /tmp

Investigate this code as the basis for a safe sandboxing environment for running untrusted code such that it cannot exhaust memory or CPU or access files or the network

First try building python bindings for this using FFI - write a script that builds these by checking out the code to /tmp and building against that, to avoid copying the C code in this repo permanently. Write and execute tests with pytest to exercise it as a sandbox

Then build a "real" Python extension not using FFI and experiment with that

Then try compiling the C to WebAssembly and exercising it via both node.js and Deno, with a similar suite of tests [...]

I later added to the interactive session:

Does it have a regex engine that might allow a resource exhaustion attack from an expensive regex?

(The answer was no - the regex engine calls the interrupt handler even during pathological expression backtracking, meaning that any configured time limit should still hold.)

Here's the full transcript and the final report.

Some key observations:

- MicroQuickJS is very well suited to the sandbox problem. It has robust memory and time limits baked in, it doesn't expose any dangerous primitives like filesystem or network access and even has a regular expression engine that protects against exhaustion attacks (provided you configure a time limit).

- Claude spun up and tested a Python library that calls a MicroQuickJS shared library (involving a little bit of extra C), a compiled Python binding and a library that uses the original MicroQuickJS CLI tool. All of those approaches work well.

- Compiling to WebAssembly was a little harder. It got a version working in Node.js and Deno and Pyodide, but the Python libraries wasmer and wasmtime proved harder, apparently because "mquickjs uses setjmp/longjmp for error handling". It managed to get to a working wasmtime version with a gross hack.

I'm really excited about this. MicroQuickJS is tiny, full featured, looks robust and comes from excellent pedigree. I think this makes for a very solid new entrant in the quest for a robust sandbox.

Update: I had Claude Code build tools.simonwillison.net/microquickjs, an interactive web playground for trying out the WebAssembly build of MicroQuickJS, adapted from my previous QuickJS playground. My QuickJS page loads 2.28 MB (675 KB transferred). The MicroQuickJS one loads 303 KB (120 KB transferred).

Here are the prompts I used for that.

I ported JustHTML from Python to JavaScript with Codex CLI and GPT-5.2 in 4.5 hours

I wrote about JustHTML yesterday—Emil Stenström’s project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

[... 1,818 words]

Useful patterns for building HTML tools

I’ve started using the term HTML tools to refer to HTML applications that I’ve been building which combine HTML, JavaScript, and CSS in a single file and use them to provide useful functionality. I have built over 150 of these in the past two years, almost all of them written by LLMs. This article presents a collection of useful patterns I’ve discovered along the way.

[... 4,231 words]

Anthropic acquires Bun. Anthropic just acquired the company behind the Bun JavaScript runtime, which they adopted for Claude Code back in July. Their announcement includes an impressive revenue update on Claude Code:

In November, Claude Code achieved a significant milestone: just six months after becoming available to the public, it reached $1 billion in run-rate revenue.

Here "run-rate revenue" means that their current monthly revenue would add up to $1bn/year.

I've been watching Anthropic's published revenue figures with interest: their annual revenue run rate was $1 billion in January 2025 and had grown to $5 billion by August 2025 and to $7 billion by October.

I had suspected that a large chunk of this was down to Claude Code - given that $1bn figure I guess a large chunk of the rest of the revenue comes from their API customers, since Claude Sonnet/Opus are extremely popular models for coding assistant startups.

Bun founder Jarred Sumner explains the acquisition here. They still had plenty of runway after their $26m raise but did not yet have any revenue:

Instead of putting our users & community through "Bun, the VC-backed startups tries to figure out monetization" – thanks to Anthropic, we can skip that chapter entirely and focus on building the best JavaScript tooling. [...] When people ask "will Bun still be around in five or ten years?", answering with "we raised $26 million" isn't a great answer. [...]

Anthropic is investing in Bun as the infrastructure powering Claude Code, Claude Agent SDK, and future AI coding products. Our job is to make Bun the best place to build, run, and test AI-driven software — while continuing to be a great general-purpose JavaScript runtime, bundler, package manager, and test runner.

How I automate my Substack newsletter with content from my blog

I sent out my weekly-ish Substack newsletter this morning and took the opportunity to record a YouTube video demonstrating my process and describing the different components that make it work. There’s a lot of digital duct tape involved, taking the content from Django+Heroku+PostgreSQL to GitHub Actions to SQLite+Datasette+Fly.io to JavaScript+Observable and finally to Substack.

[... 1,345 words]

The fetch()ening (via) After several years of stable htmx 2.0 and a promise to never release a backwards-incompatible htmx 3, Carson Gross is technically keeping that promise... by skipping to htmx 4 instead!

The main reason is to replace XMLHttpRequest with fetch() - a change that will have enough knock-on compatibility effects to require a major version bump - so they're using that as an excuse to clean up various other accumulated design warts at the same time.

htmx is a very responsibly run project. Here's their plan for the upgrade:

That said, htmx 2.0 users will face an upgrade project when moving to 4.0 in a way that they did not have to in moving from 1.0 to 2.0.

I am sorry about that, and want to offer three things to address it:

- htmx 2.0 (like htmx 1.0 & intercooler.js 1.0) will be supported in perpetuity, so there is absolutely no pressure to upgrade your application: if htmx 2.0 is satisfying your hypermedia needs, you can stick with it.

- We will create extensions that revert htmx 4 to htmx 2 behaviors as much as is feasible (e.g. Supporting the old implicit attribute inheritance model, at least)

- We will roll htmx 4.0 out slowly, over a multi-year period. As with the htmx 1.0 -> 2.0 upgrade, there will be a long period where htmx 2.x is latest and htmx 4.x is next

There are lots of neat details in here about the design changes they plan to make. It's a really great piece of technical writing - I learned a bunch about htmx and picked up some good notes on API design in general from this.

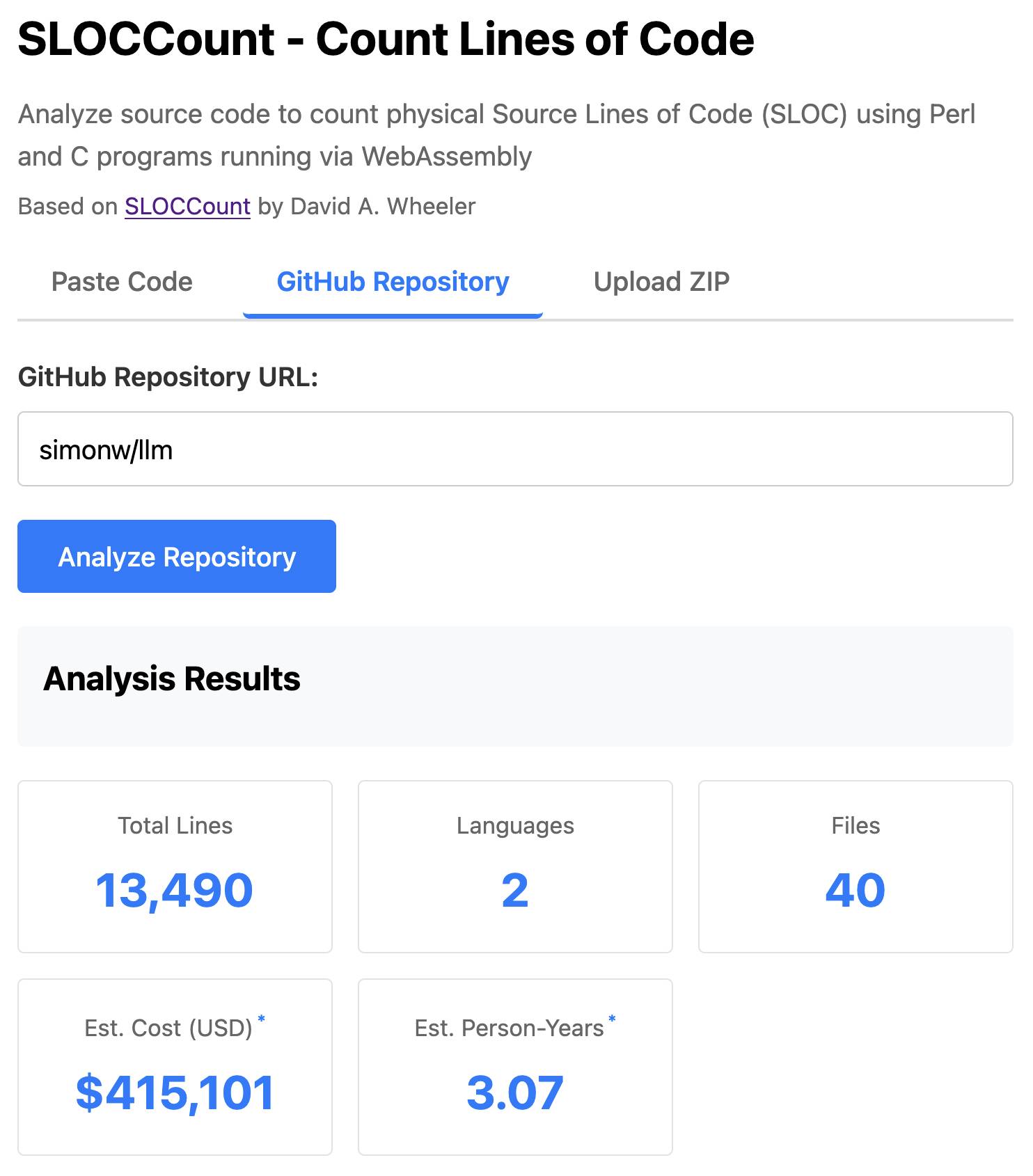

SLOCCount in WebAssembly. This project/side-quest got a little bit out of hand.

I remembered an old tool called SLOCCount which could count lines of code and produce an estimate for how much they would cost to develop. I thought it would be fun to play around with it again, especially given how cheap it is to generate code using LLMs these days.

Here's the homepage for SLOCCount by David A. Wheeler. It dates back to 2001!

I figured it might be fun to try and get it running on the web. Surely someone had compiled Perl to WebAssembly...?

WebPerl by Hauke Dämpfling is exactly that, even adding a neat <script type="text/perl"> tag.

I told Claude Code for web on my iPhone to figure it out and build something, giving it some hints from my initial research:

Build sloccount.html - a mobile friendly UI for running the Perl sloccount tool against pasted code or against a GitHub repository that is provided in a form field

It works using the webperl webassembly build of Perl, plus it loads Perl code from this exact commit of this GitHub repository https://github.com/licquia/sloccount/tree/7220ff627334a8f646617fe0fa542d401fb5287e - I guess via the GitHub API, maybe using the https://github.com/licquia/sloccount/archive/7220ff627334a8f646617fe0fa542d401fb5287e.zip URL if that works via CORS

Test it with playwright Python - don’t edit any file other than sloccount.html and a tests/test_sloccount.py file

Since I was working on my phone I didn't review the results at all. It seemed to work so I deployed it to static hosting... and then when I went to look at it properly later on found that Claude had given up, cheated and reimplemented it in JavaScript instead!

So I switched to Claude Code on my laptop where I have more control and coached Claude through implementing the project for real. This took way longer than the project deserved - probably a solid hour of my active time, spread out across the morning.

I've shared some of the transcripts - one, two, and three - as terminal sessions rendered to HTML using my rtf-to-html tool.

At one point I realized that the original SLOCCount project wasn't even entirely Perl as I had assumed, it included several C utilities! So I had Claude Code figure out how to compile those to WebAssembly (it used Emscripten) and incorporate those into the project (with notes on what it did.)

The end result (source code here) is actually pretty cool. It's a web UI with three tabs - one for pasting in code, a second for loading code from a GitHub repository and a third that lets you open a Zip file full of code that you want to analyze. Here's an animated demo:

The cost estimates it produces are of very little value. By default it uses the original method from 2001. You can also twiddle the factors - bumping up the expected US software engineer's annual salary from its 2000 estimate of $56,286 is a good start!

I had ChatGPT take a guess at what those figures should be for today and included those in the tool, with a very prominent warning not to trust them in the slightest.

httpjail (via) Here's a promising new (experimental) project in the sandboxing space from Ammar Bandukwala at Coder. httpjail provides a Rust CLI tool for running an individual process against a custom configured HTTP proxy.

The initial goal is to help run coding agents like Claude Code and Codex CLI with extra rules governing how they interact with outside services. From Ammar's blog post that introduces the new tool, Fine-grained HTTP filtering for Claude Code:

httpjail implements an HTTP(S) interceptor alongside process-level network isolation. Under default configuration, all DNS (udp:53) is permitted and all other non-HTTP(S) traffic is blocked.

httpjail rules are either JavaScript expressions or custom programs. This approach makes them far more flexible than traditional rule-oriented firewalls and avoids the learning curve of a DSL.

Block all HTTP requests other than the LLM API traffic itself:

$ httpjail --js "r.host === 'api.anthropic.com'" -- claude "build something great"

I tried it out using OpenAI's Codex CLI instead and found this recipe worked:

brew upgrade rust

cargo install httpjail # Drops it in `~/.cargo/bin`

httpjail --js "r.host === 'chatgpt.com'" -- codex

Within that Codex instance the model ran fine but any attempts to access other URLs (e.g. telling it "Use curl to fetch simonwillison.net") failed at the proxy layer.

This is still at a really early stage but there's a lot I like about this project. Being able to use JavaScript to filter requests via the --js option is neat (it's using V8 under the hood), and there's also a --sh shellscript option which instead runs a shell program passing environment variables that can be used to determine if the request should be allowed.
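Since the rules are just JavaScript expressions, it's easy to imagine slightly richer ones. This is a sketch of an allowlist rule wrapped in a plain function so it can be exercised outside httpjail. Only r.host appears in the examples above; the request objects here are stand-ins I made up, not httpjail's real request type:

```javascript
// Hosts the sandboxed process may talk to (illustrative values only).
const ALLOWED_HOSTS = new Set(['api.anthropic.com', 'chatgpt.com']);

// The same kind of boolean expression you might pass to --js, wrapped in a
// function for testing. `r` is assumed to expose a `host` property.
function allow(r) {
  return ALLOWED_HOSTS.has(r.host);
}

console.log(allow({ host: 'chatgpt.com' }));       // true
console.log(allow({ host: 'simonwillison.net' })); // false
```

As a one-line expression this would presumably look something like ['api.anthropic.com', 'chatgpt.com'].includes(r.host), though I haven't tried that exact form against the tool.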

At a basic level it works by running a proxy server and setting HTTP_PROXY and HTTPS_PROXY environment variables so well-behaved software knows how to route requests.

It can also add a bunch of other layers. On Linux it sets up nftables rules to explicitly deny additional network access. There's also a --docker-run option which can launch a Docker container with the specified image but first locks that container down to only have network access to the httpjail proxy server.

It can intercept, filter and log HTTPS requests too by generating its own certificate and making that available to the underlying process.

I'm always interested in new approaches to sandboxing, and fine-grained network access is a particularly tricky problem to solve. This looks like a very promising step in that direction - I'm looking forward to seeing how this project continues to evolve.

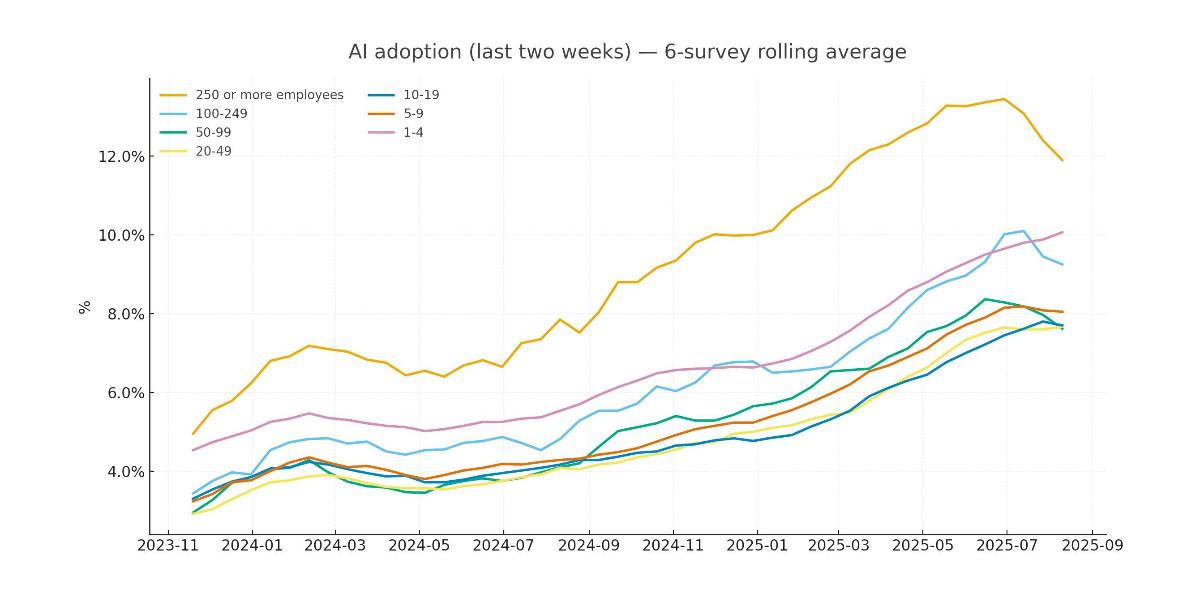

Recreating the Apollo AI adoption rate chart with GPT-5, Python and Pyodide

Apollo Global Management’s “Chief Economist” Dr. Torsten Sløk released this interesting chart which appears to show a slowdown in AI adoption rates among large (>250 employees) companies:

[... 2,673 words]

Load Llama-3.2 WebGPU in your browser from a local folder (via) Inspired by a comment on Hacker News I decided to see if it was possible to modify the transformers.js-examples/tree/main/llama-3.2-webgpu Llama 3.2 chat demo (online here, I wrote about it last November) to add an option to open a local model file directly from a folder on disk, rather than waiting for it to download over the network.

I posed the problem to OpenAI's GPT-5-enabled Codex CLI like this:

git clone https://github.com/huggingface/transformers.js-examples

cd transformers.js-examples/llama-3.2-webgpu

codex

Then this prompt:

Modify this application such that it offers the user a file browse button for selecting their own local copy of the model file instead of loading it over the network. Provide a "download model" option too.

Codex churned away for several minutes, even running commands like curl -sL https://raw.githubusercontent.com/huggingface/transformers.js/main/src/models.js | sed -n '1,200p' to inspect the source code of the underlying Transformers.js library.

After four prompts total (shown here) it built something which worked!

To try it out you'll need your own local copy of the Llama 3.2 ONNX model. You can get that (a ~1.2GB download) like so:

git lfs install

git clone https://huggingface.co/onnx-community/Llama-3.2-1B-Instruct-q4f16

Then visit my llama-3.2-webgpu page in Chrome or Firefox Nightly (since WebGPU is required), click "Browse folder", select that folder you just cloned, agree to the "Upload" confirmation (confusing since nothing is uploaded from your browser, the model file is opened locally on your machine) and click "Load local model".

Here's an animated demo (recorded in real-time, I didn't speed this up):

I pushed a branch with those changes here. The next step would be to modify this to support other models in addition to the Llama 3.2 demo, but I'm pleased to have got to this proof of concept with so little work beyond throwing some prompts at Codex to see if it could figure it out.

According to the Codex /status command this used 169,818 input tokens, 17,112 output tokens and 1,176,320 cached input tokens. At current GPT-5 token pricing ($1.25/million input, $0.125/million cached input, $10/million output) that would cost about 53 cents, but Codex CLI hooks into my existing $20/month ChatGPT Plus plan so this was bundled into that.
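Here's a quick sketch of that arithmetic, using only the token counts and per-million prices quoted above (the helper and property names are mine). Multiplying straight through gives a little over 53 cents; a pricing calculator may round or count tokens slightly differently:

```javascript
// Prices in US dollars per million tokens, as quoted in the text.
const PRICE_PER_MILLION = { input: 1.25, cachedInput: 0.125, output: 10 };

// Sum the three token categories at their respective prices.
function costInDollars(tokens) {
  return (
    (tokens.input * PRICE_PER_MILLION.input +
      tokens.cachedInput * PRICE_PER_MILLION.cachedInput +
      tokens.output * PRICE_PER_MILLION.output) /
    1_000_000
  );
}

const cost = costInDollars({
  input: 169_818,
  cachedInput: 1_176_320,
  output: 17_112,
});

console.log(`${(cost * 100).toFixed(2)} cents`); // 53.04 cents
```

The cached input tokens dominate the count but contribute less than a third of the cost, thanks to the 90% discount on cached input.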

Making XML human-readable without XSLT. In response to the recent discourse about XSLT support in browsers, Jake Archibald shares a new-to-me alternative trick for making an XML document readable in a browser: adding the following element near the top of the XML:

<script

xmlns="http://www.w3.org/1999/xhtml"

src="script.js" defer="" />

That script.js will then be executed by the browser, and can swap out the XML with HTML by creating new elements using the correct namespace:

const htmlEl = document.createElementNS(

'http://www.w3.org/1999/xhtml',

'html',

);

document.documentElement.replaceWith(htmlEl);

// Now populate the new DOM

Tom MacWright: Observable Notebooks 2.0. Observable announced Observable Notebooks 2.0 last week - the latest take on their JavaScript notebook technology, this time with an open file format and a brand new macOS desktop app.

Tom MacWright worked at Observable during their first iteration and here provides thoughtful commentary from an insider-to-outsider perspective on how their platform has evolved over time.

I particularly appreciated this aside on the downsides of evolving your own not-quite-standard language syntax:

Notebook Kit and Desktop support vanilla JavaScript, which is excellent and cool. The Observable changes to JavaScript were always tricky and meant that we struggled to use off-the-shelf parsers, and users couldn't use standard JavaScript tooling like eslint. This is stuff like the

viewofoperator which meant that Observable was not JavaScript. [...] Sidenote: I now work on Val Town, which is also a platform based on writing JavaScript, and when I joined it also had a tweaked version of JavaScript. We used the@character to let you 'mention' other vals and implicitly import them. This was, like it was in Observable, not worth it and we switched to standard syntax: don't mess with language standards folks!

The many, many, many JavaScript runtimes of the last decade (via) Extraordinary piece of writing by Jamie Birch who spent over a year putting together this comprehensive reference to JavaScript runtimes. It covers everything from Node.js, Deno, Electron, AWS Lambda, Cloudflare Workers and Bun all the way to much smaller projects like dukluv and txiki.js.

Using GitHub Spark to reverse engineer GitHub Spark

GitHub Spark was released in public preview yesterday. It’s GitHub’s implementation of the prompt-to-app pattern also seen in products like Claude Artifacts, Lovable, Vercel v0, Val Town Townie and Fly.io’s Phoenix New. In this post I reverse engineer Spark and explore its fascinating system prompt in detail.

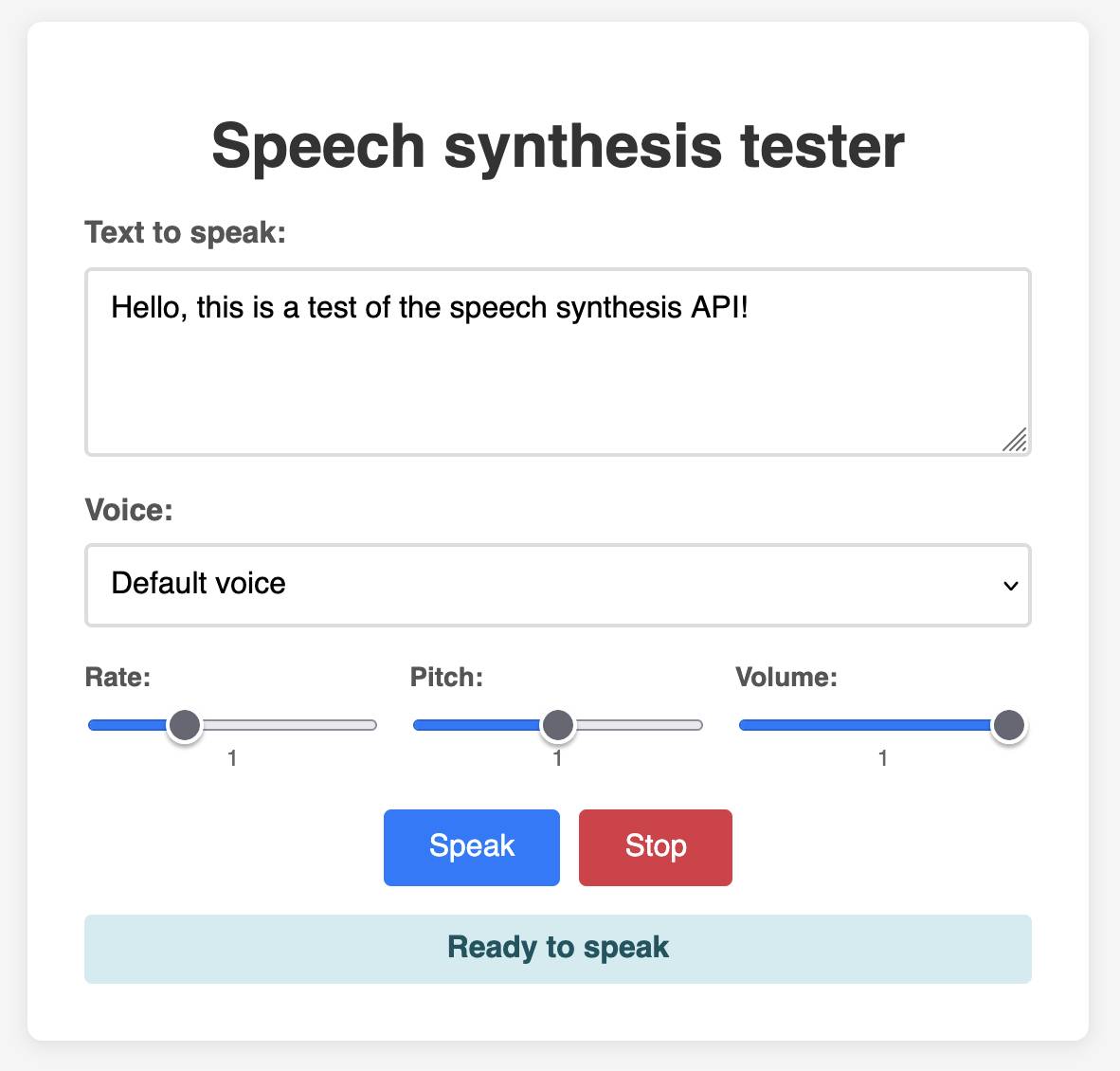

[... 3,900 words]

1KB JS Numbers Station. Terence Eden built a neat and weird 1023 byte JavaScript demo that simulates a numbers station using the browser SpeechSynthesisUtterance, which I hadn't realized is supported by every modern browser now.

This inspired me to vibe code up this playground interface for that API using Claude:

ccusage.

Claude Code logs detailed usage information to the ~/.claude/ directory. ccusage is a neat little Node.js tool which reads that information and shows you a readable summary of your usage patterns, including the estimated cost in USD per day.

You can run it using npx like this:

npx ccusage@latest

Here's a tip that works on YouTube and almost any other web page that shows you a video. You can increase the playback rate beyond the usually-exposed 2x by running this in your browser DevTools console:

document.querySelector('video').playbackRate = 2.5

I find this is the fastest I can reasonably watch most videos at, with subtitles on to help my comprehension - it turns a 40 minute video into just 16 minutes, short enough that I don't feel too guilty taking time off whatever else I'm doing to watch it!

Directive prologues and JavaScript dark matter (via) Tom MacWright does some archaeology and describes the three different magic comment formats that can affect how JavaScript/TypeScript files are processed:

"a directive"; is a directive prologue, most commonly seen with "use strict";.

/** @aPragma */ is a pragma for a transpiler, often used for /** @jsx h */.

//# aMagicComment is usually used for source maps - //# sourceMappingURL=<url> - but also just got used by v8 for their new explicit compile hints feature.
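The first of those is the easiest to see in action. In this small sketch (function names are mine) the "use strict" string literal changes how the function behaves, while an unrecognized directive is accepted and ignored by the engine:

```javascript
// A directive prologue is just a string literal statement at the very top of
// a function or file. "use strict" is the one every engine understands.
function strictThis() {
  "use strict";
  return this; // in strict mode `this` stays undefined for a plain call
}

// Unknown directives are legal and have no effect on the engine itself,
// which leaves tools free to give them a meaning of their own.
function unknownDirective() {
  "a directive";
  return 1 + 1;
}

console.log(strictThis() === undefined); // true
console.log(unknownDirective());         // 2
```

The pragma and magic comment formats are invisible to the language itself, so they can't be demonstrated the same way: they only mean something to the transpiler or engine tooling reading the file.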

Progressive JSON. This post by Dan Abramov is a trap! It proposes a fascinating way of streaming JSON objects to a client in a way that provides the shape of the JSON before the stream has completed, then fills in the gaps as more data arrives... and then turns out to be a sneaky tutorial in how React Server Components work.

Ignoring the sneakiness, the imaginary streaming JSON format it describes is a fascinating thought exercise:

{

header: "$1",

post: "$2",

footer: "$3"

}

/* $1 */

"Welcome to my blog"

/* $3 */

"Hope you like it"

/* $2 */

{

content: "$4",

comments: "$5"

}

/* $4 */

"This is my article"

/* $5 */

["$6", "$7", "$8"]

/* $6 */

"This is the first comment"

/* $7 */

"This is the second comment"

/* $8 */

"This is the third comment"

After each block the full JSON document so far can be constructed, and Dan suggests interleaving Promise() objects along the way for placeholders that have not yet been fully resolved - so after receipt of block $3 above (note that the blocks can be served out of order) the document would look like this:

{

header: "Welcome to my blog",

post: new Promise(/* ... not yet resolved ... */),

footer: "Hope you like it"

}

I'm tucking this idea away in case I ever get a chance to try it out in the future.
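As a rough sketch of how a client might rebuild the document from those blocks: the code below substitutes "$n" references with whichever chunks have arrived so far. To keep it synchronous I use a PENDING marker where Dan's description uses Promises, and the assemble name and Map-based chunk store are my own invention:

```javascript
// Stand-in for a not-yet-resolved Promise, to keep this sketch synchronous.
const PENDING = Symbol('pending');

// Recursively replace "$n" reference strings with the chunk received for
// them, or with PENDING if that chunk has not arrived yet.
function assemble(value, chunks) {
  if (typeof value === 'string' && /^\$\d+$/.test(value)) {
    return chunks.has(value) ? assemble(chunks.get(value), chunks) : PENDING;
  }
  if (Array.isArray(value)) {
    return value.map((item) => assemble(item, chunks));
  }
  if (value !== null && typeof value === 'object') {
    return Object.fromEntries(
      Object.entries(value).map(([key, item]) => [key, assemble(item, chunks)])
    );
  }
  return value;
}

const root = { header: '$1', post: '$2', footer: '$3' };

// Blocks $1 and $3 have arrived, in that order.
const chunks = new Map([
  ['$1', 'Welcome to my blog'],
  ['$3', 'Hope you like it'],
]);
const partial = assemble(root, chunks); // post is still PENDING

// Later, $2 and $4 arrive; $5 (the comments) is still outstanding.
chunks.set('$2', { content: '$4', comments: '$5' });
chunks.set('$4', 'This is my article');
const later = assemble(root, chunks);
```

One wrinkle this ignores: a real string value that happens to look like "$1" would be mistaken for a reference, so a production format would need an escaping rule.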

If you've found web development frustrating over the past 5-10 years, here's something that has worked great for me: give yourself permission to avoid any form of frontend build system (so no npm / React / TypeScript / JSX / Babel / Vite / Tailwind etc) and code in HTML and JavaScript like it's 2009.

The joy came flooding back to me! It turns out browser APIs are really good now.

You don't even need jQuery to paper over the gaps any more - use document.querySelectorAll() and fetch() directly and see how much value you can build with a few dozen lines of code.

If you want to create completely free software for other people to use, the absolute best delivery mechanism right now is static HTML and JavaScript served from a free web host with an established reputation.

Thanks to WebAssembly the set of potential software that can be served in this way is vast and, I think, underappreciated. Pyodide means we can ship client-side Python applications now!

This assumes that you would like your gift to the world to keep working for as long as possible, while granting you the freedom to lose interest and move onto other projects without needing to keep covering expenses far into the future.

Even the cheapest hosting plan requires you to monitor and update billing details every few years. Domains have to be renewed. Anything that runs server-side will inevitably need to be upgraded someday - and the longer you wait between upgrades the harder those become.

My top choice for this kind of thing in 2025 is GitHub, using GitHub Pages. It's free for public repositories and I haven't seen GitHub break a working URL that they have hosted in the 17+ years since they first launched.

A few years ago I'd have recommended Heroku on the basis that their free plan had stayed reliable for more than a decade, but Salesforce took that accumulated goodwill and incinerated it in 2022.

It almost goes without saying that you should release it under an open source license. The license alone is not enough to ensure regular human beings can make use of what you have built though: give people a link to something that works!

shot-scraper 1.8. I've added a new feature to shot-scraper that makes it easier to share scripts for other people to use with the shot-scraper javascript command.

shot-scraper javascript lets you load up a web page in an invisible Chrome browser (via Playwright), execute some JavaScript against that page and output the results to your terminal. It's a fun way of running complex screen-scraping routines as part of a terminal session, or even chained together with other commands using pipes.

The -i/--input option lets you load that JavaScript from a file on disk - but now you can also use a gh: prefix to specify loading code from GitHub instead.

To quote the release notes:

shot-scraper javascript can now optionally load scripts hosted on GitHub via the new gh: prefix to the shot-scraper javascript -i/--input option. #173

Scripts can be referenced as gh:username/repo/path/to/script.js or, if the GitHub user has created a dedicated shot-scraper-scripts repository and placed scripts in the root of it, using gh:username/name-of-script.

For example, to run this readability.js script against any web page you can use the following:

shot-scraper javascript --input gh:simonw/readability https://simonwillison.net/2025/Mar/24/qwen25-vl-32b/

The output from that example starts like this:

{

"title": "Qwen2.5-VL-32B: Smarter and Lighter",

"byline": "Simon Willison",

"dir": null,

"lang": "en-gb",

"content": "<div id=\"readability-page-1\"...

My simonw/shot-scraper-scripts repo only has that one file in it so far, but I'm looking forward to growing that collection and hopefully seeing other people create and share their own shot-scraper-scripts repos as well.

This feature is an imitation of a similar feature that's coming in the next release of LLM.