283 posts tagged “open-source”

2025

Run Your Own AI (via) Anthony Lewis published this neat, concise tutorial on using my LLM tool to run local models on your own machine, using llm-mlx.

An under-appreciated way to contribute to open source projects is to publish unofficial guides like this one. Always brightens my day when something like this shows up.

What people get wrong about the leading Chinese open models: Adoption and censorship (via) While I've been enjoying trying out Alibaba's Qwen 3 a lot recently, Nathan Lambert focuses on the elephant in the room:

People vastly underestimate the number of companies that cannot use Qwen and DeepSeek open models because they come from China. This includes on-premise solutions built by people who know the fact that model weights alone cannot reveal anything to their creators.

The root problem here is the closed nature of the training data. Even if a model is open weights, it's not possible to conclusively determine that it couldn't add backdoors to generated code or trigger "indirect influence of Chinese values on Western business systems". Qwen 3 certainly has baked in opinions about the status of Taiwan!

Nathan sees this as an opportunity for other liberally licensed models, including his own team's OLMo:

This gap provides a big opportunity for Western AI labs to lead in open models. Without DeepSeek and Qwen, the top tier of models we’re left with are Llama and Gemma, which both have very restrictive licenses when compared to their Chinese counterparts. These licenses are proportionally likely to block an IT department from approving a model.

This takes us to the middle tier of permissively licensed, open weight models who actually have a huge opportunity ahead of them: OLMo, of course, I’m biased, Microsoft with Phi, Mistral, IBM (!??!), and some other smaller companies to fill out the long tail.

Redis is open source again (via) Salvatore Sanfilippo:

Five months ago, I rejoined Redis and quickly started to talk with my colleagues about a possible switch to the AGPL license, only to discover that there was already an ongoing discussion, a very old one, too. [...]

I’ll be honest: I truly wanted the code I wrote for the new Vector Sets data type to be released under an open source license. [...]

So, honestly, while I can’t take credit for the license switch, I hope I contributed a little bit to it, because today I’m happy. I’m happy that Redis is open source software again, under the terms of the AGPLv3 license.

I'm absolutely thrilled to hear this. Redis 8.0 is out today under the new license, including a beta release of Vector Sets. I've been watching Salvatore's work on those with fascination, while sad that I probably wouldn't use it often due to the janky license. That concern is now gone. I'm looking forward to putting them through their paces!

See also Redis is now available under the AGPLv3 open source license on the Redis blog. An interesting note from that is that they are also:

Integrating Redis Stack technologies, including JSON, Time Series, probabilistic data types, Redis Query Engine and more into core Redis 8 under AGPL

That's a whole bunch of new things that weren't previously part of Redis core.

I hadn't encountered Redis Query Engine before - it looks like that's a whole set of features that turn Redis into more of an Elasticsearch-style document database complete with full-text, vector search operations and geospatial operations and aggregations. It supports search syntax that looks a bit like this:

FT.SEARCH places "museum @city:(san francisco|oakland) @shape:[CONTAINS $poly]" PARAMS 2 poly 'POLYGON((-122.5 37.7, -122.5 37.8, -122.4 37.8, -122.4 37.7, -122.5 37.7))' DIALECT 3

(Noteworthy that Elasticsearch chose the AGPL too when they switched back from the SSPL to an open source license last year).

If you want to create completely free software for other people to use, the absolute best delivery mechanism right now is static HTML and JavaScript served from a free web host with an established reputation.

Thanks to WebAssembly the set of potential software that can be served in this way is vast and, I think, under appreciated. Pyodide means we can ship client-side Python applications now!

This assumes that you would like your gift to the world to keep working for as long as possible, while granting you the freedom to lose interest and move onto other projects without needing to keep covering expenses far into the future.

Even the cheapest hosting plan requires you to monitor and update billing details every few years. Domains have to be renewed. Anything that runs server-side will inevitably need to be upgraded someday - and the longer you wait between upgrades the harder those become.

My top choice for this kind of thing in 2025 is GitHub, using GitHub Pages. It's free for public repositories and I haven't seen GitHub break a working URL that they have hosted in the 17+ years since they first launched.

A few years ago I'd have recommended Heroku on the basis that their free plan had stayed reliable for more than a decade, but Salesforce took that accumulated goodwill and incinerated it in 2022.

It almost goes without saying that you should release it under an open source license. The license alone is not enough to ensure regular human beings can make use of what you have built though: give people a link to something that works!

I wrote to the address in the GPLv2 license notice and received the GPLv3 license. Fun story from Mendhak who noticed that the GPLv2 license used to include this in the footer:

You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.

So they wrote to the address (after hunting down the necessary pieces for a self-addressed envelope from the USA back to the UK) and five weeks later received a copy.

(The copy was the GPLv3, but since they didn't actually specify GPLv2 in their request I don't think that's particularly notable.)

The comments on Hacker News included this delightful note from Davis Remmel:

This is funny because I was the operations assistant (office secretary) at the time we received this letter, and I remember it because of the distinct postage.

Someone asked "How many per day were you sending out?". The answer:

On average, zero per day, maybe 5 to 10 per year.

The FSF moved out of 51 Franklin Street in 2024, after 19 years in that location. They work remotely now - their new mailing address, 31 Milk Street, # 960789, Boston, MA 02196, is a USPS PO Box.

Now that Llama has very real competition in open weight models (Gemma 3, latest Mistrals, DeepSeek, Qwen) I think their janky license is becoming much more of a liability for them. It's just limiting enough that it could be the deciding factor for using something else.

Maybe Meta’s Llama claims to be open source because of the EU AI act

I encountered a theory a while ago that one of the reasons Meta insist on using the term “open source” for their Llama models despite the Llama license not actually conforming to the terms of the Open Source Definition is that the EU’s AI act includes special rules for open source models without requiring OSI compliance.

[... 852 words]openai/codex. Just released by OpenAI, a "lightweight coding agent that runs in your terminal". Looks like their version of Claude Code, though unlike Claude Code Codex is released under an open source (Apache 2) license.

Here's the main prompt that runs in a loop, which starts like this:

You are operating as and within the Codex CLI, a terminal-based agentic coding assistant built by OpenAI. It wraps OpenAI models to enable natural language interaction with a local codebase. You are expected to be precise, safe, and helpful.

You can:

- Receive user prompts, project context, and files.

- Stream responses and emit function calls (e.g., shell commands, code edits).

- Apply patches, run commands, and manage user approvals based on policy.

- Work inside a sandboxed, git-backed workspace with rollback support.

- Log telemetry so sessions can be replayed or inspected later.

- More details on your functionality are available at codex --help

The Codex CLI is open-sourced. Don't confuse yourself with the old Codex language model built by OpenAI many moons ago (this is understandably top of mind for you!). Within this context, Codex refers to the open-source agentic coding interface. [...]

I like that the prompt describes OpenAI's previous Codex language model as being from "many moons ago". Prompt engineering is so weird.

Since the prompt says that it works "inside a sandboxed, git-backed workspace" I went looking for the sandbox. On macOS it uses the little-known sandbox-exec process, part of the OS but grossly under-documented. The best information I've found about it is this article from 2020, which notes that man sandbox-exec lists it as deprecated. I didn't spot evidence in the Codex code of sandboxes for other platforms.

Using LLMs as the first line of support in Open Source (via) From reading the title I was nervous that this might involve automating the initial response to a user support query in an issue tracker with an LLM, but Carlton Gibson has better taste than that.

The open contribution model engendered by GitHub — where anonymous (to the project) users can create issues, and comments, which are almost always extractive support requests — results in an effective denial-of-service attack against maintainers. [...]

For anonymous users, who really just want help almost all the time, the pattern I’m settling on is to facilitate them getting their answer from their LLM of choice. [...] we can generate a file that we offer users to download, then we tell the user to pass this to (say) Claude with a simple prompt for their question.

This resonates with the concept proposed by llms.txt - making LLM-friendly context files available for different projects.

My simonw/docs-for-llms contains my own early experiment with this: I'm running a build script to create LLM-friendly concatenated documentation for several of my projects, and my llm-docs plugin (described here) can then be used to ask questions of that documentation.

It's possible to pre-populate the Claude UI with a prompt by linking to https://claude.ai/new?q={PLACE_HOLDER}, but it looks like there's quite a short length limit on how much text can be passed that way. It would be neat if you could pass a URL to a larger document instead.

ChatGPT also supports https://chatgpt.com/?q=your-prompt-here (again with a short length limit) and directly executes the prompt rather than waiting for you to edit it first(!)

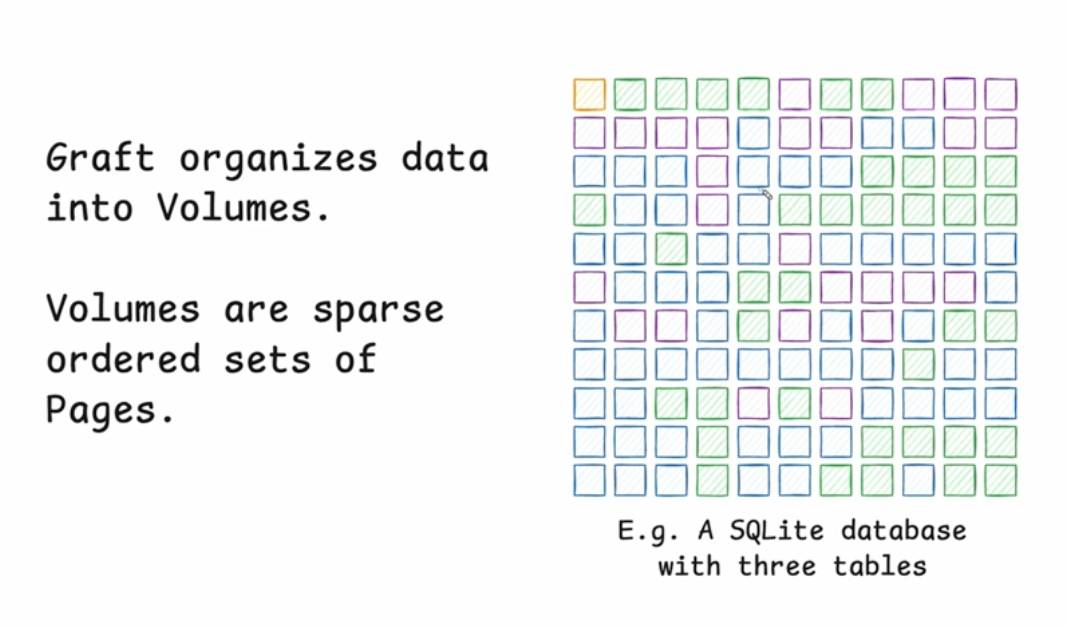

Stop syncing everything. In which Carl Sverre announces Graft, a fascinating new open source Rust data synchronization engine he's been working on for the past year.

Carl's recent talk at the Vancouver Systems meetup explains Graft in detail, including this slide which helped everything click into place for me:

Graft manages a volume, which is a collection of pages (currently at a fixed 4KB size). A full history of that volume is maintained using snapshots. Clients can read and write from particular snapshot versions for particular pages, and are constantly updated on which of those pages have changed (while not needing to synchronize the actual changed data until they need it).

This is a great fit for B-tree databases like SQLite.

The Graft project includes a SQLite VFS extension that implements multi-leader read-write replication on top of a Graft volume. You can see a demo of that running at 36m15s in the video, or consult the libgraft extension documentation and try it yourself.

The section at the end on What can you build with Graft? has some very useful illustrative examples:

Offline-first apps: Note-taking, task management, or CRUD apps that operate partially offline. Graft takes care of syncing, allowing the application to forget the network even exists. When combined with a conflict handler, Graft can also enable multiplayer on top of arbitrary data.

Cross-platform data: Eliminate vendor lock-in and allow your users to seamlessly access their data across mobile platforms, devices, and the web. Graft is architected to be embedded anywhere

Stateless read replicas: Due to Graft's unique approach to replication, a database replica can be spun up with no local state, retrieve the latest snapshot metadata, and immediately start running queries. No need to download all the data and replay the log.

Replicate anything: Graft is just focused on consistent page replication. It doesn't care about what's inside those pages. So go crazy! Use Graft to sync AI models, Parquet or Lance files, Geospatial tilesets, or just photos of your cats. The sky's the limit with Graft.

We estimate the supply-side value of widely-used OSS is $4.15 billion, but that the demand-side value is much larger at $8.8 trillion. We find that firms would need to spend 3.5 times more on software than they currently do if OSS did not exist.

— Manuel Hoffmann, Frank Nagle, Yanuo Zhou, The Value of Open Source Software, Harvard Business School

suitenumerique/docs. New open source (MIT licensed) collaborative text editing web application, similar to Google Docs or Notion, notable because it's a joint effort funded by the French and German governments and "currently onboarding the Netherlands".

It's built using Django and React:

Docs is built on top of Django Rest Framework, Next.js, BlockNote.js, HocusPocus and Yjs.

Deployments currently require Kubernetes, PostgreSQL, memcached, an S3 bucket (or compatible) and an OIDC provider.

Today we release OLMo 2 32B, the most capable and largest model in the OLMo 2 family, scaling up the OLMo 2 training recipe used for our 7B and 13B models released in November. It is trained up to 6T tokens and post-trained using Tulu 3.1. OLMo 2 32B is the first fully-open model (all data, code, weights, and details are freely available) to outperform GPT3.5-Turbo and GPT-4o mini on a suite of popular, multi-skill academic benchmarks.

— Ai2, OLMo 2 32B release announcement

QwQ-32B: Embracing the Power of Reinforcement Learning (via) New Apache 2 licensed reasoning model from Qwen:

We are excited to introduce QwQ-32B, a model with 32 billion parameters that achieves performance comparable to DeepSeek-R1, which boasts 671 billion parameters (with 37 billion activated). This remarkable outcome underscores the effectiveness of RL when applied to robust foundation models pretrained on extensive world knowledge.

I had a lot of fun trying out their previous QwQ reasoning model last November. I demonstrated this new QwQ in my talk at NICAR about recent LLM developments. Here's the example I ran.

LM Studio just released GGUFs ranging in size from 17.2 to 34.8 GB. MLX have compatible weights published in 3bit, 4bit, 6bit and 8bit. Ollama has the new qwq too - it looks like they've renamed the previous November release qwq:32b-preview.

[In response to a question about releasing model weights]

Yes, we are discussing. I personally think we have been on the wrong side of history here and need to figure out a different open source strategy; not everyone at OpenAI shares this view, and it's also not our current highest priority.

— Sam Altman, in a Reddit AMA

Mistral Small 3 (via) First model release of 2025 for French AI lab Mistral, who describe Mistral Small 3 as "a latency-optimized 24B-parameter model released under the Apache 2.0 license."

More notably, they claim the following:

Mistral Small 3 is competitive with larger models such as Llama 3.3 70B or Qwen 32B, and is an excellent open replacement for opaque proprietary models like GPT4o-mini. Mistral Small 3 is on par with Llama 3.3 70B instruct, while being more than 3x faster on the same hardware.

Llama 3.3 70B and Qwen 32B are two of my favourite models to run on my laptop - that ~20GB size turns out to be a great trade-off between memory usage and model utility. It's exciting to see a new entrant into that weight class.

The license is important: previous Mistral Small models used their Mistral Research License, which prohibited commercial deployments unless you negotiate a commercial license with them. They appear to be moving away from that, at least for their core models:

We’re renewing our commitment to using Apache 2.0 license for our general purpose models, as we progressively move away from MRL-licensed models. As with Mistral Small 3, model weights will be available to download and deploy locally, and free to modify and use in any capacity. […] Enterprises and developers that need specialized capabilities (increased speed and context, domain specific knowledge, task-specific models like code completion) can count on additional commercial models complementing what we contribute to the community.

Despite being called Mistral Small 3, this appears to be the fourth release of a model under that label. The Mistral API calls this one mistral-small-2501 - previous model IDs were mistral-small-2312, mistral-small-2402 and mistral-small-2409.

I've updated the llm-mistral plugin for talking directly to Mistral's La Plateforme API:

llm install -U llm-mistral

llm keys set mistral

# Paste key here

llm -m mistral/mistral-small-latest "tell me a joke about a badger and a puffin"

Sure, here's a light-hearted joke for you:

Why did the badger bring a puffin to the party?

Because he heard puffins make great party 'Puffins'!

(That's a play on the word "puffins" and the phrase "party people.")

API pricing is $0.10/million tokens of input, $0.30/million tokens of output - half the price of the previous Mistral Small API model ($0.20/$0.60). for comparison, GPT-4o mini is $0.15/$0.60.

Mistral also ensured that the new model was available on Ollama in time for their release announcement.

You can pull the model like this (fetching 14GB):

ollama run mistral-small:24b

The llm-ollama plugin will then let you prompt it like so:

llm install llm-ollama

llm -m mistral-small:24b "say hi"

A selfish personal argument for releasing code as Open Source

I’m the guest for the most recent episode of the Real Python podcast with Christopher Bailey, talking about Using LLMs for Python Development. We covered a lot of other topics as well—most notably my relationship with Open Source development over the years.

[... 464 words]2024

My Approach to Building Large Technical Projects (via) Mitchell Hashimoto wrote this piece about taking on large projects back in June 2023. The project he described in the post is a terminal emulator written in Zig called Ghostty which just reached its 1.0 release.

I've learned that when I break down my large tasks in chunks that result in seeing tangible forward progress, I tend to finish my work and retain my excitement throughout the project. People are all motivated and driven in different ways, so this may not work for you, but as a broad generalization I've not found an engineer who doesn't get excited by a good demo. And the goal is to always give yourself a good demo.

For backend-heavy projects the lack of an initial UI is a challenge here, so Mitchell advocates for early automated tests as a way to start exercising code and seeing progress right from the start. Don't let tests get in the way of demos though:

No matter what I'm working on, I try to build one or two demos per week intermixed with automated test feedback as explained in the previous section.

Building a demo also provides you with invaluable product feedback. You can quickly intuit whether something feels good, even if it isn't fully functional.

For more on the development of Ghostty see this talk Mitchell gave at Zig Showtime last year:

I want the terminal to be a modern platform for text application development, analogous to the browser being a modern platform for GUI application development (for better or worse).

Introducing Limbo: A complete rewrite of SQLite in Rust (via) This looks absurdly ambitious:

Our goal is to build a reimplementation of SQLite from scratch, fully compatible at the language and file format level, with the same or higher reliability SQLite is known for, but with full memory safety and on a new, modern architecture.

The Turso team behind it have been maintaining their libSQL fork for two years now, so they're well equipped to take on a challenge of this magnitude.

SQLite is justifiably famous for its meticulous approach to testing. Limbo plans to take an entirely different approach based on "Deterministic Simulation Testing" - a modern technique pioneered by FoundationDB and now spearheaded by Antithesis, the company Turso have been working with on their previous testing projects.

Another bold claim (emphasis mine):

We have both added DST facilities to the core of the database, and partnered with Antithesis to achieve a level of reliability in the database that lives up to SQLite’s reputation.

[...] With DST, we believe we can achieve an even higher degree of robustness than SQLite, since it is easier to simulate unlikely scenarios in a simulator, test years of execution with different event orderings, and upon finding issues, reproduce them 100% reliably.

The two most interesting features that Limbo is planning to offer are first-party WASM support and fully asynchronous I/O:

SQLite itself has a synchronous interface, meaning driver authors who want asynchronous behavior need to have the extra complication of using helper threads. Because SQLite queries tend to be fast, since no network round trips are involved, a lot of those drivers just settle for a synchronous interface. [...]

Limbo is designed to be asynchronous from the ground up. It extends

sqlite3_step, the main entry point API to SQLite, to be asynchronous, allowing it to return to the caller if data is not ready to consume immediately.

Datasette provides an async API for executing SQLite queries which is backed by all manner of complex thread management - I would be very interested in a native asyncio Python library for talking to SQLite database files.

I successfully tried out Limbo's Python bindings against a demo SQLite test database using uv like this:

uv run --with pylimbo python

>>> import limbo

>>> conn = limbo.connect("/tmp/demo.db")

>>> cursor = conn.cursor()

>>> print(cursor.execute("select * from foo").fetchall())

It crashed when I tried against a more complex SQLite database that included SQLite FTS tables.

The Python bindings aren't yet documented, so I piped them through LLM and had the new google-exp-1206 model write this initial documentation for me:

files-to-prompt limbo/bindings/python -c | llm -m gemini-exp-1206 -s 'write extensive usage documentation in markdown, including realistic usage examples'

From where I left. Four and a half years after he left the project, Redis creator Salvatore Sanfilippo is returning to work on Redis.

Hacking randomly was cool but, in the long run, my feeling was that I was lacking a real purpose, and every day I started to feel a bigger urgency to be part of the tech world again. At the same time, I saw the Redis community fragmenting, something that was a bit concerning to me, even as an outsider.

I'm personally still upset at the license change, but Salvatore sees it as necessary to support the commercial business model for Redis Labs. It feels to me like a betrayal of the volunteer efforts by previous contributors. I posted about that on Hacker News and Salvatore replied:

I can understand that, but the thing about the BSD license is that such value never gets lost. People are able to fork, and after a fork for the original project to still lead will be require to put something more on the table.

Salvatore's first new project is an exploration of adding vector sets to Redis. The vector similarity API he previews in this post reminds me of why I fell in love with Redis in the first place - it's clean, simple and feels obviously right to me.

VSIM top_1000_movies_imdb ELE "The Matrix" WITHSCORES

1) "The Matrix"

2) "0.9999999403953552"

3) "Ex Machina"

4) "0.8680362105369568"

...

New Pleias 1.0 LLMs trained exclusively on openly licensed data (via) I wrote about the Common Corpus public domain dataset back in March. Now Pleias, the team behind Common Corpus, have released the first family of models that are:

[...] trained exclusively on open data, meaning data that are either non-copyrighted or are published under a permissible license.

There's a lot to absorb here. The Pleias 1.0 family comes in three base model sizes: 350M, 1.2B and 3B. They've also released two models specialized for multi-lingual RAG: Pleias-Pico (350M) and Pleias-Nano (1.2B).

Here's an official GGUF for Pleias-Pico.

I'm looking forward to seeing benchmarks from other sources, but Pleias ran their own custom multilingual RAG benchmark which had their Pleias-nano-1.2B-RAG model come in between Llama-3.2-Instruct-3B and Llama-3.2-Instruct-8B.

The 350M and 3B models were trained on the French government's Jean Zay supercomputer. Pleias are proud of their CO2 footprint for training the models - 0.5, 4 and 16 tCO2eq for the three models respectively, which they compare to Llama 3.2,s reported figure of 133 tCO2eq.

How clean is the training data from a licensing perspective? I'm confident people will find issues there - truly 100% public domain data remains a rare commodity. So far I've seen questions raised about the GitHub source code data (most open source licenses have attribution requirements) and Wikipedia (CC BY-SA, another attribution license). Plus this from the announcement:

To supplement our corpus, we have generated 30B+ words synthetically with models allowing for outputs reuse.

If those models were themselves trained on unlicensed data this could be seen as a form of copyright laundering.

Open source is really part of my process of getting unstuck, learning and contributing back to the community, and also helping future me have an easier time. ‘Me’ is probably the number one beneficiary of my open-source software work. To be honest with you, a lot of it is selfish. It's really about making me more productive, happier, and less stressed. For people who wonder why we should do open source, I think that they should consider that they themselves may benefit more than they realize.

Simon Willison: The Future of Open Source and AI (via) I sat down a few weeks ago to record this conversation with Logan Kilpatrick and Nolan Fortman for their podcast Around the Prompt. The episode is available on YouTube and Apple Podcasts and other platforms.

We talked about a whole bunch of different topics, including the ongoing debate around the term "open source" when applied to LLMs and my thoughts on why I don't feel threatened by LLMs as a software engineer (at 40m05s).

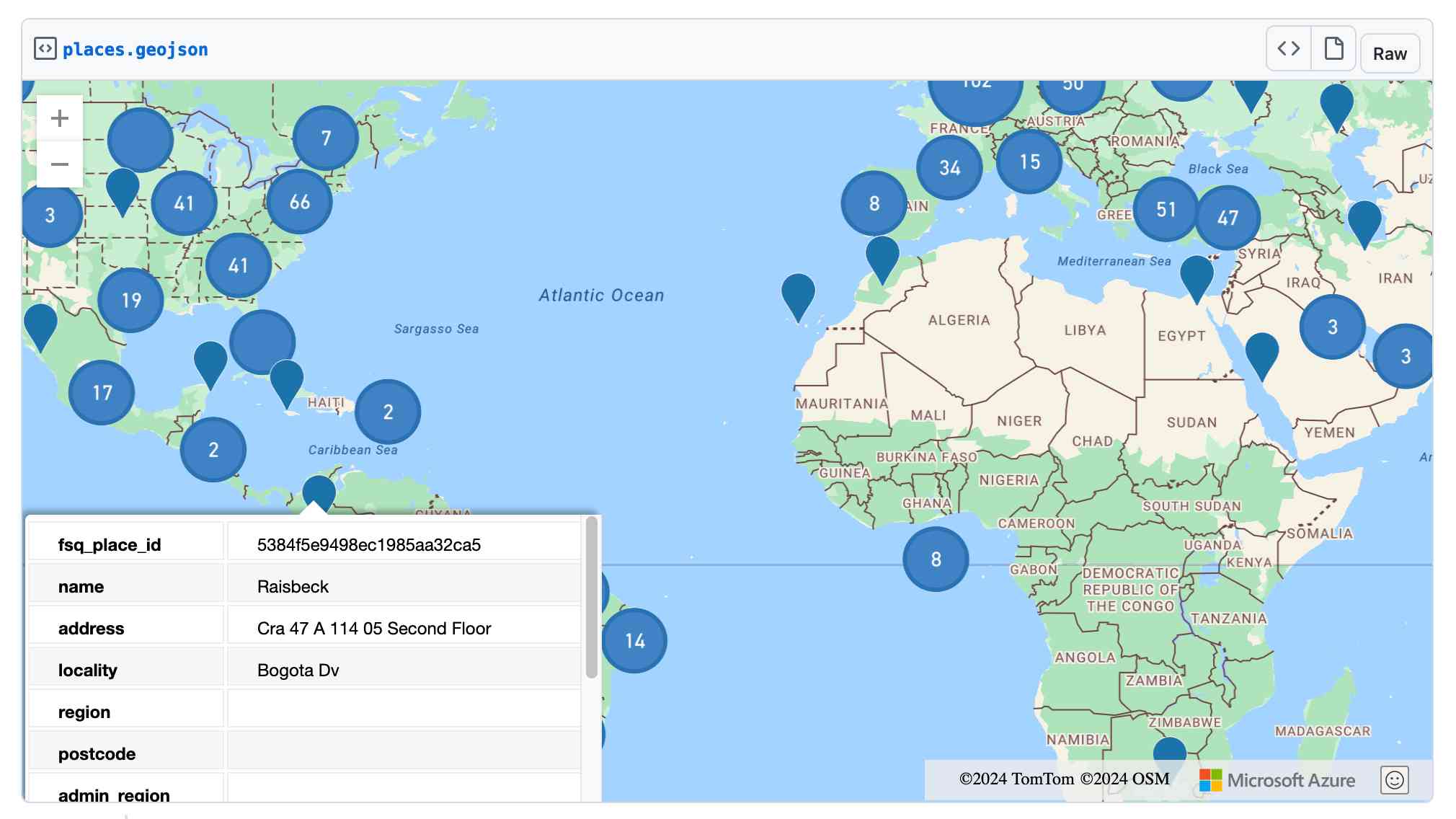

Foursquare Open Source Places: A new foundational dataset for the geospatial community (via) I did not expect this!

[...] we are announcing today the general availability of a foundational open data set, Foursquare Open Source Places ("FSQ OS Places"). This base layer of 100mm+ global places of interest ("POI") includes 22 core attributes (see schema here) that will be updated monthly and available for commercial use under the Apache 2.0 license framework.

The data is available as Parquet files hosted on Amazon S3.

Here's how to list the available files:

aws s3 ls s3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/

I got back places-00000.snappy.parquet through places-00024.snappy.parquet, each file around 455MB for a total of 10.6GB of data.

I ran duckdb and then used DuckDB's ability to remotely query Parquet on S3 to explore the data a bit more without downloading it to my laptop first:

select count(*) from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-00000.snappy.parquet';

This got back 4,180,424 - that number is similar for each file, suggesting around 104,000,000 records total.

Update: DuckDB can use wildcards in S3 paths (thanks, Paul) so this query provides an exact count:

select count(*) from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-*.snappy.parquet';

That returned 104,511,073 - and Activity Monitor on my Mac confirmed that DuckDB only needed to fetch 1.2MB of data to answer that query.

I ran this query to retrieve 1,000 places from that first file as newline-delimited JSON:

copy (

select * from 's3://fsq-os-places-us-east-1/release/dt=2024-11-19/places/parquet/places-00000.snappy.parquet'

limit 1000

) to '/tmp/places.json';

Here's that places.json file, and here it is imported into Datasette Lite.

Finally, I got ChatGPT Code Interpreter to convert that file to GeoJSON and pasted the result into this Gist, giving me a map of those thousand places (because Gists automatically render GeoJSON):

Qwen2.5-Coder-32B is an LLM that can code well that runs on my Mac

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

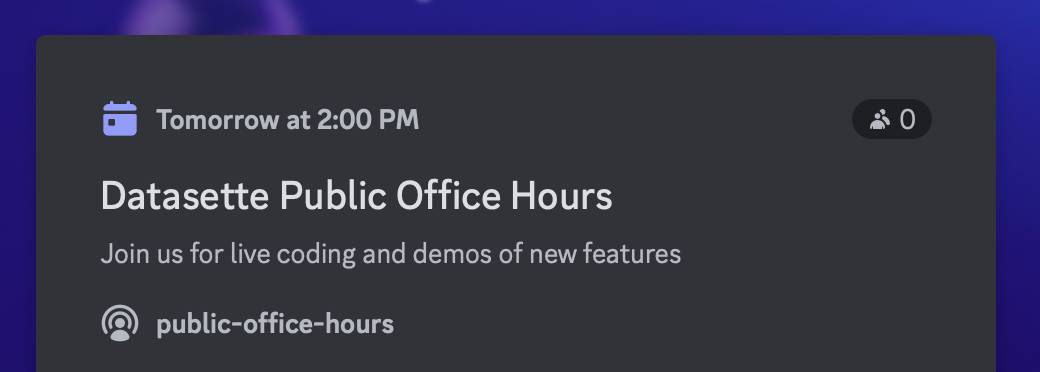

[... 697 words]Datasette Public Office Hours, Friday Nov 8th at 2pm PT. Tomorrow afternoon (Friday 8th November) at 2pm PT we'll be hosting the first Datasette Public Office Hours - a livestream video session on Discord where Alex Garcia and myself will live code on some Datasette projects and hang out to chat about the project.

This is our first time trying this format. If it works out well I plan to turn it into a series.

SmolLM2 (via) New from Loubna Ben Allal and her research team at Hugging Face:

SmolLM2 is a family of compact language models available in three size: 135M, 360M, and 1.7B parameters. They are capable of solving a wide range of tasks while being lightweight enough to run on-device. [...]

It was trained on 11 trillion tokens using a diverse dataset combination: FineWeb-Edu, DCLM, The Stack, along with new mathematics and coding datasets that we curated and will release soon.

The model weights are released under an Apache 2 license. I've been trying these out using my llm-gguf plugin for LLM and my first impressions are really positive.

Here's a recipe to run a 1.7GB Q8 quantized model from lmstudio-community:

llm install llm-gguf

llm gguf download-model https://huggingface.co/lmstudio-community/SmolLM2-1.7B-Instruct-GGUF/resolve/main/SmolLM2-1.7B-Instruct-Q8_0.gguf -a smol17

llm chat -m smol17

Or at the other end of the scale, here's how to run the 138MB Q8 quantized 135M model:

llm gguf download-model https://huggingface.co/lmstudio-community/SmolLM2-135M-Instruct-GGUF/resolve/main/SmolLM2-135M-Instruct-Q8_0.gguf' -a smol135m

llm chat -m smol135m

The blog entry to accompany SmolLM2 should be coming soon, but in the meantime here's the entry from July introducing the first version: SmolLM - blazingly fast and remarkably powerful .

Perks of Being a Python Core Developer

(via)

Mariatta Wijaya provides a detailed breakdown of the exact capabilities and privileges that are granted to Python core developers - including commit access to the Python main, the ability to write or sponsor PEPs, the ability to vote on new core developers and for the steering council election and financial support from the PSF for travel expenses related to PyCon and core development sprints.

Not to be under-estimated is that you also gain respect:

Everyone’s always looking for ways to stand out in resumes, right? So do I. I’ve been an engineer for longer than I’ve been a core developer, and I do notice that having the extra title like open source maintainer and public speaker really make a difference. As a woman, as someone with foreign last name that nobody knows how to pronounce, as someone who looks foreign, and speaks in a foreign accent, having these extra “credentials” helped me be seen as more or less equal compared to other people.

The Fair Source Definition (via) Fair Source (fair.io) is the new-ish initiative from Chad Whitacre and Sentry aimed at providing an alternative licensing philosophy that provides additional protection for the business models of companies that release their code.

I like that they're establishing a new brand for this and making it clear that it's a separate concept from Open Source. Here's their definition:

Fair Source is an alternative to closed source, allowing you to safely share access to your core products. Fair Source Software (FSS):

- is publicly available to read;

- allows use, modification, and redistribution with minimal restrictions to protect the producer’s business model; and

- undergoes delayed Open Source publication (DOSP).

They link to the Delayed Open Source Publication research paper published by OSI in January. (I was frustrated that this is only available as a PDF, so I converted it to Markdown using Gemini 1.5 Pro so I could read it on my phone.)

The most interesting background I could find on Fair Source was this GitHub issues thread, started in May, where Chad and other contributors fleshed out the initial launch plan over the course of several months.

Django Commons. Django Commons is a really promising initiative started by Tim Schilling, aimed at the problem of keeping key Django community projects responsibly maintained on a long-term basis.

Django Commons is an organization dedicated to supporting the community's efforts to maintain packages. It seeks to improve the maintenance experience for all contributors; reducing the barrier to entry for new contributors and reducing overhead for existing maintainers.

I’ve stated recently that I’d love to see the Django Software Foundation take on this role - adopting projects and ensuring they are maintained long-term. Django Commons looks like it solves that exact problem, assuring the future of key projects beyond their initial creators.

So far the Commons has taken on responsibility for django-fsm-2, django-tasks-scheduler and, as-of this week, diango-typer.

Here’s Tim introducing the project back in May. Thoughtful governance has been baked in from the start:

Having multiple administrators makes the role more sustainable, lessens the impact of a person stepping away, and shortens response time for administrator requests. It’s important to me that the organization starts with multiple administrators so that collaboration and documentation are at the forefront of all decisions.