Posts in 2024

Filters: Year: 2024 × Sorted by date

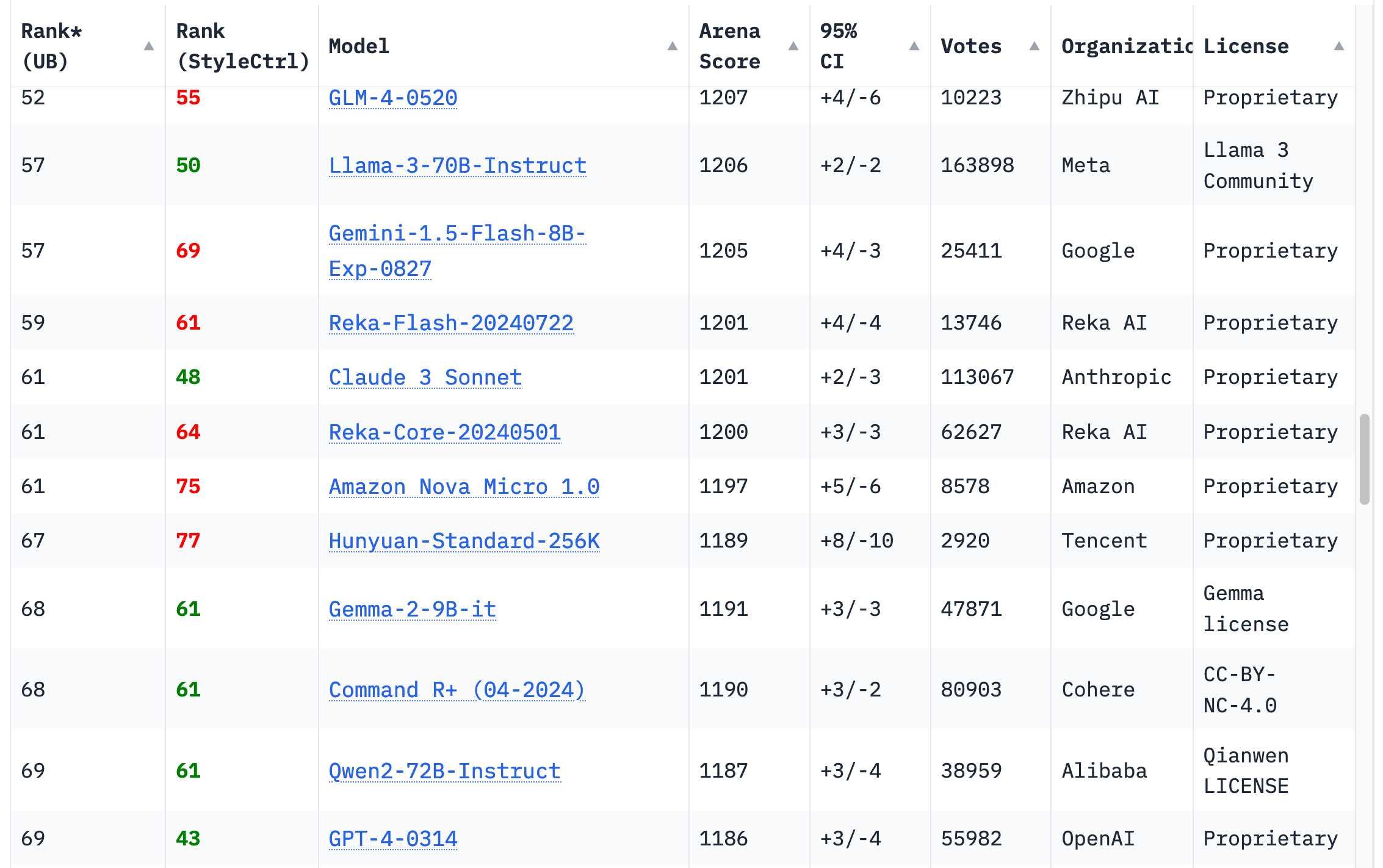

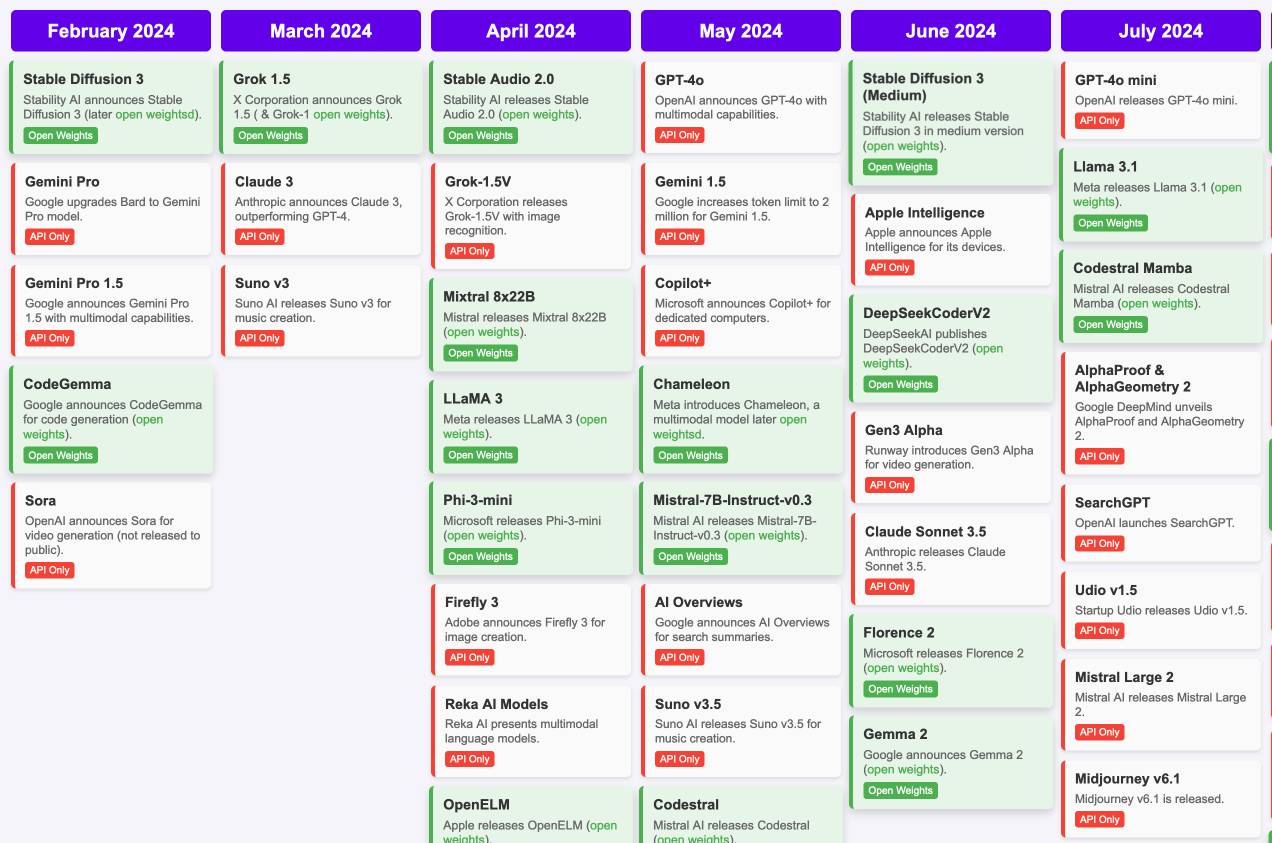

Timeline of AI model releases in 2024 (via) VB assembled this detailed timeline of every significant AI model release in 2024, for both API and open weight models.

I'd hoped to include something like this in my 2024 review - I'm glad I didn't bother, because VB's is way better than anything I had planned.

VB built it with assistance from DeepSeek v3, incorporating data from this Artificial Intelligence Timeline project by NHLOCAL. The source code (pleasingly simple HTML, CSS and a tiny bit of JavaScript) is on GitHub.

Things we learned about LLMs in 2024

A lot has happened in the world of Large Language Models over the course of 2024. Here’s a review of things we figured out about the field in the past twelve months, plus my attempt at identifying key themes and pivotal moments.

[... 7,490 words]Basically, a frontier model like OpenAI’s O1 is like a Ferrari SF-23. It’s an obvious triumph of engineering, designed to win races, and that’s why we talk about it. But it takes a special pit crew just to change the tires and you can’t buy one for yourself. In contrast, a BERT model is like a Honda Civic. It’s also an engineering triumph, but more subtly, since it is engineered to be affordable, fuel-efficient, reliable, and extremely useful. And that’s why they’re absolutely everywhere.

Severance on FanFare. I'm coordinating a rewatch of season one of Severance on MetaFilter Fanfare in preparation for season two (due to start on January 17th). I'm posting an episode every three days - we are up to episode 5 so far (excellently titled "The Grim Barbarics of Optics and Design").

Severance is a show that rewatches really well. There are so many delightful details that stand out once you know more about where the series is going.

There is no technical moat in this field, and so OpenAI is the epicenter of an investment bubble.

Thus, effectively, OpenAI is to this decade’s generative-AI revolution what Netscape was to the 1990s’ internet revolution. The revolution is real, but it’s ultimately going to be a commodity technology layer, not the foundation of a defensible proprietary moat. In 1995 investors mistakenly thought investing in Netscape was a good way to bet on the future of the open internet and the World Wide Web in particular. Investing in OpenAI today is a bit like that — generative AI technology has a bright future and is transforming the world, but it’s wishful thinking that the breakthrough client implementation is going to form the basis of a lasting industry titan.

What's holding back research isn't a lack of verbose, low-signal, high-noise papers. Using LLMs to automatically generate 100x more of those will not accelerate science, it will slow it down.

— François Chollet, 12th May 2024

How we think about Threads’ iOS performance (via) This article by Dave LaMacchia and Jason Patterson provides an incredibly deep insight into what effective performance engineering looks like for an app with 100s of millions of users.

I always like hearing about custom performance metrics with their own acronyms. Here we are introduced to %FIRE - the portion of people who experience a frustrating image-render experience (based on how long an image takes to load after the user scrolls it into the viewport), TTNC (time-to-network content) measuring time from app launch to fresh content visible in the feed and cPSR (creation-publish success rate) for how often a user manages to post content that they started to create.

This article introduced me to the concept of a boundary test, described like this:

A boundary test is one where we measure extreme ends of a boundary to learn what the effect is. In our case, we introduced a slight bit of latency when a small percentage of our users would navigate to a user profile, to the conversion view for a post, or to their activity feed.

This latency would allow us to extrapolate what the effect would be if we similarly improved how we delivered content to those views.

[...]

We learned that iOS users don’t tolerate a lot of latency. The more we added, the less often they would launch the app and the less time they would stay in it. With the smallest latency injection, the impact was small or negligible for some views, but the largest injections had negative effects across the board. People would read fewer posts, post less often themselves, and in general interact less with the app. Remember, we weren’t injecting latency into the core feed, either; just into the profile, permalink, and activity.

There's a whole lot more in there, including details of their custom internal performance logger (SLATE, the “Systemic LATEncy” logger) and several case studies of surprising performance improvements made with the assistance of their metrics and tools, plus some closing notes on how Swift concurrency is being adopted throughout Meta.

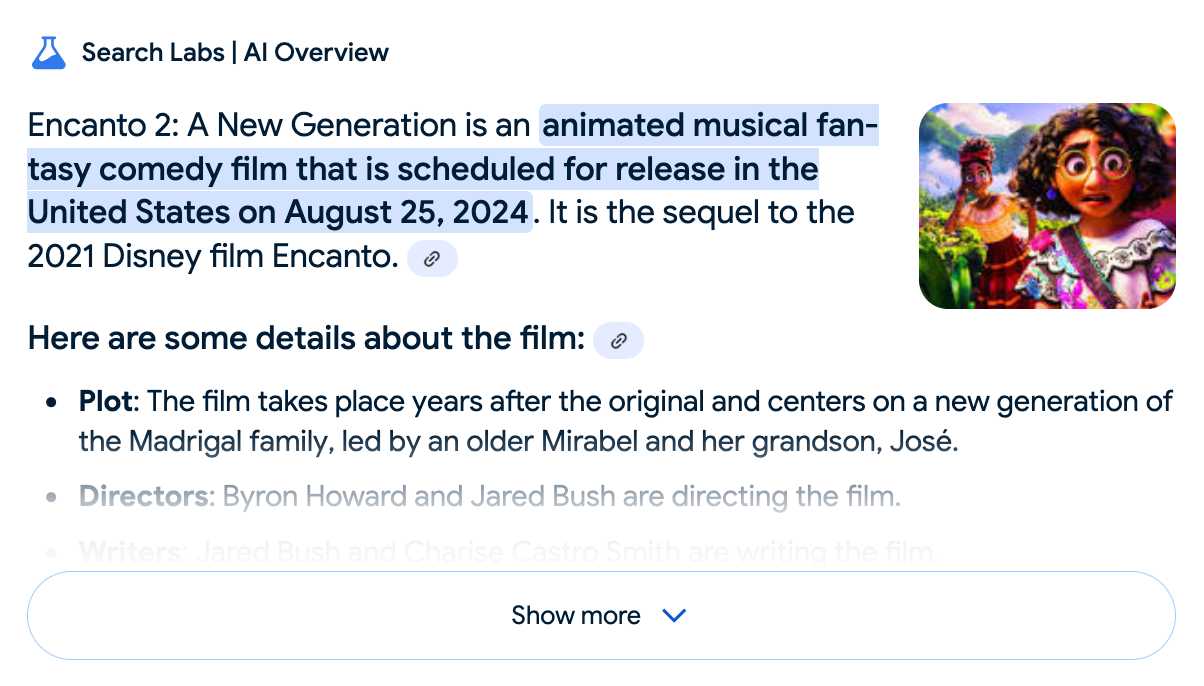

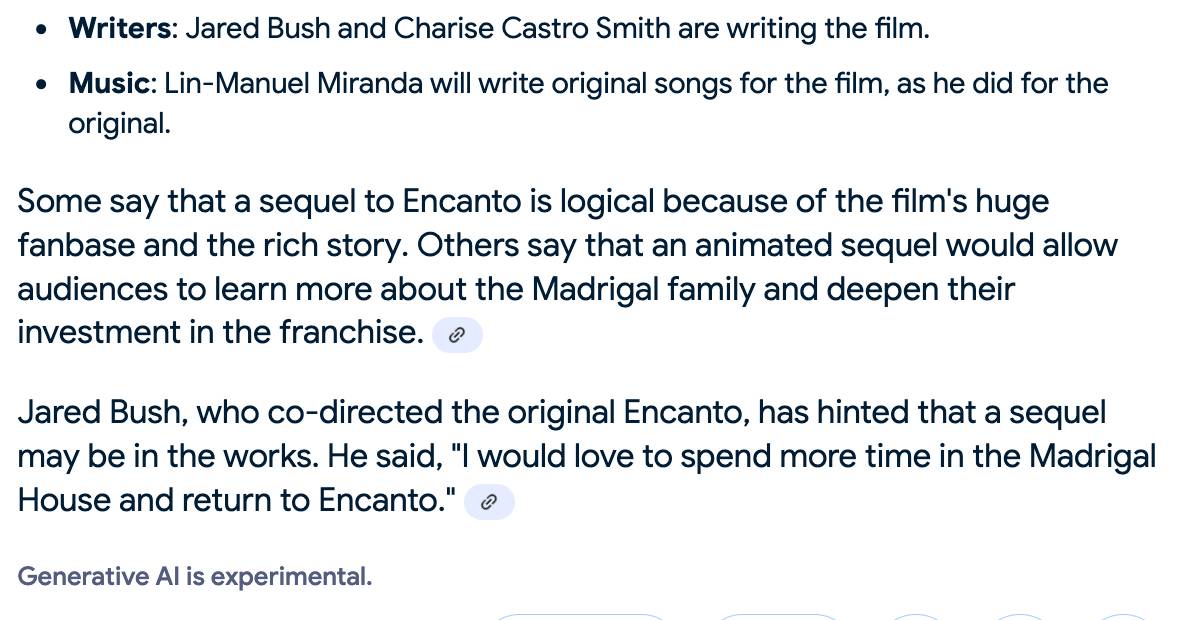

Google search hallucinates Encanto 2. Jason Schreier on Bluesky:

I was excited to tell my kids that there's a sequel to Encanto, only to scroll down and learn that Google's AI just completely made this up

I just replicated the same result by searching Google for encanto 2. Here's what the "AI overview" at the top of the page looked like:

Only when I clicked the "Show more" link did it become clear what had happened:

The link in that first snippet was to the Encanto 2: A New Generation page on Idea Wiki:

This is a fanon wiki, and just like fan-fiction wikis, this one has a variety of fan created ideas on here! These include potential sequels and new series that have yet to exist.

Other cited links included this article about Instagram fan art and Encanto's Sequel Chances Addressed by Disney Director, a very thin article built around a short quote from Encanto's director at D23 Brazil.

And that August 2024 release date (which the AI summary weirdly lists as "scheduled for release" despite that date being five months in the past)? It's from the Idea Wiki imaginary info box for the film.

This is a particularly clear example of how badly wrong AI summarization can go. LLMs are gullible: they believe what you tell them, and the web is full of misleading information - some of which is completely innocent.

Update: I've had some pushback over my use of the term "hallucination" here, on the basis that the LLM itself is doing what it's meant to: summarizing the RAG content that has been provided to it by the host system.

That's fair: this is not a classic LLM hallucination, where the LLM produces incorrect data purely from knowledge partially encoded in its weights.

I classify this as a bug in Google's larger LLM-powered AI overview system. That system should be able to take the existence of invalid data sources into account - given how common searches for non-existent movie sequels (or TV seasons) are, I would hope that AI overviews could classify such searches and take extra steps to avoid serving misleading answers.

So think this is a "hallucination" bug in the AI overview system itself: it's making statements about the world that are not true.

My Approach to Building Large Technical Projects (via) Mitchell Hashimoto wrote this piece about taking on large projects back in June 2023. The project he described in the post is a terminal emulator written in Zig called Ghostty which just reached its 1.0 release.

I've learned that when I break down my large tasks in chunks that result in seeing tangible forward progress, I tend to finish my work and retain my excitement throughout the project. People are all motivated and driven in different ways, so this may not work for you, but as a broad generalization I've not found an engineer who doesn't get excited by a good demo. And the goal is to always give yourself a good demo.

For backend-heavy projects the lack of an initial UI is a challenge here, so Mitchell advocates for early automated tests as a way to start exercising code and seeing progress right from the start. Don't let tests get in the way of demos though:

No matter what I'm working on, I try to build one or two demos per week intermixed with automated test feedback as explained in the previous section.

Building a demo also provides you with invaluable product feedback. You can quickly intuit whether something feels good, even if it isn't fully functional.

For more on the development of Ghostty see this talk Mitchell gave at Zig Showtime last year:

I want the terminal to be a modern platform for text application development, analogous to the browser being a modern platform for GUI application development (for better or worse).

Looking back, it's clear we overcomplicated things. While embeddings fundamentally changed how we can represent and compare content, they didn't need an entirely new infrastructure category. What we label as "vector databases" are, in reality, search engines with vector capabilities. The market is already correcting this categorization—vector search providers rapidly add traditional search features while established search engines incorporate vector search capabilities. This category convergence isn't surprising: building a good retrieval engine has always been about combining multiple retrieval and ranking strategies. Vector search is just another powerful tool in that toolbox, not a category of its own.

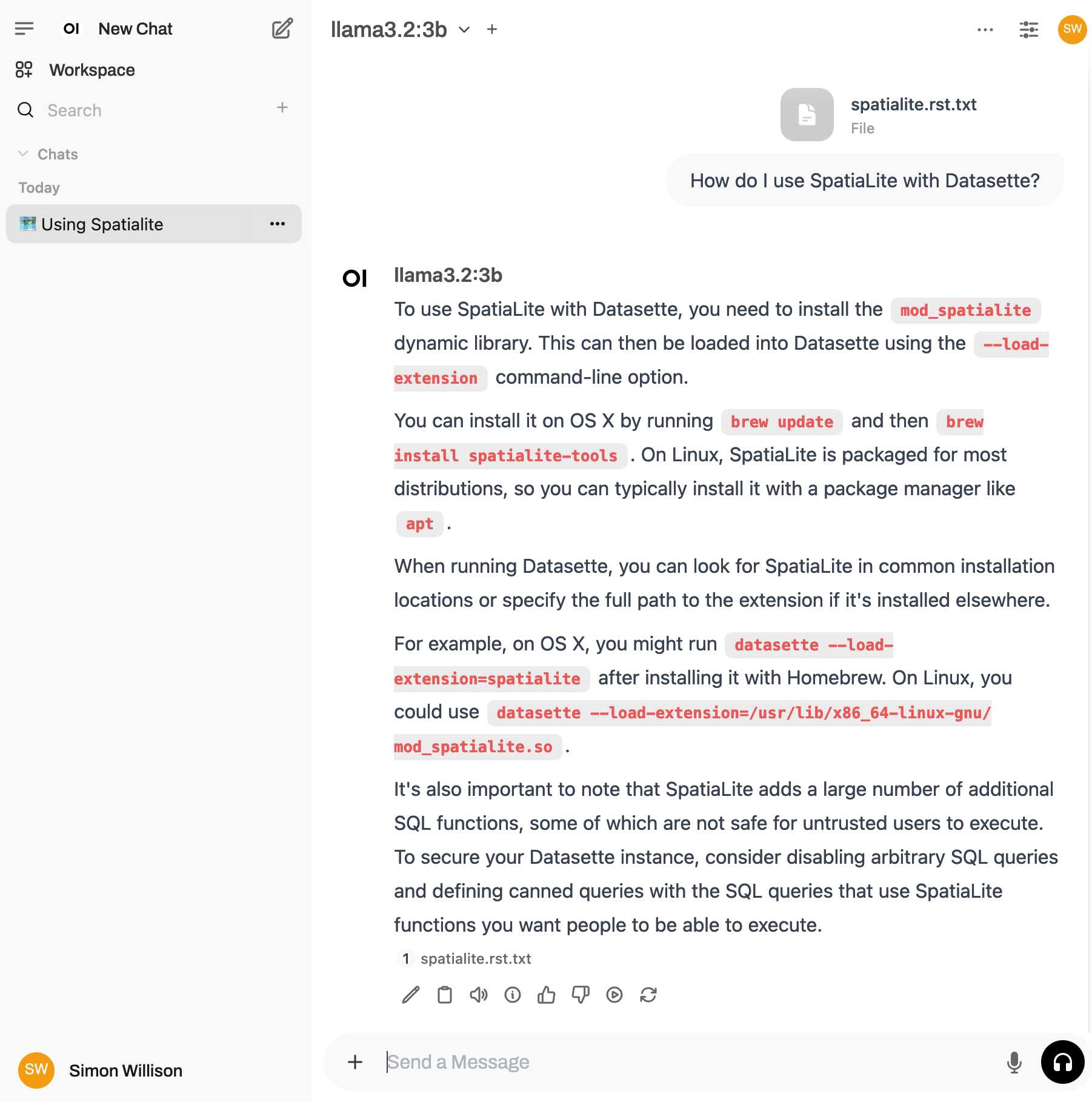

Open WebUI. I tried out this open source (MIT licensed, JavaScript and Python) localhost UI for accessing LLMs today for the first time. It's very nicely done.

I ran it with uvx like this:

uvx --python 3.11 open-webui serve

On first launch it installed a bunch of dependencies and then downloaded 903MB to ~/.cache/huggingface/hub/models--sentence-transformers--all-MiniLM-L6-v2 - a copy of the all-MiniLM-L6-v2 embedding model, presumably for its RAG feature.

It then presented me with a working Llama 3.2:3b chat interface, which surprised me because I hadn't spotted it downloading that model. It turns out that was because I have Ollama running on my laptop already (with several models, including Llama 3.2:3b, already installed) - and Open WebUI automatically detected Ollama and gave me access to a list of available models.

I found a "knowledge" section and added all of the Datasette documentation (by dropping in the .rst files from the docs) - and now I can type # in chat to search for a file, add that to the context and then ask questions about it directly.

I selected the spatialite.rst.txt file, prompted it with "How do I use SpatiaLite with Datasette" and got back this:

That's honestly a very solid answer, especially considering the Llama 3.2 3B model from Ollama is just a 1.9GB file! It's impressive how well that model can handle basic Q&A and summarization against text provided to it - it somehow has a 128,000 token context size.

Open WebUI has a lot of other tricks up its sleeve: it can talk to API models such as OpenAI directly, has optional integrations with web search and custom tools and logs every interaction to a SQLite database. It also comes with extensive documentation.

DeepSeek_V3.pdf (via) The DeepSeek v3 paper (and model card) are out, after yesterday's mysterious release of the undocumented model weights.

Plenty of interesting details in here. The model pre-trained on 14.8 trillion "high-quality and diverse tokens" (not otherwise documented).

Following this, we conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. During the post-training stage, we distill the reasoning capability from the DeepSeek-R1 series of models, and meanwhile carefully maintain the balance between model accuracy and generation length.

By far the most interesting detail though is how much the training cost. DeepSeek v3 trained on 2,788,000 H800 GPU hours at an estimated cost of $5,576,000. For comparison, Meta AI's Llama 3.1 405B (smaller than DeepSeek v3's 685B parameters) trained on 11x that - 30,840,000 GPU hours, also on 15 trillion tokens.

DeepSeek v3 benchmarks comparably to Claude 3.5 Sonnet, indicating that it's now possible to train a frontier-class model (at least for the 2024 version of the frontier) for less than $6 million!

For reference, this level of capability is supposed to require clusters of closer to 16K GPUs, the ones being brought up today are more around 100K GPUs. E.g. Llama 3 405B used 30.8M GPU-hours, while DeepSeek-V3 looks to be a stronger model at only 2.8M GPU-hours (~11X less compute). If the model also passes vibe checks (e.g. LLM arena rankings are ongoing, my few quick tests went well so far) it will be a highly impressive display of research and engineering under resource constraints.

DeepSeek also announced their API pricing. From February 8th onwards:

Input: $0.27/million tokens ($0.07/million tokens with cache hits)

Output: $1.10/million tokens

Claude 3.5 Sonnet is currently $3/million for input and $15/million for output, so if the models are indeed of equivalent quality this is a dramatic new twist in the ongoing LLM pricing wars.

Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used.

— EU Artificial Intelligence Act, Article 4: AI literacy

Cognitive load is what matters (via) Excellent living document (the underlying repo has 625 commits since being created in May 2023) maintained by Artem Zakirullin about minimizing the cognitive load needed to understand and maintain software.

This all rings very true to me. I judge the quality of a piece of code by how easy it is to change, and anything that causes me to take on more cognitive load - unraveling a class hierarchy, reading though dozens of tiny methods - reduces the quality of the code by that metric.

Lots of accumulated snippets of wisdom in this one.

Mantras like "methods should be shorter than 15 lines of code" or "classes should be small" turned out to be somewhat wrong.

deepseek-ai/DeepSeek-V3-Base (via) No model card or announcement yet, but this new model release from Chinese AI lab DeepSeek (an arm of Chinese hedge fund High-Flyer) looks very significant.

It's a huge model - 685B parameters, 687.9 GB on disk (TIL how to size a git-lfs repo). The architecture is a Mixture of Experts with 256 experts, using 8 per token.

For comparison, Meta AI's largest released model is their Llama 3.1 model with 405B parameters.

The new model is apparently available to some people via both chat.deepseek.com and the DeepSeek API as part of a staged rollout.

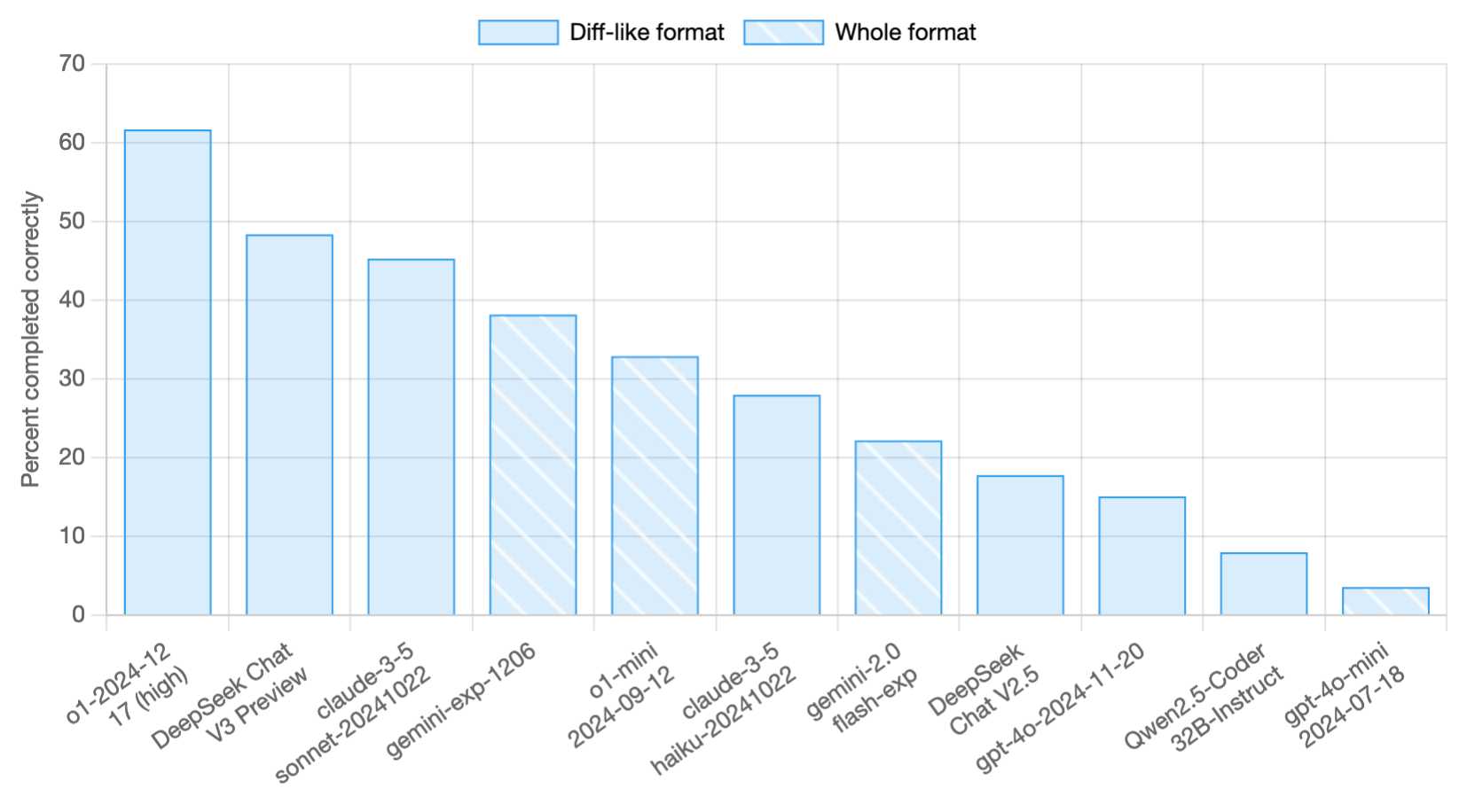

Paul Gauthier got API access and used it to update his new Aider Polyglot leaderboard - DeepSeek v3 preview scored 48.4%, putting it in second place behind o1-2024-12-17 (high) and in front of both claude-3-5-sonnet-20241022 and gemini-exp-1206!

I never know if I can believe models or not (the first time I asked "what model are you?" it claimed to be "based on OpenAI's GPT-4 architecture"), but I just got this result using LLM and the llm-deepseek plugin:

llm -m deepseek-chat 'what deepseek model are you?'

I'm DeepSeek-V3 created exclusively by DeepSeek. I'm an AI assistant, and I'm at your service! Feel free to ask me anything you'd like. I'll do my best to assist you.

Here's my initial experiment log.

Trying out QvQ—Qwen’s new visual reasoning model

I thought we were done for major model releases in 2024, but apparently not: Alibaba’s Qwen team just dropped the Apache 2.0 licensed Qwen licensed (the license changed) QvQ-72B-Preview, “an experimental research model focusing on enhancing visual reasoning capabilities”.

it's really hard not to be obsessed with these tools. It's like having a bespoke, free, (usually) accurate curiosity-satisfier in your pocket, no matter where you go - if you know how to ask questions, then suddenly the world is an audiobook

[On Reddit] we had to look up every single comment on the page to see if you had voted on it [...]

But with a bloom filter, we could very quickly look up all the comments and get back a list of all the ones you voted on (with a couple of false positives in there). Then we could go to the cache and see if your actual vote was there (and if it was an upvote or a downvote). It was only after a failed cache hit did we have to actually go to the database.

But that bloom filter saved us from doing sometimes 1000s of cache lookups.

Finally, a replacement for BERT: Introducing ModernBERT (via) BERT was an early language model released by Google in October 2018. Unlike modern LLMs it wasn't designed for generating text. BERT was trained for masked token prediction and was generally applied to problems like Named Entity Recognition or Sentiment Analysis. BERT also wasn't very useful on its own - most applications required you to fine-tune a model on top of it.

In exploring BERT I decided to try out dslim/distilbert-NER, a popular Named Entity Recognition model fine-tuned on top of DistilBERT (a smaller distilled version of the original BERT model). Here are my notes on running that using uv run.

Jeremy Howard's Answer.AI research group, LightOn and friends supported the development of ModernBERT, a brand new BERT-style model that applies many enhancements from the past six years of advances in this space.

While BERT was trained on 3.3 billion tokens, producing 110 million and 340 million parameter models, ModernBERT trained on 2 trillion tokens, resulting in 140 million and 395 million parameter models. The parameter count hasn't increased much because it's designed to run on lower-end hardware. It has a 8192 token context length, a significant improvement on BERT's 512.

I was able to run one of the demos from the announcement post using uv run like this (I'm not sure why I had to use numpy<2.0 but without that I got an error about cannot import name 'ComplexWarning' from 'numpy.core.numeric'):

uv run --with 'numpy<2.0' --with torch --with 'git+https://github.com/huggingface/transformers.git' pythonThen this Python:

import torch from transformers import pipeline from pprint import pprint pipe = pipeline( "fill-mask", model="answerdotai/ModernBERT-base", torch_dtype=torch.bfloat16, ) input_text = "He walked to the [MASK]." results = pipe(input_text) pprint(results)

Which downloaded 573MB to ~/.cache/huggingface/hub/models--answerdotai--ModernBERT-base and output:

[{'score': 0.11669921875,

'sequence': 'He walked to the door.',

'token': 3369,

'token_str': ' door'},

{'score': 0.037841796875,

'sequence': 'He walked to the office.',

'token': 3906,

'token_str': ' office'},

{'score': 0.0277099609375,

'sequence': 'He walked to the library.',

'token': 6335,

'token_str': ' library'},

{'score': 0.0216064453125,

'sequence': 'He walked to the gate.',

'token': 7394,

'token_str': ' gate'},

{'score': 0.020263671875,

'sequence': 'He walked to the window.',

'token': 3497,

'token_str': ' window'}]

I'm looking forward to trying out models that use ModernBERT as their base. The model release is accompanied by a paper (Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference) and new documentation for using it with the Transformers library.

There’s been a lot of strange reporting recently about how ‘scaling is hitting a wall’ – in a very narrow sense this is true in that larger models were getting less score improvement on challenging benchmarks than their predecessors, but in a larger sense this is false – techniques like those which power O3 means scaling is continuing (and if anything the curve has steepened), you just now need to account for scaling both within the training of the model and in the compute you spend on it once trained.

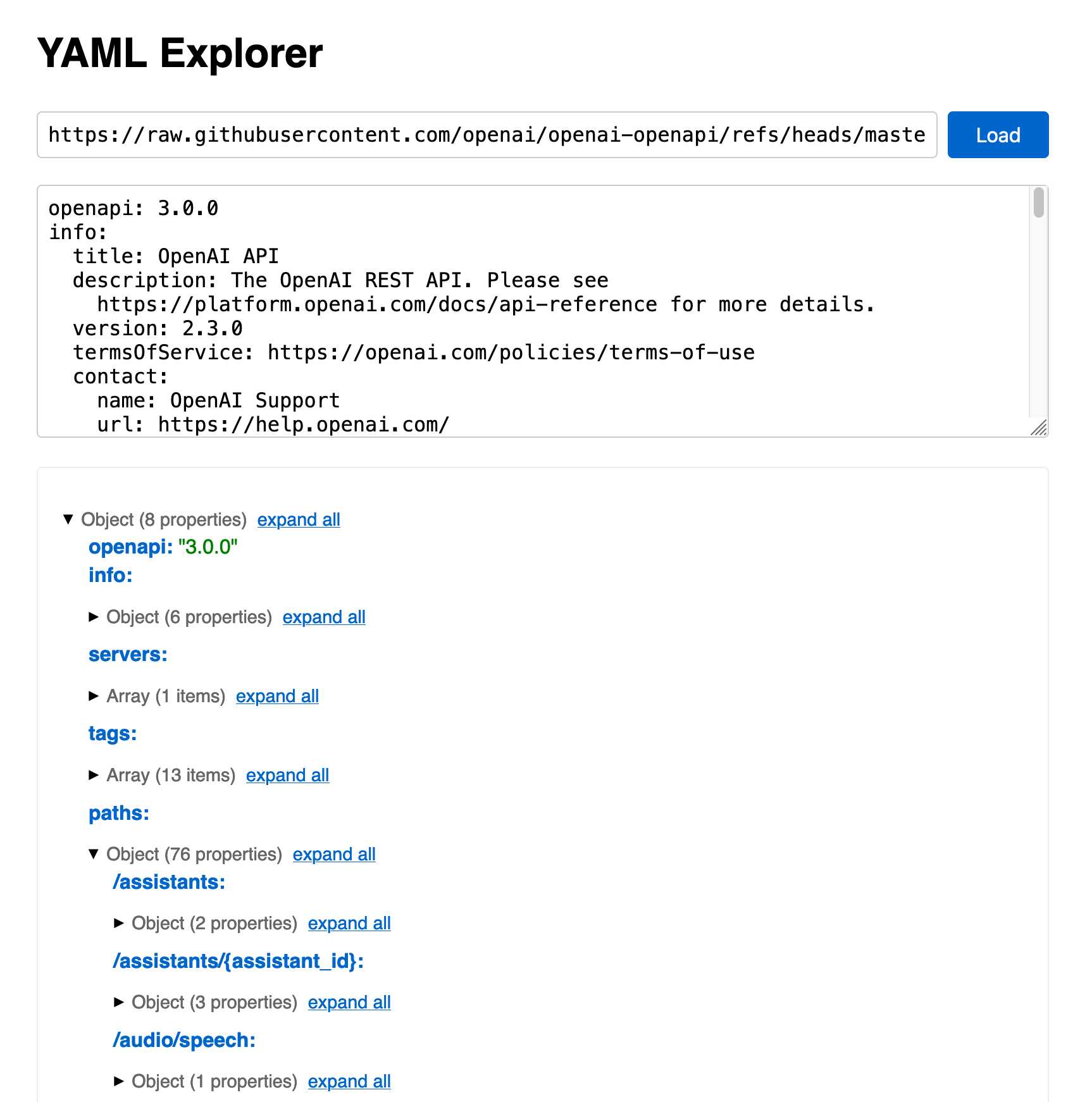

Whether you’re an AI-programming skeptic or an enthusiast, the reality is that many programming tasks are beyond the reach of today’s models. But many decent dev tools are actually quite easy for AI to build, and can help the rest of the programming go smoother. In general, these days any time I’m spending more than a minute staring at a JSON blob, I consider whether it’s worth building a custom UI for it.

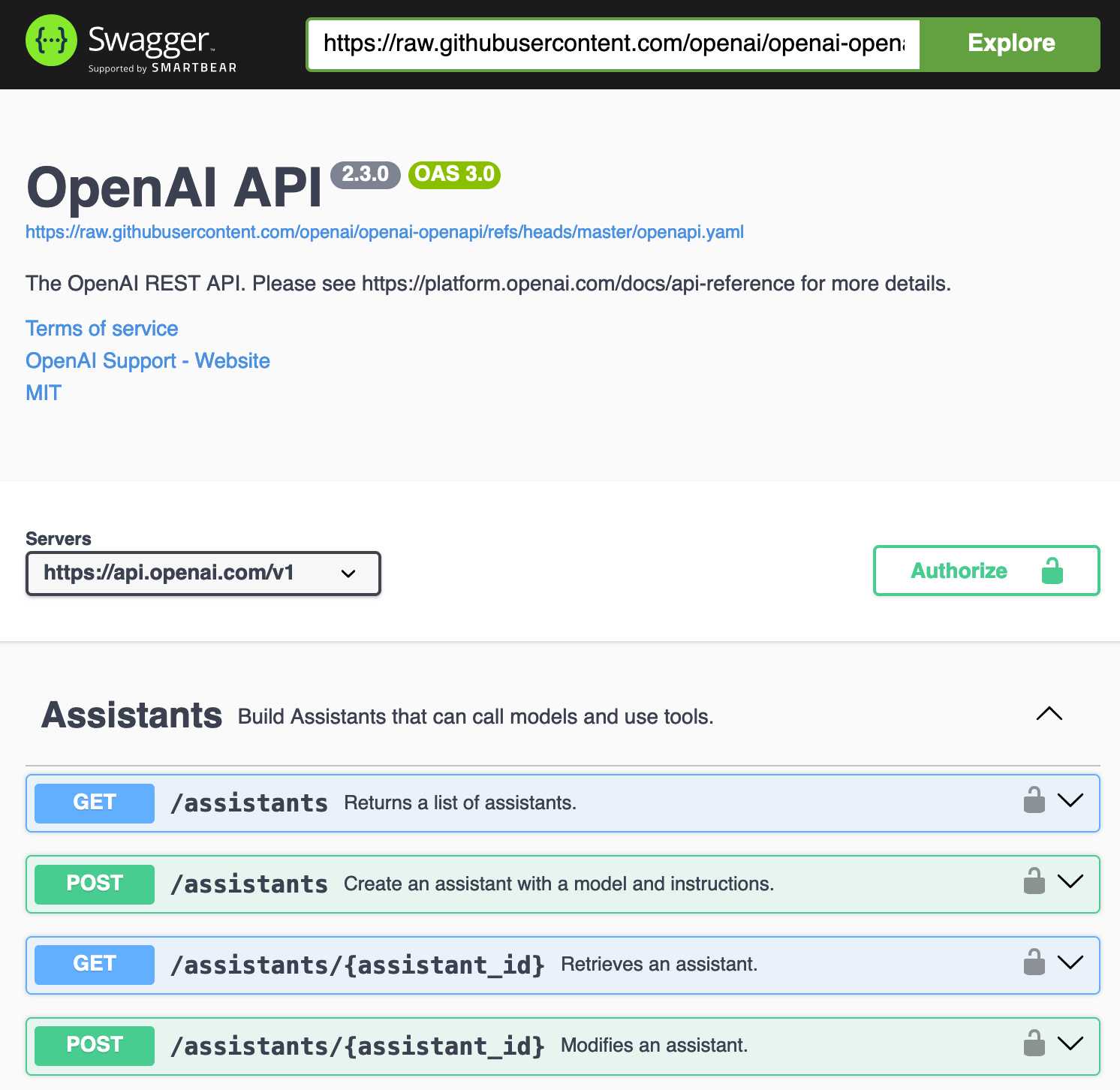

openai/openai-openapi. Seeing as the LLM world has semi-standardized on imitating OpenAI's API format for a whole host of different tools, it's useful to note that OpenAI themselves maintain a dedicated repository for a OpenAPI YAML representation of their current API.

(I get OpenAI and OpenAPI typo-confused all the time, so openai-openapi is a delightfully fiddly repository name.)

The openapi.yaml file itself is over 26,000 lines long, defining 76 API endpoints ("paths" in OpenAPI terminology) and 284 "schemas" for JSON that can be sent to and from those endpoints. A much more interesting view onto it is the commit history for that file, showing details of when each different API feature was released.

Browsing 26,000 lines of YAML isn't pleasant, so I got Claude to build me a rudimentary YAML expand/hide exploration tool. Here's that tool running against the OpenAI schema, loaded directly from GitHub via a CORS-enabled fetch() call: https://tools.simonwillison.net/yaml-explorer#.eyJ1c... - the code after that fragment is a base64-encoded JSON for the current state of the tool (mostly Claude's idea).

The tool is a little buggy - the expand-all option doesn't work quite how I want - but it's useful enough for the moment.

Update: It turns out the petstore.swagger.io demo has an (as far as I can tell) undocumented ?url= parameter which can load external YAML files, so here's openai-openapi/openapi.yaml in an OpenAPI explorer interface.

What happened to the world’s largest tube TV? (via) This YouTube video is an absolute delight.

Shank Mods describes the legendary Sony PVM-4300 - the largest CRT television ever made, released by Sony in 1989 and weighing over 400lb. CRT enthusiasts had long debated its very existence, given the lack of known specimens outside of Sony's old marketing materials. Then Shank tracked a working one down... on the second floor of a 300 year old Soba noodle restaurant in Osaka, Japan.

This story of how they raced to rescue the TV before the restaurant was demolished, given the immense difficulty of moving a 400lb television (and then shipping it to the USA), is a fantastic ride.

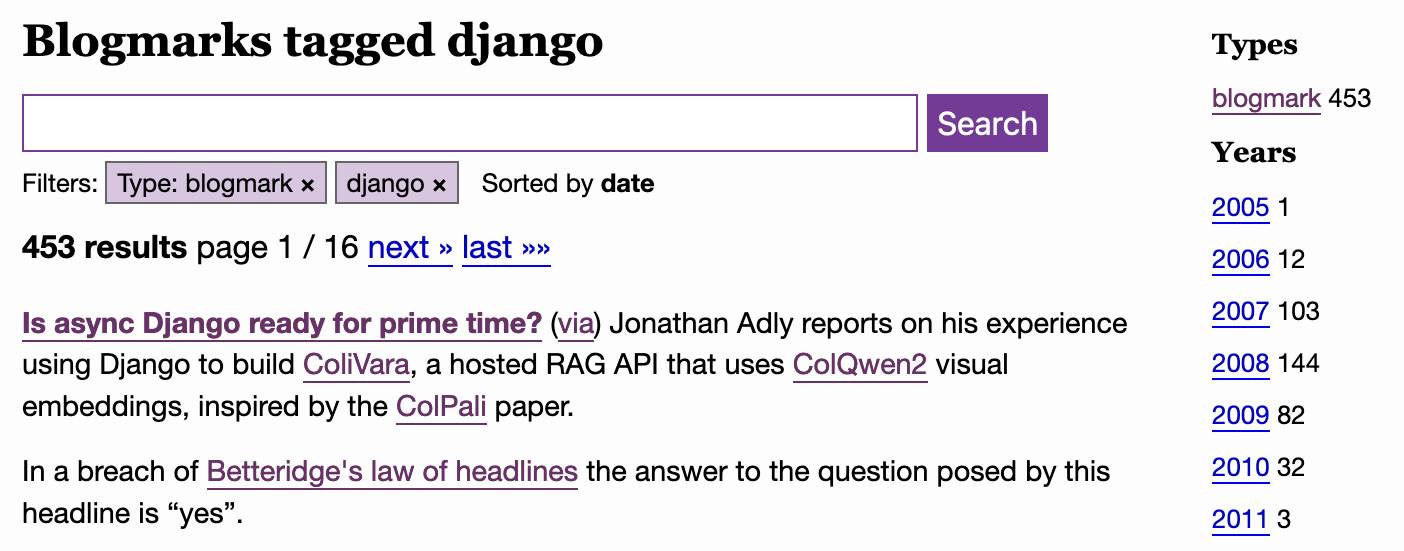

My approach to running a link blog

I started running a basic link blog on this domain back in November 2003—publishing links (which I called “blogmarks”) with a title, URL, short snippet of commentary and a “via” link where appropriate.

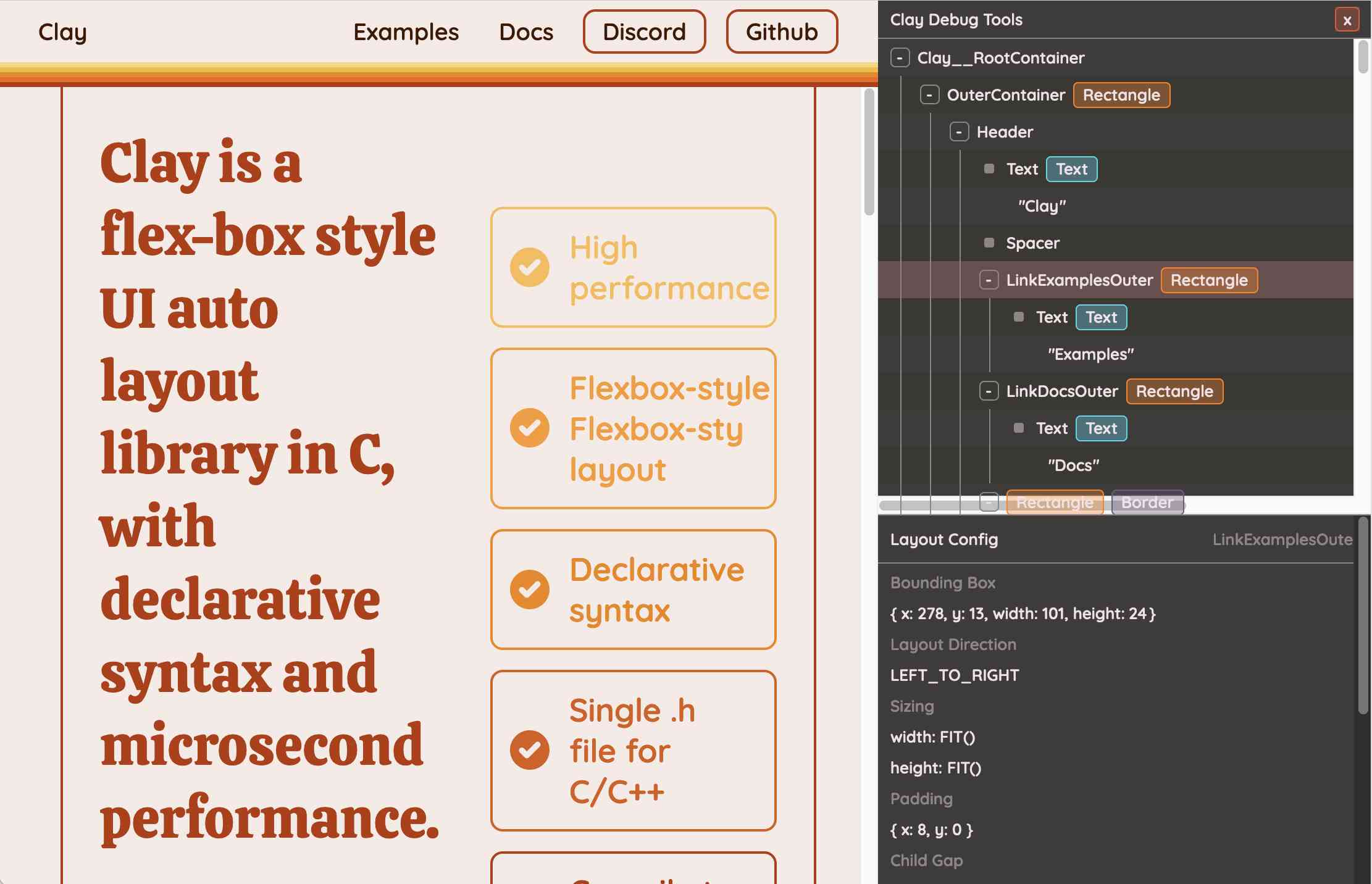

[... 1,510 words]Clay UI library (via) Fascinating project by Nic Barker, who describes Clay like this:

Clay is a flex-box style UI auto layout library in C, with declarative syntax and microsecond performance.

His intro video to the library is outstanding: I learned a ton about how UI layout works from this, and the animated visual explanations are clear, tasteful and really helped land the different concepts:

Clay is a C library delivered in a single ~2000 line clay.h dependency-free header file. It only handles layout calculations: if you want to render the result you need to add an additional rendering layer.

In a fascinating demo of the library, the Clay site itself is rendered using Clay C compiled to WebAssembly! You can even switch between the default HTML renderer and an alternative based on Canvas.

This isn't necessarily a great idea: because the layout is entirely handled using <div> elements positioned using transform: translate(0px, 70px) style CSS attempting to select text across multiple boxes behaves strangely, and it's not clear to me what the accessibility implications are.

Update: Matt Campbell:

The accessibility implications are as serious as you might guess. The links aren't properly labeled, there's no semantic markup such as headings, and since there's a div for every line, continuous reading with a screen reader is choppy, that is, it pauses at the end of every physical line.

It does make for a very compelling demo of what Clay is capable of though, especially when you resize your browser window and the page layout is recalculated in real-time via the Clay WebAssembly bridge.

You can hit "D" on the website and open up a custom Clay debugger showing the hierarchy of layout elements on the page:

This also means that the entire page is defined using C code! Given that, I find the code itself surprisingly readable

void DeclarativeSyntaxPageDesktop() {

CLAY(CLAY_ID("SyntaxPageDesktop"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW(), CLAY_SIZING_FIT({ .min = windowHeight - 50 }) }, .childAlignment = {0, CLAY_ALIGN_Y_CENTER}, .padding = {.x = 50} })) {

CLAY(CLAY_ID("SyntaxPage"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW(), CLAY_SIZING_GROW() }, .childAlignment = { 0, CLAY_ALIGN_Y_CENTER }, .padding = { 32, 32 }, .childGap = 32 }), CLAY_BORDER({ .left = { 2, COLOR_RED }, .right = { 2, COLOR_RED } })) {

CLAY(CLAY_ID("SyntaxPageLeftText"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_PERCENT(0.5) }, .layoutDirection = CLAY_TOP_TO_BOTTOM, .childGap = 8 })) {

CLAY_TEXT(CLAY_STRING("Declarative Syntax"), CLAY_TEXT_CONFIG({ .fontSize = 52, .fontId = FONT_ID_TITLE_56, .textColor = COLOR_RED }));

CLAY(CLAY_ID("SyntaxSpacer"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW({ .max = 16 }) } })) {}

CLAY_TEXT(CLAY_STRING("Flexible and readable declarative syntax with nested UI element hierarchies."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

CLAY_TEXT(CLAY_STRING("Mix elements with standard C code like loops, conditionals and functions."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

CLAY_TEXT(CLAY_STRING("Create your own library of re-usable components from UI primitives like text, images and rectangles."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

}

CLAY(CLAY_ID("SyntaxPageRightImage"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_PERCENT(0.50) }, .childAlignment = {.x = CLAY_ALIGN_X_CENTER} })) {

CLAY(CLAY_ID("SyntaxPageRightImageInner"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW({ .max = 568 }) } }), CLAY_IMAGE({ .sourceDimensions = {1136, 1194}, .sourceURL = CLAY_STRING("/clay/images/declarative.png") })) {}

}

}

}

}I'm not ready to ditch HTML and CSS for writing my web pages in C compiled to WebAssembly just yet, but as an exercise in understanding layout engines (and a potential tool for building non-web interfaces in the future) this is a really interesting project to dig into.

To clarify here: I don't think the web layout / WebAssembly thing is the key idea behind Clay at all - I think it's a neat demo of the library, but it's not what Clay is for. It's certainly an interesting way to provide a demo of a layout library!

Nic confirms:

You totally nailed it, the fact that you can compile to wasm and run in HTML stemmed entirely from a “wouldn’t it be cool if…” It was designed for my C projects first and foremost!

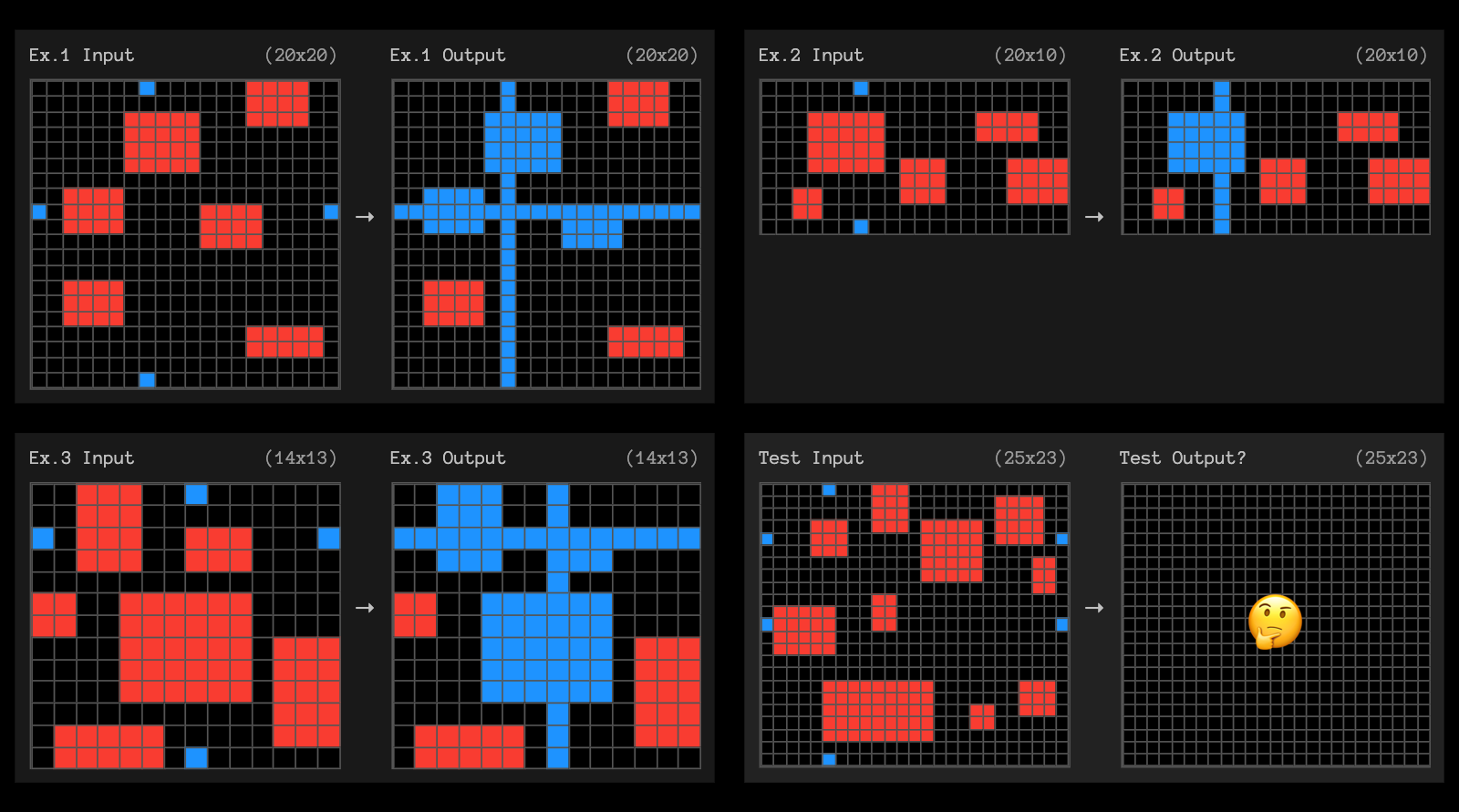

OpenAI o3 breakthrough high score on ARC-AGI-PUB. François Chollet is the co-founder of the ARC Prize and had advanced access to today's o3 results. His article here is the most insightful coverage I've seen of o3, going beyond just the benchmark results to talk about what this all means for the field in general.

One fascinating detail: it cost $6,677 to run o3 in "high efficiency" mode against the 400 public ARC-AGI puzzles for a score of 82.8%, and an undisclosed amount of money to run the "low efficiency" mode model to score 91.5%. A note says:

o3 high-compute costs not available as pricing and feature availability is still TBD. The amount of compute was roughly 172x the low-compute configuration.

So we can get a ballpark estimate here in that 172 * $6,677 = $1,148,444!

Here's how François explains the likely mechanisms behind o3, which reminds me of how a brute-force chess computer might work.

For now, we can only speculate about the exact specifics of how o3 works. But o3's core mechanism appears to be natural language program search and execution within token space – at test time, the model searches over the space of possible Chains of Thought (CoTs) describing the steps required to solve the task, in a fashion perhaps not too dissimilar to AlphaZero-style Monte-Carlo tree search. In the case of o3, the search is presumably guided by some kind of evaluator model. To note, Demis Hassabis hinted back in a June 2023 interview that DeepMind had been researching this very idea – this line of work has been a long time coming.

So while single-generation LLMs struggle with novelty, o3 overcomes this by generating and executing its own programs, where the program itself (the CoT) becomes the artifact of knowledge recombination. Although this is not the only viable approach to test-time knowledge recombination (you could also do test-time training, or search in latent space), it represents the current state-of-the-art as per these new ARC-AGI numbers.

Effectively, o3 represents a form of deep learning-guided program search. The model does test-time search over a space of "programs" (in this case, natural language programs – the space of CoTs that describe the steps to solve the task at hand), guided by a deep learning prior (the base LLM). The reason why solving a single ARC-AGI task can end up taking up tens of millions of tokens and cost thousands of dollars is because this search process has to explore an enormous number of paths through program space – including backtracking.

I'm not sure if o3 (and o1 and similar models) even qualifies as an LLM any more - there's clearly a whole lot more going on here than just next-token prediction.

On the question of if o3 should qualify as AGI (whatever that might mean):

Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don't think o3 is AGI yet. o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.

Furthermore, early data points suggest that the upcoming ARC-AGI-2 benchmark will still pose a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a smart human would still be able to score over 95% with no training).

The post finishes with examples of the puzzles that o3 didn't manage to solve, including this one which reassured me that I can still solve at least some puzzles that couldn't be handled with thousands of dollars of GPU compute!

OpenAI's new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

This is a surprising and important step-function increase in AI capabilities, showing novel task adaptation ability never seen before in the GPT-family models. For context, ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o. All intuition about AI capabilities will need to get updated for o3.

— François Chollet, Co-founder, ARC Prize

Live blog: the 12th day of OpenAI—“Early evals for OpenAI o3”

It’s the final day of OpenAI’s 12 Days of OpenAI launch series, and since I built a live blogging system a couple of months ago I’ve decided to roll it out again to provide live commentary during the half hour event, which kicks off at 10am San Francisco time.

[... 76 words]December in LLMs has been a lot

I had big plans for December: for one thing, I was hoping to get to an actual RC of Datasette 1.0, in preparation for a full release in January. Instead, I’ve found myself distracted by a constant barrage of new LLM releases.

[... 901 words]Building effective agents (via) My principal complaint about the term "agents" is that while it has many different potential definitions most of the people who use it seem to assume that everyone else shares and understands the definition that they have chosen to use.

This outstanding piece by Erik Schluntz and Barry Zhang at Anthropic bucks that trend from the start, providing a clear definition that they then use throughout.

They discuss "agentic systems" as a parent term, then define a distinction between "workflows" - systems where multiple LLMs are orchestrated together using pre-defined patterns - and "agents", where the LLMs "dynamically direct their own processes and tool usage". This second definition is later expanded with this delightfully clear description:

Agents begin their work with either a command from, or interactive discussion with, the human user. Once the task is clear, agents plan and operate independently, potentially returning to the human for further information or judgement. During execution, it's crucial for the agents to gain “ground truth” from the environment at each step (such as tool call results or code execution) to assess its progress. Agents can then pause for human feedback at checkpoints or when encountering blockers. The task often terminates upon completion, but it’s also common to include stopping conditions (such as a maximum number of iterations) to maintain control.

That's a definition I can live with!

They also introduce a term that I really like: the augmented LLM. This is an LLM with augmentations such as tools - I've seen people use the term "agents" just for this, which never felt right to me.

The rest of the article is the clearest practical guide to building systems that combine multiple LLM calls that I've seen anywhere.

Most of the focus is actually on workflows. They describe five different patterns for workflows in detail:

- Prompt chaining, e.g. generating a document and then translating it to a separate language as a second LLM call

- Routing, where an initial LLM call decides which model or call should be used next (sending easy tasks to Haiku and harder tasks to Sonnet, for example)

- Parallelization, where a task is broken up and run in parallel (e.g. image-to-text on multiple document pages at once) or processed by some kind of voting mechanism

- Orchestrator-workers, where a orchestrator triggers multiple LLM calls that are then synthesized together, for example running searches against multiple sources and combining the results

- Evaluator-optimizer, where one model checks the work of another in a loop

These patterns all make sense to me, and giving them clear names makes them easier to reason about.

When should you upgrade from basic prompting to workflows and then to full agents? The authors provide this sensible warning:

When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.

But assuming you do need to go beyond what can be achieved even with the aforementioned workflow patterns, their model for agents may be a useful fit:

Agents can be used for open-ended problems where it’s difficult or impossible to predict the required number of steps, and where you can’t hardcode a fixed path. The LLM will potentially operate for many turns, and you must have some level of trust in its decision-making. Agents' autonomy makes them ideal for scaling tasks in trusted environments.

The autonomous nature of agents means higher costs, and the potential for compounding errors. We recommend extensive testing in sandboxed environments, along with the appropriate guardrails

They also warn against investing in complex agent frameworks before you've exhausted your options using direct API access and simple code.

The article is accompanied by a brand new set of cookbook recipes illustrating all five of the workflow patterns. The Evaluator-Optimizer Workflow example is particularly fun, setting up a code generating prompt and an code reviewing evaluator prompt and having them loop until the evaluator is happy with the result.