March 2025

89 posts: 11 entries, 59 links, 18 quotes, 1 note

March 11, 2025

Languages that allow for a structurally similar codebase offer a significant boon for anyone making code changes because we can easily port changes between the two codebases. In contrast, languages that require fundamental rethinking of memory management, mutation, data structuring, polymorphism, laziness, etc., might be a better fit for a ground-up rewrite, but we're undertaking this more as a port that maintains the existing behavior and critical optimizations we've built into the language. Idiomatic Go strongly resembles the existing coding patterns of the TypeScript codebase, which makes this porting effort much more tractable.

— Ryan Cavanaugh, on why TypeScript chose to rewrite in Go, not Rust

OpenAI API: Responses vs. Chat Completions. OpenAI released a bunch of new API platform features this morning under the headline "New tools for building agents" (their somewhat mushy interpretation of "agents" here is "systems that independently accomplish tasks on behalf of users").

A particularly significant change is the introduction of a new Responses API, which is a slightly different shape from the Chat Completions API that they've offered for the past couple of years and which others in the industry have widely cloned as an ad-hoc standard.

In this guide they illustrate the differences, with a reassuring note that:

The Chat Completions API is an industry standard for building AI applications, and we intend to continue supporting this API indefinitely. We're introducing the Responses API to simplify workflows involving tool use, code execution, and state management. We believe this new API primitive will allow us to more effectively enhance the OpenAI platform into the future.

An API that is going away is the Assistants API, a perpetual beta first launched at OpenAI DevDay in 2023. The new Responses API solves effectively the same problems but better, and the Assistants API will be sunset "in the first half of 2026".

The best illustration I've seen of the differences between the two is this giant commit to the openai-python GitHub repository updating ALL of the example code in one go.

The most important feature of the Responses API (a feature it shares with the old Assistants API) is that it can manage conversation state on the server for you. An oddity of the Chat Completions API is that you need to maintain your own records of the current conversation, sending back full copies of it with each new prompt. You end up making API calls that look like this (from their examples):

{
    "model": "gpt-4o-mini",
    "messages": [
        {
            "role": "user",
            "content": "knock knock."
        },
        {
            "role": "assistant",
            "content": "Who's there?"
        },
        {
            "role": "user",
            "content": "Orange."
        }
    ]
}

These can get long and unwieldy - especially when attachments such as images are involved - but the real challenge is when you start integrating tools: in a conversation with tool use you'll need to maintain that full state and drop messages in that show the output of the tools the model requested. It's not a trivial thing to work with.

The new Responses API continues to support this list-of-messages format, but you also get the option to outsource that to OpenAI entirely: you can add a new "store": true property and then in subsequent messages include a "previous_response_id": response_id key to continue that conversation.

This feels a whole lot more natural than the Assistants API, which required you to think in terms of threads, messages and runs to achieve the same effect.
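The difference in state handling can be sketched as plain request bodies. This is a minimal sketch that only builds the JSON payloads and makes no network calls; the helper function names and the "resp_123" ID are hypothetical, and the field names follow the description above.

```python
import json


def chat_completions_payload(history, new_message, model="gpt-4o-mini"):
    """Chat Completions style: resend the entire conversation every time."""
    messages = history + [{"role": "user", "content": new_message}]
    return {"model": model, "messages": messages}


def responses_payload(new_message, previous_response_id=None, model="gpt-4o-mini"):
    """Responses style: the server keeps the state, the client refers to it by ID."""
    payload = {"model": model, "input": new_message, "store": True}
    if previous_response_id is not None:
        payload["previous_response_id"] = previous_response_id
    return payload


history = [
    {"role": "user", "content": "knock knock."},
    {"role": "assistant", "content": "Who's there?"},
]

# The client carries the full history in the first case, only an ID in the second.
chat_body = chat_completions_payload(history, "Orange.")
responses_body = responses_payload("Orange.", previous_response_id="resp_123")

print(json.dumps(chat_body, indent=2))
print(json.dumps(responses_body, indent=2))
```

The first payload grows with every turn, while the second stays the same size no matter how long the conversation gets.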

Also fun: the Responses API now supports HTML form encoding in addition to JSON:

curl https://api.openai.com/v1/responses \
  -u :$OPENAI_API_KEY \
  -d model="gpt-4o" \
  -d input="What is the capital of France?"

I found that in an excellent Twitter thread providing background on the design decisions in the new API from OpenAI's Atty Eleti. Here's a nitter link for people who don't have a Twitter account.

New built-in tools

A potentially more exciting change today is the introduction of default tools that you can request while using the new Responses API. There are three of these, all of which can be specified in the "tools": [...] array.

- {"type": "web_search_preview"} - the same search feature available through ChatGPT. The documentation doesn't clarify which underlying search engine is used - I initially assumed Bing, but the tool documentation links to this Overview of OpenAI Crawlers page so maybe it's entirely in-house now? Web search is priced at between $25 and $50 per thousand queries depending on if you're using GPT-4o or GPT-4o mini and the configurable size of your "search context".

- {"type": "file_search", "vector_store_ids": [...]} provides integration with the latest version of their file search vector store, mainly used for RAG. "Usage is priced at $2.50 per thousand queries and file storage at $0.10/GB/day, with the first GB free".

- {"type": "computer_use_preview", "display_width": 1024, "display_height": 768, "environment": "browser"} is the most surprising to me: it's tool access to the Computer-Using Agent system they built for their Operator product. This one is going to be a lot of fun to explore. The tool's documentation includes a warning about prompt injection risks. Though on closer inspection I think this may work more like Claude Computer Use, where you have to run the sandboxed environment yourself rather than outsource that difficult part to them.
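To get a feel for the quoted prices, here is a rough cost sketch. The prices come from the descriptions above; the function names and the "vs_placeholder" vector store ID are made up for illustration, and the real billing rules may have details this ignores.

```python
# Tool definitions in the shape described above; the vector store ID is a placeholder.
tools = [
    {"type": "web_search_preview"},
    {"type": "file_search", "vector_store_ids": ["vs_placeholder"]},
]


def web_search_cost_range(queries):
    """Return (low, high) dollars at $25 to $50 per thousand queries."""
    return queries / 1000 * 25, queries / 1000 * 50


def file_search_cost(queries, stored_gb, days):
    """Estimate dollars at $2.50 per thousand queries plus $0.10/GB/day storage."""
    query_cost = queries / 1000 * 2.50
    billable_gb = max(stored_gb - 1, 0)  # the first GB is free
    storage_cost = billable_gb * 0.10 * days
    return query_cost + storage_cost


low, high = web_search_cost_range(2000)
print(f"2,000 web searches: ${low:.2f} to ${high:.2f}")
print(f"10,000 file searches over 5GB for 30 days: ${file_search_cost(10_000, 5, 30):.2f}")
```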

I'm still thinking through how to expose these new features in my LLM tool, which is made harder by the fact that a number of plugins now rely on the default OpenAI implementation from core, which is currently built on top of Chat Completions. I've been worrying for a while about the impact of our entire industry building clones of one proprietary API that might change in the future. I guess now we get to see how that shakes out!

OpenAI Agents SDK. OpenAI's other big announcement today (see also) - a Python library (openai-agents) for building "agents", which is a replacement for their previous swarm research project.

In this project, an "agent" is a class that configures an LLM with a system prompt and access to specific tools.

An interesting concept in this one is handoffs, where one agent can choose to hand execution over to a different system-prompt-plus-tools agent, treating it almost like a tool itself. This code example illustrates the idea:

from agents import Agent, handoff

billing_agent = Agent(name="Billing agent")
refund_agent = Agent(name="Refund agent")

triage_agent = Agent(
    name="Triage agent",
    handoffs=[billing_agent, handoff(refund_agent)],
)

The library also includes guardrails - classes you can add that attempt to filter user input to make sure it fits expected criteria. Bits of this look suspiciously like trying to solve AI security problems with more AI to me.

March 12, 2025

Notes on Google’s Gemma 3

Google’s Gemma team released an impressive new model today (under their not-open-source Gemma license). Gemma 3 comes in four sizes—1B, 4B, 12B, and 27B—and while 1B is text-only the larger three models are all multi-modal for vision:

[... 804 words]

March 13, 2025

Smoke test your Django admin site.

Justin Duke demonstrates a neat pattern for running simple tests against your internal Django admin site: introspect every admin route via django.urls.get_resolver() and loop through them with @pytest.mark.parametrize to check they all return a 200 HTTP status code.

This catches simple mistakes with the admin configuration that trigger exceptions that might otherwise go undetected.
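The route-collection step can be sketched without Django installed. This is not Justin's code: it is a minimal sketch that walks objects shaped like Django's URLResolver (which has url_patterns) and URLPattern, using stand-in objects so it runs on its own. The function names and the rule of skipping routes that need arguments are assumptions.

```python
from types import SimpleNamespace


def iter_routes(patterns, prefix=""):
    """Yield full route strings, recursing into included URL resolvers."""
    for entry in patterns:
        route = prefix + str(entry.pattern)
        nested = getattr(entry, "url_patterns", None)
        if nested is not None:
            yield from iter_routes(nested, route)
        else:
            yield route


def smoke_test_urls(patterns, prefix="admin/"):
    """Keep admin routes that need no URL arguments, so a plain GET can hit them."""
    return [
        "/" + route
        for route in iter_routes(patterns)
        if route.startswith(prefix) and "<" not in route
    ]


# Stand-ins for the real thing (assumption: in a project you would pass
# django.urls.get_resolver().url_patterns here instead).
fake_patterns = [
    SimpleNamespace(
        pattern="admin/",
        url_patterns=[
            SimpleNamespace(pattern=""),
            SimpleNamespace(pattern="blog/entry/"),
            SimpleNamespace(pattern="blog/entry/<path:object_id>/change/"),
        ],
    ),
    SimpleNamespace(pattern="about/"),
]

admin_urls = smoke_test_urls(fake_patterns)
print(admin_urls)
```

In a real test suite that list could feed @pytest.mark.parametrize("url", admin_urls), with the test body using the pytest-django admin_client fixture to assert that admin_client.get(url).status_code == 200.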

I rarely write automated tests against my own admin sites and often feel guilty about it. I wrote some notes on testing it with pytest-django fixtures a few years ago.

Introducing Command A: Max performance, minimal compute (via) New LLM release from Cohere. It's interesting to see which aspects of the model they're highlighting, as an indicator of what their commercial customers value the most (highlights mine):

Command A delivers maximum performance with minimal hardware costs when compared to leading proprietary and open-weights models, such as GPT-4o and DeepSeek-V3. For private deployments, Command A excels on business-critical agentic and multilingual tasks, while being deployable on just two GPUs, compared to other models that typically require as many as 32. [...]

With a serving footprint of just two A100s or H100s, it requires far less compute than other comparable models on the market. This is especially important for private deployments. [...]

Its 256k context length (2x most leading models) can handle much longer enterprise documents. Other key features include Cohere’s advanced retrieval-augmented generation (RAG) with verifiable citations, agentic tool use, enterprise-grade security, and strong multilingual performance.

It's open weights but very much not open source - the license is Creative Commons Attribution Non-Commercial and also requires adhering to their Acceptable Use Policy.

Cohere offer it for commercial use via "contact us" pricing or through their API. I released llm-command-r 0.3 adding support for this new model, plus their smaller and faster Command R7B (released in December) and support for structured outputs via LLM schemas.

(I found a weird bug with their schema support where schemas that end in an integer output a seemingly limitless integer - in my experiments it affected Command R and the new Command A but not Command R7B.)

Anthropic API: Text editor tool (via) Anthropic released a new "tool" today for text editing. It looks similar to the tool they offered as part of their computer use beta API, and to the trick they've been using for a while in both Claude Artifacts and the new Claude Code to more efficiently edit files there.

The new tool requires you to implement several commands:

- view - to view a specified file - either the whole thing or a specified range

- str_replace - execute an exact string match replacement on a file

- create - create a new file with the specified contents

- insert - insert new text after a specified line number

- undo_edit - undo the last edit made to a specific file

Providing implementations of these commands is left as an exercise for the developer.

Once implemented, you can have conversations with Claude where it knows that it can request the content of existing files, make modifications to them and create new ones.
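For a sense of how much assembly is involved, here is a minimal in-memory sketch of the five commands. It is not Anthropic's implementation: the class name, the method signatures, the decision to reject ambiguous str_replace matches and the undo stack are all assumptions, and a real version would read and write actual files and wire these methods up to the tool calls Claude makes.

```python
class TextEditor:
    """Toy in-memory handler for view, str_replace, create, insert and undo_edit."""

    def __init__(self):
        self.files = {}    # path -> current text
        self.history = {}  # path -> stack of earlier versions, used by undo_edit

    def _save(self, path, new_text):
        # Remember the previous version (None if the file did not exist yet).
        self.history.setdefault(path, []).append(self.files.get(path))
        self.files[path] = new_text

    def view(self, path, view_range=None):
        lines = self.files[path].splitlines()
        if view_range is not None:
            start, end = view_range  # 1-indexed, inclusive
            lines = lines[start - 1:end]
        return "\n".join(lines)

    def create(self, path, file_text):
        self._save(path, file_text)

    def str_replace(self, path, old_str, new_str):
        text = self.files[path]
        if text.count(old_str) != 1:
            raise ValueError("old_str must match exactly once")
        self._save(path, text.replace(old_str, new_str))

    def insert(self, path, insert_line, new_str):
        # Insert after the given line number; 0 means the top of the file.
        lines = self.files[path].splitlines()
        lines[insert_line:insert_line] = new_str.splitlines()
        self._save(path, "\n".join(lines))

    def undo_edit(self, path):
        previous = self.history[path].pop()
        if previous is None:
            del self.files[path]
        else:
            self.files[path] = previous


editor = TextEditor()
editor.create("notes.txt", "alpha\nbeta\ngamma")
editor.str_replace("notes.txt", "beta", "BETA")
editor.insert("notes.txt", 1, "inserted")
print(editor.view("notes.txt", view_range=(1, 2)))
editor.undo_edit("notes.txt")
print(editor.view("notes.txt"))
```

Splitting and rejoining on newlines drops any trailing newline, which is fine for a sketch but would need care in a real editor.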

There's quite a lot of assembly required to start using this. I tried vibe coding an implementation by dumping a copy of the documentation into Claude itself but I didn't get as far as a working program - it looks like I'd need to spend a bunch more time on that to get something to work, so my effort is currently abandoned.

This was introduced in a post on Token-saving updates on the Anthropic API, which also included a simplification of their token caching API and a new Token-efficient tool use (beta) where sending a token-efficient-tools-2025-02-19 beta header to Claude 3.7 Sonnet can save 14-70% of the tokens needed to define tools and schemas.

Today we release OLMo 2 32B, the most capable and largest model in the OLMo 2 family, scaling up the OLMo 2 training recipe used for our 7B and 13B models released in November. It is trained up to 6T tokens and post-trained using Tulu 3.1. OLMo 2 32B is the first fully-open model (all data, code, weights, and details are freely available) to outperform GPT3.5-Turbo and GPT-4o mini on a suite of popular, multi-skill academic benchmarks.

— Ai2, OLMo 2 32B release announcement

Xata Agent (via) Xata are a hosted PostgreSQL company who also develop the open source pgroll and pgstream schema migration tools.

Their new "Agent" tool is a system that helps monitor and optimize a PostgreSQL server using prompts to LLMs.

Any time I see a new tool like this I go hunting for the prompts. It looks like the main system prompts for orchestrating the tool live here - here's a sample:

Provide clear, concise, and accurate responses to questions. Use the provided tools to get context from the PostgreSQL database to answer questions. When asked why a query is slow, call the explainQuery tool and also take into account the table sizes. During the initial assessment use the getTablesAndInstanceInfo, getPerfromanceAndVacuumSettings, and getPostgresExtensions tools. When asked to run a playbook, use the getPlaybook tool to get the playbook contents. Then use the contents of the playbook as an action plan. Execute the plan step by step.

The really interesting thing is those playbooks, each of which is implemented as a prompt in the lib/tools/playbooks.ts file. There are six of these so far:

- SLOW_QUERIES_PLAYBOOK

- GENERAL_MONITORING_PLAYBOOK

- TUNING_PLAYBOOK

- INVESTIGATE_HIGH_CPU_USAGE_PLAYBOOK

- INVESTIGATE_HIGH_CONNECTION_COUNT_PLAYBOOK

- INVESTIGATE_LOW_MEMORY_PLAYBOOK

Here's the full text of INVESTIGATE_LOW_MEMORY_PLAYBOOK:

Objective: To investigate and resolve low freeable memory in the PostgreSQL database.

Step 1: Get the freeable memory metric using the tool getInstanceMetric.

Step 3: Get the instance details and compare the freeable memory with the amount of memory available.

Step 4: Check the logs for any indications of memory pressure or out of memory errors. If there are, make sure to report that to the user. Also this would mean that the situation is critical.

Step 4: Check active queries. Use the tool getConnectionsGroups to get the currently active queries. If a user or application stands out for doing a lot of work, record that to indicate to the user.

Step 5: Check the work_mem setting and shared_buffers setting. Think if it would make sense to reduce these in order to free up memory.

Step 6: If there is no clear root cause for using memory, suggest to the user to scale up the Postgres instance. Recommend a particular instance class.

This is the first time I've seen prompts arranged in a "playbooks" pattern like this. What a weird and interesting way to write software!

One of the most essential practices for maintaining the long-term quality of computer code is to write automated tests that ensure the program continues to act as expected, even when other people (including your future self) muck with it.

Adding AI-generated descriptions to my tools collection

The /colophon page on my tools.simonwillison.net site lists all 78 of the HTML+JavaScript tools I’ve built (with AI assistance) along with their commit histories, including links to prompting transcripts. I wrote about how I built that colophon the other day. It now also includes a description of each tool, generated using Claude 3.7 Sonnet.

[... 741 words]

March 14, 2025

[...] in 2013, I did not understand that the things I said had meaning. I hate talking about this because it makes me seem more important than I am, but it’s also important to acknowledge. I saw myself at the time as just Steve, some random guy. If I say something on the internet, it’s like I’m talking to a friend in real life, my words are just random words and I’m human and whatever. It is what it is.

But at that time in my life, that wasn’t actually the case. I was on the Rails team, I was speaking at conferences, and people were reading my blog and tweets. I was an “influencer,” for better or worse. But I hadn’t really internalized that change in my life yet. And so I didn’t really understand that if I criticized something, it was something thousands of people would see.

— Steve Klabnik, Choosing Languages

Merklemap runs a 16TB PostgreSQL. Interesting thread on Hacker News where Pierre Barre describes the database architecture behind Merklemap, a certificate transparency search engine.

I run a 100 billion+ rows Postgres database [0], that is around 16TB, it's pretty painless!

There are a few tricks that make it run well (PostgreSQL compiled with a non-standard block size, ZFS, careful VACUUM planning). But nothing too out of the ordinary.

ATM, I insert about 150,000 rows a second, run 40,000 transactions a second, and read 4 million rows a second.

[...]

It's self-hosted on bare metal, with standby replication, normal settings, nothing "weird" there.

6 NVMe drives in raidz-1, 1024GB of memory, a 96 core AMD EPYC cpu.

[...]

About 28K euros of hardware per replica [one-time cost] IIRC + [ongoing] colo costs.

Something Is Rotten in the State of Cupertino. John Gruber's blazing takedown of Apple's failure to ship many of the key Apple Intelligence features they've been actively promoting for the past twelve months.

The fiasco here is not that Apple is late on AI. It's also not that they had to announce an embarrassing delay on promised features last week. Those are problems, not fiascos, and problems happen. They're inevitable. [...] The fiasco is that Apple pitched a story that wasn't true, one that some people within the company surely understood wasn't true, and they set a course based on that.

John divides the Apple Intelligence features into two groups: the ones that were demonstrated to members of the press (including himself) at various events over the past year, and things like "personalized Siri" that were only ever shown as concept videos. The ones that were demonstrated have all shipped. The concept video features are indefinitely delayed.

How ProPublica Uses AI Responsibly in Its Investigations. Charles Ornstein describes how ProPublica used an LLM to help analyze data for their recent story A Study of Mint Plants. A Device to Stop Bleeding. This Is the Scientific Research Ted Cruz Calls “Woke.” by Agnel Philip and Lisa Song.

They ran ~3,400 grant descriptions through a prompt that included the following:

As an investigative journalist, I am looking for the following information

--

woke_description: A short description (at maximum a paragraph) on why this grant is being singled out for promoting "woke" ideology, Diversity, Equity, and Inclusion (DEI) or advanced neo-Marxist class warfare propaganda. Leave this blank if it's unclear.

why_flagged: Look at the "STATUS", "SOCIAL JUSTICE CATEGORY", "RACE CATEGORY", "GENDER CATEGORY" and "ENVIRONMENTAL JUSTICE CATEGORY" fields. If it's filled out, it means that the author of this document believed the grant was promoting DEI ideology in that way. Analyze the "AWARD DESCRIPTIONS" field and see if you can figure out why the author may have flagged it in this way. Write it in a way that is thorough and easy to understand with only one description per type and award.

citation_for_flag: Extract a very concise text quoting the passage of "AWARDS DESCRIPTIONS" that backs up the "why_flagged" data.

This was only the first step in the analysis of the data:

Of course, members of our staff reviewed and confirmed every detail before we published our story, and we called all the named people and agencies seeking comment, which remains a must-do even in the world of AI.

I think journalists are particularly well positioned to take advantage of LLMs in this way, because a big part of journalism is about deriving the truth from multiple unreliable sources of information. Journalists are deeply familiar with fact-checking, which is a critical skill if you're going to report with the assistance of these powerful but unreliable models.

Agnel Philip:

The tech holds a ton of promise in lead generation and pointing us in the right direction. But in my experience, it still needs a lot of human supervision and vetting. If used correctly, it can both really speed up the process of understanding large sets of information, and if you’re creative with your prompts and critically read the output, it can help uncover things that you may not have thought of.

Apple’s Siri Chief Calls AI Delays Ugly and Embarrassing, Promises Fixes (via) Mark Gurman reports on some leaked details from internal Apple meetings concerning the delays in shipping personalized Siri. This note in particular stood out to me:

Walker said the decision to delay the features was made because of quality issues and that the company has found the technology only works properly up to two-thirds to 80% of the time. He said the group “can make more progress to get those percentages up, so that users get something they can really count on.” [...]

But Apple wants to maintain a high bar and only deliver the features when they’re polished, he said. “These are not quite ready to go to the general public, even though our competitors might have launched them in this state or worse.”

I imagine it's a lot harder to get reliable results out of small, local LLMs that run on an iPhone. Features that fail 1/3 to 1/5 of the time are unacceptable for a consumer product like this.

TIL: Styling an HTML dialog modal to take the full height of the viewport.

I spent some time today trying to figure out how to have a modal <dialog> element present as a full height side panel that animates in from the side. The full height bit was hard, until Natalie helped me figure out that browsers apply a default max-height: calc(100% - 6px - 2em); rule which needs to be overridden.

Also included: some spelunking through the HTML spec to figure out where that calc() expression was first introduced. The answer was November 2020.

March 15, 2025

Some people today are discouraging others from learning programming on the grounds AI will automate it. This advice will be seen as some of the worst career advice ever given. I disagree with the Turing Award and Nobel prize winner who wrote, “It is far more likely that the programming occupation will become extinct [...] than that it will become all-powerful. More and more, computers will program themselves.” Statements discouraging people from learning to code are harmful!

In the 1960s, when programming moved from punchcards (where a programmer had to laboriously make holes in physical cards to write code character by character) to keyboards with terminals, programming became easier. And that made it a better time than before to begin programming. Yet it was in this era that Nobel laureate Herb Simon wrote the words quoted in the first paragraph. Today’s arguments not to learn to code continue to echo his comment.

As coding becomes easier, more people should code, not fewer!

March 16, 2025

mlx-community/OLMo-2-0325-32B-Instruct-4bit (via) OLMo 2 32B claims to be "the first fully-open model (all data, code, weights, and details are freely available) to outperform GPT3.5-Turbo and GPT-4o mini". Thanks to the MLX project here's a recipe that worked for me to run it on my Mac, via my llm-mlx plugin.

To install the model:

llm install llm-mlx

llm mlx download-model mlx-community/OLMo-2-0325-32B-Instruct-4bit

That downloads 17GB to ~/.cache/huggingface/hub/models--mlx-community--OLMo-2-0325-32B-Instruct-4bit.

To start an interactive chat with OLMo 2:

llm chat -m mlx-community/OLMo-2-0325-32B-Instruct-4bit

Or to run a prompt:

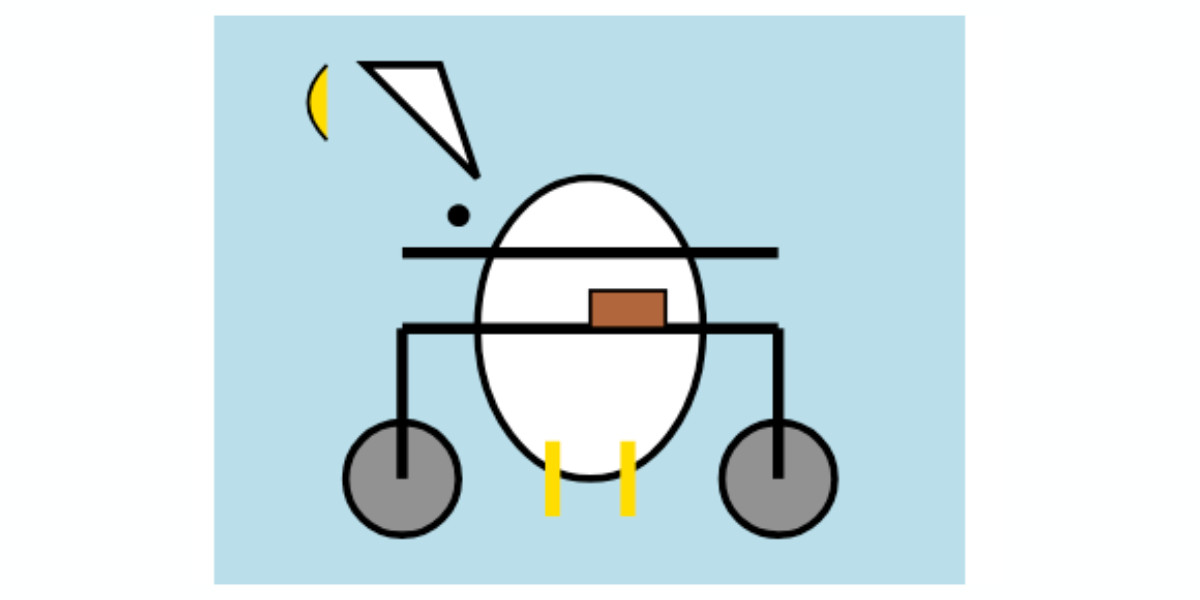

llm -m mlx-community/OLMo-2-0325-32B-Instruct-4bit 'Generate an SVG of a pelican riding a bicycle' -o unlimited 1

The -o unlimited 1 removes the cap on the number of output tokens - the default for llm-mlx is 1024 which isn't enough to attempt to draw a pelican.

The pelican it drew is refreshingly abstract:

Backstory on the default styles for the HTML dialog modal. My TIL about Styling an HTML dialog modal to take the full height of the viewport (here's the interactive demo) showed up on Hacker News this morning, and attracted this fascinating comment from Chromium engineer Ian Kilpatrick.

There's quite a bit of history here, but the abbreviated version is that the dialog element was originally added as a replacement for window.alert(), and there were a libraries polyfilling dialog and being surprisingly widely used.

The mechanism which dialog was originally positioned was relatively complex, and slightly hacky (magic values for the insets).

Changing the behaviour basically meant that we had to add "overflow:auto", and some form of "max-height"/"max-width" to ensure that the content within the dialog was actually reachable.

The better solution to this was to add "max-height:stretch", "max-width:stretch". You can see the discussion for this here.

The problem is that no browser had (and still has) shipped the "stretch" keyword. (Blink likely will "soon")

However this was pushed back against as this had to go in a specification - and nobody implemented it ("-webit-fill-available" would have been an acceptable substitute in Blink but other browsers didn't have this working the same yet).

Hence the calc() variant. (Primarily because of "box-sizing:content-box" being the default, and pre-existing border/padding styles on dialog that we didn't want to touch). [...]

I particularly enjoyed this insight into the challenges of evolving the standards that underlie the web, even for something this small:

One thing to keep in mind is that any changes that changes web behaviour is under some time pressure. If you leave something too long, sites will start relying on the previous behaviour - so it would have been arguably worse not to have done anything.

Also from the comments I learned that Firefox DevTools can show you user-agent styles, but that option is turned off by default - notes on that here. Once I turned this option on I saw references to an html.css stylesheet, so I dug around and found that in the Firefox source code. Here's the commit history for that file on the official GitHub mirror, which provides a detailed history of how Firefox default HTML styles have evolved with the standards over time.

And via uallo here are the same default HTML styles for other browsers:

Now you don’t even need code to be a programmer. But you do still need expertise. My recent piece on how I use LLMs to help me write code got a positive mention in John Naughton's column about vibe-coding in the Guardian this weekend.

My hunch about Apple Intelligence Siri features being delayed due to prompt injection also got a mention in the most recent episode of the New York Times Hard Fork podcast.

March 17, 2025

Mistral Small 3.1. Mistral Small 3 came out in January and was a notable, genuinely excellent local model that used an Apache 2.0 license.

Mistral Small 3.1 offers a significant improvement: it's multi-modal (images) and has an increased 128,000 token context length, while still "fitting within a single RTX 4090 or a 32GB RAM MacBook once quantized" (according to their model card). Mistral's own benchmarks show it outperforming Gemma 3 and GPT-4o Mini, but I haven't seen confirmation from external benchmarks.

Despite their mention of a 32GB MacBook I haven't actually seen any quantized GGUF or MLX releases yet, which is a little surprising since they partnered with Ollama on launch day for their previous Mistral Small 3. I expect we'll see various quantized models released by the community shortly.

Update 20th March 2025: I've now run the text version on my laptop using mlx-community/Mistral-Small-3.1-Text-24B-Instruct-2503-8bit and llm-mlx:

llm mlx download-model mlx-community/Mistral-Small-3.1-Text-24B-Instruct-2503-8bit -a mistral-small-3.1

llm chat -m mistral-small-3.1

The model can be accessed via Mistral's La Plateforme API, which means you can use it via my llm-mistral plugin.

Here's the model describing my photo of two pelicans in flight:

llm install llm-mistral

# Run this if you have previously installed the plugin:

llm mistral refresh

llm -m mistral/mistral-small-2503 'describe' \
  -a https://static.simonwillison.net/static/2025/two-pelicans.jpg

The image depicts two brown pelicans in flight against a clear blue sky. Pelicans are large water birds known for their long bills and large throat pouches, which they use for catching fish. The birds in the image have long, pointed wings and are soaring gracefully. Their bodies are streamlined, and their heads and necks are elongated. The pelicans appear to be in mid-flight, possibly gliding or searching for food. The clear blue sky in the background provides a stark contrast, highlighting the birds' silhouettes and making them stand out prominently.

I added Mistral's API prices to my tools.simonwillison.net/llm-prices pricing calculator by pasting screenshots of Mistral's pricing tables into Claude.

suitenumerique/docs. New open source (MIT licensed) collaborative text editing web application, similar to Google Docs or Notion, notable because it's a joint effort funded by the French and German governments and "currently onboarding the Netherlands".

It's built using Django and React:

Docs is built on top of Django Rest Framework, Next.js, BlockNote.js, HocusPocus and Yjs.

Deployments currently require Kubernetes, PostgreSQL, memcached, an S3 bucket (or compatible) and an OIDC provider.

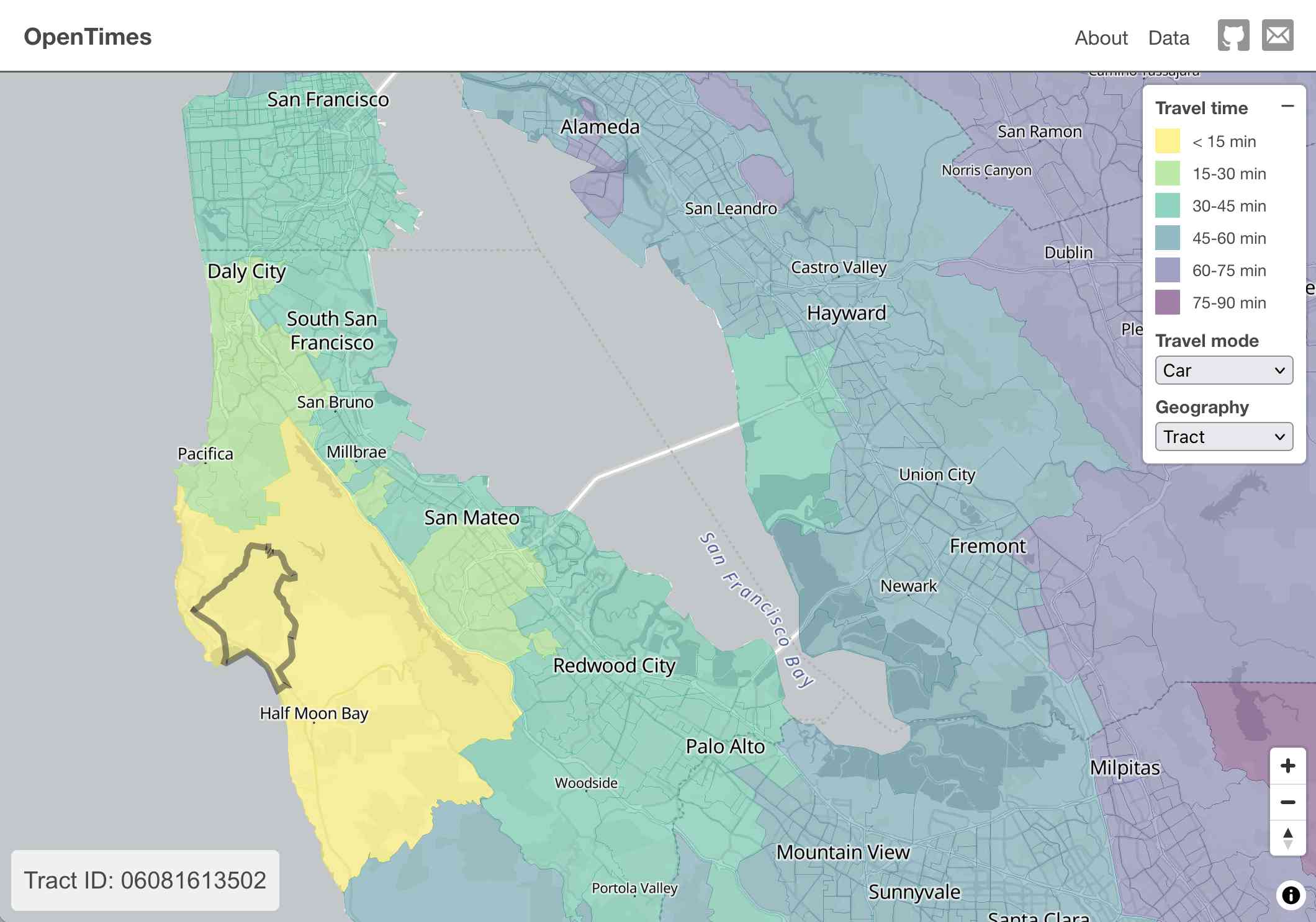

OpenTimes (via) Spectacular new open geospatial project by Dan Snow:

OpenTimes is a database of pre-computed, point-to-point travel times between United States Census geographies. It lets you download bulk travel time data for free and with no limits.

Here's what I get for travel times by car from El Granada, California:

The technical details are fascinating:

- The entire OpenTimes backend is just static Parquet files on Cloudflare's R2. There's no RDBMS or running service, just files and a CDN. The whole thing costs about $10/month to host and costs nothing to serve. In my opinion, this is a great way to serve infrequently updated, large public datasets at low cost (as long as you partition the files correctly).

Sure enough, R2 pricing charges "based on the total volume of data stored" - $0.015 / GB-month for standard storage, then $0.36 / million requests for "Class B" operations which include reads. They charge nothing for outbound bandwidth.
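Those quoted prices make the hosting bill easy to estimate. A rough sketch follows: the constants come from the pricing quoted above, while the function names and the example volumes are made up, and any free tier or other operation classes are ignored.

```python
STORAGE_PER_GB_MONTH = 0.015  # standard storage, dollars per GB-month
CLASS_B_PER_MILLION = 0.36    # "Class B" operations, which include reads
EGRESS_PER_GB = 0.0           # nothing charged for outbound bandwidth


def r2_monthly_cost(stored_gb, class_b_requests, egress_gb=0):
    """Estimate a month's bill in dollars from storage, reads and egress."""
    storage = stored_gb * STORAGE_PER_GB_MONTH
    reads = class_b_requests / 1_000_000 * CLASS_B_PER_MILLION
    return storage + reads + egress_gb * EGRESS_PER_GB


def storage_for_budget(dollars):
    """How many GB of standard storage a monthly budget covers on its own."""
    return dollars / STORAGE_PER_GB_MONTH


# Hypothetical volumes: 500GB stored, 2 million reads, 10TB served.
print(f"${r2_monthly_cost(500, 2_000_000, egress_gb=10_000):.2f}")
print(f"{storage_for_budget(10):.0f} GB")
```

If the roughly $10/month were spent entirely on storage it would cover about 667GB, and serving more traffic adds nothing beyond the per-request charge.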

- All travel times were calculated by pre-building the inputs (OSM, OSRM networks) and then distributing the compute over hundreds of GitHub Actions jobs. This worked shockingly well for this specific workload (and was also completely free).

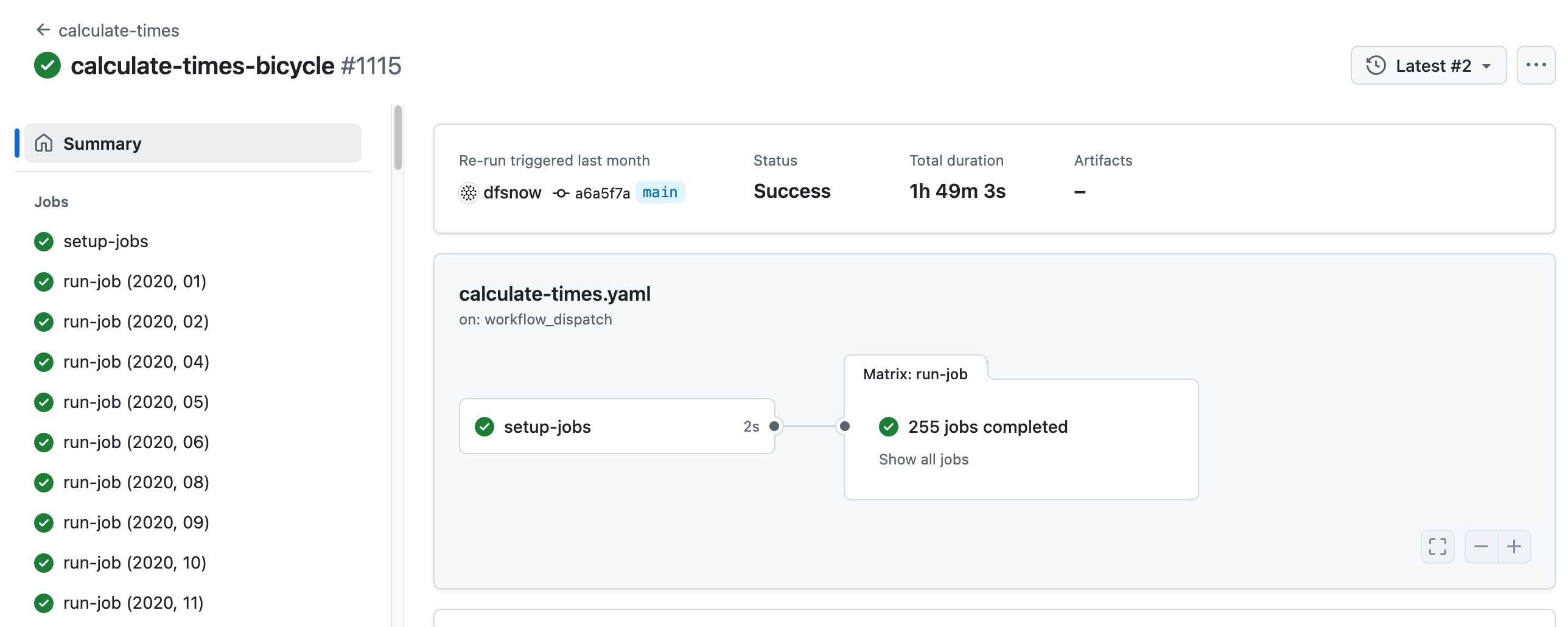

Here's a GitHub Actions run of the calculate-times.yaml workflow which uses a matrix to run 255 jobs!

Relevant YAML:

matrix:
  year: ${{ fromJSON(needs.setup-jobs.outputs.years) }}
  state: ${{ fromJSON(needs.setup-jobs.outputs.states) }}

Where those JSON files were created by the previous step, which reads in the year and state values from this params.yaml file.
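A matrix with two keys runs one job per combination of their values. Here is a small sketch of that expansion; the year and state values are hypothetical stand-ins rather than the contents of the real params.yaml, and expand_matrix is an illustrative helper, not part of GitHub Actions.

```python
import json
from itertools import product

# Hypothetical JSON strings standing in for what the setup job outputs.
years_output = json.dumps(["2023", "2024"])
states_output = json.dumps(["06", "17", "36"])


def expand_matrix(**axes):
    """Return one dict per job: the cross product of every matrix axis."""
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*axes.values())]


jobs = expand_matrix(
    year=json.loads(years_output),
    state=json.loads(states_output),
)

print(len(jobs))
for job in jobs:
    print(job)
```

Two years and three states give six jobs here; the job count is always the product of the list lengths.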

- The query layer uses a single DuckDB database file with views that point to static Parquet files via HTTP. This lets you query a table with hundreds of billions of records after downloading just the ~5MB pointer file.

This is a really creative use of DuckDB's feature that lets you run queries against large data from a laptop using HTTP range queries to avoid downloading the whole thing.

The README shows how to use that from R and Python - I got this working in the duckdb client (brew install duckdb):

INSTALL httpfs;
LOAD httpfs;
ATTACH 'https://data.opentimes.org/databases/0.0.1.duckdb' AS opentimes;

SELECT origin_id, destination_id, duration_sec
FROM opentimes.public.times
WHERE version = '0.0.1'
  AND mode = 'car'
  AND year = '2024'
  AND geography = 'tract'
  AND state = '17'
  AND origin_id LIKE '17031%'
LIMIT 10;

In answer to a question about adding public transit times Dan said:

In the next year or so maybe. The biggest obstacles to adding public transit are:

- Collecting all the necessary scheduling data (e.g. GTFS feeds) for every transit system in the county. Not insurmountable since there are services that do this currently.

- Finding a routing engine that can compute nation-scale travel time matrices quickly. Currently, the two fastest open-source engines I've tried (OSRM and Valhalla) don't support public transit for matrix calculations and the engines that do support public transit (R5, OpenTripPlanner, etc.) are too slow.

GTFS is a popular CSV-based format for sharing transit schedules - here's an official list of available feed directories.
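Because GTFS feeds are plain CSV files, they can be read with nothing more than a standard library. A minimal sketch, using a tiny made-up sample in the shape of a feed's stops.txt file (the stop names and coordinates are invented):

```python
import csv
import io

# Made-up sample using the column names of a GTFS stops.txt file.
STOPS_TXT = """stop_id,stop_name,stop_lat,stop_lon
S1,Main St,37.5000,-122.4800
S2,Harbor,37.5050,-122.4750
"""


def load_stops(text):
    """Parse stops.txt content into {stop_id: (name, latitude, longitude)}."""
    reader = csv.DictReader(io.StringIO(text))
    return {
        row["stop_id"]: (
            row["stop_name"],
            float(row["stop_lat"]),
            float(row["stop_lon"]),
        )
        for row in reader
    }


stops = load_stops(STOPS_TXT)
for stop_id, (name, lat, lon) in stops.items():
    print(stop_id, name, lat, lon)
```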

This whole project feels to me like a great example of the baked data architectural pattern in action.

March 18, 2025

Building and deploying a custom site using GitHub Actions and GitHub Pages. I figured out a minimal example of how to use GitHub Actions to run custom scripts to build a website and then publish that static site to GitHub Pages. I turned the example into a template repository, which should make getting started for a new project extremely quick.

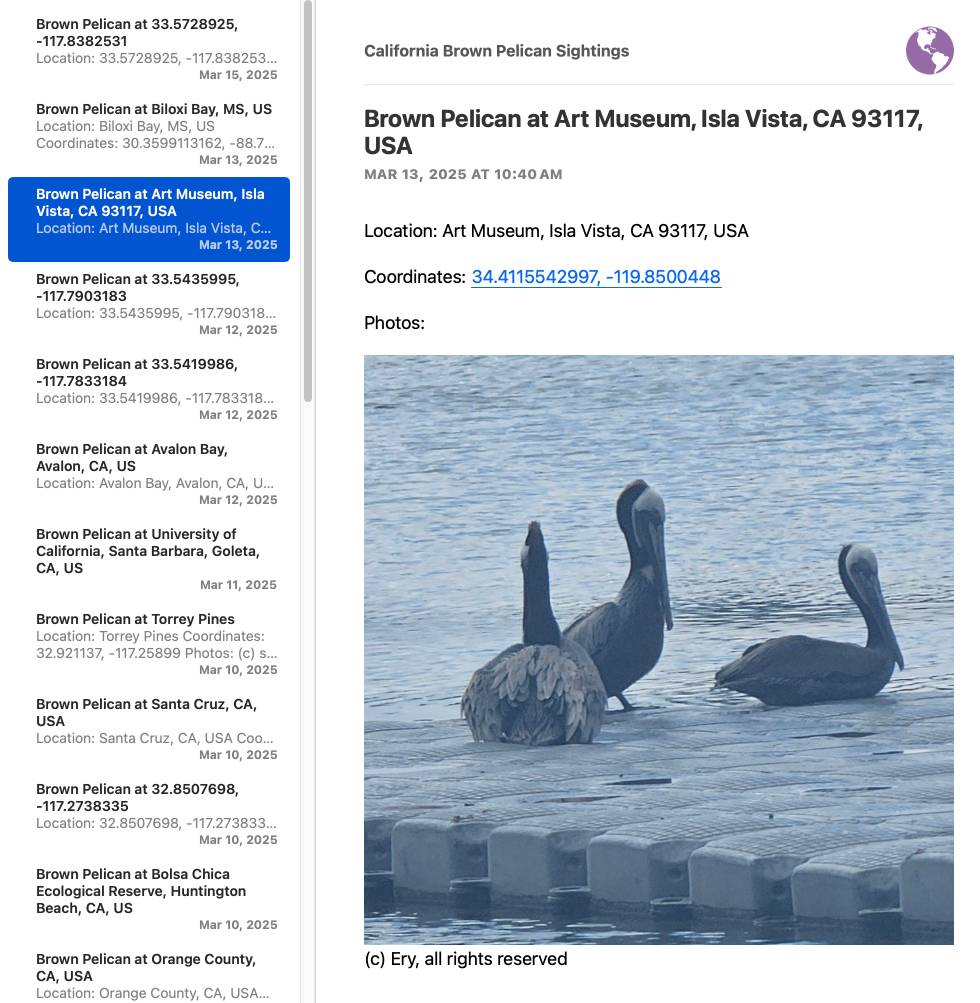

I've needed this for various projects over the years, but today I finally put these notes together while setting up a system that scrapes the iNaturalist API for recent sightings of the California Brown Pelican and converts them into an Atom feed that I can subscribe to in NetNewsWire.

I got Claude to write me the script that converts the scraped JSON to Atom.
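This is not that script, but a JSON-to-Atom conversion along these lines can be sketched with the standard library alone. The record fields (`uri`, `species_guess`, `created_at`) are assumptions about the shape of the scraped JSON, and the sample records and `observations_to_atom` helper are invented for illustration.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"

# Invented records; the field names are assumptions about the scraped JSON.
OBSERVATIONS = [
    {"uri": "https://example.com/observations/1",
     "species_guess": "Brown Pelican", "created_at": "2025-03-18T10:00:00Z"},
    {"uri": "https://example.com/observations/2",
     "species_guess": "Brown Pelican", "created_at": "2025-03-18T11:30:00Z"},
]


def observations_to_atom(observations, feed_id, title):
    """Build a minimal Atom feed (as a string) from a non-empty list of records."""
    ET.register_namespace("", ATOM_NS)  # serialize Atom as the default namespace
    feed = ET.Element(f"{{{ATOM_NS}}}feed")

    def add(parent, tag, text=None, **attrs):
        element = ET.SubElement(parent, f"{{{ATOM_NS}}}{tag}", attrs)
        element.text = text
        return element

    add(feed, "id", feed_id)
    add(feed, "title", title)
    # Atom requires a feed-level <updated>; use the newest entry's timestamp.
    # Comparing strings works because the timestamps share one UTC format.
    add(feed, "updated", max(obs["created_at"] for obs in observations))
    for obs in observations:
        entry = add(feed, "entry")
        add(entry, "id", obs["uri"])
        add(entry, "title", obs["species_guess"])
        add(entry, "updated", obs["created_at"])
        add(entry, "link", href=obs["uri"])
    return ET.tostring(feed, encoding="unicode")
```

A strict validator would also expect an `author` element on the feed or on every entry, which this sketch leaves out for brevity.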

Update: I just found out iNaturalist have their own Atom feeds! Here's their feed of recent pelican observations.

March 19, 2025

An agent is something that acts in an environment; it does something. Agents include worms, dogs, thermostats, airplanes, robots, humans, companies, and countries.

— David L. Poole and Alan K. Mackworth, Artificial Intelligence: Foundations of Computational Agents

My Thoughts on the Future of “AI”. Nicholas Carlini, previously deeply skeptical about the utility of LLMs, discusses at length his thoughts on where the technology might go.

He presents compelling, detailed arguments for both ends of the spectrum - his key message is that it's best to maintain very wide error bars for what might happen next:

I wouldn't be surprised if, in three to five years, language models are capable of performing most (all?) cognitive economically-useful tasks beyond the level of human experts. And I also wouldn't be surprised if, in five years, the best models we have are better than the ones we have today, but only in “normal” ways where costs continue to decrease considerably and capabilities continue to get better but there's no fundamental paradigm shift that upends the world order. To deny the potential for either of these possibilities seems to me to be a mistake.

If LLMs do hit a wall, it's not at all clear what that wall might be:

I still believe there is something fundamental that will get in the way of our ability to build LLMs that grow exponentially in capability. But I will freely admit to you now that I have no earthly idea what that limitation will be. I have no evidence that this line exists, other than to make some form of vague argument that when you try and scale something across many orders of magnitude, you'll probably run into problems you didn't see coming.

There's lots of great stuff in here. I particularly liked this explanation of how you get R1:

You take DeepSeek v3, and ask it to solve a bunch of hard problems, and when it gets the answers right, you train it to do more of that and less of whatever it did when it got the answers wrong. The idea here is actually really simple, and it works surprisingly well.

Not all AI-assisted programming is vibe coding (but vibe coding rocks)

Vibe coding is having a moment. The term was coined by Andrej Karpathy just a few weeks ago (on February 6th) and has since been featured in the New York Times, Ars Technica, the Guardian and countless online discussions.

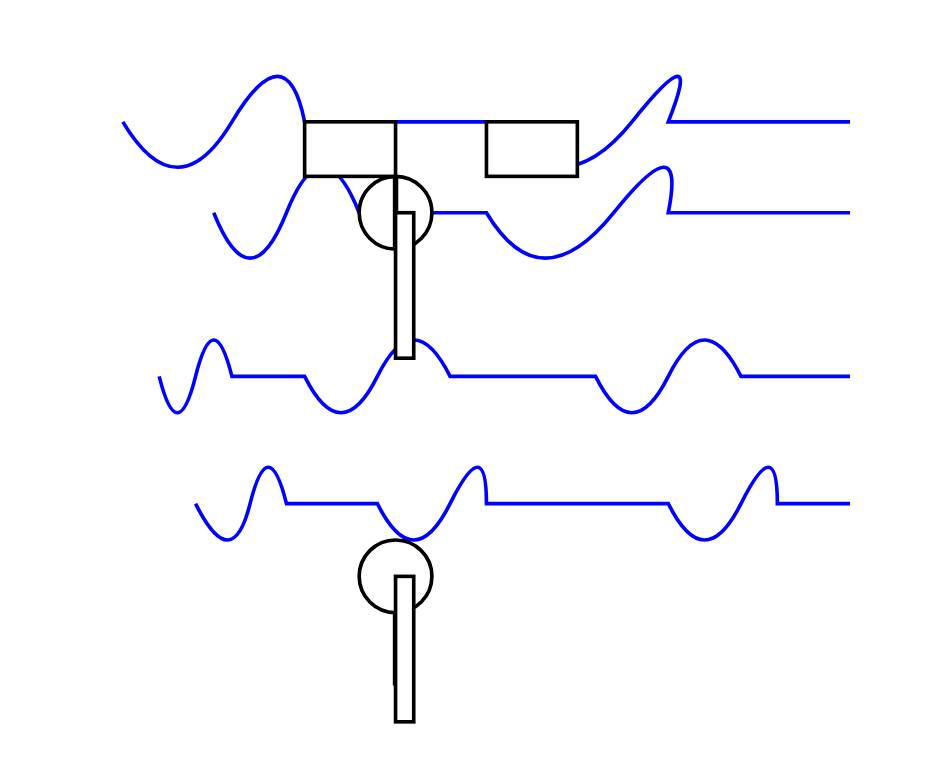

[... 1,486 words]

OpenAI platform: o1-pro. OpenAI have a new most-expensive model: o1-pro can now be accessed through their API at a hefty $150/million tokens for input and $600/million tokens for output. That's 10x the price of their o1 and o1-preview models and a full 1,000 times more expensive than their cheapest model, gpt-4o-mini!

Aside from that it has mostly the same features as o1: a 200,000 token context window, 100,000 max output tokens, a Sep 30 2023 knowledge cut-off date and support for function calling, structured outputs and image inputs.

o1-pro doesn't support streaming and, most significantly for developers, it is the first OpenAI model to be available only via their new Responses API. This means tools that are built against their Chat Completions API (like my own LLM) have to do a whole lot more work to support the new model - my issue for that is here.

Since LLM doesn't support this new model yet I had to make do with curl:

    curl https://api.openai.com/v1/responses \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $(llm keys get openai)" \
      -d '{
        "model": "o1-pro",
        "input": "Generate an SVG of a pelican riding a bicycle"
      }'

Here's the full JSON I got back - 81 input tokens and 1552 output tokens for a total cost of 94.335 cents.

I took a risk and added "reasoning": {"effort": "high"} to see if I could get a better pelican with more reasoning:

    curl https://api.openai.com/v1/responses \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $(llm keys get openai)" \
      -d '{
        "model": "o1-pro",
        "input": "Generate an SVG of a pelican riding a bicycle",
        "reasoning": {"effort": "high"}
      }'

Surprisingly, that used fewer output tokens - 1459 compared to 1552 earlier (cost: 88.755 cents) - producing this JSON, which rendered as a slightly better pelican.

It was cheaper because, while it spent 960 reasoning tokens as opposed to 704 for the previous pelican, it omitted the explanatory text around the SVG, saving on total output.
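The arithmetic behind both figures can be checked with a few lines of Python. The prices and token counts are the ones quoted above; the 81 input tokens for the second run is an assumption (same prompt, and it is the only value consistent with the 88.755 cent total), and the helper names are mine.

```python
# Prices quoted above, in dollars per million tokens.
INPUT_PRICE = 150
OUTPUT_PRICE = 600


def cost_in_cents(input_tokens, output_tokens):
    """Reasoning tokens are billed as output tokens, so they are already
    counted inside output_tokens."""
    dollars_times_million = input_tokens * INPUT_PRICE + output_tokens * OUTPUT_PRICE
    return dollars_times_million / 10_000  # /1e6 per-token price, *100 for cents


def visible_tokens(output_tokens, reasoning_tokens):
    """Output tokens left over for the visible answer after reasoning."""
    return output_tokens - reasoning_tokens


first = cost_in_cents(81, 1552)   # default reasoning effort
second = cost_in_cents(81, 1459)  # "effort": "high", input count assumed
print(first, second)
print(visible_tokens(1552, 704), visible_tokens(1459, 960))
```

The two costs come out at 94.335 and 88.755 cents, and the visible answer shrank from 848 to 499 tokens, which more than offset the 256 extra reasoning tokens.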

March 20, 2025

Calling a wrap on my weeknotes

After 192 posts that ranged from weekly to roughly once-a-month, I’ve decided to call a wrap on my weeknotes habit. The original goal was to stay transparent during my 2019-2020 JSK fellowship, and I kept them up after that as an accountability mechanism and to get into a habit of writing regularly.

[... 281 words]