515 posts tagged “projects”

Posts about projects I have worked on.

2021

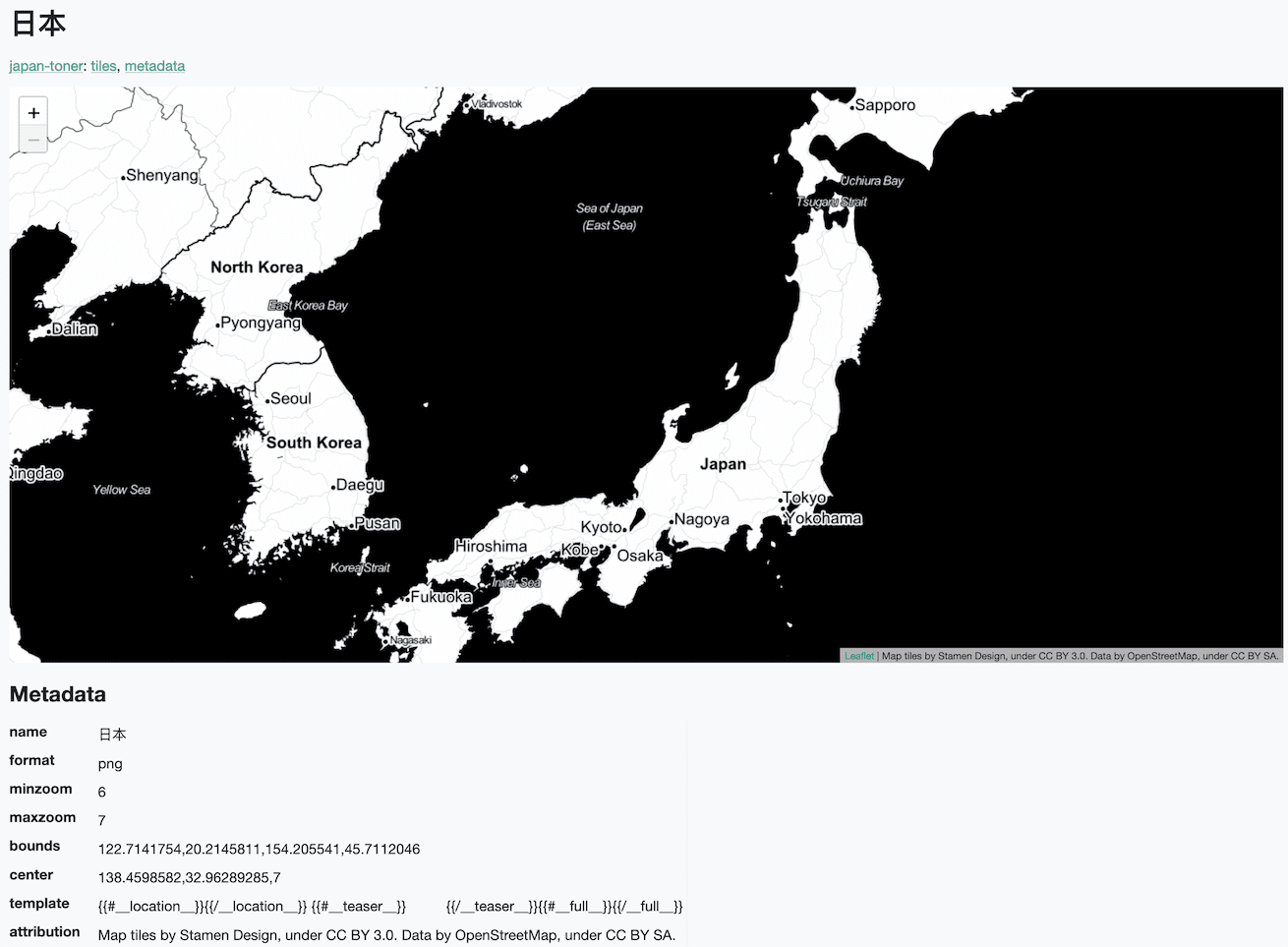

Serving map tiles from SQLite with MBTiles and datasette-tiles

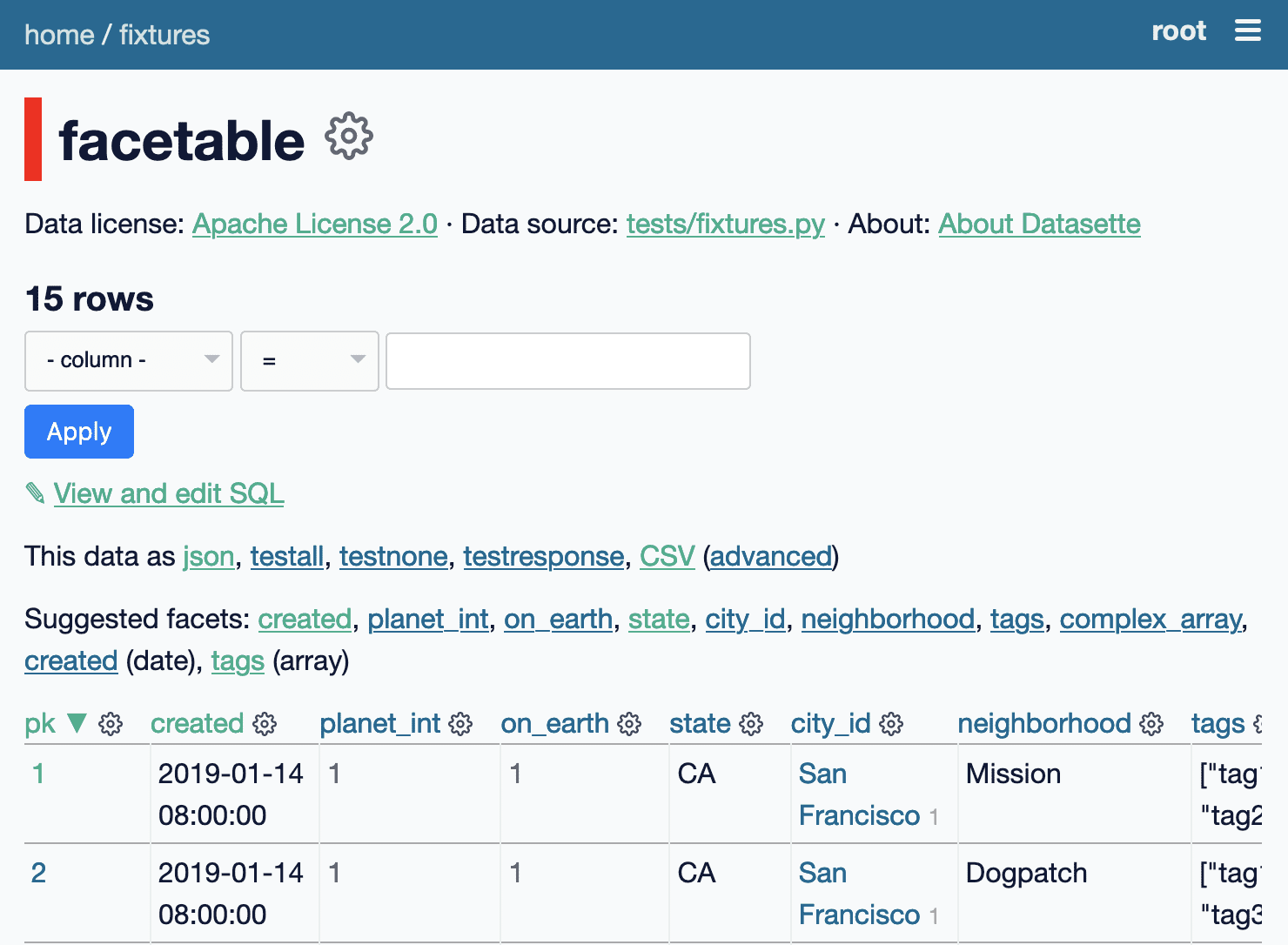

Working on datasette-leaflet last week re-kindled my interest in using Datasette as a GIS (Geographic Information System) platform. SQLite already has strong GIS functionality in the form of SpatiaLite and datasette-cluster-map is currently the most downloaded plugin. Most importantly, maps are fun!

[... 1,334 words]Weeknotes: datasette-leaflet, datasette-plugin cookiecutter upgrades

This week I shipped Datasette 0.54, sent out the latest Datasette Newsletter and then mostly worked on follow-up projects.

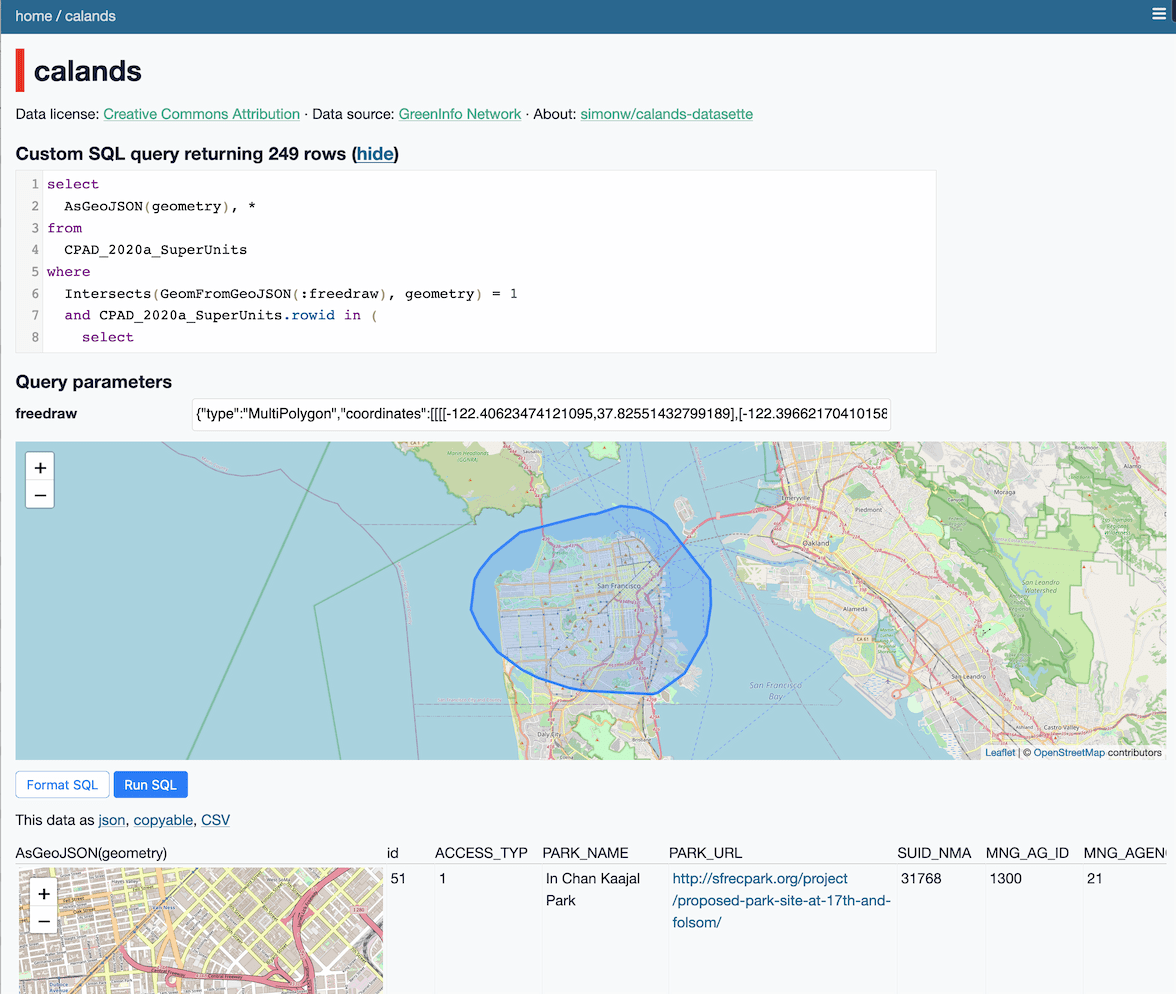

[... 552 words]Drawing shapes on a map to query a SpatiaLite database (and other weeknotes)

This week I built a Datasette plugin that lets you query a database by drawing shapes on a map!

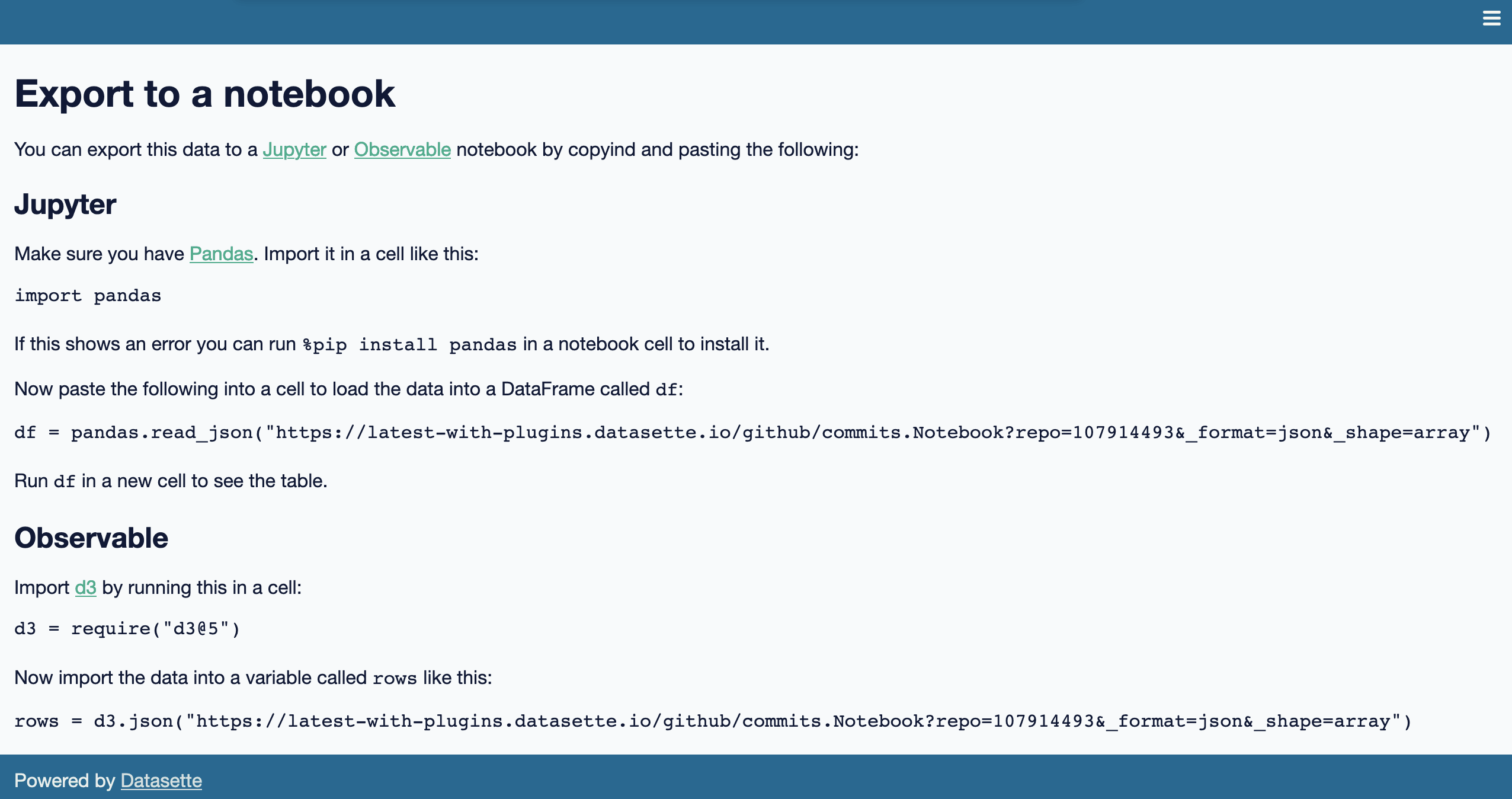

[... 950 words]Weeknotes: datasette-export-notebook, PyInstaller packaged Datasette, CBSAs

What a terrible week. I’ve found it hard to concentrate on anything substantial. In a mostly futile attempt to distract myself from doomscrolling I’ve mainly been building some experimental output plugins, fiddling with PyInstaller and messing around with shapefiles.

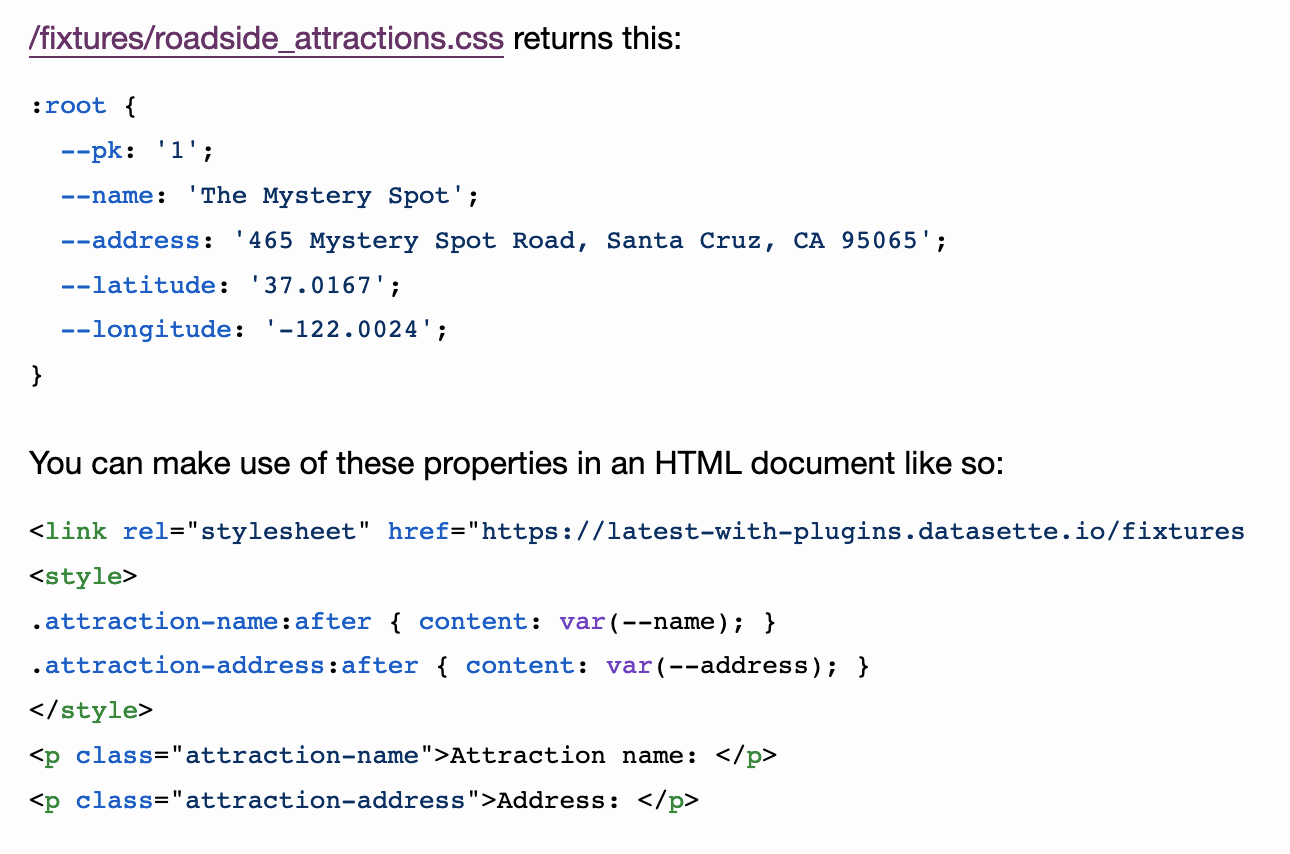

[... 732 words]APIs from CSS without JavaScript: the datasette-css-properties plugin

I built a new Datasette plugin called datasette-css-properties. It’s very, very weird—it adds a .css output extension to Datasette which outputs the result of a SQL query using CSS custom property format. This means you can display the results of database queries using pure CSS and HTML, no JavaScript required!

datasette-css-properties (via) My new Datasette plugin defines a “.css” output format which returns the data from the query as a valid CSS stylesheet defining custom properties for each returned column. This means you can build a page using just HTML and CSS that consumes API data from Datasette, no JavaScript required! Whether this is a good idea or not is left as an exercise for the reader.

sqlite-utils 3.2 (via) As discussed in my weeknotes yesterday, this is the release of sqlite-utils that adds the new “cached table counts via triggers” mechanism.

Weeknotes: A flurry of not-quite-finished features

My Christmas present to myself this year was to allow myself to spend a week working on stuff I found interesting, rather than sticking to the most important things. This may have been a mistake: it’s left me with a flurry of interesting but not-quite-finished features.

[... 2,249 words]2020

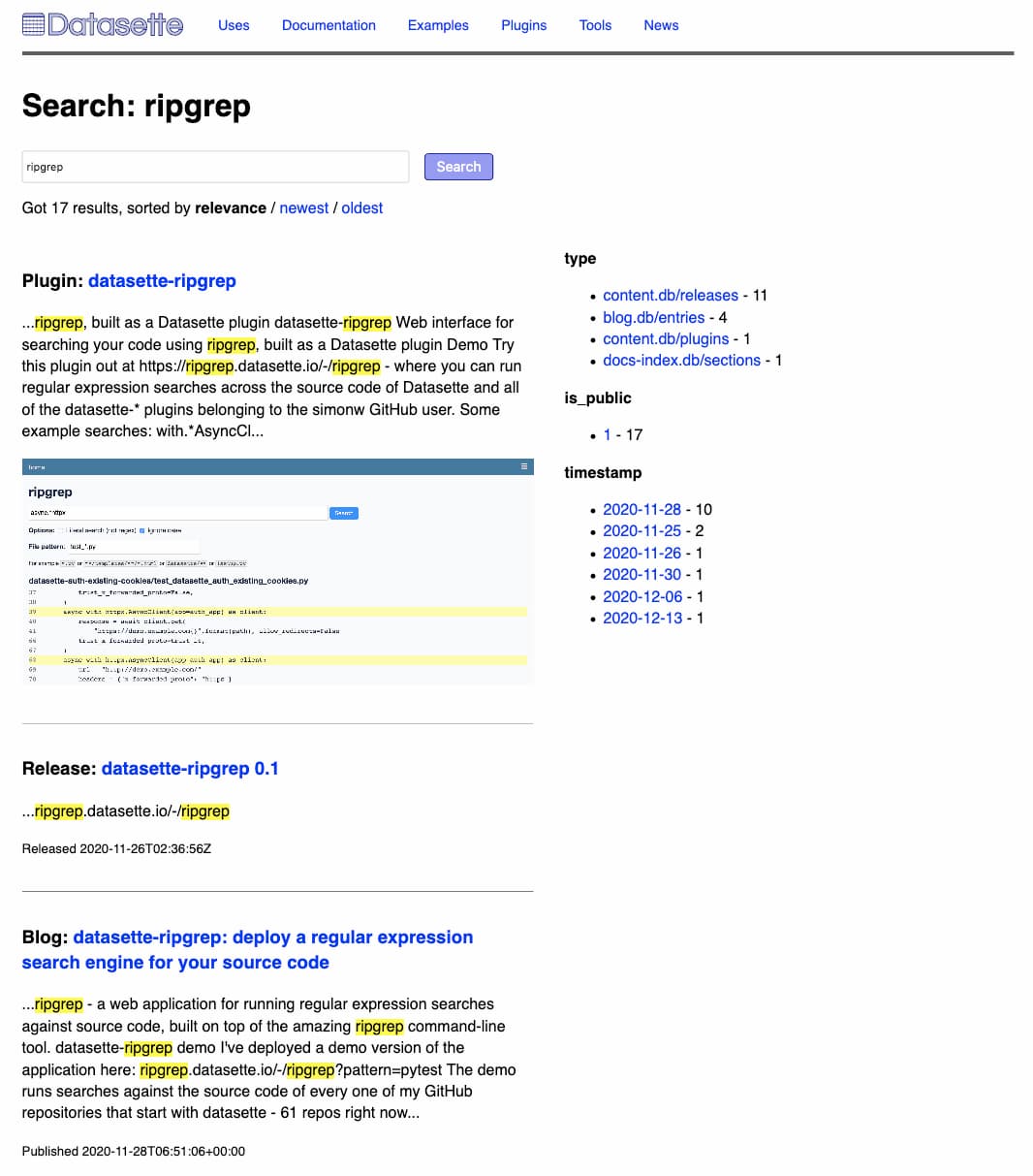

Building a search engine for datasette.io

This week I added a search engine to datasette.io, using the search indexing tool I’ve been building for Dogsheep.

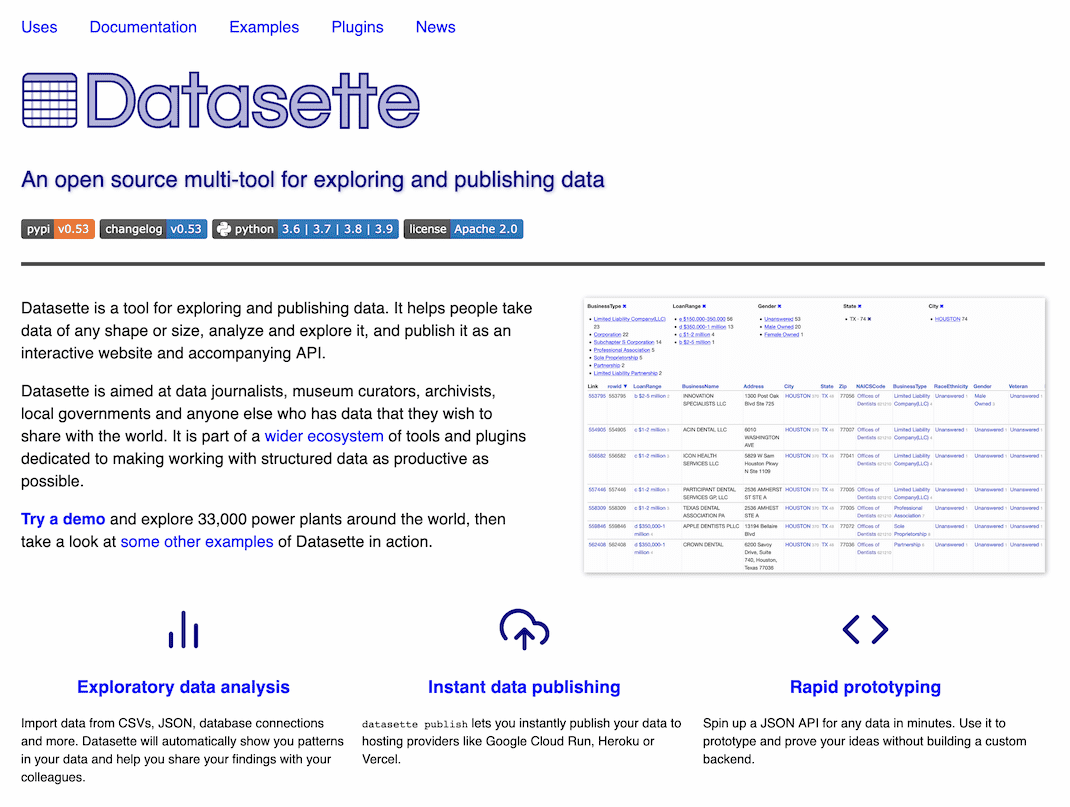

[... 1,391 words]datasette.io, an official project website for Datasette

This week I launched datasette.io—the new official project website for Datasette.

[... 1,971 words]datasette.io (via) Datasette finally has an official project website, three years after the first release of the software. I built it using Datasette, with custom templates to define the various pages. The site includes news, latest releases, example sites and a new searchable plugin directory.

Weeknotes: github-to-sqlite workflows, datasette-ripgrep enhancements, Datasette 0.52

This week: Improvements to datasette-ripgrep, github-to-sqlite and datasette-graphql, plus Datasette 0.52 and a flurry of dot-releases.

Datasette 0.52. A relatively small release—it has a new plugin hook (database_actions(), for adding links to a new database actions menu), renames the --config option to --setting and adds a new “datasette publish cloudrun --apt-get-install” option.

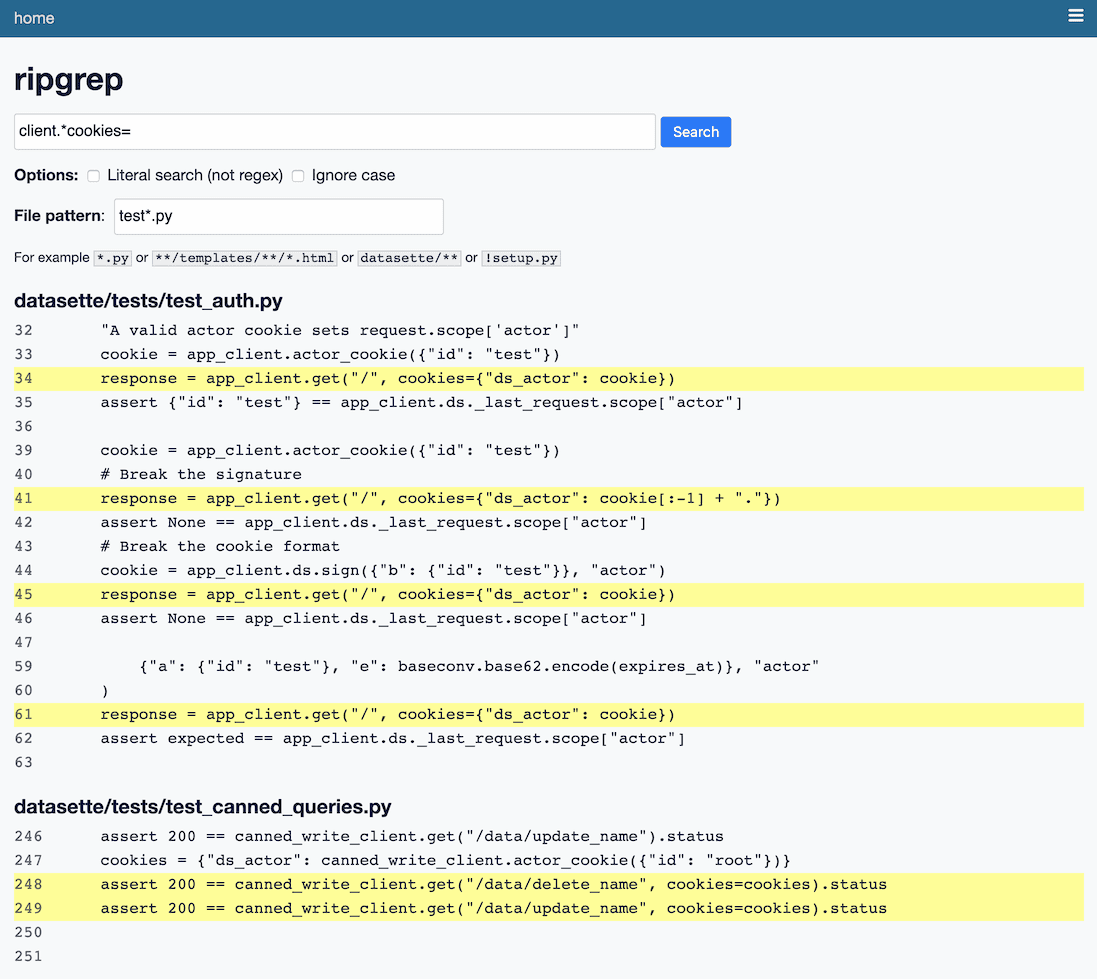

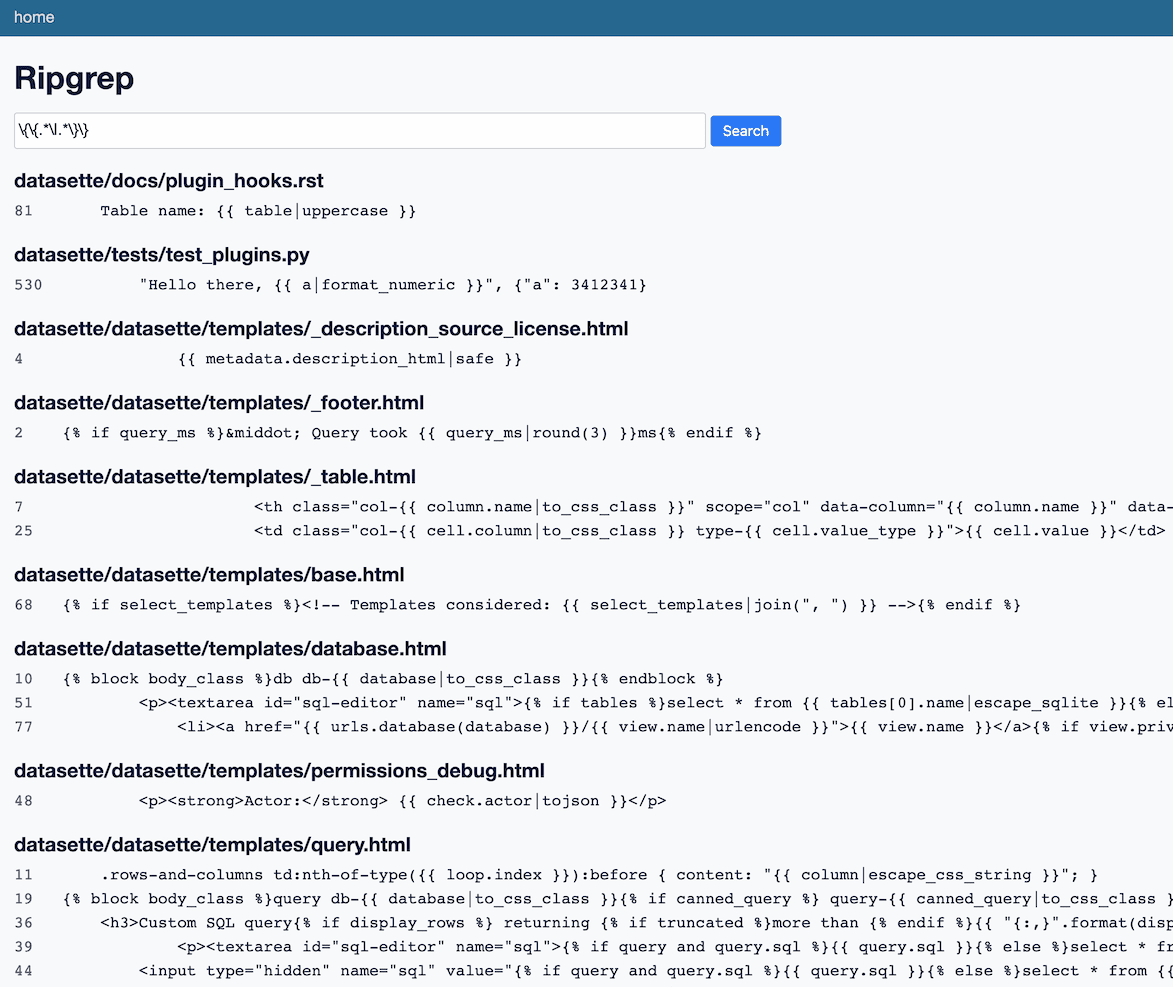

datasette-ripgrep: deploy a regular expression search engine for your source code

This week I built datasette-ripgrep—a web application for running regular expression searches against source code, built on top of the amazing ripgrep command-line tool.

[... 1,362 words]Weeknotes: datasette-indieauth, datasette-graphql, PyCon Argentina

Last week’s weeknotes took the form of my Personal Data Warehouses: Reclaiming Your Data talk write-up, which represented most of what I got done that week. This week I mainly worked on datasette-indieauth, but I also gave a keynote at PyCon Argentina and released a version of datasette-graphql with a small security fix.

[... 724 words]datasette-graphql 1.2 (via) A new release of the datasette-graphql plugin, fixing a minor security flaw: previous versions of the plugin could expose the schema (but not the actual data) of tables in databases that were otherwise protected by Datasette’s permission system.

Security vulnerability in datasette-indieauth: Implementation trusts the “me” field returned by the authorization server without verifying it. I spotted a critical security vulnerability in my new datasette-indieauth plugin: it accepted the “me” profile URL value returned from the authorization server in the final step of the IndieAuth flow without verifying it, which means a malicious server could imitate any user. I’ve shipped 1.1 with a fix and posted a security advisory to the GitHub repository.

Implementing IndieAuth for Datasette

IndieAuth is a spiritual successor to OpenID, developed and maintained by the IndieWeb community and based on OAuth 2. This weekend I attended IndieWebCamp East Coast and was inspired to try my hand at an implementation. datasette-indieauth is the result, a new plugin which enables IndieAuth logins to a Datasette instance.

[... 1,225 words]Datasette 0.51 (plus weeknotes)

I shipped Datasette 0.51 today, with a new visual design, plugin hooks for adding navigation options, better handling of binary data, URL building utility methods and better support for running Datasette behind a proxy. It’s a lot of stuff! Here are the annotated release notes.

[... 2,020 words]Weeknotes: incremental improvements

I’ve been writing my talk for PyCon Argentina this week, which has proved surprisingly time consuming. I hope to have that wrapped up soon—I’m pre-recording it, which it turns out is much more work than preparing a talk to stream live.

[... 630 words]Weeknotes: evernote-to-sqlite, Datasette Weekly, scrapers, csv-diff, sqlite-utils

This week I built evernote-to-sqlite (see Building an Evernote to SQLite exporter), launched the Datasette Weekly newsletter, worked on some scrapers and pushed out some small improvements to several other projects.

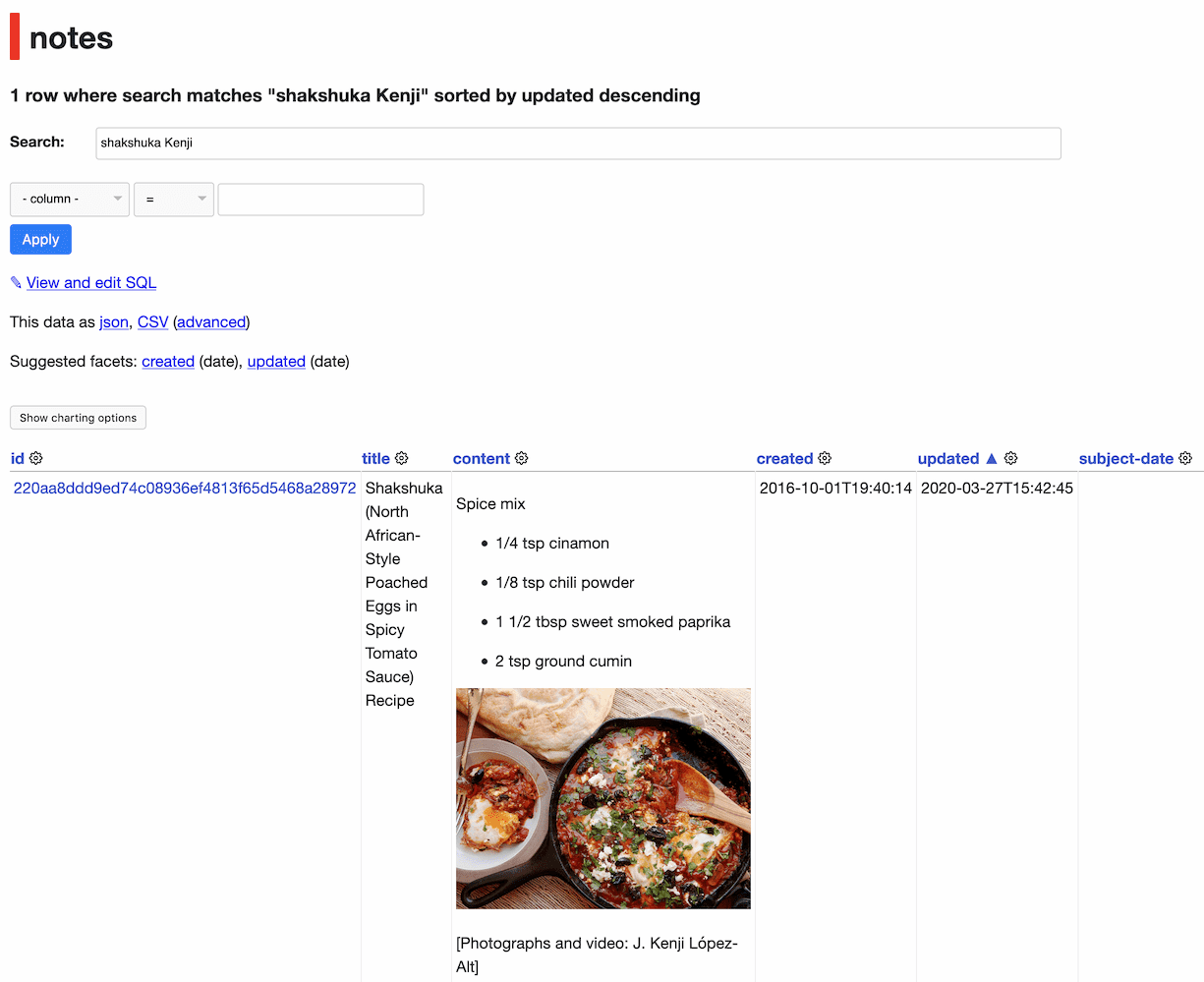

Building an Evernote to SQLite exporter

I’ve been using Evernote for over a decade, and I’ve long wanted to export my data from it so I can do interesting things with it.

[... 1,879 words]xml-analyser. In building evernote-to-sqlite I dusted off an ancient (2009) project I built that scans through an XML file and provides a summary of what elements are present in the document and how they relate to each other. I’ve now packaged it up as a CLI app and published it on PyPI.

evernote-to-sqlite (via) The latest tool in my Dogsheep series of utilities for personal analytics: evernote-to-sqlite takes Evernote note exports en their ENEX XML format and loads them into a SQLite database. Embedded images are loaded into a BLOB column and the output of their cloud-based OCR system is added to a full-text search index. Notes have a latitude and longitude which means you can visualize your notes on a map using Datasette and datasette-cluster-map.

Datasette Weekly: Datasette 0.50, git scraping, extracting columns (via) The first edition of the new Datasette Weekly newsletter—covering Datasette 0.50, Git scraping, extracting columns with sqlite-utils and featuring datasette-graphql as the first “plugin of the week”

Datasette Weekly (via) I’m trying something new: I’ve decided to start an email newsletter called the Datasette Weekly (I’m already worried I’ll regret that weekly promise) which will share news about Datasette and the Datasette ecosystem, plus tips and tricks for getting the most out of Datasette and SQLite.

Datasette 0.50: The annotated release notes

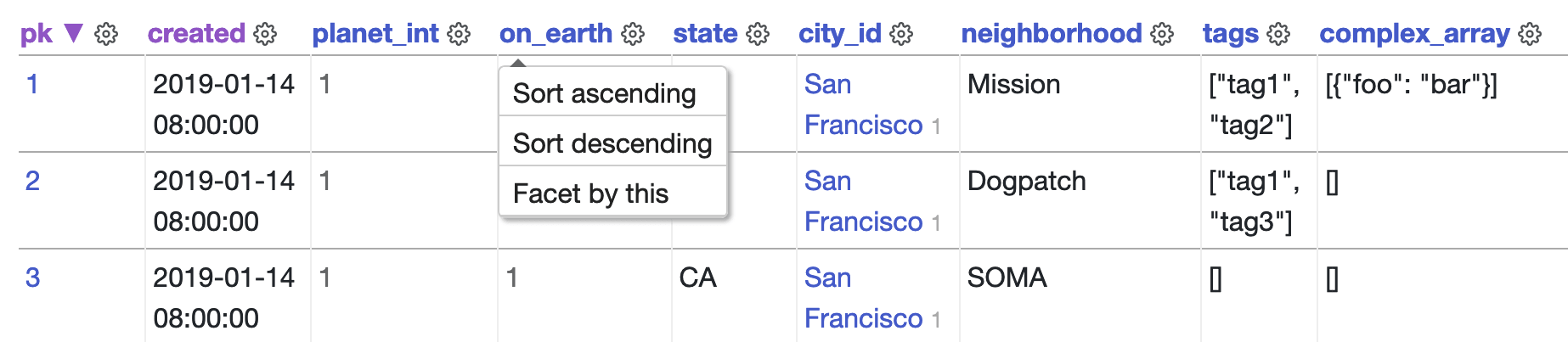

I released Datasette 0.50 this morning, with a new user-facing column actions menu feature and a way for plugins to make internal HTTP requests to consume the JSON API of their parent Datasette instance.

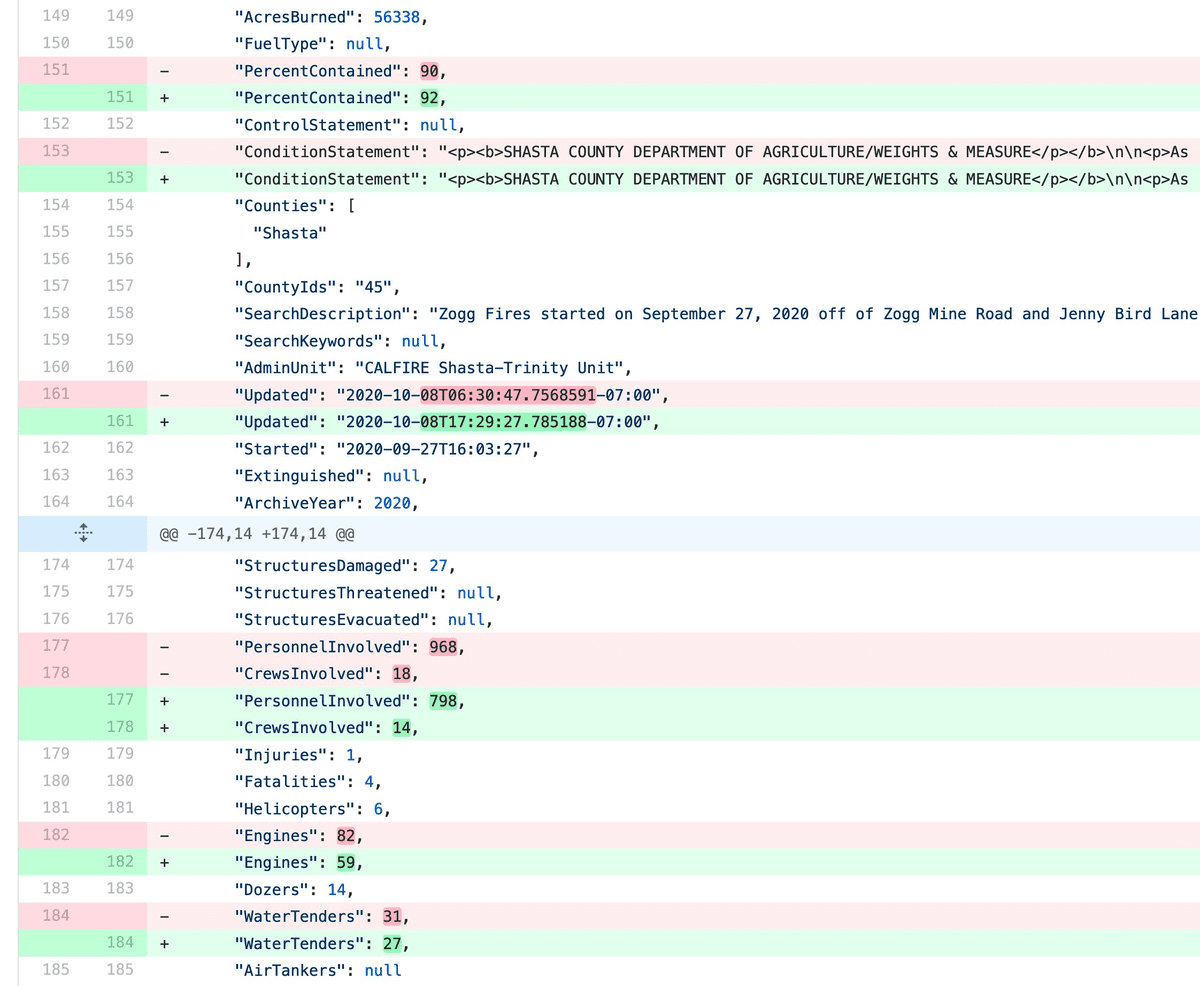

[... 792 words]Git scraping: track changes over time by scraping to a Git repository

Git scraping is the name I’ve given a scraping technique that I’ve been experimenting with for a few years now. It’s really effective, and more people should use it.

[... 963 words]Weeknotes: Datasette column actions, plus three new plugins

A renewed emphasis on building out Datasette Cloud has produced three new plugins this week: datasette-dateutil, datasette-import-table and datasette-edit-schema, plus a major improvement to Datasette’s default interface for browsing tables.

[... 1,093 words]datasette-dateutil (via) New Datasette plugin exposing date/time parsing custom SQL functions powered by the classic dateutil Python library.