Weeknotes: datasette-copyable, datasette-insert-api

23rd July 2020

Two new Datasette plugins this week: datasette-copyable, helping users copy-and-paste data from Datasette into other places, and datasette-insert-api, providing a JSON API for inserting and updating data and creating tables.

datasette-copyable

Datasette has long provided CSV and JSON export, but when you’re working with smaller amounts of data it’s often most convenient to move that data around using copy and paste.

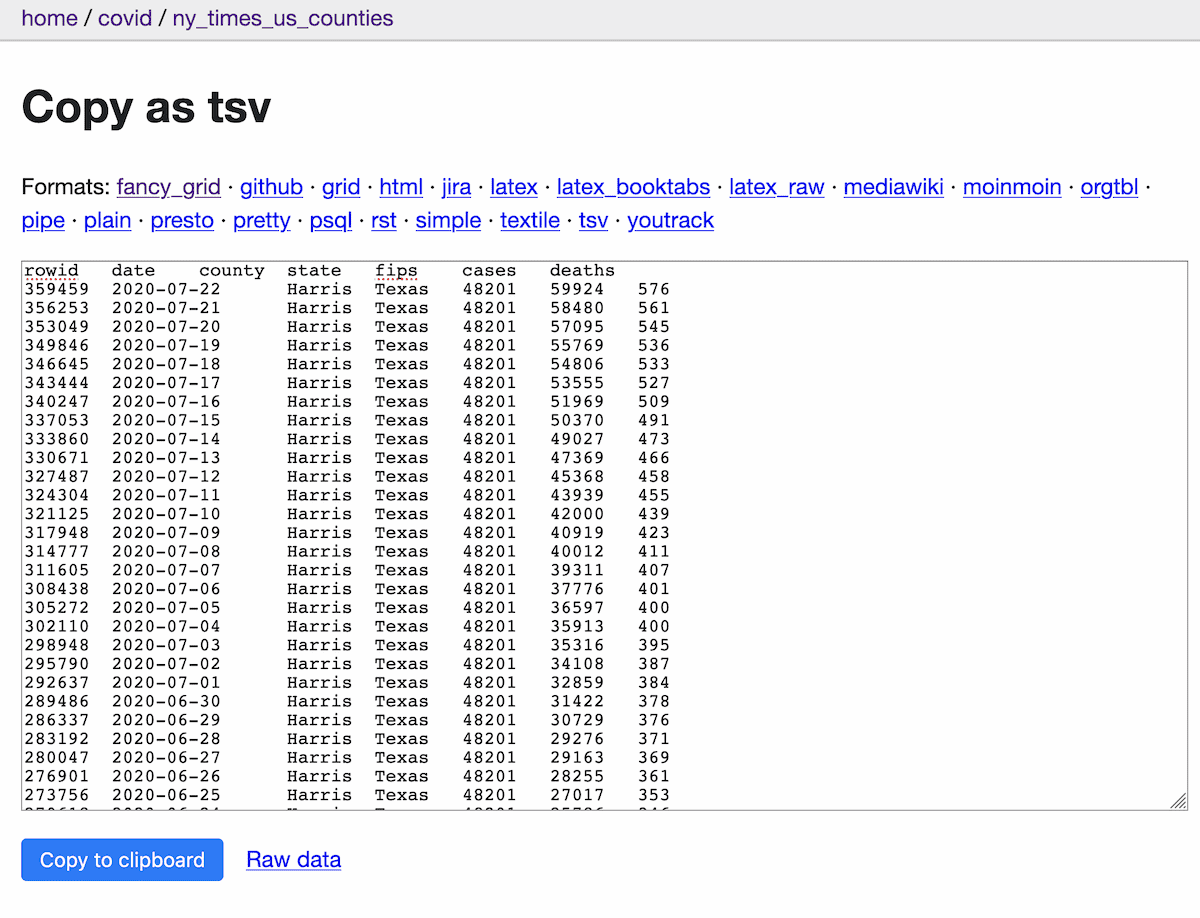

datasette-copyable uses Datasette’s register_output_renderer() plugin hook to add a whole plethora of new export options, powered by the excellent tabulate Python library (which I’m also using in sqlite-utils).

The default output format is TSV, because if you copy tab-separated values to your clipboard and then hit paste on a cell in Google Sheets, Excel or Numbers the data will be imported directly into your spreadsheet, preserving the columns and rows!

The other supported formats are everything that tabulate supports—LaTeX, GitHub Markdown, MediaWiki, JIRA and more.

You can try the plugin out on some New York Times Covid-19 data (for Harris county, Texas) over here.

Clicking the “copy to clipboard” button selects the content in the textarea and copies it to your clipboard. I wrote about how this works in a TIL.

datasette-insert-api

Ever since Datasette plugins gained the ability to write to the database back in February I’ve been looking forward to building something that really put it through its paces.

datasette-insert-api is a relatively thin wrapper around my sqlite-utils Python library which unlocks a powerful set of functionality:

- POST a JSON array to Datasette to insert those objects as rows in a database table

- If the table does not yet exist, Datasette can create it with the corresponding columns

- If the table exists and has a primary key, records with matching primary keys can replace existing rows (a simple form of update)

- If unknown columns are present in the data, the plugin can optionally alter the table to add the necessary columns

The README has the details, but the simplest example of it in action (using curl) looks like this:

curl --request POST \

--data '[

{

"id": 1,

"name": "Cleopaws",

"age": 5

},

{

"id": 2,

"name": "Pancakes",

"age": 4

}

]' \

'http://localhost:8001/-/insert/data/dogs?pk=id'The plugin works without authentication—useful for piping JSON into a database on your own laptop—or you can couple it with a plugin such as datasette-auth-tokens to provide authenticated access over the public internet.

Speaking at Boston Python

I gave a talk about Datasette and Dogsheep for Boston Python on Wednesday. Ned turned around the video really quickly—I start talking at 8 minutes and 52 seconds in.

I used a collaborative Google Doc for the Q&A which worked really well, as the questions accumulated while I was presenting and I then got to address them at the end. I spent some time after the talk fleshing out my answers with extra links—here’s the resulting document.

Upgrading this blog to Django 3.1

The first Django 3.1 release candidate came out earlier this week, and I’ve upgraded my blog to this (a one-line change I made through the GitHub web interface thanks to Heroku continuous deployment).

I also upgraded my Heroku PostgreSQL database to v11 so I could take advantage of the new search_type="websearch" option for PostgreSQL full-text search added in Django 3.1—James Turk contributed that improvement and wrote about it on his blog.

This means my blog search engine now supports advanced operators, like “phrase searches” and -subtractions—try that out here: “signed cookies” -django.

content-length and datasette-clone progress bars

When Datasette runs against an immutable SQLite database it lets you download that database file directly. Datasette development version now includes a content-length header specifying the size of that download.

... which means my datasette-clone tool can now show you a progress bar!

$ datasette-clone https://fivethirtyeight.datasettes.com -v

Fetching fivethirtyeight.db, current hash 7ba632a14f29375c289d96400947e2d5fcdad3a4b5a90883d6286bd4e83ede78 != None

268.79 MB [##########--------------------------] 28% 00:02:14github-to-sqlite tags

I wanted to build myself a SQL query to show me my GitHub repositories that have commits which have not yet been included in a release.

It turned out I needed to build a new import command for this: github-to-sqlite tags github.db simonw/datasette—which fetches all of the tags for the specified repository.

Here’s the relevant issue and here’s a monstrous SQL query that shows me repositories with commits that are ready to be shipped in a release:

with most_recent_releases as (

with ranked as (

select

repo,

tag_name,

published_at,

row_number() OVER (

partition BY repo

ORDER BY

published_at DESC

) rank

FROM

releases

)

select

*

from

ranked

where

rank = 1

)

select

repos.full_name as repo,

most_recent_releases.tag_name as release,

commits.committer_date as release_commit_date,

(

select

count(*)

from

commits c2

where

c2.repo = repos.id

and c2.committer_date > commits.committer_date

) as commits_since_release,

'https://github.com/' || repos.full_name || '/compare/' || most_recent_releases.tag_name || '...' || repos.default_branch as view_commits

from

most_recent_releases

join repos on most_recent_releases.repo = repos.id

join tags on tags.repo = repos.id

and tags.name = most_recent_releases.tag_name

join commits on tags.sha = commits.sha

order by

commits_since_release desc

TIL this week

- Upgrading a Heroku PostgreSQL database with pg:copy

- Registering temporary pluggy plugins inside tests

- Updating a Markdown table of contents with a GitHub Action

Releases this week

- twitter-to-sqlite 0.21.3—2020-07-23

- datasette-clone 0.5—2020-07-22

- datasette-copyable 0.2—2020-07-21

- datasette-copyable 0.1—2020-07-21

- datasette-insert-api 0.4—2020-07-21

- datasette-auth-tokens 0.2.2—2020-07-20

- datasette-insert-api 0.3—2020-07-20

- datasette-insert-api 0.2a—2020-07-19

- twitter-to-sqlite 0.21.2—2020-07-18

- github-to-sqlite 2.4—2020-07-18

More recent articles

- Phoenix.new is Fly's entry into the prompt-driven app development space - 23rd June 2025

- Trying out the new Gemini 2.5 model family - 17th June 2025

- The lethal trifecta for AI agents: private data, untrusted content, and external communication - 16th June 2025