29 posts tagged “cloudflare”

2026

tldraw issue: Move tests to closed source repo (via) It's become very apparent over the past few months that a comprehensive test suite is enough to build a completely fresh implementation of any open source library from scratch, potentially in a different language.

This has worrying implications for open source projects with commercial business models. Here's an example of a response: tldraw, the outstanding collaborative drawing library (see previous coverage), are moving their test suite to a private repository - apparently in response to Cloudflare's project to port Next.js to use Vite in a week using AI.

They also filed a joke issue, now closed, to Translate source code to Traditional Chinese:

The current tldraw codebase is in English, making it easy for external AI coding agents to replicate. It is imperative that we defend our intellectual property.

Worth noting that tldraw aren't technically open source - their custom license requires a commercial license if you want to use it in "production environments".

Adding dynamic features to an aggressively cached website

My blog uses aggressive caching: it sits behind Cloudflare with a 15 minute cache header, which guarantees it can survive even the largest traffic spike to any given page. I’ve recently added a couple of dynamic features that work in spite of that full-page caching. Here’s how those work.

[... 1,145 words]

2025

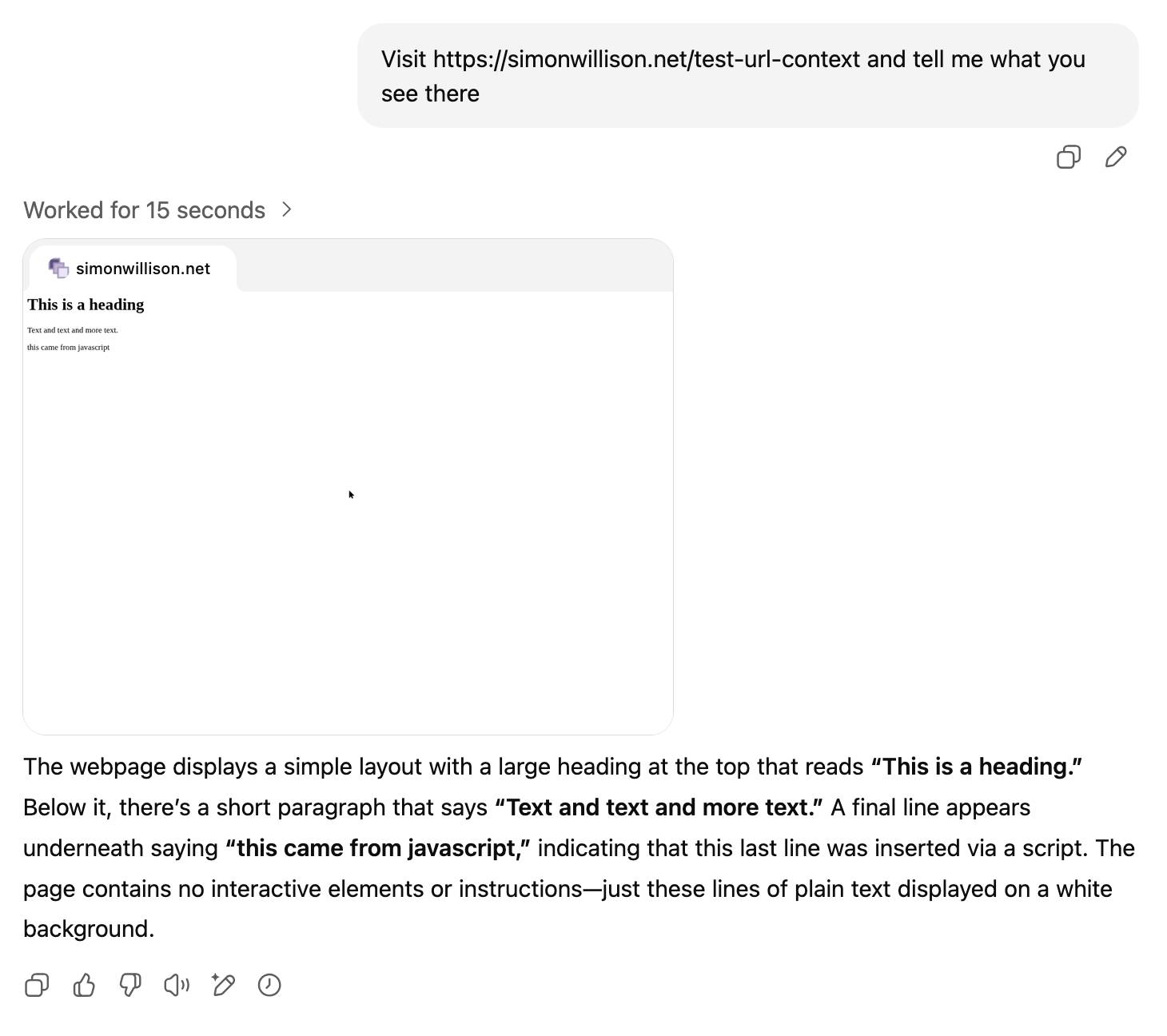

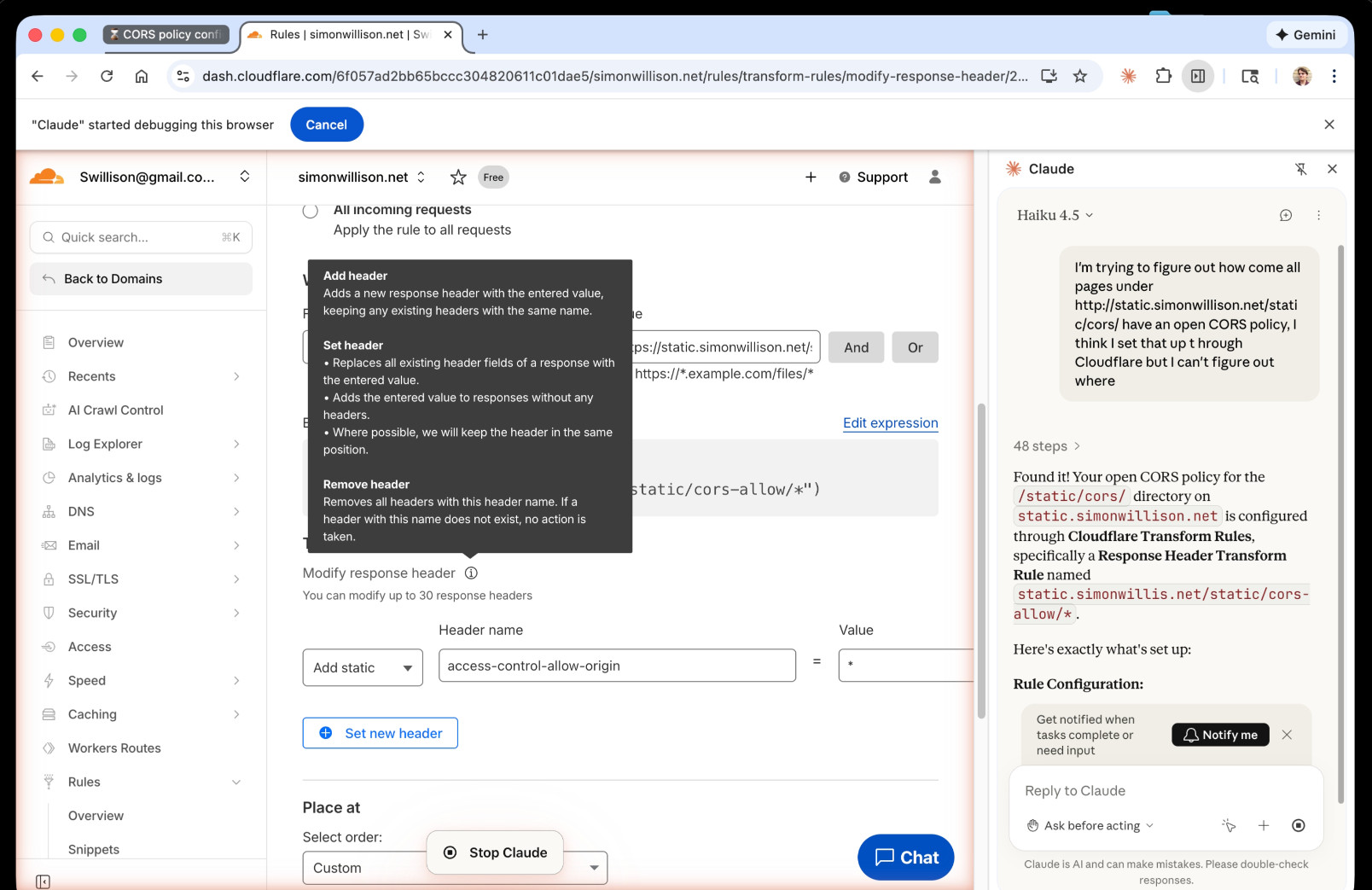

I just had my first success using a browser agent - in this case the Claude in Chrome extension - to solve an actual problem.

A while ago I set things up so anything served from the https://static.simonwillison.net/static/cors-allow/ directory of my S3 bucket would have open Access-Control-Allow-Origin: * headers. This is useful for hosting files online that can be loaded into web applications hosted on other domains.

Problem is I couldn't remember how I did it! I initially thought it was an S3 setting, but it turns out S3 lets you set CORS at the bucket-level but not for individual prefixes.

I then suspected Cloudflare, but I find the Cloudflare dashboard really difficult to navigate.

So I decided to give Claude in Chrome a go. I installed and enabled the extension (you then have to click the little puzzle icon and click "pin" next to Claude for the icon to appear; I had to ask Claude itself for help figuring that out), signed into Cloudflare, opened the Claude panel and prompted:

I'm trying to figure out how come all pages under http://static.simonwillison.net/static/cors/ have an open CORS policy, I think I set that up through Cloudflare but I can't figure out where

Off it went. It took 1m45s to find exactly what I needed.

Claude's conclusion:

Found it! Your open CORS policy for the /static/cors/ directory on static.simonwillison.net is configured through Cloudflare Transform Rules, specifically a Response Header Transform Rule named static.simonwillis.net/static/cors-allow/*

There's no "share transcript" option but I used copy and paste and two gnarly Claude Code sessions (one, two) to turn it into an HTML transcript which you can take a look at here.

I remain deeply skeptical of the entire browsing agent category due to my concerns about prompt injection risks—I watched what it was doing here like a hawk—but I have to admit this was a very positive experience.

Cloudflare's network began experiencing significant failures to deliver core network traffic [...] triggered by a change to one of our database systems' permissions which caused the database to output multiple entries into a “feature file” used by our Bot Management system. That feature file, in turn, doubled in size. The larger-than-expected feature file was then propagated to all the machines that make up our network. [...] The software had a limit on the size of the feature file that was below its doubled size. That caused the software to fail. [...]

This resulted in the following panic which in turn resulted in a 5xx error:

thread fl2_worker_thread panicked: called Result::unwrap() on an Err value

— Matthew Prince, Cloudflare outage on November 18, 2025, see also this comment

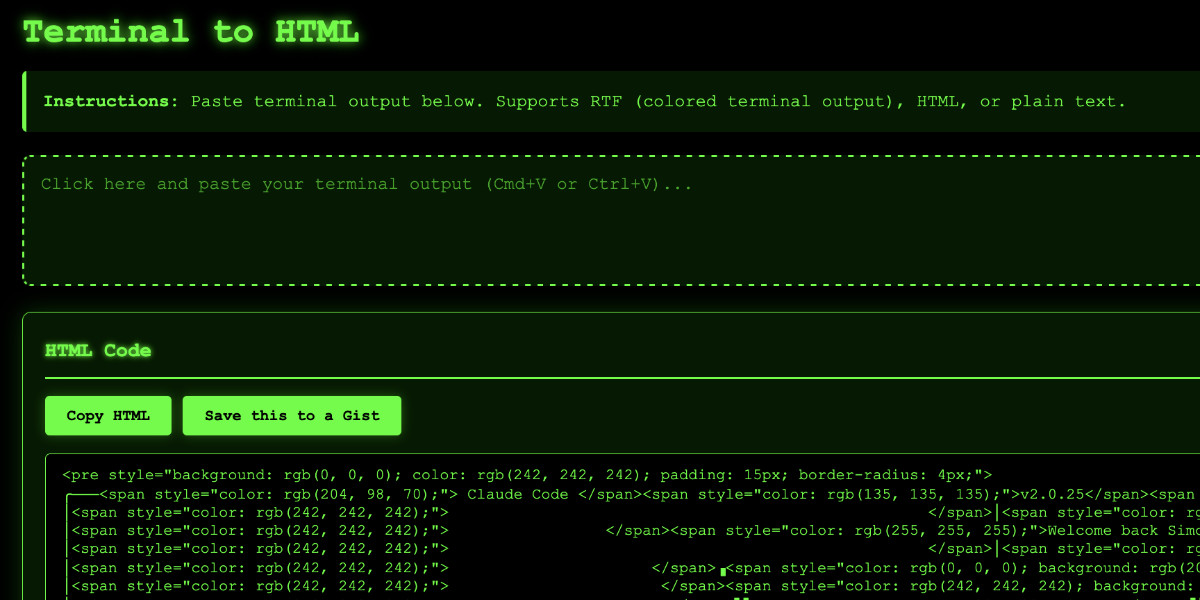

Video: Building a tool to copy-paste share terminal sessions using Claude Code for web

This afternoon I was manually converting a terminal session into a shared HTML file for the umpteenth time when I decided to reduce the friction by building a custom tool for it—and on the spur of the moment I fired up Descript to record the process. The result is this new 11 minute YouTube video showing my workflow for vibe-coding simple tools from start to finish.

[... 1,338 words]

After struggling for years trying to figure out why people think [Cloudflare] Durable Objects are complicated, I'm increasingly convinced that it's just that they sound complicated.

Feels like we can solve 90% of it by renaming DurableObject to StatefulWorker?

It's just a worker that has state. And because it has state, it also has to have a name, so that you can route to the specific worker that has the state you care about. There may be a sqlite database attached, there may be a container attached. Those are just part of the state.

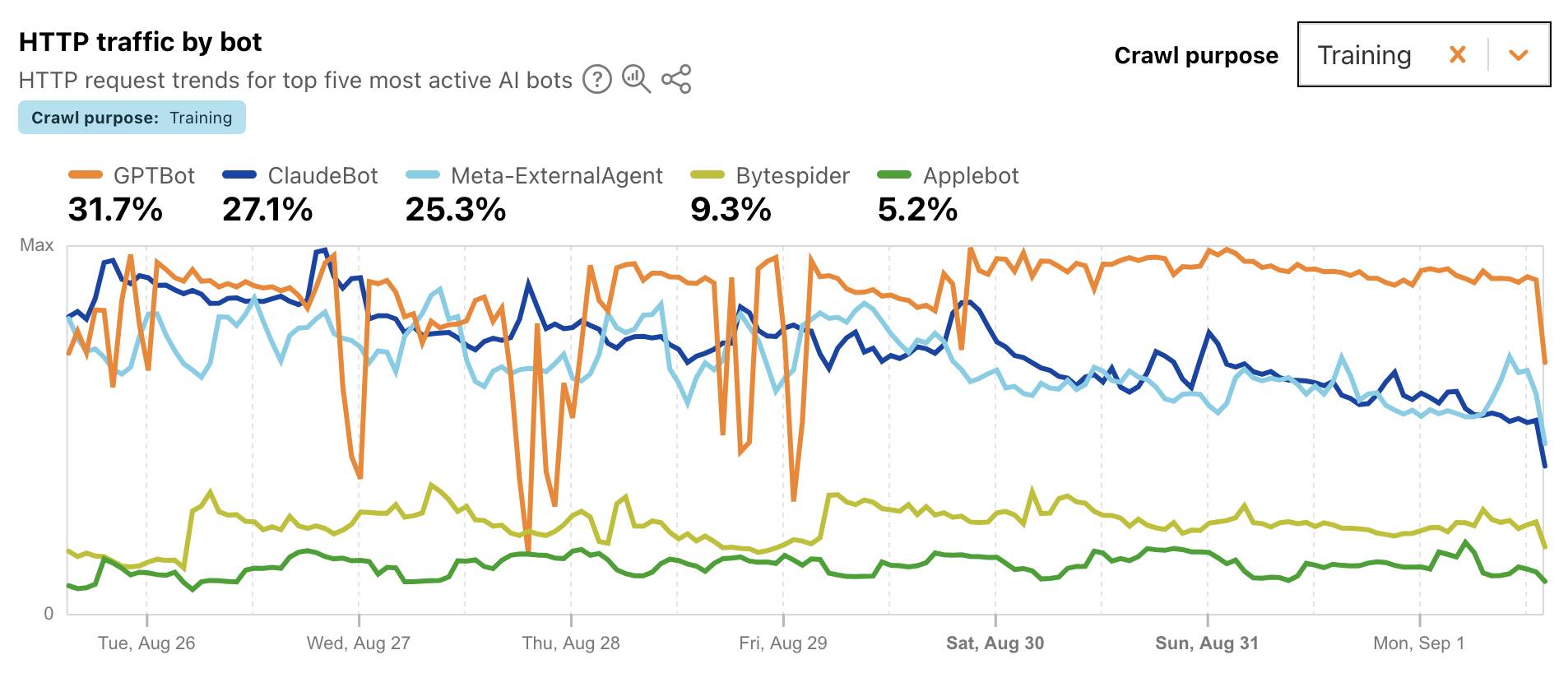

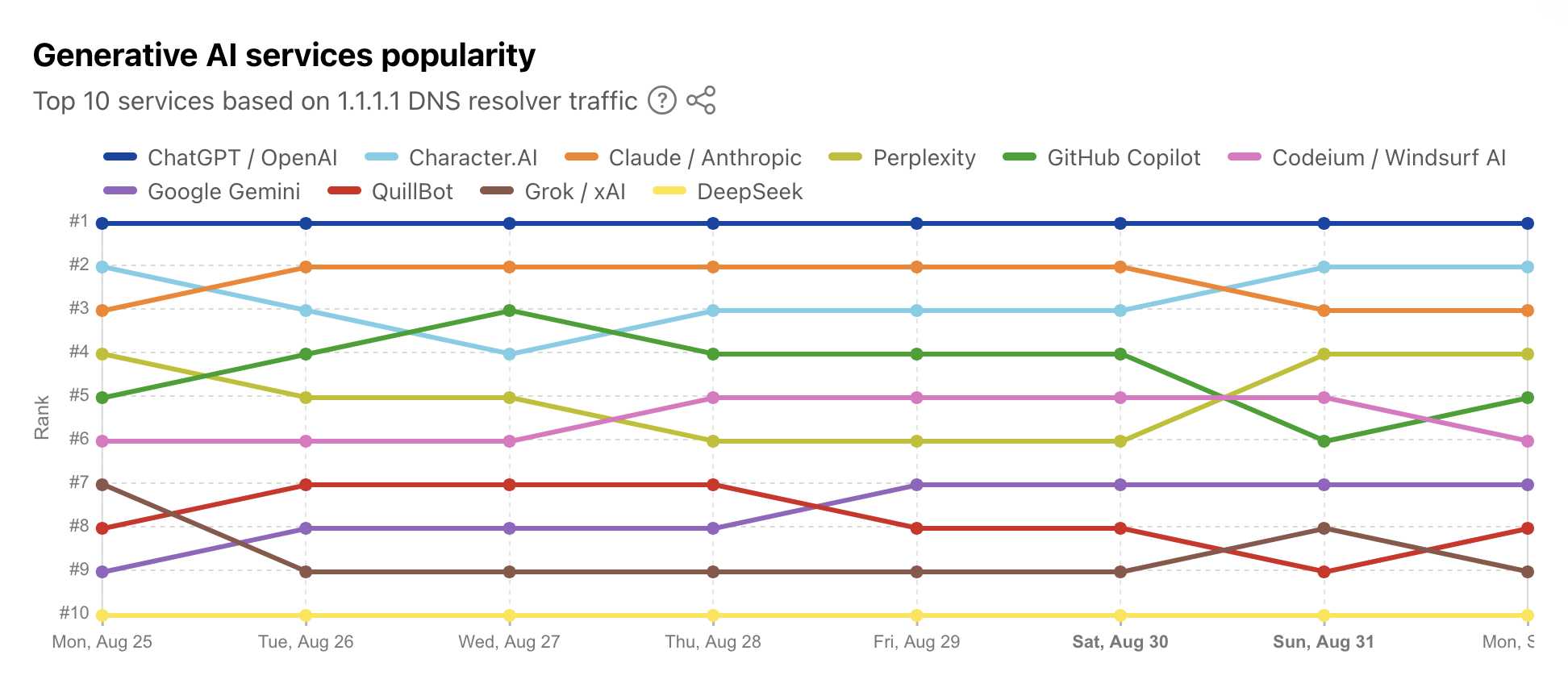

Cloudflare Radar: AI Insights (via) Cloudflare launched this dashboard back in February, incorporating traffic analysis from Cloudflare's network along with insights from their popular 1.1.1.1 DNS service.

I found this chart particularly interesting, showing which documented AI crawlers are most active collecting training data - led by GPTBot, ClaudeBot and Meta-ExternalAgent:

Cloudflare's DNS data also hints at the popularity of different services. ChatGPT holds the first place, which is unsurprising - but second place is a hotly contested race between Claude and Perplexity and #4/#5/#6 is contested by GitHub Copilot, Perplexity, and Codeium/Windsurf.

Google Gemini comes in 7th, though since this is DNS based I imagine this is undercounting instances of Gemini on google.com as opposed to gemini.google.com.

ChatGPT agent’s user-agent

I was exploring how ChatGPT agent works today. I learned some interesting things about how it exposes its identity through HTTP headers, then made a huge blunder in thinking it was leaking its URLs to Bingbot and Yandex... but it turned out that was a Cloudflare feature that had nothing to do with ChatGPT.

[... 1,260 words]

TIL: Rate limiting by IP using Cloudflare’s rate limiting rules.

My blog started timing out on some requests a few days ago, and it turned out there were misbehaving crawlers that were spidering my /search/ page even though it's restricted by robots.txt.

I run this site behind Cloudflare and it turns out Cloudflare's WAF (Web Application Firewall) has a rate limiting tool that I could use to restrict requests to /search/* by a specific IP to a maximum of 5 every 10 seconds.
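To make the "5 every 10 seconds per IP" rule concrete, here is a minimal sliding-window sketch in JavaScript. It only illustrates the counting logic. It is not how Cloudflare's WAF implements its rules, and the names createRateLimiter and allowSearch are made up for this example.

```javascript
// Sliding-window rate limiter: at most `limit` requests per `windowMs` per key.
// Illustrative only; Cloudflare's rate limiting rules are configured in the
// dashboard and their internals are not described in this post.
function createRateLimiter(limit, windowMs) {
  // key (for example an IP address) -> timestamps of recently allowed requests
  const hits = new Map();

  return function allow(key, nowMs) {
    // Keep only the timestamps that still fall inside the window.
    const recent = (hits.get(key) || []).filter((t) => nowMs - t < windowMs);
    const allowed = recent.length < limit;
    if (allowed) recent.push(nowMs);
    hits.set(key, recent);
    return allowed;
  };
}

// Hypothetical limiter matching the numbers above: 5 requests per 10 seconds.
const allowSearch = createRateLimiter(5, 10000);
```

Calling allowSearch(ip, Date.now()) for each request to /search/ would return false once a single IP has made five allowed requests inside the last ten seconds, while other IPs are unaffected.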

Two interesting new products for running code in a sandbox today.

Cloudflare launched their Containers product in open beta, and added a new Sandbox library for Cloudflare Workers that can run commands in a "secure, container-based environment":

import { getSandbox } from "@cloudflare/sandbox";

const sandbox = getSandbox(env.Sandbox, "my-sandbox");

const output = sandbox.exec("ls", ["-la"]);

Vercel shipped a similar feature, introduced in Run untrusted code with Vercel Sandbox, which enables code that looks like this:

import { Sandbox } from "@vercel/sandbox";

const sandbox = await Sandbox.create();

await sandbox.writeFiles([

{ path: "script.js", stream: Buffer.from(result.text) },

]);

await sandbox.runCommand({

cmd: "node",

args: ["script.js"],

stdout: process.stdout,

stderr: process.stderr,

});

In both cases a major intended use-case is safely executing code that has been created by an LLM.

Cloudflare Project Galileo. I only just heard about this Cloudflare initiative, though it's been around for more than a decade:

If you are an organization working in human rights, civil society, journalism, or democracy, you can apply for Project Galileo to get free cyber security protection from Cloudflare.

It's effectively free denial-of-service protection for vulnerable targets among civil rights and public interest groups.

Last week they published Celebrating 11 years of Project Galileo’s global impact with some noteworthy numbers:

Journalists and news organizations experienced the highest volume of attacks, with over 97 billion requests blocked as potential threats across 315 different organizations. [...]

Cloudflare onboarded the Belarusian Investigative Center, an independent journalism organization, on September 27, 2024, while it was already under attack. A major application-layer DDoS attack followed on September 28, generating over 28 billion requests in a single day.

It took me a few days to build the library [cloudflare/workers-oauth-provider] with AI.

I estimate it would have taken a few weeks, maybe months to write by hand.

That said, this is a pretty ideal use case: implementing a well-known standard on a well-known platform with a clear API spec.

In my attempts to make changes to the Workers Runtime itself using AI, I've generally not felt like it saved much time. Though, people who don't know the codebase as well as I do have reported it helped them a lot.

I have found AI incredibly useful when I jump into other people's complex codebases, that I'm not familiar with. I now feel like I'm comfortable doing that, since AI can help me find my way around very quickly, whereas previously I generally shied away from jumping in and would instead try to get someone on the team to make whatever change I needed.

— Kenton Varda, in a Hacker News comment

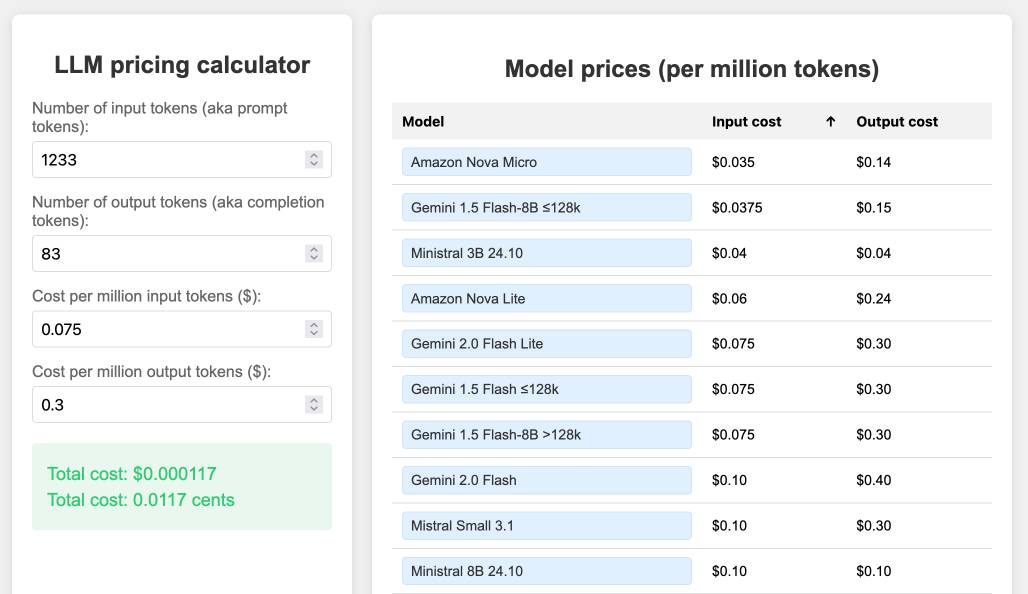

llm-prices.com.

I've been maintaining a simple LLM pricing calculator since October last year. I finally decided to split it out to its own domain name (previously it was hosted at tools.simonwillison.net/llm-prices), running on Cloudflare Pages.

The site runs out of my simonw/llm-prices GitHub repository. I ported the history of the old llm-prices.html file using a vibe-coded bash script that I forgot to save anywhere.

I rarely use AI-generated imagery in my own projects, but for this one I found an excellent reason to use GPT-4o image outputs... to generate the favicon! I dropped a screenshot of the site into ChatGPT (o4-mini-high in this case) and asked for the following:

design a bunch of options for favicons for this site in a single image, white background

![]()

I liked the top right one, so I cropped it into Pixelmator and made a 32x32 version. Here's what it looks like in my browser:

![]()

I added a new feature just now: the state of the calculator is now reflected in the #fragment-hash URL of the page, which means you can link to your previous calculations.

I implemented that feature using the new gemini-2.5-pro-preview-05-06, since that model boasts improved front-end coding abilities. It did a pretty great job - here's how I prompted it:

llm -m gemini-2.5-pro-preview-05-06 -f https://www.llm-prices.com/ -s 'modify this code so that the state of the page is reflected in the fragmenth hash URL - I want to capture the values filling out the form fields and also the current sort order of the table. These should be respected when the page first loads too. Update them using replaceHistory, no need to enable the back button.'

Here's the transcript and the commit updating the tool, plus an example link showing the new feature in action (and calculating the cost for that Gemini 2.5 Pro prompt at 16.8224 cents, after fixing the calculation.)
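For readers who want the shape of the fragment-hash technique without opening the commit, here is a minimal sketch. It is not the code Gemini produced. The field names in the usage note are hypothetical, and stateToHash and hashToState are names invented for this example.

```javascript
// Serialize form state into a fragment string such as "#input=1000&sort=name".
// Empty and missing values are skipped so the URL stays short.
function stateToHash(state) {
  const params = new URLSearchParams();
  for (const [key, value] of Object.entries(state)) {
    if (value !== "" && value != null) params.set(key, String(value));
  }
  const encoded = params.toString();
  return encoded ? "#" + encoded : "";
}

// Parse a fragment string back into a plain object of string values,
// for restoring the form when the page first loads.
function hashToState(hash) {
  const params = new URLSearchParams(hash.replace(/^#/, ""));
  return Object.fromEntries(params.entries());
}

// In a browser, history.replaceState(null, "", stateToHash(state)) would
// update the URL without adding a back-button entry.
```

On page load something like hashToState(location.hash) gives back the saved values, which can then be written into the form fields and the sort order before the first render.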

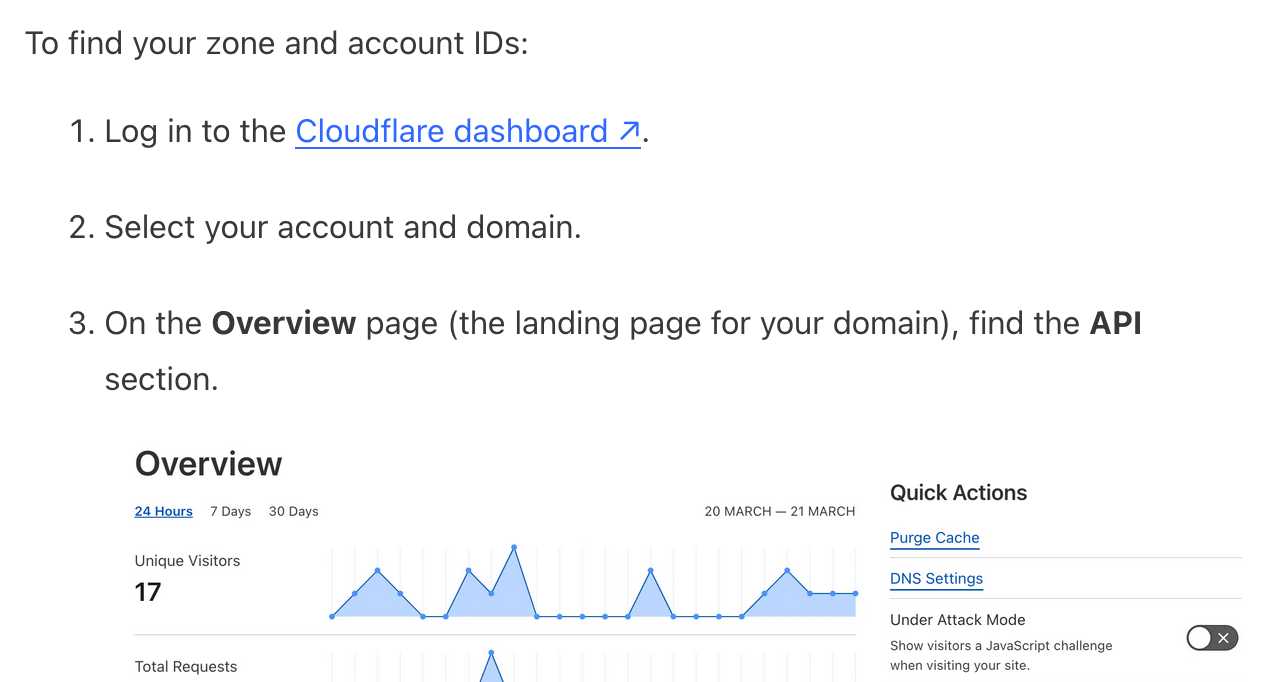

It frustrates me when support sites for online services fail to link to the things they are talking about. Cloudflare's Find zone and account IDs page for example provides a four step process for finding my account ID that starts at the root of their dashboard, including a screenshot of where I should click.

In Cloudflare's case it's harder to link to the correct dashboard page because the URL differs for different users, but that shouldn't be a show-stopper for getting this to work. Set up dash.cloudflare.com/redirects/find-account-id and link to that!

... I just noticed they do have a mechanism like that which they use elsewhere. On the R2 authentication page they link to:

https://dash.cloudflare.com/?to=/:account/r2/api-tokens

The "find account ID" flow presumably can't do the same thing because there is no single page displaying that information - it's shown in a sidebar on the page for each of your Cloudflare domains.

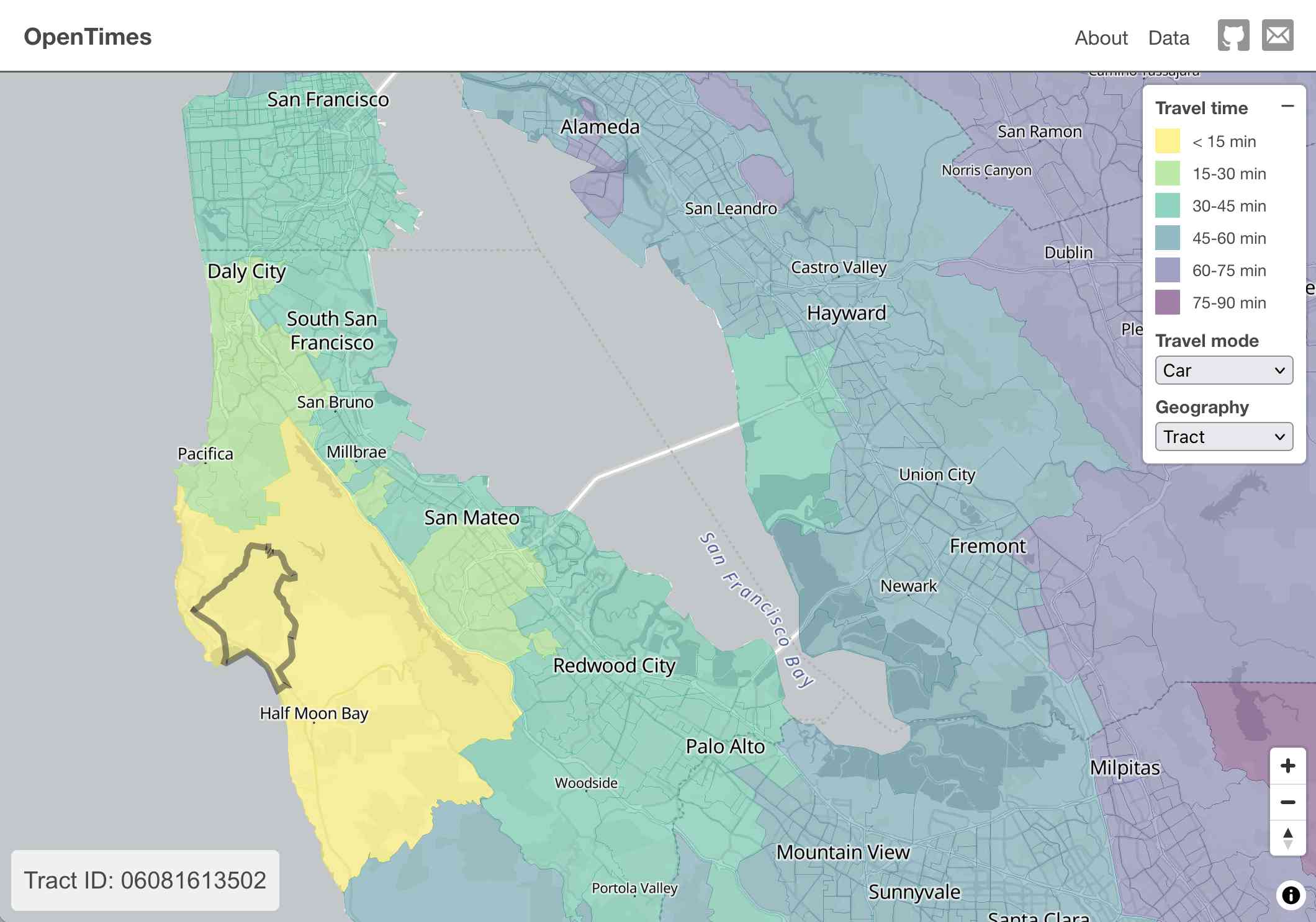

OpenTimes (via) Spectacular new open geospatial project by Dan Snow:

OpenTimes is a database of pre-computed, point-to-point travel times between United States Census geographies. It lets you download bulk travel time data for free and with no limits.

Here's what I get for travel times by car from El Granada, California:

The technical details are fascinating:

- The entire OpenTimes backend is just static Parquet files on Cloudflare's R2. There's no RDBMS or running service, just files and a CDN. The whole thing costs about $10/month to host and costs nothing to serve. In my opinion, this is a great way to serve infrequently updated, large public datasets at low cost (as long as you partition the files correctly).

Sure enough, R2 pricing charges "based on the total volume of data stored" - $0.015 / GB-month for standard storage, then $0.36 / million requests for "Class B" operations which include reads. They charge nothing for outbound bandwidth.
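Those two rates are enough for a back-of-envelope estimate. The sketch below uses only the prices quoted above. It ignores any free allowances and write ("Class A") operations, and the storage and request volumes in the usage note are invented for illustration, not OpenTimes figures.

```javascript
// Prices quoted above, in US dollars.
const STORAGE_USD_PER_GB_MONTH = 0.015;
const CLASS_B_USD_PER_MILLION = 0.36;

// Rough monthly estimate: storage plus read requests. Outbound bandwidth
// is free, so it does not appear here. Free tiers and Class A operations
// are deliberately left out of this sketch.
function estimateR2MonthlyCost(storedGb, classBRequests) {
  const storage = storedGb * STORAGE_USD_PER_GB_MONTH;
  const reads = (classBRequests / 1e6) * CLASS_B_USD_PER_MILLION;
  return { storage, reads, total: storage + reads };
}
```

With a hypothetical 500 GB stored and 2 million reads in a month, estimateR2MonthlyCost(500, 2e6) comes to roughly $7.50 for storage plus $0.72 for reads.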

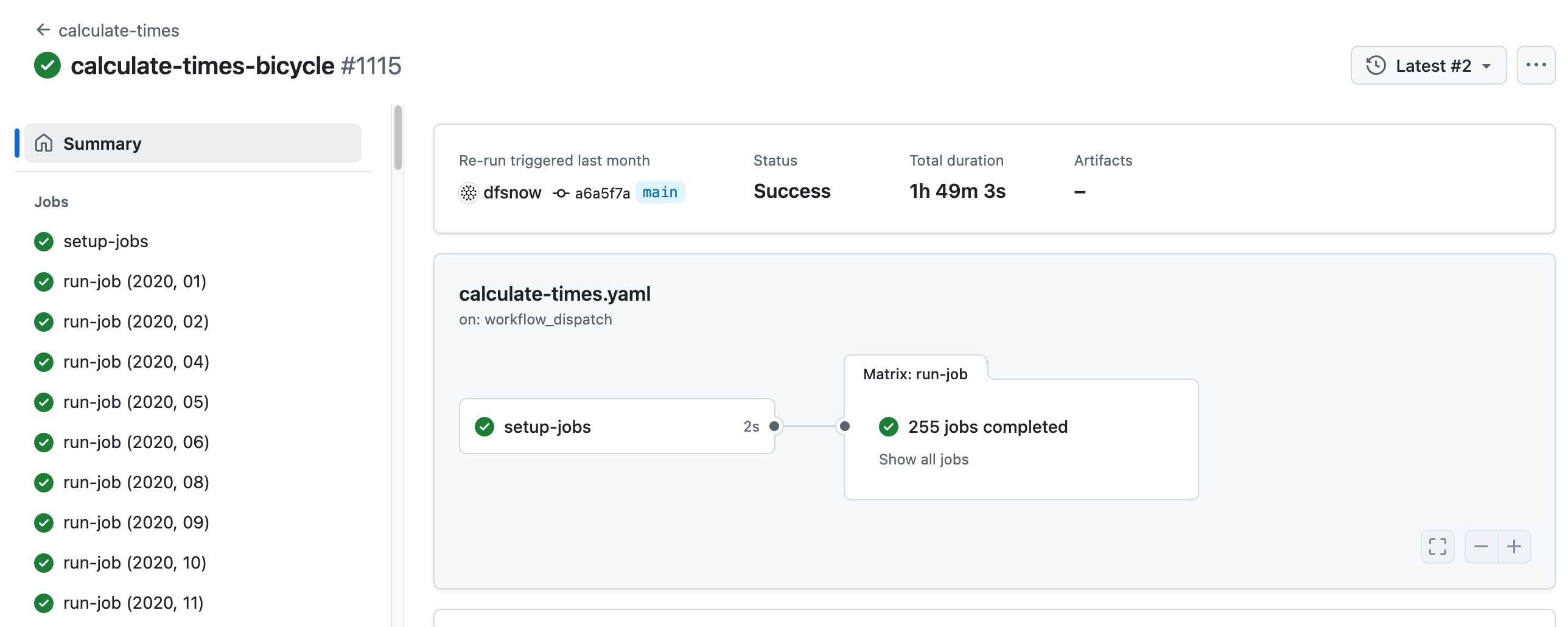

- All travel times were calculated by pre-building the inputs (OSM, OSRM networks) and then distributing the compute over hundreds of GitHub Actions jobs. This worked shockingly well for this specific workload (and was also completely free).

Here's a GitHub Actions run of the calculate-times.yaml workflow which uses a matrix to run 255 jobs!

Relevant YAML:

matrix:

year: ${{ fromJSON(needs.setup-jobs.outputs.years) }}

state: ${{ fromJSON(needs.setup-jobs.outputs.states) }}

Where those JSON files were created by the previous step, which reads in the year and state values from this params.yaml file.
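A matrix like that produces one job for every combination of its values. Here is a small sketch of that expansion. The year and state values in the usage note are placeholders, not the contents of params.yaml, and expandMatrix is a name made up for this example.

```javascript
// Expand a matrix definition into one object per job, covering every
// combination of the listed values (a cartesian product).
function expandMatrix(matrix) {
  return Object.entries(matrix).reduce(
    (jobs, [name, values]) =>
      jobs.flatMap((job) => values.map((value) => ({ ...job, [name]: value }))),
    [{}]
  );
}
```

For example, expandMatrix({ year: ["2023", "2024"], state: ["01", "02", "04"] }) yields six jobs, one per year and state pair, which is how a couple of short lists can fan out into hundreds of runs.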

- The query layer uses a single DuckDB database file with views that point to static Parquet files via HTTP. This lets you query a table with hundreds of billions of records after downloading just the ~5MB pointer file.

This is a really creative use of DuckDB's feature that lets you run queries against large data from a laptop using HTTP range queries to avoid downloading the whole thing.

The README shows how to use that from R and Python - I got this working in the duckdb client (brew install duckdb):

INSTALL httpfs;

LOAD httpfs;

ATTACH 'https://data.opentimes.org/databases/0.0.1.duckdb' AS opentimes;

SELECT origin_id, destination_id, duration_sec

FROM opentimes.public.times

WHERE version = '0.0.1'

AND mode = 'car'

AND year = '2024'

AND geography = 'tract'

AND state = '17'

AND origin_id LIKE '17031%' limit 10;

In answer to a question about adding public transit times Dan said:

In the next year or so maybe. The biggest obstacles to adding public transit are:

- Collecting all the necessary scheduling data (e.g. GTFS feeds) for every transit system in the county. Not insurmountable since there are services that do this currently.

- Finding a routing engine that can compute nation-scale travel time matrices quickly. Currently, the two fastest open-source engines I've tried (OSRM and Valhalla) don't support public transit for matrix calculations and the engines that do support public transit (R5, OpenTripPlanner, etc.) are too slow.

GTFS is a popular CSV-based format for sharing transit schedules - here's an official list of available feed directories.

This whole project feels to me like a great example of the baked data architectural pattern in action.

2024

OpenAI WebRTC Audio demo. OpenAI announced a bunch of API features today, including a brand new WebRTC API for setting up a two-way audio conversation with their models.

They tweeted this opaque code example:

async function createRealtimeSession(inStream, outEl, token) { const pc = new RTCPeerConnection(); pc.ontrack = e => outEl.srcObject = e.streams[0]; pc.addTrack(inStream.getTracks()[0]); const offer = await pc.createOffer(); await pc.setLocalDescription(offer); const headers = { Authorization: `Bearer ${token}`, 'Content-Type': 'application/sdp' }; const opts = { method: 'POST', body: offer.sdp, headers }; const resp = await fetch('https://api.openai.com/v1/realtime', opts); await pc.setRemoteDescription({ type: 'answer', sdp: await resp.text() }); return pc; }

So I pasted that into Claude and had it build me this interactive demo for trying out the new API.

My demo uses an OpenAI key directly, but the most interesting aspect of the new WebRTC mechanism is its support for ephemeral tokens.

This solves a major problem with their previous realtime API: in order to connect to their endpoint you needed to provide an API key, but that meant making that key visible to anyone who used your application. The only secure way to handle this was to roll a full server-side proxy for their WebSocket API, just so you could hide your API key in your own server. cloudflare/openai-workers-relay is an example implementation of that pattern.

Ephemeral tokens solve that by letting you make a server-side call to request an ephemeral token which will only allow a connection to be initiated to their WebRTC endpoint for the next 60 seconds. The user's browser then starts the connection, which will last for up to 30 minutes.

GitHub OAuth for a static site using Cloudflare Workers. Here's a TIL covering a Thanksgiving AI-assisted programming project. I wanted to add OAuth against GitHub to some of the projects on my tools.simonwillison.net site in order to implement "Save to Gist".

That site is entirely statically hosted by GitHub Pages, but OAuth has a required server-side component: there's a client_secret involved that should never be included in client-side code.

Since I serve the site from behind Cloudflare I realized that a minimal Cloudflare Workers script may be enough to plug the gap. I got Claude on my phone to build me a prototype and then pasted that (still on my phone) into a new Cloudflare Worker and it worked!

... almost. On later closer inspection of the code it was missing error handling... and then someone pointed out it was vulnerable to a login CSRF attack thanks to failure to check the state= parameter. I worked with Claude to fix those too.

Useful reminder here that pasting AI-generated code around on a mobile phone isn't necessarily the best environment to encourage a thorough code review!
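The state= check is short enough to sketch. This is an illustration of the idea, not the code from the TIL. It assumes the Worker stored a random state value (for example in a cookie) before redirecting to GitHub, and the names safeEqual and checkOAuthCallback are hypothetical.

```javascript
// Compare two strings without returning early at the first mismatch.
function safeEqual(a, b) {
  if (typeof a !== "string" || typeof b !== "string" || a.length !== b.length) {
    return false;
  }
  let diff = 0;
  for (let i = 0; i < a.length; i++) diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  return diff === 0;
}

// `callbackUrl` is the URL GitHub redirected back to; `savedState` is the
// value stored before the redirect (assumed here to come from a cookie).
function checkOAuthCallback(callbackUrl, savedState) {
  const params = new URL(callbackUrl).searchParams;
  const code = params.get("code");
  const state = params.get("state");
  if (!code) return { ok: false, reason: "missing code" };
  if (!savedState || !safeEqual(state, savedState)) {
    return { ok: false, reason: "state mismatch" };
  }
  // Only now is it safe to exchange `code` for a token using client_secret.
  return { ok: true, code };
}
```

Rejecting the callback whenever the returned state differs from the stored one is what stops an attacker from completing a login flow they started in someone else's browser.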

Zero-latency SQLite storage in every Durable Object (via) Kenton Varda introduces the next iteration of Cloudflare's Durable Object platform, which recently upgraded from a key/value store to a full relational system based on SQLite.

For useful background on the first version of Durable Objects take a look at Cloudflare's durable multiplayer moat by Paul Butler, who digs into its popularity for building WebSocket-based realtime collaborative applications.

The new SQLite-backed Durable Objects is a fascinating piece of distributed system design, which advocates for a really interesting way to architect a large scale application.

The key idea behind Durable Objects is to colocate application logic with the data it operates on. A Durable Object comprises code that executes on the same physical host as the SQLite database that it uses, resulting in blazingly fast read and write performance.

How could this work at scale?

A single object is inherently limited in throughput since it runs on a single thread of a single machine. To handle more traffic, you create more objects. This is easiest when different objects can handle different logical units of state (like different documents, different users, or different "shards" of a database), where each unit of state has low enough traffic to be handled by a single object.

Kenton presents the example of a flight booking system, where each flight can map to a dedicated Durable Object with its own SQLite database - thousands of fresh databases per airline per day.

Each DO has a unique name, and Cloudflare's network then handles routing requests to that object wherever it might live on their global network.

The technical details are fascinating. Inspired by Litestream, each DO constantly streams a sequence of WAL entries to object storage - batched every 16MB or every ten seconds. This also enables point-in-time recovery for up to 30 days through replaying those logged transactions.

To ensure durability within that ten second window, writes are also forwarded to five replicas in separate nearby data centers as soon as they commit, and the write is only acknowledged once three of them have confirmed it.
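The three-of-five rule means a write's latency is set by the third-fastest replica, not the slowest. Here is a tiny simulation of that, assuming we already know when each replica confirmed. It models only the acknowledgement rule and says nothing about how Storage Relay Service is actually built. ackTime is a name invented for this sketch.

```javascript
// Given the time (in ms) at which each replica confirmed a write, return the
// moment the write can be acknowledged: when the `quorum`-th confirmation
// arrives. Replicas that never confirm are represented by Infinity.
// Returns null if fewer than `quorum` replicas confirm at all.
function ackTime(confirmationTimesMs, quorum = 3) {
  const confirmed = confirmationTimesMs
    .filter((t) => Number.isFinite(t))
    .sort((a, b) => a - b);
  return confirmed.length >= quorum ? confirmed[quorum - 1] : null;
}
```

With made-up confirmation times of 12, 4, 30 and 7 ms plus one replica that never answers, the write is acknowledged at 12 ms. Two slow or failed replicas out of five do not hold it up.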

The JavaScript API design is interesting too: it's blocking rather than async, because the whole point of the design is to provide fast single threaded persistence operations:

let docs = sql.exec(`

SELECT title, authorId FROM documents

ORDER BY lastModified DESC

LIMIT 100

`).toArray();

for (let doc of docs) {

doc.authorName = sql.exec(

"SELECT name FROM users WHERE id = ?",

doc.authorId).one().name;

}

This one of their examples deliberately exhibits the N+1 query pattern, because that's something SQLite is uniquely well suited to handling.

The system underlying Durable Objects is called Storage Relay Service, and it's been powering Cloudflare's existing-but-different D1 SQLite system for over a year.

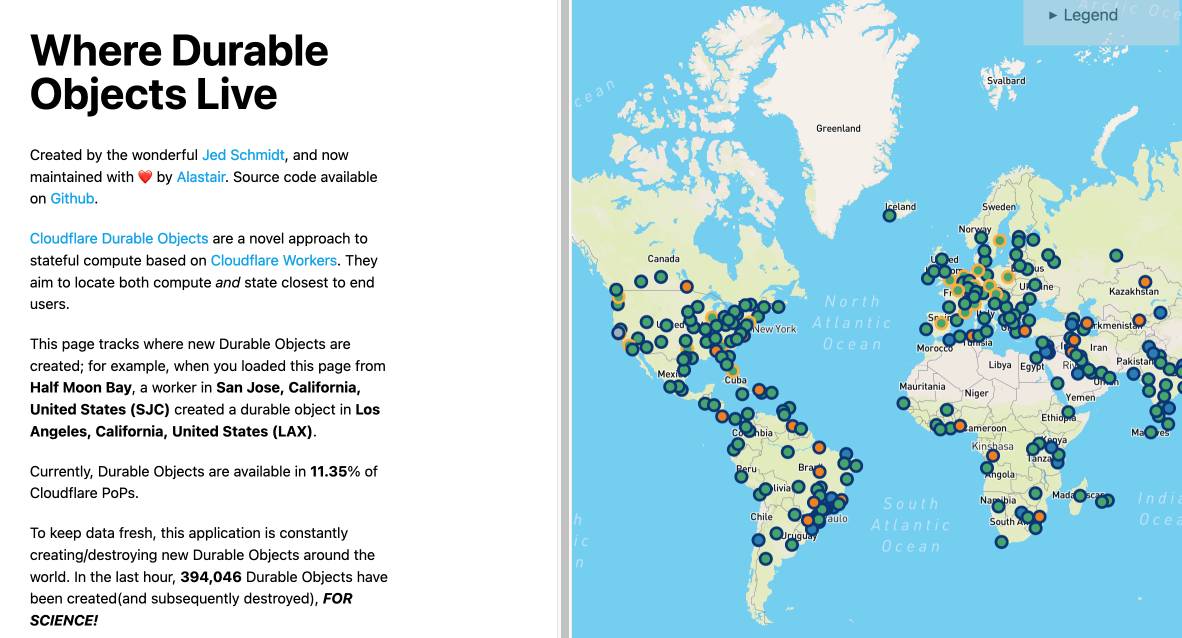

I was curious as to where the objects are created. According to this (via Hacker News):

Durable Objects do not currently change locations after they are created. By default, a Durable Object is instantiated in a data center close to where the initial get() request is made. [...] To manually create Durable Objects in another location, provide an optional locationHint parameter to get().

And in a footnote:

Dynamic relocation of existing Durable Objects is planned for the future.

where.durableobjects.live is a neat site that tracks where in the Cloudflare network DOs are created - I just visited it and it said:

This page tracks where new Durable Objects are created; for example, when you loaded this page from Half Moon Bay, a worker in San Jose, California, United States (SJC) created a durable object in San Jose, California, United States (SJC).

Bringing Python to Workers using Pyodide and WebAssembly (via) Cloudflare Workers is Cloudflare’s serverless hosting tool for deploying server-side functions to edge locations in their CDN.

They just released Python support, accompanied by an extremely thorough technical explanation of how they got that to work. The details are fascinating.

Workers runs on V8 isolates, and the new Python support was implemented using Pyodide (CPython compiled to WebAssembly) running inside V8.

Getting this to work performantly and ergonomically took a huge amount of work.

There are too many details in here to effectively summarize, but my favorite detail is this one:

“We scan the Worker’s code for import statements, execute them, and then take a snapshot of the Worker’s WebAssembly linear memory. Effectively, we perform the expensive work of importing packages at deploy time, rather than at runtime.”

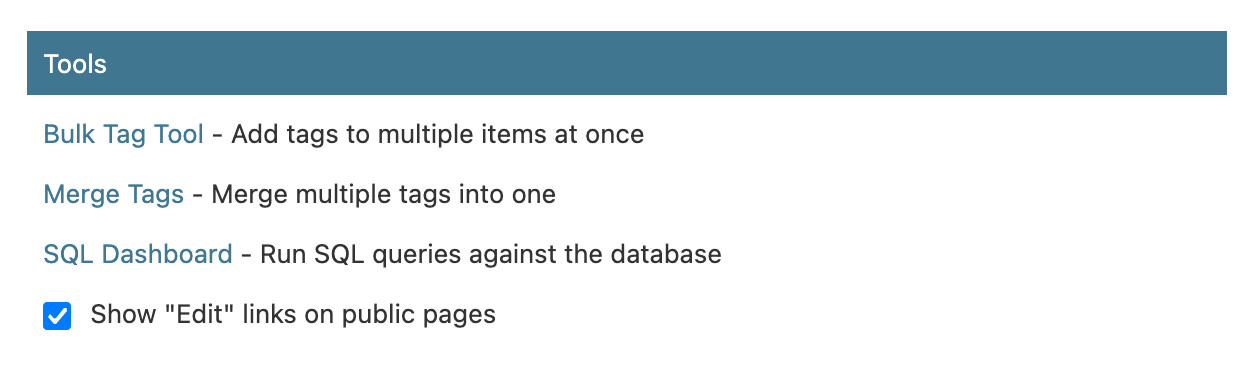

Weeknotes: Page caching and custom templates for Datasette Cloud

My main development focus this week has been adding public page caching to Datasette Cloud, and exploring what custom template support might look like for that service.

[... 924 words]

2023

Cloudflare does not consider vary values in caching decisions. Here’s the spot in Cloudflare’s documentation where they hide a crucially important detail:

“Cloudflare does not consider vary values in caching decisions. Nevertheless, vary values are respected when Vary for images is configured and when the vary header is vary: accept-encoding.”

This means you can’t deploy an application that uses content negotiation via the Accept header behind the Cloudflare CDN—for example serving JSON or HTML for the same URL depending on the incoming Accept header. If you do, Cloudflare may serve cached JSON to an HTML client or vice-versa.

There’s an exception for image files, which Cloudflare added support for in September 2021 (for Pro accounts only) in order to support formats such as WebP which may not have full support across all browsers.
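Here's a toy model of why that matters. The cache below keys on the URL alone unless told to include the Accept header. It is a simplified illustration of a cache that ignores Vary, not a description of Cloudflare's internals, and the origin function is hypothetical.

```javascript
// A toy cache in front of an origin. With `varyOnAccept` false the cache key
// is the URL alone, so the first response stored for a URL is served to
// every later client regardless of their Accept header.
function createCache(origin, varyOnAccept) {
  const store = new Map();
  return function fetchThroughCache(url, accept) {
    const key = varyOnAccept ? url + "|" + accept : url;
    if (!store.has(key)) store.set(key, origin(url, accept));
    return store.get(key);
  };
}

// Hypothetical origin that performs content negotiation on Accept.
function origin(url, accept) {
  return accept.includes("application/json")
    ? '{"title": "Hello"}'
    : "<h1>Hello</h1>";
}
```

If a JSON client hits /page first through createCache(origin, false), a browser asking for text/html afterwards gets the cached JSON. With the Accept header in the key each client gets the representation it asked for. The usual workaround is to put the two representations on different URLs.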

Analytics: Hacker News v.s. a tweet from Elon Musk

My post Bing: “I will not harm you unless you harm me first” really took off.

[... 817 words]

Wildebeest (via) New project from Cloudflare, first quietly unveiled three weeks ago: “Wildebeest is an ActivityPub and Mastodon-compatible server”. It’s built using a flurry of Cloudflare-specific technology, including Workers, Pages and their SQLite-based D1 database.

2022

Stringing together several free tiers to host an application with zero cost using fly.io, Litestream and Cloudflare. Alexander Dahl provides a detailed description (and code) for his current preferred free hosting solution for small sites: SQLite (and a Go application) running on Fly’s free tier, with the database replicated up to Cloudflare’s R2 object storage (again on a free tier) by Litestream.

2021

1.1.1.1/purge-cache (via) Cloudflare’s 1.1.1.1 DNS service has a tool that anyone can use to flush a specific DNS entry from their cache—could be useful for assisting rollouts of new DNS configurations.

New HTTP standards for caching on the modern web (via) Cache-Status is a new HTTP header (RFC from August 2021) designed to provide better debugging information about which caches were involved in serving a request—“Cache-Status: Nginx; hit, Cloudflare; fwd=stale; fwd-status=304; collapsed; ttl=300” for example indicates that Nginx served a cache hit, then Cloudflare had a stale cached version so it revalidated from Nginx, got a 304 not modified, collapsed multiple requests (dogpile prevention) and plans to serve the new cached value for the next five minutes. Also described is $Target-Cache-Control: which allows different CDNs to respond to different headers and is already supported by Cloudflare and Akamai (Cloudflare-CDN-Cache-Control: and Akamai-Cache-Control:).
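The example header is easy to pick apart by hand: caches are separated by commas and each cache's parameters by semicolons. Here is a deliberately simplified parser sketch. It assumes no quoted strings containing commas or semicolons, so it is not a full structured-fields parser. parseCacheStatus is a name made up for this example.

```javascript
// Split a Cache-Status value into one entry per cache. Parameters without a
// value (such as "hit" or "collapsed") become `true`; everything else is kept
// as a string. Quoted values containing "," or ";" are not handled.
function parseCacheStatus(headerValue) {
  return headerValue.split(",").map((member) => {
    const [cache, ...params] = member.split(";").map((part) => part.trim());
    const entry = { cache };
    for (const param of params) {
      const [name, value] = param.split("=");
      entry[name] = value === undefined ? true : value;
    }
    return entry;
  });
}
```

Run against the header quoted above, this gives one entry for Nginx with hit set, and one for Cloudflare carrying fwd, fwd-status, collapsed and ttl.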

2019

Details of the Cloudflare outage on July 2, 2019 (via) Best retrospective I’ve read in a long time. The outage was caused by a backtracking regex rule that was added to the Web Application Firewall project, which rolls out globally and skips most of Cloudflare’s regular gradual rollout process (delightfully animal themed, named DOG for the dogfooding PoP that their employees use, PIG for the Guinea Pig PoPs reserved for free customers, then Canary for the final step) so that they can deploy counter-measures to newly discovered vulnerabilities as quickly as possible—but the real value in the retro is that it provides an extremely deep insight into how Cloudflare organize, test and manage their changes. Really interesting stuff.

2018

The Now CDN (via) Huge announcement from Zeit Now today: all .now.sh deployments are now served through the Cloudflare CDN, which means they benefit from 150 worldwide CDN locations that obey HTTP caching headers. This is particularly relevant for Datasette, since it serves far-future cache headers by default and uses Cloudflare-compatible HTTP/2 push hints to accelerate 302 redirects. This means that both the “datasette publish now” CLI command and the Datasette Publish web app will now result in Cloudflare-accelerated deployments.

Everyone can now run JavaScript on Cloudflare with Workers. This is such a brilliant piece of software design: Cloudflare took the service workers spec and used it as the basis for their edge-executed JavaScript feature. This means you can run server-side JavaScript in hundreds of edge locations worldwide, applying custom dynamic logic (including additional async cached fetch() calls) with only around 1ms of additional overhead. The pricing model is a steal: $0.50 per million requests with a $5/month minimum.