41 items tagged “privacy”

2024

One of the core constitutional principles that guides our AI model development is privacy. We do not train our generative models on user-submitted data unless a user gives us explicit permission to do so. To date we have not used any customer or user-submitted data to train our generative models.

Private Cloud Compute: A new frontier for AI privacy in the cloud. Here are the details about Apple's Private Cloud Compute infrastructure, and they are pretty extraordinary.

The goal with PCC is to allow Apple to run larger AI models that won't fit on a device, but in a way that guarantees that private data passed from the device to the cloud cannot leak in any way - not even to Apple engineers with SSH access who are debugging an outage.

This is an extremely challenging problem, and their proposed solution includes a wide range of new innovations in private computing.

The most impressive part is their approach to technically enforceable guarantees and verifiable transparency. How do you ensure that privacy isn't broken by a future code change? And how can you allow external experts to verify that the software running in your data center is the same software that they have independently audited?

When we launch Private Cloud Compute, we’ll take the extraordinary step of making software images of every production build of PCC publicly available for security research. This promise, too, is an enforceable guarantee: user devices will be willing to send data only to PCC nodes that can cryptographically attest to running publicly listed software.

These code releases will be included in an "append-only and cryptographically tamper-proof transparency log" - similar to certificate transparency logs.
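To make "append-only and tamper-proof" a little more concrete, here is a minimal sketch of a hash-chained log. This is an assumption-heavy illustration, not Apple's design: real transparency logs such as certificate transparency use Merkle trees, and the `TransparencyLog` class and `GENESIS` value below are names I made up for the example.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical placeholder hash that precedes the first entry.
GENESIS = "0" * 64


def _entry_hash(prev_hash: str, payload: str) -> str:
    """Hash an entry together with the hash of the entry before it."""
    return hashlib.sha256(f"{prev_hash}\n{payload}".encode("utf-8")).hexdigest()


@dataclass
class TransparencyLog:
    # Each entry is a (payload, chained_hash) tuple.
    entries: list = field(default_factory=list)

    def append(self, payload: str) -> str:
        """Add a payload and return its chained hash."""
        prev = self.entries[-1][1] if self.entries else GENESIS
        digest = _entry_hash(prev, payload)
        self.entries.append((payload, digest))
        return digest

    def verify(self) -> bool:
        """Recompute the whole chain; any edited entry breaks it."""
        prev = GENESIS
        for payload, digest in self.entries:
            if _entry_hash(prev, payload) != digest:
                return False
            prev = digest
        return True
```

Because every hash covers the one before it, rewriting an old entry changes every later hash, so anyone holding the latest hash can detect the change.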

Thoughts on the WWDC 2024 keynote on Apple Intelligence

Today’s WWDC keynote finally revealed Apple’s new set of AI features. The AI section (Apple are calling it Apple Intelligence) started over an hour into the keynote—this link jumps straight to that point in the archived YouTube livestream, or you can watch it embedded here:

[... 855 words]

Update on the Recall preview feature for Copilot+ PCs (via) This feels like a very good call to me: in response to widespread criticism Microsoft are making Recall an opt-in feature (during system onboarding), adding encryption to the database and search index beyond just disk encryption and requiring Windows Hello face scanning to access the search feature.

In fact, Microsoft goes so far as to promise that it cannot see the data collected by Windows Recall, that it can't train any of its AI models on your data, and that it definitely can't sell that data to advertisers. All of this is true, but that doesn't mean people believe Microsoft when it says these things. In fact, many have jumped to the conclusion that even if it's true today, it won't be true in the future.

Stealing everything you’ve ever typed or viewed on your own Windows PC is now possible with two lines of code — inside the Copilot+ Recall disaster (via) Recall is a new feature in Windows 11 which takes a screenshot every few seconds, runs local device OCR on it and stores the resulting text in a SQLite database. This means you can search back through your previous activity, against local data that has remained on your device.

The security and privacy implications here are still enormous because malware can now target a single file with huge amounts of valuable information:

During testing this with an off the shelf infostealer, I used Microsoft Defender for Endpoint — which detected the off the shelve infostealer — but by the time the automated remediation kicked in (which took over ten minutes) my Recall data was already long gone.

I like Kevin Beaumont's argument here about the subset of users this feature is appropriate for:

At a surface level, it is great if you are a manager at a company with too much to do and too little time as you can instantly search what you were doing about a subject a month ago.

In practice, that audience’s needs are a very small (tiny, in fact) portion of Windows userbase — and frankly talking about screenshotting the things people in the real world, not executive world, is basically like punching customers in the face.

But increasingly, I’m worried that attempts to crack down on the cryptocurrency industry — scummy though it may be — may result in overall weakening of financial privacy, and may hurt vulnerable people the most. As they say, “hard cases make bad law”.

Text Embeddings Reveal (Almost) As Much As Text. Embeddings of text (where a text string is converted into a fixed-length array of floating point numbers) are demonstrably reversible: “a multi-step method that iteratively corrects and re-embeds text is able to recover 92% of 32-token text inputs exactly”.

This means that if you’re using a vector database for embeddings of private data you need to treat those embedding vectors with the same level of protection as the original text.

2023

Google was accidentally leaking its Bard AI chats into public search results. I’m quoted in this piece about yesterday’s Bard privacy bug: it turned out the share URL and “Let anyone with the link see what you’ve selected” feature wasn’t correctly setting a noindex parameter, and so some shared conversations were being swept up by the Google search crawlers. Thankfully this was a mistake, not a deliberate design decision, and it should be fixed by now.

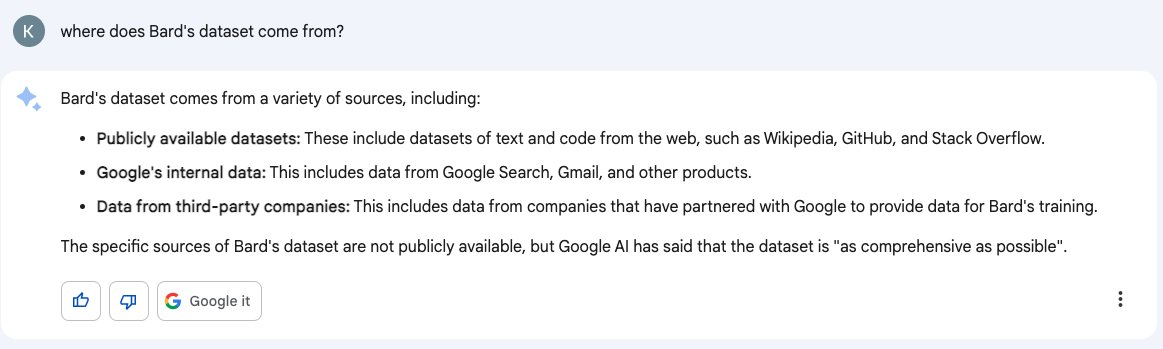

Don’t trust AI to talk accurately about itself: Bard wasn’t trained on Gmail

Earlier this month I wrote about how ChatGPT can’t access the internet, even though it really looks like it can. Consider this part two in the series. Here’s another common and non-intuitive mistake people make when interacting with large language model AI systems: asking them questions about themselves.

[... 1,950 words]

2022

Let websites framebust out of native apps (via) Adrian Holovaty makes a compelling case that it is Not OK that we allow native mobile apps to embed our websites in their own browsers, including the ability for them to modify and intercept those pages (it turned out today that Instagram injects extra JavaScript into pages loaded within the Instagram in-app browser). He compares this to frame-busting on the regular web, and proposes that the X-Frame-Options: DENY header which browsers support to prevent a page from being framed should be upgraded to apply to native embedded browsers as well.

I’m not convinced that reusing X-Frame-Options: DENY would be the best approach—I think it would break too many existing legitimate uses—but a similar option (or a similar header) specifically for native apps which causes pages to load in the native OS browser instead sounds like a fantastic idea to me.
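For reference, this is roughly what sending the existing header looks like today. It is a minimal sketch as WSGI middleware, and `deny_framing` is a name I made up for the example. It only affects framing in browsers that honor `X-Frame-Options`; nothing here changes how native in-app browsers behave, which is the gap the proposal is about.

```python
def deny_framing(app):
    """Wrap a WSGI app so every response carries X-Frame-Options: DENY."""

    def wrapped(environ, start_response):
        def start_with_header(status, headers, exc_info=None):
            # Drop any existing value so the header is not duplicated.
            headers = [(k, v) for k, v in headers if k.lower() != "x-frame-options"]
            headers.append(("X-Frame-Options", "DENY"))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped
```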

2021

Technology does not need vast troves of personal data stitched together across dozens of websites and apps in order to succeed. Advertising existed and thrived for decades without it, and we're here today because the path of least resistance is rarely the path of wisdom.

— Tim Cook

2020

At GitHub, we want to protect developer privacy, and we find cookie banners quite irritating, so we decided to look for a solution. After a brief search, we found one: just don’t use any non-essential cookies. Pretty simple, really. 🤔

So, we have removed all non-essential cookies from GitHub, and visiting our website does not send any information to third-party analytics services.

2018

Using achievement stats to estimate sales on steam (via) Really interesting data leak exploit here: Valve’s Steam API was showing the percentage of users that gained a specific achievement up to 16 decimal places—which inadvertently leaked their exact usage statistics, since if 0.012782207690179348 percent of players get an achievement the only possible input is 8 players out of 62,587.
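Here is a small sketch of why that much precision is a leak. It finds the simplest fraction that matches a high-precision percentage, using `Fraction.limit_denominator` from the Python standard library. The `recover_counts` function and the `max_players` ceiling are my own assumptions. The example builds its percentage from the counts quoted above rather than pasting in the published decimal.

```python
from fractions import Fraction


def recover_counts(percent: float, max_players: int = 1_000_000) -> tuple:
    """Return (achievers, players) in lowest terms for a precise percentage.

    max_players is an assumed upper bound on the player count.
    """
    ratio = Fraction(percent) / 100  # exact value of the float, as a ratio
    best = ratio.limit_denominator(max_players)  # closest simple fraction
    return best.numerator, best.denominator


# A percentage computed the way an API might report it.
leaked = 100 * 8 / 62587
print(recover_counts(leaked))
```

The result is always in lowest terms, so the true counts could be a common multiple of the recovered pair. Rounding the published figure to a couple of decimal places would make many different fractions fit and close the leak.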

Cookies-over-HTTP Bad (via) Mike West from the Chrome security team proposes a way for browsers to start discouraging the use of tracking cookies sent over HTTP—which represent a significant threat to user privacy from network attackers. It’s a clever piece of thinking: browsers would slowly ramp up the forced expiry deadline for non-HTTPS cookies, further encouraging sites to switch to HTTPS cookies while giving them ample time to adapt.
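A rough sketch of how that kind of ramp could work inside a browser. The dates and limits in `RAMP` are invented purely for illustration and do not come from the proposal, and `effective_lifetime` is a hypothetical name.

```python
from datetime import date, timedelta

# Hypothetical schedule: (date the cap starts, max lifetime for non-HTTPS cookies).
RAMP = [
    (date(2018, 7, 1), timedelta(days=365)),
    (date(2019, 1, 1), timedelta(days=90)),
    (date(2019, 7, 1), timedelta(days=7)),
]


def effective_lifetime(requested: timedelta, secure_origin: bool, today: date) -> timedelta:
    """Clamp the lifetime of cookies set over plain HTTP; leave HTTPS cookies alone."""
    if secure_origin:
        return requested
    cap = None
    for starts, limit in RAMP:
        if today >= starts:
            cap = limit  # later entries override earlier, looser caps
    return requested if cap is None else min(requested, cap)
```

Each step shortens how long an insecure tracking cookie can survive, while sites that move to HTTPS keep the lifetime they asked for.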

Protecting Against HSTS Abuse (via) Any web feature that can be used to persist information will eventually be used to build super-cookies. In this case it’s HSTS—a web feature that allows sites to tell browsers “in the future always load this domain over HTTPS even if the request specified HTTP”. The WebKit team caught this being exploited in the wild, by encoding a user identifier in binary across 32 separate sub domains. They have a couple of mitigations in place now—I expect other browser vendors will follow suit.
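The bit-encoding trick is easier to see in code. This is a pure simulation with no network access, and the `tracker.example` domain, the `b0` to `b31` subdomain naming and both function names are assumptions of mine, not details from the WebKit post.

```python
BITS = 32  # one subdomain per bit of the identifier


def hosts_to_pin(user_id: int, base: str = "tracker.example") -> list:
    """Subdomains that would be given an HSTS entry: one per set bit."""
    return [f"b{i}.{base}" for i in range(BITS) if (user_id >> i) & 1]


def read_back(upgraded_hosts: set, base: str = "tracker.example") -> int:
    """Rebuild the identifier from the subdomains the browser upgraded to HTTPS."""
    return sum(1 << i for i in range(BITS) if f"b{i}.{base}" in upgraded_hosts)


user_id = 0xDEADBEEF
pinned = hosts_to_pin(user_id)
print(read_back(set(pinned)) == user_id)
```

The browser's memory of which hosts must use HTTPS is the storage, which is why clearing regular cookies does nothing to remove the identifier.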

What we need to do is come up with a way to help people understand that there are ways to never be lost again, and to listen to any music you want, and to video chat with someone on the other side of the world, without them having to feel disquieted about it. That it's not OK that you're made to feel weirded out. That it's possible for there to be alternatives. That having to feel someone rooting around in your life is not a price you should have to pay.

2010

Facebook’s Instant Personalization: An Analysis of Fundamental Privacy Flaws (via) Oh FFS. “Instant Personalization” means you visit one of Facebook’s “partner websites” and Facebook instantly tells them your full identity and gives them access to full Facebook connect functionality—without you performing any action other than visiting the site. This will not end well.

The Evolution of Privacy on Facebook. Brilliant infographic showing exactly how the visibility of different aspects of your Facebook profile has changed in increments since 2005. Also a nice example of Processing.js in action.

The new Facebook API exposes the events you attend to anyone on the Internet. I’m generally impressed by the new set of Facebook APIs (they’re a whole lot easier to work with than the older stuff), but they’re also clearly a bit half-baked and the privacy model needs some urgent work. The Graph API allows you to see all “open” events that any user has attended or is attending, which can expose things like their friends’ home addresses. Yes, this means you can stalk Mark Zuckerberg.

A new Buzz start-up experience based on your feedback. Buzz is switching to the more obvious model: use existing Gmail behaviour to suggest a list of people to follow, rather than auto-following them. It feels pretty clear to me that this is how following recommendations should work.

WARNING: Google Buzz Has A Huge Privacy Flaw. Interesting one this: by default, Buzz creates a public profile for you that lists the people you follow—but your default set of followers is derived from the people you contact most frequently using Gmail. This means users of Buzz may inadvertently reveal their most frequent contacts, which is an issue for people like journalists with anonymous sources, unhappy employees seeking new work or even people having e-mail based affairs.

2009

Google Dashboard. New Google product which shows exactly how much information Google have stored against your account, all on one page. This is a really useful tool, and hopefully will help set a powerful precedent for other sites to follow.

You Deleted Your Cookies? Think Again (via) Flash cookies last longer than browser cookies and are harder to delete. Some services are sneakily “respawning” their cookies—if you clear the regular tracking cookie it will be reinstated from the Flash data next time you visit a page.

TOSBack | The Terms-Of-Service Tracker. Fantastic idea (and implementation) from the EFF—a site that currently tracks 44 website policy documents and highlights changes to them using a diff engine (from Drupal). A global RSS feed is available—it would be useful if individual feeds for different sites and organisations were also provided.
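The core idea is simple enough to sketch. TOSBack uses a diff engine from Drupal; the example below swaps in Python's standard `difflib` module instead, and `policy_diff` is a hypothetical helper name.

```python
import difflib


def policy_diff(old: str, new: str, name: str = "policy") -> str:
    """Return a unified diff between two versions of a policy document."""
    lines = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile=f"{name} (previous)",
        tofile=f"{name} (current)",
        lineterm="",
    )
    return "\n".join(lines)


before = "We keep logs for 30 days.\nWe never sell data."
after = "We keep logs for 90 days.\nWe never sell data."
print(policy_diff(before, after))
```

Run on a schedule against saved snapshots, something like this would flag a change even when a site does not announce it.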

On the Anonymity of Home/Work Location Pairs. Most people can be uniquely identified by the rough location of their home combined with the rough location of their work. US Census data shows that 5% of people can be uniquely identified by this combination even at just census tract level (1,500 people).
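Measuring this kind of uniqueness takes very little code. The sketch below counts how many people are the only ones with their particular home/work pair. The tract labels are made-up toy data and `fraction_unique` is a hypothetical name; it is not the paper's method or dataset.

```python
from collections import Counter


def fraction_unique(pairs: list) -> float:
    """Share of people whose (home, work) pair is held by nobody else."""
    if not pairs:
        return 0.0
    counts = Counter(pairs)
    unique = sum(1 for pair in pairs if counts[pair] == 1)
    return unique / len(pairs)


# Toy data: one (home_tract, work_tract) tuple per person.
people = [
    ("tract-A", "tract-X"),
    ("tract-A", "tract-X"),
    ("tract-A", "tract-Y"),
    ("tract-B", "tract-X"),
]
print(fraction_unique(people))
```

Coarser location buckets put more people in each pair and lower the fraction, while finer ones raise it.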

For the record, I'm a noted privacy freak and I don't pretend to speak for anyone else on this topic. I know that resistance is futile. I continue to believe that there is a great divide on sensitivity about privacy - you've either had your identity stolen or been stalked or had some great intrusion you couldn't fend off, or you haven't. I'm in the former camp and it colors the way I view and think about privacy online. It makes me indescribably sad to see how clearly I and others in my camp are losing this battle.

2008

eval() Kerfuffle. The ability to read supposedly private variables in Firefox using a second argument to eval() will be removed in Firefox 3.1.

Since 9/11, approximately three things have potentially improved airline security: reinforcing the cockpit doors, passengers realizing they have to fight back and - possibly - sky marshals. Everything else - all the security measures that affect privacy - is just security theater and a waste of effort.