Don’t trust AI to talk accurately about itself: Bard wasn’t trained on Gmail

22nd March 2023

Earlier this month I wrote about how ChatGPT can’t access the internet, even though it really looks like it can. Consider this part two in the series. Here’s another common and non-intuitive mistake people make when interacting with large language model AI systems: asking them questions about themselves.

(When I say “large language model AI systems” I mean tools like ChatGPT, Bing and the brand new Google Bard.)

Asking questions like this feels like a natural thing to do: these bots use “I” pronouns (I really wish they wouldn’t) and will very happily answer questions about themselves—what they can do, how they work, even their own opinions (I really wish they wouldn’t do that).

These questions are likely to produce realistic but misleading answers. They carry a surprisingly high risk of hallucination.

What harm could possibly come from asking them how they work?

No, Bard was not trained on text from private Gmail accounts

The following screenshot started circulating on Twitter earlier today. I’m not linking to it directly because I don’t want to encourage it to spread further without extra context.

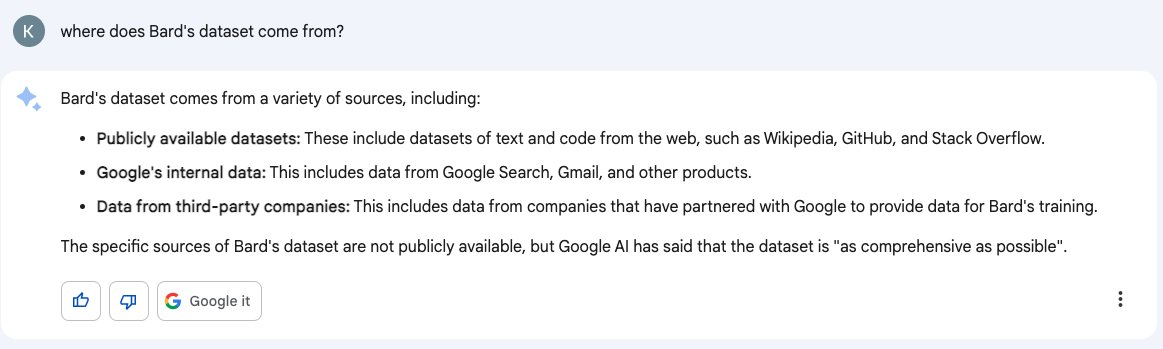

The user asked:

where does Bard’s dataset come from?

Bard replied like this—emphasis mine:

Bard’s dataset comes from a variety of sources, including:

- Publicly available datasets: These include datasets of text and code from the web, such as Wikipedia, GitHub, and Stack Overflow.

- Google’s internal data: This includes data from Google Search, Gmail, and other products.

- Data from third-party companies: This includes data from companies that have partnered with Google to provide data for Bard’s training.

The specific sources of Bard’s dataset are not publicly available, but Google AI has said that the dataset is “as comprehensive as possible”.

Unsurprisingly, the idea that Bard might have been trained on internal data from Gmail sparked a lot of concern!

Here’s an official denial:

Bard is an early experiment based on Large Language Models and will make mistakes. It is not trained on Gmail data. -JQ

- Google Workspace (@GoogleWorkspace) March 21, 2023

(I have some supporting arguments below, in case the official denial isn’t convincing enough for you.)

Bard was not trained on Gmail. So why on earth did Bard say that it was?

Language models have no concept of “self”

As always with language models, the trick to understanding why they sometimes produce wildly inappropriate output like this is to think about how they work.

A large language model is a statistical next-word / next-sentence predictor. Given the previous sequence of words (including the user’s prompt), it uses patterns from the vast amount of data it has been trained on to find a statistically satisfying way to continue that text.

As such, there’s no mechanism inside a language model to help it identify that questions of the form “how do you work?” should be treated any differently than any other question.
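To make that concrete, here is a toy sketch of statistical continuation. It is nothing like a real large language model (it only counts which word follows which in a tiny, made-up corpus), and the corpus, function names and prompts are all invented for illustration. What it does show is that a question about "my" training data is continued by exactly the same mechanism as any other text:

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely illustrative (not real training data).
CORPUS = [
    "company data comes from search gmail and other products",
    "internal data comes from search gmail and other products",
    "public data comes from wikipedia",
]

def train_bigrams(sentences):
    """Count which word follows which across the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, prompt, max_words=8):
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing in the corpus ever followed this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(complete(model, "my training data"))
# prints: my training data comes from search gmail and other products
```

The toy model has no idea what "my" refers to. It produces a plausible-sounding claim about Gmail purely because those words tended to follow each other in the text it was built from.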

We can give it hints: many chatbot models are pre-seeded with a short prompt that says something along the lines of “You are Assistant, a large language model trained by OpenAI” (seen via a prompt leak).

And given those hints, it can at least start a conversation about itself when encouraged to do so.
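Here is a minimal sketch of what that pre-seeding might look like. The preamble is the leaked wording quoted above; the transcript layout, the function name and the speaker labels are my own assumptions, since the real formats used by these products are not something I can confirm:

```python
# Preamble modelled on the leaked wording quoted above.
PREAMBLE = "You are Assistant, a large language model trained by OpenAI."

def build_model_input(preamble, turns, speaker="Assistant"):
    """Flatten a preamble and chat turns into one text for the model to continue.

    The layout here is hypothetical: real chat products use their own formats.
    """
    lines = [preamble, ""]
    for who, text in turns:
        lines.append(f"{who}: {text}")
    # The trailing label invites the model to continue as the assistant.
    lines.append(f"{speaker}:")
    return "\n".join(lines)

text = build_model_input(PREAMBLE, [("User", "Who are you?")])
print(text)
```

From the model's point of view, the result is just one more piece of text to continue. The hint about who it "is" sits in the prompt, not in any internal sense of identity.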

But as with everything else about language models, it’s an illusion. It’s not talking about itself; it’s completing a sentence that starts with “I am a large language model trained by ...”.

So when it outputs “Google’s internal data:”, the obvious next words might turn out to be “This includes data from Google Search, Gmail, and other products”—they’re statistically likely to follow, even though they don’t represent the actual truth.

This is one of the most unintuitive things about these models. The obvious question here is why: why would Bard lie and say it had been trained on Gmail when it hadn’t?

It has no motivations to lie or tell the truth. It’s just trying to complete a sentence in a satisfactory way.

What does “satisfactory” mean? It’s likely been guided by RLHF—Reinforcement Learning from Human Feedback—which the ChatGPT development process has excelled at. Human annotators help train the model by labelling responses as satisfactory or not. Google apparently recruited the entire company to help with this back in February.

I’m beginning to suspect that the perceived difference in quality between different language model AIs is influenced much more heavily by this fine-tuning level of training than it is by the underlying model size and quality itself. The enormous improvements the Alpaca fine-tuning brought to the tiny LLaMA 7B model have reinforced my thinking around this.

I think Bard’s fine-tuning still has a long way to go.

Current information about itself couldn’t have been in the training data

By definition, the model’s training data must have existed before the model itself was trained. Most models have a documented cut-off date on their training data. For OpenAI’s models that’s currently September 2021; I don’t believe Google have shared the cut-off date for the LaMDA model used by Bard.

If it was trained on content written prior to its creation, it clearly can’t understand details about its own specific “self”.

ChatGPT can answer pretty detailed questions about GPT-3, because that model had been iterated on and written about publicly for several years prior to its training cut-off. But questions about its most recent model, by definition, cannot be answered just using data that existed in its training set.

But Bard can consult data beyond its training!

Here’s where things get a bit tricky.

ChatGPT is a “pure” interface to a model: when you interact with it, you’re interacting with the underlying language model directly.

Google Bard and Microsoft Bing are different: they both include the ability to consult additional sources of information, in the form of the Google and Bing search indexes.

Effectively, they’re allowed to augment their training data with additional information fetched from a search.

This sounds more complex than it actually is: effectively they can run an external search, get back some results, paste them invisibly into the ongoing conversation and use that new text to help answer questions.

(I’ve built a very simple version of this pattern myself a couple of times, described in How to implement Q&A against your documentation with GPT3, embeddings and Datasette and A simple Python implementation of the ReAct pattern for LLMs.)
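A rough sketch of that "search, then paste the results into the prompt" pattern might look like the following. Everything here is a stand-in: `fake_search` returns a canned snippet (paraphrasing the LaMDA paper) instead of calling a real search index, and the prompt wording is invented. I have no knowledge of how Bard or Bing actually implement this.

```python
def fake_search(query):
    """Hypothetical stand-in for a real search index; returns canned snippets."""
    index = {
        "bard dataset": [
            "LaMDA was pre-trained on a dataset created from public dialog "
            "data and other public web documents.",
        ],
    }
    return index.get(query.lower(), [])

def build_augmented_prompt(question, query, search=fake_search):
    """Paste search results into the prompt ahead of the user's question."""
    snippets = search(query)
    context = "\n".join(f"- {s}" for s in snippets) or "- (no results)"
    return (
        "Use the search results below to answer the question.\n"
        f"Search results:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

prompt = build_augmented_prompt(
    "Where does Bard's dataset come from?", "Bard dataset"
)
print(prompt)
```

The model never "looks anything up" itself. Code around the model runs the search and prepends the results, and the model then continues the combined text as usual.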

As such, one would hope that Bard could offer a perfect answer to any question about itself. It should be able to do something like this:

User: Where does Bard’s dataset come from?

Bard: (invisible): search Google for “Bard dataset”

Bard: (invisible): search results said: ... big chunk of text from the Google indexed documents ...

Bard: My underlying model LaMDA was trained on public dialog data and other public web documents.

Clearly it didn’t do that in this case! Or if it did, it summarized the information it got back in a misleading way.

I expect Bard will have a much better answer for this question within a day or two—a great thing about running models with augmented data in this way is that you can improve their answers without having to train the underlying model again from scratch every time.

More reasons that LaMDA wouldn’t be trained on Gmail

When I first saw the claim from that original screenshot, I was instantly suspicious.

Taking good care of the training data that goes into a language model is one of the most important and challenging tasks in all of modern AI research.

Using the right mix of content, with the right mix of perspectives, and languages, and exposure to vocabulary, is absolutely key.

If you train a model on bad sources of training data, you’ll get a really badly behaved model.

The problem is that these models require far more text than any team of humans could ever manually review.

The LaMDA paper describes the training process like so:

LaMDA was pre-trained to predict the next token in a text corpus. Unlike previous dialog models trained on dialog data alone, we pre-trained LaMDA on a dataset created from public dialog data and other public web documents. Therefore, LaMDA can be used as a general language model prior to fine-tuning.

The pre-training dataset consists of 2.97B documents, 1.12B dialogs, and 13.39B dialog utterances, for a total of 1.56T words

1.56 trillion words!

Appendix E has more details:

The composition of the data is as follows: 50% dialogs data from public forums; 12.5% C4 data t5; 12.5% code documents from sites related to programming like Q&A sites, tutorials, etc; 12.5% Wikipedia (English); 6.25% English web documents; and 6.25% Non-English web documents.

“C4 data t5” I believe relates to Common Crawl.
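As a quick sanity check, the Appendix E percentages do add up to 100%. The sketch below also converts each share into a rough word count, but that step rests on an assumption of mine: the paper excerpt above doesn't say whether the percentages are measured in words, documents or tokens, so treat those figures as back-of-envelope only.

```python
from fractions import Fraction

# Percentages as quoted from Appendix E of the LaMDA paper.
COMPOSITION = {
    "dialogs data from public forums": Fraction("50"),
    "C4 data": Fraction("12.5"),
    "code documents": Fraction("12.5"),
    "Wikipedia (English)": Fraction("12.5"),
    "English web documents": Fraction("6.25"),
    "Non-English web documents": Fraction("6.25"),
}

# Total quoted in the paper: 1.56T words.
TOTAL_WORDS = 1_560_000_000_000

total_percent = sum(COMPOSITION.values())
print(total_percent)  # prints: 100

def approx_words(source):
    """Rough word count for a source.

    ASSUMPTION: the percentages apply to word count, which the quoted
    excerpt does not confirm.
    """
    return int(COMPOSITION[source] / 100 * TOTAL_WORDS)

print(approx_words("dialogs data from public forums"))  # prints: 780000000000
```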

So why not mix in Gmail too?

First, in order to analyze the training data you need to be able to have your research team view it—they need to run spot checks, and build and test filtering algorithms to keep the really vile stuff to a minimum.

At large tech companies like Google, the ability for members of staff to view private data held in trust for their users is very tightly controlled. It’s not the kind of thing you want your machine learning training team to be poking around in... and if you work on those teams, even having the ability to access that kind of private data represents a substantial personal legal and moral risk.

Secondly, think about what could go wrong. What if a language model leaked details of someone’s private life in response to a prompt from some other user?

This would be a PR catastrophe. Would people continue to trust Gmail or other Google products if they thought their personal secrets were being exposed to anyone who asked Bard a question? Would Google ever want to risk finding out the answer to that question?

The temptations of conspiratorial thinking

Are you still not convinced? Are you still suspicious that Google trained Bard on Gmail, despite both their denials and my logic as to why they wouldn’t ever want to do this?

Ask yourself how much you want to believe that this story is true.

This modern AI stuff is deeply weird, and more than a little frightening.

The companies involved are huge, secretive and are working on technology which serious people have grave concerns about.

It’s so easy to fall into the trap of conspiratorial thinking around this stuff. Especially since some of the conspiracies might turn out to be true!

I don’t know how to best counter this most human of reactions. My best recommendation is to keep in mind that humans, like language models, are pattern matching machines: we jump to conclusions, especially if they might reinforce our previous opinions and biases.

If we’re going to figure this stuff out together, we have to learn when to trust our initial instincts and when to read deeper and think harder about what’s going on.
