310 posts tagged “sqlite”

SQLite is the world's most widely deployed database engine.

2024

Stealing everything you’ve ever typed or viewed on your own Windows PC is now possible with two lines of code — inside the Copilot+ Recall disaster (via) Recall is a new feature in Windows 11 which takes a screenshot every few seconds, runs local device OCR on it and stores the resulting text in a SQLite database. This means you can search back through your previous activity, against local data that has remained on your device.

The security and privacy implications here are still enormous because malware can now target a single file with huge amounts of valuable information:

During testing this with an off the shelf infostealer, I used Microsoft Defender for Endpoint — which detected the off the shelve infostealer — but by the time the automated remediation kicked in (which took over ten minutes) my Recall data was already long gone.

I like Kevin Beaumont's argument here about the subset of users this feature is appropriate for:

At a surface level, it is great if you are a manager at a company with too much to do and too little time as you can instantly search what you were doing about a subject a month ago.

In practice, that audience’s needs are a very small (tiny, in fact) portion of Windows userbase — and frankly talking about screenshotting the things people in the real world, not executive world, is basically like punching customers in the face.

fastlite (via) New Python library from Jeremy Howard that adds some neat utility functions and syntactic sugar to my sqlite-utils Python library, specifically for interactive use in Jupyter notebooks.

The autocomplete support through newly exposed dynamic properties is particularly neat, as is the diagram(db.tables) utility for rendering a graphviz diagram showing foreign key relationships between all of the tables.

The default prefix used to be "sqlite_". But then Mcafee started using SQLite in their anti-virus product and it started putting files with the "sqlite" name in the c:/temp folder. This annoyed many windows users. Those users would then do a Google search for "sqlite", find the telephone numbers of the developers and call to wake them up at night and complain. For this reason, the default name prefix is changed to be "sqlite" spelled backwards.

— D. Richard Hipp, 18 years ago

Modern SQLite: Generated columns (via) The second in Anton Zhiyanov's series on SQLite features you might have missed.

It turns out I had an incorrect mental model of generated columns. In SQLite these can be "virtual" or "stored" (written to disk along with the rest of the table, a bit like a materialized view). Anton noted that "stored are rarely used in practice", which surprised me because I thought that storing them was necessary for them to participate in indexes.

It turns out that's not the case. Anton's example here shows a generated column providing indexed access to a value stored inside a JSON key:

create table events (
  id integer primary key,
  event blob,
  etime text as (event ->> 'time'),
  etype text as (event ->> 'type')
);

create index events_time on events(etime);

insert into events(event) values (
  '{"time": "2024-05-01", "type": "credit"}'
);

Update: snej reminded me that this isn't a new capability either: SQLite has been able to create indexes on expressions for years.
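Here is a minimal sketch of the same idea using Python's built-in sqlite3 module. It assumes the bundled SQLite is 3.31 or newer (needed for generated columns) and swaps the ->> operator for json_extract() so it also runs on versions older than 3.38. The lookup query and the use of explain query plan are my own illustration, not part of Anton's article.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table events (
      id integer primary key,
      event blob,
      -- virtual generated columns: computed on read, not stored in the table
      etime text as (json_extract(event, '$.time')),
      etype text as (json_extract(event, '$.type'))
    );
    create index events_time on events(etime);
    """
)
conn.execute(
    "insert into events(event) values (?)",
    ('{"time": "2024-05-01", "type": "credit"}',),
)

# Ask the planner how it would run a lookup on the generated column.
plan = conn.execute(
    "explain query plan select id, etype from events where etime = ?",
    ("2024-05-01",),
).fetchall()
plan_text = " ".join(row[-1] for row in plan)  # last column is the description
print(plan_text)

rows = conn.execute(
    "select id, etime, etype from events where etime = ?", ("2024-05-01",)
).fetchall()
print(rows)
```

The plan text should mention the events_time index, which is the point of the example: the column is virtual, yet the index on it is real.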

I’m writing a new vector search SQLite Extension.

Alex Garcia is working on sqlite-vec, a spiritual successor to his sqlite-vss project. The new SQLite C extension will have zero other dependencies (sqlite-vss used some tricky C++ libraries) and will work using virtual tables, storing chunks of vectors in shadow tables to avoid needing to load everything into memory at once.

Performance analysis indicates that SQLite spends very little time doing bytecode decoding and dispatch. Most CPU cycles are consumed in walking B-Trees, doing value comparisons, and decoding records - all of which happens in compiled C code. Bytecode dispatch is using less than 3% of the total CPU time, according to my measurements.

So at least in the case of SQLite, compiling all the way down to machine code might provide a performance boost 3% or less. That's not very much, considering the size, complexity, and portability costs involved.

Why SQLite Uses Bytecode (via) Brand new SQLite architecture documentation by D. Richard Hipp explaining the trade-offs between a bytecode based query plan and a tree of objects.

SQLite uses the bytecode approach, which provides an important characteristic: SQLite can very easily execute queries incrementally, stopping after each row, for example. This is more useful for a local library database than for a network server where the assumption is that the entire query will be executed before results are returned over the wire.
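Both points can be poked at from Python's sqlite3 module. This is a rough sketch, not taken from the documentation: explain lists the bytecode program for a statement, and a cursor lets you pull rows one at a time from a query that could produce many more. The counter query is an invented example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# EXPLAIN returns one row per bytecode instruction:
# (addr, opcode, p1, p2, p3, p4, p5, comment)
program = conn.execute("explain select 1 + 1").fetchall()
opcodes = [row[1] for row in program]
print(opcodes)

# A query that could yield up to a million rows, consumed one row at a time.
cur = conn.execute(
    """
    with recursive counter(x) as (
      select 1
      union all
      select x + 1 from counter where x < 1000000
    )
    select x from counter
    """
)
first_three = [cur.fetchone()[0] for _ in range(3)]
print(first_three)
cur.close()  # abandon the rest of the result set
```

The loop only asks for three rows and then closes the cursor, so SQLite is never asked to step the program any further.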

We [Bluesky] took a somewhat novel approach of giving every user their own SQLite database. By removing the Postgres dependency, we made it possible to run a ‘PDS in a box’ [Personal Data Server] without having to worry about managing a database. We didn’t have to worry about things like replicas or failover. For those thinking this is irresponsible: don’t worry, we are backing up all the data on our PDSs!

SQLite worked really well because the PDS – in its ideal form – is a single-tenant system. We owned up to that by having these single tenant SQLite databases.

qrank (via) Interesting and very niche project by Colin Dellow.

Wikidata has pages for huge numbers of concepts, people, places and things.

One of the many pieces of data they publish is QRank—“ranking Wikidata entities by aggregating page views on Wikipedia, Wikispecies, Wikibooks, Wikiquote, and other Wikimedia projects”. Every item gets a score and these scores can be used to answer questions like “which island nations get the most interest across Wikipedia”—potentially useful for things like deciding which labels to display on a highly compressed map of the world.

QRank is published as a gzipped CSV file.

Colin’s hikeratlas/qrank GitHub repository runs weekly, fetches the latest qrank.csv.gz file and loads it into a SQLite database using SQLite’s “.import” mechanism. Then it publishes the resulting SQLite database as an asset attached to the “latest” GitHub release on that repo—currently a 307MB file.

The database itself has just a single table mapping the Wikidata ID (a primary key integer) to the latest QRank—another integer. You’d need your own set of data with Wikidata IDs to join against this to do anything useful.
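To make that concrete, here is a hedged sketch in Python of loading a gzipped CSV into a single-table SQLite database and joining it against your own data. Colin's repository uses the sqlite3 CLI's ".import" rather than Python. The CSV header, the table and column names, the QRank values and the my_items table are all assumptions made up for this example.

```python
import csv
import gzip
import io
import sqlite3

# Stand-in for qrank.csv.gz: the header and values are invented for the example.
sample_csv = "Entity,QRank\nQ42,1500\nQ64,900\n"
gz_bytes = gzip.compress(sample_csv.encode("utf-8"))

conn = sqlite3.connect(":memory:")
conn.execute("create table qrank (id integer primary key, score integer)")

with gzip.open(io.BytesIO(gz_bytes), mode="rt", encoding="utf-8", newline="") as f:
    reader = csv.DictReader(f)
    # "Q42" -> 42, so the Wikidata ID can be an integer primary key
    parsed = ((int(r["Entity"].lstrip("Q")), int(r["QRank"])) for r in reader)
    with conn:  # commit once at the end
        conn.executemany("insert into qrank (id, score) values (?, ?)", parsed)

# Hypothetical table of your own, keyed by the same Wikidata IDs.
conn.execute("create table my_items (wikidata_id integer primary key, name text)")
conn.executemany(
    "insert into my_items values (?, ?)", [(64, "Berlin"), (42, "Douglas Adams")]
)

ranked = conn.execute(
    """
    select my_items.name, qrank.score
    from my_items join qrank on qrank.id = my_items.wikidata_id
    order by qrank.score desc
    """
).fetchall()
print(ranked)
```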

I’d never thought of using GitHub Releases for this kind of thing. I think it’s a really interesting pattern.

redka (via) Anton Zhiyanov’s new project to build a subset of Redis (including protocol support) using Go and SQLite. Also works as a Go library.

The guts of the SQL implementation are in the internal/sqlx folder.

Optimizing SQLite for servers (via) Sylvain Kerkour's comprehensive set of lessons learned running SQLite for server-based applications.

There's a lot of useful stuff in here, including detailed coverage of the different recommended PRAGMA settings.

There was also a tip I haven't seen before about BEGIN IMMEDIATE transactions:

By default, SQLite starts transactions in DEFERRED mode: they are considered read only. They are upgraded to a write transaction that requires a database lock in-flight, when query containing a write/update/delete statement is issued.

The problem is that by upgrading a transaction after it has started, SQLite will immediately return a SQLITE_BUSY error without respecting the busy_timeout previously mentioned, if the database is already locked by another connection.

This is why you should start your transactions with BEGIN IMMEDIATE instead of only BEGIN. If the database is locked when the transaction starts, SQLite will respect busy_timeout.
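A small sketch of what BEGIN IMMEDIATE looks like from Python's sqlite3 module, not taken from Sylvain's article. It uses two connections to a temporary database file and sets timeout=0 (no busy timeout) purely so the lock conflict shows up instantly. With a non-zero timeout the second BEGIN IMMEDIATE would wait for the lock instead of failing straight away.

```python
import os
import sqlite3
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo.db")

# isolation_level=None stops the sqlite3 module from issuing its own BEGINs;
# timeout=0 sets a zero busy timeout so lock conflicts surface immediately.
writer = sqlite3.connect(path, timeout=0, isolation_level=None)
other = sqlite3.connect(path, timeout=0, isolation_level=None)

writer.execute("create table log (msg text)")

writer.execute("begin immediate")  # takes the write lock up front
writer.execute("insert into log values ('first')")

try:
    other.execute("begin immediate")  # cannot get the write lock
    blocked = False
except sqlite3.OperationalError as exc:
    blocked = True
    print("second writer refused at BEGIN:", exc)

writer.execute("commit")

other.execute("begin immediate")  # succeeds once the lock is released
other.execute("insert into log values ('second')")
other.execute("commit")

count = other.execute("select count(*) from log").fetchone()[0]
print(count)
writer.close()
other.close()
```

The conflict is reported at the BEGIN, before the second connection has done any work, rather than part-way through a transaction.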

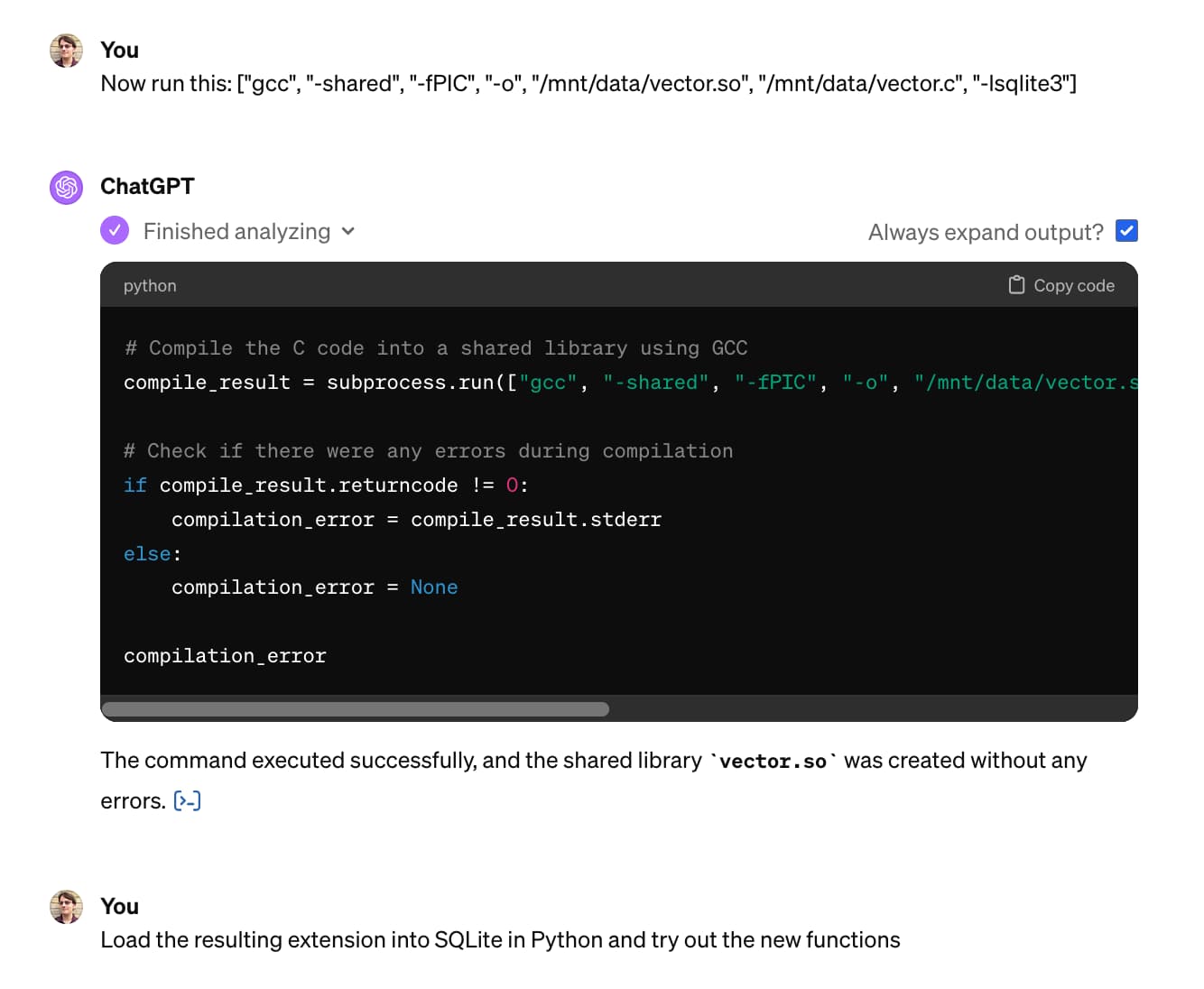

Building and testing C extensions for SQLite with ChatGPT Code Interpreter

I wrote yesterday about how I used Claude and ChatGPT Code Interpreter for simple ad-hoc side quests—in that case, for converting a shapefile to GeoJSON and merging it into a single polygon.

[... 4,612 words]

DiskCache (via) Grant Jenks built DiskCache as an alternative caching backend for Django (also usable without Django), using a SQLite database on disk. The performance numbers are impressive: it even beats memcached in microbenchmarks, due to avoiding the need to access the network.

The source code (particularly in core.py) is a great case-study in SQLite performance optimization, after five years of iteration on making it all run as fast as possible.

How does SQLite store data? Michal Pitr explores the design of the SQLite on-disk file format, as part of building an educational implementation of SQLite from scratch in Go.

Astro DB. A new scale-to-zero hosted SQLite offering, described as “A fully-managed SQL database designed exclusively for Astro”. It’s built on top of LibSQL, the SQLite fork maintained by the Turso database team.

Astro DB encourages defining your tables with TypeScript, and querying them via the Drizzle ORM.

Running Astro locally uses a local SQLite database. Deploying to Astro Cloud switches to their DB product, where the free tier currently includes 1GB of storage, one billion row reads per month and one million row writes per month.

Astro itself is a “web framework for content-driven websites”—so hosted SQLite is a bit of an unexpected product from them, though it does broadly fit the ecosystem they are building.

This approach reminds me of how Deno K/V works—another local SQLite storage solution that offers a proprietary cloud hosted option for deployment.

Datasette 1.0a10. The only changes in this alpha release concern the way Datasette handles database transactions. The database.execute_write_fn() internal method used to leave functions to implement transactions on their own—it now defaults to wrapping them in a transaction unless they opt out with the new transaction=False parameter.

In implementing this I found several places inside Datasette—in particular parts of the JSON write API—which had not been handling transactions correctly. Those are all now fixed.
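The general pattern can be sketched with plain sqlite3. This is not Datasette's implementation: run_write_fn is a hypothetical helper that mirrors the idea of wrapping a write function in a transaction by default, with an opt-out flag.

```python
import sqlite3

def run_write_fn(conn, fn, transaction=True):
    """Hypothetical helper: call fn(conn), optionally inside a transaction."""
    if not transaction:
        return fn(conn)  # fn is responsible for its own BEGIN/COMMIT
    with conn:  # commits if fn returns, rolls back if it raises
        return fn(conn)

conn = sqlite3.connect(":memory:")
conn.execute("create table docs (title text)")

def failing_write(conn):
    conn.execute("insert into docs values ('one')")
    raise ValueError("something went wrong after the first insert")

try:
    run_write_fn(conn, failing_write)
except ValueError:
    pass

# The insert was rolled back along with the failed function.
remaining = conn.execute("select count(*) from docs").fetchone()[0]
print(remaining)
```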

wddbfs – Mount a sqlite database as a filesystem. Ingenious hack from Adam Obeng. Install this Python tool and run it against a SQLite database:

wddbfs --anonymous --db-path path/to/content.db

Then in the macOS Finder choose Go -> Connect to Server, enter http://127.0.0.1:8080/ and connect as guest. This connects via WebDAV.

/Volumes/127.0.0.1/content.db will now be a folder full of CSV, TSV, JSON and JSONL files - one of each format for every table.

This means you can open data from SQLite directly in any application that supports that format, and you can even run CLI commands such as grep, ripgrep or jq directly against the data!

Adam used WebDAV because "Despite how clunky it is, this seems to be the best way to implement a filesystem given that getting FUSE support is not straightforward". What a neat trick.

Announcing DuckDB 0.10.0.

Somewhat buried in this announcement: DuckDB has Fixed-Length Arrays now, along with array_cross_product(a1, a2), array_cosine_similarity(a1, a2) and array_inner_product(a1, a2) functions.

This means you can now use DuckDB to find related content (and other tricks) using vector embeddings!
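For anyone unfamiliar with the maths, here is a plain-Python sketch of the standard definitions of inner product and cosine similarity, used to rank some made-up two-dimensional "embeddings". It illustrates the calculation only. It is not DuckDB's implementation, and the function names are my own.

```python
import math

def inner_product(a, b):
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    # inner product divided by the product of the two vector lengths
    norm_a = math.sqrt(inner_product(a, a))
    norm_b = math.sqrt(inner_product(b, b))
    return inner_product(a, b) / (norm_a * norm_b)

# Made-up embeddings; real ones have hundreds or thousands of dimensions.
documents = {
    "a": [1.0, 0.0],
    "b": [0.0, 1.0],
    "c": [1.0, 1.0],
}
query = [1.0, 0.0]

# Most similar documents first.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query, documents[name]),
    reverse=True,
)
print(ranked)
```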

Also notable:

DuckDB can now attach MySQL, Postgres, and SQLite databases in addition to databases stored in its own format. This allows data to be read into DuckDB and moved between these systems in a convenient manner, as attached databases are fully functional, appear just as regular tables, and can be updated in a safe, transactional manner.

SQL for Data Scientists in 100 Queries. New comprehensive SQLite SQL tutorial from Greg Wilson, author of Teaching Tech Together and founder of The Carpentries.

stanchion (via) Dan Gallagher’s new (under-development) SQLite extension that adds column-oriented tables to SQLite, using a virtual table implemented in Zig that stores records in row groups, where each row group has multiple segments (one for each column) and those segments are stored as SQLite BLOBs.

I’m surprised that this is possible using the virtual table mechanism. It has the potential to bring some of the analytical querying performance we’ve seen in engines like DuckDB to SQLite itself.

SQLite 3.45. Released today. The big new feature is JSONB support, a new, specific-to-SQLite binary internal representation of JSON which can provide up to a 3x performance improvement for JSON-heavy operations, plus a 5-10% saving in terms of bytes stored on disk.

2023

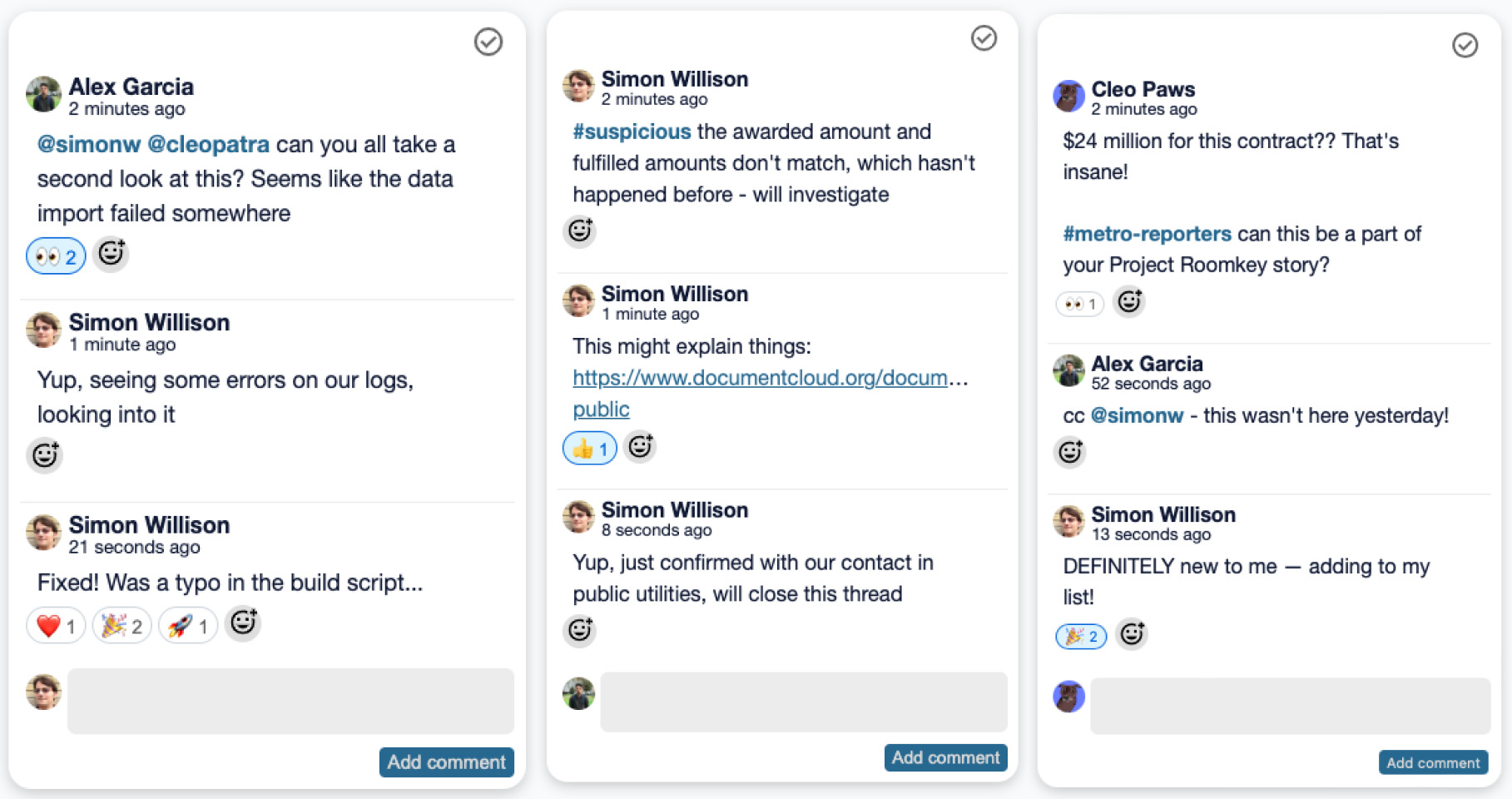

Weeknotes: datasette-enrichments, datasette-comments, sqlite-chronicle

I’ve mainly been working on Datasette Enrichments and continuing to explore the possibilities enabled by sqlite-chronicle.

[... 1,123 words]

Tracking SQLite Database Changes in Git (via) A neat trick from Garrit Franke that I hadn’t seen before: you can teach “git diff” how to display human readable versions of the differences between binary files with a specific extension using the following:

git config diff.sqlite3.binary true

git config diff.sqlite3.textconv "echo .dump | sqlite3"

That way you can store binary files in your repo but still get back SQL diffs to compare them.
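To see why a SQL dump diffs so much better than the binary file, here is a rough Python equivalent of the idea. It dumps two versions of a database to SQL text with the sqlite3 module's iterdump() and compares them with difflib. The notes table is invented for the example, and Git itself is not involved.

```python
import difflib
import sqlite3

def dump_sql(conn):
    """Return the database as a list of SQL statements, similar to .dump."""
    return list(conn.iterdump())

old = sqlite3.connect(":memory:")
old.execute("create table notes (id integer primary key, body text)")
old.execute("insert into notes values (1, 'hello')")
old.commit()

new = sqlite3.connect(":memory:")
new.execute("create table notes (id integer primary key, body text)")
new.execute("insert into notes values (1, 'hello')")
new.execute("insert into notes values (2, 'world')")
new.commit()

diff = list(
    difflib.unified_diff(
        dump_sql(old), dump_sql(new), fromfile="old.db", tofile="new.db", lineterm=""
    )
)
print("\n".join(diff))
```

The added row shows up as a single "+INSERT INTO ..." line, which is the kind of output the textconv setting gives you from "git diff".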

I still worry about the efficiency of storing binary files in Git, since I expect multiple versions of a text file to compress together better.

SQLite 3.44: Interactive release notes. Anton Zhiyanov compiled interactive release notes for the new release of SQLite, demonstrating several of the new features. I’m most excited about order by in aggregates—group_concat(name order by name desc)—which is something I’ve wanted in the past. Anton demonstrates how it works with JSON aggregate functions as well. The new date formatting options look useful as well.

New sqlite3 CLI tool in Python 3.12. The newly released Python 3.12 includes a SQLite shell, which you can open using “python -m sqlite3”—handy for when you’re using a machine that has Python installed but no sqlite3 binary.

I installed Python 3.12 for macOS using the official installer from Python.org and now “/usr/local/bin/python3 -m sqlite3” gives me a SQLite 3.41.1 shell—a pleasantly recent version from March 2023 (the latest SQLite is 3.43.1, released in September).
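If you only want to know which SQLite version a given Python build is linked against, a couple of lines will tell you without opening the shell:

```python
import sqlite3

# Version of the SQLite library this Python build is linked against.
print(sqlite3.sqlite_version)

# The same value, as reported by SQLite itself.
conn = sqlite3.connect(":memory:")
reported = conn.execute("select sqlite_version()").fetchone()[0]
print(reported)
```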

Weeknotes: the Datasette Cloud API, a podcast appearance and more

Datasette Cloud now has a documented API, plus a podcast appearance, some LLM plugins work and some geospatial excitement.

[... 1,243 words]

Batch size one billion: SQLite insert speedups, from the useful to the absurd (via) Useful, detailed review of ways to maximize the performance of inserting a billion integers into a SQLite database table.

Upsert in SQL (via) Anton Zhiyanov is currently on a one-man quest to write detailed documentation for all of the fundamental SQL operations, comparing and contrasting how they work across multiple engines, generally with interactive examples.

Useful tips in here on why “insert... on conflict” is usually a better option than “insert or replace into” because the latter can perform a delete and then an insert, firing triggers that you may not have wanted to be fired.
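A quick sketch of the difference, using Python's sqlite3 module and an invented pages table (it assumes SQLite 3.24 or newer for the upsert syntax). It does not demonstrate triggers. Instead it shows a related side effect of the delete-then-insert behaviour: columns you did not mention are lost.

```python
import sqlite3

def fresh_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table pages (slug text primary key, hits integer, note text)"
    )
    conn.execute("insert into pages values ('home', 5, 'keep me')")
    return conn

# INSERT OR REPLACE removes the conflicting row and inserts a new one,
# so columns missing from the statement fall back to their defaults (NULL here).
replaced = fresh_db()
replaced.execute("insert or replace into pages (slug, hits) values ('home', 6)")
after_replace = replaced.execute("select slug, hits, note from pages").fetchall()
print(after_replace)

# ON CONFLICT ... DO UPDATE modifies the existing row in place.
upserted = fresh_db()
upserted.execute(
    """
    insert into pages (slug, hits) values ('home', 6)
    on conflict (slug) do update set hits = excluded.hits
    """
)
after_upsert = upserted.execute("select slug, hits, note from pages").fetchall()
print(after_upsert)
```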

Geospatial SQL queries in SQLite using TG, sqlite-tg and datasette-sqlite-tg. Alex Garcia built sqlite-tg—a SQLite extension that uses the brand new TG geospatial library to provide a whole suite of custom SQL functions for working with geospatial data.

Here are my notes on trying out his initial alpha releases. The extension already provides tools for converting between GeoJSON, WKT and WKB, plus the all-important tg_intersects() function for testing whether two geometries, such as a polygon and a point, overlap.

It’s pretty useful already. Without any geospatial indexing at all I was still able to get 700ms replies to a brute-force point-in-polygon query against 150MB of GeoJSON timezone boundaries stored as JSON text in a table.
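For a sense of what a brute-force point-in-polygon query involves, here is a plain-Python sketch that uses the classic ray casting test and checks a point against every polygon in turn. This is a generic textbook technique, not how TG or sqlite-tg implement it, and the square "zones" are invented stand-ins for real timezone boundaries.

```python
def point_in_polygon(x, y, ring):
    """Ray casting: count how many polygon edges a ray going right crosses."""
    inside = False
    j = len(ring) - 1
    for i in range(len(ring)):
        xi, yi = ring[i]
        xj, yj = ring[j]
        # Does this edge straddle the horizontal line through the point?
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
        j = i
    return inside

# Made-up square zones; each ring is a list of (x, y) vertices.
zones = {
    "west": [(0, 0), (4, 0), (4, 4), (0, 4)],
    "east": [(4, 0), (8, 0), (8, 4), (4, 4)],
}

def zones_containing(x, y):
    # Brute force: test the point against every polygon, no spatial index.
    return [name for name, ring in zones.items() if point_in_polygon(x, y, ring)]

print(zones_containing(2, 2))
print(zones_containing(6, 1))
print(zones_containing(9, 9))
```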

TG: Polygon indexing (via) TG is a brand new geospatial library by Josh Baker, author of the Tile38 in-memory spatial server (kind of a geospatial Redis). TG is written in pure C and delivered as a single C file, reminiscent of the SQLite amalgamation.

TG looks really interesting. It implements almost the exact subset of geospatial functionality that I find most useful: point-in-polygon, intersect, WKT, WKB, and GeoJSON—all with no additional dependencies.

The most interesting thing about it is the way it handles indexing. In this documentation Josh describes two approaches he uses to speed up point-in-polygon and intersection operations, going beyond the usual RTree implementation.

I think this could make the basis of a really useful SQLite extension—a lighter-weight alternative to SpatiaLite.