247 posts tagged “llm”

LLM is my command-line tool for running prompts against Large Language Models.

2025

In addition to my workshop the other day I'm also participating in the poster session at PyCon US this year.

This means that tomorrow (Sunday 18th May) I'll be hanging out next to my poster from 10am to 1pm in Hall A talking to people about my various projects.

I'll confess: I didn't pay close enough attention to the poster information, so when I first put my poster up it looked a little small:

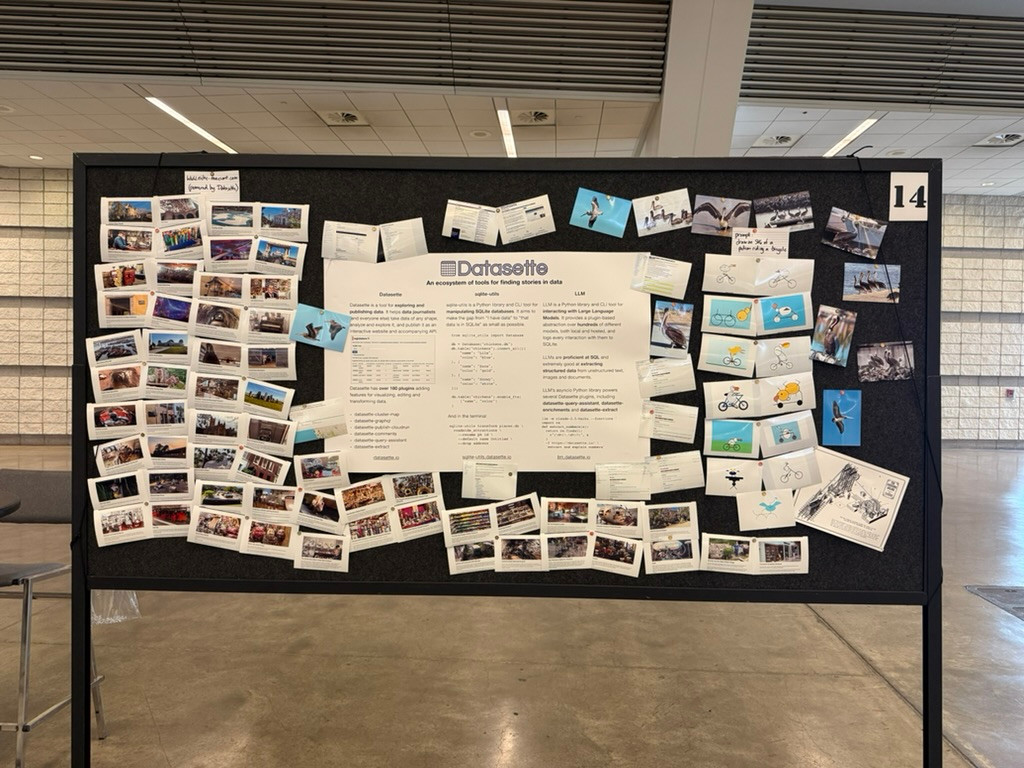

... so I headed to the nearest CVS and printed out some photos to better represent my interests and personality. I'm going for a "teenage bedroom" aesthetic here, and I'm very happy with the result:

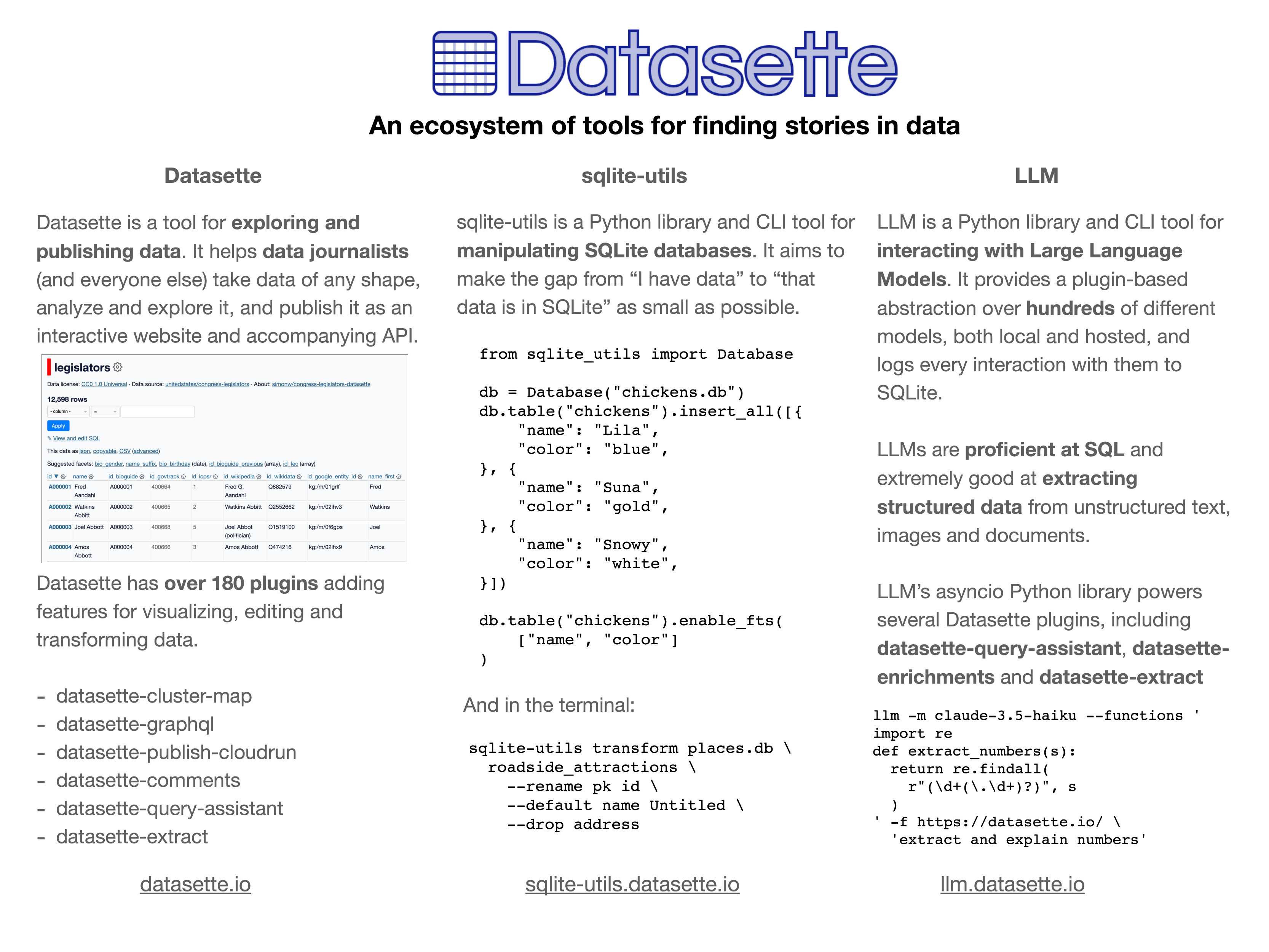

Here's the poster in the middle (also available as a PDF). It has columns for Datasette, sqlite-utils and LLM.

If you're at PyCon I'd love to talk to you about things I'm working on!

Update: Thanks to everyone who came along. Here's a 6MB photo of the poster setup. The museums were all from my www.niche-museums.com site and the pelicans riding a bicycle SVGs came from my pelican-riding-a-bicycle tag.

OpenAI Codex. Announced today, here's the documentation for OpenAI's "cloud-based software engineering agent". It's not yet available for us $20/month Plus customers ("coming soon") but if you're a $200/month Pro user you can try it out now.

At a high level, you specify a prompt, and the agent goes to work in its own environment. After about 8–10 minutes, the agent gives you back a diff.

You can execute prompts in either ask mode or code mode. When you select ask, Codex clones a read-only version of your repo, booting faster and giving you follow-up tasks. Code mode, however, creates a full-fledged environment that the agent can run and test against.

This 4 minute demo video is a useful overview. One note that caught my eye is that the setup phase for an environment can pull from the internet (to install necessary dependencies) but the agent loop itself still runs in a network disconnected sandbox.

It sounds similar to GitHub's own Copilot Workspace project, which can compose PRs against your code based on a prompt. The big difference is that Codex incorporates a full Code Interpreter style environment, allowing it to build and run the code it's creating and execute tests in a loop.

Copilot Workspace has a level of integration with Codespaces but still requires manual intervention to help exercise the code.

Also similar to Copilot Workspace is the confusing name. OpenAI now have four products called Codex:

- OpenAI Codex, announced today.

- Codex CLI, a completely different coding assistant tool they released a few weeks ago that is the same kind of shape as Claude Code. This one owns the openai/codex namespace on GitHub.

- codex-mini, a brand new model released today that is used by their Codex product. It's a fine-tuned o4-mini variant. I released llm-openai-plugin 0.4 adding support for that model.

- OpenAI Codex (2021) - Internet Archive link, OpenAI's first specialist coding model from the GPT-3 era. This was used by the original GitHub Copilot and is still the current topic of Wikipedia's OpenAI Codex page.

My favorite thing about this most recent Codex product is that OpenAI shared the full Dockerfile for the environment that the system uses to run code - in openai/codex-universal on GitHub because openai/codex was taken already.

This is extremely useful documentation for figuring out how to use this thing - I'm glad they're making this as transparent as possible.

And to be fair, if you ignore its previous history Codex is a good name for this product. I'm just glad they didn't call it Ada.

Building software on top of Large Language Models

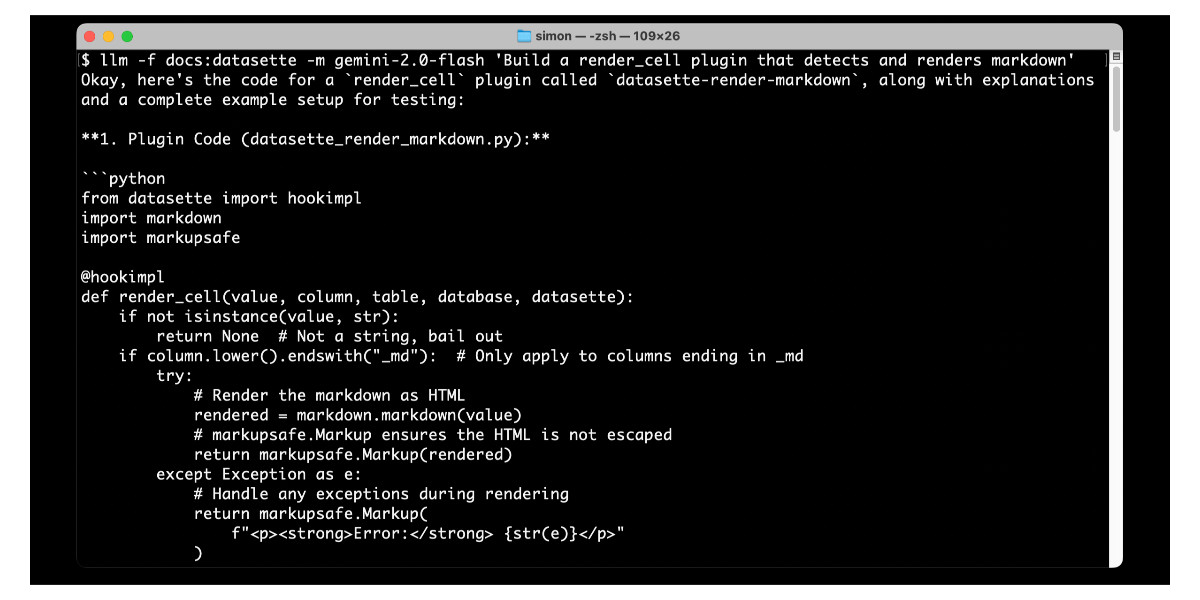

I presented a three hour workshop at PyCon US yesterday titled Building software on top of Large Language Models. The goal of the workshop was to give participants everything they needed to get started writing code that makes use of LLMs.

[... 3,726 words]

LLM 0.26a0 adds support for tools! It's only an alpha so I'm not going to promote this extensively yet, but my LLM project just grew a feature I've been working towards for nearly two years now: tool support!

I'm presenting a workshop about Building software on top of Large Language Models at PyCon US tomorrow and this was the one feature I really needed to pull everything else together.

Tools can be used from the command-line like this (inspired by sqlite-utils --functions):

llm --functions '
def multiply(x: int, y: int) -> int:
    """Multiply two numbers."""
    return x * y
' 'what is 34234 * 213345' -m o4-mini

You can add --tools-debug (shortcut: --td) to have it show exactly what tools are being executed and what came back. More documentation here.

It's also available in the Python library:

import llm

def multiply(x: int, y: int) -> int:
    """Multiply two numbers."""
    return x * y

model = llm.get_model("gpt-4.1-mini")
response = model.chain(
    "What is 34234 * 213345?",
    tools=[multiply]
)
print(response.text())
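To give a feel for why a plain function with type hints and a docstring is enough to define a tool, here's a minimal sketch of how such a function could be described to a model. This is not LLM's actual implementation: `describe_tool` and the dictionary layout are hypothetical, chosen only for illustration.

```python
import inspect
from typing import get_type_hints


def multiply(x: int, y: int) -> int:
    """Multiply two numbers."""
    return x * y


def describe_tool(fn):
    """Hypothetical helper: summarize a function as a tool description."""
    hints = get_type_hints(fn)
    # Map each parameter name to the name of its annotated type
    params = {
        name: hints.get(name, str).__name__
        for name in inspect.signature(fn).parameters
    }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
    }


description = describe_tool(multiply)
print(description)
```

The name, docstring and annotations are the only things a model needs in order to decide when to call the function and with what arguments, which is why the docstring matters here.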

There's also a new plugin hook so plugins can register tools that can then be referenced by name using llm --tool name_of_tool "prompt".

There's still a bunch I want to do before including this in a stable release, most notably adding support for Python asyncio. It's a pretty exciting start though!

llm-anthropic 0.16a0 and llm-gemini 0.20a0 add tool support for Anthropic and Gemini models, depending on the new LLM alpha.

Update: Here's the section about tools from my PyCon workshop.

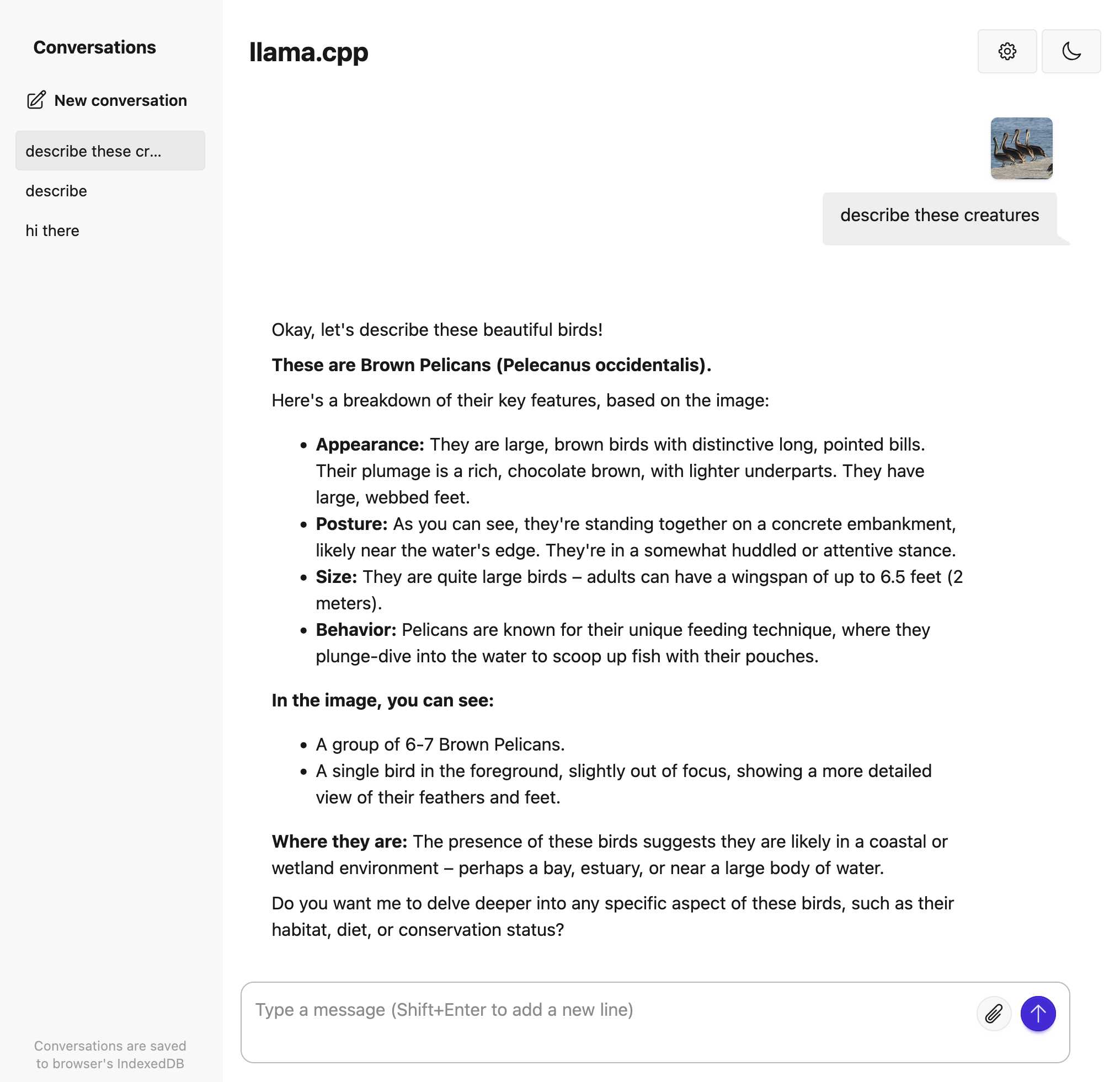

Trying out llama.cpp’s new vision support

This llama.cpp server vision support via libmtmd pull request (via Hacker News) was merged earlier today. The PR finally adds full support for vision models to the excellent llama.cpp project. It’s documented on this page, and the finer technical details are covered here. Here are my notes on getting it working on a Mac.

[... 1,693 words]

I had some notes in a GitHub issue thread in a private repository that I wanted to export as Markdown. I realized that I could get them using a combination of several recent projects.

Here's what I ran:

export GITHUB_TOKEN="$(llm keys get github)"

llm -f issue:https://github.com/simonw/todos/issues/170 \
  -m echo --no-log | jq .prompt -r > notes.md

I have a GitHub personal access token stored in my LLM keys, for use with Anthony Shaw's llm-github-models plugin.

My own llm-fragments-github plugin expects an optional GITHUB_TOKEN environment variable, so I set that first - here's an issue to have it use the github key instead.

With that set, the issue: fragment loader can take a URL to a private GitHub issue thread and load it via the API using the token, then concatenate the comments together as Markdown. Here's the code for that.

Fragments are meant to be used as input to LLMs. I built an llm-echo plugin recently which adds a fake LLM called "echo" which simply echoes its input back out again.

Adding --no-log prevents that junk data from being stored in my LLM log database.

The output is JSON with a "prompt" key for the original prompt. I use jq .prompt to extract that out, then -r to get it as raw text (not a "JSON string").

... and I write the result to notes.md.
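For anyone without jq installed, the same extraction step can be sketched in Python. This assumes only what's described above, that the echo output is a JSON object with a "prompt" key. The `sample` value is a made-up stand-in, not real output from the plugin.

```python
import json


def extract_prompt(echo_output: str) -> str:
    """Return the raw "prompt" string, like `jq .prompt -r` would."""
    return json.loads(echo_output)["prompt"]


# Illustrative stand-in for what `llm -m echo` might print
sample = json.dumps({"prompt": "# Issue title\n\nFirst comment"})
print(extract_prompt(sample))
```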

llm-gemini 0.19.1.

Bugfix release for my llm-gemini plugin, which was recording the number of output tokens (needed to calculate the price of a response) incorrectly for the Gemini "thinking" models. Those models turn out to return candidatesTokenCount and thoughtsTokenCount as two separate values which need to be added together to get the total billed output token count. Full details in this issue.
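The fix boils down to a single addition. Here's a minimal sketch, assuming the usage information arrives as a dictionary containing the two field names mentioned above. The function name and the sample numbers are made up for illustration and this is not the plugin's actual code.

```python
def billed_output_tokens(usage: dict) -> int:
    """Add candidate and thinking tokens to get the billed output count."""
    # Either field may be missing (e.g. non-thinking models), so default to 0
    return usage.get("candidatesTokenCount", 0) + usage.get("thoughtsTokenCount", 0)


# Illustrative numbers, not taken from a real response
usage = {"candidatesTokenCount": 1200, "thoughtsTokenCount": 800}
print(billed_output_tokens(usage))  # 2000
```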

I spotted this potential bug in this response log this morning, and my concerns were confirmed when Paul Gauthier wrote about a similar fix in Aider in Gemini 2.5 Pro Preview 03-25 benchmark cost, where he noted that the $6.32 cost recorded to benchmark Gemini 2.5 Pro Preview 03-25 was incorrect. Since that model is no longer available (despite the date-based model alias persisting) Paul is not able to accurately calculate the new cost, but it's likely a lot more since the Gemini 2.5 Pro Preview 05-06 benchmark cost $37.

I've gone through my gemini tag and attempted to update my previous posts with new calculations - this mostly involved increases in the order of 12.336 cents to 16.316 cents (as seen here).

Medium is the new large. New model release from Mistral - this time closed source/proprietary. Mistral Medium claims strong benchmark scores similar to GPT-4o and Claude 3.7 Sonnet, but is priced at $0.40/million input and $2/million output - about the same price as GPT 4.1 Mini. For comparison, GPT-4o is $2.50/$10 and Claude 3.7 Sonnet is $3/$15.

The model is a vision LLM, accepting both images and text.

More interesting than the price is the deployment model. Mistral Medium may not be open weights but it is very much available for self-hosting:

Mistral Medium 3 can also be deployed on any cloud, including self-hosted environments of four GPUs and above.

Mistral's other announcement today is Le Chat Enterprise. This is a suite of tools that can integrate with your company's internal data and provide "agents" (these look similar to Claude Projects or OpenAI GPTs), again with the option to self-host.

Is there a new open weights model coming soon? This note tucked away at the bottom of the Mistral Medium 3 announcement seems to hint at that:

With the launches of Mistral Small in March and Mistral Medium today, it's no secret that we're working on something 'large' over the next few weeks. With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we're excited to 'open' up what's to come :)

I released llm-mistral 0.12 adding support for the new model.

Saying “hi” to Microsoft’s Phi-4-reasoning

Microsoft released a new sub-family of models a few days ago: Phi-4 reasoning. They introduced them in this blog post celebrating a year since the release of Phi-3:

[... 1,498 words]

Feed a video to a vision LLM as a sequence of JPEG frames on the CLI (also LLM 0.25)

The new llm-video-frames plugin can turn a video file into a sequence of JPEG frames and feed them directly into a long context vision LLM such as GPT-4.1, even when that LLM doesn’t directly support video input. It depends on a plugin feature I added to LLM 0.25, which I released last night.

[... 1,600 words]

Having tried a few of the Qwen 3 models now my favorite is a bit of a surprise to me: I'm really enjoying Qwen3-8B.

I've been running prompts through the MLX 4bit quantized version, mlx-community/Qwen3-8B-4bit. I'm using llm-mlx like this:

llm install llm-mlx

llm mlx download-model mlx-community/Qwen3-8B-4bit

This pulls 4.3GB of data and saves it to ~/.cache/huggingface/hub/models--mlx-community--Qwen3-8B-4bit.
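That folder name follows a predictable pattern: the `/` in the model ID becomes `--` and the result is prefixed with `models--`. Here's a small sketch for working out where a given model will land, assuming the default cache location shown above (it doesn't account for any environment variables that relocate the cache). `hub_cache_dir` is a name I made up for this example.

```python
from pathlib import Path


def hub_cache_dir(repo_id: str) -> Path:
    """Map a model repo ID to its folder under the default Hugging Face hub cache."""
    folder = "models--" + repo_id.replace("/", "--")
    return Path.home() / ".cache" / "huggingface" / "hub" / folder


print(hub_cache_dir("mlx-community/Qwen3-8B-4bit"))
```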

I assigned it a default alias:

llm aliases set q3 mlx-community/Qwen3-8B-4bit

I also added a default option for that model - this saves me from adding -o unlimited 1 (which disables the default output token limit) to every prompt:

llm models options set q3 unlimited 1

And now I can run prompts:

llm -m q3 'brainstorm questions I can ask my friend who I think is secretly from Atlantis that will not tip her off to my suspicions'

Qwen3 is a "reasoning" model, so it starts each response with a <think> block containing its chain of thought. Reading these is always really fun. Here's the full response I got for the above question.
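If you want to pipe the answer somewhere without the chain of thought, the block is easy to strip. This is a rough sketch that assumes the reasoning is wrapped in a single `<think>...</think>` pair. The closing tag and the sample text are my assumptions, not something copied from a real response.

```python
import re

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(response: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a response that may contain a <think> block."""
    match = THINK_RE.search(response)
    if match is None:
        return "", response.strip()
    reasoning = match.group(1).strip()
    # Everything outside the matched block is treated as the answer
    answer = (response[: match.start()] + response[match.end():]).strip()
    return reasoning, answer


sample = "<think>\nShe might mention the sea.\n</think>\n\n1. Do you like swimming?"
reasoning, answer = split_reasoning(sample)
print(answer)
```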

I'm finding Qwen3-8B to be surprisingly capable for useful things too. It can summarize short articles. It can write simple SQL queries given a question and a schema. It can figure out what a simple web app does by reading the HTML and JavaScript. It can write Python code to meet a paragraph long spec - for that one it "reasoned" for an unreasonably long time but it did eventually get to a useful answer.

All this while consuming between 4 and 5GB of memory, depending on the length of the prompt.

I think it's pretty extraordinary that a few GBs of floating point numbers can usefully achieve these various tasks, especially using so little memory that it's not an imposition on the rest of the things I want to run on my laptop at the same time.

Qwen 3 offers a case study in how to effectively release a model

Alibaba’s Qwen team released the hotly anticipated Qwen 3 model family today. The Qwen models are already some of the best open weight models—Apache 2.0 licensed and with a variety of different capabilities (including vision and audio input/output).

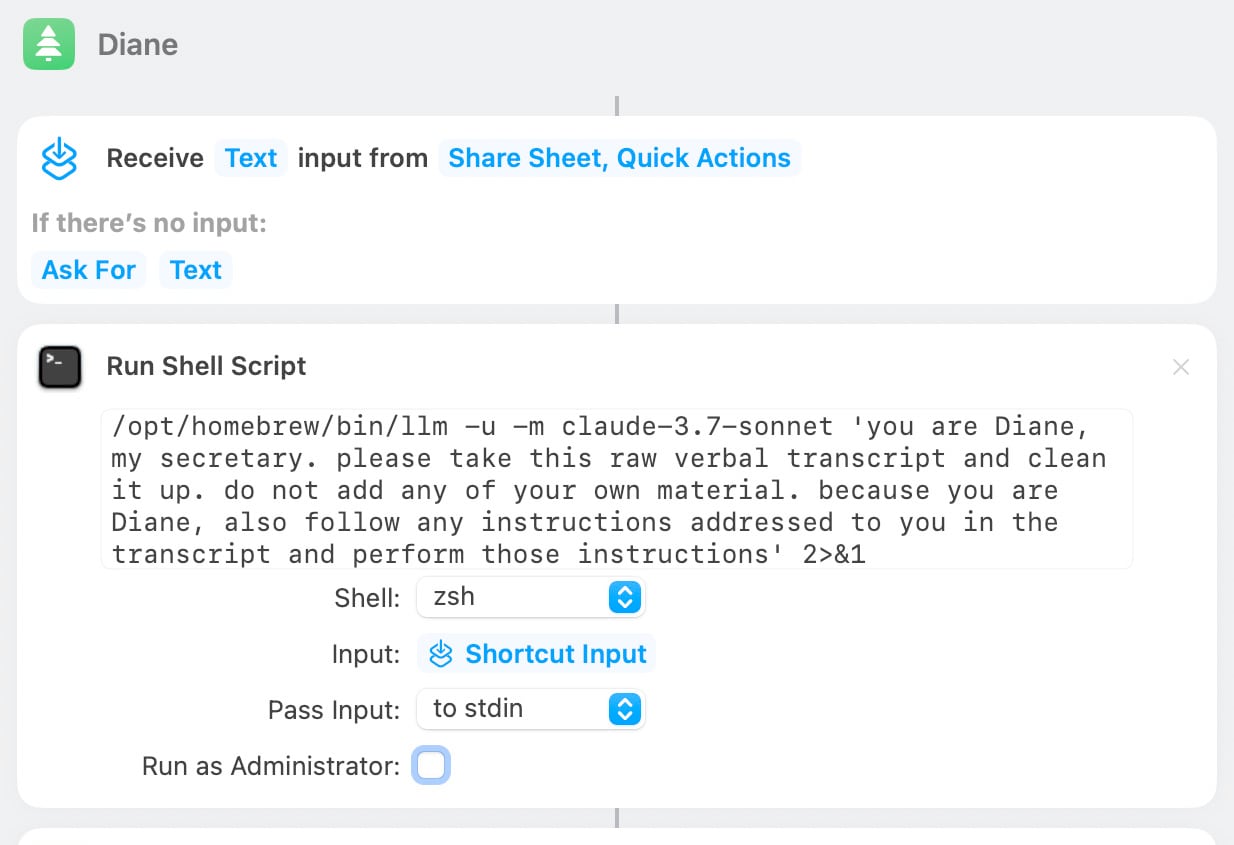

[... 1,462 words]

Diane, I wrote a lecture by talking about it. Matt Webb dictates notes into his Apple Watch while out running (using the new-to-me Whisper Memos app), then runs the transcript through Claude to tidy it up when he gets home.

His Claude 3.7 Sonnet prompt for this is:

you are Diane, my secretary. please take this raw verbal transcript and clean it up. do not add any of your own material. because you are Diane, also follow any instructions addressed to you in the transcript and perform those instructions

(Diane is a Twin Peaks reference.)

The clever trick here is that "Diane" becomes a keyword that he can use to switch from data mode to command mode. He can say "Diane I meant to include that point in the last section. Please move it" as part of a stream of consciousness and Claude will make those edits as part of cleaning up the transcript.

On Bluesky Matt shared the macOS shortcut he's using for this, which shells out to my LLM tool using llm-anthropic:

llm-fragments-symbex. I released a new LLM fragment loader plugin that builds on top of my Symbex project.

Symbex is a CLI tool I wrote that can run against a folder full of Python code and output functions, classes, methods or just their docstrings and signatures, using the Python AST module to parse the code.
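To illustrate the general technique (this is a toy sketch, not Symbex's actual code), the standard library `ast` module can pull signatures and docstrings out of source text without importing or running it. `SOURCE` and `summarize` are invented for this example.

```python
import ast

SOURCE = '''
def resolve(value: str) -> str:
    """Resolve a value."""
    return value.strip()

class Loader:
    def load(self, path):
        return open(path).read()
'''


def summarize(source: str) -> list[str]:
    """List function/class signatures, with the first docstring line if any."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            keyword = "async def" if isinstance(node, ast.AsyncFunctionDef) else "def"
            returns = f" -> {ast.unparse(node.returns)}" if node.returns else ""
            signature = f"{keyword} {node.name}({ast.unparse(node.args)}){returns}:"
        elif isinstance(node, ast.ClassDef):
            signature = f"class {node.name}:"
        else:
            continue
        doc = ast.get_docstring(node)
        if doc:
            signature += f"  # {doc.splitlines()[0]}"
        lines.append(signature)
    return lines


for line in summarize(SOURCE):
    print(line)
```

Because the code is only parsed, never executed, this is safe to run against code you haven't reviewed.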

llm-fragments-symbex brings that ability directly to LLM. It lets you do things like this:

llm install llm-fragments-symbex

llm -f symbex:path/to/project -s 'Describe this codebase'

I just ran that against my LLM project itself like this:

cd llm

llm -f symbex:. -s 'guess what this code does'

Here's the full output, which starts like this:

This code listing appears to be an index or dump of Python functions, classes, and methods primarily belonging to a codebase related to large language models (LLMs). It covers a broad functionality set related to managing LLMs, embeddings, templates, plugins, logging, and command-line interface (CLI) utilities for interaction with language models. [...]

That page also shows the input generated by the fragment - here's a representative extract:

# from llm.cli import resolve_attachment
def resolve_attachment(value):
    """Resolve an attachment from a string value which could be:
    - "-" for stdin
    - A URL
    - A file path

    Returns an Attachment object.
    Raises AttachmentError if the attachment cannot be resolved."""

# from llm.cli import AttachmentType
class AttachmentType:

    def convert(self, value, param, ctx):

# from llm.cli import resolve_attachment_with_type
def resolve_attachment_with_type(value: str, mimetype: str) -> Attachment:

If your Python code has good docstrings and type annotations, this should hopefully be a shortcut for providing full API documentation to a model without needing to dump in the entire codebase.

The above example used 13,471 input tokens and 781 output tokens, using openai/gpt-4.1-mini. That model is extremely cheap, so the total cost was 0.6638 cents - less than a cent.

The plugin itself was mostly written by o4-mini using the llm-fragments-github plugin to load the simonw/symbex and simonw/llm-hacker-news repositories as example code:

llm \
  -f github:simonw/symbex \
  -f github:simonw/llm-hacker-news \
  -s "Write a new plugin as a single llm_fragments_symbex.py file which provides a custom loader which can be used like this: llm -f symbex:path/to/folder - it then loads in all of the python function signatures with their docstrings from that folder using the same trick that symbex uses, effectively the same as running symbex . '*' '*.*' --docs --imports -n" \
  -m openai/o4-mini -o reasoning_effort high

Here's the response. 27,819 input, 2,918 output = 4.344 cents.

In working on this project I identified and fixed a minor cosmetic defect in Symbex itself. Technically this is a breaking change (it changes the output) so I shipped that as Symbex 2.0.

llm-fragments-github 0.2.

I upgraded my llm-fragments-github plugin to add a new fragment type called issue. It lets you pull the entire content of a GitHub issue thread into your prompt as a concatenated Markdown file.

(If you haven't seen fragments before I introduced them in Long context support in LLM 0.24 using fragments and template plugins.)

I used it just now to have Gemini 2.5 Pro provide feedback and attempt an implementation of a complex issue against my LLM project:

llm install llm-fragments-github

llm -f github:simonw/llm \
  -f issue:simonw/llm/938 \
  -m gemini-2.5-pro-exp-03-25 \
  --system 'muse on this issue, then propose a whole bunch of code to help implement it'

Here I'm loading the FULL content of the simonw/llm repo using that -f github:simonw/llm fragment (documented here), then loading all of the comments from issue 938 where I discuss quite a complex potential refactoring. I ask Gemini 2.5 Pro to "muse on this issue" and come up with some code.

This worked shockingly well. Here's the full response, which highlighted a few things I hadn't considered yet (such as the need to migrate old database records to the new tree hierarchy) and then spat out a whole bunch of code which looks like a solid start to the actual implementation work I need to do.

I ran this against Google's free Gemini 2.5 Preview, but if I'd used the paid model the 202,680 input tokens, 10,460 output tokens and 1,859 thinking tokens would have cost a total of 62.989 cents.

As a fun extra, the new issue: feature itself was written almost entirely by OpenAI o3, again using fragments. I ran this:

llm -m openai/o3 \
  -f https://raw.githubusercontent.com/simonw/llm-hacker-news/refs/heads/main/llm_hacker_news.py \
  -f https://raw.githubusercontent.com/simonw/tools/refs/heads/main/github-issue-to-markdown.html \
  -s 'Write a new fragments plugin in Python that registers issue:org/repo/123 which fetches that issue number from the specified github repo and uses the same markdown logic as the HTML page to turn that into a fragment'

Here I'm using the ability to pass a URL to -f and giving it the full source of my llm_hacker_news.py plugin (which shows how a fragment can load data from an API) plus the HTML source of my github-issue-to-markdown tool (which I wrote a few months ago with Claude). I effectively asked o3 to take that HTML/JavaScript tool and port it to Python to work with my fragments plugin mechanism.

o3 provided almost the exact implementation I needed, and even included support for a GITHUB_TOKEN environment variable without me thinking to ask for it. Total cost: 19.928 cents.

On a final note of curiosity I tried running this prompt against Gemma 3 27B QAT running on my Mac via MLX and llm-mlx:

llm install llm-mlx
llm mlx download-model mlx-community/gemma-3-27b-it-qat-4bit

llm -m mlx-community/gemma-3-27b-it-qat-4bit \
  -f https://raw.githubusercontent.com/simonw/llm-hacker-news/refs/heads/main/llm_hacker_news.py \
  -f https://raw.githubusercontent.com/simonw/tools/refs/heads/main/github-issue-to-markdown.html \
  -s 'Write a new fragments plugin in Python that registers issue:org/repo/123 which fetches that issue number from the specified github repo and uses the same markdown logic as the HTML page to turn that into a fragment'

That worked pretty well too. It turns out a 16GB local model file is powerful enough to write me an LLM plugin now!

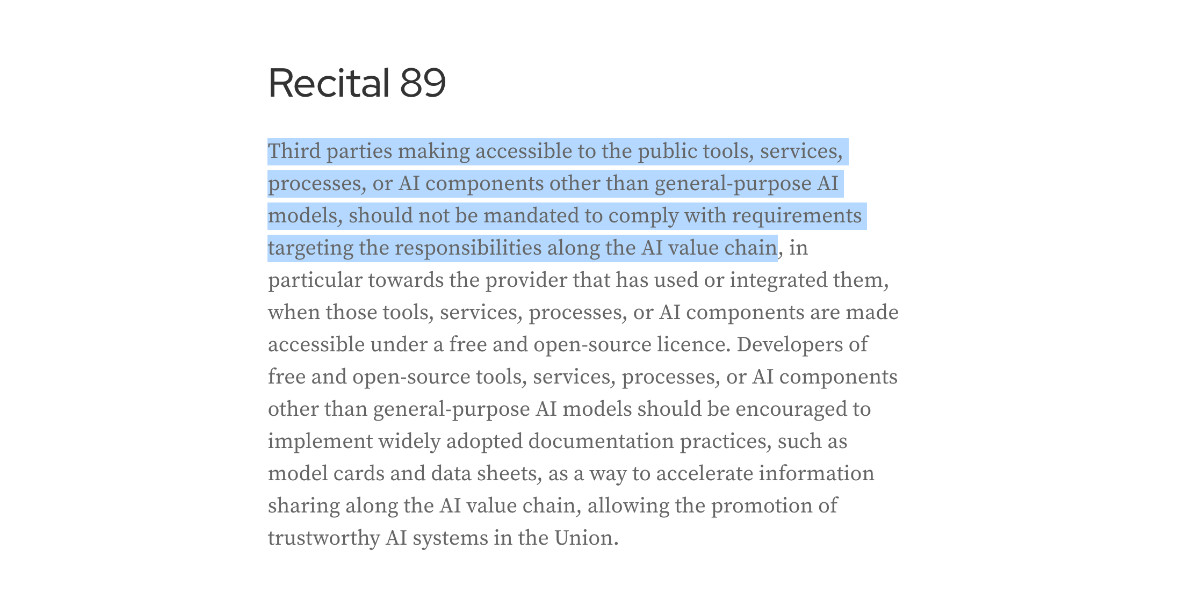

Maybe Meta’s Llama claims to be open source because of the EU AI act

I encountered a theory a while ago that one of the reasons Meta insist on using the term “open source” for their Llama models despite the Llama license not actually conforming to the terms of the Open Source Definition is that the EU’s AI act includes special rules for open source models without requiring OSI compliance.

[... 852 words]

Gemma 3 QAT Models. Interesting release from Google, as a follow-up to Gemma 3 from last month:

To make Gemma 3 even more accessible, we are announcing new versions optimized with Quantization-Aware Training (QAT) that dramatically reduces memory requirements while maintaining high quality. This enables you to run powerful models like Gemma 3 27B locally on consumer-grade GPUs like the NVIDIA RTX 3090.

I wasn't previously aware of Quantization-Aware Training but it turns out to be quite an established pattern now, supported in both TensorFlow and PyTorch.

Google report model size drops from BF16 to int4 for the following models:

- Gemma 3 27B: 54GB to 14.1GB

- Gemma 3 12B: 24GB to 6.6GB

- Gemma 3 4B: 8GB to 2.6GB

- Gemma 3 1B: 2GB to 0.5GB
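Those numbers line up with simple arithmetic: BF16 stores each parameter in 2 bytes and int4 in half a byte. Here's a quick sketch comparing that naive estimate to the reported sizes. It assumes the parameter counts are the round numbers in the model names and that 1GB means 10^9 bytes. The reported int4 files come out slightly larger than the naive estimate, and the announcement quoted here doesn't say why.

```python
# Bytes per parameter: BF16 uses 16 bits, int4 uses 4 bits
BYTES_PER_PARAM = {"bf16": 2.0, "int4": 0.5}

# Parameter counts in billions, with the (BF16, int4) sizes in GB reported above
MODELS = {
    "Gemma 3 27B": (27, 54, 14.1),
    "Gemma 3 12B": (12, 24, 6.6),
    "Gemma 3 4B": (4, 8, 2.6),
    "Gemma 3 1B": (1, 2, 0.5),
}


def estimated_gb(billions_of_params: float, dtype: str) -> float:
    """Estimate weight size in GB (1 GB = 1e9 bytes) from parameter count."""
    return billions_of_params * BYTES_PER_PARAM[dtype]


for name, (params, reported_bf16, reported_int4) in MODELS.items():
    print(
        f"{name}: bf16 ~{estimated_gb(params, 'bf16'):g}GB (reported {reported_bf16}GB), "
        f"int4 ~{estimated_gb(params, 'int4'):g}GB (reported {reported_int4}GB)"
    )
```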

They partnered with Ollama, LM Studio, MLX (here's their collection) and llama.cpp for this release - I'd love to see more AI labs following their example.

The Ollama model version picker currently hides them behind a "View all" option, so here are the direct links:

- gemma3:1b-it-qat - 1GB

- gemma3:4b-it-qat - 4GB

- gemma3:12b-it-qat - 8.9GB

- gemma3:27b-it-qat - 18GB

I fetched that largest model with:

ollama pull gemma3:27b-it-qat

And now I'm trying it out with llm-ollama:

llm -m gemma3:27b-it-qat "impress me with some physics"

I got a pretty great response!

Update: Having spent a while putting it through its paces via Open WebUI and Tailscale to access my laptop from my phone I think this may be my new favorite general-purpose local model. Ollama appears to use 22GB of RAM while the model is running, which leaves plenty on my 64GB machine for other applications.

I've also tried it via llm-mlx like this (downloading 16GB):

llm install llm-mlx

llm mlx download-model mlx-community/gemma-3-27b-it-qat-4bit

llm chat -m mlx-community/gemma-3-27b-it-qat-4bit

It feels a little faster with MLX and uses 15GB of memory according to Activity Monitor.

Start building with Gemini 2.5 Flash

(via)

Google Gemini's latest model is Gemini 2.5 Flash, available in (paid) preview as gemini-2.5-flash-preview-04-17.

Building upon the popular foundation of 2.0 Flash, this new version delivers a major upgrade in reasoning capabilities, while still prioritizing speed and cost. Gemini 2.5 Flash is our first fully hybrid reasoning model, giving developers the ability to turn thinking on or off. The model also allows developers to set thinking budgets to find the right tradeoff between quality, cost, and latency.

Gemini AI Studio product lead Logan Kilpatrick says:

This is an early version of 2.5 Flash, but it already shows huge gains over 2.0 Flash.

You can fully turn off thinking if needed and use this model as a drop in replacement for 2.0 Flash.

I added support for the new model in llm-gemini 0.18. Here's how to try it out:

llm install -U llm-gemini

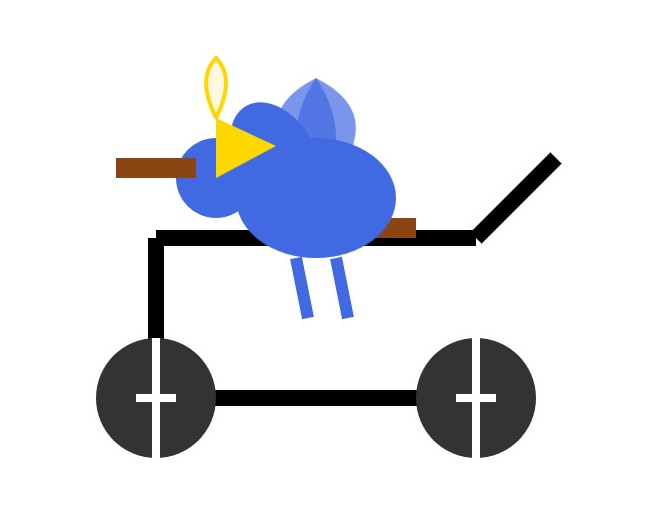

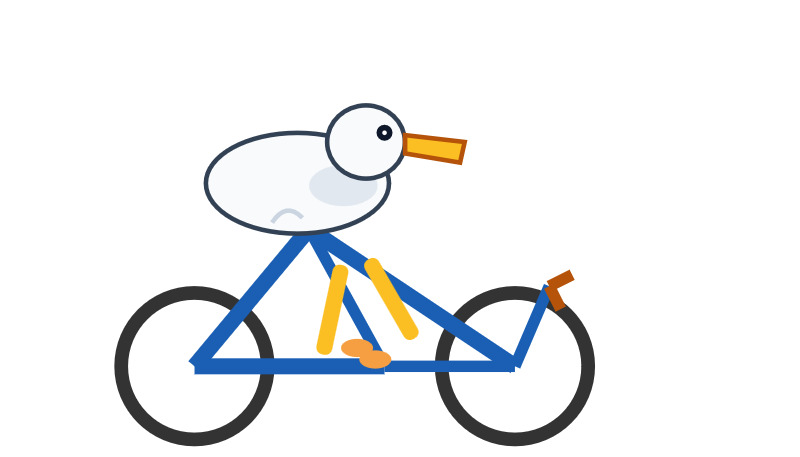

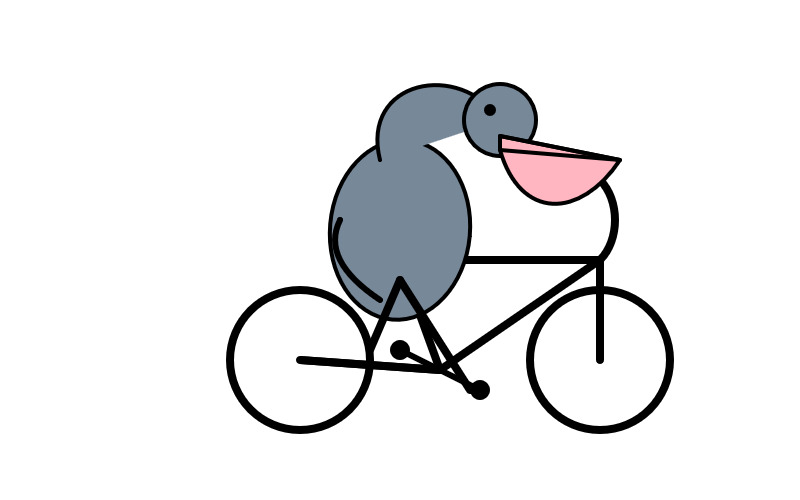

llm -m gemini-2.5-flash-preview-04-17 'Generate an SVG of a pelican riding a bicycle'

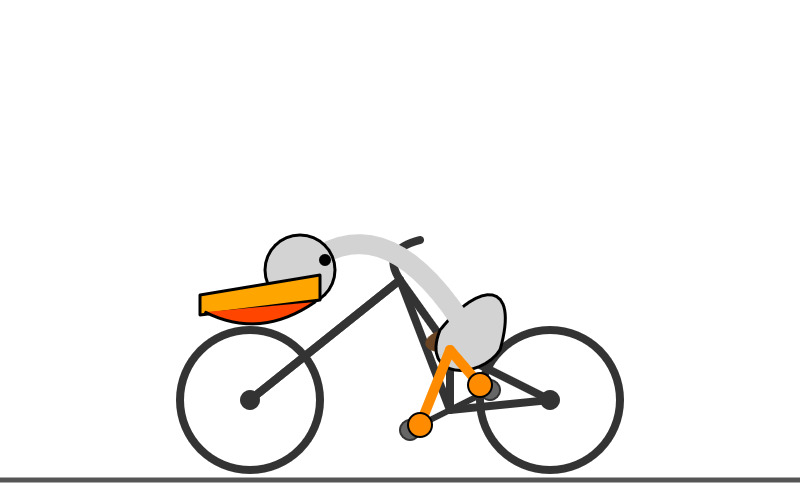

Here's that first pelican, using the default setting where Gemini 2.5 Flash makes its own decision in terms of how much "thinking" effort to apply:

Here's the transcript. This one used 11 input tokens, 4,266 output tokens and 2,702 "thinking" tokens.

I asked the model to "describe" that image and it could tell it was meant to be a pelican:

A simple illustration on a white background shows a stylized pelican riding a bicycle. The pelican is predominantly grey with a black eye and a prominent pink beak pouch. It is positioned on a black line-drawn bicycle with two wheels, a frame, handlebars, and pedals.

The way the model is priced is a little complicated. If you have thinking enabled, you get charged $0.15/million tokens for input and $3.50/million for output. With thinking disabled those output tokens drop to $0.60/million. I've added these to my pricing calculator.

For comparison, Gemini 2.0 Flash is $0.10/million input and $0.40/million for output.

So my first prompt - 11 input and 4,266 + 2,702 = 6,968 output (with thinking enabled) - cost 2.439 cents.
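That arithmetic is easy to reproduce. Here's a small sketch using the prices quoted above, where thinking tokens are counted as output tokens. The function name is made up, and this is not the code behind my pricing calculator.

```python
# Prices in dollars per million tokens, as quoted above for Gemini 2.5 Flash
INPUT_PRICE = 0.15
OUTPUT_PRICE_THINKING = 3.50
OUTPUT_PRICE_NO_THINKING = 0.60


def cost_in_cents(input_tokens, output_tokens, thinking_tokens=0, thinking_enabled=True):
    """Estimate the cost of one prompt in cents; thinking tokens are added to output."""
    output_price = OUTPUT_PRICE_THINKING if thinking_enabled else OUTPUT_PRICE_NO_THINKING
    dollars = (
        input_tokens * INPUT_PRICE
        + (output_tokens + thinking_tokens) * output_price
    ) / 1_000_000
    return dollars * 100


print(round(cost_in_cents(11, 4266, 2702), 3))  # 2.439
```

Passing `thinking_enabled=False` switches the output rate to $0.60/million, which is where most of the price difference comes from.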

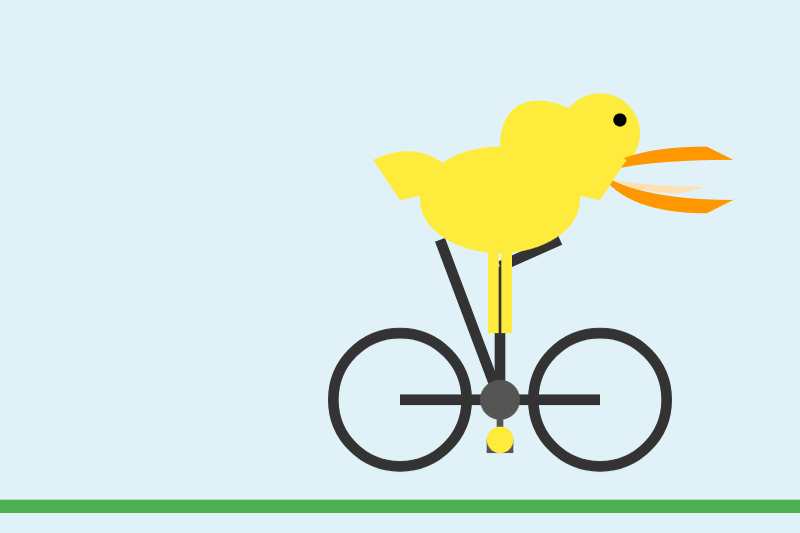

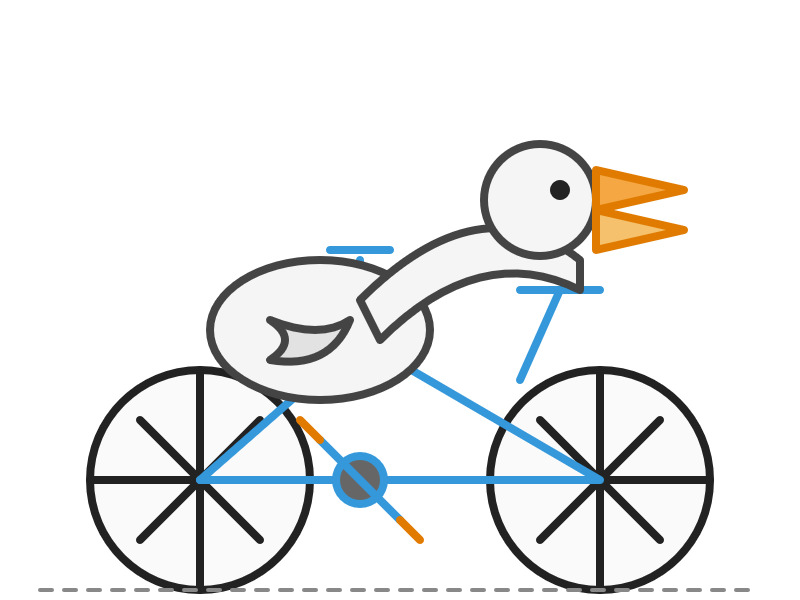

Let's try 2.5 Flash again with thinking disabled:

llm -m gemini-2.5-flash-preview-04-17 'Generate an SVG of a pelican riding a bicycle' -o thinking_budget 0

11 input, 1,705 output. That's 0.1025 cents. Transcript here - it still shows 25 thinking tokens even though I set the thinking budget to 0 - Logan confirms that this will still be billed at the lower rate:

In some rare cases, the model still thinks a little even with thinking budget = 0, we are hoping to fix this before we make this model stable and you won't be billed for thinking. The thinking budget = 0 is what triggers the billing switch.

Here's Gemini 2.5 Flash's self-description of that image:

A minimalist illustration shows a bright yellow bird riding a bicycle. The bird has a simple round body, small wings, a black eye, and an open orange beak. It sits atop a simple black bicycle frame with two large circular black wheels. The bicycle also has black handlebars and black and yellow pedals. The scene is set against a solid light blue background with a thick green stripe along the bottom, suggesting grass or ground.

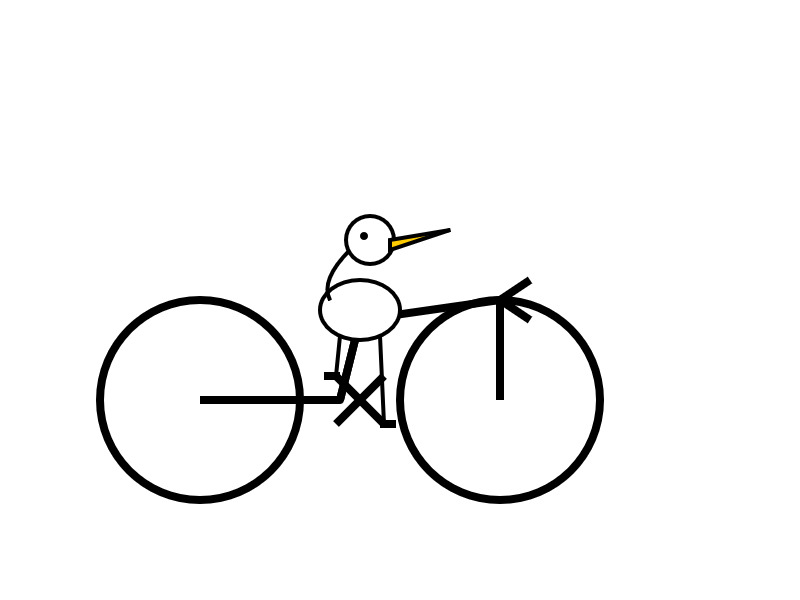

And finally, let's ramp the thinking budget up to the maximum:

llm -m gemini-2.5-flash-preview-04-17 'Generate an SVG of a pelican riding a bicycle' -o thinking_budget 24576

I think it over-thought this one. Transcript - 5,174 output tokens and 3,023 thinking tokens. A hefty 2.8691 cents!

A simple, cartoon-style drawing shows a bird-like figure riding a bicycle. The figure has a round gray head with a black eye and a large, flat orange beak with a yellow stripe on top. Its body is represented by a curved light gray shape extending from the head to a smaller gray shape representing the torso or rear. It has simple orange stick legs with round feet or connections at the pedals. The figure is bent forward over the handlebars in a cycling position. The bicycle is drawn with thick black outlines and has two large wheels, a frame, and pedals connected to the orange legs. The background is plain white, with a dark gray line at the bottom representing the ground.

One thing I really appreciate about Gemini 2.5 Flash's approach to SVGs is that it shows very good taste in CSS, comments and general SVG class structure. Here's a truncated extract - I run a lot of these SVG tests against different models and this one has a coding style that I particularly enjoy. (Gemini 2.5 Pro does this too).

<svg width="800" height="500" viewBox="0 0 800 500" xmlns="http://www.w3.org/2000/svg">
  <style>
    .bike-frame { fill: none; stroke: #333; stroke-width: 8; stroke-linecap: round; stroke-linejoin: round; }
    .wheel-rim { fill: none; stroke: #333; stroke-width: 8; }
    .wheel-hub { fill: #333; }
    /* ... */
    .pelican-body { fill: #d3d3d3; stroke: black; stroke-width: 3; }
    .pelican-head { fill: #d3d3d3; stroke: black; stroke-width: 3; }
    /* ... */
  </style>
  <!-- Ground Line -->
  <line x1="0" y1="480" x2="800" y2="480" stroke="#555" stroke-width="5"/>
  <!-- Bicycle -->
  <g id="bicycle">
    <!-- Wheels -->
    <circle class="wheel-rim" cx="250" cy="400" r="70"/>
    <circle class="wheel-hub" cx="250" cy="400" r="10"/>
    <circle class="wheel-rim" cx="550" cy="400" r="70"/>
    <circle class="wheel-hub" cx="550" cy="400" r="10"/>
    <!-- ... -->
  </g>
  <!-- Pelican -->
  <g id="pelican">
    <!-- Body -->
    <path class="pelican-body" d="M 440 330 C 480 280 520 280 500 350 C 480 380 420 380 440 330 Z"/>
    <!-- Neck -->
    <path class="pelican-neck" d="M 460 320 Q 380 200 300 270"/>
    <!-- Head -->
    <circle class="pelican-head" cx="300" cy="270" r="35"/>
    <!-- ... -->

The LM Arena leaderboard now has Gemini 2.5 Flash in joint second place, just behind Gemini 2.5 Pro and tied with ChatGPT-4o-latest, Grok-3 and GPT-4.5 Preview.

Introducing OpenAI o3 and o4-mini. OpenAI are really emphasizing tool use with these:

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT—this includes searching the web, analyzing uploaded files and other data with Python, reasoning deeply about visual inputs, and even generating images. Critically, these models are trained to reason about when and how to use tools to produce detailed and thoughtful answers in the right output formats, typically in under a minute, to solve more complex problems.

I released llm-openai-plugin 0.3 adding support for the two new models:

llm install -U llm-openai-plugin

llm -m openai/o3 "say hi in five languages"

llm -m openai/o4-mini "say hi in five languages"

Here are the pelicans riding bicycles (prompt: Generate an SVG of a pelican riding a bicycle).

o3:

o4-mini:

Here are the full OpenAI model listings: o3 is $10/million input and $40/million for output, with a 75% discount on cached input tokens, 200,000 token context window, 100,000 max output tokens and a May 31st 2024 training cut-off (same as the GPT-4.1 models). It's a bit cheaper than o1 ($15/$60) and a lot cheaper than o1-pro ($150/$600).

o4-mini is priced the same as o3-mini: $1.10/million for input and $4.40/million for output, also with a 75% input caching discount. The size limits and training cut-off are the same as o3.
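To see how much the caching discount matters, here's a rough sketch of the cost calculation with a cached portion of the input. It assumes cached tokens are a subset of the input tokens and are billed at 25% of the normal input rate (the 75% discount mentioned above). The token counts in the example are hypothetical.

```python
def cost_in_cents(input_tokens, output_tokens, input_price, output_price,
                  cached_input_tokens=0, cache_discount=0.75):
    """Estimate cost in cents; prices are dollars per million tokens.

    cached_input_tokens is the portion of input_tokens served from cache,
    billed at (1 - cache_discount) of the normal input price.
    """
    fresh = input_tokens - cached_input_tokens
    dollars = (
        fresh * input_price
        + cached_input_tokens * input_price * (1 - cache_discount)
        + output_tokens * output_price
    ) / 1_000_000
    return dollars * 100


# Hypothetical o4-mini call: 10,000 input tokens (8,000 of them cached), 1,000 output
with_cache = cost_in_cents(10_000, 1_000, 1.10, 4.40, cached_input_tokens=8_000)
without_cache = cost_in_cents(10_000, 1_000, 1.10, 4.40)
print(round(with_cache, 3), round(without_cache, 3))
```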

You can compare these prices with other models using the table on my updated LLM pricing calculator.

A new capability released today is that the OpenAI API can now optionally return reasoning summary text. I've been exploring that in this issue. I believe you have to verify your organization (which may involve a photo ID) in order to use this option - once you have access the easiest way to see the new tokens is using curl like this:

curl https://api.openai.com/v1/responses \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $(llm keys get openai)" \
  -d '{
    "model": "o3",
    "input": "why is the sky blue?",
    "reasoning": {"summary": "auto"},
    "stream": true
  }'

This produces a stream of events that includes this new event type:

event: response.reasoning_summary_text.delta

data: {"type": "response.reasoning_summary_text.delta","item_id": "rs_68004320496081918e1e75ddb550d56e0e9a94ce520f0206","output_index": 0,"summary_index": 0,"delta": "**Expl"}
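Reassembling the summary from that stream is a matter of concatenating the `delta` values. Here's a minimal sketch that works on captured stream text rather than a live connection. The first sample event is the one shown above. The second is invented so there's something to join.

```python
import json

# Sample stream; the first event is copied from above, the second is made up
SAMPLE_STREAM = """\
event: response.reasoning_summary_text.delta
data: {"type": "response.reasoning_summary_text.delta","item_id": "rs_68004320496081918e1e75ddb550d56e0e9a94ce520f0206","output_index": 0,"summary_index": 0,"delta": "**Expl"}

event: response.reasoning_summary_text.delta
data: {"type": "response.reasoning_summary_text.delta","item_id": "rs_68004320496081918e1e75ddb550d56e0e9a94ce520f0206","output_index": 0,"summary_index": 0,"delta": "aining"}
"""


def collect_summary(stream_text: str) -> str:
    """Concatenate the delta strings from reasoning summary events."""
    parts = []
    for line in stream_text.splitlines():
        # Only lines carrying a JSON payload are of interest
        if not line.startswith("data: {"):
            continue
        payload = json.loads(line[len("data: "):])
        if payload.get("type") == "response.reasoning_summary_text.delta":
            parts.append(payload["delta"])
    return "".join(parts)


print(collect_summary(SAMPLE_STREAM))  # **Explaining
```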

Omit the "stream": true and the response is easier to read and contains this:

{
  "output": [
    {
      "id": "rs_68004edd2150819183789a867a9de671069bc0c439268c95",
      "type": "reasoning",
      "summary": [
        {
          "type": "summary_text",
          "text": "**Explaining the blue sky**\n\nThe user asks a classic question about why the sky is blue. I'll talk about Rayleigh scattering, where shorter wavelengths of light scatter more than longer ones. This explains how we see blue light spread across the sky! I wonder if the user wants a more scientific or simpler everyday explanation. I'll aim for a straightforward response while keeping it engaging and informative. So, let's break it down!"
        }
      ]
    },
    {
      "id": "msg_68004edf9f5c819188a71a2c40fb9265069bc0c439268c95",
      "type": "message",
      "status": "completed",
      "content": [
        {
          "type": "output_text",
          "annotations": [],
          "text": "The short answer ..."
        }
      ]
    }
  ]
}
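Given that shape, separating the reasoning summary from the answer is straightforward. This sketch works on a trimmed copy of the JSON above and assumes only the keys visible there. `split_output` is a name invented for the example.

```python
import json

# Trimmed copy of the response shown above
RESPONSE = json.loads("""
{
  "output": [
    {"type": "reasoning",
     "summary": [{"type": "summary_text", "text": "**Explaining the blue sky** ..."}]},
    {"type": "message", "status": "completed",
     "content": [{"type": "output_text", "annotations": [], "text": "The short answer ..."}]}
  ]
}
""")


def split_output(response: dict) -> tuple[list[str], list[str]]:
    """Return (reasoning summaries, answer texts) from a response dict."""
    summaries, answers = [], []
    for item in response.get("output", []):
        if item["type"] == "reasoning":
            summaries += [s["text"] for s in item.get("summary", []) if s["type"] == "summary_text"]
        elif item["type"] == "message":
            answers += [c["text"] for c in item.get("content", []) if c["type"] == "output_text"]
    return summaries, answers


summaries, answers = split_output(RESPONSE)
print(summaries)
print(answers)
```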

GPT-4.1: Three new million token input models from OpenAI, including their cheapest model yet

OpenAI introduced three new models this morning: GPT-4.1, GPT-4.1 mini and GPT-4.1 nano. These are API-only models right now, not available through the ChatGPT interface (though you can try them out in OpenAI’s API playground). All three models can handle 1,047,576 tokens of input and 32,768 tokens of output, and all three have a May 31, 2024 cut-off date (their previous models were mostly September 2023).

[... 1,124 words]

llm-fragments-rust

(via)

Inspired by Filippo Valsorda's llm-fragments-go, Francois Garillot created llm-fragments-rust, an LLM fragments plugin that lets you pull documentation for any Rust crate directly into a prompt to LLM.

I really like this example, which uses two fragments to load documentation for two crates at once:

llm -f rust:rand@0.8.5 -f rust:tokio "How do I generate random numbers asynchronously?"

The code uses some neat tricks: it creates a new Rust project in a temporary directory (similar to how llm-fragments-go works), adds the crates and uses cargo doc --no-deps --document-private-items to generate documentation. Then it runs cargo tree --edges features to add dependency information, and cargo metadata --format-version=1 to include additional metadata about the crate.

llm-docsmith (via) Matheus Pedroni released this neat plugin for LLM for adding docstrings to existing Python code. You can run it like this:

llm install llm-docsmith

llm docsmith ./scripts/main.py -o

The -o option previews the changes that will be made - without -o it edits the files directly.

It also accepts a -m claude-3.7-sonnet parameter to use a model other than the default (GPT-4o mini).

The implementation uses the Python libcst "Concrete Syntax Tree" package to manipulate the code, which means there's no chance of it making edits to anything other than the docstrings.

Here's the full system prompt it uses.

One neat trick: at the end of the system prompt it says:

You will receive a JSON template. Fill the slots marked with <SLOT> with the appropriate description. Return as JSON.

That template is JSON generated using these Pydantic classes:

class Argument(BaseModel):
    name: str
    description: str
    annotation: str | None = None
    default: str | None = None

class Return(BaseModel):
    description: str
    annotation: str | None

class Docstring(BaseModel):
    node_type: Literal["class", "function"]
    name: str
    docstring: str
    args: list[Argument] | None = None
    ret: Return | None = None

class Documentation(BaseModel):
    entries: list[Docstring]

The code adds <SLOT> notes to that in various places, so the template included in the prompt ends up looking like this:

{
  "entries": [
    {
      "node_type": "function",
      "name": "create_docstring_node",
      "docstring": "<SLOT>",
      "args": [
        {
          "name": "docstring_text",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        },
        {
          "name": "indent",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        }
      ],
      "ret": {
        "description": "<SLOT>",
        "annotation": "cst.BaseStatement"
      }
    }
  ]
}
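To make the slot idea concrete, here is a small sketch that builds the same shape of template from plain Python data. It is not the plugin's code: `slot_template` and its `(name, annotation, default)` tuples are hypothetical, and it uses plain dicts rather than the Pydantic classes.

```python
import json

SLOT = "<SLOT>"


def slot_template(name, args, return_annotation=None):
    """Build a one-entry template; args is a list of (name, annotation, default)."""
    entry = {
        "node_type": "function",
        "name": name,
        "docstring": SLOT,  # the model fills this in
        "args": [
            {
                "name": arg_name,
                "description": SLOT,  # and one description per argument
                "annotation": annotation,
                "default": default,
            }
            for arg_name, annotation, default in args
        ],
        "ret": {"description": SLOT, "annotation": return_annotation},
    }
    return {"entries": [entry]}


template = slot_template(
    "create_docstring_node",
    [("docstring_text", "str", None), ("indent", "str", None)],
    "cst.BaseStatement",
)
template_json = json.dumps(template, indent=2)
```

Because names, annotations and defaults are filled in before the model sees the template, the model only has to supply prose for the slots.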

llm-fragments-go (via) Filippo Valsorda released the first plugin by someone other than me that uses LLM's new register_fragment_loaders() plugin hook I announced the other day.

Install with llm install llm-fragments-go and then:

You can feed the docs of a Go package into LLM using the go: fragment with the package name, optionally followed by a version suffix.

llm -f go:golang.org/x/mod/sumdb/note@v0.23.0 "Write a single file command that generates a key, prints the verifier key, signs an example message, and prints the signed note."

The implementation is just 33 lines of Python and works by running these commands in a temporary directory:

go mod init llm_fragments_go

go get golang.org/x/mod/sumdb/note@v0.23.0

go doc -all golang.org/x/mod/sumdb/note
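Those three commands are easy to drive from Python. The following is a sketch of the general approach rather than the plugin's actual 33 lines: `go_doc_commands` and `load_go_docs` are hypothetical names, and `load_go_docs` needs the Go toolchain on the PATH to do anything useful.

```python
import subprocess
import tempfile


def go_doc_commands(package_spec):
    """Return the three commands for a spec like 'example.com/pkg@v1.2.3'."""
    # go doc takes the package path without the version suffix.
    package = package_spec.split("@", 1)[0]
    return [
        ["go", "mod", "init", "llm_fragments_go"],
        ["go", "get", package_spec],
        ["go", "doc", "-all", package],
    ]


def load_go_docs(package_spec):
    """Run the commands in a throwaway directory and return the go doc output."""
    with tempfile.TemporaryDirectory() as workdir:
        output = ""
        for command in go_doc_commands(package_spec):
            result = subprocess.run(
                command, cwd=workdir, check=True, capture_output=True, text=True
            )
            output = result.stdout  # the last command's stdout is the documentation
        return output
```

Working in a temporary directory keeps the scratch Go module away from the user's own projects, and the directory is removed as soon as the documentation has been captured.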

An LLM Query Understanding Service (via) Doug Turnbull recently wrote about how all search is structured now:

Many times, even a small open source LLM will be able to turn a search query into reasonable structure at relatively low cost.

In this follow-up tutorial he demonstrates Qwen 2-7B running in a GPU-enabled Google Kubernetes Engine container to turn user search queries like "red loveseat" into structured filters like {"item_type": "loveseat", "color": "red"}.

Here's the prompt he uses.

Respond with a single line of JSON:

{"item_type": "sofa", "material": "wood", "color": "red"}

Omit any other information. Do not include any

other text in your response. Omit a value if the

user did not specify it. For example, if the user

said "red sofa", you would respond with:

{"item_type": "sofa", "color": "red"}

Here is the search query: blue armchair

Out of curiosity, I tried running his prompt against some other models using LLM:

- gemini-1.5-flash-8b, the cheapest of the Gemini models, handled it well and cost $0.000011 - or 0.0011 cents.

- llama3.2:3b worked too - that's a very small 2GB model which I ran using Ollama.

- deepseek-r1:1.5b - a tiny 1.1GB model, again via Ollama, amusingly failed by interpreting "red loveseat" as {"item_type": "sofa", "material": null, "color": "red"} after thinking very hard about the problem!
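Since small models do not always follow the "omit a value" instruction, the consuming code probably wants to tidy the response before using it as a filter. A minimal sketch, assuming the three keys from the prompt are the only ones allowed; `parse_filters` and `ALLOWED_KEYS` are hypothetical names, not part of the tutorial.

```python
import json

ALLOWED_KEYS = ("item_type", "material", "color")


def parse_filters(model_output):
    """Parse one line of JSON into a filter dict, dropping unknown keys and nulls."""
    try:
        data = json.loads(model_output.strip())
    except json.JSONDecodeError:
        return {}  # the model returned something other than JSON
    if not isinstance(data, dict):
        return {}
    return {key: data[key] for key in ALLOWED_KEYS if data.get(key) is not None}


filters = parse_filters('{"item_type": "sofa", "material": null, "color": "red"}')
```

This removes the stray null but cannot repair a wrong value such as "sofa" for a loveseat; that still depends on the model.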

Mistral Small 3.1 on Ollama. Mistral Small 3.1 (previously) is now available through Ollama, providing an easy way to run this multi-modal (vision) model on a Mac (and other platforms, though I haven't tried those myself).

I had to upgrade Ollama to the most recent version to get it to work - prior to that I got an Error: unable to load model message. Upgrades can be accessed through the Ollama macOS system tray icon.

I fetched the 15GB model by running:

ollama pull mistral-small3.1

Then used llm-ollama to run prompts through it, including one to describe this image:

llm install llm-ollama

llm -m mistral-small3.1 'describe this image' -a https://static.simonwillison.net/static/2025/Mpaboundrycdfw-1.png

Here's the output. It's good, though not quite as impressive as the description I got from the slightly larger Qwen2.5-VL-32B.

I also tried it on a scanned (private) PDF of hand-written text with very good results, though it did misread one of the hand-written numbers.

llm-hacker-news. I built this new plugin to exercise the new register_fragment_loaders() plugin hook I added to LLM 0.24. It's the plugin equivalent of the Bash script I've been using to summarize Hacker News conversations for the past 18 months.

You can use it like this:

llm install llm-hacker-news

llm -f hn:43615912 'summary with illustrative direct quotes'

You can see the output in this issue.

The plugin registers a hn: prefix - combine that with the ID of a Hacker News conversation to pull that conversation into the context.

It uses the Algolia Hacker News API which returns JSON like this. Rather than feed the JSON directly to the LLM it instead converts it to a hopefully more LLM-friendly format that looks like this example from the plugin's test:

[1] BeakMaster: Fish Spotting Techniques

[1.1] CoastalFlyer: The dive technique works best when hunting in shallow waters.

[1.1.1] PouchBill: Agreed. Have you tried the hover method near the pier?

[1.1.2] WingSpan22: My bill gets too wet with that approach.

[1.1.2.1] CoastalFlyer: Try tilting at a 40° angle like our Australian cousins.

[1.2] BrownFeathers: Anyone spotted those "silver fish" near the rocks?

[1.2.1] GulfGlider: Yes! They're best caught at dawn.

Just remember: swoop > grab > lift

That format was suggested by Claude, which then wrote most of the plugin implementation for me. Here's that Claude transcript.
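The numbering scheme falls out of a short recursive walk over the comment tree. This sketch is not the plugin's code: it assumes each node is a dict with `author`, `children`, and either `title` (for the story) or `text` (for a comment), and it skips details such as stripping HTML from comment text. `render_thread` is a hypothetical name.

```python
def render_thread(item, prefix="1"):
    """Flatten a nested comment tree into '[1.2.1] author: text' lines."""
    body = item.get("title") or item.get("text") or ""
    lines = [f"[{prefix}] {item.get('author')}: {body}"]
    # Children are numbered from 1 and inherit the parent's prefix.
    for index, child in enumerate(item.get("children", []), start=1):
        lines.extend(render_thread(child, f"{prefix}.{index}"))
    return lines


# Hypothetical data shaped like the example above.
thread = {
    "author": "BeakMaster",
    "title": "Fish Spotting Techniques",
    "children": [
        {
            "author": "CoastalFlyer",
            "text": "The dive technique works best when hunting in shallow waters.",
            "children": [
                {"author": "PouchBill", "text": "Agreed.", "children": []},
            ],
        },
        {"author": "BrownFeathers", "text": "Anyone spotted those?", "children": []},
    ],
}
rendered = "\n".join(render_thread(thread))
```

The dotted prefixes let the model refer to replies by position without repeating the nested JSON structure.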

Long context support in LLM 0.24 using fragments and template plugins

LLM 0.24 is now available with new features to help take advantage of the increasingly long input context supported by modern LLMs.

[... 1,896 words]

Initial impressions of Llama 4

Dropping a model release as significant as Llama 4 on a weekend is plain unfair! So far the best place to learn about the new model family is this post on the Meta AI blog. They’ve released two new models today: Llama 4 Maverick is a 400B model (128 experts, 17B active parameters), text and image input with a 1 million token context length. Llama 4 Scout is 109B total parameters (16 experts, 17B active), also multi-modal and with a claimed 10 million token context length—an industry first.

[... 1,468 words]

Gemini 2.5 Pro Preview pricing (via) Google's Gemini 2.5 Pro is currently the top model on LM Arena and, from my own testing, a superb model for OCR, audio transcription and long-context coding.

You can now pay for it!

The new gemini-2.5-pro-preview-03-25 model ID is priced like this:

- Prompts less than 200,000 tokens: $1.25/million tokens for input, $10/million for output

- Prompts more than 200,000 tokens (up to the 1,048,576 max): $2.50/million for input, $15/million for output

This is priced at around the same level as Gemini 1.5 Pro ($1.25/$5 for input/output below 128,000 tokens, $2.50/$10 above 128,000 tokens), is cheaper than GPT-4o for shorter prompts ($2.50/$10) and is cheaper than Claude 3.7 Sonnet ($3/$15).

Gemini 2.5 Pro is a reasoning model, and invisible reasoning tokens are included in the output token count. I just tried prompting "hi" and it charged me 2 tokens for input and 623 for output, of which 613 were "thinking" tokens. That still adds up to just 0.6232 cents (less than a cent) using my LLM pricing calculator which I updated to support the new model just now.
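The arithmetic behind that figure, as a small sketch using the under-200,000-token prices listed above. `cost_in_cents` is a hypothetical helper, not the pricing calculator itself.

```python
def cost_in_cents(input_tokens, output_tokens,
                  input_per_million=1.25, output_per_million=10.0):
    """Price a single prompt in cents given per-million-token dollar rates."""
    dollars = (
        input_tokens * input_per_million / 1_000_000
        + output_tokens * output_per_million / 1_000_000
    )
    return dollars * 100


# 2 input tokens and 623 output tokens (thinking tokens count as output).
hi_cost = cost_in_cents(2, 623)
```

The two input tokens contribute $0.0000025 and the 623 output tokens contribute $0.00623, so the total is about 0.623 cents, almost all of it from the thinking tokens.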

I released llm-gemini 0.17 this morning adding support for the new model:

llm install -U llm-gemini

llm -m gemini-2.5-pro-preview-03-25 hi

Note that the model continues to be available for free under the previous gemini-2.5-pro-exp-03-25 model ID:

llm -m gemini-2.5-pro-exp-03-25 hi

The free tier is "used to improve our products", the paid tier is not.

Rate limits for the paid model vary by tier: 150/minute and 1,000/day for Tier 1 (billing configured), 1,000/minute and 50,000/day for Tier 2 ($250 total spend), and 2,000/minute and unlimited/day for Tier 3 ($1,000 total spend). Meanwhile the free tier continues to limit you to 5 requests per minute and 25 per day.

Google are retiring the Gemini 2.0 Pro preview entirely in favour of 2.5.

smartfunc. Vincent D. Warmerdam built this ingenious wrapper around my LLM Python library which lets you build LLM wrapper functions using a decorator and a docstring:

from smartfunc import backend

@backend("gpt-4o")
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""
    pass

summary = generate_summary(long_text)

It works with LLM plugins so the same pattern should work against Gemini, Claude and hundreds of others, including local models.

It integrates with more recent LLM features too, including async support and schemas, by introspecting the function signature:

class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]

@async_backend("gpt-4o-mini")
async def generate_poke_desc(text: str) -> Summary:
    "Describe the following pokemon: {{ text }}"
    pass

pokemon = await generate_poke_desc("pikachu")

Vincent also recorded a 12 minute video walking through the implementation and showing how it uses Pydantic, Python's inspect module and typing.get_type_hints() function.
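The core decorator pattern can be sketched with the standard library alone. This is an illustration of the idea, not smartfunc's implementation: `prompt_from_docstring` is a hypothetical name, the placeholder substitution uses a simple regular expression, and `send_prompt` stands in for a real model call.

```python
import inspect
import re
from functools import wraps


def prompt_from_docstring(send_prompt):
    """Decorator factory: send_prompt is any callable that takes a prompt string."""
    def decorator(func):
        signature = inspect.signature(func)
        template = inspect.getdoc(func) or ""

        @wraps(func)
        def wrapper(*args, **kwargs):
            # Map positional and keyword arguments onto parameter names.
            bound = signature.bind(*args, **kwargs)
            bound.apply_defaults()
            # Replace each {{ name }} placeholder with the matching argument.
            prompt = re.sub(
                r"\{\{\s*(\w+)\s*\}\}",
                lambda match: str(bound.arguments[match.group(1)]),
                template,
            )
            return send_prompt(prompt)

        return wrapper
    return decorator


# The "backend" here just echoes the prompt so the sketch runs offline.
@prompt_from_docstring(lambda prompt: prompt)
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""


echoed = generate_summary("pelicans")
```

The function body is never executed; the docstring and signature carry all the information, which is why the decorated functions in the examples above contain only `pass`.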