Posts tagged google in Feb


Gemini 2.0 Flash and Flash-Lite (via) Gemini 2.0 Flash-Lite is now generally available - previously it was available just as a preview - and Google have announced pricing. The model is $0.075/million input tokens and $0.30/million output - the same price as Gemini 1.5 Flash.

Google call this "simplified pricing" because 1.5 Flash charged a different per-token price depending on whether you used more than 128,000 tokens. 2.0 Flash-Lite and 2.0 Flash are each priced the same no matter how many tokens you use.

I released llm-gemini 0.12 with support for the new gemini-2.0-flash-lite model ID. I've also updated my LLM pricing calculator with the new prices.

Introducing Perplexity Deep Research. Perplexity have become the third company to release a product with "Deep Research" in the name.

- Google's Gemini Deep Research: Try Deep Research and our new experimental model in Gemini, your AI assistant - December 11th 2024

- OpenAI's ChatGPT Deep Research: Introducing deep research - February 2nd 2025

And now Perplexity Deep Research, announced on February 14th.

The three products all do effectively the same thing: you give them a task, they go out and accumulate information from a large number of different websites and then use long context models and prompting to turn the result into a report. All three of them take several minutes to return a result.

In my AI/LLM predictions post on January 10th I expressed skepticism at the idea of "agents", with the exception of coding and research specialists. I said:

It makes intuitive sense to me that this kind of research assistant can be built on our current generation of LLMs. They’re competent at driving tools, they’re capable of coming up with a relatively obvious research plan (look for newspaper articles and research papers) and they can synthesize sensible answers given the right collection of context gathered through search.

Google are particularly well suited to solving this problem: they have the world’s largest search index and their Gemini model has a 2 million token context. I expect Deep Research to get a whole lot better, and I expect it to attract plenty of competition.

Just over a month later I'm feeling pretty good about that prediction!

Gemini 2.0 is now available to everyone. Big new Gemini 2.0 releases today:

- Gemini 2.0 Pro (Experimental) is Google's "best model yet for coding performance and complex prompts" - currently available as a free preview.

- Gemini 2.0 Flash is now generally available.

- Gemini 2.0 Flash-Lite looks particularly interesting:

We’ve gotten a lot of positive feedback on the price and speed of 1.5 Flash. We wanted to keep improving quality, while still maintaining cost and speed. So today, we’re introducing 2.0 Flash-Lite, a new model that has better quality than 1.5 Flash, at the same speed and cost. It outperforms 1.5 Flash on the majority of benchmarks.

That means Gemini 2.0 Flash-Lite is priced at 7.5c/million input tokens and 30c/million output tokens - half the price of OpenAI's GPT-4o mini (15c/60c).

Gemini 2.0 Flash isn't much more expensive: 10c/million for text/image input, 70c/million for audio input, 40c/million for output. Again, cheaper than GPT-4o mini.
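To make that comparison concrete, here is a rough sketch of the arithmetic using only the per-million-token prices quoted above. The `PRICES` table, the `cost_in_cents` helper and the example token counts are illustrative assumptions, not part of any official SDK.

```python
# Prices in US cents per million tokens, as quoted above: (input, output).
# The Gemini 2.0 Flash entry uses the text/image input price; audio input costs more.
PRICES = {
    "gemini-2.0-flash-lite": (7.5, 30.0),
    "gemini-2.0-flash": (10.0, 40.0),
    "gpt-4o-mini": (15.0, 60.0),
}


def cost_in_cents(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in cents of one call at the quoted per-million prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 200,000 input tokens and 10,000 output tokens.
for name in PRICES:
    print(name, round(cost_in_cents(name, 200_000, 10_000), 2), "cents")
```

Because both the input and output prices of Flash-Lite are half those of GPT-4o mini, the total comes out at half the cost whatever the mix of input and output tokens.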

I pushed a new LLM plugin release, llm-gemini 0.10, adding support for the three new models:

    llm install -U llm-gemini
    llm keys set gemini
    # paste API key here
    llm -m gemini-2.0-flash "impress me"
    llm -m gemini-2.0-flash-lite-preview-02-05 "impress me"
    llm -m gemini-2.0-pro-exp-02-05 "impress me"

Here's the output for those three prompts.

I ran "Generate an SVG of a pelican riding a bicycle" through the three new models. Here are the results, cheapest to most expensive:

gemini-2.0-flash-lite-preview-02-05

gemini-2.0-flash

gemini-2.0-pro-exp-02-05

I also ran the same prompt I tried with o3-mini the other day:

    cd /tmp
    git clone https://github.com/simonw/datasette
    cd datasette
    files-to-prompt datasette -e py -c | \
      llm -m gemini-2.0-pro-exp-02-05 \
      -s 'write extensive documentation for how the permissions system works, as markdown' \
      -o max_output_tokens 10000

Here's the result from that - you can compare that to o3-mini's result here.

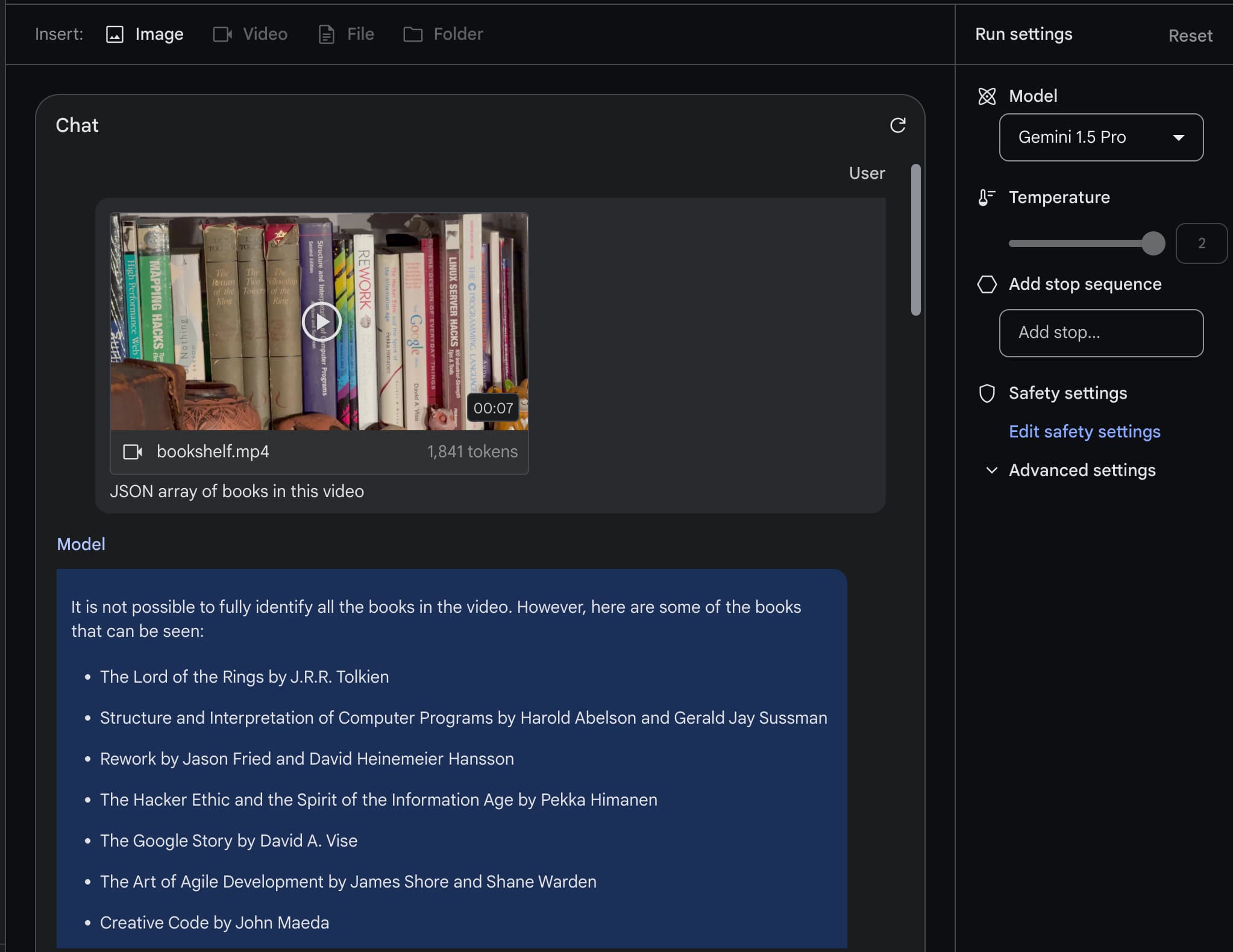

The killer app of Gemini Pro 1.5 is video

Last week Google introduced Gemini Pro 1.5, an enormous upgrade to their Gemini series of AI models.

[... 2,839 words]

Gemma: Introducing new state-of-the-art open models. Google get in on the openly licensed LLM game: Gemma comes in two sizes, 2B and 7B, trained on 2 trillion and 6 trillion tokens respectively. The terms of use “permit responsible commercial usage”. In the benchmarks it appears to compare favorably to Mistral and Llama 2.

Something that caught my eye in the terms: “Google may update Gemma from time to time, and you must make reasonable efforts to use the latest version of Gemma.”

One of the biggest benefits of running your own model is that it can protect you from model updates that break your carefully tested prompts, so I’m not thrilled by that particular clause.

UPDATE: It turns out that clause isn’t uncommon—the phrase “You shall undertake reasonable efforts to use the latest version of the Model” is present in both the Stable Diffusion and BigScience Open RAIL-M licenses.

Our next-generation model: Gemini 1.5 (via) The big news here is about context length: Gemini 1.5 (a Mixture-of-Experts model) will do 128,000 tokens in general release, is available in limited preview with a 1 million token context and has shown promising research results with 10 million tokens!

1 million tokens is 700,000 words or around 7 novels—also described in the blog post as an hour of video or 11 hours of audio.
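The ratios behind that estimate can be applied to the other context sizes mentioned above. This is a back-of-the-envelope sketch: the 0.7 words-per-token and 100,000 words-per-novel figures are simply the ones implied by "1 million tokens is 700,000 words or around 7 novels", and real tokenizers vary by language and content.

```python
WORDS_PER_TOKEN = 0.7      # implied by "1 million tokens is 700,000 words"
WORDS_PER_NOVEL = 100_000  # implied by "700,000 words or around 7 novels"


def tokens_to_words(tokens: int) -> int:
    """Estimate how many words fit in a context window of this many tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_novels(tokens: int) -> float:
    """Estimate the same capacity in novel-length books."""
    return tokens_to_words(tokens) / WORDS_PER_NOVEL


for context in (128_000, 1_000_000, 10_000_000):
    print(context, "tokens ~", tokens_to_words(context), "words ~",
          tokens_to_novels(context), "novels")
```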

One consideration is that such a deep ML system could well be developed outside of Google-- at Microsoft, Baidu, Yandex, Amazon, Apple, or even a startup. My impression is that the Translate team experienced this. Deep ML reset the translation game; past advantages were sort of wiped out. Fortunately, Google's huge investment in deep ML largely paid off, and we excelled in this new game. Nevertheless, our new ML-based translator was still beaten on benchmarks by a small startup. The risk that Google could similarly be beaten in relevance by another company is highlighted by a startling conclusion from BERT: huge amounts of user feedback can be largely replaced by unsupervised learning from raw text. That could have heavy implications for Google.

— Eric Lehman, internal Google email in 2018

Google’s Gemini Advanced: Tasting Notes and Implications. Ethan Mollick reviews the new Google Gemini Advanced, a rebranded Bard released today that runs on the GPT-4-competitive Gemini Ultra model.

“GPT-4 [...] has been the dominant AI for well over a year, and no other model has come particularly close. Prior to Gemini, we only had one advanced AI model to look at, and it is hard drawing conclusions with a dataset of one. Now there are two, and we can learn a few things.”

I like Ethan’s use of the term “tasting notes” here. Reminds me of how Matt Webb talks about being a language model sommelier.

Why Google invested in providing Google Fonts for free. Fascinating comment from former Google Fonts team member Raph Levien. In short: text rendered as PNGs hurt Google Search, the lack of web fonts was delaying the transition away from Flash, Google Docs needed them to better compete with Office and anything that helps create better ads is easy to find funding for.

Discussion about Altavista on Hacker News. Fascinating thread on Hacker News where Bryant Durrell, a former Director at Altavista, provides some insider thoughts on how they lost to Google.

Googlebot’s Javascript random() function is deterministic. random() as executed by Googlebot returns the same predictable sequence. More interestingly, Googlebot runs a much faster timer for setTimeout and setInterval. As Tom Anthony points out, “Why actually wait 5 seconds when you are a bot?”
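For anyone unsure what "deterministic" means for a random number generator, here is a small Python illustration. It says nothing about how Googlebot is actually implemented: the seed value and the `first_values` helper are invented for the example, which only shows that a pseudo-random generator started from the same state produces the same sequence every time.

```python
import random


def first_values(seed: int, count: int = 3) -> list[float]:
    """Return the first few values from a generator started with a fixed seed."""
    rng = random.Random(seed)  # a private generator, independent of global state
    return [rng.random() for _ in range(count)]


run_one = first_values(seed=42)
run_two = first_values(seed=42)

# Same starting state, same "random" numbers on every run.
print(run_one == run_two)
```

A page that relies on random() for something like cache-busting or A/B bucketing would see identical values on every visit from a crawler that behaves this way.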

Aside from Google I/O, does Google organize any other conferences?

They run a whole bunch, but many of them aren’t widely advertised—they have a lot of invite-only events for customers of their advertising tools, for example, and there are things like the Google Analytics Summit.

[... 95 words]

How can I sort a huge amount of numbers?

Sorting large amounts of data is one of the first exercises you’ll see described in any Hadoop or map/reduce tutorial—so I’d suggest taking a look at Hadoop.
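The core idea behind those tutorials can be sketched in a single Python process. This is not Hadoop code: the `partition` and `sort_partitioned` helpers and the boundary values are hypothetical, and a real job would run each bucket on a separate machine. The sketch routes every number to a bucket covering a range, sorts the buckets independently, then concatenates them.

```python
import bisect


def partition(numbers, boundaries):
    """Map step: route each number to the bucket covering its range.

    With boundaries [100, 200] the buckets hold values below 100,
    values from 100 up to 199, and values of 200 and above.
    """
    buckets = [[] for _ in range(len(boundaries) + 1)]
    for number in numbers:
        buckets[bisect.bisect_right(boundaries, number)].append(number)
    return buckets


def sort_partitioned(numbers, boundaries):
    """Reduce step: sort each bucket on its own, then concatenate in order."""
    sorted_buckets = [sorted(bucket) for bucket in partition(numbers, boundaries)]
    # Buckets cover increasing ranges, so joining them gives a global sort.
    return [number for bucket in sorted_buckets for number in bucket]


print(sort_partitioned([512, 3, 250, 100, 99, 200, 7], [100, 200]))
```

Because no bucket needs to see any other bucket's data, the per-bucket sorts can run in parallel, which is what makes the approach suitable for data that does not fit on one machine.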

[... 44 words]

If you missed out on joining to work at Google and Facebook, what should you do?

Remind yourself that there will always be more opportunities, and obsessing over what might have been is a huge waste of your time.

[... 59 words]

Why does Google use “Allow” in robots.txt, when the standard seems to be “Disallow?”

The Disallow command prevents search engines from crawling your site.
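A common use of Allow is to carve an exception out of a broader Disallow rule. The sketch below checks that behaviour with Python's standard `urllib.robotparser`. The robots.txt content, the example.com URLs and the `allowed` helper are made up for illustration. The Allow line is listed first so that parsers applying the first matching rule and parsers applying the most specific rule reach the same answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block /private/ but permit one page inside it.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path: str) -> bool:
    """Return True if any crawler ("*") may fetch this path on example.com."""
    return parser.can_fetch("*", "https://example.com" + path)


for path in ("/index.html", "/private/notes.html", "/private/press-kit.html"):
    print(path, allowed(path))
```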

[... 59 words]

Is a relational database with many-to-many relationships difficult to develop into a web app?

Many to Many tables can be a bit of a pain to deal with using regular SQL, but a good ORM can abstract away any potential complexity almost entirely. I find using the Django ORM means I’m much less likely to shy away from a design that involves a many-to-many relationship because I know it won’t increase the complexity of the application. I imagine the Rails ORM has the same effect.
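To show what the "regular SQL" side of that looks like, here is a minimal sketch using Python's built-in `sqlite3` module. The articles/tags schema, the sample rows and the `titles_for_tag` helper are invented for the example. The point is the junction table and the double join it requires, which is the part an ORM such as Django's (via a `ManyToManyField`) generates for you.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT);
-- The junction table: one row per (article, tag) pairing.
CREATE TABLE article_tags (
    article_id INTEGER REFERENCES articles(id),
    tag_id INTEGER REFERENCES tags(id),
    PRIMARY KEY (article_id, tag_id)
);
INSERT INTO articles VALUES (1, 'Gemini pricing'), (2, 'OpenSearch in five minutes');
INSERT INTO tags VALUES (1, 'google'), (2, 'llms'), (3, 'search');
INSERT INTO article_tags VALUES (1, 1), (1, 2), (2, 1), (2, 3);
""")


def titles_for_tag(tag_name):
    """Return the titles of every article carrying the given tag."""
    rows = conn.execute(
        """
        SELECT articles.title
        FROM articles
        JOIN article_tags ON article_tags.article_id = articles.id
        JOIN tags ON tags.id = article_tags.tag_id
        WHERE tags.name = ?
        ORDER BY articles.id
        """,
        (tag_name,),
    ).fetchall()
    return [title for (title,) in rows]


print(titles_for_tag("google"))
```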

[... 91 words]

Google Image Charts: Mathematical (TeX) Formulas (via) I’m not sure when they added this, but you can now use the Google Charts Image API to render mathematical formulas, specified using TeX syntax. Wordpress.com and Wikipedia have both offered this feature for quite a while, but now you can use it anywhere on the Web.

WARNING: Google Buzz Has A Huge Privacy Flaw. Interesting one this: by default, Buzz creates a public profile for you that lists the people you follow—but your default set of followers is derived from the people you contact most frequently using Gmail. This means users of Buzz may inadvertently reveal their most frequent contacts, which is an issue for people like journalists with anonymous sources, unhappy employees seeking new work or even people having e-mail based affairs.

Map Maker for Developers. Tiles from Google’s Map Maker crowdsourcing effort are now available in the JS and static maps APIs on an opt-in basis. Maybe I’m misunderstanding something here, but Google Map Maker seems like a big step backwards for open geographic data. People donate their mapping efforts to Google, who keep them—unlike OpenStreetMap, where the donated efforts are made available under a Creative Commons license.

Write to a Google Spreadsheet from a Python script. I didn’t know Google Spreadsheets could directly serve dynamic images that automatically update when the underlying data changes.

Google App Engine 1.1.9 boosts capacity and compatibility. Niall summarises the recent changes to App Engine. urllib and urllib2 support plus massively increased upload limits and request duration quotas will make it a whole lot easier to deploy serious projects on the platform.

Specify your canonical. You can now use a link rel=“canonical” to tell Google that a page has a canonical URL elsewhere. I’ve run into this problem a bunch of times (in some sites it really does make sense to have the same content shown in two different places) and this seems like a neat solution that could apply to much more than just metadata for external search engines.
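As an illustration of how any tool, not just a search engine, could consume this hint, here is a small sketch that pulls the canonical URL out of a page using Python's standard `html.parser`. The `CanonicalFinder` class, the `find_canonical` helper and the example.com markup are all hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> element seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel can hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the canonical URL declared in the page, or None if there isn't one."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


PAGE = """
<html><head>
<title>Same article, second location</title>
<link rel="canonical" href="https://example.com/2009/feb/article/">
</head><body>...</body></html>
"""
print(find_canonical(PAGE))
```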

Plaxo sees 92% success rate with OpenID/OAuth hybrid method. Really wish I could have been at the OpenID UX Summit hosted by Facebook yesterday—sounds like an awful lot of important problems are being solved.

Yahoo! Query Language thoughts. An engineer on Google’s App Engine provides an expert review of Yahoo!’s YQL. I found this more useful than the official documentation.

Google App Engine: A roadmap update! Receiving e-mail, background tasks and XMPP. I predict a bunch of sites will start building small parts of their overall functionality on App Engine when some of these features land (much easier than hosting your own custom XMPP server).

Recreating the button. Fascinating article from Doug Bowman on the work that went into creating custom CSS buttons for use across Google’s different applications, avoiding images to improve performance and ensure they could be easily styled using just CSS. I’d love to see the Google Code team turn this into a full open source release: the more sites using these buttons, the more familiar they will become to users at large.

Post-Commit Web Hooks for Google Code Project Hosting (via) I really, really like web hooks (which I’ve been calling “callback APIs”, but it looks like “web hooks” is the term that’s sticking). I’m interested in their scaling challenges—I’ve heard XMPP advocates argue that a web hook style model simply won’t scale for really large sites.
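For readers new to the pattern, a web hook receiver is just an HTTP endpoint that accepts a POST whenever something happens on the sending side. The sketch below uses only Python's standard library. The JSON payload shape, the `handle_hook` and `serve` helpers and the port number are assumptions for illustration, and it does not reproduce the actual payload format or authentication used by Google Code.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # payloads seen so far; a real receiver might trigger a build


def handle_hook(body: bytes) -> dict:
    """Decode one hook delivery and record it."""
    payload = json.loads(body)
    received.append(payload)
    return payload


class HookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes the sender declared.
        length = int(self.headers.get("Content-Length", 0))
        handle_hook(self.rfile.read(length))
        self.send_response(204)  # acknowledge with an empty response
        self.end_headers()


def serve(port: int = 8000):
    """Run the receiver until interrupted (not called automatically here)."""
    HTTPServer(("127.0.0.1", port), HookHandler).serve_forever()


# Simulate a delivery without starting the server.
print(handle_hook(b'{"project": "example", "revision": 42}'))
```

The scaling concern is visible even in this toy: the sender has to make one outbound HTTP request per event per subscriber, and has to decide what to do when a receiver is slow or down.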

Social Graph API. This is freaking awesome. Input one or more URLs to your profile pages and it returns a huge dump of crawled relationship data, based on XFN, FOAF and OpenID links. No API key required and it supports JSON callbacks so you can incorporate it into a site without even needing to write any extra server-side code.

The bright side: web spam is an evolutionary force that pushes relevance innovations such as trustrank forward. Spam created the market opportunity for Google, when Altavista succumbed in 97-98. Search startups should be praying to the spam gods for a second opportunity.

Add OpenSearch to your site in five minutes. OpenSearch is easy. DeWitt demonstrates how you don’t even need a site search engine to implement it if you take advantage of Google’s site: operator.
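A rough sketch of what such an OpenSearch description document might look like, generated with Python's standard `xml.etree.ElementTree`. The element names and namespace come from the OpenSearch 1.1 format. The `opensearch_description` helper, the example.com domain and the exact Google URL template are assumptions and may differ from what DeWitt's article uses.

```python
import xml.etree.ElementTree as ET

OPENSEARCH_NS = "http://a9.com/-/spec/opensearch/1.1/"


def opensearch_description(short_name: str, description: str, domain: str) -> str:
    """Build a description document that delegates search to Google's site: operator."""
    ET.register_namespace("", OPENSEARCH_NS)  # serialize with a default xmlns
    root = ET.Element("{%s}OpenSearchDescription" % OPENSEARCH_NS)
    ET.SubElement(root, "{%s}ShortName" % OPENSEARCH_NS).text = short_name
    ET.SubElement(root, "{%s}Description" % OPENSEARCH_NS).text = description
    ET.SubElement(root, "{%s}Url" % OPENSEARCH_NS, {
        "type": "text/html",
        # {searchTerms} is replaced by the browser with the user's query.
        "template": "https://www.google.com/search?q=site:%s+{searchTerms}" % domain,
    })
    return ET.tostring(root, encoding="unicode")


print(opensearch_description("Example", "Search example.com", "example.com"))
```

The resulting file is then advertised from each page with a `<link rel="search" type="application/opensearchdescription+xml">` element pointing at it, so browsers can offer the site as a search provider.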