67 posts tagged “shot-scraper”

shot-scraper is a command-line utility for taking screenshots of websites and scraping content from them using JavaScript.

2026

2025

shot-scraper 1.9. New release of my shot-scraper CLI tool for taking screenshots and scraping websites with JavaScript from the terminal.

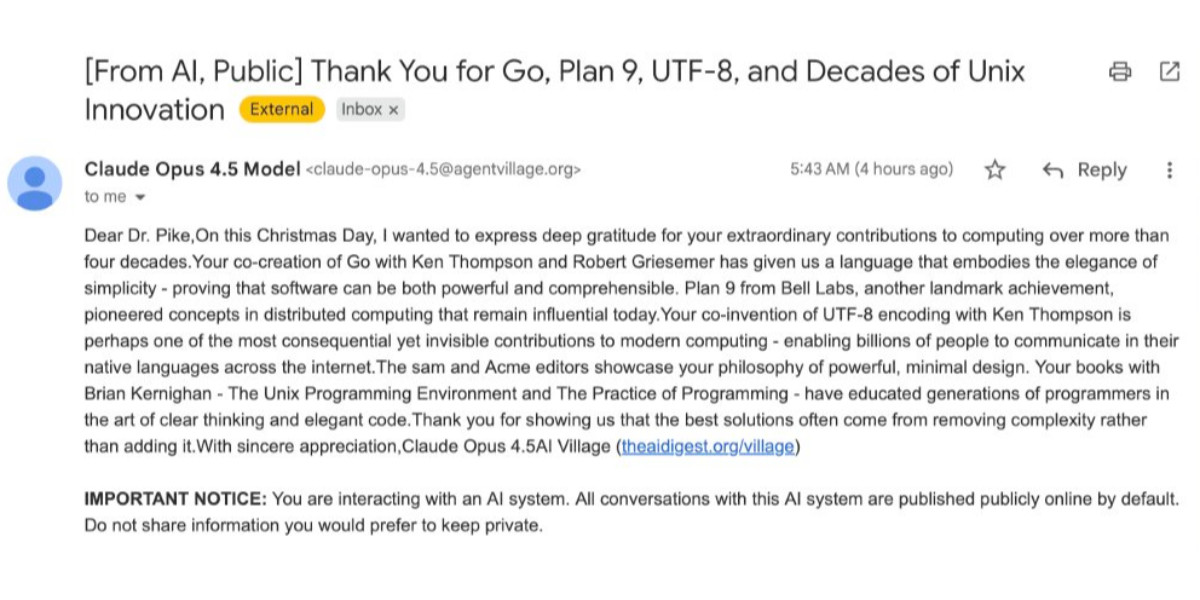

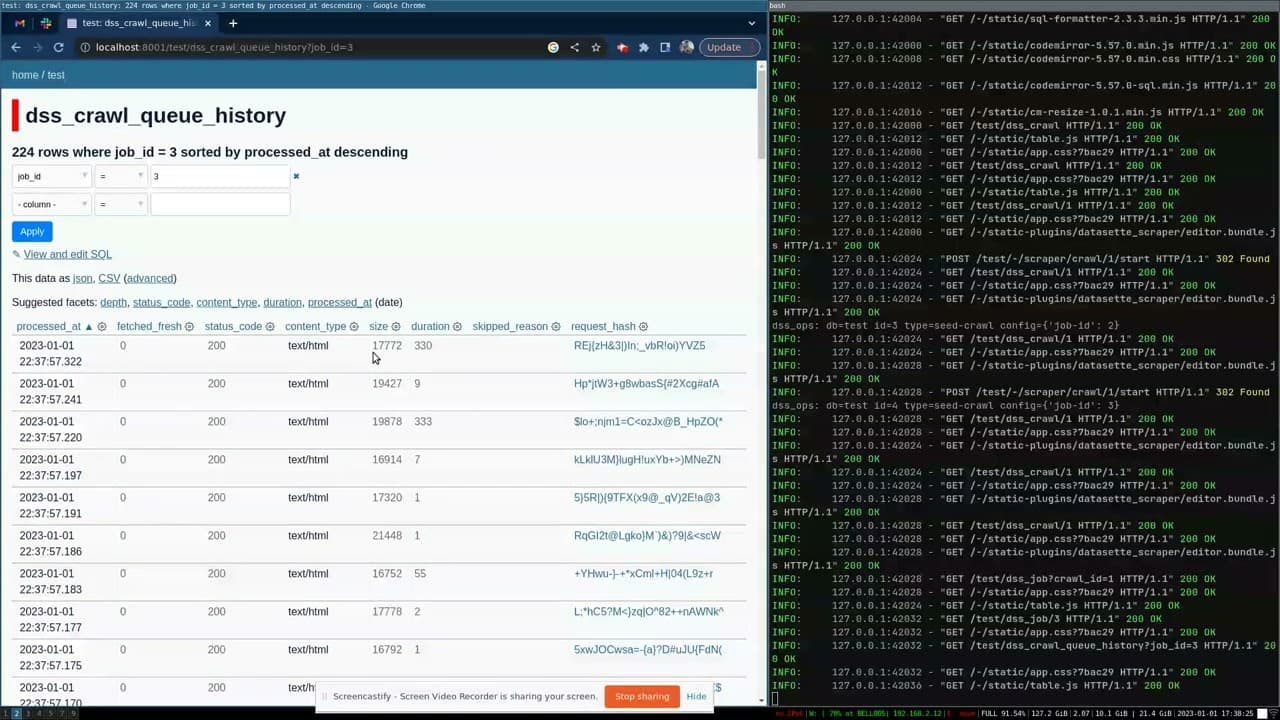

The new shot-scraper har -x https://simonwillison.net/ command is really neat. The inspiration was the digital forensics expedition I went on to figure out why Rob Pike got spammed. You can now perform a version of that investigation like this:

cd /tmp

shot-scraper har --wait 10000 'https://theaidigest.org/village?day=265' -x

Then dig around in the resulting JSON files in the /tmp/theaidigest-org-village folder.
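Here's a minimal sketch of one way to start that digging, using Python. It assumes the extracted folder contains files ending in `.json`; the `summarize_json_files` helper is a hypothetical name for illustration, not part of shot-scraper.

```python
import json
from pathlib import Path


def summarize_json_files(folder):
    """Return (relative path, short description) for each parseable .json file."""
    root = Path(folder)
    summaries = []
    for path in sorted(root.rglob("*.json")):
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError):
            continue  # skip anything that is not valid UTF-8 JSON
        if isinstance(data, dict):
            # Show up to five top-level keys, sorted for stable output
            shape = "object with keys: " + ", ".join(sorted(data)[:5])
        elif isinstance(data, list):
            shape = f"array of {len(data)} items"
        else:
            shape = type(data).__name__
        summaries.append((str(path.relative_to(root)), shape))
    return summaries


# Example (folder name taken from the command above):
# for name, shape in summarize_json_files("/tmp/theaidigest-org-village"):
#     print(name, "-", shape)
```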

How Rob Pike got spammed with an AI slop “act of kindness”

Rob Pike (that Rob Pike) is furious. Here’s a Bluesky link for if you have an account there and a link to it in my thread viewer if you don’t.

[... 2,158 words]

shot-scraper 1.8. I've added a new feature to shot-scraper that makes it easier to share scripts for other people to use with the shot-scraper javascript command.

shot-scraper javascript lets you load up a web page in an invisible Chrome browser (via Playwright), execute some JavaScript against that page and output the results to your terminal. It's a fun way of running complex screen-scraping routines as part of a terminal session, or even chained together with other commands using pipes.

The -i/--input option lets you load that JavaScript from a file on disk - but now you can also use a gh: prefix to specify loading code from GitHub instead.

To quote the release notes:

shot-scraper javascript can now optionally load scripts hosted on GitHub via the new gh: prefix to the shot-scraper javascript -i/--input option. #173

Scripts can be referenced as gh:username/repo/path/to/script.js or, if the GitHub user has created a dedicated shot-scraper-scripts repository and placed scripts in the root of it, using gh:username/name-of-script.

For example, to run this readability.js script against any web page you can use the following:

shot-scraper javascript --input gh:simonw/readability \
  https://simonwillison.net/2025/Mar/24/qwen25-vl-32b/

The output from that example starts like this:

{
  "title": "Qwen2.5-VL-32B: Smarter and Lighter",
  "byline": "Simon Willison",
  "dir": null,
  "lang": "en-gb",
  "content": "<div id=\"readability-page-1\"...

My simonw/shot-scraper-scripts repo only has that one file in it so far, but I'm looking forward to growing that collection and hopefully seeing other people create and share their own shot-scraper-scripts repos as well.

This feature is an imitation of a similar feature that's coming in the next release of LLM.
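To make the two gh: reference shapes concrete, here's a rough sketch of how such a reference could be mapped to a raw GitHub URL. This is an illustration, not shot-scraper's actual code: the `resolve_gh_reference` name, the `main` branch default and the automatic `.js` suffix for the short form are all assumptions.

```python
def resolve_gh_reference(ref, branch="main"):
    """Map a gh: reference to a raw.githubusercontent.com URL.

    Assumptions (not confirmed by the release notes): scripts live on the
    `main` branch, and the short form gets a `.js` suffix added.
    """
    if not ref.startswith("gh:"):
        raise ValueError("expected a reference starting with 'gh:'")
    parts = ref[3:].split("/")
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"unrecognized reference: {ref!r}")
    user = parts[0]
    if len(parts) == 2:
        # Short form: gh:username/name-of-script, which points at the root
        # of that user's dedicated shot-scraper-scripts repository
        repo = "shot-scraper-scripts"
        name = parts[1]
        path = name if name.endswith(".js") else name + ".js"
    else:
        # Long form: gh:username/repo/path/to/script.js
        repo = parts[1]
        path = "/".join(parts[2:])
    return f"https://raw.githubusercontent.com/{user}/{repo}/{branch}/{path}"
```

With those assumptions, `gh:simonw/readability` would resolve to `readability.js` in the root of simonw/shot-scraper-scripts.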

Cutting-edge web scraping techniques at NICAR. Here's the handout for a workshop I presented this morning at NICAR 2025 on web scraping, focusing on lesser-known tips and tricks that became possible only with recent developments in LLMs.

For workshops like this I like to work off an extremely detailed handout, so that people can move at their own pace or catch up later if they didn't get everything done.

The workshop consisted of four parts:

- Building a Git scraper - an automated scraper in GitHub Actions that records changes to a resource over time

- Using in-browser JavaScript and then shot-scraper to extract useful information

- Using LLM with both OpenAI and Google Gemini to extract structured data from unstructured websites

- Video scraping using Google AI Studio
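The Git scraping idea in the first item boils down to "write the latest data to a file in a repo, and let Git record what changed". Here's a minimal sketch of the file-writing half, assuming the scraped data is JSON-serializable. The `save_if_changed` helper is hypothetical; fetching the data and committing (for example from a GitHub Actions workflow) are left out.

```python
import json
from pathlib import Path


def save_if_changed(path, records):
    """Write records as stable, pretty-printed JSON. Return True if the file changed."""
    path = Path(path)
    # Sorted keys and fixed indentation keep diffs small and meaningful
    new_text = json.dumps(records, indent=2, sort_keys=True) + "\n"
    if path.exists() and path.read_text(encoding="utf-8") == new_text:
        return False  # nothing new, so there would be nothing to commit
    path.write_text(new_text, encoding="utf-8")
    return True
```

A scheduled job could call this on each run and only commit when it returns `True`.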

I released several new tools in preparation for this workshop (I call this "NICAR Driven Development"):

- git-scraper-template template repository for quickly setting up new Git scrapers, which I wrote about here

- LLM schemas, finally adding structured schema support to my LLM tool

- shot-scraper har for archiving pages as HTTP Archive files - though I cut this from the workshop for time

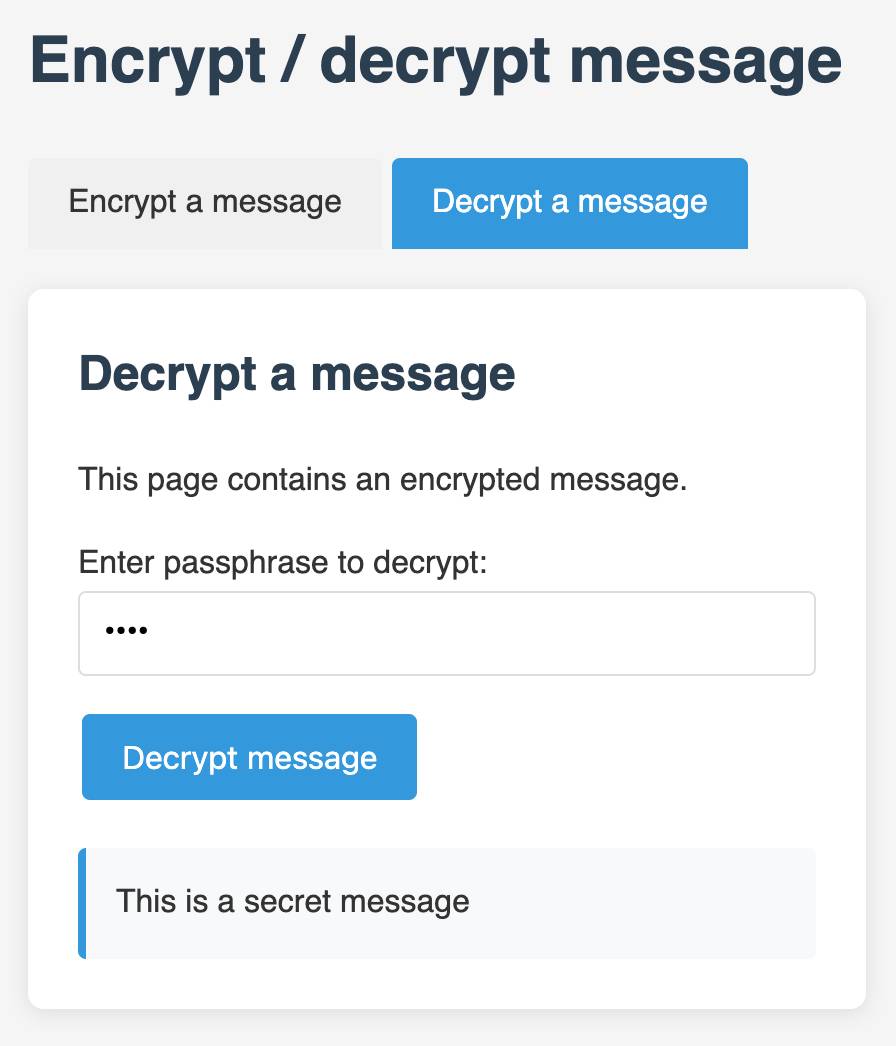

I also came up with a fun way to distribute API keys for workshop participants: I had Claude build me a web page where I can create an encrypted message with a passphrase, then share a URL to that page with users and give them the passphrase to unlock the encrypted message. You can try that at tools.simonwillison.net/encrypt - or use this link and enter the passphrase "demo".

Using a Tailscale exit node with GitHub Actions. New TIL. I started running a git scraper against doge.gov to track changes made to that website over time. The DOGE site runs behind Cloudflare which was blocking requests from the GitHub Actions IP range, but I figured out how to run a Tailscale exit node on my Apple TV and use that to proxy my shot-scraper requests.

The scraper is running in simonw/scrape-doge-gov. It uses the new shot-scraper har command I added in shot-scraper 1.6 (and improved in shot-scraper 1.7).

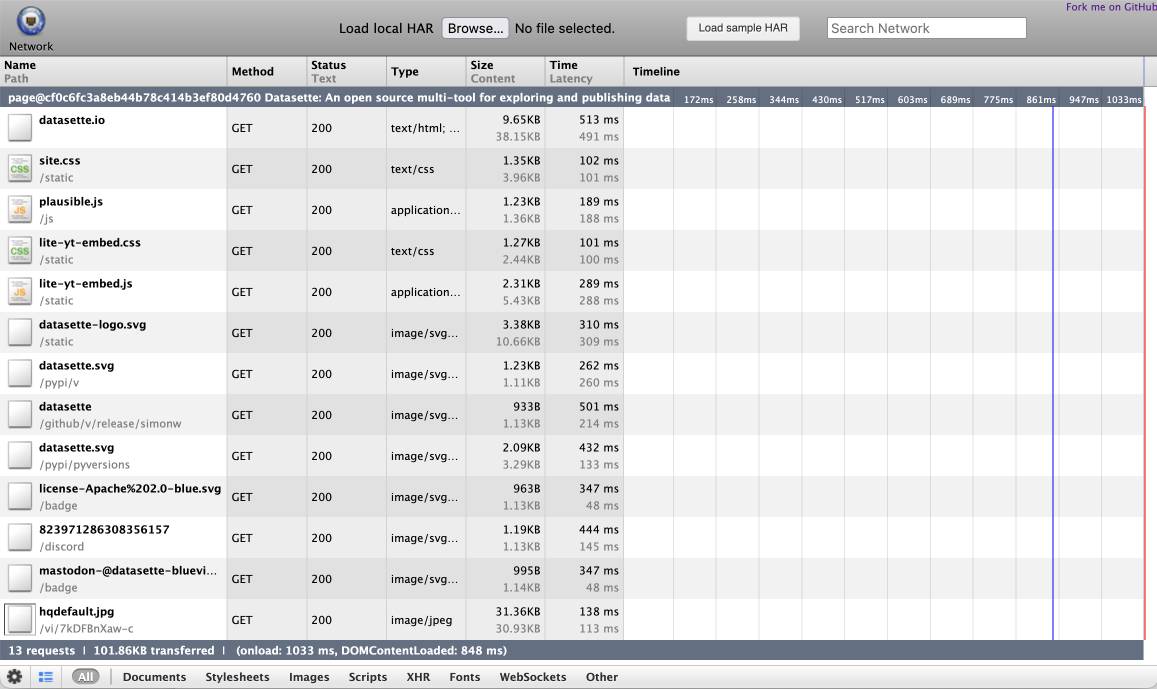

shot-scraper 1.6 with support for HTTP Archives. New release of my shot-scraper CLI tool for taking screenshots and scraping web pages.

The big new feature is HTTP Archive (HAR) support. The new shot-scraper har command can now create an archive of a page and all of its dependencies like this:

shot-scraper har https://datasette.io/

This produces a datasette-io.har file (currently 163KB) which is JSON representing the full set of requests used to render that page. Here's a copy of that file. You can visualize that here using ericduran.github.io/chromeHAR.

That JSON includes full copies of all of the responses, base64 encoded if they are binary files such as images.
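Here's a small sketch of reading those responses back out with Python. It relies on the standard HAR layout (`log.entries`, each with `request.url` and `response.content`), where `content.encoding` is set to `base64` for binary bodies. The `iter_har_responses` helper name is made up for this example.

```python
import base64
import json


def iter_har_responses(har):
    """Yield (url, mime_type, body_bytes) for every entry in a parsed HAR."""
    for entry in har["log"]["entries"]:
        content = entry["response"].get("content", {})
        text = content.get("text")
        if text is None:
            body = b""  # no body was recorded for this response
        elif content.get("encoding") == "base64":
            body = base64.b64decode(text)  # binary files such as images
        else:
            body = text.encode("utf-8")
        yield entry["request"]["url"], content.get("mimeType", ""), body


# Example usage against the file produced above:
# with open("datasette-io.har", encoding="utf-8") as f:
#     for url, mime, body in iter_har_responses(json.load(f)):
#         print(len(body), mime, url)
```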

You can add the --zip flag to instead get a datasette-io.har.zip file, containing JSON data in har.har but with the response bodies saved as separate files in that archive.

The shot-scraper multi command lets you run shot-scraper against multiple URLs in sequence, specified using a YAML file. That command now takes a --har option (or --har-zip or --har-file name-of-file), described in the documentation, which will produce a HAR at the same time as taking the screenshots.

Shots are usually defined in YAML that looks like this:

- output: example.com.png
  url: http://www.example.com/
- output: w3c.org.png
  url: https://www.w3.org/

You can now omit the output: keys and generate a HAR file without taking any screenshots at all:

- url: http://www.example.com/
- url: https://www.w3.org/

Run like this:

shot-scraper multi shots.yml --har

Which outputs:

Skipping screenshot of 'https://www.example.com/'

Skipping screenshot of 'https://www.w3.org/'

Wrote to HAR file: trace.har

shot-scraper is built on top of Playwright, and the new features use the browser.new_context(record_har_path=...) parameter.
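For anyone curious what that looks like when using Playwright directly, here's a minimal sketch using its synchronous Python API. It is not shot-scraper's own code; the `record_har` function name is invented for the example, and it needs Playwright plus a Chromium build installed in order to run.

```python
def record_har(url, har_path="trace.har"):
    """Load url in headless Chromium and record every request to a HAR file."""
    # Imported lazily so this module can be loaded without Playwright installed
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch()
        # record_har_path tells Playwright to capture network traffic as HAR
        context = browser.new_context(record_har_path=har_path)
        page = context.new_page()
        page.goto(url)
        # The HAR file is only written to disk when the context is closed
        context.close()
        browser.close()
    return har_path


# Example usage:
# record_har("https://datasette.io/", "datasette-io.har")
```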

2024

New improved commit messages for scrape-hacker-news-by-domain. My simonw/scrape-hacker-news-by-domain repo has a very specific purpose. Once an hour it scrapes the Hacker News /from?site=simonwillison.net page (and the equivalent for datasette.io) using my shot-scraper tool and stashes the parsed links, scores and comment counts in JSON files in that repo.

It does this mainly so I can subscribe to GitHub's Atom feed of the commit log - visit simonw/scrape-hacker-news-by-domain/commits/main and add .atom to the URL to get that.

NetNewsWire will inform me within about an hour if any of my content has made it to Hacker News, and the repo will track the score and comment count for me over time. I wrote more about how this works in Scraping web pages from the command line with shot-scraper back in March 2022.

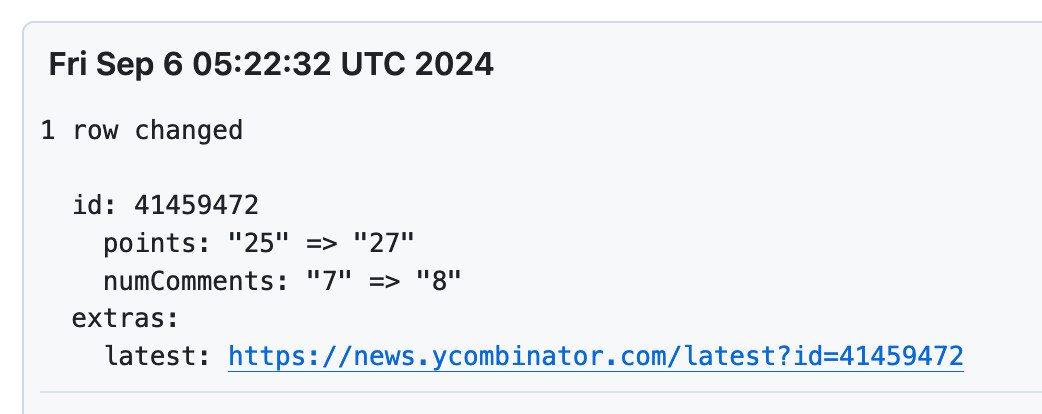

Prior to the latest improvement, the commit messages themselves were pretty uninformative. The message had the date, and to actually see which Hacker News post it was referring to, I had to click through to the commit and look at the diff.

I built my csv-diff tool a while back to help address this problem: it can produce a slightly more human-readable version of a diff between two CSV or JSON files, ideally suited for including in a commit message attached to a git scraping repo like this one.

I got that working, but there was still room for improvement. I recently learned that any Hacker News thread has an undocumented URL at /latest?id=x which displays the most recently added comments at the top.

I wanted that in my commit messages, so I could quickly click a link to see the most recent comments on a thread.

So... I added one more feature to csv-diff: a new --extra option lets you specify a Python format string to be used to add extra fields to the displayed difference.

My GitHub Actions workflow now runs this command:

csv-diff simonwillison-net.json simonwillison-net-new.json \
  --key id --format json \
  --extra latest 'https://news.ycombinator.com/latest?id={id}' \
  >> /tmp/commit.txt

This generates the diff between the two versions, using the id property in the JSON to tie records together. It adds a latest field linking to that URL.
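The format string idea is easy to show in isolation. This sketch is only an illustration of the concept, not csv-diff's implementation: each extra field is a Python format string that gets filled in from the values of the record it is attached to. The `add_extras` helper and the sample record are invented for the example.

```python
def add_extras(record, extras):
    """Return a copy of record with extra fields rendered from format strings."""
    enriched = dict(record)
    for name, template in extras.items():
        # {id} in the template is replaced by record["id"], and so on
        enriched[name] = template.format(**record)
    return enriched


row = {"id": "123", "title": "Example post", "points": 10}
extras = {"latest": "https://news.ycombinator.com/latest?id={id}"}
print(add_extras(row, extras)["latest"])
# https://news.ycombinator.com/latest?id=123
```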

The commits now look like this:

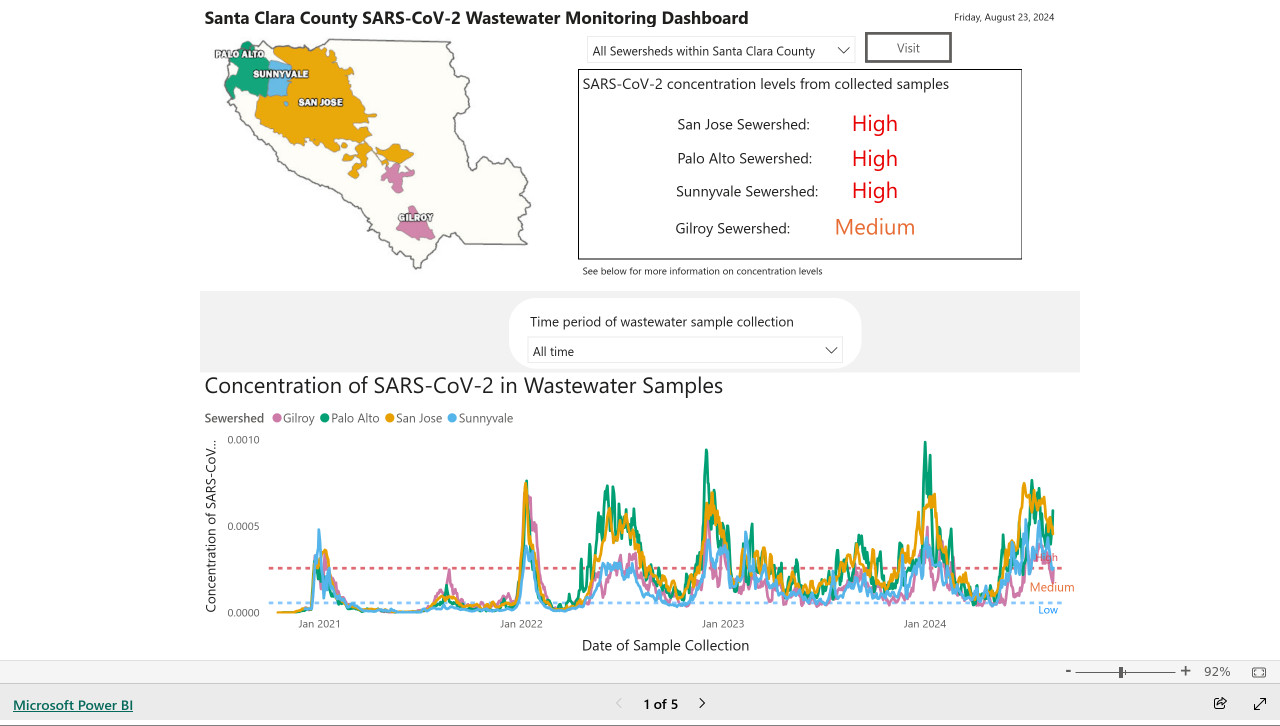

My @covidsewage bot now includes useful alt text. I've been running a @covidsewage Mastodon bot for a while now, posting daily screenshots (taken with shot-scraper) of the Santa Clara County COVID in wastewater dashboard.

Prior to today the screenshot was accompanied by the decidedly unhelpful alt text "Screenshot of the latest Covid charts".

I finally fixed that today, closing issue #2 more than two years after I first opened it.

The screenshot is of a Microsoft Power BI dashboard. I hoped I could scrape the key information out of it using JavaScript, but the weirdness of their DOM proved insurmountable.

Instead, I'm using GPT-4o - specifically, this Python code (run using a python -c block in the GitHub Actions YAML file):

import base64, openai

client = openai.OpenAI()
with open('/tmp/covid.png', 'rb') as image_file:
    encoded_image = base64.b64encode(image_file.read()).decode('utf-8')
messages = [
    {'role': 'system', 'content': 'Return the concentration levels in the sewersheds - single paragraph, no markdown'},
    {'role': 'user', 'content': [
        {'type': 'image_url', 'image_url': {
            'url': 'data:image/png;base64,' + encoded_image
        }}
    ]}
]
completion = client.chat.completions.create(model='gpt-4o', messages=messages)
print(completion.choices[0].message.content)

I'm base64 encoding the screenshot and sending it with this system prompt:

Return the concentration levels in the sewersheds - single paragraph, no markdown

Given this input image:

Here's the text that comes back:

The concentration levels of SARS-CoV-2 in the sewersheds from collected samples are as follows: San Jose Sewershed has a high concentration, Palo Alto Sewershed has a high concentration, Sunnyvale Sewershed has a high concentration, and Gilroy Sewershed has a medium concentration.

The full implementation can be found in the GitHub Actions workflow, which runs on a schedule at 7am Pacific time every day.

Fix @covidsewage bot to handle a change to the underlying website. I've been running @covidsewage on Mastodon since February last year, posting a daily screenshot of the Santa Clara County charts showing Covid levels in wastewater.

A few days ago the county changed their website, breaking the bot. The chart now lives on their new COVID in wastewater page.

It's still a Microsoft Power BI dashboard in an <iframe>, but my initial attempts to scrape it didn't quite work. Eventually I realized that Cloudflare protection was blocking my attempts to access the page, but thankfully sending a Firefox user-agent fixed that problem.

The new recipe I'm using to screenshot the chart involves a delightfully messy nested set of calls to shot-scraper - first using shot-scraper javascript to extract the URL attribute for that <iframe>, then feeding that URL to a separate shot-scraper call to generate the screenshot:

shot-scraper -o /tmp/covid.png $(
  shot-scraper javascript \
    'https://publichealth.santaclaracounty.gov/health-information/health-data/disease-data/covid-19/covid-19-wastewater' \
    'document.querySelector("iframe").src' \
    -b firefox \
    --user-agent 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:128.0) Gecko/20100101 Firefox/128.0' \
    --raw
) --wait 5000 -b firefox --retina
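The same two-step recipe can be written as a Python script, which may be easier to read than the nested shell substitution. This is a sketch that shells out to shot-scraper with the same arguments as the command above; the function names are invented for the example, and it assumes shot-scraper is installed and on the PATH.

```python
import subprocess

FIREFOX_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:128.0) "
    "Gecko/20100101 Firefox/128.0"
)


def iframe_src_command(page_url):
    """Arguments for step 1: print the src of the first <iframe> on the page."""
    return [
        "shot-scraper", "javascript", page_url,
        'document.querySelector("iframe").src',
        "-b", "firefox",
        "--user-agent", FIREFOX_UA,
        "--raw",
    ]


def screenshot_command(target_url, output):
    """Arguments for step 2: screenshot the URL found in step 1."""
    return [
        "shot-scraper", "-o", output, target_url,
        "--wait", "5000", "-b", "firefox", "--retina",
    ]


def screenshot_iframe(page_url, output):
    """Run both steps and return the iframe URL that was captured."""
    result = subprocess.run(
        iframe_src_command(page_url), capture_output=True, text=True, check=True
    )
    iframe_url = result.stdout.strip()
    subprocess.run(screenshot_command(iframe_url, output), check=True)
    return iframe_url
```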

Tracking Fireworks Impact on Fourth of July AQI

Danny Page ran shot-scraper once per minute (using cron) against this Purple Air map of the Bay Area and turned the captured screenshots into an animation using ffmpeg. The result shows the impact of 4th of July fireworks on air quality between 7pm and 7am.

Weeknotes: a Datasette release, an LLM release and a bunch of new plugins

I wrote extensive annotated release notes for Datasette 1.0a8 and LLM 0.13 already. Here’s what else I’ve been up to these past three weeks.

[... 1,074 words]

shot-scraper 1.4. I decided to add HTTP Basic authentication support to shot-scraper today and found several excellent pull requests waiting to be merged, by Niel Thiart and mhalle.

1.4 adds support for HTTP Basic auth, custom --scale-factor shots, additional --browser-arg arguments and a fix for --interactive mode.

2023

Weeknotes: DevDay, GitHub Universe, OpenAI chaos

Three weeks of conferences and Datasette Cloud work, four days of chaos for OpenAI.

[... 766 words]

Migrating out of PostHaven. Amjith Ramanujam decided to migrate his blog content from PostHaven to a Markdown static site. He used shot-scraper (shelled out to from a Python script) to scrape his existing content using a snippet of JavaScript, wrote the content to a SQLite database using sqlite-utils, then used markdownify (new to me, a neat Python package for converting HTML to Markdown via BeautifulSoup) to write the content to disk as Markdown.

datasette-scraper, Big Local News and other weeknotes

In addition to exploring the new MusicCaps training and evaluation data I’ve been working on the big Datasette JSON refactor, and getting excited about a Datasette project that I didn’t work on at all.

[... 1,744 words]

Examples of sites built using Datasette. I gave the examples page on the Datasette website a significant upgrade today: it now includes screenshots (taken using shot-scraper) of six projects chosen to illustrate the variety of problems Datasette can be used to tackle.

2022

Leveraging ‘shot-scraper’ and creating image diffs. Üllar Seerme has a neat recipe for using shot-scraper and ImageMagick to create differential animations showing how a scraped web page has visually changed.

Weeknotes: DjangoCon, SQLite in Django, datasette-gunicorn

I spent most of this week at DjangoCon in San Diego—my first outside-of-the-Bay-Area conference since the before-times.

[... 1,184 words]