1,645 posts tagged “llms”

Large Language Models (LLMs) are the class of technology behind generative text AI systems like OpenAI's ChatGPT, Google's Gemini and Anthropic's Claude.

2024

marimo v0.9.0 with mo.ui.chat. The latest release of the Marimo Python reactive notebook project includes a neat new feature: you can now easily embed a custom chat interface directly inside of your notebook.

Marimo co-founder Myles Scolnick posted this intriguing demo on Twitter, demonstrating a chat interface to my LLM library “in only 3 lines of code”:

import marimo as mo

import llm

model = llm.get_model()

conversation = model.conversation()

mo.ui.chat(lambda messages: conversation.prompt(messages[-1].content))

I tried that out today - here’s the result:

![Screenshot of a Marimo notebook editor, with lines of code and an embedded chat interface. Top: import marimo as mo and import llm. Middle: Chat messages - User: Hi there, Three jokes about pelicans. AI: Hello! How can I assist you today?, Sure! Here are three pelican jokes for you: 1. Why do pelicans always carry a suitcase? Because they have a lot of baggage to handle! 2. What do you call a pelican that can sing? A tune-ican! 3. Why did the pelican break up with his girlfriend? She said he always had his head in the clouds and never winged it! Hope these made you smile! Bottom code: model = llm.get_model(), conversation = model.conversation(), mo.ui.chat(lambda messages:, conversation.prompt(messages[-1].content))](https://static.simonwillison.net/static/2024/marimo-pelican-jokes.jpg)

marimo.ui.chat() takes a function which is passed a list of Marimo chat messages (representing the current state of that widget) and returns a string - or other type of renderable object - to add as the next message in the chat. This makes it trivial to hook in any custom chat mechanism you like.
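Here's a minimal sketch of what such a handler function can look like, written so it runs without Marimo installed. The `FakeMessage` class is a hypothetical stand-in for Marimo's chat message objects; the only thing it assumes about them is the `.content` attribute used in the demo above.

```python
from dataclasses import dataclass


@dataclass
class FakeMessage:
    # Hypothetical stand-in for a Marimo chat message; only .content is relied on
    role: str
    content: str


def shout_handler(messages):
    """Take the full chat history and return the next reply as a string."""
    last = messages[-1].content
    return f"You said: {last.upper()}"


history = [FakeMessage("user", "hi there")]
print(shout_handler(history))  # You said: HI THERE
```

Inside a notebook the same function could presumably be passed straight to the widget as `mo.ui.chat(shout_handler)`, in the same way the lambda is in the demo.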

Marimo also ship their own built-in chat handlers for OpenAI, Anthropic and Google Gemini which you can use like this:

mo.ui.chat(

mo.ai.llm.anthropic(

"claude-3-5-sonnet-20240620",

system_message="You are a helpful assistant.",

api_key="sk-ant-...",

),

show_configuration_controls=True

)

Gemini 1.5 Flash-8B is now production ready (via) Gemini 1.5 Flash-8B is "a smaller and faster variant of 1.5 Flash" - and is now released to production, at half the price of the 1.5 Flash model.

It's really, really cheap:

- $0.0375 per 1 million input tokens on prompts <128K

- $0.15 per 1 million output tokens on prompts <128K

- $0.01 per 1 million input tokens on cached prompts <128K

Prices are doubled for prompts longer than 128K.

I believe images are still charged at a flat rate of 258 tokens, which I think means a single non-cached image with Flash-8B should cost 0.00097 cents - a number so tiny I'm doubting if I got the calculation right.
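The arithmetic can be checked with a few lines of Python. This sketch assumes the flat 258 tokens per image and the $0.0375 per million input token price quoted above; the variable names are just for illustration.

```python
TOKENS_PER_IMAGE = 258               # flat per-image charge assumed above
INPUT_PRICE_PER_MILLION_USD = 0.0375  # prompts under 128K tokens

# Cost of one image in dollars, then converted to cents
cost_usd = TOKENS_PER_IMAGE * INPUT_PRICE_PER_MILLION_USD / 1_000_000
cost_cents = cost_usd * 100

print(f"{cost_cents:.5f} cents per image")  # 0.00097 cents per image
```

Put another way, that works out to a little over a thousand images per cent.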

OpenAI's cheapest model remains GPT-4o mini, at $0.15/1M input - though that price halves for reused prompt prefixes thanks to their new prompt caching feature. Batches also halve the price, though they can’t be combined with OpenAI prompt caching. Gemini also offer half-off for batched requests.

Anthropic's cheapest model is still Claude 3 Haiku at $0.25/M, though that drops to $0.03/M for cached tokens (if you configure them correctly).

I've released llm-gemini 0.2 with support for the new model:

llm install -U llm-gemini

llm keys set gemini

# Paste API key here

llm -m gemini-1.5-flash-8b-latest "say hi"

At first, I struggled to understand why anyone would want to write this way. My dialogue with ChatGPT was frustratingly meandering, as though I were excavating an essay instead of crafting one. But, when I thought about the psychological experience of writing, I began to see the value of the tool. ChatGPT was not generating professional prose all at once, but it was providing starting points: interesting research ideas to explore; mediocre paragraphs that might, with sufficient editing, become usable. For all its inefficiencies, this indirect approach did feel easier than staring at a blank page; “talking” to the chatbot about the article was more fun than toiling in quiet isolation. In the long run, I wasn’t saving time: I still needed to look up facts and write sentences in my own voice. But my exchanges seemed to reduce the maximum mental effort demanded of me.

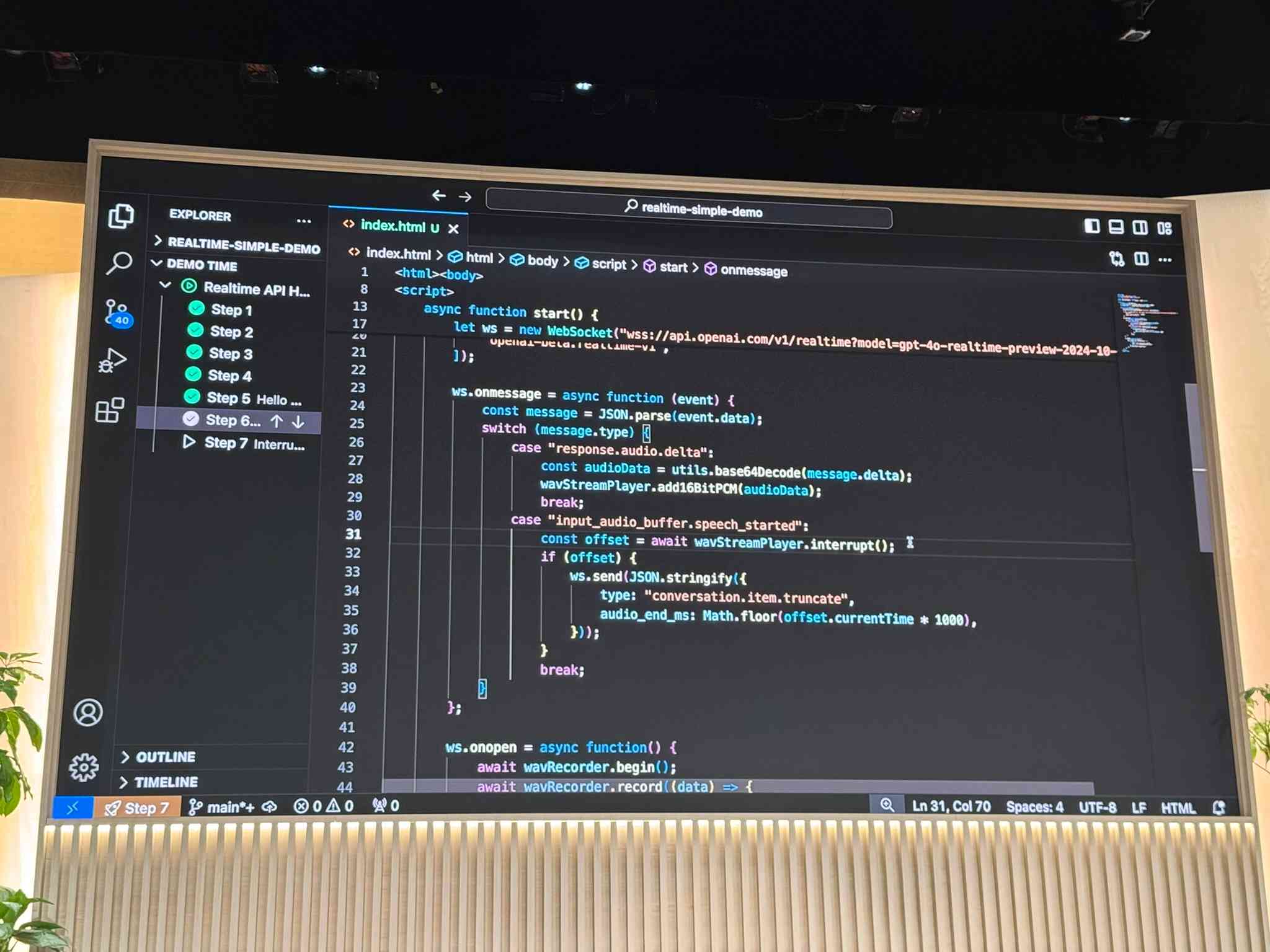

OpenAI DevDay: Let’s build developer tools, not digital God

I had a fun time live blogging OpenAI DevDay yesterday - I’ve now shared notes about the live blogging system I threw together in a hurry on the day (with assistance from Claude and GPT-4o). Now that the smoke has settled a little, here are my impressions from the event.

[... 2,090 words]

Building an automatically updating live blog in Django. Here's an extended write-up of how I implemented the live blog feature I used for my coverage of OpenAI DevDay yesterday. I built the first version using Claude while waiting for the keynote to start, then upgraded it during the lunch break with the help of GPT-4o to add sort options and incremental fetching of new updates.

OpenAI DevDay 2024 live blog

I’m at OpenAI DevDay in San Francisco, and I’m trying something new: a live blog, where this entry will be updated with new notes during the event.

[... 68 words]

I listened to the whole 15-minute podcast this morning. It was, indeed, surprisingly effective. It remains somewhere in the uncanny valley, but not at all in a creepy way. Just more in a “this is a bit vapid and phony” way. [...] But ultimately the conversation has all the flavor of a bowl of unseasoned white rice.

Weeknotes: Three podcasts, two trips and a new plugin system

I fell behind a bit on my weeknotes. Here’s most of what I’ve been doing in September.

[... 693 words]

llama-3.2-webgpu (via) Llama 3.2 1B is a really interesting model, given its 128,000 token input and its tiny size (barely more than a GB).

This page loads a 1.24GB q4f16 ONNX build of the Llama-3.2-1B-Instruct model and runs it with a React-powered chat interface directly in the browser, using Transformers.js and WebGPU. Source code for the demo is here.

It worked for me just now in Chrome; in Firefox and Safari I got a “WebGPU is not supported by this browser” error message.

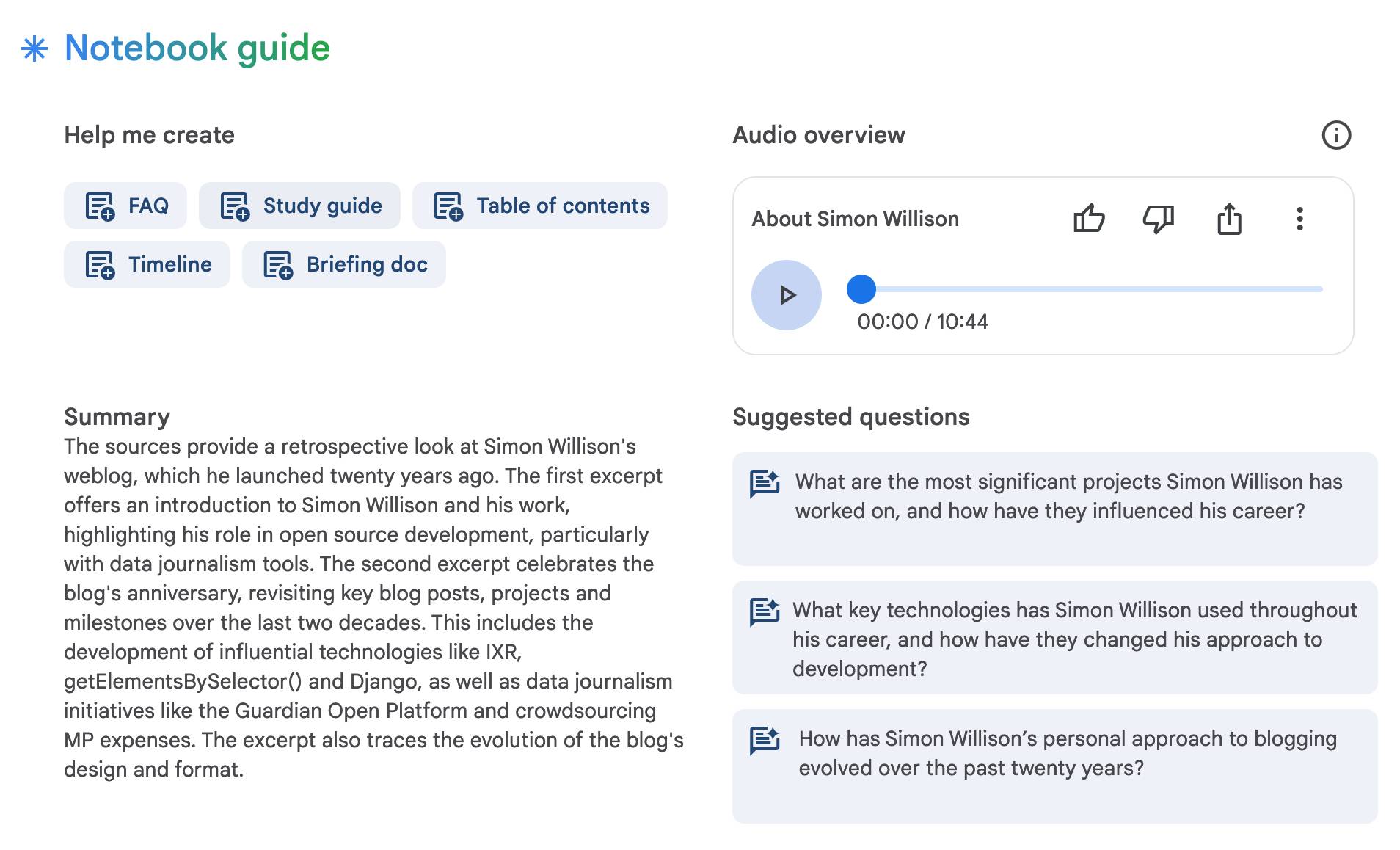

NotebookLM’s automatically generated podcasts are surprisingly effective

Audio Overview is a fun new feature of Google’s NotebookLM which is getting a lot of attention right now. It generates a one-off custom podcast against content you provide, where two AI hosts start up a “deep dive” discussion about the collected content. These last around ten minutes and are very podcast, with an astonishingly convincing audio back-and-forth conversation.

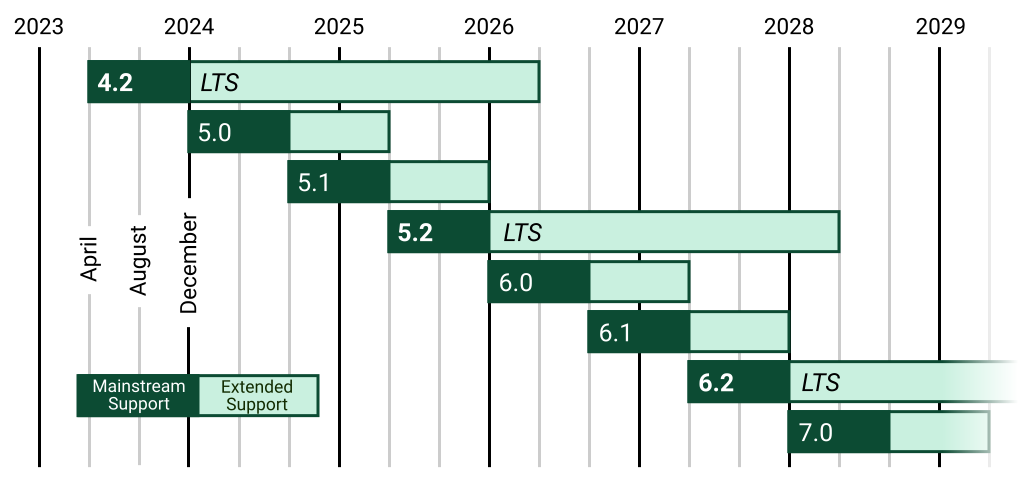

[... 1,489 words]

mlx-vlm (via) The MLX ecosystem of libraries for running machine learning models on Apple Silicon continues to expand. Prince Canuma is actively developing this library for running vision models such as Qwen-2 VL and Pixtral and LLaVA using Python running on a Mac.

I used uv to run it against this image with this shell one-liner:

uv run --with mlx-vlm \

python -m mlx_vlm.generate \

--model Qwen/Qwen2-VL-2B-Instruct \

--max-tokens 1000 \

--temp 0.0 \

--image https://static.simonwillison.net/static/2024/django-roadmap.png \

--prompt "Describe image in detail, include all text"

The --image option works equally well with a URL or a path to a local file on disk.

This first downloaded 4.1GB to my ~/.cache/huggingface/hub/models--Qwen--Qwen2-VL-2B-Instruct folder and then output this result, which starts:

The image is a horizontal timeline chart that represents the release dates of various software versions. The timeline is divided into years from 2023 to 2029, with each year represented by a vertical line. The chart includes a legend at the bottom, which distinguishes between different types of software versions.

Legend

Mainstream Support:

- 4.2 (2023)

- 5.0 (2024)

- 5.1 (2025)

- 5.2 (2026)

- 6.0 (2027) [...]

In the future, we won't need programmers; just people who can describe to a computer precisely what they want it to do.

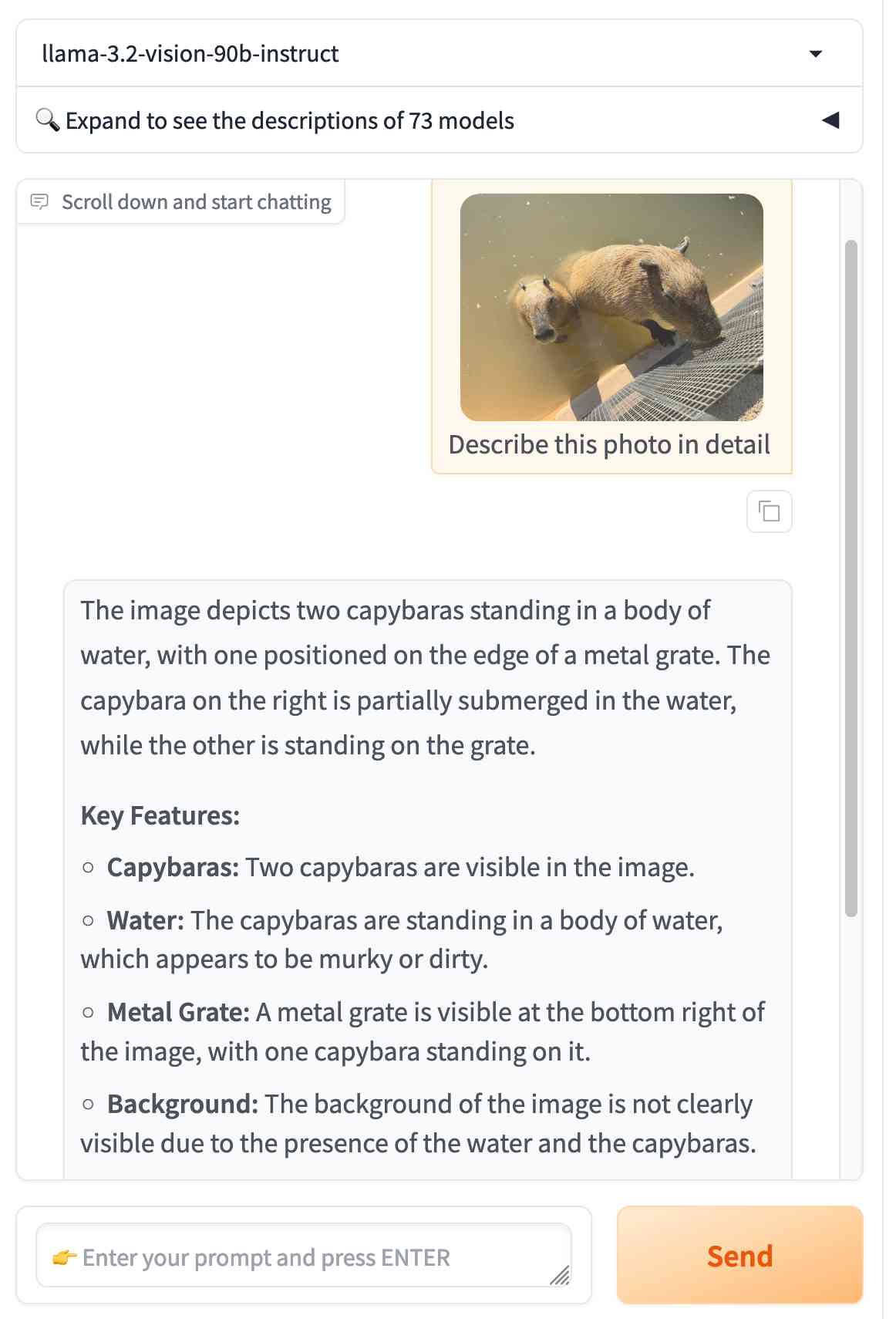

Llama 3.2. In further evidence that AI labs are terrible at naming things, Llama 3.2 is a huge upgrade to the Llama 3 series - they've released their first multi-modal vision models!

Today, we’re releasing Llama 3.2, which includes small and medium-sized vision LLMs (11B and 90B), and lightweight, text-only models (1B and 3B) that fit onto edge and mobile devices, including pre-trained and instruction-tuned versions.

The 1B and 3B text-only models are exciting too, with a 128,000 token context length and optimized for edge devices (Qualcomm and MediaTek hardware get called out specifically).

Meta partnered directly with Ollama to help with distribution - here's the Ollama blog post. They only support the two smaller text-only models at the moment - this command will get the 3B model (2GB):

ollama run llama3.2

And for the 1B model (a 1.3GB download):

ollama run llama3.2:1b

I had to first upgrade my Ollama by clicking on the icon in my macOS task tray and selecting "Restart to update".

The two vision models are coming to Ollama "very soon".

Once you have fetched the Ollama model you can access it from my LLM command-line tool like this:

pipx install llm

llm install llm-ollama

llm chat -m llama3.2:1b

I tried running my djp codebase through that tiny 1B model just now and got a surprisingly good result - by no means comprehensive, but way better than I would ever expect from a model of that size:

files-to-prompt **/*.py -c | llm -m llama3.2:1b --system 'describe this code'

Here's a portion of the output:

The first section defines several test functions using the @djp.hookimpl decorator from the djp library. These hook implementations allow you to intercept and manipulate Django's behavior.

- test_middleware_order: This function checks that the middleware order is correct by comparing the MIDDLEWARE setting with a predefined list.

- test_middleware: This function tests various aspects of middleware:

  - It retrieves the response from the URL /from-plugin/ using the Client object, which simulates a request to this view.

  - It checks that certain values are present in the response:

    - X-DJP-Middleware-After

    - X-DJP-Middleware

    - X-DJP-Middleware-Before

[...]

I found the GGUF file that had been downloaded by Ollama in my ~/.ollama/models/blobs directory. The following command let me run that model directly in LLM using the llm-gguf plugin:

llm install llm-gguf

llm gguf register-model ~/.ollama/models/blobs/sha256-74701a8c35f6c8d9a4b91f3f3497643001d63e0c7a84e085bed452548fa88d45 -a llama321b

llm chat -m llama321b

Meta themselves claim impressive performance against other existing models:

Our evaluation suggests that the Llama 3.2 vision models are competitive with leading foundation models, Claude 3 Haiku and GPT4o-mini on image recognition and a range of visual understanding tasks. The 3B model outperforms the Gemma 2 2.6B and Phi 3.5-mini models on tasks such as following instructions, summarization, prompt rewriting, and tool-use, while the 1B is competitive with Gemma.

Here's the Llama 3.2 collection on Hugging Face. You need to accept the new Llama 3.2 Community License Agreement there in order to download those models.

You can try the four new models out via the Chatbot Arena - navigate to "Direct Chat" there and select them from the dropdown menu. You can upload images directly to the chat there to try out the vision features.

Solving a bug with o1-preview, files-to-prompt and LLM.

I added a new feature to DJP this morning: you can now have plugins specify their middleware in terms of how it should be positioned relative to other middleware - inserted directly before or directly after django.middleware.common.CommonMiddleware for example.

At one point I got stuck with a weird test failure, and after ten minutes of head scratching I decided to pipe the entire thing into OpenAI's o1-preview to see if it could spot the problem. I used files-to-prompt to gather the code and LLM to run the prompt:

files-to-prompt **/*.py -c | llm -m o1-preview "

The middleware test is failing showing all of these - why is MiddlewareAfter repeated so many times?

['MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware2', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware4', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware2', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware', 'MiddlewareBefore']"

The model whirled away for a few seconds and spat out an explanation of the problem - one of my middleware classes was accidentally calling self.get_response(request) in two different places.

I did enjoy how o1 attempted to reference the relevant Django documentation and then half-repeated, half-hallucinated a quote from it:

This took 2,538 input tokens and 4,354 output tokens - by my calculations at $15/million input and $60/million output that prompt cost just under 30 cents.
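That cost estimate is easy to reproduce. Here's a small sketch using the token counts and per-million prices quoted above; the `prompt_cost_usd` helper is a hypothetical name, not part of LLM or any OpenAI library.

```python
def prompt_cost_usd(input_tokens, output_tokens, input_per_million, output_per_million):
    """Return the dollar cost of one prompt given per-million-token prices."""
    return (
        input_tokens * input_per_million + output_tokens * output_per_million
    ) / 1_000_000


# Token counts and o1-preview prices as quoted in the text
cost = prompt_cost_usd(2_538, 4_354, 15.0, 60.0)
print(f"${cost:.4f}")  # $0.2993
```

Almost all of that comes from the output side: 4,354 output tokens at $60/million is about 26 cents on its own.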

The Pragmatic Engineer Podcast: AI tools for software engineers, but without the hype – with Simon Willison. Gergely Orosz has a brand new podcast, and I was the guest for the first episode. We covered a bunch of ground, but my favorite topic was an exploration of the (very legitimate) reasons that many engineers are resistant to taking advantage of AI-assisted programming tools.

Updated production-ready Gemini models.

Two new models from Google Gemini today: gemini-1.5-pro-002 and gemini-1.5-flash-002. Their -latest aliases will update to these new models in "the next few days", and new -001 suffixes can be used to stick with the older models. The new models benchmark slightly better in various ways and should respond faster.

Flash continues to have a 1,048,576 input token and 8,192 output token limit. Pro is 2,097,152 input tokens.

Google also announced a significant price reduction for Pro, effective on the 1st of October. Inputs less than 128,000 tokens drop from $3.50/million to $1.25/million (above 128,000 tokens it's dropping from $7 to $5) and output costs drop from $10.50/million to $2.50/million ($21 down to $10 for the >128,000 case).

For comparison, GPT-4o is currently $5/m input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it's even cheaper.

Correction: I missed gpt-4o-2024-08-06 which is listed later on the OpenAI pricing page and priced at $2.50/m input and $10/m output. So the new Gemini 1.5 Pro prices are undercutting that.

Gemini has always offered finely grained safety filters - it sounds like those are now turned down to minimum by default, which is a welcome change:

For the models released today, the filters will not be applied by default so that developers can determine the configuration best suited for their use case.

Also interesting: they've tweaked the expected length of default responses:

For use cases like summarization, question answering, and extraction, the default output length of the updated models is ~5-20% shorter than previous models.

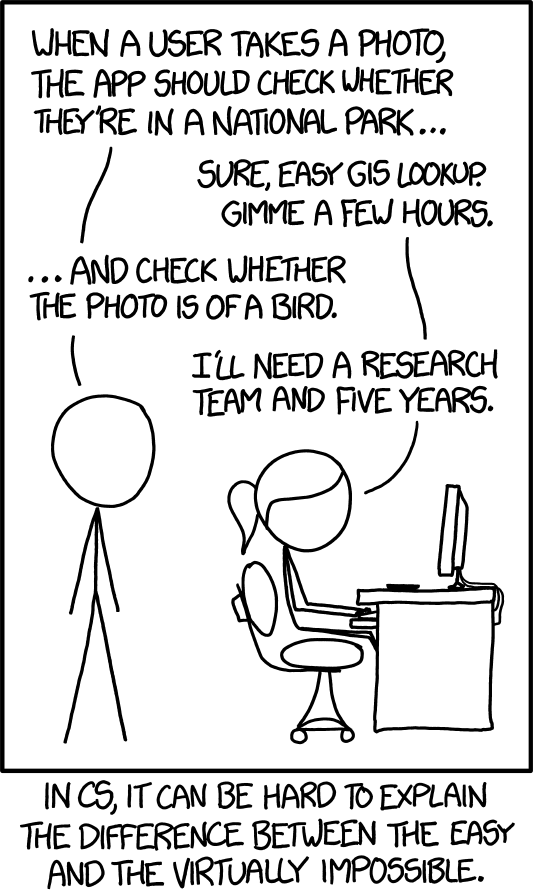

XKCD 1425 (Tasks) turns ten years old today (via) One of the all-time great XKCDs. It's amazing that "check whether the photo is of a bird" has gone from PhD-level to trivially easy to solve (with a vision LLM, or CLIP, or ResNet+ImageNet among others).

The key idea still very much stands though. Understanding the difference between easy and hard challenges in software development continues to require an enormous depth of experience.

I'd argue that LLMs have made this even worse.

Understanding what kind of tasks LLMs can and cannot reliably solve remains incredibly difficult and unintuitive. They're computer systems that are terrible at maths and that can't reliably look up facts!

On top of that, the rise of AI-assisted programming tools means more people than ever are beginning to create their own custom software.

These brand new AI-assisted proto-programmers are having a crash course in this easy-vs-hard problem.

I saw someone recently complaining that they couldn't build a Claude Artifact that could analyze images, even though they knew Claude itself could do that. Understanding why that's not possible involves understanding how the CSP headers that are used to serve Artifacts prevent the generated code from making its own API calls out to an LLM!

How streaming LLM APIs work.

New TIL. I used curl to explore the streaming APIs provided by OpenAI, Anthropic and Google Gemini and wrote up detailed notes on what I learned.

Also includes example code for receiving streaming events in Python with HTTPX and receiving streaming events in client-side JavaScript using fetch().
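As a rough illustration of what consuming one of these streams involves, here's a minimal sketch that parses server-sent event lines and stitches the text back together. It assumes the OpenAI-style shape, where each event is a `data: {json}` line carrying `choices[0].delta.content` and the stream ends with `data: [DONE]`. Anthropic and Gemini use different event shapes, so this would need adapting for them. The sample lines are made up for the example.

```python
import json


def iter_sse_data(lines):
    """Yield the decoded JSON payload of each `data:` line until [DONE]."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        yield json.loads(payload)


def collect_text(lines):
    """Concatenate the content fragments from OpenAI-style streaming chunks."""
    parts = []
    for event in iter_sse_data(lines):
        for choice in event.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


# Made-up sample of what the stream might look like on the wire
sample = [
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "",
    "data: [DONE]",
]
print(collect_text(sample))  # Hello
```

In a real client the `lines` argument would come from a streaming HTTP response, for example the line iterator that HTTPX provides on a streamed response.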

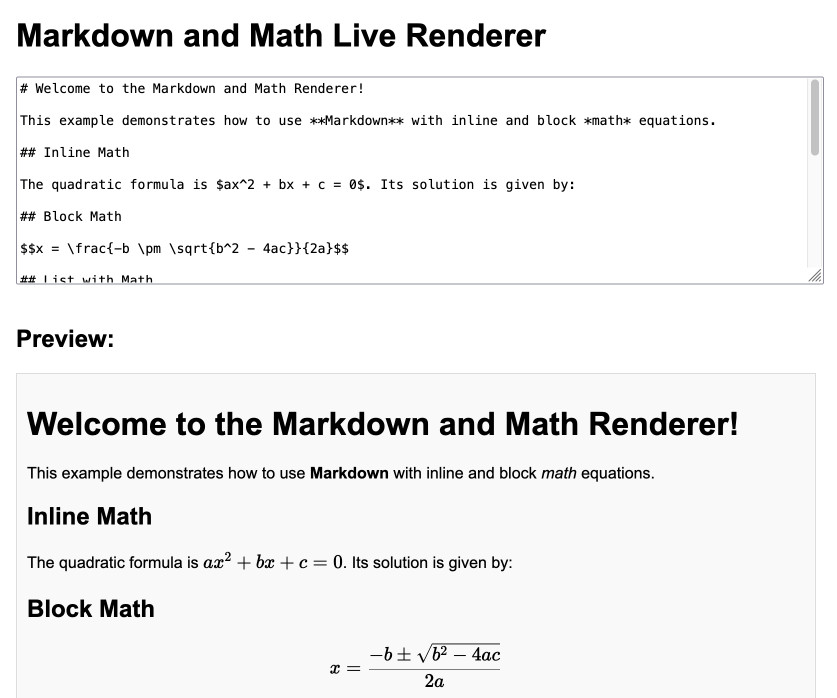

Markdown and Math Live Renderer.

Another of my tiny Claude-assisted JavaScript tools. This one lets you enter Markdown with embedded mathematical expressions (like $ax^2 + bx + c = 0$) and live renders those on the page, with an HTML version using MathML that you can export through copy and paste.

Here's the Claude transcript. I started by asking:

Are there any client side JavaScript markdown libraries that can also handle inline math and render it?

Claude gave me several options including the combination of Marked and KaTeX, so I followed up by asking:

Build an artifact that demonstrates Marked plus KaTeX - it should include a text area I can enter markdown in (repopulated with a good example) and live update the rendered version below. No react.

Which gave me this artifact, instantly demonstrating that what I wanted to do was possible.

I iterated on it a tiny bit to get to the final version, mainly to add that HTML export and a Copy button. The final source code is here.

YouTube Thumbnail Viewer.

I wanted to find the best quality thumbnail image for a YouTube video, so I could use it as a social media card. I know from past experience that GPT-4 has memorized the various URL patterns for img.youtube.com, so I asked it to guess the URL for my specific video.

This piqued my interest as to what the other patterns were, so I got it to spit those out too. Then, to save myself from needing to look those up again in the future, I asked it to build me a little HTML and JavaScript tool for turning a YouTube video URL into a set of visible thumbnails.

I iterated on the code a bit more after pasting it into Claude and ended up with this, now hosted in my tools collection.
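The core idea is simple enough to sketch in Python too. This is not the code from the tool, just an illustration: the filenames listed are the commonly seen img.youtube.com patterns, not every size exists for every video, and the helper names and the example video ID are hypothetical.

```python
from urllib.parse import parse_qs, urlparse

# Commonly seen thumbnail filenames, roughly largest first (assumed, not exhaustive)
THUMBNAIL_NAMES = ["maxresdefault", "sddefault", "hqdefault", "mqdefault", "default"]


def extract_video_id(url):
    """Return the video ID from a watch or youtu.be URL, or None if not found."""
    parsed = urlparse(url)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    if host == "youtu.be":
        return parsed.path.strip("/") or None
    if host in ("youtube.com", "m.youtube.com") and parsed.path == "/watch":
        values = parse_qs(parsed.query).get("v")
        return values[0] if values else None
    return None


def thumbnail_urls(video_id):
    """Build candidate thumbnail URLs for a video ID."""
    return [
        f"https://img.youtube.com/vi/{video_id}/{name}.jpg"
        for name in THUMBNAIL_NAMES
    ]


video_id = extract_video_id("https://www.youtube.com/watch?v=abc123XYZ_-")
for url in thumbnail_urls(video_id):
    print(url)
```

Since the larger sizes are not guaranteed to exist, a real tool would want to try each URL and fall back to the next one on a 404.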

Notes on using LLMs for code

I was recently the guest on TWIML—the This Week in Machine Learning & AI podcast. Our episode is titled Supercharging Developer Productivity with ChatGPT and Claude with Simon Willison, and the focus of the conversation was the ways in which I use LLM tools in my day-to-day work as a software developer and product engineer.

[... 861 words]

Introducing Contextual Retrieval (via) Here's an interesting new embedding/RAG technique, described by Anthropic but it should work for any embedding model against any other LLM.

One of the big challenges in implementing semantic search against vector embeddings - often used as part of a RAG system - is creating "chunks" of documents that are most likely to semantically match queries from users.

Anthropic provide this solid example where semantic chunks might let you down:

Imagine you had a collection of financial information (say, U.S. SEC filings) embedded in your knowledge base, and you received the following question: "What was the revenue growth for ACME Corp in Q2 2023?"

A relevant chunk might contain the text: "The company's revenue grew by 3% over the previous quarter." However, this chunk on its own doesn't specify which company it's referring to or the relevant time period, making it difficult to retrieve the right information or use the information effectively.

Their proposed solution is to take each chunk at indexing time and expand it using an LLM - so the above sentence would become this instead:

This chunk is from an SEC filing on ACME corp's performance in Q2 2023; the previous quarter's revenue was $314 million. The company's revenue grew by 3% over the previous quarter.

This chunk was created by Claude 3 Haiku (their least expensive model) using the following prompt template:

<document>

{{WHOLE_DOCUMENT}}

</document>

Here is the chunk we want to situate within the whole document

<chunk>

{{CHUNK_CONTENT}}

</chunk>

Please give a short succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else.

Here's the really clever bit: running the above prompt for every chunk in a document could get really expensive thanks to the inclusion of the entire document in each prompt. Claude added context caching last month, which allows you to pay around 1/10th of the cost for tokens cached up to your specified breakpoint.

By Anthropic's calculations:

Assuming 800 token chunks, 8k token documents, 50 token context instructions, and 100 tokens of context per chunk, the one-time cost to generate contextualized chunks is $1.02 per million document tokens.

Anthropic provide a detailed notebook demonstrating an implementation of this pattern. Their eventual solution combines cosine similarity and BM25 indexing, uses embeddings from Voyage AI and adds a reranking step powered by Cohere.

The notebook also includes an evaluation set using JSONL - here's that evaluation data in Datasette Lite.
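The indexing-time expansion step described above can be sketched in a few lines of Python. This is not Anthropic's notebook code: the `contextualize_chunks` helper and the `generate_context` callback are hypothetical names, and the callback stands in for whatever LLM call you would actually make (with prompt caching on the document portion). Only the prompt template comes from the text above.

```python
PROMPT_TEMPLATE = """<document>
{document}
</document>
Here is the chunk we want to situate within the whole document
<chunk>
{chunk}
</chunk>
Please give a short succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else."""


def contextualize_chunks(document, chunks, generate_context):
    """Prepend LLM-generated context to each chunk before it gets embedded.

    generate_context is any callable that takes a prompt string and returns
    the model's reply as a string.
    """
    expanded = []
    for chunk in chunks:
        prompt = PROMPT_TEMPLATE.format(document=document, chunk=chunk)
        context = generate_context(prompt).strip()
        expanded.append(f"{context} {chunk}")
    return expanded


# Stand-in for a real model call, so the sketch runs offline
def fake_llm(prompt):
    return "This chunk is from an SEC filing on ACME corp's performance in Q2 2023."


document = "ACME Corp Q2 2023 filing. The company's revenue grew by 3% over the previous quarter."
chunks = ["The company's revenue grew by 3% over the previous quarter."]
for text in contextualize_chunks(document, chunks, fake_llm):
    print(text)
```

The expanded strings are what would then be embedded and indexed, in place of the bare chunks.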

Moshi (via) Moshi is "a speech-text foundation model and full-duplex spoken dialogue framework". It's effectively an audio-to-audio model - like an LLM but you input audio directly to it and it replies with its own audio.

It's fun to play around with, but it's not particularly useful in comparison to other pure text models: I tried to talk to it about California Brown Pelicans and it gave me some very basic hallucinated thoughts about California Condors instead.

It's very easy to run locally, at least on a Mac (and likely on other systems too). I used uv and got the 8 bit quantized version running as a local web server using this one-liner:

uv run --with moshi_mlx python -m moshi_mlx.local_web -q 8

That downloads ~8.17G of model to a folder in ~/.cache/huggingface/hub/ - or you can use -q 4 and get a 4.81G version instead (albeit even lower quality).

o1 prompting is alien to me. Its thinking, gloriously effective at times, is also dreamlike and unamenable to advice.

Just say what you want and pray. Any notes on “how” will be followed with the diligence of a brilliant intern on ketamine.

Speed matters (via) Jamie Brandon in 2021, talking about the importance of optimizing for the speed at which you can work as a developer:

Being 10x faster also changes the kinds of projects that are worth doing.

Last year I spent something like 100 hours writing a text editor. […] If I was 10x slower it would have been 20-50 weeks. Suddenly that doesn't seem like such a good deal any more - what a waste of a year!

It’s not just about speed of writing code:

When I think about speed I think about the whole process - researching, planning, designing, arguing, coding, testing, debugging, documenting etc.

Often when I try to convince someone to get faster at one of those steps, they'll argue that the others are more important so it's not worthwhile trying to be faster. Eg choosing the right idea is more important than coding the wrong idea really quickly.

But that's totally conditional on the speed of everything else! If you could code 10x as fast then you could try out 10 different ideas in the time it would previously have taken to try out 1 idea. Or you could just try out 1 idea, but have 90% of your previous coding time available as extra idea time.

Jamie’s model here helps explain the effect I described in AI-enhanced development makes me more ambitious with my projects. Prompting an LLM to write portions of my code for me gives me that 5-10x boost in the time I spend typing code into a computer, which has a big effect on my ambitions despite being only about 10% of the activities I perform relevant to building software.

I also increasingly lean on LLMs as assistants in the research phase - exploring library options, building experimental prototypes - and for activities like writing tests and even a little bit of documentation.

[… OpenAI’s o1] could work its way to a correct (and well-written) solution if provided a lot of hints and prodding, but did not generate the key conceptual ideas on its own, and did make some non-trivial mistakes. The experience seemed roughly on par with trying to advise a mediocre, but not completely incompetent, graduate student. However, this was an improvement over previous models, whose capability was closer to an actually incompetent graduate student.

It's a bit sad and confusing that LLMs ("Large Language Models") have little to do with language; It's just historical. They are highly general purpose technology for statistical modeling of token streams. A better name would be Autoregressive Transformers or something.

They don't care if the tokens happen to represent little text chunks. It could just as well be little image patches, audio chunks, action choices, molecules, or whatever. If you can reduce your problem to that of modeling token streams (for any arbitrary vocabulary of some set of discrete tokens), you can "throw an LLM at it".

Believe it or not, the name Strawberry does not come from the “How many r’s are in strawberry” meme. We just chose a random word. As far as we know it was a complete coincidence.

— Noam Brown, OpenAI

o1-mini is the most surprising research result I've seen in the past year

Obviously I cannot spill the secret, but a small model getting >60% on AIME math competition is so good that it's hard to believe

— Jason Wei, OpenAI

LLM 0.16.

New release of LLM adding support for the o1-preview and o1-mini OpenAI models that were released today.