Blogmarks

Filters: Sorted by date

Why The Atlantic signed a deal with OpenAI. Interesting conversation between Nilay Patel and The Atlantic CEO (and former journalist/editor) Nicholas Thompson about the relationship between media organizations and LLM companies like OpenAI.

On the impact of these deals on the ongoing New York Times lawsuit:

One of the ways that we [The Atlantic] can help the industry is by making deals and setting a market. I believe that us doing a deal with OpenAI makes it easier for us to make deals with the other large language model companies if those come about, I think it makes it easier for other journalistic companies to make deals with OpenAI and others, and I think it makes it more likely that The Times wins their lawsuit.

How could it help? Because deals like this establish a market value for training content, important for the fair use component of the legal argument.

The economics of a Postgres free tier (via) Xata offer a hosted PostgreSQL service with a generous free tier (15GB of volume). I'm very suspicious of free tiers that don't include a detailed breakdown of the unit economics... and in this post they've described exactly that, in great detail.

The trick is that they run their free tier on shared clusters - with each $630/month cluster supporting 2,000 free instances for $0.315 per instance per month. Then inactive databases get downgraded to even cheaper auto-scaling clusters that can host 20,000 databases for $180/month (less than 1c each).

They also cover the volume cost of $0.10/GB/month - so up to $1.50/month per free instance, but most instances only use a small portion of that space.

It's reassuring to see this spelled out in so much detail.

Early Apple tech bloggers are shocked to find their name and work have been AI-zombified (via)

TUAW (“The Unofficial Apple Weblog”) was shut down by AOL in 2015, but this past year, a new owner scooped up the domain and began posting articles under the bylines of former writers who haven’t worked there for over a decade.

They're using AI-generated images against real names of original contributors, then publishing LLM-rewritten articles because they didn't buy the rights to the original content!

Anthropic cookbook: multimodal. I'm currently on the lookout for high quality sources of information about vision LLMs, including prompting tricks for getting the most out of them.

This set of Jupyter notebooks from Anthropic (published four months ago to accompany the original Claude 3 models) is the best I've found so far. Best practices for using vision with Claude includes advice on multi-shot prompting with example, plus this interesting think step-by-step style prompt for improving Claude's ability to count the dogs in an image:

You have perfect vision and pay great attention to detail which makes you an expert at counting objects in images. How many dogs are in this picture? Before providing the answer in

<answer>tags, think step by step in<thinking>tags and analyze every part of the image.

Vision language models are blind (via) A new paper exploring vision LLMs, comparing GPT-4o, Gemini 1.5 Pro, Claude 3 Sonnet and Claude 3.5 Sonnet (I'm surprised they didn't include Claude 3 Opus and Haiku, which are more interesting than Claude 3 Sonnet in my opinion).

I don't like the title and framing of this paper. They describe seven tasks that vision models have trouble with - mainly geometric analysis like identifying intersecting shapes or counting things - and use those to support the following statement:

The shockingly poor performance of four state-of-the-art VLMs suggests their vision is, at best, like of a person with myopia seeing fine details as blurry, and at worst, like an intelligent person that is blind making educated guesses.

While the failures they describe are certainly interesting, I don't think they justify that conclusion.

I've felt starved for information about the strengths and weaknesses of these vision LLMs since the good ones started becoming available last November (GPT-4 Vision at OpenAI DevDay) so identifying tasks like this that they fail at is useful. But just like pointing out an LLM can't count letters doesn't mean that LLMs are useless, these limitations of vision models shouldn't be used to declare them "blind" as a sweeping statement.

Claude: You can now publish, share, and remix artifacts. Artifacts is the feature Anthropic released a few weeks ago to accompany Claude 3.5 Sonnet, allowing Claude to create interactive HTML+JavaScript tools in response to prompts.

This morning they added the ability to make those artifacts public and share links to them, which makes them even more useful!

Here's my box shadow playground from the other day, and an example page I requested demonstrating the Milligram CSS framework - Artifacts can load most code that is available via cdnjs so they're great for quickly trying out new libraries.

hangout_services/thunk.js

(via)

It turns out Google Chrome (via Chromium) includes a default extension which makes extra services available to code running on the *.google.com domains - tweeted about today by Luca Casonato, but the code has been there in the public repo since October 2013 as far as I can tell.

It looks like it's a way to let Google Hangouts (or presumably its modern predecessors) get additional information from the browser, including the current load on the user's CPU. Update: On Hacker News a Googler confirms that the Google Meet "troubleshooting" feature uses this to review CPU utilization.

I got GPT-4o to help me figure out how to trigger it (I tried Claude 3.5 Sonnet first but it refused, saying "Doing so could potentially violate terms of service or raise security and privacy concerns"). Paste the following into your Chrome DevTools console on any Google site to see the result:

chrome.runtime.sendMessage(

"nkeimhogjdpnpccoofpliimaahmaaome",

{ method: "cpu.getInfo" },

(response) => {

console.log(JSON.stringify(response, null, 2));

},

);

I get back a response that starts like this:

{

"value": {

"archName": "arm64",

"features": [],

"modelName": "Apple M2 Max",

"numOfProcessors": 12,

"processors": [

{

"usage": {

"idle": 26890137,

"kernel": 5271531,

"total": 42525857,

"user": 10364189

}

}, ...

The code doesn't do anything on non-Google domains.

Luca says this - I'm inclined to agree:

This is interesting because it is a clear violation of the idea that browser vendors should not give preference to their websites over anyone elses.

Deactivating an API, one step at a time (via) Bruno Pedro describes a sensible approach for web API deprecation, using API keys to first block new users from using the old API, then track which existing users are depending on the old version and reaching out to them with a sunset period.

The only suggestion I'd add is to implement API brownouts - short periods of time where the deprecated API returns errors, several months before the final deprecation. This can help give users who don't read emails from you notice that they need to pay attention before their integration breaks entirely.

I've seen GitHub use this brownout technique successfully several times over the last few years - here's one example.

Jevons paradox (via) I've been thinking recently about how the demand for professional software engineers might be affected by the fact that LLMs are getting so good at producing working code, when prompted in the right way.

One possibility is that the price for writing code will fall, in a way that massively increases the demand for custom solutions - resulting in a greater demand for software engineers since the increased value they can provide makes it much easier to justify the expense of hiring them in the first place.

TIL about the related idea of the Jevons paradox, currently explained by Wikipedia like so:

[...] when technological progress increases the efficiency with which a resource is used (reducing the amount necessary for any one use), but the falling cost of use induces increases in demand enough that resource use is increased, rather than reduced.

Type click type by Brian Grubb. I just found out my favourite TV writer, Brian Grubb, is no longer with Uproxx and is now writing for his own newsletter - free on Sunday, paid-subscribers only on Friday. I hit subscribe so fast.

In addition to TV, Brian's coverage of heists - most recently Lego and an attempted heist of Graceland ("It really does look like a bunch of idiots tried to steal and auction off Graceland using Hotmail accounts and they almost got away with it") - is legendary.

I'd love to see more fun little Friday night shows too.

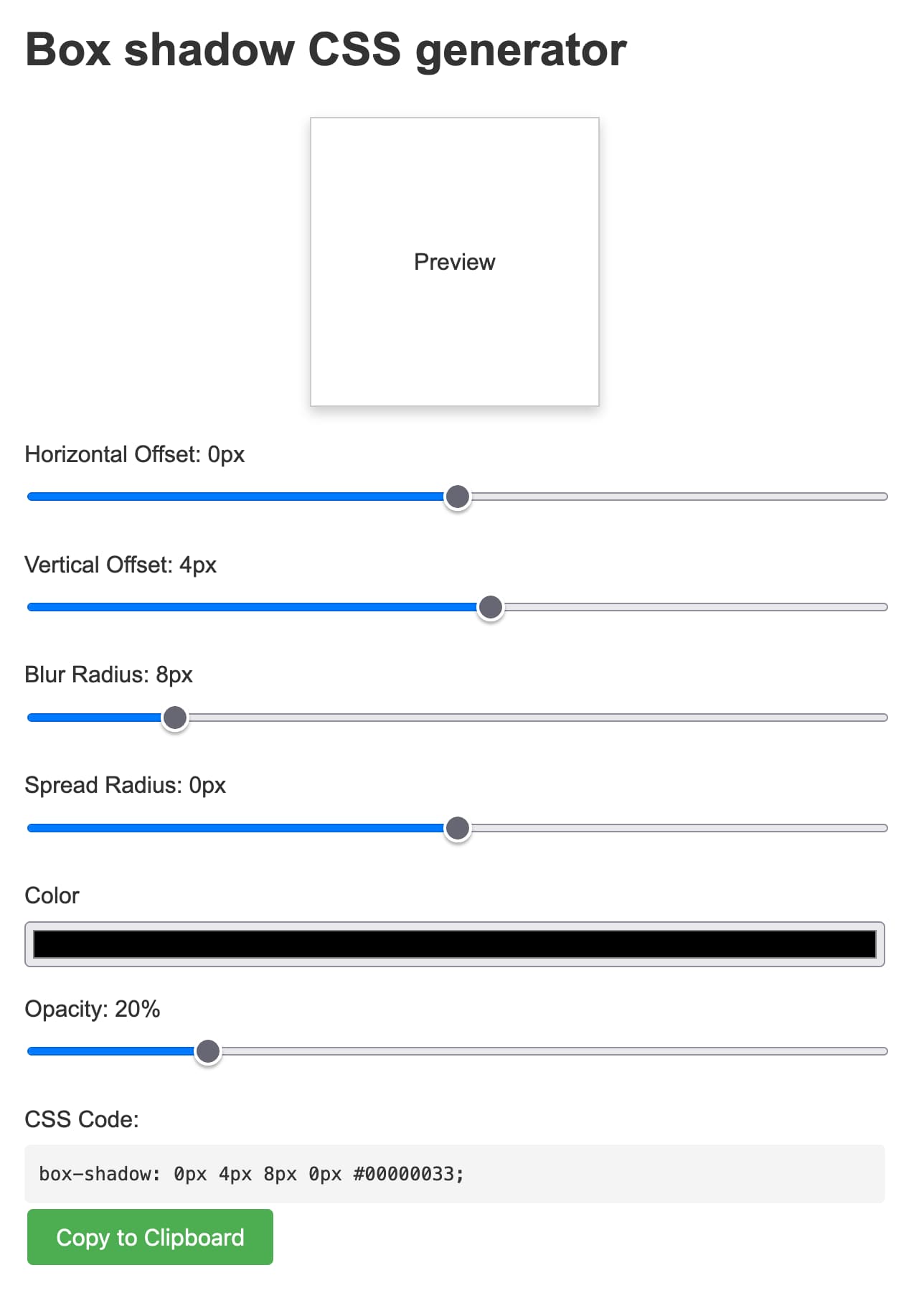

Box shadow CSS generator (via) Another example of a tiny personal tool I built using Claude 3.5 Sonnet and artifacts. In this case my prompt was:

CSS for a slight box shadow, build me a tool that helps me twiddle settings and preview them and copy and paste out the CSS

I changed my mind half way through typing the prompt and asked it for a custom tool, and it built me this!

Here's the full transcript - in a follow-up prompt I asked for help deploying it and it rewrote the tool to use <script type="text/babel"> and the babel-standalone library to add React JSX support directly in the browser - a bit of a hefty dependency (387KB compressed / 2.79MB total) but I think acceptable for this kind of one-off tool.

Being able to knock out tiny custom tools like this on a whim is a really interesting new capability. It's also a lot of fun!

Geomys, a blueprint for a sustainable open source maintenance firm (via) Filippo Valsorda has been working as a full-time professional open source maintainer for nearly two years now, accepting payments on retainer from companies that depend on his cryptography Go packages.

This has worked well enough that he's now expanding: Geomys (a genus of gophers) is a new company which adds two new "associate maintainers" and an administrative director, covering more projects and providing clients with access to more expertise.

Filipino describes the model like this:

If you’re betting your business on a critical open source technology, you

- want it to be sustainably and predictably maintained; and

- need occasional access to expertise that would be blisteringly expensive to acquire and retain.

Getting maintainers on retainer solves both problems for a fraction of the cost of a fully-loaded full-time engineer. From the maintainers’ point of view, it’s steady income to keep doing what they do best, and to join one more Slack Connect channel to answer high-leverage questions. It’s a great deal for both sides.

For more on this model, watch Filippo's FOSDEM talk from earlier this year.

Reasons to use your shell’s job control.

Julia Evans summarizes an informal survey of useful things you can do with shell job control features - fg, bg, Ctrl+Z and the like. Running tcdump in the background so you can see its output merged in with calls to curl is a neat trick.

Home-Cooked Software and Barefoot Developers. I really enjoyed this talk by Maggie Appleton from this year's Local-first Conference in Berlin.

For the last ~year I've been keeping a close eye on how language models capabilities meaningfully change the speed, ease, and accessibility of software development. The slightly bold theory I put forward in this talk is that we're on a verge of a golden age of local, home-cooked software and a new kind of developer – what I've called the barefoot developer.

It's a great talk, and the design of the slides is outstanding.

It reminded me of Robin Sloan's An app can be a home-cooked meal, which Maggie references in the talk. Also relevant: this delightful recent Hacker News thread, Ask HN: Is there any software you only made for your own use but nobody else?

My favourite version of our weird new LLM future is one where the pool of people who can use computers to automate things in their life is massively expanded.

The other videos from the conference are worth checking out too.

interactive-feed (via) Sam Morris maintains this project which gathers interactive, graphic and data visualization stories from various newsrooms around the world and publishes them on Twitter, Mastodon and Bluesky.

It runs automatically using GitHub Actions, and gathers data using a number of different techniques - XML feeds, custom API integrations (for the NYT, Guardian and Washington Post) and in some cases by scraping index pages on news websites using CSS selectors and cheerio.

The data it collects is archived as JSON in the data/ directory of the repository.

UK Parliament election results, now with Datasette. The House of Commons Library maintains a website of UK parliamentary election results data, currently listing 2010 through 2019 and with 2024 results coming soon.

The site itself is a Rails and PostgreSQL app, but I was delighted to learn today that they're also running a Datasette instance with the election results data, linked to from their homepage!

The raw data is also available as CSV files in their GitHub repository. Here's their Datasette configuration, which includes a copy of their SQLite database.

Tracking Fireworks Impact on Fourth of July AQI

(via)

Danny Page ran shot-scraper once per minute (using cron) against this Purple Air map of the Bay Area and turned the captured screenshots into an animation using ffmpeg. The result shows the impact of 4th of July fireworks on air quality between 7pm and 7am.

jqjq: jq implementation of jq (via) 2,854 lines of jq that implements a full, working version of jq itself. “A great way to show that jq is a very expressive, capable and neat language!”

Exorcising us of the Primer (via) Andy Matuschak talks about the need for educational technologists to break free from the siren's call of "The Young Lady’s Illustrated Primer" - the universal interactive textbook described by Neal Stephenson in his novel The Diamond Age.

The Primer offers an incredibly compelling vision, and Andy uses fifteen years of his own experience exploring related ideas to pick it apart and highlight its flaws.

I want to exorcise myself of the Primer. I want to clearly delineate what makes its vision so compelling—what I want to carry in my heart as a creative fuel. But I also want to sharply clarify the lessons we shouldn’t take from the Primer, and what it simply ignores. Then I want to reconstitute all that into something new, a vision I can use to drive my work forward.

On the Primer's authoritarianism:

The Primer has an agenda. It is designed to instill a set of values and ideas, and while it’s supportive of Nell’s curiosities, those are “side quests” to its central structure. Each of the twelve “Lands Beyond” focuses on different topics, but they’re not specific to Nell, and Nell didn’t choose them. In fact, Nell doesn’t even know the Primer’s goals for her—she’s never told. Its goals are its own privileged secret. Nell is manipulated so completely by the Primer, for so much of her life, that it’s hard to determine whether she has meaningful goals or values, other than those the Primer’s creators have deemed “good for her”.

I'm also reminded of Stephenson's piece of advice to people who may have missed an important lesson from the novel:

Kids need to get answers from humans who love them.

Chrome Prompt Playground.

Google Chrome Canary is currently shipping an experimental on-device LLM, in the form of Gemini Nano. You can access it via the new window.ai API, after first enabling the "Prompt API for Gemini Nano" experiment in chrome://flags (and then waiting an indeterminate amount of time for the ~1.7GB model file to download - I eventually spotted it in ~/Library/Application Support/Google/Chrome Canary/OptGuideOnDeviceModel).

I got Claude 3.5 Sonnet to build me this playground interface for experimenting with the model. You can execute prompts, stream the responses and all previous prompts and responses are stored in localStorage.

Here's the full Sonnet transcript, and the final source code for the app.

The best documentation I've found for the new API is is explainers-by-googlers/prompt-api on GitHub.

gemma-2-27b-it-llamafile (via) Justine Tunney shipped llamafile packages of Google's new openly licensed (though definitely not open source) Gemma 2 27b model this morning.

I downloaded the gemma-2-27b-it.Q5_1.llamafile version (20.5GB) to my Mac, ran chmod 755 gemma-2-27b-it.Q5_1.llamafile and then ./gemma-2-27b-it.Q5_1.llamafile and now I'm trying it out through the llama.cpp default web UI in my browser. It works great.

It's a very capable model - currently sitting at position 12 on the LMSYS Arena making it the highest ranked open weights model - one position ahead of Llama-3-70b-Instruct and within striking distance of the GPT-4 class models.

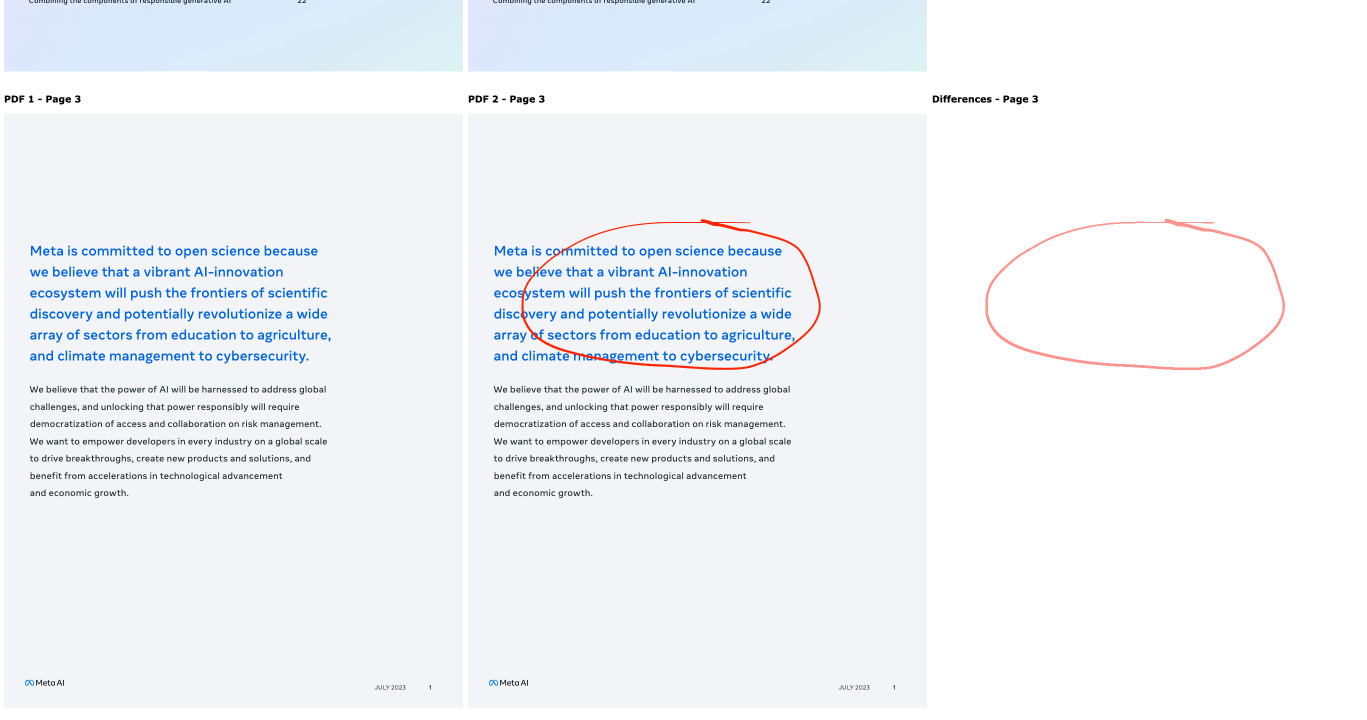

Compare PDFs. Inspired by this thread on Hacker News about the C++ diff-pdf tool I decided to see what it would take to produce a web-based PDF diff visualization tool using Claude 3.5 Sonnet.

It took two prompts:

Build a tool where I can drag and drop on two PDF files and it uses PDF.js to turn each of their pages into canvas elements and then displays those pages side by side with a third image that highlights any differences between them, if any differences exist

That give me a React app that didn't quite work, so I followed-up with this:

rewrite that code to not use React at all

Which gave me a working tool! You can see the full Claude transcript in this Gist. Here's a screenshot of the tool in action:

Being able to knock out little custom interactive web tools like this in a couple of minutes is so much fun.

Optimizing Large-Scale OpenStreetMap Data with SQLite (via) JT Archie describes his project to take 9GB of compressed OpenStreetMap protobufs data for the whole of the United States and load it into a queryable SQLite database.

OSM tags are key/value pairs. The trick used here for FTS-accelerated tag queries is really neat: build a SQLite FTS table containing the key/value pairs as space concatenated text, then run queries that look like this:

SELECT

id

FROM

entries e

JOIN search s ON s.rowid = e.id

WHERE

-- use FTS index to find subset of possible results

search MATCH 'amenity cafe'

-- use the subset to find exact matches

AND tags->>'amenity' = 'cafe';

JT ended up building a custom SQLite Go extension, SQLiteZSTD, to further accelerate things by supporting queries against read-only zstd compresses SQLite files. Apparently zstd has a feature that allows "compressed data to be stored so that subranges of the data can be efficiently decompressed without requiring the entire document to be decompressed", which works well with SQLite's page format.

Russell Keith-Magee: Build a cross-platform app with BeeWare. The session videos from PyCon US 2024 have started showing up on YouTube. So far just for the tutorials, which gave me a chance to catch up on the BeeWare project with this tutorial run by Russell Keith-Magee.

Here are the accompanying slides (PDF), or you can work through the official tutorial in the BeeWare documentation.

The tutorial did a great job of clarifying the difference between Briefcase and Toga, the two key components of the BeeWare ecosystem - each of which can be used independently of the other.

Briefcase solves packaging and installation: it allows a Python project to be packaged as a native application across macOS, Windows, iOS, Android and various flavours of Linux.

Toga is a toolkit for building cross-platform GUI applications in Python. A UI built using Toga will render with native widgets across all of those supported platforms, and experimental new modes also allow Toga apps to run as SPA web applications and as Rich-powered terminal tools (via toga-textual).

Russell is excellent at both designing and presenting tutorial-style workshops, and I made a bunch of mental notes on the structure of this one which I hope to apply to my own in the future.

Announcing the Ladybird Browser Initiative (via) Andreas Kling's Ladybird is a really exciting project: a from-scratch implementation of a web browser, initially built as part of the Serenity OS project, which aims to provide a completely independent, open source and fully standards compliant browser.

Last month Andreas forked Ladybird away from Serenity, recognizing that the potential impact of the browser project on its own was greater than as a component of that project. Crucially, Serenity OS avoids any outside code - splitting out Ladybird allows Ladybird to add dependencies like libjpeg and ffmpeg. The Ladybird June update video talks through some of the dependencies they've been able to add since making that decision.

The new Ladybird Browser Initiative puts some financial weight behind the project: it's a US 501(c)(3) non-profit initially funded with $1m from GitHub co-founder Chris Chris Wanstrath. The money is going on engineers: Andreas says:

We are 4 full-time engineers today, and we'll be adding another 3 in the near future

Here's a 2m28s video from Chris introducing the new foundation and talking about why this project is worth supporting.

A write-ahead log is not a universal part of durability (via) Phil Eaton uses pseudo code to provide a clear description of how write-ahead logs in transactional database systems work, useful for understanding the tradeoffs they make and the guarantees they can provided.

I particularly liked the pseudo code explanation of group commits, where clients block waiting for their commit to be acknowledged as part of a batch of writes flushed to disk.

The Super Effectiveness of Pokémon Embeddings Using Only Raw JSON and Images.

A deep dive into embeddings from Max Woolf, exploring 1,000 different Pokémon (loaded from PokéAPI using this epic GraphQL query) and then embedding the cleaned up JSON data using nomic-embed-text-v1.5 and the official Pokémon image representations using nomic-embed-vision-v1.5.

I hadn't seen nomic-embed-vision-v1.5 before: it brings multimodality to Nomic embeddings and operates in the same embedding space as nomic-embed-text-v1.5 which means you can use it to perform CLIP-style tricks comparing text and images. Here's their announcement from June 5th:

Together, Nomic Embed is the only unified embedding space that outperforms OpenAI CLIP and OpenAI Text Embedding 3 Small on multimodal and text tasks respectively.

Sadly the new vision weights are available under a non-commercial Creative Commons license (unlike the text weights which are Apache 2), so if you want to use the vision weights commercially you'll need to access them via Nomic's paid API.

Nomic do say this though:

As Nomic releases future models, we intend to re-license less recent models in our catalogue under the Apache-2.0 license.

Update 17th January 2025: Nomic Embed Vision 1.5 is now Apache 2.0 licensed.

marimo.app. The Marimo reactive notebook (previously) - a Python notebook that's effectively a cross between Jupyter and Observable - now also has a version that runs entirely in your browser using WebAssembly and Pyodide. Here's the documentation.

Accidental GPT-4o voice preview (via) Reddit user RozziTheCreator was one of a small group who were accidentally granted access to the new multimodal GPT-4o audio voice feature. They captured this video of it telling them a spooky story, complete with thunder sound effects added to the background and in a very realistic voice that clearly wasn't the one from the 4o demo that sounded similar to Scarlet Johansson.

OpenAI provided a comment for this Tom's Guide story confirming the accidental rollout so I don't think this is a faked video.

Serving a billion web requests with boring code (via) Bill Mill provides a deep retrospective from his work helping build a relaunch of the medicare.gov/plan-compare site.

It's a fascinating case study of the choose boring technology mantra put into action. The "boring" choices here were PostgreSQL, Go and React, all three of which are so widely used and understood at this point that you're very unlikely to stumble into surprises with them.

Key goals for the site were accessibility, in terms of users, devices and performance. Despite best efforts:

The result fell prey after a few years to a common failure mode of react apps, and became quite heavy and loaded somewhat slowly.

I've seen this pattern myself many times over, and I'd love to understand why. React itself isn't a particularly large dependency but somehow it always seems to lead to architectural bloat over time. Maybe that's more of an SPA thing than something that's specific to React.

Loads of other interesting details in here. The ETL details - where brand new read-only RDS databases were spun up every morning after a four hour build process - are particularly notable.