1,616 posts tagged “generative-ai”

Machine learning systems that can generate new content: text, images, audio, video and more.

2025

Getting DeepSeek-OCR working on an NVIDIA Spark via brute force using Claude Code

DeepSeek released a new model yesterday: DeepSeek-OCR, a 6.6GB model fine-tuned specifically for OCR. They released it as model weights that run using PyTorch and CUDA. I got it running on the NVIDIA Spark by having Claude Code effectively brute force the challenge of getting it working on that particular hardware.

[... 1,971 words]

TIL: Exploring OpenAI’s deep research API model o4-mini-deep-research. I landed a PR by Manuel Solorzano adding pricing information to llm-prices.com for OpenAI's o4-mini-deep-research and o3-deep-research models, which they released in June and document here.

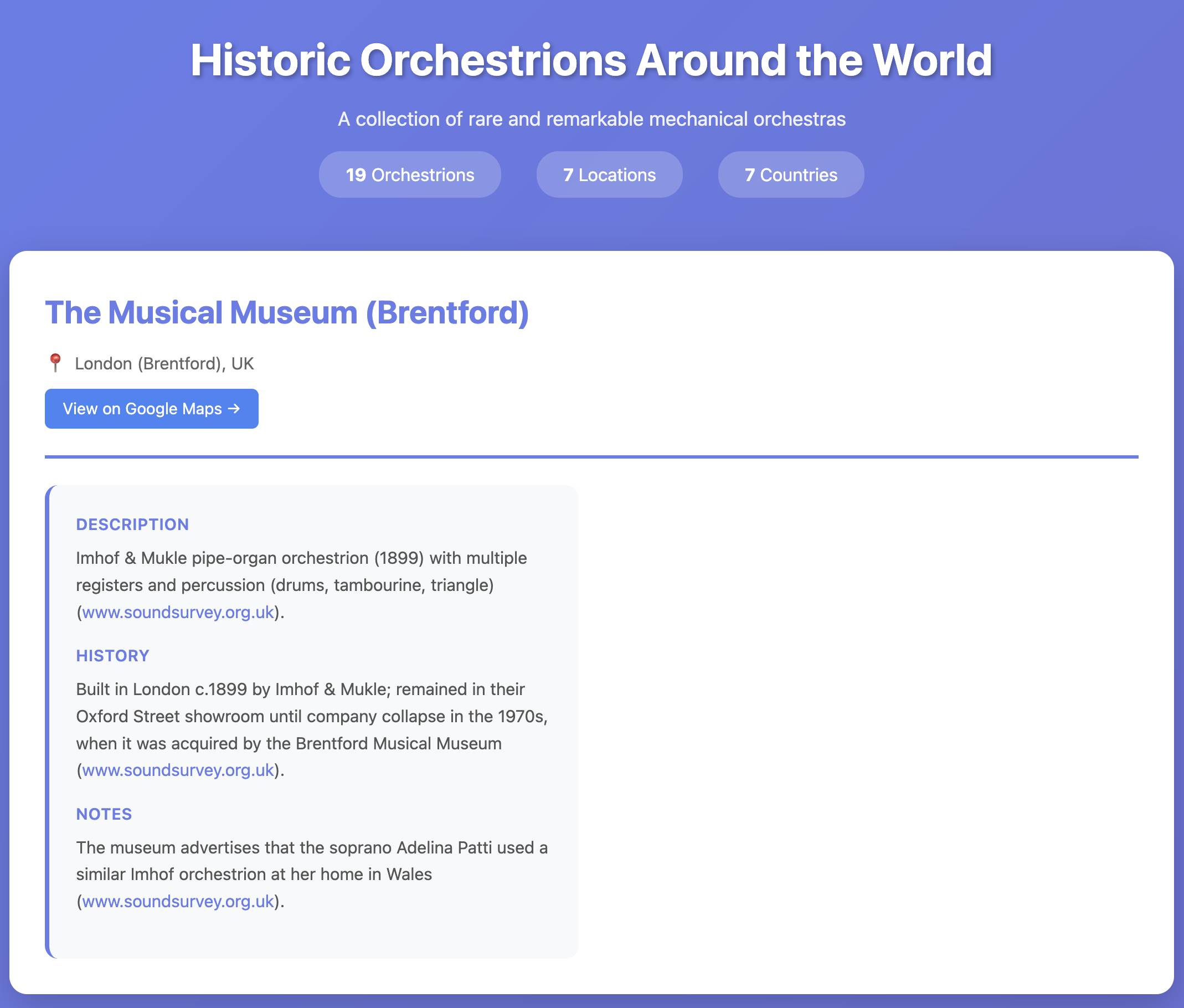

I realized I'd never tried these before, so I put o4-mini-deep-research through its paces researching locations of surviving orchestrions for me (I really like orchestrions).

The API cost me $1.10 and triggered a small flurry of extra vibe-coded tools, including this new tool for visualizing Responses API traces from deep research models and this mocked up page listing the 19 orchestrions it found (only one of which I have fact-checked myself).

Andrej Karpathy — AGI is still a decade away (via) Extremely high signal 2 hour 25 minute (!) conversation between Andrej Karpathy and Dwarkesh Patel.

It starts with Andrej's claim that "the year of agents" is actually more likely to take a decade. Seeing as I accepted 2025 as the year of agents just yesterday this instantly caught my attention!

It turns out Andrej is using a different definition of agents to the one that I prefer - emphasis mine:

When you’re talking about an agent, or what the labs have in mind and maybe what I have in mind as well, you should think of it almost like an employee or an intern that you would hire to work with you. For example, you work with some employees here. When would you prefer to have an agent like Claude or Codex do that work?

Currently, of course they can’t. What would it take for them to be able to do that? Why don’t you do it today? The reason you don’t do it today is because they just don’t work. They don’t have enough intelligence, they’re not multimodal enough, they can’t do computer use and all this stuff.

They don’t do a lot of the things you’ve alluded to earlier. They don’t have continual learning. You can’t just tell them something and they’ll remember it. They’re cognitively lacking and it’s just not working. It will take about a decade to work through all of those issues.

Yeah, continual learning human-replacement agents definitely aren't happening in 2025! Coding agents that are really good at running tools in the loop, on the other hand, are here already.

I loved this bit introducing an analogy of LLMs as ghosts or spirits, as opposed to having brains like animals or humans:

Brains just came from a very different process, and I’m very hesitant to take inspiration from it because we’re not actually running that process. In my post, I said we’re not building animals. We’re building ghosts or spirits or whatever people want to call it, because we’re not doing training by evolution. We’re doing training by imitation of humans and the data that they’ve put on the Internet.

You end up with these ethereal spirit entities because they’re fully digital and they’re mimicking humans. It’s a different kind of intelligence. If you imagine a space of intelligences, we’re starting off at a different point almost. We’re not really building animals. But it’s also possible to make them a bit more animal-like over time, and I think we should be doing that.

The post Andrej mentions is Animals vs Ghosts on his blog.

Dwarkesh asked Andrej about this tweet where he said that Claude Code and Codex CLI "didn't work well enough at all and net unhelpful" for his nanochat project. Andrej responded:

[...] So the agents are pretty good, for example, if you’re doing boilerplate stuff. Boilerplate code that’s just copy-paste stuff, they’re very good at that. They’re very good at stuff that occurs very often on the Internet because there are lots of examples of it in the training sets of these models. There are features of things where the models will do very well.

I would say nanochat is not an example of those because it’s a fairly unique repository. There’s not that much code in the way that I’ve structured it. It’s not boilerplate code. It’s intellectually intense code almost, and everything has to be very precisely arranged. The models have so many cognitive deficits. One example, they kept misunderstanding the code because they have too much memory from all the typical ways of doing things on the Internet that I just wasn’t adopting.

Update: Here's an essay length tweet from Andrej clarifying a whole bunch of the things he talked about on the podcast.

Skills actually came out of a prototype I built demonstrating that Claude Code is a general-purpose agent :-)

It was a natural conclusion once we realized that bash + filesystem were all we needed

— Barry Zhang, Anthropic

Claude Skills are awesome, maybe a bigger deal than MCP

Anthropic this morning introduced Claude Skills, a new pattern for making new abilities available to their models:

[... 1,864 words]

NVIDIA DGX Spark + Apple Mac Studio = 4x Faster LLM Inference with EXO 1.0 (via) EXO Labs wired a 256GB M3 Ultra Mac Studio up to an NVIDIA DGX Spark and got a 2.8x performance boost serving Llama-3.1 8B (FP16) with an 8,192 token prompt.

Their detailed explanation taught me a lot about LLM performance.

There are two key steps in executing a prompt. The first is the prefill phase that reads the incoming prompt and builds a KV cache for each of the transformer layers in the model. This is compute-bound as it needs to process every token in the input and perform large matrix multiplications across all of the layers to initialize the model's internal state.

Performance in the prefill stage influences TTFT - time‑to‑first‑token.

The second step is the decode phase, which generates the output one token at a time. This part is limited by memory bandwidth - there's less arithmetic, but each token needs to consider the entire KV cache.

Decode performance influences TPS - tokens per second.

EXO noted that the Spark has 100 TFLOPS but only 273GB/s of memory bandwidth, making it a better fit for prefill. The M3 Ultra has 26 TFLOPS but 819GB/s of memory bandwidth, making it ideal for the decode phase.

They run prefill on the Spark, streaming the KV cache to the Mac over 10Gb Ethernet. They can start streaming earlier layers while the later layers are still being calculated. Then the Mac runs the decode phase, returning tokens faster than if the Spark had run the full process end-to-end.
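A rough back-of-envelope sketch shows why that split makes sense. It plugs the hardware numbers quoted above into two simplifying assumptions that are not from EXO's write-up: prefill costs about 2 FLOPs per parameter per prompt token at peak throughput, and each decoded token has to read all of the FP16 weights from memory once. Real-world numbers will be worse on both counts.

```python
# Back-of-envelope estimate only; both formulas are simplifying assumptions.
PARAMS = 8e9           # Llama-3.1 8B
BYTES_PER_PARAM = 2    # FP16
PROMPT_TOKENS = 8192

# Hardware figures as quoted in the post.
DEVICES = {
    "DGX Spark": {"tflops": 100, "bandwidth_gb_s": 273},
    "M3 Ultra": {"tflops": 26, "bandwidth_gb_s": 819},
}


def prefill_seconds(tflops):
    # Assumption: ~2 FLOPs per parameter per prompt token, at peak compute.
    return 2 * PARAMS * PROMPT_TOKENS / (tflops * 1e12)


def decode_tokens_per_second(bandwidth_gb_s):
    # Assumption: every generated token reads all the weights once
    # (ignores KV cache reads, so this is an upper bound).
    return bandwidth_gb_s * 1e9 / (PARAMS * BYTES_PER_PARAM)


estimates = {
    name: {
        "prefill_s": prefill_seconds(spec["tflops"]),
        "decode_tps": decode_tokens_per_second(spec["bandwidth_gb_s"]),
    }
    for name, spec in DEVICES.items()
}

for name, result in estimates.items():
    print(f"{name}: prefill ~{result['prefill_s']:.1f}s, "
          f"decode up to ~{result['decode_tps']:.0f} tokens/s")
```

Under these assumptions the Spark wins prefill by roughly the ratio of the TFLOPS figures and the Mac wins decode by roughly the ratio of the memory bandwidths, which is the asymmetry EXO is exploiting.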

Pro se litigants [people representing themselves in court without a lawyer] account for the majority of the cases in the United States where a party submitted a court filing containing AI hallucinations. In a country where legal representation is unaffordable for most people, it is no wonder that pro se litigants are depending on free or low-cost AI tools. But it is a scandal that so many have been betrayed by them, to the detriment of the cases they are litigating all on their own.

— Riana Pfefferkorn, analyzing the AI Hallucination Cases database for CIS at Stanford Law

Last year the most useful exercise for getting a feel for how good LLMs were at writing code was vibe coding (before that name had even been coined) - seeing if you could create a useful small application through prompting alone.

Today I think there's a new, more ambitious and significantly more intimidating exercise: spend a day working on real production code through prompting alone, making no manual edits yourself.

This doesn't mean you can't control exactly what goes into each file - you can even tell the model "update line 15 to use this instead" if you have to - but it's a great way to get more of a feel for how well the latest coding agents can wield their edit tools.

While Sonnet 4.5 remains the default [in Claude Code], Haiku 4.5 now powers the Explore subagent which can rapidly gather context on your codebase to build apps even faster.

You can select Haiku 4.5 to be your default model in /model. When selected, you’ll automatically use Sonnet 4.5 in Plan mode and Haiku 4.5 for execution for smarter plans and faster results.

— Catherine Wu, Claude Code PM, Anthropic

Introducing Claude Haiku 4.5 (via) Anthropic released Claude Haiku 4.5 today, the cheapest member of the Claude 4.5 family that started with Sonnet 4.5 a couple of weeks ago.

It's priced at $1/million input tokens and $5/million output tokens, slightly more expensive than Haiku 3.5 ($0.80/$4) and a lot more expensive than the original Claude 3 Haiku ($0.25/$1.25), both of which remain available at those prices.

It's a third of the price of Sonnet 4 and Sonnet 4.5 (both $3/$15) which is notable because Anthropic's benchmarks put it in a similar space to that older Sonnet 4 model. As they put it:

What was recently at the frontier is now cheaper and faster. Five months ago, Claude Sonnet 4 was a state-of-the-art model. Today, Claude Haiku 4.5 gives you similar levels of coding performance but at one-third the cost and more than twice the speed.

I've been hoping to see Anthropic release a fast, inexpensive model that's price competitive with the cheapest models from OpenAI and Gemini, currently $0.05/$0.40 (GPT-5-Nano) and $0.075/$0.30 (Gemini 2.0 Flash Lite). Haiku 4.5 certainly isn't that, it looks like they're continuing to focus squarely on the "great at code" part of the market.

The new Haiku is the first Haiku model to support reasoning. It sports a 200,000 token context window, 64,000 maximum output (up from just 8,192 for Haiku 3.5) and a "reliable knowledge cutoff" of February 2025, one month later than the January 2025 date for Sonnet 4 and 4.5 and Opus 4 and 4.1.

Something that caught my eye in the accompanying system card was this note about context length:

For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much context-window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic “laziness” (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).

I've added the new price to llm-prices.com, released llm-anthropic 0.20 with the new model and updated my Haiku-from-your-webcam demo (source) to use Haiku 4.5 as well.

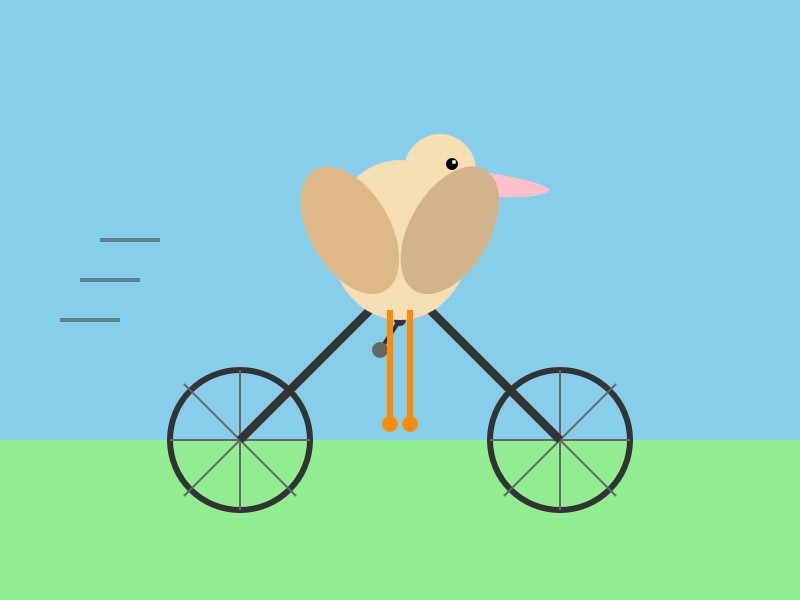

Here's llm -m claude-haiku-4.5 'Generate an SVG of a pelican riding a bicycle' (transcript).

18 input tokens and 1513 output tokens = 0.7583 cents.
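That figure can be reproduced with a few lines of Python. This is a minimal sketch of the arithmetic, using the per-million-token prices quoted above; the `cost_in_cents` helper is a name made up for this illustration, and the Sonnet comparison assumes the same token counts, which a different model would not necessarily produce.

```python
def cost_in_cents(input_tokens, output_tokens, input_price, output_price):
    """Prices are in dollars per million tokens."""
    dollars = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    return dollars * 100


# Haiku 4.5: $1/million input, $5/million output
haiku_cost = cost_in_cents(18, 1513, 1.00, 5.00)
print(f"Haiku 4.5: {haiku_cost:.4f} cents")

# Hypothetical comparison: identical token counts at Sonnet 4.5 prices ($3/$15)
sonnet_cost = cost_in_cents(18, 1513, 3.00, 15.00)
print(f"Sonnet 4.5: {sonnet_cost:.4f} cents")
```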

Previous system cards have reported results on an expanded version of our earlier agentic misalignment evaluation suite: three families of exotic scenarios meant to elicit the model to commit blackmail, attempt a murder, and frame someone for financial crimes. We choose not to report full results here because, similarly to Claude Sonnet 4.5, Claude Haiku 4.5 showed many clear examples of verbalized evaluation awareness on all three of the scenarios tested in this suite. Since the suite only consisted of many similar variants of three core scenarios, we expect that the model maintained high unverbalized awareness across the board, and we do not trust it to be representative of behavior in the real extreme situations the suite is meant to emulate.

NVIDIA DGX Spark: great hardware, early days for the ecosystem

NVIDIA sent me a preview unit of their new DGX Spark desktop “AI supercomputer”. I’ve never had hardware to review before! You can consider this my first ever sponsored post if you like, but they did not pay me any cash and aside from an embargo date they did not request (nor would I grant) any editorial input into what I write about the device.

[... 1,846 words]

Just Talk To It—the no-bs Way of Agentic Engineering. Peter Steinberger's long, detailed description of his current process for using Codex CLI and GPT-5 Codex. This is information dense and full of actionable tips, plus plenty of strong opinions about the differences between Claude 4.5 and GPT-5:

While Claude reacts well to 🚨 SCREAMING ALL-CAPS 🚨 commands that threaten it that it will imply ultimate failure and 100 kittens will die if it runs command X, that freaks out GPT-5. (Rightfully so). So drop all of that and just use words like a human.

Peter is a heavy user of parallel agents:

I've completely moved to codex cli as daily driver. I run between 3-8 in parallel in a 3x3 terminal grid, most of them in the same folder, some experiments go in separate folders. I experimented with worktrees, PRs but always revert back to this setup as it gets stuff done the fastest.

He shares my preference for CLI utilities over MCPs:

I can just refer to a cli by name. I don't need any explanation in my agents file. The agent will try $randomcrap on the first call, the cli will present the help menu, context now has full info how this works and from now on we good. I don't have to pay a price for any tools, unlike MCPs which are a constant cost and garbage in my context. Use GitHub's MCP and see 23k tokens gone. Heck, they did make it better because it was almost 50.000 tokens when it first launched. Or use the gh cli which has basically the same feature set, models already know how to use it, and pay zero context tax.

It's worth reading the section on why he abandoned spec driven development in full.

nanochat (via) Really interesting new project from Andrej Karpathy, described at length in this discussion post.

It provides a full ChatGPT-style LLM, including training, inference and a web UI, that can be trained for as little as $100:

This repo is a full-stack implementation of an LLM like ChatGPT in a single, clean, minimal, hackable, dependency-lite codebase.

It's around 8,000 lines of code, mostly Python (using PyTorch) plus a little bit of Rust for training the tokenizer.

Andrej suggests renting an 8XH100 NVIDIA node for around $24/hour to train the model. 4 hours (~$100) is enough to get a model that can hold a conversation - almost coherent example here. Run it for 12 hours and you get something that slightly outperforms GPT-2. I'm looking forward to hearing results from longer training runs!

The resulting model is ~561M parameters, so it should run on almost anything. I've run a 4B model on my iPhone, 561M should easily fit on even an inexpensive Raspberry Pi.

The model defaults to training on ~24GB from karpathy/fineweb-edu-100b-shuffle derived from FineWeb-Edu, and then midtrains on 568K examples from SmolTalk (460K), MMLU auxiliary train (100K), and GSM8K (8K), followed by supervised finetuning on 21.4K examples from ARC-Easy (2.3K), ARC-Challenge (1.1K), GSM8K (8K), and SmolTalk (10K).

Here's the code for the web server, which is fronted by this pleasantly succinct vanilla HTML+JavaScript frontend.

Update: Sam Dobson pushed a build of the model to sdobson/nanochat on Hugging Face. It's designed to run on CUDA but I pointed Claude Code at a checkout and had it hack around until it figured out how to run it on CPU on macOS, which eventually resulted in this script which I've published as a Gist. You should be able to try out the model using uv like this:

cd /tmp
git clone https://huggingface.co/sdobson/nanochat
uv run https://gist.githubusercontent.com/simonw/912623bf00d6c13cc0211508969a100a/raw/80f79c6a6f1e1b5d4485368ef3ddafa5ce853131/generate_cpu.py \
  --model-dir /tmp/nanochat \
  --prompt "Tell me about dogs."

I got this (truncated because it ran out of tokens):

I'm delighted to share my passion for dogs with you. As a veterinary doctor, I've had the privilege of helping many pet owners care for their furry friends. There's something special about training, about being a part of their lives, and about seeing their faces light up when they see their favorite treats or toys.

I've had the chance to work with over 1,000 dogs, and I must say, it's a rewarding experience. The bond between owner and pet

Claude Code includes the ability to run sub-agents, where a separate agent loop with a fresh token context is dispatched to achieve a goal and report back when it's done. I wrote a bit about how these work in June when I traced Claude Code's activity by intercepting its API calls.

I recently learned that you can deliberately trigger Claude Code to use sub-agents just by telling it to do so!

Here's an example. I ran this using Claude Code against a fresh checkout of simonw/datasette (commit ec38ad37689f3c14d307770977a17aed5efc5cb9), with no additional custom instructions:

Write markdown documentation for the context passed to each of the templates in this project. Use sub-agents.

Here's a snippet of the output:

⏺ I'll help you write markdown documentation for the context passed to each template in this project. Let me start by exploring the codebase to understand the template structure and then use sub-agents to document them comprehensively.

⏺ Search(pattern: "**/*.html")
  ⎿ Found 47 files (ctrl+o to expand)

⏺ Search(pattern: "render.*template|render_template")
  ⎿ Found 16 files (ctrl+o to expand)

[...]

⏺ Now let me launch sub-agents to analyze different template contexts in parallel:

⏺ Task(Document index template context)
  ⎿ Done (12 tool uses · 86.4k tokens · 1m 13s)

⏺ Task(Document database template context)
  ⎿ Done (15 tool uses · 55.4k tokens · 1m 41s)

⏺ Task(Document table template context)
  ⎿ Done (14 tool uses · 85.2k tokens · 1m 44s)

⏺ Task(Document query/row template contexts)
  ⎿ Done (17 tool uses · 78.7k tokens · 1m 55s)

⏺ Task(Document special template contexts)
  ⎿ Done (26 tool uses · 116.1k tokens · 1m 50s)

It churned away for a couple of minutes (those sub-agents execute in parallel, another benefit of this pattern) and made me this template_contexts.md markdown file. It's very comprehensive.
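The shape of that pattern can be illustrated with a toy Python sketch. This is emphatically not how Claude Code is implemented: `run_subagent` and `dispatch` are hypothetical names, and the "agent loop" is a stand-in that makes no model calls. It only shows the two properties described above, which are that each sub-agent starts from a fresh context and that the parent gets back a short result rather than the full working context, with the tasks running in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(task):
    # Hypothetical stand-in for an agent loop. Each sub-agent starts with a
    # fresh context containing only its own task, not the parent's history.
    context = [f"Task: {task}"]
    context.append(f"(pretend tool output for {task!r})")
    # Only a short summary is reported back; the context is discarded.
    return f"Done: {task}"


def dispatch(tasks):
    # Run the sub-agents in parallel; pool.map returns results in task order.
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        return list(pool.map(run_subagent, tasks))


tasks = [
    "Document index template context",
    "Document database template context",
    "Document table template context",
]
summaries = dispatch(tasks)
for summary in summaries:
    print(summary)
```

The token counts in the transcript above hint at why this matters: each task burned through tens of thousands of tokens that never had to land in the parent agent's context.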

Vibing a Non-Trivial Ghostty Feature (via) Mitchell Hashimoto provides a comprehensive answer to the frequent demand for a detailed description of shipping a non-trivial production feature to an existing project using AI-assistance. In this case it's a slick unobtrusive auto-update UI for his Ghostty terminal emulator, written in Swift.

Mitchell shares full transcripts of the 16 coding sessions he carried out using Amp Code across 2 days and around 8 hours of computer time, at a token cost of $15.98.

Amp has the nicest shared transcript feature of any of the coding agent tools, as seen in this example. I'd love to see Claude Code and Codex CLI and Gemini CLI and friends imitate this.

There are plenty of useful tips in here. I like this note about the importance of a cleanup step:

The cleanup step is really important. To cleanup effectively you have to have a pretty good understanding of the code, so this forces me to not blindly accept AI-written code. Subsequently, better organized and documented code helps future agentic sessions perform better.

I sometimes tongue-in-cheek refer to this as the "anti-slop session".

And this on how sometimes you can write manual code in a way that puts the agent on the right track:

I spent some time manually restructured the view model. This involved switching to a tagged union rather than the struct with a bunch of optionals. I renamed some types, moved stuff around.

I knew from experience that this small bit of manual work in the middle would set the agents up for success in future sessions for both the frontend and backend. After completing it, I continued with a marathon set of cleanup sessions.

Here's one of those refactoring prompts:

Turn each @macos/Sources/Features/Update/UpdatePopoverView.swift case into a dedicated fileprivate Swift view that takes the typed value as its parameter so that we can remove the guards.

Mitchell advises ending every session with a prompt like this one, asking the agent about any obvious omissions:

Are there any other improvements you can see to be made with the @macos/Sources/Features/Update feature? Don't write any code. Consult the oracle. Consider parts of the code that can also get more unit tests added.

("Consult the oracle" is an Amp-specific pattern for running a task through a more expensive, more capable model.)

Is this all worthwhile? Mitchell thinks so:

Many people on the internet argue whether AI enables you to work faster or not. In this case, I think I shipped this faster than I would have if I had done it all myself, in particular because iterating on minor SwiftUI styling is so tedious and time consuming for me personally and AI does it so well.

I think the faster/slower argument for me personally is missing the thing I like the most: the AI can work for me while I step away to do other things.

Here's the resulting PR, which touches 21 files.

I'm beginning to suspect that a key skill in working effectively with coding agents is developing an intuition for when you don't need to closely review every line of code they produce. This feels deeply uncomfortable!

simonw/claude-skills. One of the tips I picked up from Jesse Vincent's Claude Code Superpowers post (previously) was this:

Skills are what give your agents Superpowers.

The first time they really popped up on my radar was a few weeks ago when Anthropic rolled out improved Office document creation. When the feature rolled out, I went poking around a bit -- I asked Claude to tell me all about its new skills. And it was only too happy to dish.

In that transcript Jesse asks how the skill system works and Claude replies:

Looking at the skills system, here's how it works:

Current Skills (in /mnt/skills/public/):

- docx - Word document handling

- pdf - PDF processing and form filling

- pptx - PowerPoint presentations

- xlsx - Excel spreadsheets

This looks like it's part of Claude's brand new Code Interpreter feature! I wrote about that extensively last month, but I missed that there was a /mnt/skills/public/ folder full of fascinating implementation details.

So I fired up a fresh Claude instance (fun fact: Code Interpreter also works in the Claude iOS app now, which it didn't when they first launched) and prompted:

Create a zip file of everything in your /mnt/skills folder

This worked, and gave me a .zip to download. You can run the prompt yourself here, though you'll need to enable the new feature first.

I've pushed the contents of that zip to my new simonw/claude-skills GitHub repo.

So now you can see the prompts Anthropic wrote to enable the creation and manipulation of the following files in their Claude consumer applications: docx (Word), pdf, pptx (PowerPoint) and xlsx (Excel).

In each case the prompts spell out detailed instructions for manipulating those file types using Python, using libraries that come pre-installed on Claude's containers.

Skills are more than just prompts though: the repository also includes dozens of pre-written Python scripts for performing common operations.

pdf/scripts/fill_fillable_fields.py for example is a custom CLI tool that uses pypdf to find and then fill in a bunch of PDF form fields, specified as JSON, then render out the resulting combined PDF.

This is a really sophisticated set of tools for document manipulation, and I love that Anthropic have made those visible - presumably deliberately - to users of Claude who know how to ask for them.

Superpowers: How I’m using coding agents in October 2025. A follow-up to Jesse Vincent's post about September, but this is a really significant piece in its own right.

Jesse is one of the most creative users of coding agents (Claude Code in particular) that I know. He's put a great amount of work into evolving an effective process for working with them, encouraging red/green TDD (watch the test fail first), planning steps, self-updating memory notes and even implementing a feelings journal ("I feel engaged and curious about this project" - Claude).

Claude Code just launched plugins, and Jesse is celebrating by wrapping up a whole host of his accumulated tricks as a new plugin called Superpowers. You can add it to your Claude Code like this:

/plugin marketplace add obra/superpowers-marketplace

/plugin install superpowers@superpowers-marketplace

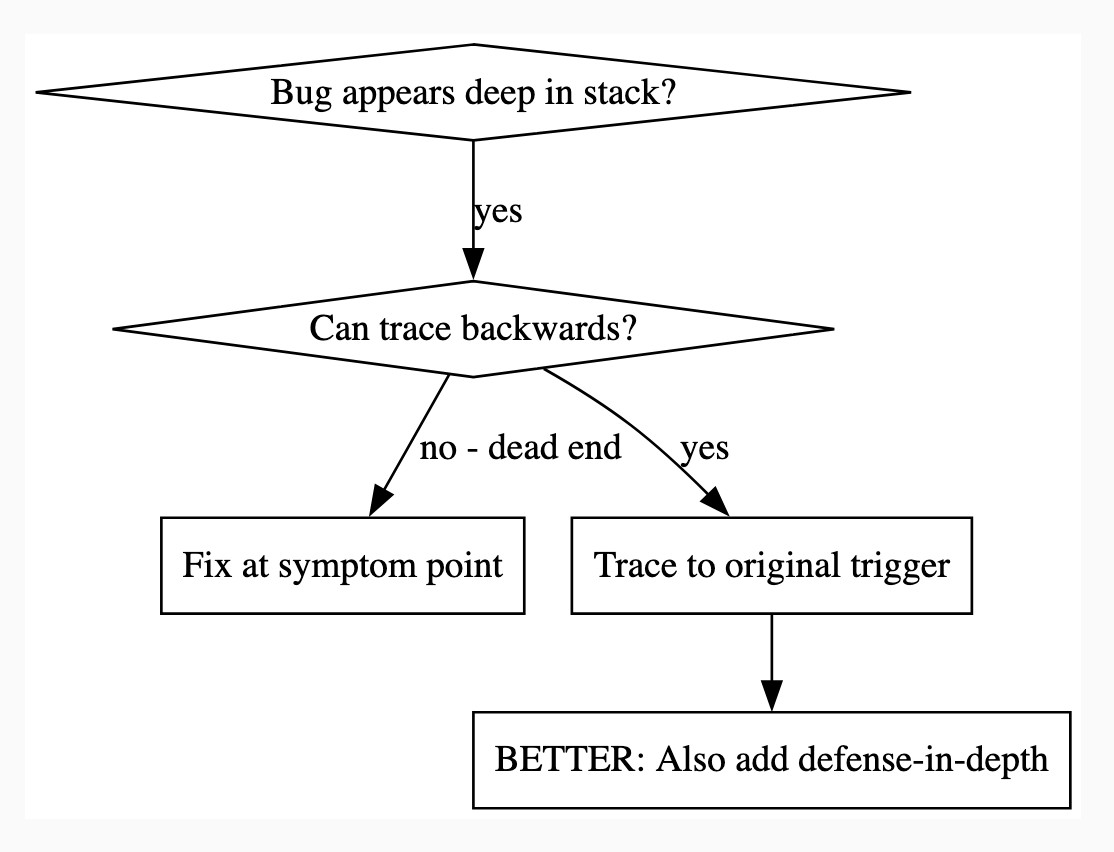

There's a lot in here! It's worth spending some time browsing the repository - here's just one fun example, in skills/debugging/root-cause-tracing/SKILL.md:

---
name: Root Cause Tracing
description: Systematically trace bugs backward through call stack to find original trigger
when_to_use: Bug appears deep in call stack but you need to find where it originates
version: 1.0.0
languages: all
---

Overview

Bugs often manifest deep in the call stack (git init in wrong directory, file created in wrong location, database opened with wrong path). Your instinct is to fix where the error appears, but that's treating a symptom.

Core principle: Trace backward through the call chain until you find the original trigger, then fix at the source.

When to Use

digraph when_to_use {
  "Bug appears deep in stack?" [shape=diamond];
  "Can trace backwards?" [shape=diamond];
  "Fix at symptom point" [shape=box];
  "Trace to original trigger" [shape=box];
  "BETTER: Also add defense-in-depth" [shape=box];

  "Bug appears deep in stack?" -> "Can trace backwards?" [label="yes"];
  "Can trace backwards?" -> "Trace to original trigger" [label="yes"];
  "Can trace backwards?" -> "Fix at symptom point" [label="no - dead end"];
  "Trace to original trigger" -> "BETTER: Also add defense-in-depth";
}

[...]

This one is particularly fun because it then includes a Graphviz DOT graph illustrating the process - it turns out Claude can interpret those as workflow instructions just fine, and Jesse has been wildly experimenting with them.
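To make the "graph as workflow instructions" idea concrete, here's a minimal Python sketch that encodes the edges from that DOT graph as a dictionary and walks them. The `EDGES` structure and `walk` function are made up for this illustration; nothing like this ships in the Superpowers plugin, where the model reads the DOT source directly.

```python
# Edges copied from the DOT graph: node -> {edge label: next node}.
# An empty label stands for an unlabelled edge.
EDGES = {
    "Bug appears deep in stack?": {"yes": "Can trace backwards?"},
    "Can trace backwards?": {
        "yes": "Trace to original trigger",
        "no - dead end": "Fix at symptom point",
    },
    "Trace to original trigger": {"": "BETTER: Also add defense-in-depth"},
}


def walk(start, answers):
    """Follow edges from start, choosing branches using the answers dict."""
    path = [start]
    node = start
    while node in EDGES:
        branches = EDGES[node]
        label = answers.get(node, "")
        if label not in branches:
            break  # no matching edge, so stop here
        node = branches[label]
        path.append(node)
    return path


traceable = walk("Bug appears deep in stack?", {
    "Bug appears deep in stack?": "yes",
    "Can trace backwards?": "yes",
})
dead_end = walk("Bug appears deep in stack?", {
    "Bug appears deep in stack?": "yes",
    "Can trace backwards?": "no - dead end",
})
print(" -> ".join(traceable))
print(" -> ".join(dead_end))
```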

I vibe-coded up a quick URL-based DOT visualizer, here's that one rendered:

There is so much to learn about putting these tools to work in the most effective way possible. Jesse is way ahead of the curve, so it's absolutely worth spending some time exploring what he's shared so far.

And if you're worried about filling up your context with a bunch of extra stuff, here's a reassuring note from Jesse:

The core of it is VERY token light. It pulls in one doc of fewer than 2k tokens. As it needs bits of the process, it runs a shell script to search for them. The long end to end chat for the planning and implementation process for that todo list app was 100k tokens.

It uses subagents to manage token-heavy stuff, including all the actual implementation.

(Jesse's post also tipped me off about Claude's /mnt/skills/public folder, see my notes here.)

Video of GPT-OSS 20B running on a phone. GPT-OSS 20B is a very good model. At launch OpenAI claimed:

The gpt-oss-20b model delivers similar results to OpenAI o3‑mini on common benchmarks and can run on edge devices with just 16 GB of memory

Nexa AI just posted a video on Twitter demonstrating exactly that: the full GPT-OSS 20B running on a Snapdragon Gen 5 phone in their Nexa Studio Android app. It requires at least 16GB of RAM, and benefits from Snapdragon using a similar trick to Apple Silicon where the system RAM is available to both the CPU and the GPU.

The latest iPhone 17 Pro Max is still stuck at 12GB of RAM, presumably not enough to run this same model.

I get a feeling that working with multiple AI agents is something that comes VERY natural to most senior+ engineers or tech lead who worked at a large company

You already got used to overseeing parallel work (the goto code reviewer!) + making progress with small chunks of work... because your day has been a series of nonstop interactions, so you had to figure out how to do deep work in small chunks that could have been interrupted

Claude can write complete Datasette plugins now

This isn’t necessarily surprising, but it’s worth noting anyway. Claude Sonnet 4.5 is capable of building a full Datasette plugin now.

[... 1,296 words]

The cognitive debt of LLM-laden coding extends beyond disengagement of our craft. We’ve all heard the stories. Hyped up, vibed up, slop-jockeys with attention spans shorter than the framework-hopping JavaScript devs of the early 2010s, sling their sludge in pull requests and design docs, discouraging collaboration and disrupting teams. Code reviewing coworkers are rapidly losing their minds as they come to the crushing realization that they are now the first layer of quality control instead of one of the last. Asked to review; forced to pick apart. Calling out freshly added functions that are never called, hallucinated library additions, and obvious runtime or compilation errors. All while the author—who clearly only skimmed their “own” code—is taking no responsibility, going “whoopsie, Claude wrote that. Silly AI, ha-ha.”

— Simon Højberg, The Programmer Identity Crisis

Vibe engineering

I feel like vibe coding is pretty well established now as covering the fast, loose and irresponsible way of building software with AI—entirely prompt-driven, and with no attention paid to how the code actually works. This leaves us with a terminology gap: what should we call the other end of the spectrum, where seasoned professionals accelerate their work with LLMs while staying proudly and confidently accountable for the software they produce?

[... 1,313 words]

Deloitte to pay money back to Albanese government after using AI in $440,000 report. Ouch:

Deloitte will provide a partial refund to the federal government over a $440,000 report that contained several errors, after admitting it used generative artificial intelligence to help produce it.

(I was initially confused by the "Albanese government" reference in the headline since this is a story about the Australian federal government. That's because the current Australian Prime Minister is Anthony Albanese.)

Here's the page for the report. The PDF now includes this note:

This Report was updated on 26 September 2025 and replaces the Report dated 4 July 2025. The Report has been updated to correct those citations and reference list entries which contained errors in the previously issued version, to amend the summary of the Amato proceeding which contained errors, and to make revisions to improve clarity and readability. The updates made in no way impact or affect the substantive content, findings and recommendations in the Report.

gpt-image-1-mini.

OpenAI released a new image model today: gpt-image-1-mini, which they describe as "A smaller image generation model that’s 80% less expensive than the large model."

They released it very quietly - I didn't hear about this in the DevDay keynote but I later spotted it on the DevDay 2025 announcements page.

It wasn't instantly obvious to me how to use this via their API. I ended up vibe coding a Python CLI tool for it so I could try it out.

I dumped the plain text diff version of the commit to the OpenAI Python library titled feat(api): dev day 2025 launches into ChatGPT GPT-5 Thinking and worked with it to figure out how to use the new image model and build a script for it. Here's the transcript and the openai_image.py script it wrote.

I had it add inline script dependencies, so you can run it with uv like this:

export OPENAI_API_KEY="$(llm keys get openai)"

uv run https://tools.simonwillison.net/python/openai_image.py "A pelican riding a bicycle"

It picked this illustration style without me specifying it:

(This is a very different test from my normal "Generate an SVG of a pelican riding a bicycle" since it's using a dedicated image generator, not having a text-based model try to generate SVG code.)

My tool accepts a prompt, and optionally a filename (if you don't provide one it saves to a filename like /tmp/image-621b29.png).

It also accepts options for model, dimensions and output quality. The --help output lists those, and you can see that here.
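For a rough idea of what a script like this has to do, here is a minimal sketch of calling the Images API through the OpenAI Python library. It is not the openai_image.py script itself: the `save_image` helper and its defaults are hypothetical, and it assumes the response carries base64-encoded image data in `data[0].b64_json`, as the gpt-image-1 family does.

```python
import base64
import pathlib


def save_image(client, prompt, path, model="gpt-image-1-mini",
               size="1024x1024", quality="low", output_format="png"):
    """Generate one image and write it to path, returning the API response.

    save_image is a hypothetical helper, not part of the OpenAI library.
    """
    response = client.images.generate(
        model=model,
        prompt=prompt,
        size=size,
        quality=quality,
        output_format=output_format,
    )
    # Assumption: the image bytes come back base64-encoded in b64_json.
    image_bytes = base64.b64decode(response.data[0].b64_json)
    pathlib.Path(path).write_bytes(image_bytes)
    return response


# Example usage (needs the openai package and OPENAI_API_KEY set):
#   from openai import OpenAI
#   save_image(OpenAI(), "A pelican riding a bicycle", "/tmp/pelican.png")
```

Passing the client in as an argument keeps the helper easy to exercise with a stub, without spending any tokens.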

OpenAI's pricing is a little confusing. The model page claims low quality images should cost around half a cent and medium quality around a cent and a half. It also lists an image token price of $8/million tokens. It turns out there's a default "high" quality setting - most of the images I've generated have reported between 4,000 and 6,000 output tokens, which costs between 3.2 and 4.8 cents.

One last demo, this time using --quality low:

uv run https://tools.simonwillison.net/python/openai_image.py \
  'racoon eating cheese wearing a top hat, realistic photo' \
  /tmp/racoon-hat-photo.jpg \
  --size 1024x1024 \
  --output-format jpeg \
  --quality low

This saved the following:

And reported this to standard error:

{
  "background": "opaque",
  "created": 1759790912,
  "generation_time_in_s": 20.87331541599997,
  "output_format": "jpeg",
  "quality": "low",
  "size": "1024x1024",
  "usage": {
    "input_tokens": 17,
    "input_tokens_details": {
      "image_tokens": 0,
      "text_tokens": 17
    },
    "output_tokens": 272,
    "total_tokens": 289
  }
}

This took 21s, but I'm on an unreliable conference WiFi connection so I don't trust that measurement very much.

272 output tokens = 0.2 cents so this is much closer to the expected pricing from the model page.
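The arithmetic behind these figures is easy to check in a few lines of Python. This sketch uses the $8/million image token price quoted above and ignores the handful of text input tokens; the function name is just for illustration.

```python
IMAGE_TOKEN_PRICE_PER_MILLION = 8.00  # dollars, the image token price quoted above


def image_cost_cents(output_tokens, price_per_million=IMAGE_TOKEN_PRICE_PER_MILLION):
    """Return the cost in cents of the given number of image output tokens."""
    dollars = output_tokens * price_per_million / 1_000_000
    return dollars * 100


# The low quality run above, then the range seen at the default quality.
for tokens in (272, 4_000, 6_000):
    print(f"{tokens:>5} tokens: {image_cost_cents(tokens):.2f} cents")
```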

GPT-5 pro. Here's OpenAI's model documentation for their GPT-5 pro model, released to their API today at their DevDay event.

It has similar base characteristics to GPT-5: both share a September 30, 2024 knowledge cutoff and a 400,000 token context limit.

GPT-5 pro has a maximum of 272,000 output tokens, an increase from 128,000 for GPT-5.

As our most advanced reasoning model, GPT-5 pro defaults to (and only supports) reasoning.effort: high

It's only available via OpenAI's Responses API. My LLM tool doesn't support that in core yet, but the llm-openai-plugin plugin does. I released llm-openai-plugin 0.7 adding support for the new model, then ran this:

llm install -U llm-openai-plugin

llm -m openai/gpt-5-pro "Generate an SVG of a pelican riding a bicycle"

It's very, very slow. The model took 6 minutes 8 seconds to respond and charged me for 16 input and 9,205 output tokens. At $15/million input and $120/million output this pelican cost me $1.10!
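That figure can be reproduced from the token counts and the two prices. A minimal sketch, with a hypothetical function name and the GPT-5 pro prices above as defaults:

```python
def response_cost_dollars(input_tokens, output_tokens,
                          input_price_per_million=15.0,
                          output_price_per_million=120.0):
    """Cost in dollars, defaulting to the GPT-5 pro prices quoted above."""
    input_cost = input_tokens * input_price_per_million / 1_000_000
    output_cost = output_tokens * output_price_per_million / 1_000_000
    return input_cost + output_cost


cost = response_cost_dollars(input_tokens=16, output_tokens=9_205)
print(f"${cost:.2f}")
```

Almost all of the cost comes from the output tokens: the 16 input tokens add a small fraction of a cent.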

Here's the full transcript. It looks visually pretty similar to the much, much cheaper result I got from GPT-5.

OpenAI DevDay 2025 live blog

I’m at OpenAI DevDay in Fort Mason, San Francisco today. As I did last year, I’m going to be live blogging the announcements from the keynote. Unlike last year, this year there’s a livestream.

[... 57 words]

Embracing the parallel coding agent lifestyle

For a while now I’ve been hearing from engineers who run multiple coding agents at once—firing up several Claude Code or Codex CLI instances at the same time, sometimes in the same repo, sometimes against multiple checkouts or git worktrees.

[... 1,275 words]

Let the LLM Write the Prompts: An Intro to DSPy in Compound AI Pipelines. I've had trouble getting my head around DSPy in the past. This half hour talk by Drew Breunig at the recent Databricks Data + AI Summit is the clearest explanation I've seen yet of the kinds of problems it can help solve.

Here's Drew's written version of the talk.

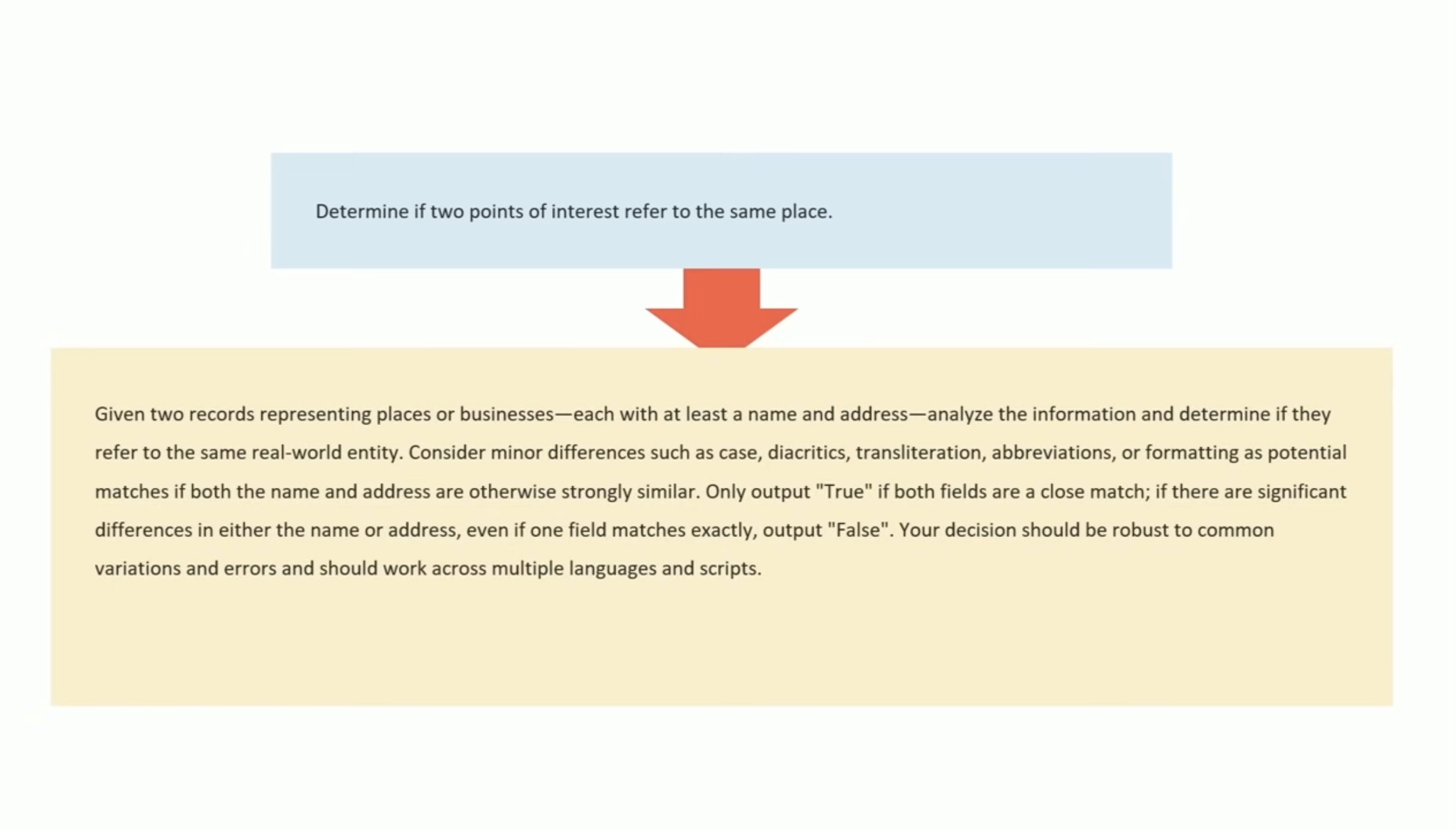

Drew works on Overture Maps, which combines Point Of Interest data from numerous providers to create a single unified POI database. This is an example of conflation, a notoriously difficult task in GIS where multiple datasets are deduped and merged together.

Drew uses an inexpensive local model, Qwen3-0.6B, to compare 70 million addresses and identify matches, for example between Place(address="3359 FOOTHILL BLVD", name="RESTAURANT LOS ARCOS") and Place(address="3359 FOOTHILL BLVD", name="Los Arcos Taqueria").

DSPy's role is to optimize the prompt used for that smaller model. Drew used GPT-4.1 and the dspy.MIPROv2 optimizer, producing a 700 token prompt that increased the score from 60.7% to 82%.

Why bother? Drew points out that having a prompt optimization pipeline makes it trivial to evaluate and switch to other models if they can score higher with a custom optimized prompt - without needing to execute that trial-and-error optimization by hand.
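To make the "evaluate and switch" idea concrete, here is a small evaluation harness in plain Python. It does not use DSPy and is not Drew's code: the `Place` dataclass mirrors the fields shown above, while `naive_matcher`, `accuracy` and the second labeled pair are hypothetical stand-ins. The point is that any matcher, whether a hand-written rule or a prompted model, can be scored against the same labeled pairs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Place:
    address: str
    name: str


# (place_a, place_b, is_same_place). The first pair is the example above,
# treated here as a match; the second is a made-up non-match.
LABELED_PAIRS: List[Tuple[Place, Place, bool]] = [
    (Place("3359 FOOTHILL BLVD", "RESTAURANT LOS ARCOS"),
     Place("3359 FOOTHILL BLVD", "Los Arcos Taqueria"), True),
    (Place("3359 FOOTHILL BLVD", "RESTAURANT LOS ARCOS"),
     Place("12 EXAMPLE ST", "Hypothetical Hardware"), False),
]

Matcher = Callable[[Place, Place], bool]


def naive_matcher(a: Place, b: Place) -> bool:
    """Hypothetical baseline: same address plus at least one shared name word."""
    words_a = set(a.name.lower().split())
    words_b = set(b.name.lower().split())
    return a.address == b.address and bool(words_a & words_b)


def accuracy(matcher: Matcher, pairs=LABELED_PAIRS) -> float:
    """Fraction of labeled pairs the matcher classifies correctly."""
    correct = sum(matcher(a, b) == label for a, b, label in pairs)
    return correct / len(pairs)


print(f"naive_matcher accuracy: {accuracy(naive_matcher):.1%}")
```

Swapping in a different model then means passing a different `Matcher` to `accuracy` and comparing the scores.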