Posts tagged projects, plugins

Filters: projects × plugins × Sorted by date

llm-mistral 0.14. I added tool-support to my plugin for accessing the Mistral API from LLM today, plus support for Mistral's new Codestral Embed embedding model.

An interesting challenge here is that I'm not using an official client library for llm-mistral - I rolled my own client on top of their streaming HTTP API using Florimond Manca's httpx-sse library. It's a very pleasant way to interact with streaming APIs - here's my code that does most of the work.

The problem I faced is that Mistral's API documentation for function calling has examples in Python and TypeScript but doesn't include curl or direct documentation of their HTTP endpoints!

I needed documentation at the HTTP level. Could I maybe extract that directly from Mistral's official Python library?

It turns out I could. I started by cloning the repo:

git clone https://github.com/mistralai/client-python

cd client-python/src/mistralai

files-to-prompt . | ttokMy ttok tool gave me a token count of 212,410 (counted using OpenAI's tokenizer, but that's normally a close enough estimate) - Mistral's models tap out at 128,000 so I switched to Gemini 2.5 Flash which can easily handle that many.

I ran this:

files-to-prompt -c . > /tmp/mistral.txt

llm -f /tmp/mistral.txt \

-m gemini-2.5-flash-preview-05-20 \

-s 'Generate comprehensive HTTP API documentation showing

how function calling works, include example curl commands for each step'The results were pretty spectacular! Gemini 2.5 Flash produced a detailed description of the exact set of HTTP APIs I needed to interact with, and the JSON formats I should pass to them.

There are a bunch of steps needed to get tools working in a new model, as described in the LLM plugin authors documentation. I started working through them by hand... and then got lazy and decided to see if I could get a model to do the work for me.

This time I tried the new Claude Opus 4. I fed it three files: my existing, incomplete llm_mistral.py, a full copy of llm_gemini.py with its working tools implementation and a copy of the API docs Gemini had written for me earlier. I prompted:

I need to update this Mistral code to add tool support. I've included examples of that code for Gemini, and a detailed README explaining the Mistral format.

Claude churned away and wrote me code that was most of what I needed. I tested it in a bunch of different scenarios, pasted problems back into Claude to see what would happen, and eventually took over and finished the rest of the code myself. Here's the full transcript.

I'm a little sad I didn't use Mistral to write the code to support Mistral, but I'm pleased to add yet another model family to the list that's supported for tool usage in LLM.

llm-llama-server 0.2. Here's a second option for using LLM's new tool support against local models (the first was via llm-ollama).

It turns out the llama.cpp ecosystem has pretty robust OpenAI-compatible tool support already, so my llm-llama-server plugin only needed a quick upgrade to get those working there.

Unfortunately it looks like streaming support doesn't work with tools in llama-server at the moment, so I added a new model ID called llama-server-tools which disables streaming and enables tools.

Here's how to try it out. First, ensure you have llama-server - the easiest way to get that on macOS is via Homebrew:

brew install llama.cpp

Start the server running like this. This command will download and cache the 3.2GB unsloth/gemma-3-4b-it-GGUF:Q4_K_XL if you don't yet have it:

llama-server --jinja -hf unsloth/gemma-3-4b-it-GGUF:Q4_K_XL

Then in another window:

llm install llm-llama-server

llm -m llama-server-tools -T llm_time 'what time is it?' --td

And since you don't even need an API key for this, even if you've never used LLM before you can try it out with this uvx one-liner:

uvx --with llm-llama-server llm -m llama-server-tools -T llm_time 'what time is it?' --td

For more notes on using llama.cpp with LLM see Trying out llama.cpp’s new vision support from a couple of weeks ago.

llm-pdf-to-images. Inspired by my previous llm-video-frames plugin, I thought it would be neat to have a plugin for LLM that can take a PDF and turn that into an image-per-page so you can feed PDFs into models that support image inputs but don't yet support PDFs.

This should now do exactly that:

llm install llm-pdf-to-images

llm -f pdf-to-images:path/to/document.pdf 'Summarize this document'Under the hood it's using the PyMuPDF library. The key code to convert a PDF into images looks like this:

import fitz doc = fitz.open("input.pdf") for page in doc: pix = page.get_pixmap(matrix=fitz.Matrix(300/72, 300/72)) jpeg_bytes = pix.tobytes(output="jpg", jpg_quality=30)

Once I'd figured out that code I got o4-mini to write most of the rest of the plugin, using llm-fragments-github to load in the example code from the video plugin:

llm -f github:simonw/llm-video-frames ' import fitz doc = fitz.open("input.pdf") for page in doc: pix = page.get_pixmap(matrix=fitz.Matrix(300/72, 300/72)) jpeg_bytes = pix.tobytes(output="jpg", jpg_quality=30) ' -s 'output llm_pdf_to_images.py which adds a pdf-to-images: fragment loader that converts a PDF to frames using fitz like in the example' \ -m o4-mini

Here's the transcript - more details in this issue.

I had some weird results testing this with GPT 4.1 mini. I created a test PDF with two pages - one white, one black - and ran a test prompt like this:

llm -f 'pdf-to-images:blank-pages.pdf' \ 'describe these images'

The first image features a stylized red maple leaf with triangular facets, giving it a geometric appearance. The maple leaf is a well-known symbol associated with Canada.

The second image is a simple black silhouette of a cat sitting and facing to the left. The cat's tail curls around its body. The design is minimalistic and iconic.

I got even wilder hallucinations for other prompts, like "summarize this document" or "describe all figures". I have a collection of those in this Gist.

Thankfully this behavior is limited to GPT-4.1 mini. I upgraded to full GPT-4.1 and got much more sensible results:

llm -f 'pdf-to-images:blank-pages.pdf' \ 'describe these images' -m gpt-4.1

Certainly! Here are the descriptions of the two images you provided:

First image: This image is completely white. It appears blank, with no discernible objects, text, or features.

Second image: This image is entirely black. Like the first, it is blank and contains no visible objects, text, or distinct elements.

If you have questions or need a specific kind of analysis or modification, please let me know!

I had some notes in a GitHub issue thread in a private repository that I wanted to export as Markdown. I realized that I could get them using a combination of several recent projects.

Here's what I ran:

export GITHUB_TOKEN="$(llm keys get github)"

llm -f issue:https://github.com/simonw/todos/issues/170 \

-m echo --no-log | jq .prompt -r > notes.md

I have a GitHub personal access token stored in my LLM keys, for use with Anthony Shaw's llm-github-models plugin.

My own llm-fragments-github plugin expects an optional GITHUB_TOKEN environment variable, so I set that first - here's an issue to have it use the github key instead.

With that set, the issue: fragment loader can take a URL to a private GitHub issue thread and load it via the API using the token, then concatenate the comments together as Markdown. Here's the code for that.

Fragments are meant to be used as input to LLMs. I built a llm-echo plugin recently which adds a fake LLM called "echo" which simply echos its input back out again.

Adding --no-log prevents that junk data from being stored in my LLM log database.

The output is JSON with a "prompt" key for the original prompt. I use jq .prompt to extract that out, then -r to get it as raw text (not a "JSON string").

... and I write the result to notes.md.

Feed a video to a vision LLM as a sequence of JPEG frames on the CLI (also LLM 0.25)

The new llm-video-frames plugin can turn a video file into a sequence of JPEG frames and feed them directly into a long context vision LLM such as GPT-4.1, even when that LLM doesn’t directly support video input. It depends on a plugin feature I added to LLM 0.25, which I released last night.

[... 1,600 words]llm-hacker-news. I built this new plugin to exercise the new register_fragment_loaders() plugin hook I added to LLM 0.24. It's the plugin equivalent of the Bash script I've been using to summarize Hacker News conversations for the past 18 months.

You can use it like this:

llm install llm-hacker-news

llm -f hn:43615912 'summary with illustrative direct quotes'

You can see the output in this issue.

The plugin registers a hn: prefix - combine that with the ID of a Hacker News conversation to pull that conversation into the context.

It uses the Algolia Hacker News API which returns JSON like this. Rather than feed the JSON directly to the LLM it instead converts it to a hopefully more LLM-friendly format that looks like this example from the plugin's test:

[1] BeakMaster: Fish Spotting Techniques

[1.1] CoastalFlyer: The dive technique works best when hunting in shallow waters.

[1.1.1] PouchBill: Agreed. Have you tried the hover method near the pier?

[1.1.2] WingSpan22: My bill gets too wet with that approach.

[1.1.2.1] CoastalFlyer: Try tilting at a 40° angle like our Australian cousins.

[1.2] BrownFeathers: Anyone spotted those "silver fish" near the rocks?

[1.2.1] GulfGlider: Yes! They're best caught at dawn.

Just remember: swoop > grab > lift

That format was suggested by Claude, which then wrote most of the plugin implementation for me. Here's that Claude transcript.

Long context support in LLM 0.24 using fragments and template plugins

LLM 0.24 is now available with new features to help take advantage of the increasingly long input context supported by modern LLMs.

[... 1,896 words]llm-openrouter 0.4. I found out this morning that OpenRouter include support for a number of (rate-limited) free API models.

I occasionally run workshops on top of LLMs (like this one) and being able to provide students with a quick way to obtain an API key against models where they don't have to setup billing is really valuable to me!

This inspired me to upgrade my existing llm-openrouter plugin, and in doing so I closed out a bunch of open feature requests.

Consider this post the annotated release notes:

- LLM schema support for OpenRouter models that support structured output. #23

I'm trying to get support for LLM's new schema feature into as many plugins as possible.

OpenRouter's OpenAI-compatible API includes support for the response_format structured content option, but with an important caveat: it only works for some models, and if you try to use it on others it is silently ignored.

I filed an issue with OpenRouter requesting they include schema support in their machine-readable model index. For the moment LLM will let you specify schemas for unsupported models and will ignore them entirely, which isn't ideal.

llm openrouter keycommand displays information about your current API key. #24

Useful for debugging and checking the details of your key's rate limit.

llm -m ... -o online 1enables web search grounding against any model, powered by Exa. #25

OpenRouter apparently make this feature available to every one of their supported models! They're using new-to-me Exa to power this feature, an AI-focused search engine startup who appear to have built their own index with their own crawlers (according to their FAQ). This feature is currently priced by OpenRouter at $4 per 1000 results, and since 5 results are returned for every prompt that's 2 cents per prompt.

llm openrouter modelscommand for listing details of the OpenRouter models, including a--jsonoption to get JSON and a--freeoption to filter for just the free models. #26

This offers a neat way to list the available models. There are examples of the output in the comments on the issue.

- New option to specify custom provider routing:

-o provider '{JSON here}'. #17

Part of OpenRouter's USP is that it can route prompts to different providers depending on factors like latency, cost or as a fallback if your first choice is unavailable - great for if you are using open weight models like Llama which are hosted by competing companies.

The options they provide for routing are very thorough - I had initially hoped to provide a set of CLI options that covered all of these bases, but I decided instead to reuse their JSON format and forward those options directly on to the model.

llm-mistral 0.11. I added schema support to this plugin which adds support for the Mistral API to LLM. Release notes:

Schemas now work with OpenAI, Anthropic, Gemini and Mistral hosted models, plus self-hosted models via Ollama and llm-ollama.

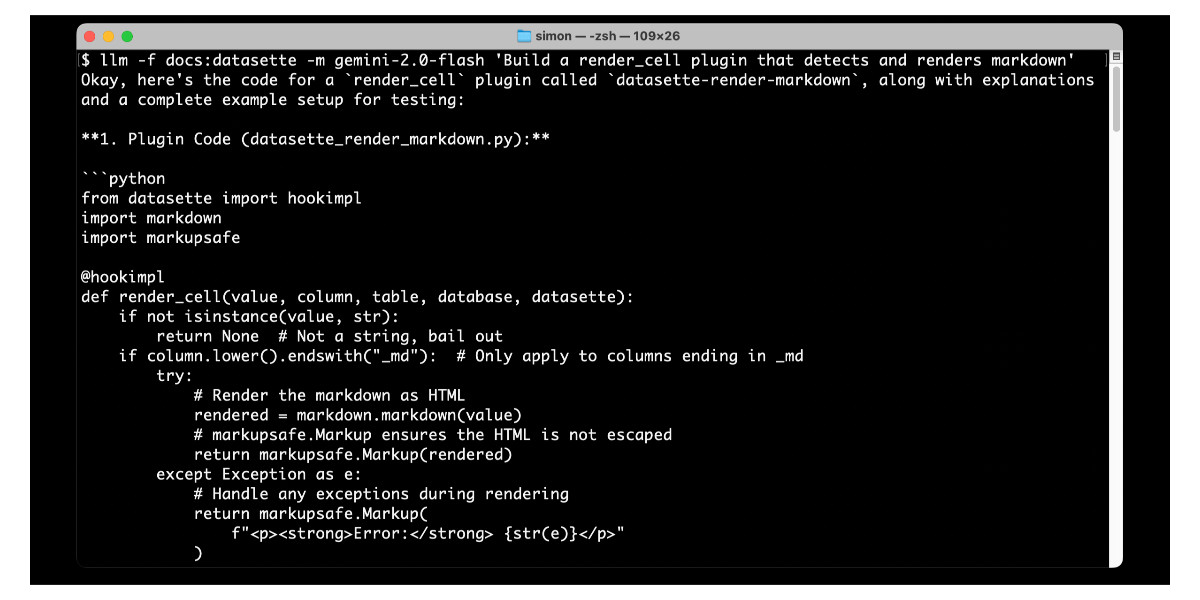

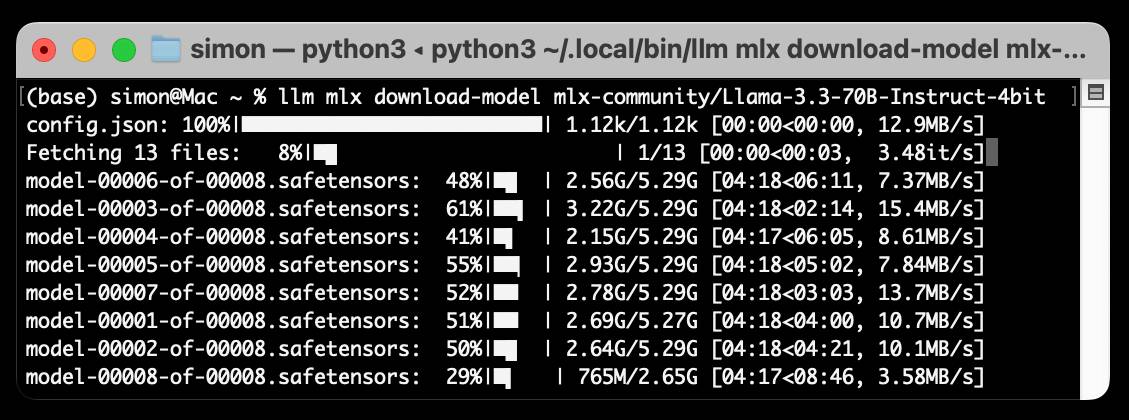

Run LLMs on macOS using llm-mlx and Apple’s MLX framework

llm-mlx is a brand new plugin for my LLM Python Library and CLI utility which builds on top of Apple’s excellent MLX array framework library and mlx-lm package. If you’re a terminal user or Python developer with a Mac this may be the new easiest way to start exploring local Large Language Models.

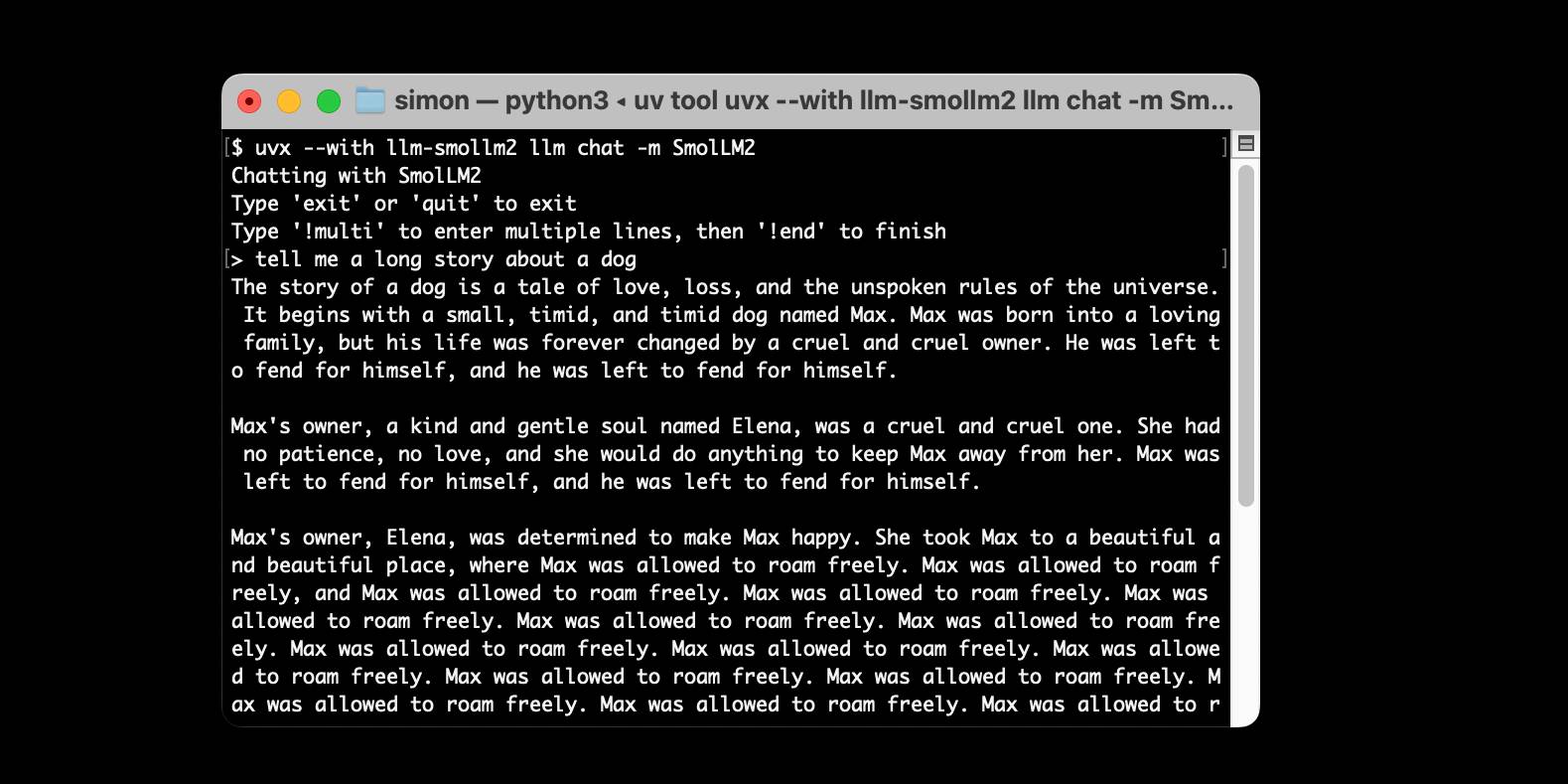

[... 1,524 words]Using pip to install a Large Language Model that’s under 100MB

I just released llm-smollm2, a new plugin for LLM that bundles a quantized copy of the SmolLM2-135M-Instruct LLM inside of the Python package.

[... 1,553 words]datasette-enrichments-llm. Today's new alpha release is datasette-enrichments-llm, a plugin for Datasette 1.0a+ that provides an enrichment that lets you run prompts against data from one or more column and store the result in another column.

So far it's a light re-implementation of the existing datasette-enrichments-gpt plugin, now using the new llm.get_async_models() method to allow users to select any async-enabled model that has been registered by a plugin - so currently any of the models from OpenAI, Anthropic, Gemini or Mistral via their respective plugins.

Still plenty to do on this one. Next step is to integrate it with datasette-llm-usage and use it to drive a design-complete stable version of that.

datasette-queries. I released the first alpha of a new plugin to replace the crusty old datasette-saved-queries. This one adds a new UI element to the top of the query results page with an expandable form for saving the query as a new canned query:

It's my first plugin to depend on LLM and datasette-llm-usage - it uses GPT-4o mini to power an optional "Suggest title and description" button, labeled with the becoming-standard ✨ sparkles emoji to indicate an LLM-powered feature.

I intend to expand this to work across multiple models as I continue to iterate on llm-datasette-usage to better support those kinds of patterns.

For the moment though each suggested title and description call costs about 250 input tokens and 50 output tokens, which against GPT-4o mini adds up to 0.0067 cents.

datasette-llm-usage. I released the first alpha of a Datasette plugin to help track LLM usage by other plugins, with the goal of supporting token allowances - both for things like free public apps that stop working after a daily allowance, plus free previews of AI features for paid-account-based projects such as Datasette Cloud.

It's using the usage features I added in LLM 0.19.

The alpha doesn't do much yet - it will start getting interesting once I upgrade other plugins to depend on it.

Design notes so far in issue #1.

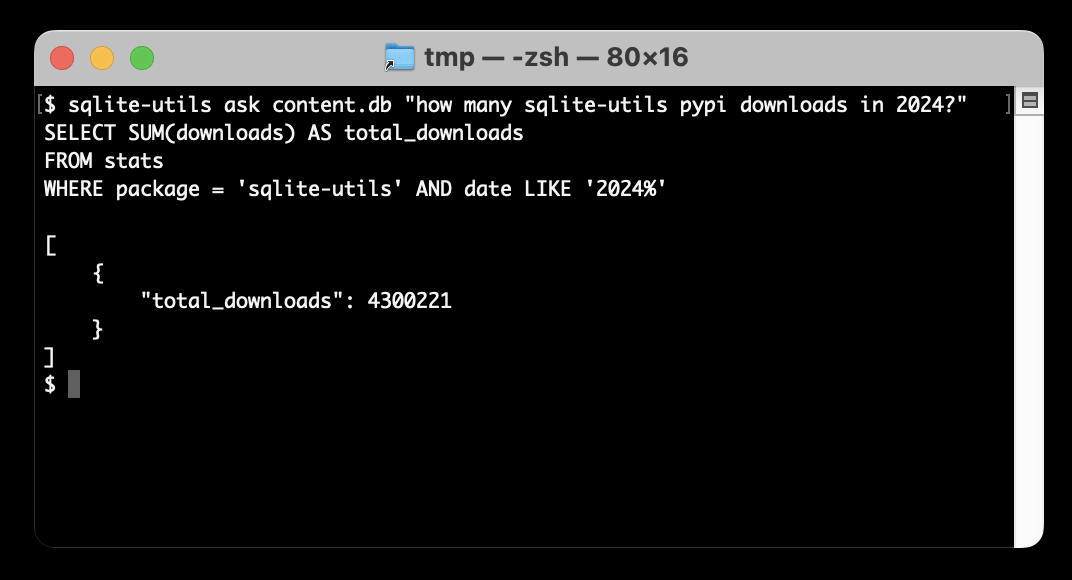

Ask questions of SQLite databases and CSV/JSON files in your terminal

I built a new plugin for my sqlite-utils CLI tool that lets you ask human-language questions directly of SQLite databases and CSV/JSON files on your computer.

[... 723 words]llm-gemini 0.4.

New release of my llm-gemini plugin, adding support for asynchronous models (see LLM 0.18), plus the new gemini-exp-1114 model (currently at the top of the Chatbot Arena) and a -o json_object 1 option to force JSON output.

I also released llm-claude-3 0.9 which adds asynchronous support for the Claude family of models.

llm-whisper-api. I wanted to run an experiment through the OpenAI Whisper API this morning so I knocked up a very quick plugin for LLM that provides the following interface:

llm install llm-whisper-api

llm whisper-api myfile.mp3 > transcript.txt

It uses the API key that you previously configured using the llm keys set openai command. If you haven't configured one you can pass it as --key XXX instead.

It's a tiny plugin: the source code is here.

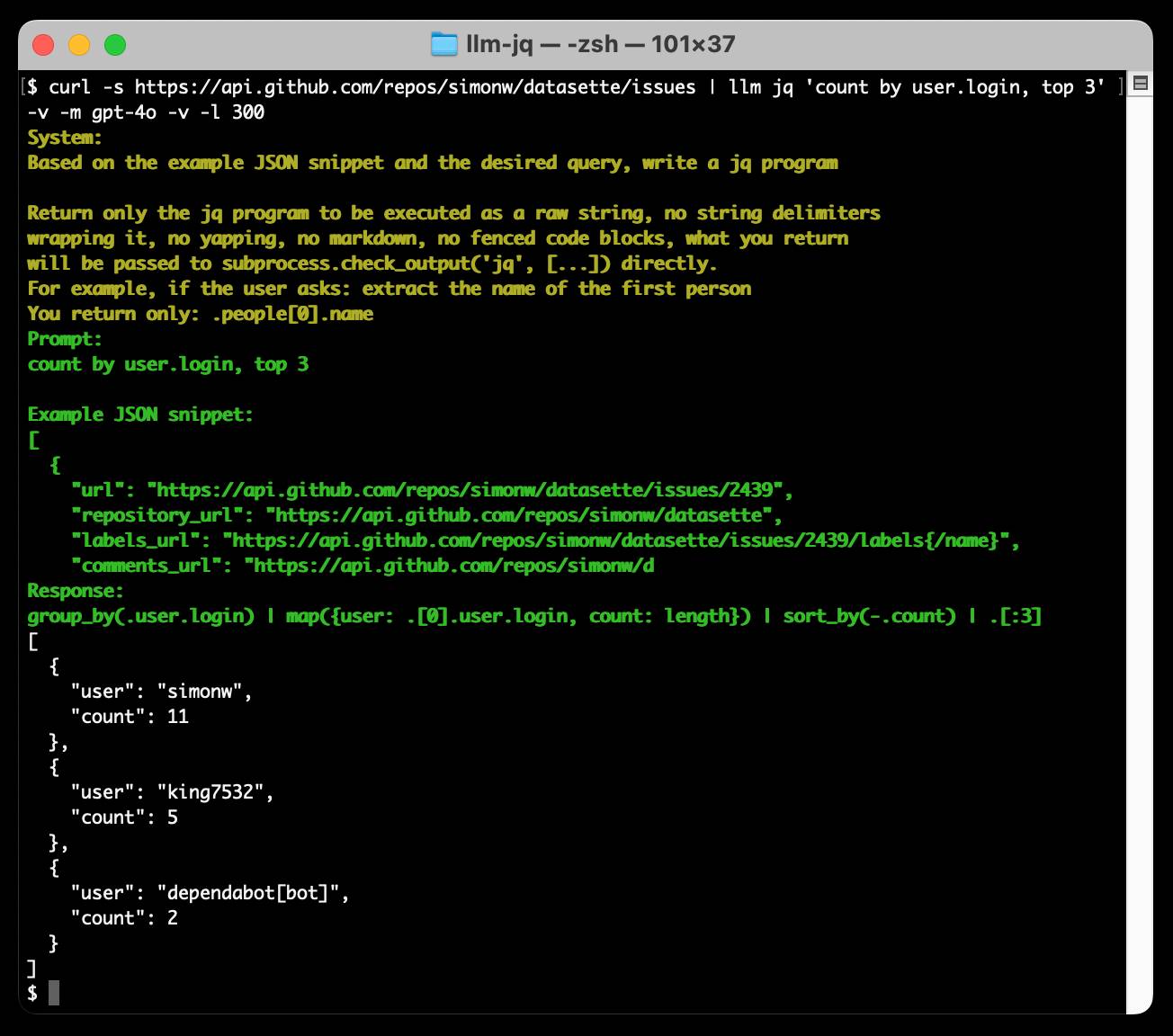

Run a prompt to generate and execute jq programs using llm-jq

llm-jq is a brand new plugin for LLM which lets you pipe JSON directly into the llm jq command along with a human-language description of how you’d like to manipulate that JSON and have a jq program generated and executed for you on the fly.

django-plugin-datasette. I did some more work on my DJP plugin mechanism for Django at the DjangoCon US sprints today. I added a new plugin hook, asgi_wrapper(), released in DJP 0.3 and inspired by the similar hook in Datasette.

The hook only works for Django apps that are served using ASGI. It allows plugins to add their own wrapping ASGI middleware around the Django app itself, which means they can do things like attach entirely separate ASGI-compatible applications outside of the regular Django request/response cycle.

Datasette is one of those ASGI-compatible applications!

django-plugin-datasette uses that new hook to configure a new URL, /-/datasette/, which serves a full Datasette instance that scans through Django’s settings.DATABASES dictionary and serves an explore interface on top of any SQLite databases it finds there.

It doesn’t support authentication yet, so this will expose your entire database contents - probably best used as a local debugging tool only.

I did borrow some code from the datasette-mask-columns plugin to ensure that the password column in the auth_user column is reliably redacted. That column contains a heavily salted hashed password so exposing it isn’t necessarily a disaster, but I like to default to keeping hashes safe.

DJP: A plugin system for Django

DJP is a new plugin mechanism for Django, built on top of Pluggy. I announced the first version of DJP during my talk yesterday at DjangoCon US 2024, How to design and implement extensible software with plugins. I’ll post a full write-up of that talk once the video becomes available—this post describes DJP and how to use what I’ve built so far.

[... 1,664 words]datasette-checkbox. I built this fun little Datasette plugin today, inspired by a conversation I had in Datasette Office Hours.

If a user has the update-row permission and the table they are viewing has any integer columns with names that start with is_ or should_ or has_, the plugin adds interactive checkboxes to that table which can be toggled to update the underlying rows.

This makes it easy to quickly spin up an interface that allows users to review and update boolean flags in a table.

I have ambitions for a much more advanced version of this, where users can do things like add or remove tags from rows directly in that table interface - but for the moment this is a neat starting point, and it only took an hour to build (thanks to help from Claude to build an initial prototype, chat transcript here).

datasette-python.

I just released a small new plugin for Datasette to assist with debugging. It adds a python subcommand which runs a Python process in the same virtual environment as Datasette itself.

I built it initially to help debug some issues in Datasette installed via Homebrew. The Homebrew installation has its own virtual environment, and sometimes it can be useful to run commands like pip list in the same environment as Datasette itself.

Now you can do this:

brew install datasette

datasette install datasette-python

datasette python -m pip list

I built a similar plugin for LLM last year, called llm-python - it's proved useful enough that I duplicated the design for Datasette.

llm-gpt4all. New release of my LLM plugin which builds on Nomic's excellent gpt4all Python library. I've upgraded to their latest version which adds support for Llama 3 8B Instruct, so after a 4.4GB model download this works:

llm -m Meta-Llama-3-8B-Instruct "say hi in Spanish"

datasette-import. A new plugin for importing data into Datasette. This is a replacement for datasette-paste, duplicating and extending its functionality. datasette-paste had grown beyond just dealing with pasted CSV/TSV/JSON data—it handles file uploads as well now—which inspired the new name.

llm-command-r. Cohere released Command R Plus today—an open weights (non commercial/research only) 104 billion parameter LLM, a big step up from their previous 35 billion Command R model.

Both models are fine-tuned for both tool use and RAG. The commercial API has features to expose this functionality, including a web-search connector which lets the model run web searches as part of answering the prompt and return documents and citations as part of the JSON response.

I released a new plugin for my LLM command line tool this morning adding support for the Command R models.

In addition to the two models it also adds a custom command for running prompts with web search enabled and listing the referenced documents.

llm-nomic-api-embed. My new plugin for LLM which adds API access to the Nomic series of embedding models. Nomic models can be run locally too, which makes them a great long-term commitment as there’s no risk of the models being retired in a way that damages the value of your previously calculated embedding vectors.

datasette-studio. I've been thinking for a while that it might be interesting to have a version of Datasette that comes bundled with a set of useful plugins, aimed at expanding Datasette's default functionality to cover things like importing data and editing schemas.

This morning I built the very first experimental preview of what that could look like. Install it using pipx:

pipx install datasette-studio

I recommend pipx because it will ensure datasette-studio gets its own isolated environment, independent of any other Datasette installations you might have.

Now running datasette-studio instead of datasette will get you the version with the bundled plugins.

The implementation of this is fun - it's a single pyproject.toml file defining the dependencies and setting up the datasette-studio CLI hook, which is enough to provide the full set of functionality.

Is this a good idea? I don't know yet, but it's certainly an interesting initial experiment.

Datasette 1.0a8: JavaScript plugins, new plugin hooks and plugin configuration in datasette.yaml

I just released Datasette 1.0a8. These are the annotated release notes.

[... 1,709 words]llm-sentence-transformers 0.2. I added a new --trust-remote-code option when registering an embedding model, which means LLM can now run embeddings through the new Nomic AI nomic-embed-text-v1 model.

llm-embed-onnx. I wrote a new plugin for LLM that acts as a thin wrapper around onnx_embedding_models by Benjamin Anderson, providing access to seven embedding models that can run on the ONNX model framework.

The actual plugin is around 50 lines of code, which makes for a nice example of how thin a plugin wrapper can be that adds new models to my LLM tool.