September 2024

82 posts: 10 entries, 49 links, 23 quotes

Sept. 15, 2024

UV — I am (somewhat) sold

(via)

Oliver Andrich's detailed notes on adopting uv. Oliver has some pretty specific requirements:

I need to have various Python versions installed locally to test my work and my personal projects. Ranging from Python 3.8 to 3.13. [...] I also require decent dependency management in my projects that goes beyond manually editing a pyproject.toml file. Likewise, I am way too accustomed to poetry add .... And I run a number of Python-based tools --- djhtml, poetry, ipython, llm, mkdocs, pre-commit, tox, ...

He's braver than I am!

I started by removing all Python installations, pyenv, pipx and Homebrew from my machine. Rendering me unable to do my work.

Here's a neat trick: first install a specific Python version with uv like this:

uv python install 3.11

Then create an alias to run it like this:

alias python3.11 'uv run --python=3.11 python3'

And install standalone tools with optional extra dependencies like this (a replacement for pipx and pipx inject):

uv tool install --python=3.12 --with mkdocs-material mkdocs

Oliver also links to Anže Pečar's handy guide on using UV with Django.

Sept. 16, 2024

o1 prompting is alien to me. Its thinking, gloriously effective at times, is also dreamlike and unamenable to advice.

Just say what you want and pray. Any notes on “how” will be followed with the diligence of a brilliant intern on ketamine.

Sept. 17, 2024

Do not fall into the trap of anthropomorphizing Larry Ellison. You need to think of Larry Ellison the way you think of a lawnmower. You don’t anthropomorphize your lawnmower, the lawnmower just mows the lawn - you stick your hand in there and it’ll chop it off, the end. You don’t think "oh, the lawnmower hates me" – lawnmower doesn’t give a shit about you, lawnmower can’t hate you. Don’t anthropomorphize the lawnmower. Don’t fall into that trap about Oracle.

Supercharging Developer Productivity with ChatGPT and Claude with Simon Willison (via) I'm the guest for the latest episode of the TWIML AI podcast - This Week in Machine Learning & AI, hosted by Sam Charrington.

We mainly talked about how I use LLM tooling for my own work - Claude, ChatGPT, Code Interpreter, Claude Artifacts, LLM and GitHub Copilot - plus a bit about my experiments with local models.

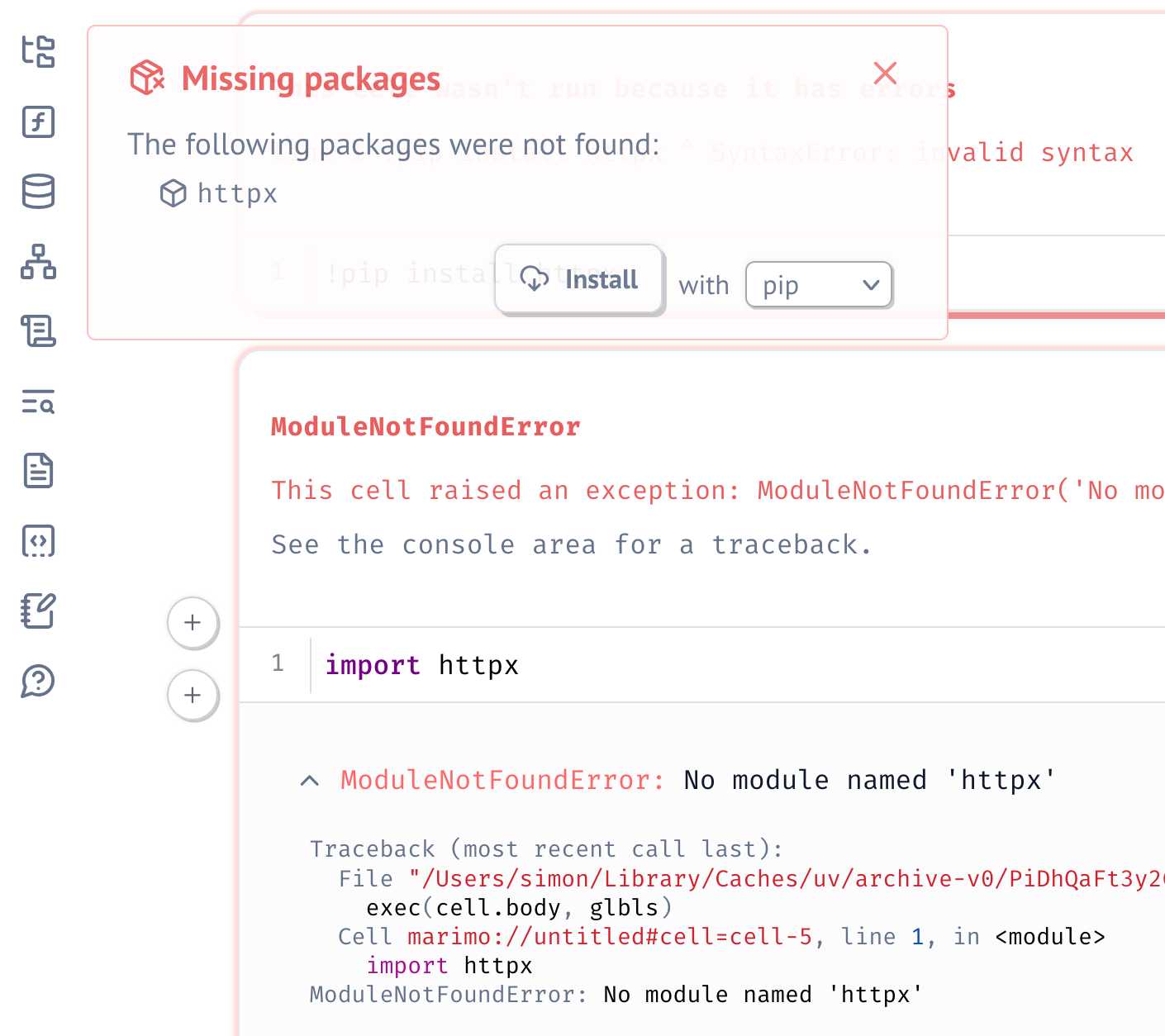

Serializing package requirements in marimo notebooks. The latest release of Marimo - a reactive alternative to Jupyter notebooks - has a very neat new feature enabled by its integration with uv:

One of marimo’s goals is to make notebooks reproducible, down to the packages used in them. To that end, it’s now possible to create marimo notebooks that have their package requirements serialized into them as a top-level comment.

This takes advantage of the PEP 723 inline metadata mechanism, where a code comment at the top of a Python file can list package dependencies (and their versions).
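To make that concrete, here's a rough sketch of what such a comment block looks like and how a tool could read it back out. The regular expression is adapted from the reference implementation in PEP 723; the example dependency list and the `extract_script_metadata` helper are made up for illustration and are not copied from marimo.

```python
import re
from typing import Optional

# Pattern adapted from the reference implementation in PEP 723.
PEP723_BLOCK = re.compile(
    r"(?m)^# /// (?P<type>[a-zA-Z0-9-]+)$\s(?P<content>(^#(| .*)$\s)+)^# ///$"
)

# Illustrative file contents; this dependency list is hypothetical.
EXAMPLE_NOTEBOOK = '''\
# /// script
# requires-python = ">=3.11"
# dependencies = [
#     "marimo",
#     "anywidget",
# ]
# ///

import marimo
'''


def extract_script_metadata(source: str) -> Optional[str]:
    """Return the TOML text of the first `script` block, or None if absent."""
    for match in PEP723_BLOCK.finditer(source):
        if match.group("type") != "script":
            continue
        lines = match.group("content").splitlines(keepends=True)
        # Drop the leading "# " (or a bare "#") from every comment line.
        return "".join(
            line[2:] if line.startswith("# ") else line[1:] for line in lines
        )
    return None


toml_text = extract_script_metadata(EXAMPLE_NOTEBOOK)
print(toml_text)
```

On Python 3.11 or later the returned text can be passed to `tomllib.loads()` to get the requirements as a dictionary.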

I tried this out by installing marimo using uv:

uv tool install --python=3.12 marimo

Then grabbing one of their example notebooks:

wget 'https://raw.githubusercontent.com/marimo-team/spotlights/main/001-anywidget/tldraw_colorpicker.py'

And running it in a fresh dependency sandbox like this:

marimo run --sandbox tldraw_colorpicker.py

Also neat is that when editing a notebook using marimo edit:

marimo edit --sandbox notebook.py

Just importing a missing package is enough for Marimo to prompt to add that to the dependencies - at which point it automatically adds that package to the comment at the top of the file.

Something that I confirmed that other conference organisers are also experiencing is last-minute ticket sales. This is something that happened with UX London this year. For most of the year, ticket sales were trickling along. Then in the last few weeks before the event we sold more tickets than we had sold in the six months previously. […]

When I was in Ireland I had a chat with a friend of mine who works at the Everyman Theatre in Cork. They’re experiencing something similar. So maybe it’s not related to the tech industry specifically.

In general, the claims about how long people are living mostly don’t stack up. I’ve tracked down 80% of the people aged over 110 in the world (the other 20% are from countries you can’t meaningfully analyse). Of those, almost none have a birth certificate. [...]

Regions where people most often reach 100-110 years old are the ones where there’s the most pressure to commit pension fraud, and they also have the worst records.

Oracle, it’s time to free JavaScript. (via) Oracle have held the trademark on JavaScript since their acquisition of Sun Microsystems in 2009. They’ve continued to renew that trademark over the years despite having no major products that use the mark.

Their December 2019 renewal included a screenshot of the Node.js homepage as a supporting specimen!

Now a group led by a team that includes Ryan Dahl and Brendan Eich is coordinating a legal challenge to have the USPTO treat the trademark as abandoned and “recognize it as a generic name for the world’s most popular programming language, which has multiple implementations across the industry.”

Sept. 18, 2024

Things I’ve learned serving on the board of the Python Software Foundation

Two years ago I was elected to the board of directors for the Python Software Foundation—the PSF. I recently returned from the annual PSF board retreat (this one was in Lisbon, Portugal) and this feels like a good opportunity to write up some of the things I’ve learned along the way.

[... 2,702 words]

The problem that you face is that it's relatively easy to take a model and make it look like it's aligned. You ask GPT-4, “how do I end all of humans?” And the model says, “I can't possibly help you with that”. But there are a million and one ways to take the exact same question - pick your favorite - and you can make the model still answer the question even though initially it would have refused. And the question this reminds me a lot of coming from adversarial machine learning. We have a very simple objective: Classify the image correctly according to the original label. And yet, despite the fact that it was essentially trivial to find all of the bugs in principle, the community had a very hard time coming up with actually effective defenses. We wrote like over 9,000 papers in ten years, and have made very very very limited progress on this one small problem. You all have a harder problem and maybe less time.

Sept. 19, 2024

The web’s clipboard, and how it stores data of different types.

Alex Harri's deep dive into the Web clipboard API, the more recent alternative to the old document.execCommand() mechanism for accessing the clipboard.

There's a lot to understand here! Some of these APIs have a history dating back to Internet Explorer 4 in 1997, and there have been plenty of changes over the years to account for improved understanding of the security risks of allowing untrusted code to interact with the system clipboard.

Today, the most reliable data formats for interacting with the clipboard are the "standard" formats of text/plain, text/html and image/png.

Figma does a particularly clever trick where they share custom Figma binary data structures by encoding them as base64 in data-metadata and data-buffer attributes on a <span> element, then write the result to the clipboard as HTML. This enables copy-and-paste between the Figma web and native apps via the system clipboard.
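Here's a simplified Python sketch of that idea. The data-metadata and data-buffer attribute names come from the article, but the payload bytes and helper names are invented, and this makes no attempt to match Figma's actual payload layout.

```python
import base64
from html import escape
from html.parser import HTMLParser
from typing import Optional, Tuple


def encode_payload(metadata: bytes, buffer: bytes) -> str:
    """Wrap two binary blobs in a <span> that can be written as text/html."""
    meta_b64 = base64.b64encode(metadata).decode("ascii")
    buffer_b64 = base64.b64encode(buffer).decode("ascii")
    return (
        f'<span data-metadata="{escape(meta_b64)}" '
        f'data-buffer="{escape(buffer_b64)}"></span>'
    )


class _SpanFinder(HTMLParser):
    """Collect the first <span> carrying both data attributes."""

    def __init__(self) -> None:
        super().__init__()
        self.payload: Optional[Tuple[bytes, bytes]] = None

    def handle_starttag(self, tag, attrs):
        if tag != "span" or self.payload is not None:
            return
        values = dict(attrs)
        if "data-metadata" in values and "data-buffer" in values:
            self.payload = (
                base64.b64decode(values["data-metadata"]),
                base64.b64decode(values["data-buffer"]),
            )


def decode_payload(html_text: str) -> Optional[Tuple[bytes, bytes]]:
    """Recover the binary blobs from pasted HTML, or None if not present."""
    finder = _SpanFinder()
    finder.feed(html_text)
    return finder.payload


# Hypothetical binary payloads standing in for an app's internal format.
html_text = encode_payload(b'{"version": 1}', bytes([0, 255, 16, 32]))
print(html_text)
print(decode_payload(html_text))
```

Because the carrier is ordinary HTML, any application that reads text/html from the clipboard can either ignore the empty span or decode it.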

Moshi (via) Moshi is "a speech-text foundation model and full-duplex spoken dialogue framework". It's effectively a text-to-text model - like an LLM but you input audio directly to it and it replies with its own audio.

It's fun to play around with, but it's not particularly useful in comparison to other pure text models: I tried to talk to it about California Brown Pelicans and it gave me some very basic hallucinated thoughts about California Condors instead.

It's very easy to run locally, at least on a Mac (and likely on other systems too). I used uv and got the 8 bit quantized version running as a local web server using this one-liner:

uv run --with moshi_mlx python -m moshi_mlx.local_web -q 8

That downloads ~8.17G of model to a folder in ~/.cache/huggingface/hub/ - or you can use -q 4 and get a 4.81G version instead (albeit even lower quality).

Sept. 20, 2024

Introducing Contextual Retrieval (via) Here's an interesting new embedding/RAG technique, described by Anthropic but it should work for any embedding model against any other LLM.

One of the big challenges in implementing semantic search against vector embeddings - often used as part of a RAG system - is creating "chunks" of documents that are most likely to semantically match queries from users.

Anthropic provide this solid example where semantic chunks might let you down:

Imagine you had a collection of financial information (say, U.S. SEC filings) embedded in your knowledge base, and you received the following question: "What was the revenue growth for ACME Corp in Q2 2023?"

A relevant chunk might contain the text: "The company's revenue grew by 3% over the previous quarter." However, this chunk on its own doesn't specify which company it's referring to or the relevant time period, making it difficult to retrieve the right information or use the information effectively.

Their proposed solution is to take each chunk at indexing time and expand it using an LLM - so the above sentence would become this instead:

This chunk is from an SEC filing on ACME corp's performance in Q2 2023; the previous quarter's revenue was $314 million. The company's revenue grew by 3% over the previous quarter.

This chunk was created by Claude 3 Haiku (their least expensive model) using the following prompt template:

<document>

{{WHOLE_DOCUMENT}}

</document>

Here is the chunk we want to situate within the whole document

<chunk>

{{CHUNK_CONTENT}}

</chunk>

Please give a short succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else.
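As a minimal sketch of how that template might be applied at indexing time: the code below fills it in for each chunk and prepends whatever the model returns. The `contextualize_chunks` and `fake_llm` names are hypothetical, and `fake_llm` is a stand-in that returns a canned string rather than calling any real API.

```python
from typing import Callable, List

PROMPT_TEMPLATE = """<document>
{whole_document}
</document>
Here is the chunk we want to situate within the whole document
<chunk>
{chunk_content}
</chunk>
Please give a short succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else."""


def build_prompt(whole_document: str, chunk: str) -> str:
    """Fill the template in for a single chunk."""
    return PROMPT_TEMPLATE.format(whole_document=whole_document, chunk_content=chunk)


def contextualize_chunks(
    whole_document: str,
    chunks: List[str],
    generate_context: Callable[[str], str],
) -> List[str]:
    """Prepend model-generated context to each chunk before it is embedded."""
    contextualized = []
    for chunk in chunks:
        context = generate_context(build_prompt(whole_document, chunk)).strip()
        contextualized.append(f"{context} {chunk}")
    return contextualized


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same canned context.
    return "This chunk is from an SEC filing on ACME corp's performance in Q2 2023."


document = "ACME Corp Q2 2023 filing. The company's revenue grew by 3% over the previous quarter."
chunks = ["The company's revenue grew by 3% over the previous quarter."]
result = contextualize_chunks(document, chunks, fake_llm)
print(result[0])
```

The contextualized strings, rather than the raw chunks, are what would then be embedded and indexed.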

Here's the really clever bit: running the above prompt for every chunk in a document could get really expensive thanks to the inclusion of the entire document in each prompt. Claude added context caching last month, which allows you to pay around 1/10th of the cost for tokens cached up to your specified breakpoint.

By Anthropic's calculations:

Assuming 800 token chunks, 8k token documents, 50 token context instructions, and 100 tokens of context per chunk, the one-time cost to generate contextualized chunks is $1.02 per million document tokens.
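That figure can be roughly reconstructed. The sketch below assumes Claude 3 Haiku prices of $0.25 per million input tokens, $1.25 per million output tokens, $0.30 per million tokens written to the cache and $0.03 per million tokens read from it. Those prices, and the accounting of one cache write plus one cache read per chunk, are assumptions that aren't stated in the quote, so treat this as a plausibility check rather than Anthropic's actual working.

```python
# Assumed Claude 3 Haiku prices in dollars per million tokens (not from the quote).
INPUT_PRICE = 0.25
OUTPUT_PRICE = 1.25
CACHE_WRITE_PRICE = 0.30
CACHE_READ_PRICE = 0.03

# Token counts taken from Anthropic's stated assumptions.
DOCUMENT_TOKENS = 8_000
CHUNK_TOKENS = 800
INSTRUCTION_TOKENS = 50
CONTEXT_TOKENS = 100


def cost_per_million_document_tokens() -> float:
    """Estimate the one-time contextualization cost in dollars."""
    chunks_per_document = DOCUMENT_TOKENS // CHUNK_TOKENS  # 10 chunks

    # Assumption: the document is written to the cache once,
    # then read from the cache once for every chunk.
    cache_write = DOCUMENT_TOKENS * CACHE_WRITE_PRICE
    cache_reads = chunks_per_document * DOCUMENT_TOKENS * CACHE_READ_PRICE

    # The chunk and the instructions are billed as ordinary input each time.
    uncached_input = chunks_per_document * (CHUNK_TOKENS + INSTRUCTION_TOKENS) * INPUT_PRICE
    output = chunks_per_document * CONTEXT_TOKENS * OUTPUT_PRICE

    dollars_per_document = (cache_write + cache_reads + uncached_input + output) / 1_000_000
    documents_per_million_tokens = 1_000_000 / DOCUMENT_TOKENS
    return dollars_per_document * documents_per_million_tokens


estimate = cost_per_million_document_tokens()
print(f"${estimate:.2f} per million document tokens")
```

Under these assumptions the estimate rounds to $1.02, which matches the quoted number.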

Anthropic provide a detailed notebook demonstrating an implementation of this pattern. Their eventual solution combines cosine similarity and BM25 indexing, uses embeddings from Voyage AI and adds a reranking step powered by Cohere.

The notebook also includes an evaluation set using JSONL - here's that evaluation data in Datasette Lite.

Notes on using LLMs for code

I was recently the guest on TWIML—the This Week in Machine Learning & AI podcast. Our episode is titled Supercharging Developer Productivity with ChatGPT and Claude with Simon Willison, and the focus of the conversation was the ways in which I use LLM tools in my day-to-day work as a software developer and product engineer.

[... 861 words]

YouTube Thumbnail Viewer.

I wanted to find the best quality thumbnail image for a YouTube video, so I could use it as a social media card. I know from past experience that GPT-4 has memorized the various URL patterns for img.youtube.com, so I asked it to guess the URL for my specific video.

This piqued my interest as to what the other patterns were, so I got it to spit those out too. Then, to save myself from needing to look those up again in the future, I asked it to build me a little HTML and JavaScript tool for turning a YouTube video URL into a set of visible thumbnails.

I iterated on the code a bit more after pasting it into Claude and ended up with this, now hosted in my tools collection.
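For reference, the URL patterns in question are easy to generate. This sketch uses the widely used img.youtube.com/vi/VIDEO_ID/NAME.jpg filenames; the function names and the example video ID are made up, and this is not the code from the tool itself. Not every size exists for every video, so a real tool should check which URLs actually load.

```python
from typing import Dict, Optional
from urllib.parse import parse_qs, urlparse

# Thumbnail filenames, roughly from smallest to largest.
THUMBNAIL_NAMES = ["default", "mqdefault", "hqdefault", "sddefault", "maxresdefault"]

YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com"}


def extract_video_id(url: str) -> Optional[str]:
    """Pull the video ID out of a few common YouTube URL shapes."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        return parsed.path.lstrip("/") or None
    if host in YOUTUBE_HOSTS:
        if parsed.path == "/watch":
            values = parse_qs(parsed.query).get("v")
            return values[0] if values else None
        for prefix in ("/embed/", "/shorts/"):
            if parsed.path.startswith(prefix):
                return parsed.path[len(prefix):].split("/")[0] or None
    return None


def thumbnail_urls(video_id: str) -> Dict[str, str]:
    """Map each thumbnail name to its candidate URL."""
    return {
        name: f"https://img.youtube.com/vi/{video_id}/{name}.jpg"
        for name in THUMBNAIL_NAMES
    }


# "abc123XYZ_-" is a placeholder ID, not a real video.
video_id = extract_video_id("https://www.youtube.com/watch?v=abc123XYZ_-&t=10")
urls = thumbnail_urls(video_id)
for name, url in urls.items():
    print(name, url)
```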

Sept. 21, 2024

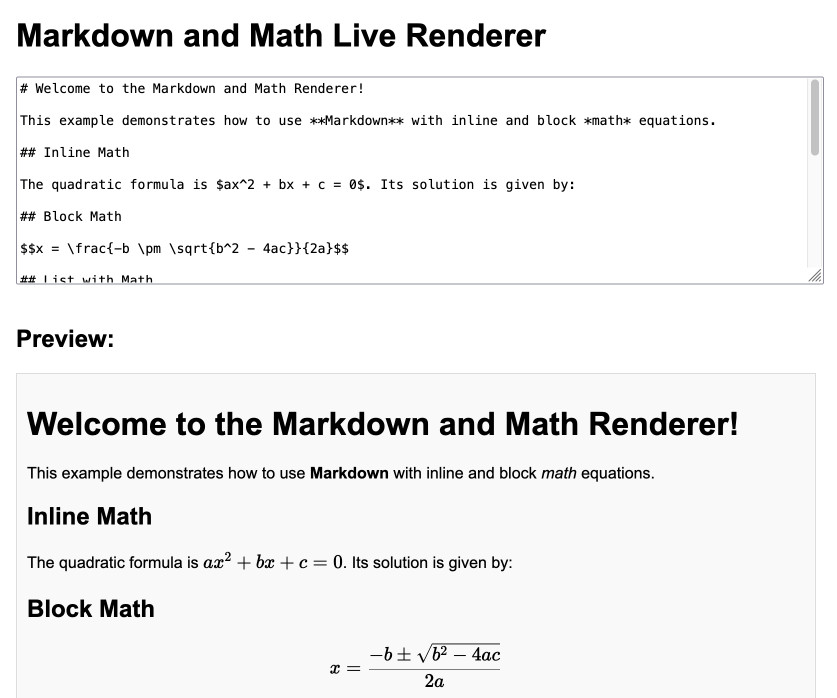

Markdown and Math Live Renderer.

Another of my tiny Claude-assisted JavaScript tools. This one lets you enter Markdown with embedded mathematical expressions (like $ax^2 + bx + c = 0$) and live renders those on the page, with an HTML version using MathML that you can export through copy and paste.

Here's the Claude transcript. I started by asking:

Are there any client side JavaScript markdown libraries that can also handle inline math and render it?

Claude gave me several options including the combination of Marked and KaTeX, so I followed up by asking:

Build an artifact that demonstrates Marked plus KaTeX - it should include a text area I can enter markdown in (repopulated with a good example) and live update the rendered version below. No react.

Which gave me this artifact, instantly demonstrating that what I wanted to do was possible.

I iterated on it a tiny bit to get to the final version, mainly to add that HTML export and a Copy button. The final source code is here.

Whether you think coding with AI works today or not doesn’t really matter.

But if you think functional AI helping to code will make humans dumber or isn’t real programming just consider that’s been the argument against every generation of programming tools going back to Fortran.

Sept. 22, 2024

How streaming LLM APIs work.

New TIL. I used curl to explore the streaming APIs provided by OpenAI, Anthropic and Google Gemini and wrote up detailed notes on what I learned.

Also includes example code for receiving streaming events in Python with HTTPX and receiving streaming events in client-side JavaScript using fetch().
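These APIs can deliver their streams as server-sent events, so the core of any client is a small parser for "data:" lines. Here's a minimal sketch that works on any iterable of text lines, with no network access. The `iter_sse_data` helper and the `{"delta": ...}` payload shape are invented for illustration; each provider uses its own JSON structure, and the `[DONE]` sentinel shown here is the convention OpenAI uses.

```python
import json
from typing import Iterable, Iterator, List


def iter_sse_data(lines: Iterable[str]) -> Iterator[str]:
    """Yield the data payload of each server-sent event in a stream of lines."""
    buffer: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line marks the end of one event.
            if buffer:
                yield "\n".join(buffer)
                buffer = []
            continue
        if line.startswith(":"):
            continue  # Comment line, often used as a keep-alive.
        field, _, value = line.partition(":")
        if field == "data":
            # A single leading space after the colon is not part of the value.
            buffer.append(value[1:] if value.startswith(" ") else value)
    if buffer:
        yield "\n".join(buffer)


# Hypothetical stream; real payloads differ between providers.
sample_lines = [
    'data: {"delta": "Hel"}',
    "",
    ": keep-alive",
    'data: {"delta": "lo"}',
    "",
    "data: [DONE]",
    "",
]

pieces = []
for payload in iter_sse_data(sample_lines):
    if payload == "[DONE]":
        break
    pieces.append(json.loads(payload)["delta"])

text = "".join(pieces)
print(text)
```

The same function can be fed the lines yielded by an HTTP client's streaming response, which keeps the parsing logic separate from the transport.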

Jiter (via) One of the challenges in dealing with LLM streaming APIs is the need to parse partial JSON - until the stream has ended you won't have a complete valid JSON object, but you may want to display components of that JSON as they become available.

I've solved this previously using the ijson streaming JSON library, see my previous TIL.

Today I found out about Jiter, a new option from the team behind Pydantic. It's written in Rust and extracted from pydantic-core, so the Python wrapper for it can be installed using:

pip install jiter

You can feed it an incomplete JSON bytes object and use partial_mode="on" to parse the valid subset:

import jiter

partial_json = b'{"name": "John", "age": 30, "city": "New Yor'
jiter.from_json(partial_json, partial_mode="on")
# {'name': 'John', 'age': 30}

Or use partial_mode="trailing-strings" to include incomplete string fields too:

jiter.from_json(partial_json, partial_mode="trailing-strings") # {'name': 'John', 'age': 30, 'city': 'New Yor'}

The current README was a little thin, so I submitted a PR with some extra examples. I got some help from files-to-prompt and Claude 3.5 Sonnet:

cd crates/jiter-python/ && files-to-prompt -c README.md tests | llm -m claude-3.5-sonnet --system 'write a new README with comprehensive documentation'

The problem I have with [pipenv shell] is that the act of manipulating the shell environment is crappy and can never be good. What all these "X shell" things do is just an abomination we should not promote IMO.

Tools should be written so that you do not need to reconfigure shells. That we normalized this over the last 10 years was a mistake and we are not forced to continue walking down that path :)

Sept. 23, 2024

SPAs incur complexity that simply doesn't exist with traditional server-based websites: issues such as search engine optimization, browser history management, web analytics and first page load time all need to be addressed. Proper analysis and consideration of the trade-offs is required to determine if that complexity is warranted for business or user experience reasons. Too often teams are skipping that trade-off analysis, blindly accepting the complexity of SPAs by default even when business needs don't justify it. We still see some developers who aren't aware of an alternative approach because they've spent their entire career in a framework like React.

— Thoughtworks, October 2022

simonw/docs cookiecutter template. Over the last few years I’ve settled on the combination of Sphinx, the Furo theme and the myst-parser extension (enabling Markdown in place of reStructuredText) as my documentation toolkit of choice, maintained in GitHub and hosted using ReadTheDocs.

My LLM and shot-scraper projects are two examples of that stack in action.

Today I wanted to spin up a new documentation site so I finally took the time to construct a cookiecutter template for my preferred configuration. You can use it like this:

pipx install cookiecutter

cookiecutter gh:simonw/docs

Or with uv:

uv tool run cookiecutter gh:simonw/docs

Answer a few questions:

[1/3] project (): shot-scraper

[2/3] author (): Simon Willison

[3/3] docs_directory (docs):

And it creates a docs/ directory ready for you to start editing docs:

cd docs

pip install -r requirements.txt

make livehtml

Sept. 24, 2024

Things I’ve Learned Serving on the Board of The Perl Foundation (via) My post about the PSF board inspired Perl Foundation secretary Makoto Nozaki to publish similar notes about how TPF (also known since 2019 as TPRF, for The Perl and Raku Foundation) operates.

Seeing this level of explanation about other open source foundations is fascinating. I’d love to see more of these.

Along those lines, I found the 2024 Financial Report from the Zig foundation really interesting too.

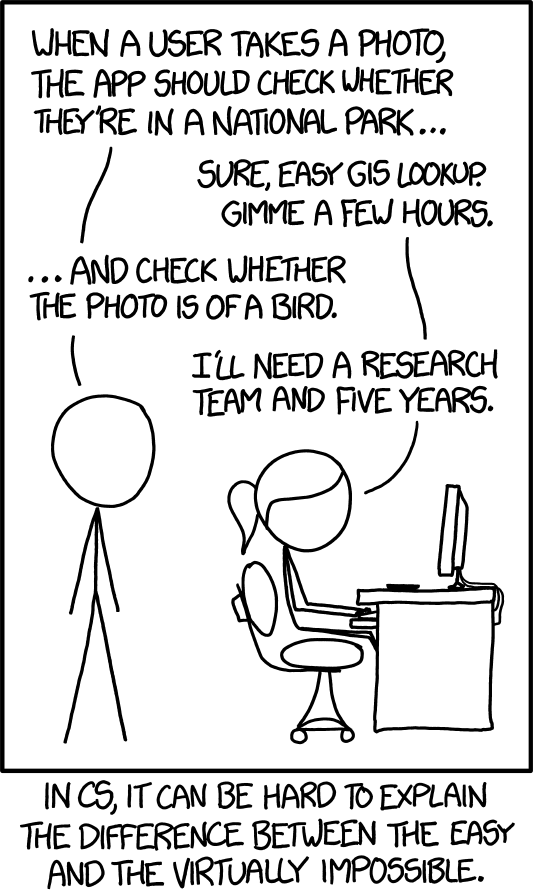

XKCD 1425 (Tasks) turns ten years old today (via) One of the all-time great XKCDs. It's amazing that "check whether the photo is of a bird" has gone from PhD-level to trivially easy to solve (with a vision LLM, or CLIP, or ResNet+ImageNet among others).

The key idea still very much stands though. Understanding the difference between easy and hard challenges in software development continues to require an enormous depth of experience.

I'd argue that LLMs have made this even worse.

Understanding what kind of tasks LLMs can and cannot reliably solve remains incredibly difficult and unintuitive. They're computer systems that are terrible at maths and that can't reliably look up facts!

On top of that, the rise of AI-assisted programming tools means more people than ever are beginning to create their own custom software.

These brand new AI-assisted proto-programmers are having a crash course in this easy-vs-hard problem.

I saw someone recently complaining that they couldn't build a Claude Artifact that could analyze images, even though they knew Claude itself could do that. Understanding why that's not possible involves understanding how the CSP headers that are used to serve Artifacts prevent the generated code from making its own API calls out to an LLM!

nanodjango. Richard Terry demonstrated this in a lightning talk at DjangoCon US today. It's the latest in a long line of attempts to get Django to work with a single file (I had a go at this problem 15 years ago with djng) but this one is really compelling.

I tried nanodjango out just now and it works exactly as advertised. First install it like this:

pip install nanodjango

Create a counter.py file:

from django.db import models
from nanodjango import Django

app = Django()

@app.admin # Registers with the Django admin
class CountLog(models.Model):
    timestamp = models.DateTimeField(auto_now_add=True)

@app.route("/")
def count(request):
    CountLog.objects.create()
    return f"<p>Number of page loads: {CountLog.objects.count()}</p>"

Then run it like this (it will run migrations and create a superuser as part of that first run):

nanodjango run counter.py

That's it! This gave me a fully configured Django application with models, migrations, the Django Admin configured and a bunch of other goodies such as Django Ninja for API endpoints.

Here's the full documentation.

Updated production-ready Gemini models.

Two new models from Google Gemini today: gemini-1.5-pro-002 and gemini-1.5-flash-002. Their -latest aliases will update to these new models in "the next few days", and new -001 suffixes can be used to stick with the older models. The new models benchmark slightly better in various ways and should respond faster.

Flash continues to have a 1,048,576 input token and 8,192 output token limit. Pro is 2,097,152 input tokens.

Google also announced a significant price reduction for Pro, effective on the 1st of October. Inputs less than 128,000 tokens drop from $3.50/million to $1.25/million (above 128,000 tokens it's dropping from $7 to $2.50) and output costs drop from $10.50/million to $5/million ($21 down to $10 for the >128,000 case).

For comparison, GPT-4o is currently $5/m input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it's even cheaper.

Correction: I missed gpt-4o-2024-08-06 which is listed later on the OpenAI pricing page and priced at $2.50/m input and $10/m output. So the new Gemini 1.5 Pro prices are undercutting that.

Gemini has always offered finely grained safety filters - it sounds like those are now turned down to minimum by default, which is a welcome change:

For the models released today, the filters will not be applied by default so that developers can determine the configuration best suited for their use case.

Also interesting: they've tweaked the expected length of default responses:

For use cases like summarization, question answering, and extraction, the default output length of the updated models is ~5-20% shorter than previous models.

Sept. 25, 2024

DJP: A plugin system for Django

DJP is a new plugin mechanism for Django, built on top of Pluggy. I announced the first version of DJP during my talk yesterday at DjangoCon US 2024, How to design and implement extensible software with plugins. I’ll post a full write-up of that talk once the video becomes available—this post describes DJP and how to use what I’ve built so far.

[... 1,664 words]

The Pragmatic Engineer Podcast: AI tools for software engineers, but without the hype – with Simon Willison. Gergely Orosz has a brand new podcast, and I was the guest for the first episode. We covered a bunch of ground, but my favorite topic was an exploration of the (very legitimate) reasons that many engineers are resistant to taking advantage of AI-assisted programming tools.

We used this model [periodically transmitting configuration to different hosts] to distribute translations, feature flags, configuration, search indexes, etc at Airbnb. But instead of SQLite we used Sparkey, a KV file format developed by Spotify. In early years there was a Cron job on every box that pulled that service’s thingies; then once we switched to Kubernetes we used a daemonset & host tagging (taints?) to pull a variety of thingies to each host and then ensure the services that use the thingies only ran on the hosts that had the thingies.

Solving a bug with o1-preview, files-to-prompt and LLM.

I added a new feature to DJP this morning: you can now have plugins specify their middleware in terms of how it should be positioned relative to other middleware - inserted directly before or directly after django.middleware.common.CommonMiddleware for example.
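The underlying list manipulation is simple enough to sketch. This is a standalone illustration of positioning one middleware relative to another in a MIDDLEWARE-style list. The `insert_relative` helper and the `myplugin.middleware.ExampleMiddleware` path are hypothetical, and neither is part of DJP's API.

```python
from typing import List


def insert_relative(
    middleware: List[str], new_item: str, anchor: str, before: bool
) -> List[str]:
    """Return a copy of the list with new_item placed next to anchor."""
    result = list(middleware)
    try:
        index = result.index(anchor)
    except ValueError:
        # Assumption for this sketch: if the anchor is missing, append at the end.
        result.append(new_item)
        return result
    result.insert(index if before else index + 1, new_item)
    return result


MIDDLEWARE = [
    "django.middleware.security.SecurityMiddleware",
    "django.middleware.common.CommonMiddleware",
    "django.middleware.csrf.CsrfViewMiddleware",
]

COMMON = "django.middleware.common.CommonMiddleware"
PLUGIN = "myplugin.middleware.ExampleMiddleware"  # made-up dotted path

placed_before = insert_relative(MIDDLEWARE, PLUGIN, COMMON, before=True)
placed_after = insert_relative(MIDDLEWARE, PLUGIN, COMMON, before=False)
print(placed_before)
print(placed_after)
```

Returning a new list rather than mutating the input keeps the original settings value untouched, which makes the behaviour easier to test.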

At one point I got stuck with a weird test failure, and after ten minutes of head scratching I decided to pipe the entire thing into OpenAI's o1-preview to see if it could spot the problem. I used files-to-prompt to gather the code and LLM to run the prompt:

files-to-prompt **/*.py -c | llm -m o1-preview "

The middleware test is failing showing all of these - why is MiddlewareAfter repeated so many times?

['MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware2', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware4', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware2', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware5', 'MiddlewareAfter', 'Middleware3', 'MiddlewareAfter', 'Middleware', 'MiddlewareBefore']"

The model whirled away for a few seconds and spat out an explanation of the problem - one of my middleware classes was accidentally calling self.get_response(request) in two different places.
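That kind of bug is easy to reproduce outside Django. The sketch below is a simplified simulation, not the DJP test itself: each middleware records its name before calling the next handler, and one hypothetical middleware calls get_response twice, so everything beneath it in the chain runs twice.

```python
from typing import Callable, List, Optional

Handler = Callable[[str], str]


def make_middleware(
    name: str, get_response: Handler, log: List[str], call_twice: bool = False
) -> Handler:
    """Build a function-style middleware that logs its name when invoked."""

    def middleware(request: str) -> str:
        log.append(name)
        response = get_response(request)
        if call_twice:
            # The bug: a second call re-runs every handler further down the chain.
            response = get_response(request)
        return response

    return middleware


def build_chain(names: List[str], log: List[str], buggy: Optional[str] = None) -> Handler:
    """Wrap a trivial view in middleware, outermost name first."""

    def view(request: str) -> str:
        log.append("view")
        return "ok"

    handler: Handler = view
    for name in reversed(names):
        handler = make_middleware(name, handler, log, call_twice=(name == buggy))
    return handler


log: List[str] = []
build_chain(["Outer", "Buggy", "Inner"], log, buggy="Buggy")("request")
print(log)
```

With several layers beneath the faulty middleware, or more than one faulty layer, the repeats compound, which is how a short middleware list can turn into a long one full of duplicates.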

I did enjoy how o1 attempted to reference the relevant Django documentation and then half-repeated, half-hallucinated a quote from it.

This took 2,538 input tokens and 4,354 output tokens - by my calculations at $15/million input and $60/million output that prompt cost just under 30 cents.
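The arithmetic behind that estimate, as a short sketch using the token counts and per-million prices quoted above (the `prompt_cost_dollars` helper name is made up):

```python
def prompt_cost_dollars(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Cost of one prompt given per-million-token prices in dollars."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


cost = prompt_cost_dollars(2_538, 4_354, 15, 60)
print(f"{cost * 100:.2f} cents")
```

This prints 29.93 cents. Almost all of it comes from the output tokens, which include the model's reasoning.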