June 2024

105 posts: 7 entries, 64 links, 25 quotes, 9 beats

June 17, 2024

How researchers cracked an 11-year-old password to a crypto wallet. If you used the RoboForm password manager to generate a password prior to their 2015 bug fix, that password was generated using a pseudo-random number generator based on your device’s current time, which means an attacker may be able to brute-force the password from a shorter list of options if they can derive the rough date when it was created.

(In this case the password cracking was consensual, to recover a lost wallet, but this still serves as a warning to any RoboForm users with passwords from that era.)
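To make the time-seeding weakness concrete, here is a minimal sketch. It is not RoboForm's actual algorithm: `generate_password`, the alphabet, the password length and the timestamps are all hypothetical stand-ins. It only shows why a generator whose output depends solely on the clock can be searched one second at a time.

```python
import random
import string

ALPHABET = string.ascii_letters + string.digits


def generate_password(seed: int, length: int = 12) -> str:
    """Toy generator (hypothetical): the output depends only on the seed."""
    rng = random.Random(seed)
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def brute_force(target: str, start: int, end: int, length: int = 12):
    """Try every whole-second timestamp in [start, end) as a seed."""
    for seed in range(start, end):
        if generate_password(seed, length) == target:
            return seed
    return None


# Assumption: the attacker knows the password was created within this hour.
window_start = 1_368_000_000  # hypothetical Unix timestamp
secret_seed = window_start + 1234
password = generate_password(secret_seed)

# 3,600 candidates to check, instead of 62**12 possible passwords.
recovered = brute_force(password, window_start, window_start + 3600)
print(recovered, generate_password(recovered) == password)
```

The narrower the estimate of the creation date, the smaller the list of candidate seeds.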

pkgutil.resolve_name(name) (via) Adam Johnson pointed out this utility method, added to the Python standard library in Python 3.9. It lets you provide a string that specifies a Python identifier to import from a module - a pattern frequently used in things like Django's configuration.

Path = pkgutil.resolve_name("pathlib:Path")
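Here's a slightly longer sketch of the configuration pattern this enables. The `SETTINGS` dictionary and `load_setting` helper are hypothetical names I made up for illustration; only `pkgutil.resolve_name` itself comes from the standard library (Python 3.9 or later).

```python
import pkgutil

# Hypothetical settings: strings naming objects to import at runtime.
SETTINGS = {
    "PATH_CLASS": "pathlib:Path",
    "JSON_ENCODER": "json:JSONEncoder",
    "JOIN_FUNCTION": "os.path:join",
}


def load_setting(key: str):
    """Resolve a 'package.module:object' string to the object it names."""
    return pkgutil.resolve_name(SETTINGS[key])


path_class = load_setting("PATH_CLASS")
encoder_class = load_setting("JSON_ENCODER")
join = load_setting("JOIN_FUNCTION")

# The colon is optional: a plain dotted name is also accepted.
dumps = pkgutil.resolve_name("json.dumps")

print(path_class, encoder_class, join("a", "b"), dumps({"a": 1}))
```

The colon form tells the function exactly where the module path ends and the attribute path begins, so it avoids the trial-and-error imports the dotted form can need.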

June 18, 2024

Anthropic release notes (via) Anthropic have started publishing release notes! Currently available for their API and their apps (mobile and web).

What I'd really like to see are release notes for the models themselves, though as far as I can tell there haven't been any updates to those since the Claude 3 models were first released (the Haiku model name in the API is still claude-3-haiku-20240307 and Anthropic say they'll change that identifier after any updates to the model).

Claude: Building evals and test cases. More documentation updates from Anthropic: this section on writing evals for Claude is new today and includes Python code examples for a number of different evaluation techniques.

Included are several examples of the LLM-as-judge pattern, plus an example using cosine similarity and another that uses the new-to-me Rouge Python library that implements the ROUGE metric for evaluating the quality of summarized text.
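To give a feel for what a ROUGE score measures, here's a hand-rolled sketch of ROUGE-1 (unigram overlap). This is not the API of the Rouge library mentioned above and not the code from Anthropic's documentation: `rouge_1` is a hypothetical helper that assumes plain lowercase whitespace tokenization, with none of the stemming or other preprocessing real implementations may apply.

```python
from collections import Counter


def rouge_1(candidate: str, reference: str) -> dict:
    """Simplified ROUGE-1: unigram overlap between a candidate and a reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word (clipped overlap).
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_1("the cat sat on the mat", "the cat lay on the mat")
print(scores)
```

Five of the six words overlap in this example, so precision, recall and F1 all come out at 5/6.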

Tags with descriptions. Tiny new feature on my blog: I can now add optional descriptions to my tag pages, for example on datasette and sqlite-utils and prompt-injection.

I built this feature on a live call this morning as an unplanned demonstration of GitHub's new Copilot Workspace feature, where you can run a prompt against a repository and have it plan, implement and file a pull request implementing a change to the code.

My prompt was:

Add a feature that lets me add a description to my tag pages, stored in the database table for tags and visible on the /tags/x/ page at the top

It wasn't as compelling a demo as I expected: Copilot Workspace currently has to stream an entire copy of each file it modifies, which can take a long time if your codebase includes several large files that need to be changed.

It did create a working implementation on its first try, though I had given it an extra tip not to forget the database migration. I ended up making a bunch of changes myself before I shipped it, listed in the pull request.

I've been using Copilot Workspace quite a bit recently as a code explanation tool - I'll prompt it to e.g. "add architecture documentation to the README" on a random repository not owned by me, then read its initial plan to see what it's figured out without going all the way through to the implementation and PR phases. Example in this tweet where I figured out the rough design of the Jina AI Reader API for this post.

June 19, 2024

I’ve stopped using box plots. Should you? (via) Nick Desbarats explains box plots (including with this excellent short YouTube video) and then discusses why he thinks "typically less than 20 percent" of participants in his workshops already understand how to read them.

A key problem is that they are unintuitive: a box plot has four sections, two thin lines (the top and bottom whisker segments) and two larger boxes, joined around the median. Each of these elements represents the same number of samples (one quartile each) but the thin lines vs. thick boxes imply that the whiskers contain fewer samples than the boxes.
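A quick way to check the "one quartile each" claim is to compute the cut points yourself. This sketch uses made-up skewed data and assumes the simple box plot variant where the whiskers run all the way to the minimum and maximum, with no separate outlier markers.

```python
import statistics

# Hypothetical skewed sample: the squares of 1..40.
samples = [x ** 2 for x in range(1, 41)]

q1, median, q3 = statistics.quantiles(samples, n=4)

# The four sections: lower whisker, lower box, upper box, upper whisker.
edges = [float("-inf"), q1, median, q3, float("inf")]
counts = [
    sum(1 for x in samples if lo < x <= hi)
    for lo, hi in zip(edges, edges[1:])
]

# How long each section would be drawn on the value axis.
drawn = [min(samples), q1, median, q3, max(samples)]
lengths = [hi - lo for lo, hi in zip(drawn, drawn[1:])]

print(counts)   # samples per section
print(lengths)  # drawn length per section
```

Every section holds ten samples even though the drawn lengths differ a lot. The length of a whisker or box shows how spread out that quarter of the data is, not how much data it contains.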

About the Lawrence Times (via) The town of Lawrence, Kansas is where Django was born. I'm delighted to learn that it has a new independent online news publication as of March 2021 - the Lawrence Times.

It's always exciting to see local media startups like this one, and they've been publishing for three years now supported by both advertiser revenue and optional paid subscriptions.

Weeknotes: Datasette Studio and a whole lot of blogging

I’m still spinning back up after my trip back to the UK, so actual time spent building things has been less than I’d like. I presented an hour-long workshop on command-line LLM usage, wrote five full blog entries (since my last weeknotes) and I’ve also been leaning more into short-form link blogging, which is a lot more prominent on this site now since my homepage redesign last week.

[... 736 words]

Civic Band. Exciting new civic tech project from Philip James: 30 (and counting) Datasette instances serving full-text search enabled collections of OCRd meeting minutes for different civic governments. Includes 20,000 pages for Alameda, 17,000 for Pittsburgh, 3,567 for Baltimore and an enormous 117,000 for Maui County.

Philip includes some notes on how they're doing it. They gather PDF minute notes from anywhere that provides API access to them, then run local Tesseract for OCR (the cost of cloud-based OCR proving prohibitive given the volume of data). The collection is then deployed to a single VPS running multiple instances of Datasette via Caddy, one instance for each of the covered regions.

June 20, 2024

State-of-the-art music scanning by Soundslice. It's been a while since I checked in on Soundslice, Adrian Holovaty's beautiful web application focused on music education.

The latest feature is spectacular. The Soundslice music editor - already one of the most impressive web applications I've ever experienced - can now import notation directly from scans or photos of sheet music.

The attention to detail is immaculate. The custom machine learning model can handle a wide variety of notation details, and the system asks the user to verify or correct details that it couldn't perfectly determine using a neatly designed flow.

Free accounts can scan two single-page documents a month, and paid plans get a much higher allowance. I tried it out just now on a low-resolution image I found on Wikipedia and it did a fantastic job, even allowing me to listen to a simulated piano rendition of the music once it had finished processing.

It's worth spending some time with the release notes for the feature to appreciate how much work they've put into improving it since the initial release.

If you're new to Soundslice, here's an example of their core player interface which syncs the display of music notation to an accompanying video.

Adrian wrote up some detailed notes on the machine learning behind the feature when they first launched it in beta back in November 2022.

OMR [Optical Music Recognition] is an inherently hard problem, significantly more difficult than text OCR. For one, music symbols have complex spatial relationships, and mistakes have a tendency to cascade. A single misdetected key signature might result in multiple incorrect note pitches. And there’s a wide diversity of symbols, each with its own behavior and semantics — meaning the problems and subproblems aren’t just hard, there are many of them.

[...] And then some absolute son of a bitch created ChatGPT, and now look at us. Look at us, resplendent in our pauper's robes, stitched from corpulent greed and breathless credulity, spending half of the planet's engineering efforts to add chatbot support to every application under the sun when half of the industry hasn't worked out how to test database backups regularly.

Claude 3.5 Sonnet. Anthropic released a new model this morning, and I think it's likely now the single best available LLM. Claude 3 Opus was already mostly on-par with GPT-4o, and the new 3.5 Sonnet scores higher than Opus on almost all of Anthropic's internal evals.

It's also twice the speed and one fifth of the price of Opus (it's the same price as the previous Claude 3 Sonnet). To compare:

- gpt-4o: $5/million input tokens and $15/million output

- Claude 3.5 Sonnet: $3/million input, $15/million output

- Claude 3 Opus: $15/million input, $75/million output

Similar to Claude 3 Haiku then, which both under-cuts and out-performs OpenAI's GPT-3.5 model.
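The per-million-token prices listed above are easier to compare against a concrete workload. This sketch uses those three price pairs; the `cost_usd` helper, the model keys and the 10,000 input / 1,000 output token request are hypothetical, chosen just for the arithmetic.

```python
# (input, output) prices in USD per million tokens, from the list above.
PRICES = {
    "gpt-4o": (5.00, 15.00),
    "claude-3.5-sonnet": (3.00, 15.00),
    "claude-3-opus": (15.00, 75.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request at the listed per-million-token prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: 10,000 input tokens and 1,000 output tokens.
costs = {model: cost_usd(model, 10_000, 1_000) for model in PRICES}
for model, cost in costs.items():
    print(f"{model}: ${cost:.3f}")
```

For that request the totals work out to 6.5 cents for gpt-4o, 4.5 cents for Claude 3.5 Sonnet and 22.5 cents for Claude 3 Opus, which matches the "one fifth of the price of Opus" figure.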

In addition to the new model, Anthropic also added an "artifacts" feature to their Claude web interface. The most exciting part of this is that any of the Claude models can now build and then render web pages and SPAs, directly in the Claude interface.

This means you can prompt them to e.g. "Build me a web app that teaches me about mandelbrot fractals, with interactive widgets" and they'll do exactly that - I tried that prompt on Claude 3.5 Sonnet earlier and the results were spectacular (video demo).

An unsurprising note at the end of the post:

To complete the Claude 3.5 model family, we’ll be releasing Claude 3.5 Haiku and Claude 3.5 Opus later this year.

If the pricing stays consistent with Claude 3, Claude 3.5 Haiku is going to be a very exciting model indeed.

One of the core constitutional principles that guides our AI model development is privacy. We do not train our generative models on user-submitted data unless a user gives us explicit permission to do so. To date we have not used any customer or user-submitted data to train our generative models.

llm-claude-3 0.4. LLM plugin release adding support for the new Claude 3.5 Sonnet model:

pipx install llm

llm install -U llm-claude-3

llm keys set claude

# paste API key here

llm -m claude-3.5-sonnet \
  'a joke about a pelican and a walrus having lunch'

June 21, 2024

It is in the public good to have AI produce quality and credible (if ‘hallucinations’ can be overcome) output. It is in the public good that there be the creation of original quality, credible, and artistic content. It is not in the public good if quality, credible content is excluded from AI training and output OR if quality, credible content is not created.

Val Vibes: Semantic search in Val Town. A neat case-study by JP Posma on how Val Town's developers can use Val Town Vals to build prototypes of new features that later make it into Val Town core.

This one explores building out semantic search against Vals using OpenAI embeddings and the PostgreSQL pgvector extension.
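The core idea behind embedding-based semantic search fits in a few lines. This is an in-memory sketch, not Val Town's implementation: the three-dimensional vectors and Val names are made up (real embeddings have hundreds or thousands of dimensions), and a sorted Python list stands in for the database. In pgvector the equivalent ranking would be done in SQL, where the `<=>` operator computes cosine distance.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vector, documents, k=2):
    """Return the names of the k documents most similar to the query."""
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical Vals with toy 3-dimensional "embeddings".
VALS = {
    "send-email": [0.9, 0.1, 0.0],
    "rss-reader": [0.1, 0.9, 0.1],
    "smtp-relay": [0.8, 0.2, 0.1],
}

results = search([1.0, 0.0, 0.0], VALS, k=2)
print(results)
```

In a real system the query vector would come from running the user's search text through the same embedding model used for the stored documents.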

OpenAI was founded to build artificial general intelligence safely, free of outside commercial pressures. And now every once in a while it shoots out a new AI firm whose mission is to build artificial general intelligence safely, free of the commercial pressures at OpenAI.

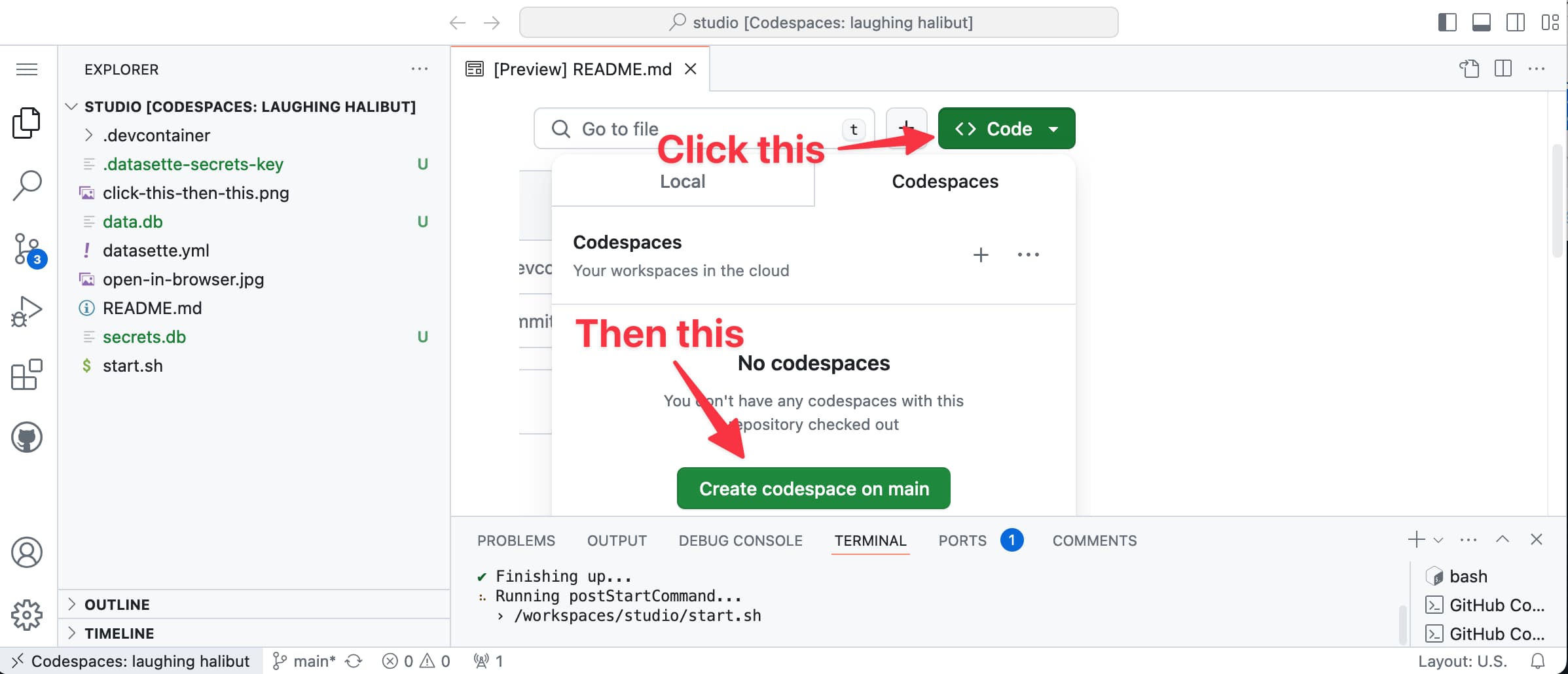

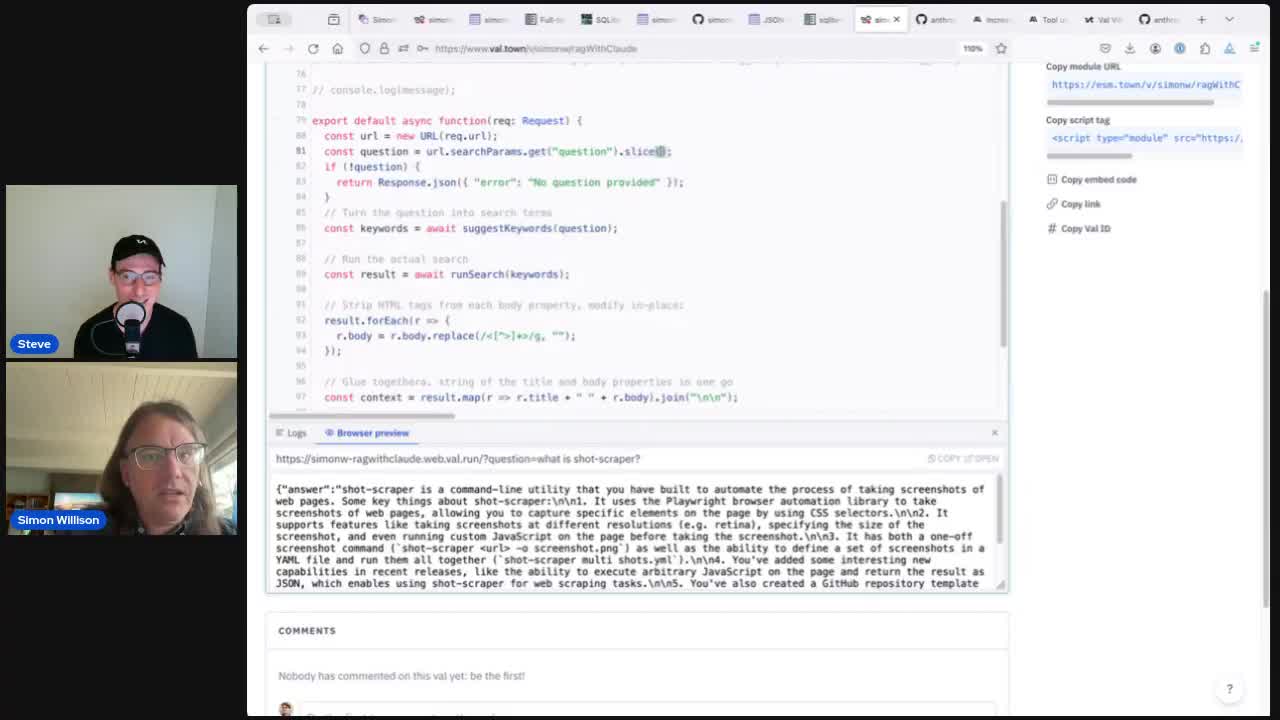

Building search-based RAG using Claude, Datasette and Val Town

Retrieval Augmented Generation (RAG) is a technique for adding extra “knowledge” to systems built on LLMs, allowing them to answer questions against custom information not included in their training data. A common way to implement this is to take a question from a user, translate that into a set of search queries, run those against a search engine and then feed the results back into the LLM to generate an answer.
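The flow described above can be sketched as a single function. Everything here is a hypothetical stand-in rather than the code from the full post: `answer_with_rag`, the prompt wording, and the three fake helpers exist only so the example runs without an LLM or a search engine. In a real system `extract_queries` and `complete` would call a model and `search` would hit a search index.

```python
from typing import Callable, List


def answer_with_rag(
    question: str,
    extract_queries: Callable[[str], List[str]],
    search: Callable[[str], List[str]],
    complete: Callable[[str], str],
    max_results: int = 5,
) -> str:
    """Question -> search queries -> search results -> prompt -> answer."""
    results: List[str] = []
    for query in extract_queries(question):
        for doc in search(query):
            if doc not in results:  # skip documents already collected
                results.append(doc)
    context = "\n\n".join(results[:max_results])
    prompt = (
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer using only the context above."
    )
    return complete(prompt)


# Hypothetical stand-ins so the sketch is runnable on its own.
CORPUS = [
    "Datasette is a tool for exploring and publishing data.",
    "Val Town is a platform for running small scripts.",
]


def fake_extract_queries(question: str) -> List[str]:
    return [w.strip("?").lower() for w in question.split() if len(w) > 4]


def fake_search(query: str) -> List[str]:
    return [doc for doc in CORPUS if query in doc.lower()]


def fake_complete(prompt: str) -> str:
    return prompt  # echo the prompt instead of calling a model


answer = answer_with_rag(
    "What is Datasette?", fake_extract_queries, fake_search, fake_complete
)
print(answer)
```

Passing the three steps in as functions keeps the pipeline itself independent of any particular model or search backend.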

[... 3,372 words]

Datasette 0.64.8. A very small Datasette release, fixing a minor potential security issue where the name of missing databases or tables was reflected on the 404 page in a way that could allow an attacker to present arbitrary text to a user who followed a link. Not an XSS attack (no code could be executed) but still a potential vector for confusing messages.

June 22, 2024

Wikipedia Manual of Style: Linking (via) I started a conversation on Mastodon about the grammar of linking: how to decide where in a phrase an inline link should be placed.

Lots of great (and varied) replies there. The most comprehensive style guide I've seen so far is this one from Wikipedia, via Tom Morris.

In our “who validates the validators” user studies, we found that people expected—and also desired—for the LLM to learn from any human interaction. That too, “as efficiently as possible” (ie after 1-2 demonstrations, the LLM should “get it”)

June 23, 2024

The people who are most confident AI can replace writers are the ones who think writing is typing.

llama.ttf (via) llama.ttf is "a font file which is also a large language model and an inference engine for that model".

You can see it kick into action at 8m28s in this video, where creator Søren Fuglede Jørgensen types "Once upon a time" followed by dozens of exclamation marks, and those exclamation marks then switch out to render a continuation of the story. But... when they paste the text out of the editor again it shows that the original exclamation marks were preserved - the LLM output existed only in the way those characters were rendered.

The key trick here is that the font renderer library HarfBuzz (used by Firefox, Chrome, Android, GNOME and more) added a new WebAssembly extension in version 8.0 last year, which is powerful enough to run a full LLM based on the tinyllama-15M model - which fits in a 60MB font file.

(Here's a related demo from Valdemar Erk showing Tetris running in a WASM font, at 22m56s in this video.)

The source code for llama.ttf is available on GitHub.

For some reason, many people still believe that browsers need to include non-standard hacks in HTML parsing to display the web correctly.

In reality, the HTML parsing spec is exhaustively detailed. If you implement it as described, you will have a web-compatible parser.

June 24, 2024

Microfeatures I Love in Blogs and Personal Websites (via) This post by Daniel Fedorin (and the accompanying Hacker News thread) is a nice reminder of one of the most fun things about building your own personal website: it gives you a low-risk place to experiment with details like footnotes, tables of contents, linkable headings, code blocks, RSS feeds, link previews and more.

New blog feature: Support for markdown in quotations. Another incremental improvement to my blog. I've been collecting quotations here since 2006 - I now render them using Markdown (previously they were just plain text). Here's one example. The full set of 920 (and counting) quotations can be explored using this search filter.