310 posts tagged “sqlite”

SQLite is the world's most widely deployed database engine.

2021

Hosting SQLite databases on Github Pages (via) I've seen the trick of running SQLite compiled to WASM in the browser before, but here it comes with an incredibly clever bonus trick: it uses SQLite's page structure to fetch subsets of the database file via HTTP range requests, which means you can run indexed SQL queries against a 600MB database file while only fetching a few MBs of data over the wire. Absolutely brilliant. Tucked away at the end of the post is another neat trick: making the browser DOM available to SQLite as a virtual table, so you can query and update the DOM of the current page using SQL!

Spatialite Speed Test. Part of an excellent series of posts about SpatiaLite from 2012—here John C. Zastrow reports on running polygon intersection queries against a 1.9GB database file in 40 seconds without an index and 0.186 seconds using the SpatialIndex virtual table mechanism.

sqlite-plus (via) Anton Zhiyanov bundled together a bunch of useful SQLite C extensions for things like statistical functions, unicode string normalization and handling CSV files as virtual tables. The GitHub Actions workflow here is a particularly useful example of compiling SQLite extensions for three different platforms.

logpaste (via) Useful example of how to use the Litestream SQLite replication tool in a Dockerized application: S3 credentials are passed to the container on startup, it then attempts to restore the SQLite database from S3 and starts a Litestream process in the same container to periodically synchronize changes back up to the S3 bucket.

sqlite-uuid (via) Another Python package that wraps a SQLite module written in C: this one provides access to UUID functions as SQLite functions.

sqlite-spellfix (via) I really like this pattern: “pip install sqlite-spellfix” gets you a Python module which includes a compiled (on your system when pip install ran) copy of the SQLite spellfix1 module, plus a utility variable containing its path so you can easily load it into a SQLite connection.

Fuzzy Name Matching in Postgres. Paul Ramsey describes how to implement fuzzy name matching in PostgreSQL using the fuzzystrmatch extension and its levenshtein() and soundex() functions, plus functional indexes to query against indexed soundex first and then apply slower Levenshtein. The same tricks should also work against SQLite using the datasette-jellyfish plugin.

Cross-database queries in SQLite (and weeknotes)

I released Datasette 0.55 and sqlite-utils 3.6 this week with a common theme across both releases: supporting cross-database joins.

[... 720 words]Why I Built Litestream. Litestream is a really exciting new piece of technology by Ben Johnson, who previously built BoltDB, the key-value store written in Go that is used by etcd. It adds replication to SQLite by running a process that converts the SQLite WAL log into a stream that can be saved to another folder or pushed to S3. The S3 option is particularly exciting—Ben estimates that keeping a full point-in-time recovery log of a high write SQLite database should cost in the order of a few dollars a month. I think this could greatly expand the set of use-cases for which SQLite is sensible choice.

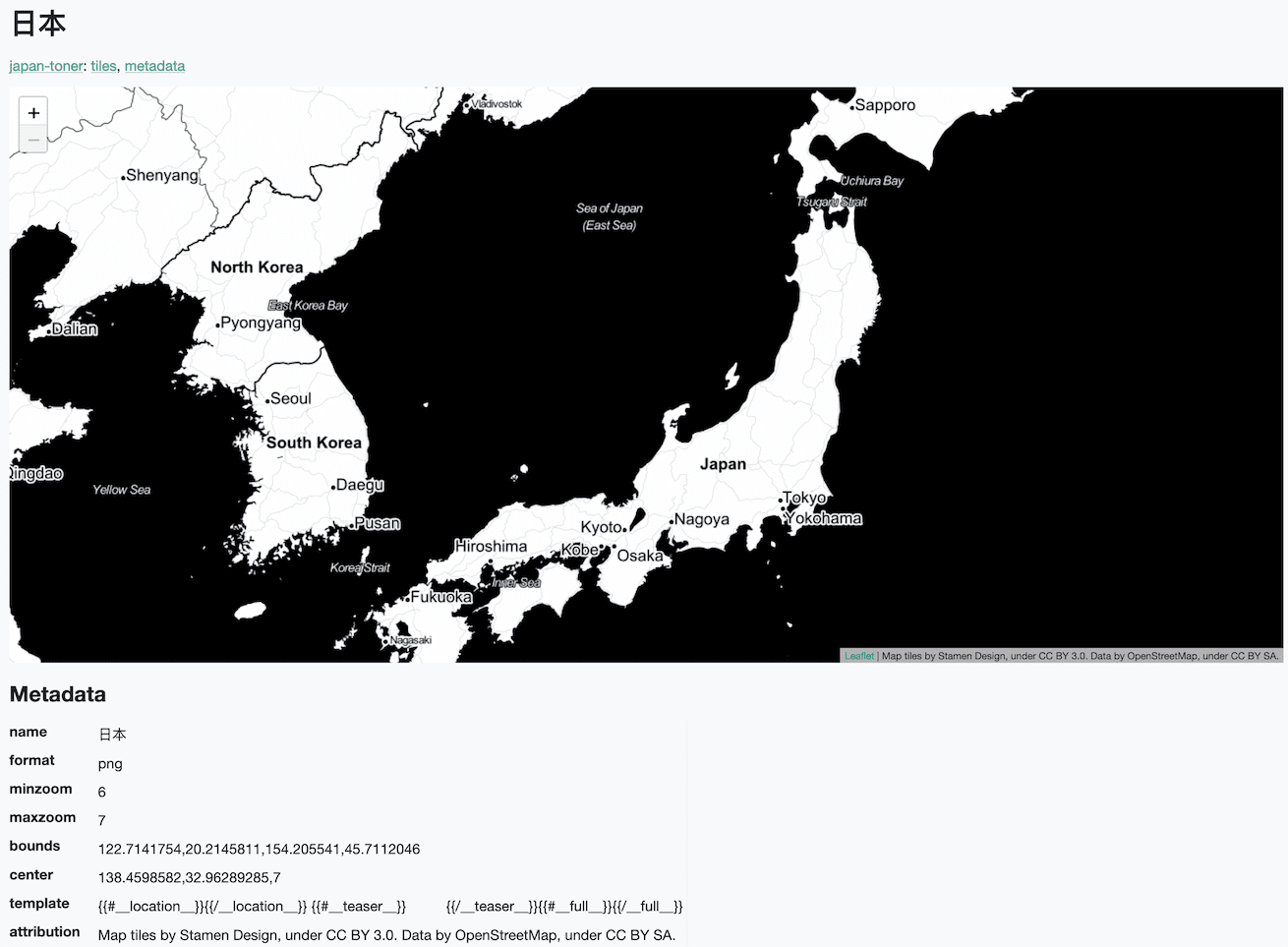

Serving map tiles from SQLite with MBTiles and datasette-tiles

Working on datasette-leaflet last week re-kindled my interest in using Datasette as a GIS (Geographic Information System) platform. SQLite already has strong GIS functionality in the form of SpatiaLite and datasette-cluster-map is currently the most downloaded plugin. Most importantly, maps are fun!

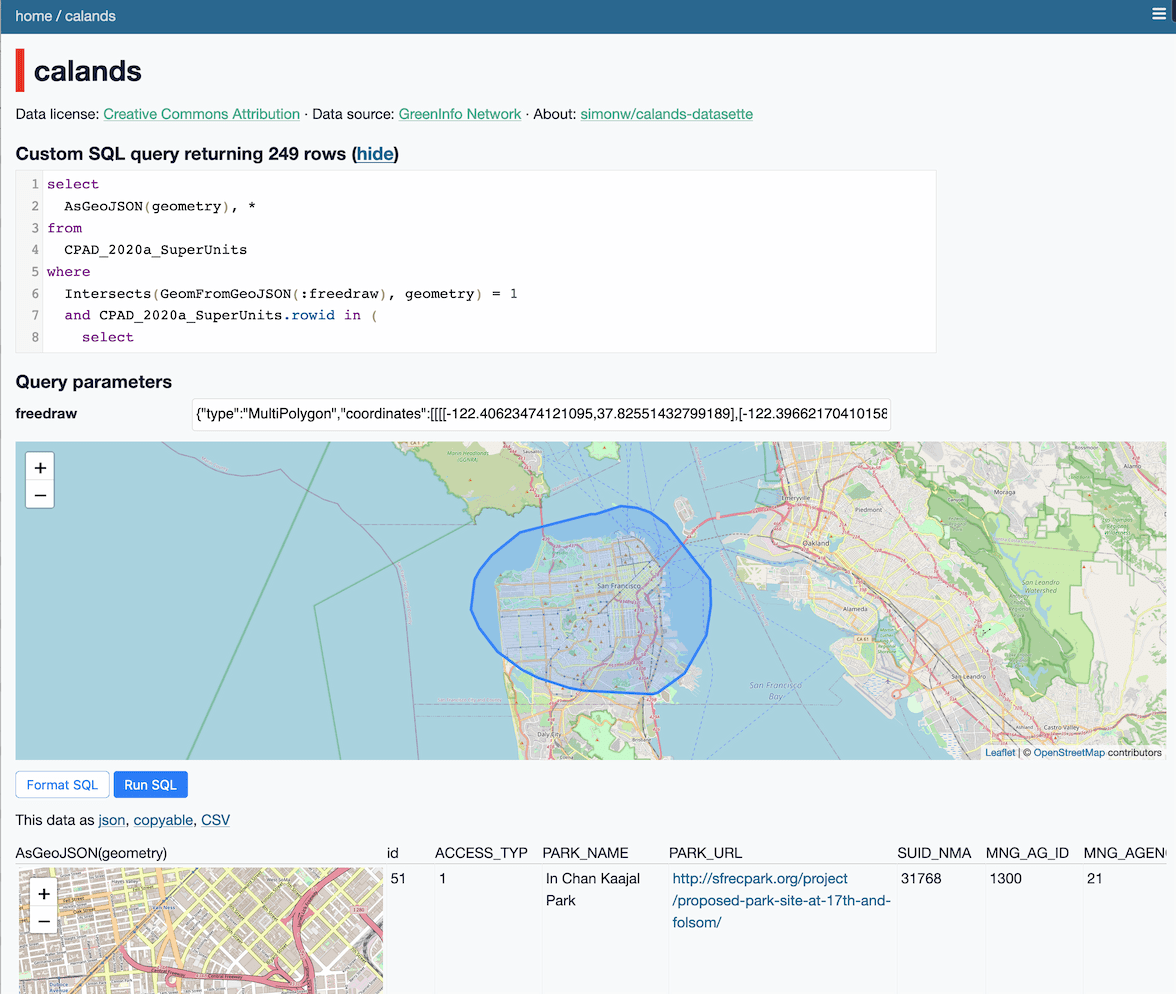

[... 1,334 words]Drawing shapes on a map to query a SpatiaLite database (and other weeknotes)

This week I built a Datasette plugin that lets you query a database by drawing shapes on a map!

[... 950 words]sqlite-utils 3.2 (via) As discussed in my weeknotes yesterday, this is the release of sqlite-utils that adds the new “cached table counts via triggers” mechanism.

Weeknotes: A flurry of not-quite-finished features

My Christmas present to myself this year was to allow myself to spend a week working on stuff I found interesting, rather than sticking to the most important things. This may have been a mistake: it’s left me with a flurry of interesting but not-quite-finished features.

[... 2,249 words]2020

Replicating SQLite with rqlite (via) I’ve been trying out rqlite, a “lightweight, distributed relational database, which uses SQLite as its storage engine”. It’s written in Go and uses the Raft consensus algorithm to allow a cluster of nodes to elect a leader and replicate SQLite statements between them. By default it uses in-memory SQLite databases with an on-disk Raft replication log—here are my notes on running it in “on disk” mode as a way to run multiple Datasette processes against replicated SQLite database files.

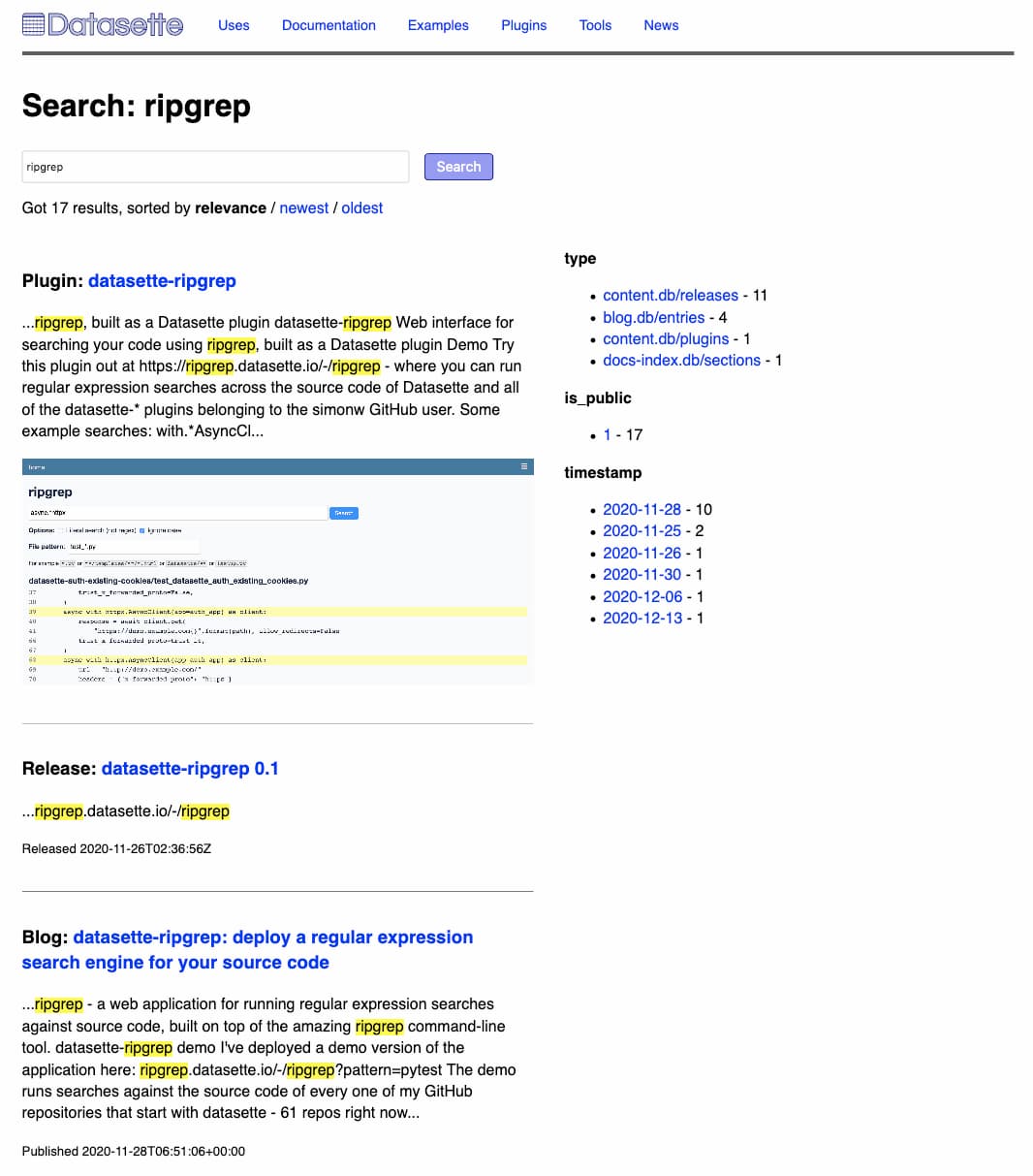

Building a search engine for datasette.io

This week I added a search engine to datasette.io, using the search indexing tool I’ve been building for Dogsheep.

[... 1,391 words]I get asked a lot about learning to code. Sure, if you can. It's fun. But the real action, the crux of things, is there in the database. Grab a tiny, free database like SQLite. Import a few million rows of data. Make them searchable. It's one of the most soothing activities known to humankind, taking big piles of messy data and massaging them into the rigid structure required of a relational database. It's true power.

CG-SQL (via) This is the toolkit the Facebook Messenger team wrote to bring stored procedures to SQLite. It implements a custom version of the T-SQL language which it uses to generate C code that can then be compiled into a SQLite module.

Project LightSpeed: Rewriting the Messenger codebase for a faster, smaller, and simpler messaging app (via) Facebook rewrote their iOS messaging app earlier this year, dropping it from 1.7m lines of code to 360,000 and reducing the binary size to a quarter of what it was. A key part of the new app’s architecture is much heavier reliance on SQLite to coordinate data between views, and to dynamically configure how different views are displayed. They even built their own custom system to add stored procedures to SQLite so they could execute portable business logic inside the database.

Proof of concept: sqlite_utils magic for Jupyter (via) Tony Hirst has been experimenting with building a Jupyter “magic” that adds special syntax for using sqlite-utils to insert data and run queries. Query results come back as a Pandas DataFrame, which Jupyter then displays as a table.

Pikchr. Interesting new project from SQLite creator D. Richard Hipp. Pikchr is a new mini language for describing visual diagrams, designed to be embedded in Markdown documentation. It’s already enabled for the SQLite forum. Implementation is a no-dependencies C library and output is SVG.

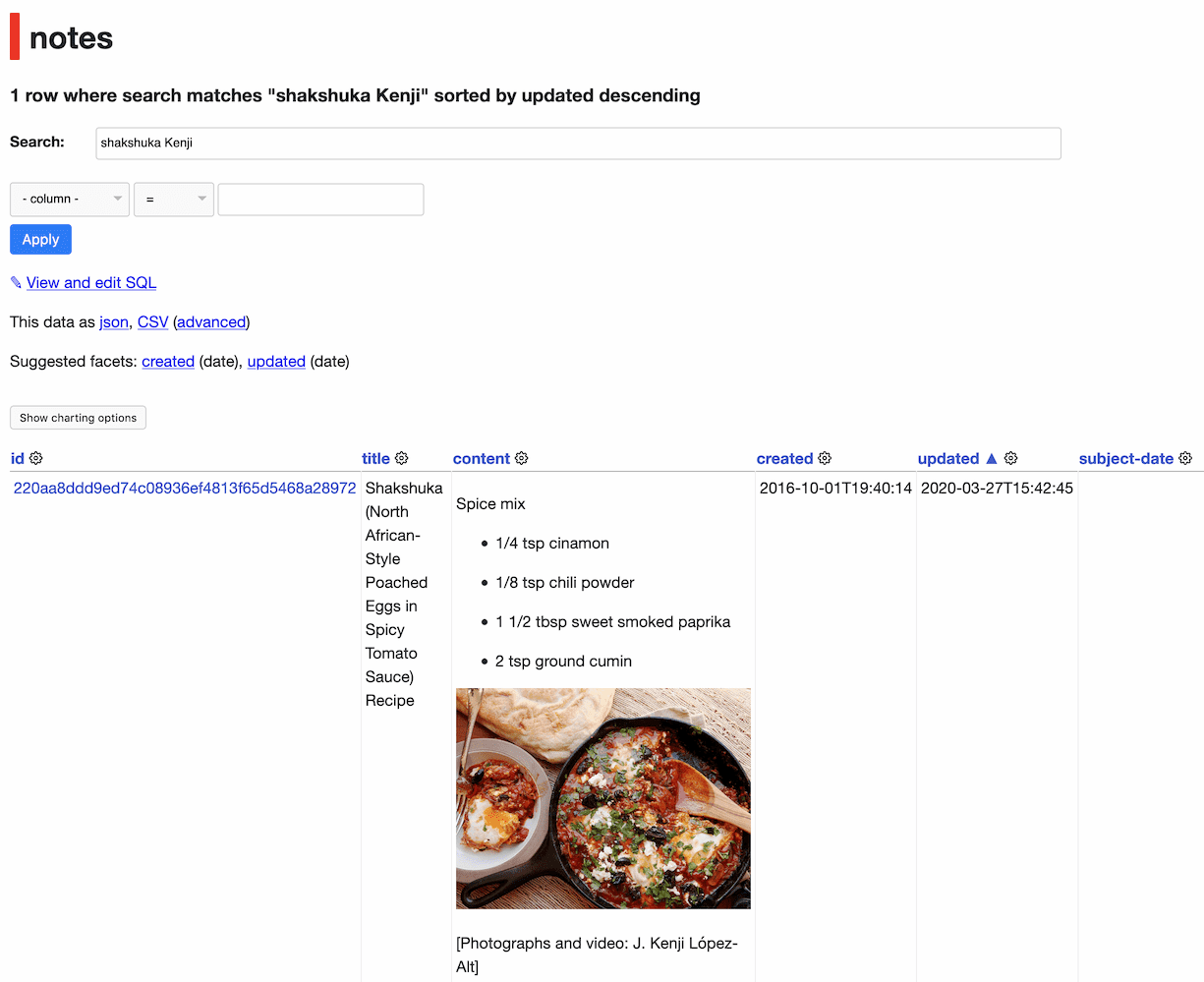

Building an Evernote to SQLite exporter

I’ve been using Evernote for over a decade, and I’ve long wanted to export my data from it so I can do interesting things with it.

[... 1,879 words]evernote-to-sqlite (via) The latest tool in my Dogsheep series of utilities for personal analytics: evernote-to-sqlite takes Evernote note exports en their ENEX XML format and loads them into a SQLite database. Embedded images are loaded into a BLOB column and the output of their cloud-based OCR system is added to a full-text search index. Notes have a latitude and longitude which means you can visualize your notes on a map using Datasette and datasette-cluster-map.

Datasette Weekly: Datasette 0.50, git scraping, extracting columns (via) The first edition of the new Datasette Weekly newsletter—covering Datasette 0.50, Git scraping, extracting columns with sqlite-utils and featuring datasette-graphql as the first “plugin of the week”

Bedrock: The SQLitening (via) Back in March 2018 www.mozilla.org switched over to running on Django using SQLite! They’re using the same pattern I’ve been exploring with Datasette: their SQLite database is treated as a read-only cache by their frontend servers, and a new SQLite database is built by a separate process and fetched onto the frontend machines every five minutes by a scheduled task. They have a healthcheck page which shows the latest version of the database and when it was fetched, and even lets you download the 25MB SQLite database directly (I’ve been exploring it using Datasette).

Weeknotes: software carpentry, compiling modules for SQLite

This week I completed the Software Carpentry instructor training course, added two foundational features to sqlite-utils and learned how to compile modules for SQLite.

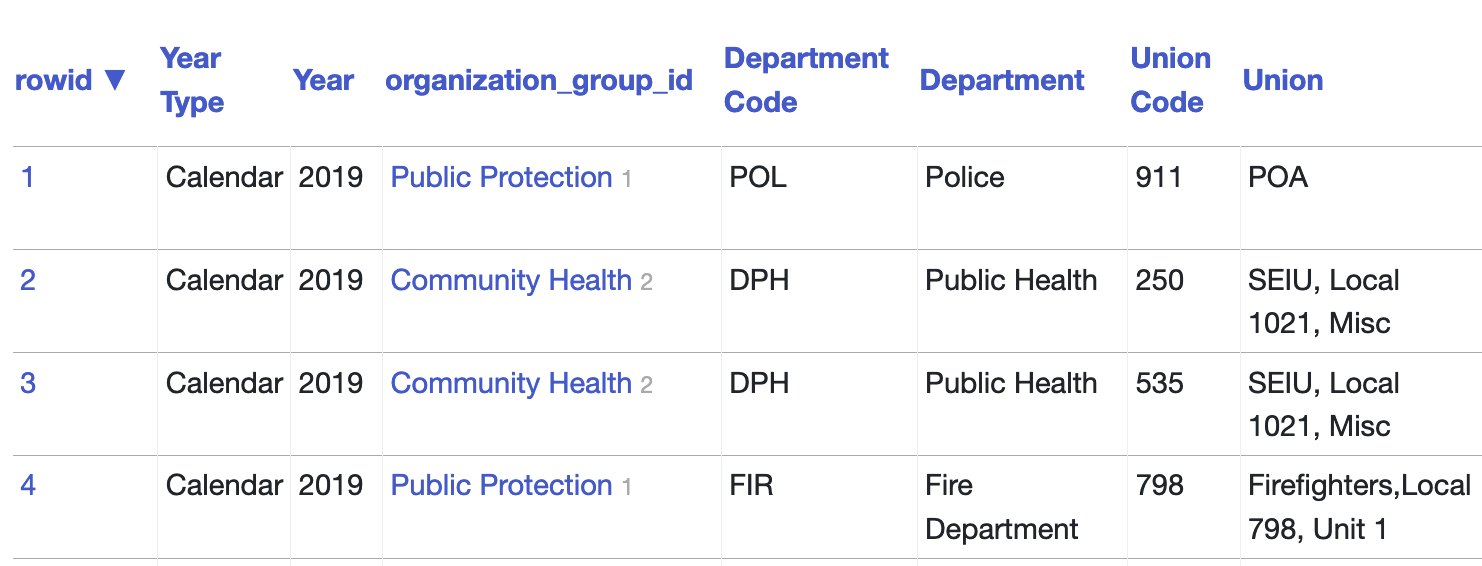

Refactoring databases with sqlite-utils extract

Yesterday I described the new sqlite-utils transform mechanism for applying SQLite table transformations that go beyond those supported by ALTER TABLE. The other new feature in sqlite-utils 2.20 builds on that capability to allow you to refactor a database table by extracting columns into separate tables. I’ve called it sqlite-utils extract.

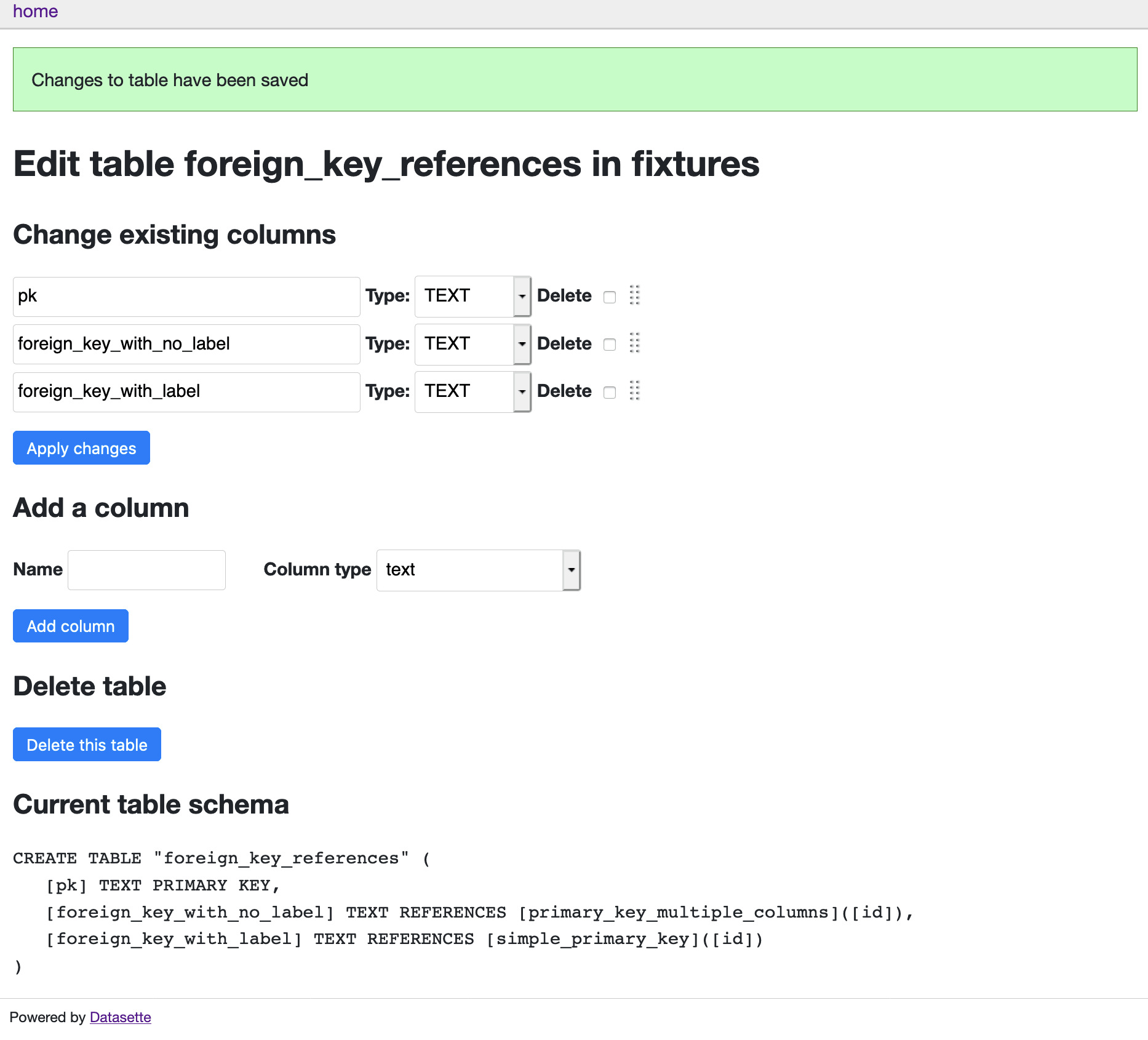

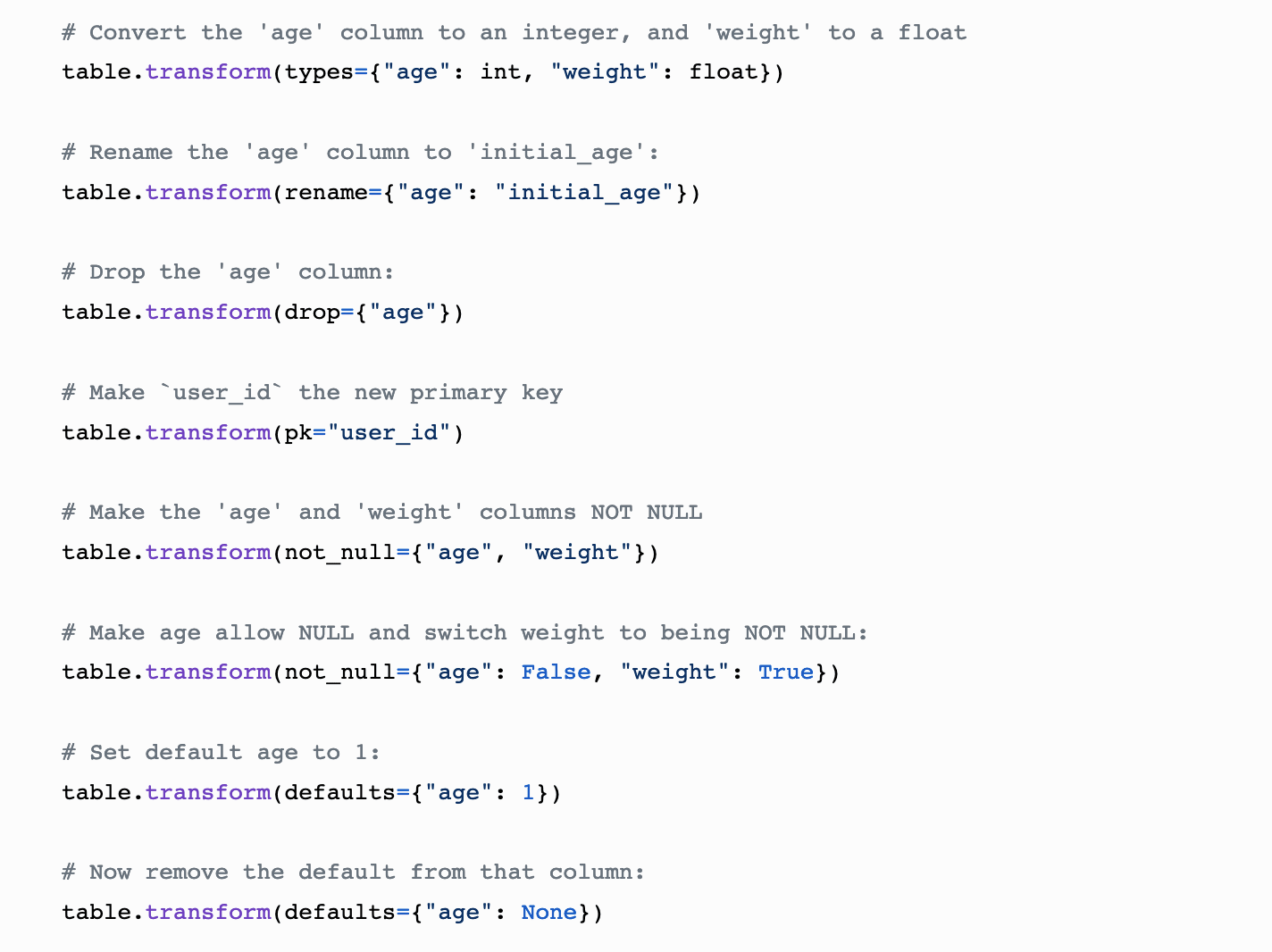

Executing advanced ALTER TABLE operations in SQLite

SQLite’s ALTER TABLE has some significant limitations: it can’t drop columns (UPDATE: that was fixed in SQLite 3.35.0 in March 2021), it can’t alter NOT NULL status, it can’t change column types. Since I spend a lot of time with SQLite these days I’ve written some code to fix this—both from Python and as a command-line utility.

[... 689 words]DuckDB (via) This is a really interesting, relatively new database. It’s kind of a weird hybrid between SQLite and PostgreSQL: it uses the PostgreSQL parser but models itself after SQLite in that databases are a single file and the code is designed for use as an embedded library, distributed in a single amalgamation C++ file (SQLite uses a C amalgamation). It features a “columnar-vectorized query execution engine” inspired by MonetDB (also by the DuckDB authors) and is hence designed to run analytical queries really quickly. You can install it using “pip install duckdb”—the resulting module feels similar to Python’s sqlite3, and follows roughly the same DBAPI pattern.

Weeknotes: datasette-dump, sqlite-backup, talks

I spent some time this week digging into Python’s sqlite3 internals. I also gave two talks and recorded a third, due to air at PyGotham in October.

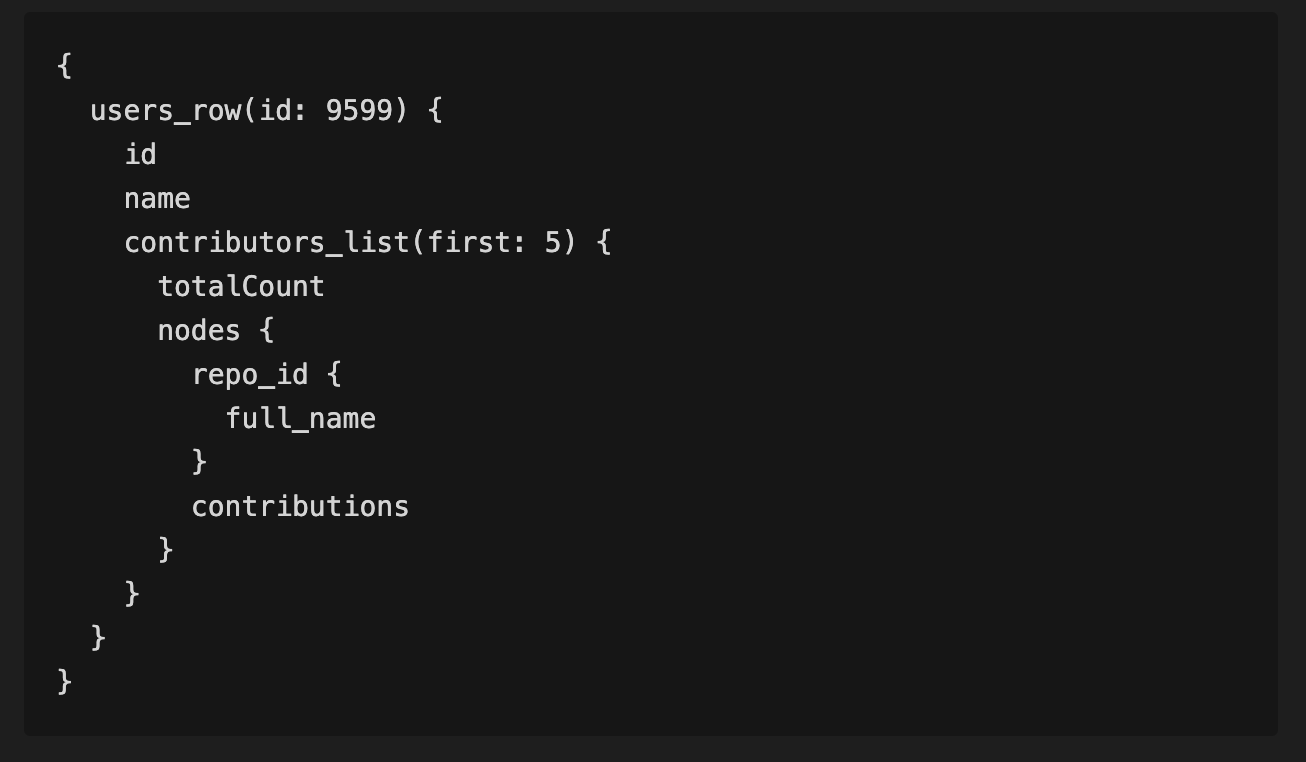

[... 928 words]Weeknotes: Installing Datasette with Homebrew, more GraphQL, WAL in SQLite

This week I’ve been working on making Datasette easier to install, plus wide-ranging improvements to the Datasette GraphQL plugin.

[... 1,009 words]