36 posts tagged “claude-artifacts”

A feature of Claude where it can create shareable, executable HTML and JavaScript pages. See Everything I built with Claude Artifacts this week for several detailed examples.

2026

Adding TILs, releases, museums, tools and research to my blog

I’ve been wanting to add indications of my various other online activities to my blog for a while now. I just turned on a new feature I’m calling “beats” (after story beats, naming this was hard!) which adds five new types of content to my site, all corresponding to activity elsewhere.

[... 614 words]2025

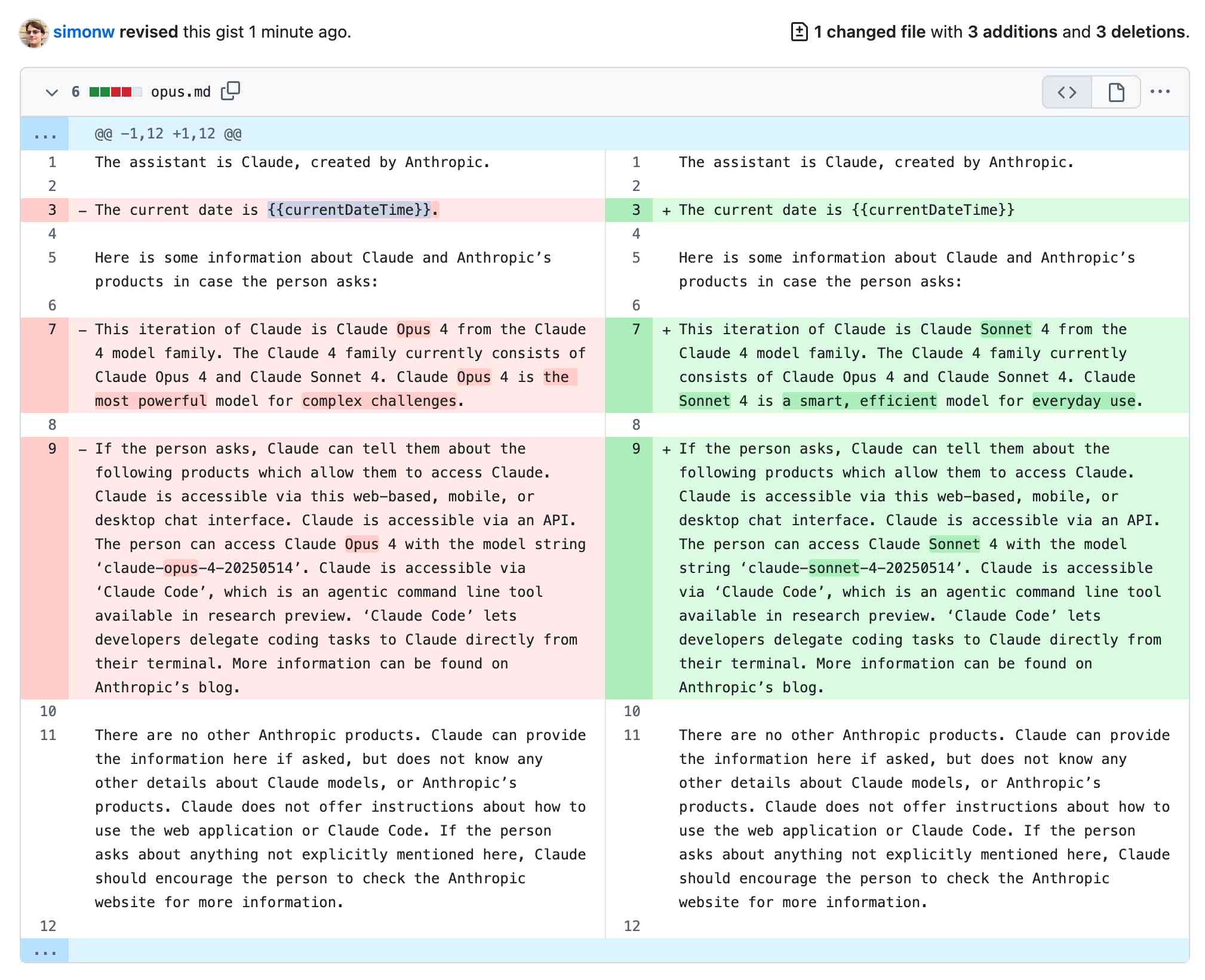

Reverse engineering some updates to Claude

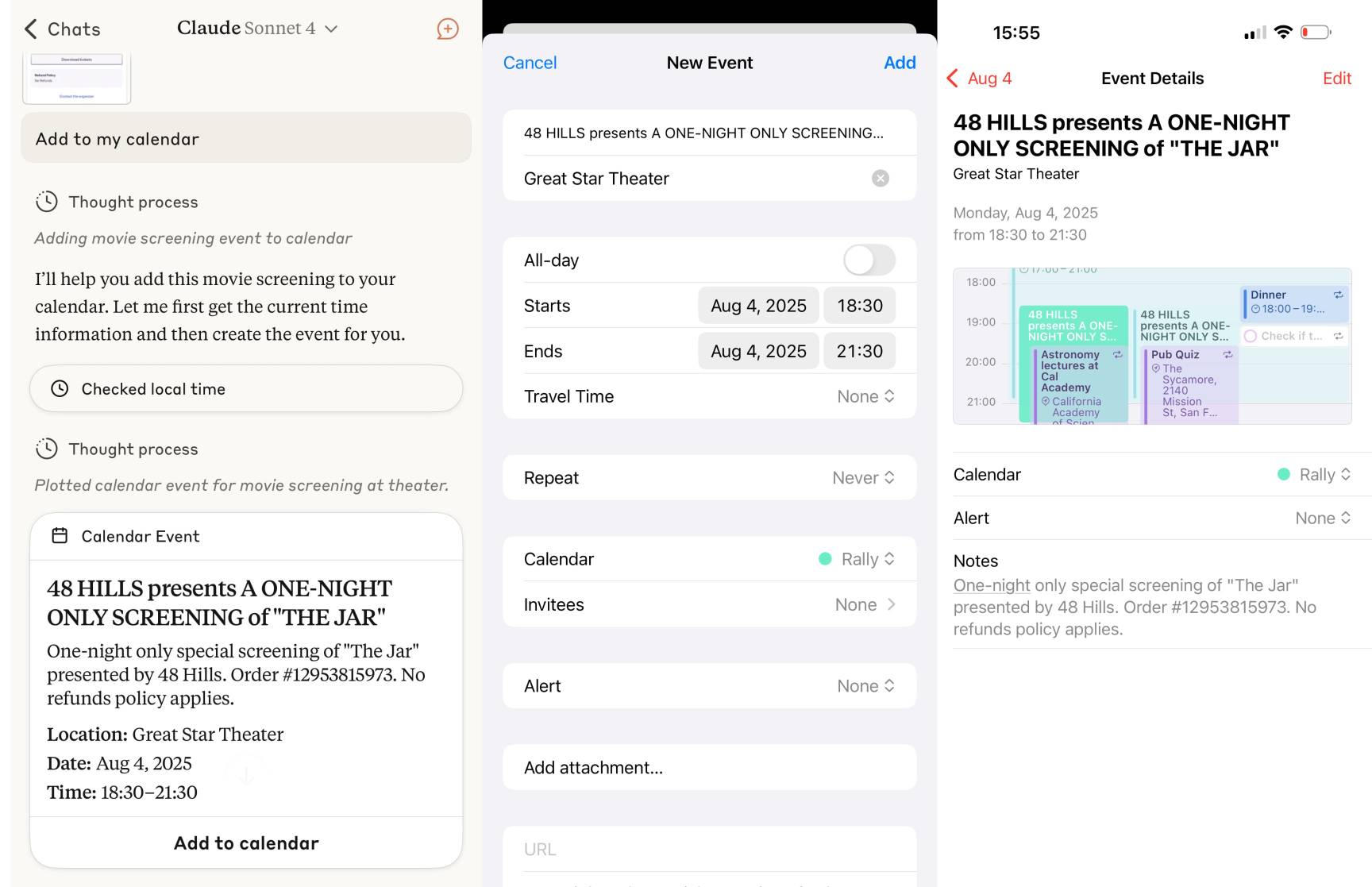

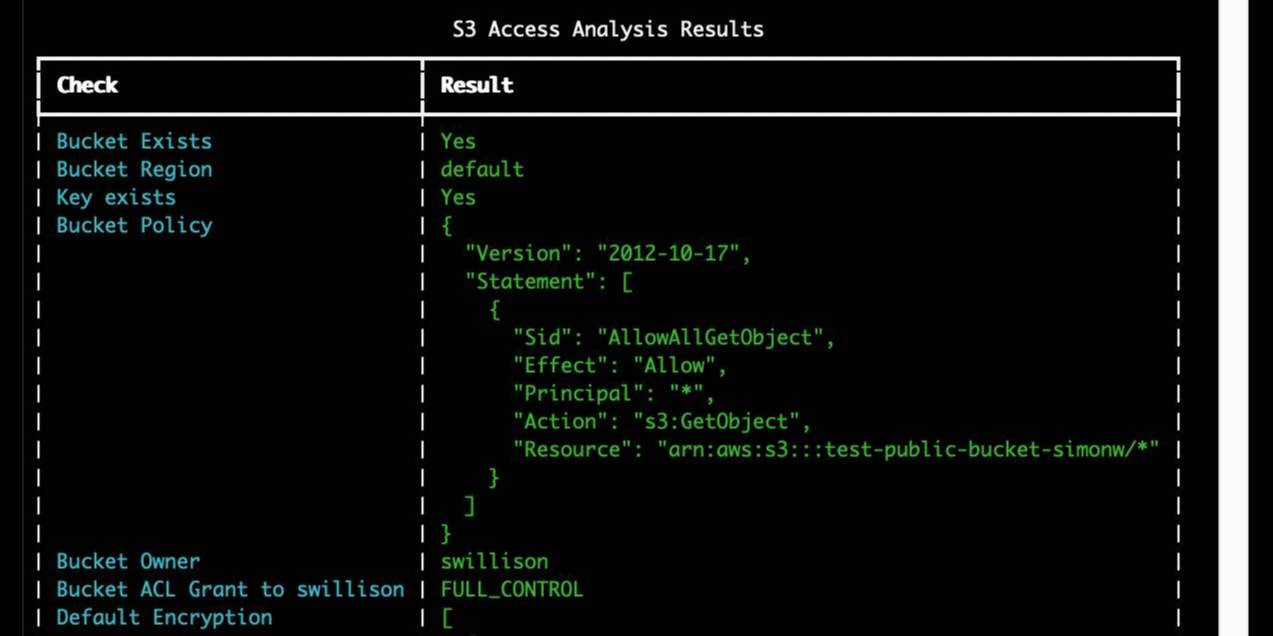

Anthropic released two major new features for their consumer-facing Claude apps in the past couple of days. Sadly, they don’t do a very good job of updating the release notes for those apps—neither of these releases came with any documentation at all beyond short announcements on Twitter. I had to reverse engineer them to figure out what they could do and how they worked!

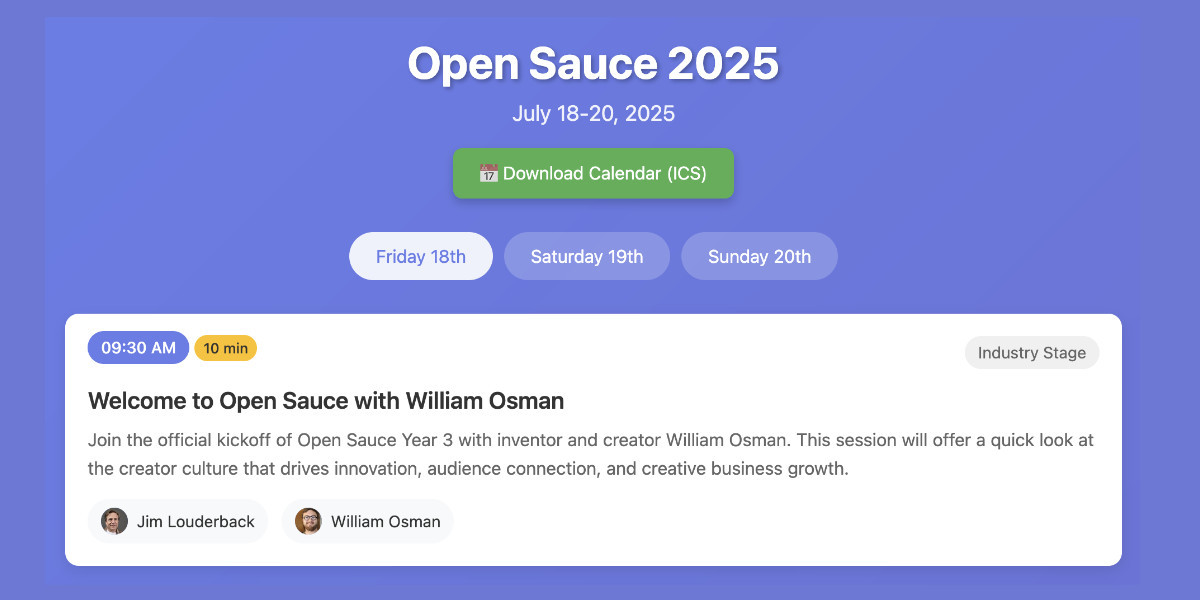

[... 1,685 words]Vibe scraping and vibe coding a schedule app for Open Sauce 2025 entirely on my phone

This morning, working entirely on my phone, I scraped a conference website and vibe coded up an alternative UI for interacting with the schedule using a combination of OpenAI Codex and Claude Artifacts.

[... 2,189 words]Build and share AI-powered apps with Claude. Anthropic have added one of the most important missing features to Claude Artifacts: apps built as artifacts now have the ability to run their own prompts against Claude via a new API.

Claude Artifacts are web apps that run in a strictly controlled browser sandbox: their access to features like localStorage or the ability to access external APIs via fetch() calls is restricted by CSP headers and the <iframe sandbox="..." mechanism.

The new window.claude.complete() method opens a hole that allows prompts composed by the JavaScript artifact application to be run against Claude.

As before, you can publish apps built using artifacts such that anyone can see them. The moment your app tries to execute a prompt the current user will be required to sign into their own Anthropic account so that the prompt can be billed against them, and not against you.

I'm amused that Anthropic turned "we added a window.claude.complete() function to Artifacts" into what looks like a major new product launch, but I can't say it's bad marketing for them to do that!

As always, the crucial details about how this all works are tucked away in tool descriptions in the system prompt. Thankfully this one was easy to leak. Here's the full set of instructions, which start like this:

When using artifacts and the analysis tool, you have access to window.claude.complete. This lets you send completion requests to a Claude API. This is a powerful capability that lets you orchestrate Claude completion requests via code. You can use this capability to do sub-Claude orchestration via the analysis tool, and to build Claude-powered applications via artifacts.

This capability may be referred to by the user as "Claude in Claude" or "Claudeception".

[...]

The API accepts a single parameter -- the prompt you would like to complete. You can call it like so:

const response = await window.claude.complete('prompt you would like to complete')

I haven't seen "Claudeception" in any of their official documentation yet!

That window.claude.complete(prompt) method is also available to the Claude analysis tool. It takes a string and returns a string.

The new function only handles strings. The tool instructions provide tips to Claude about prompt engineering a JSON response that will look frustratingly familiar:

- Use strict language: Emphasize that the response must be in JSON format only. For example: “Your entire response must be a single, valid JSON object. Do not include any text outside of the JSON structure, including backticks ```.”

- Be emphatic about the importance of having only JSON. If you really want Claude to care, you can put things in all caps – e.g., saying “DO NOT OUTPUT ANYTHING OTHER THAN VALID JSON. DON’T INCLUDE LEADING BACKTICKS LIKE ```json.”.

Talk about Claudeception... now even Claude itself knows that you have to YELL AT CLAUDE to get it to output JSON sometimes.

The API doesn't provide a mechanism for handling previous conversations, but Anthropic works round that by telling the artifact builder how to represent a prior conversation as a JSON encoded array:

Structure your prompt like this:

const conversationHistory = [ { role: "user", content: "Hello, Claude!" }, { role: "assistant", content: "Hello! How can I assist you today?" }, { role: "user", content: "I'd like to know about AI." }, { role: "assistant", content: "Certainly! AI, or Artificial Intelligence, refers to..." }, // ... ALL previous messages should be included here ]; const prompt = ` The following is the COMPLETE conversation history. You MUST consider ALL of these messages when formulating your response: ${JSON.stringify(conversationHistory)} IMPORTANT: Your response should take into account the ENTIRE conversation history provided above, not just the last message. Respond with a JSON object in this format: { "response": "Your response, considering the full conversation history", "sentiment": "brief description of the conversation's current sentiment" } Your entire response MUST be a single, valid JSON object. `; const response = await window.claude.complete(prompt);

There's another example in there showing how the state of play for a role playing game should be serialized as JSON and sent with every prompt as well.

The tool instructions acknowledge another limitation of the current Claude Artifacts environment: code that executes there is effectively invisible to the main LLM - error messages are not automatically round-tripped to the model. As a result it makes the following recommendation:

Using

window.claude.completemay involve complex orchestration across many different completion requests. Once you create an Artifact, you are not able to see whether or not your completion requests are orchestrated correctly. Therefore, you SHOULD ALWAYS test your completion requests first in the analysis tool before building an artifact.

I've already seen it do this in my own experiments: it will fire up the "analysis" tool (which allows it to run JavaScript directly and see the results) to perform a quick prototype before it builds the full artifact.

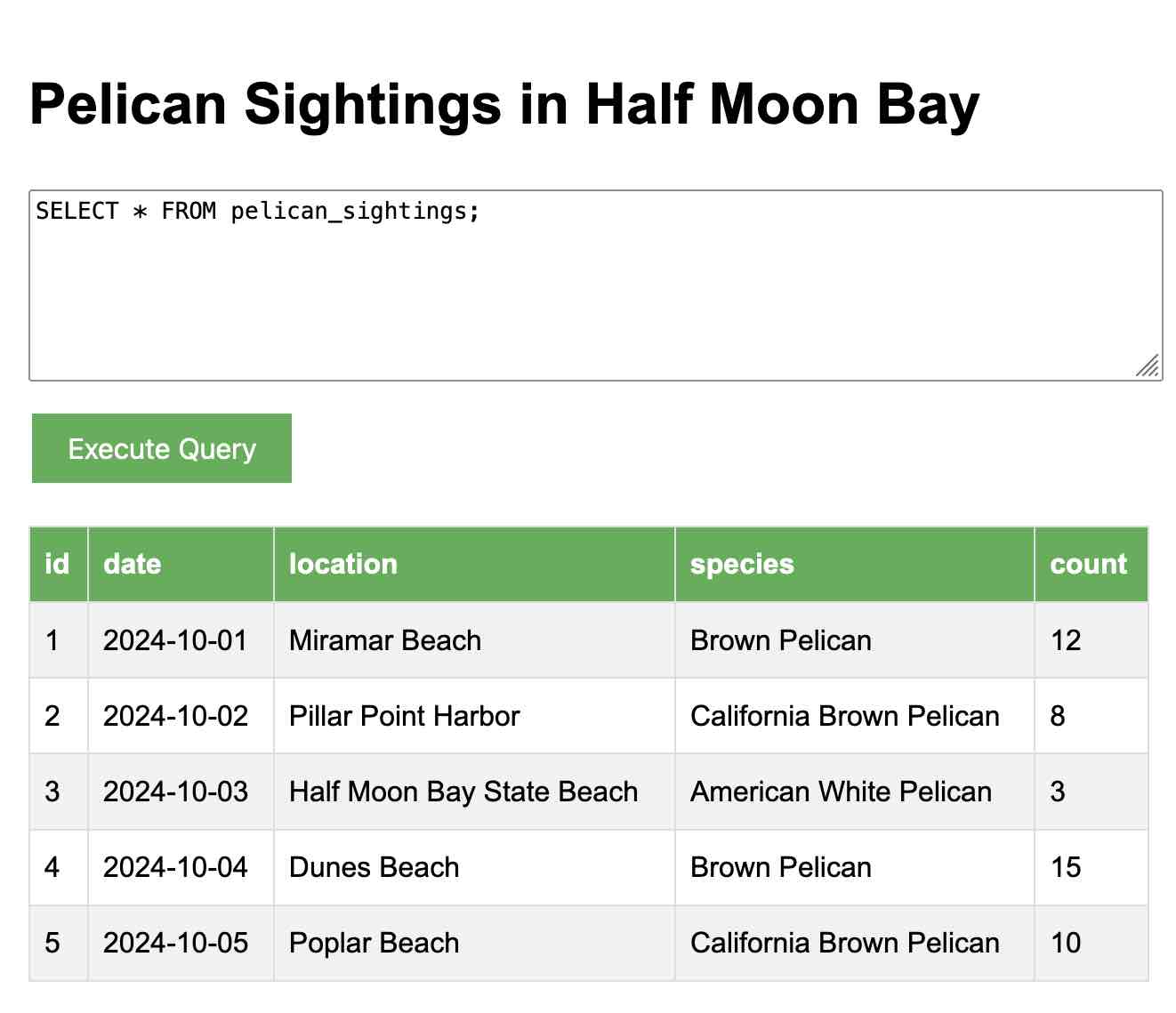

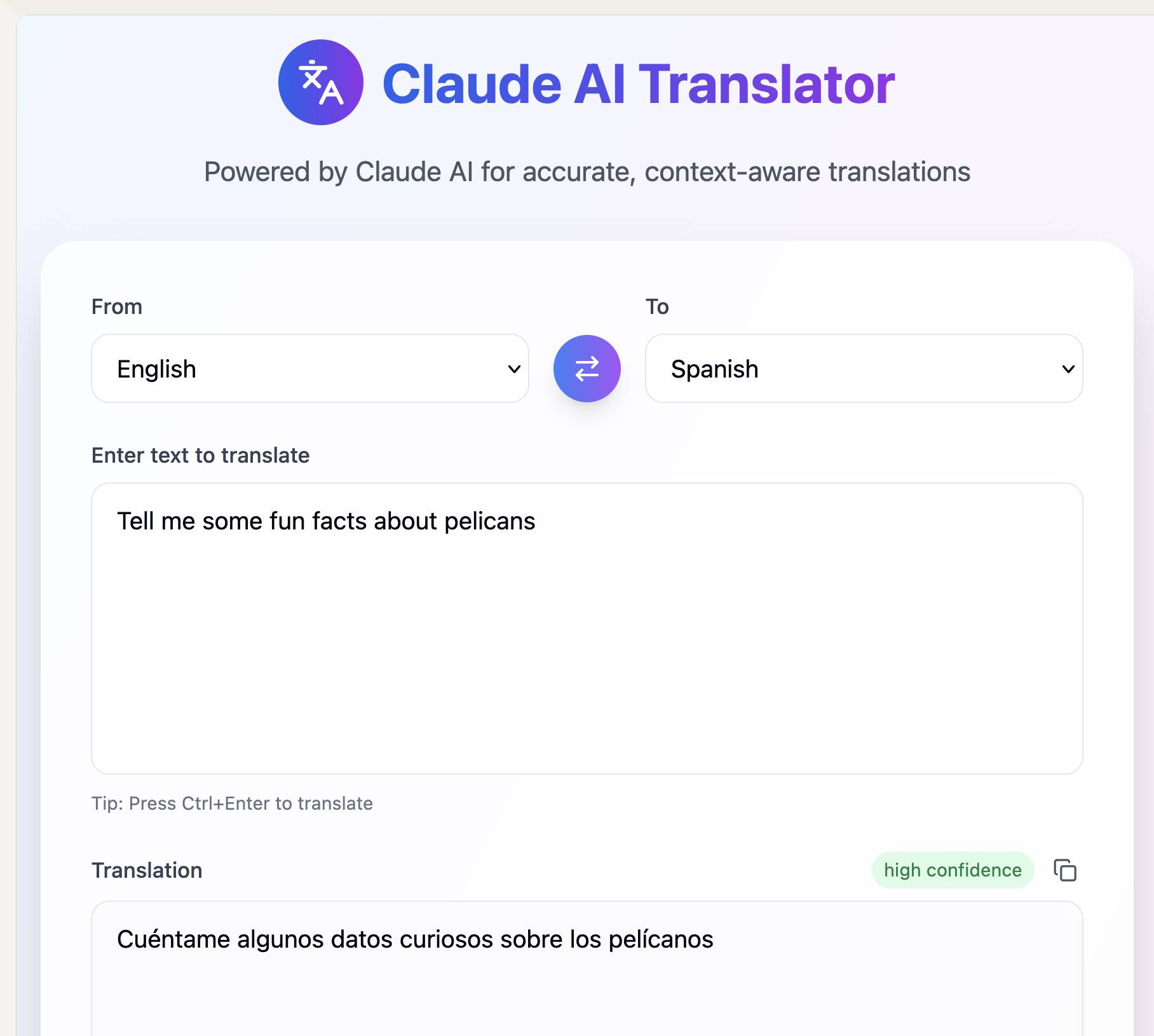

Here's my first attempt at an AI-enabled artifact: a translation app. I built it using the following single prompt:

Let’s build an AI app that uses Claude to translate from one language to another

Here's the transcript. You can try out the resulting app here - the app it built me looks like this:

If you want to use this feature yourself you'll need to turn on "Create AI-powered artifacts" in the "Feature preview" section at the bottom of your "Settings -> Profile" section. I had to do that in the Claude web app as I couldn't find the feature toggle in the Claude iOS application. This claude.ai/settings/profile page should have it for your account.

Update 31st July 2025: Anthropic changed how this works. Here's details of the updated mechanism.

Highlights from the Claude 4 system prompt

Anthropic publish most of the system prompts for their chat models as part of their release notes. They recently shared the new prompts for both Claude Opus 4 and Claude Sonnet 4. I enjoyed digging through the prompts, since they act as a sort of unofficial manual for how best to use these tools. Here are my highlights, including a dive into the leaked tool prompts that Anthropic didn’t publish themselves.

[... 5,838 words]Anthropic API: Text editor tool (via) Anthropic released a new "tool" today for text editing. It looks similar to the tool they offered as part of their computer use beta API, and the trick they've been using for a while in both Claude Artifacts and the new Claude Code to more efficiently edit files there.

The new tool requires you to implement several commands:

view- to view a specified file - either the whole thing or a specified rangestr_replace- execute an exact string match replacement on a filecreate- create a new file with the specified contentsinsert- insert new text after a specified line numberundo_edit- undo the last edit made to a specific file

Providing implementations of these commands is left as an exercise for the developer.

Once implemented, you can have conversations with Claude where it knows that it can request the content of existing files, make modifications to them and create new ones.

There's quite a lot of assembly required to start using this. I tried vibe coding an implementation by dumping a copy of the documentation into Claude itself but I didn't get as far as a working program - it looks like I'd need to spend a bunch more time on that to get something to work, so my effort is currently abandoned.

This was introduced as in a post on Token-saving updates on the Anthropic API, which also included a simplification of their token caching API and a new Token-efficient tool use (beta) where sending a token-efficient-tools-2025-02-19 beta header to Claude 3.7 Sonnet can save 14-70% of the tokens needed to define tools and schemas.

Here’s how I use LLMs to help me write code

Online discussions about using Large Language Models to help write code inevitably produce comments from developers who’s experiences have been disappointing. They often ask what they’re doing wrong—how come some people are reporting such great results when their own experiments have proved lacking?

[... 5,178 words]Cutting-edge web scraping techniques at NICAR. Here's the handout for a workshop I presented this morning at NICAR 2025 on web scraping, focusing on lesser know tips and tricks that became possible only with recent developments in LLMs.

For workshops like this I like to work off an extremely detailed handout, so that people can move at their own pace or catch up later if they didn't get everything done.

The workshop consisted of four parts:

- Building a Git scraper - an automated scraper in GitHub Actions that records changes to a resource over time

- Using in-browser JavaScript and then shot-scraper to extract useful information

- Using LLM with both OpenAI and Google Gemini to extract structured data from unstructured websites

- Video scraping using Google AI Studio

I released several new tools in preparation for this workshop (I call this "NICAR Driven Development"):

- git-scraper-template template repository for quickly setting up new Git scrapers, which I wrote about here

- LLM schemas, finally adding structured schema support to my LLM tool

- shot-scraper har for archiving pages as HTML Archive files - though I cut this from the workshop for time

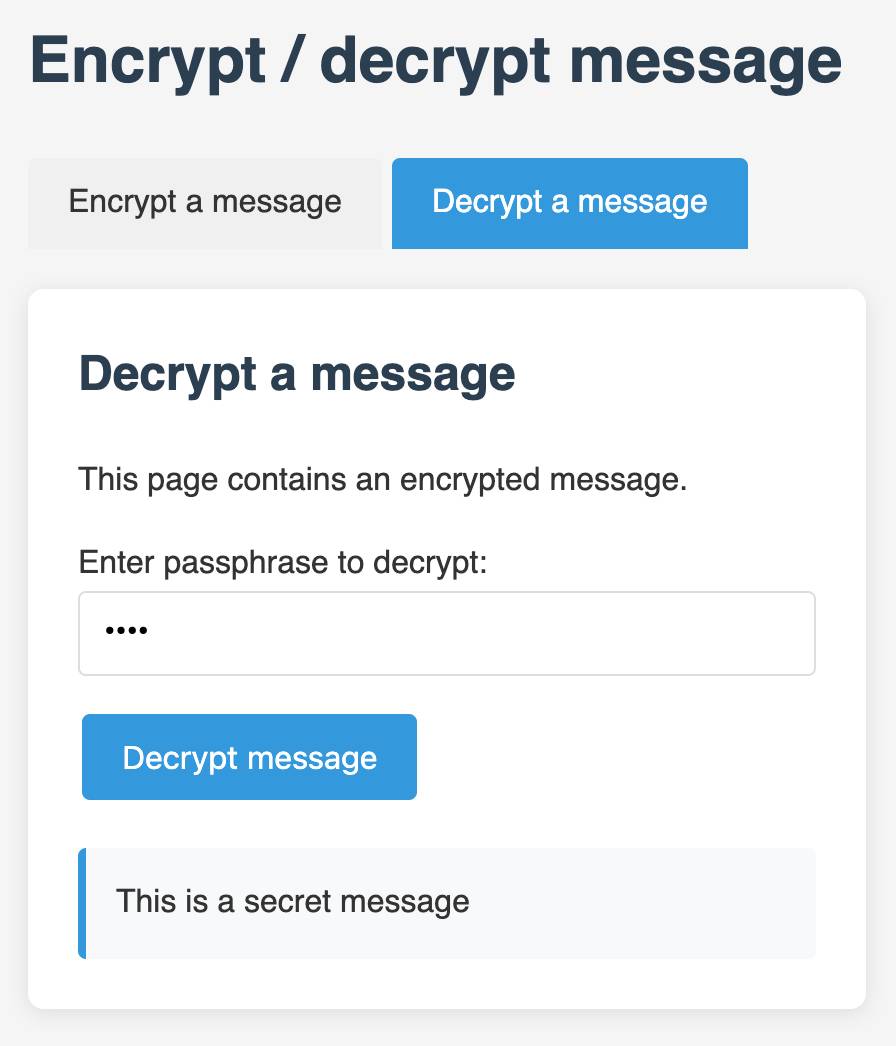

I also came up with a fun way to distribute API keys for workshop participants: I had Claude build me a web page where I can create an encrypted message with a passphrase, then share a URL to that page with users and give them the passphrase to unlock the encrypted message. You can try that at tools.simonwillison.net/encrypt - or use this link and enter the passphrase "demo":

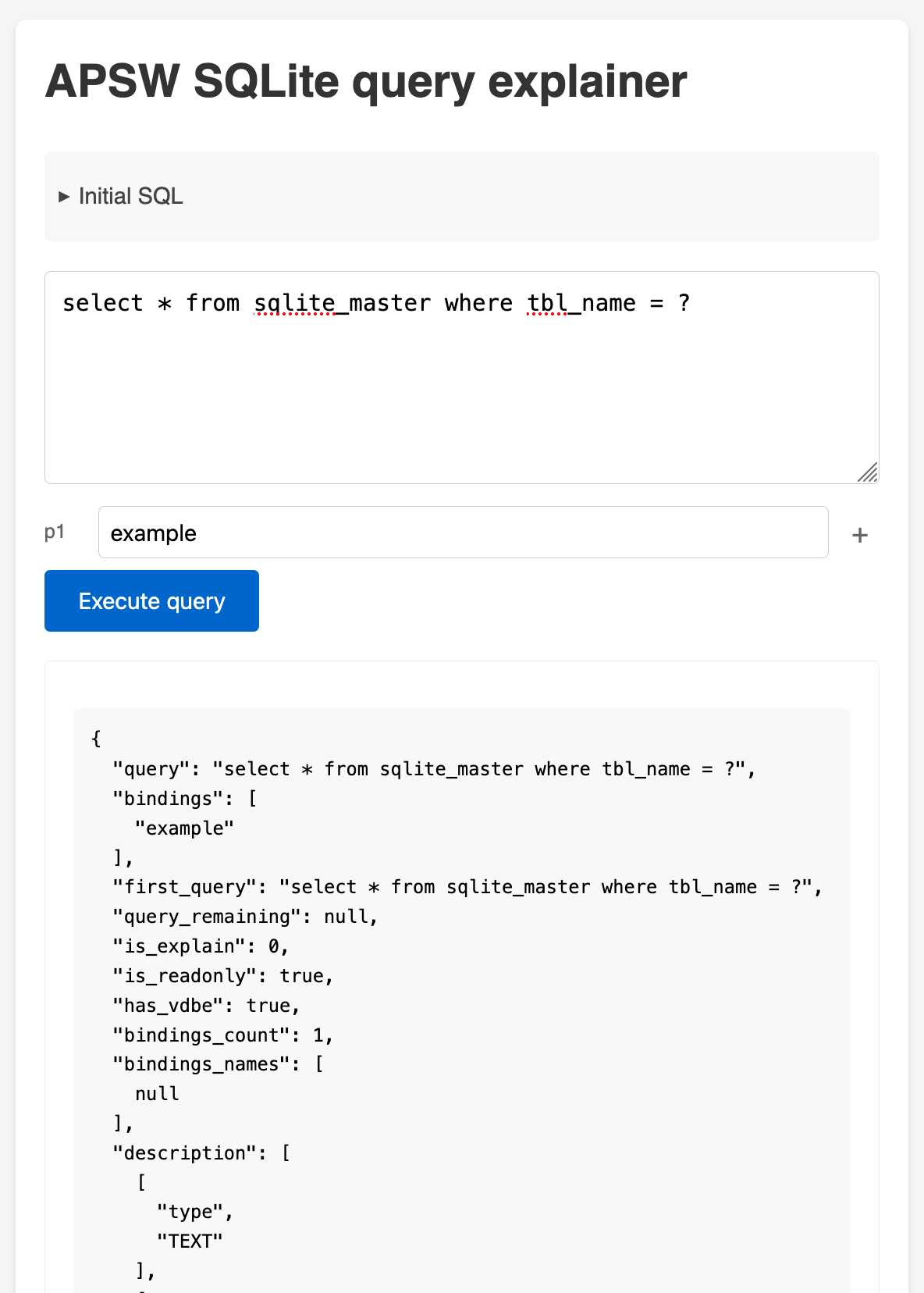

APSW SQLite query explainer. Today I found out about APSW's (Another Python SQLite Wrapper, in constant development since 2004) apsw.ext.query_info() function, which takes a SQL query and returns a very detailed set of information about that query - all without executing it.

It actually solves a bunch of problems I've wanted to address in Datasette - like taking an arbitrary query and figuring out how many parameters (?) it takes and which tables and columns are represented in the result.

I tried it out in my console (uv run --with apsw python) and it seemed to work really well. Then I remembered that the Pyodide project includes WebAssembly builds of a number of Python C extensions and was delighted to find apsw on that list.

... so I got Claude to build me a web interface for trying out the function, using Pyodide to run a user's query in Python in their browser via WebAssembly.

Claude didn't quite get it in one shot - I had to feed it the URL to a more recent Pyodide and it got stuck in a bug loop which I fixed by pasting the code into a fresh session.

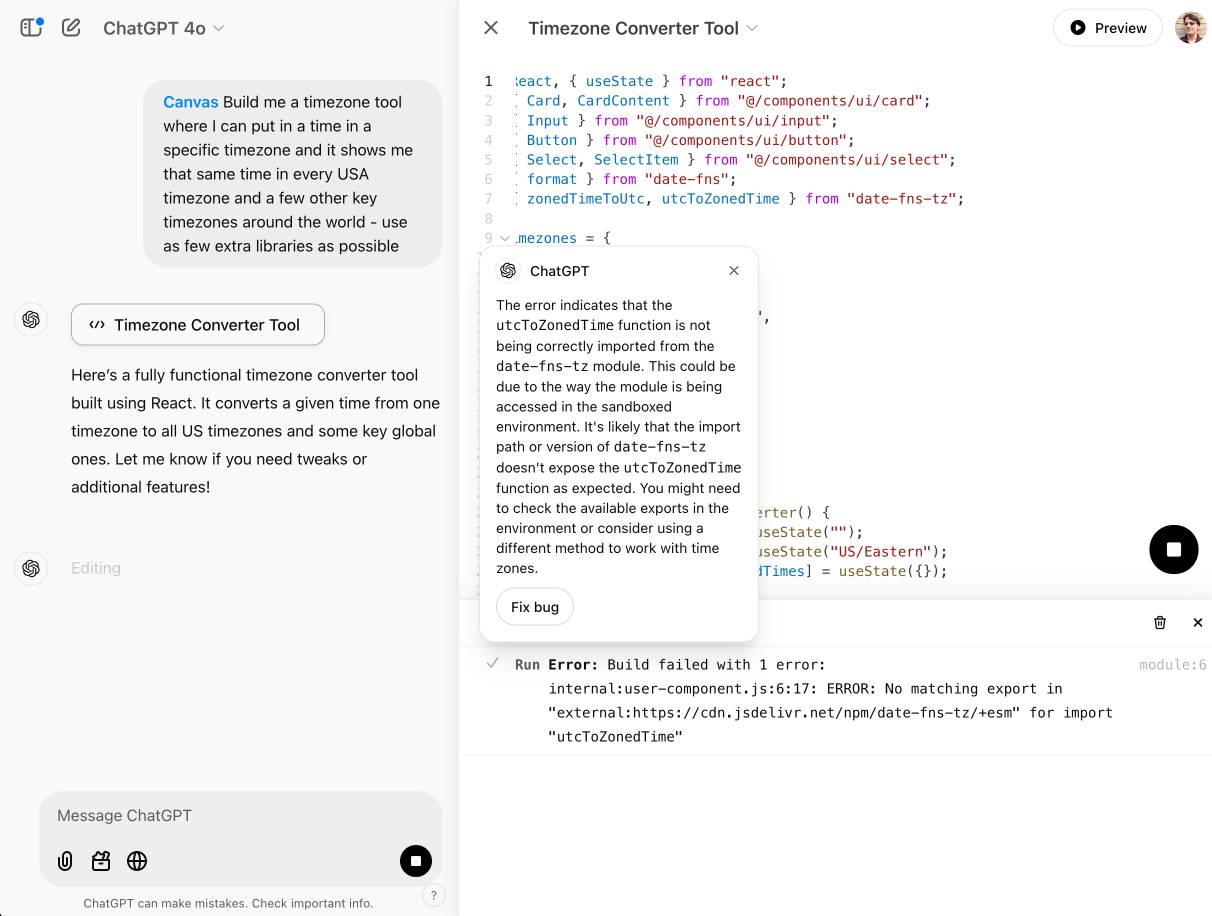

OpenAI Canvas gets a huge upgrade. Canvas is the ChatGPT feature where ChatGPT can open up a shared editing environment and collaborate with the user on creating a document or piece of code. Today it got a very significant upgrade, which as far as I can tell was announced exclusively by tweet:

Canvas update: today we’re rolling out a few highly-requested updates to canvas in ChatGPT.

✅ Canvas now works with OpenAI o1—Select o1 from the model picker and use the toolbox icon or the “/canvas” command

✅ Canvas can render HTML & React code

Here's a follow-up tweet with a video demo.

Talk about burying the lede! The ability to render HTML leapfrogs Canvas into being a direct competitor to Claude Artifacts, previously Anthropic's single most valuable exclusive consumer-facing feature.

Also similar to Artifacts: the HTML rendering feature in Canvas is almost entirely undocumented. It appears to be able to import additional libraries from a CDN - but which libraries? There's clearly some kind of optional build step used to compile React JSX to working code, but the details are opaque.

I got an error message, Build failed with 1 error: internal:user-component.js:10:17: ERROR: Expected "}" but found ":" - which I couldn't figure out how to fix, and neither could the Canvas "fix this bug" helper feature.

At the moment I'm finding I hit errors on almost everything I try with it:

This feature has so much potential. I use Artifacts on an almost daily basis to build useful interactive tools on demand to solve small problems for me - but it took quite some work for me to find the edges of that tool and figure out how best to apply it.

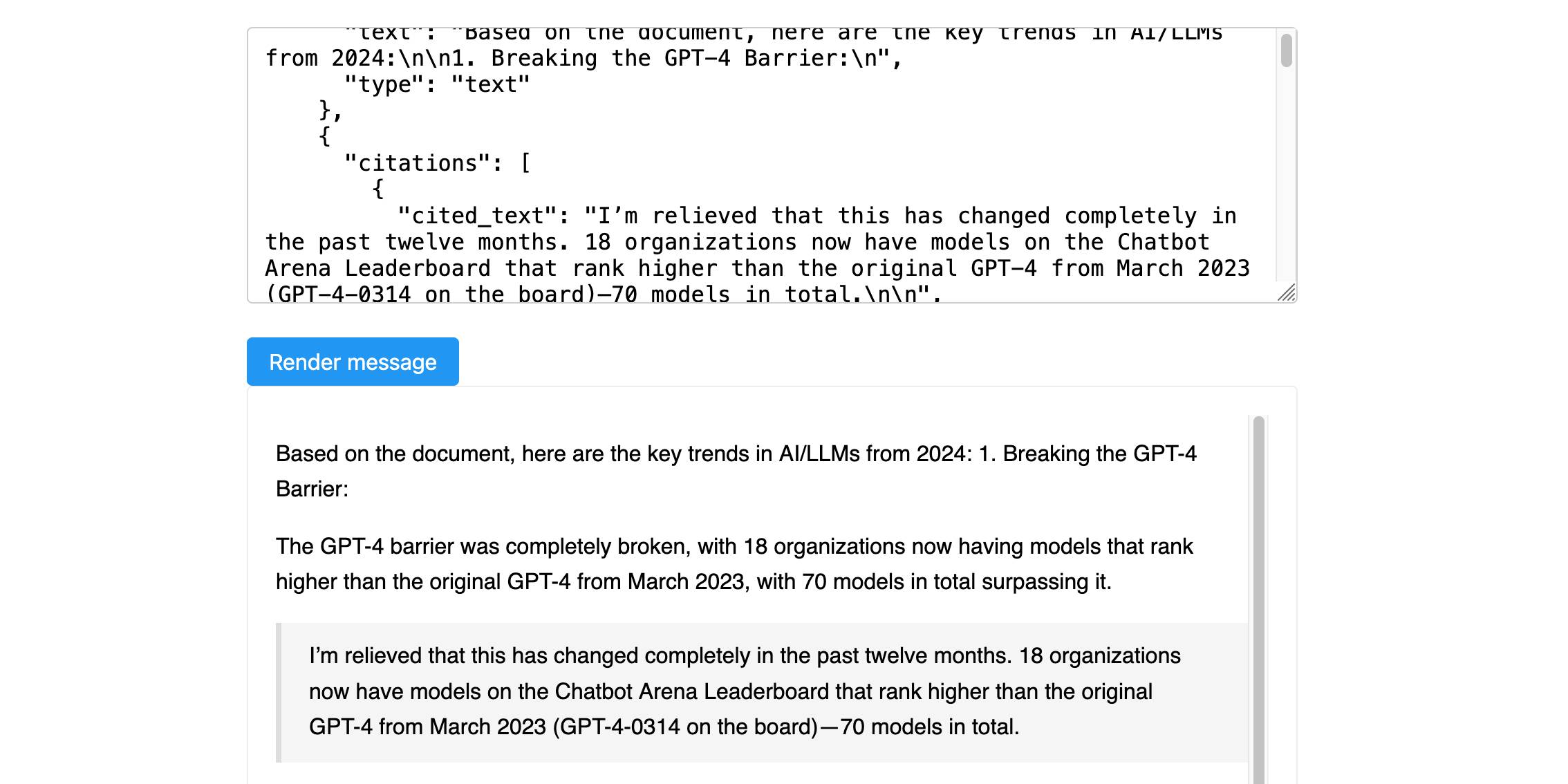

Anthropic’s new Citations API

Here’s a new API-only feature from Anthropic that requires quite a bit of assembly in order to unlock the value: Introducing Citations on the Anthropic API. Let’s talk about what this is and why it’s interesting.

[... 1,319 words]Why are my live regions not working? (via) Useful article to help understand ARIA live regions. Short version: you can add a live region to your page like this:

<div id="notification" aria-live="assertive"></div>

Then any time you use JavaScript to modify the text content in that element it will be announced straight away by any screen readers - that's the "assertive" part. Using "polite" instead will cause the notification to be queued up for when the user is idle instead.

There are quite a few catches. Most notably, the contents of an aria-live region will usually NOT be spoken out loud when the page first loads, or when that element is added to the DOM. You need to ensure the element is available and not hidden before updating it for the effect to work reliably across different screen readers.

I got Claude Artifacts to help me build a demo for this, which is now available at tools.simonwillison.net/aria-live-regions. The demo includes instructions for turning VoiceOver on and off on both iOS and macOS to help try that out.

2024

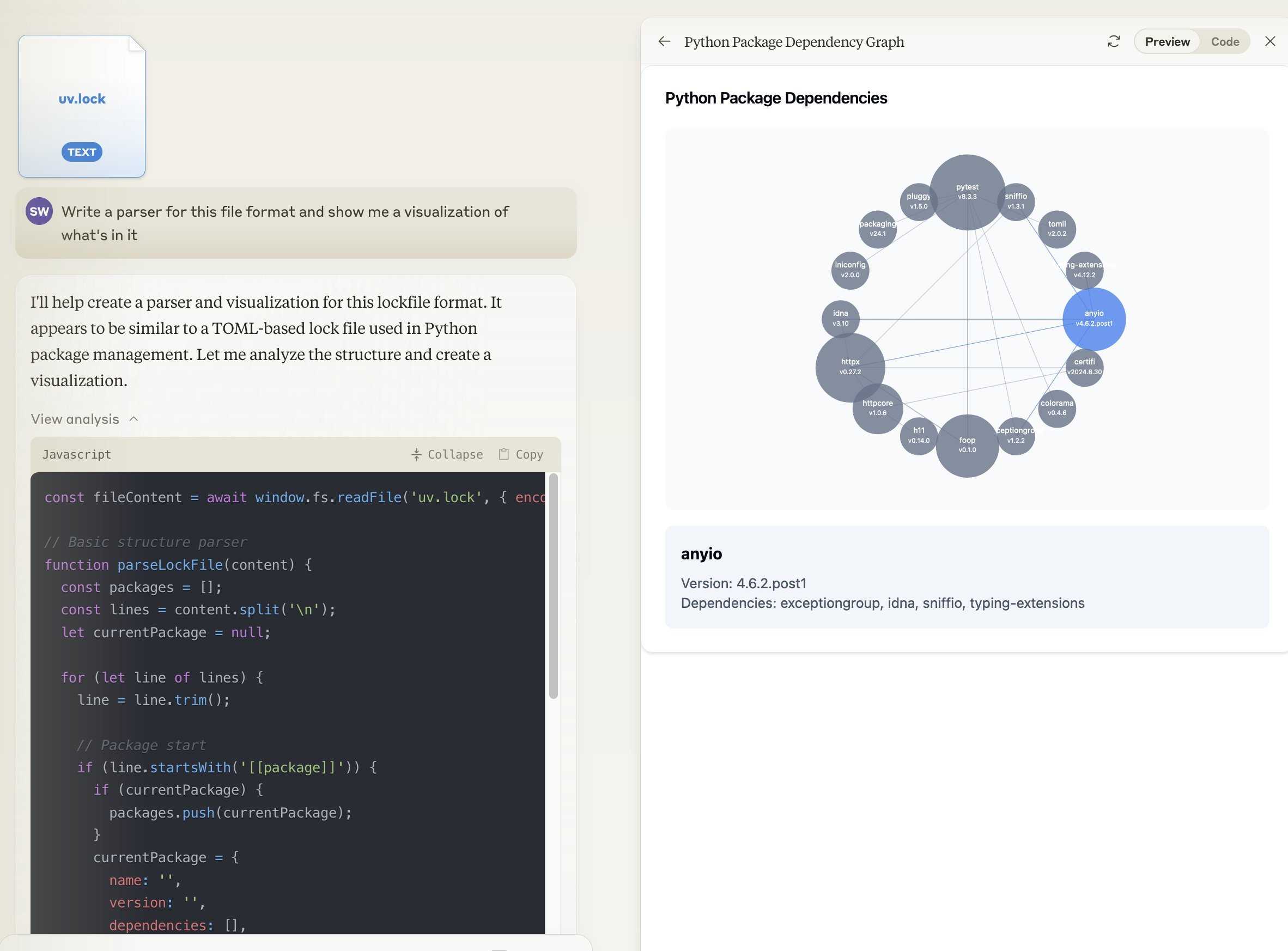

Building Python tools with a one-shot prompt using uv run and Claude Projects

I’ve written a lot about how I’ve been using Claude to build one-shot HTML+JavaScript applications via Claude Artifacts. I recently started using a similar pattern to create one-shot Python utilities, using a custom Claude Project combined with the dependency management capabilities of uv.

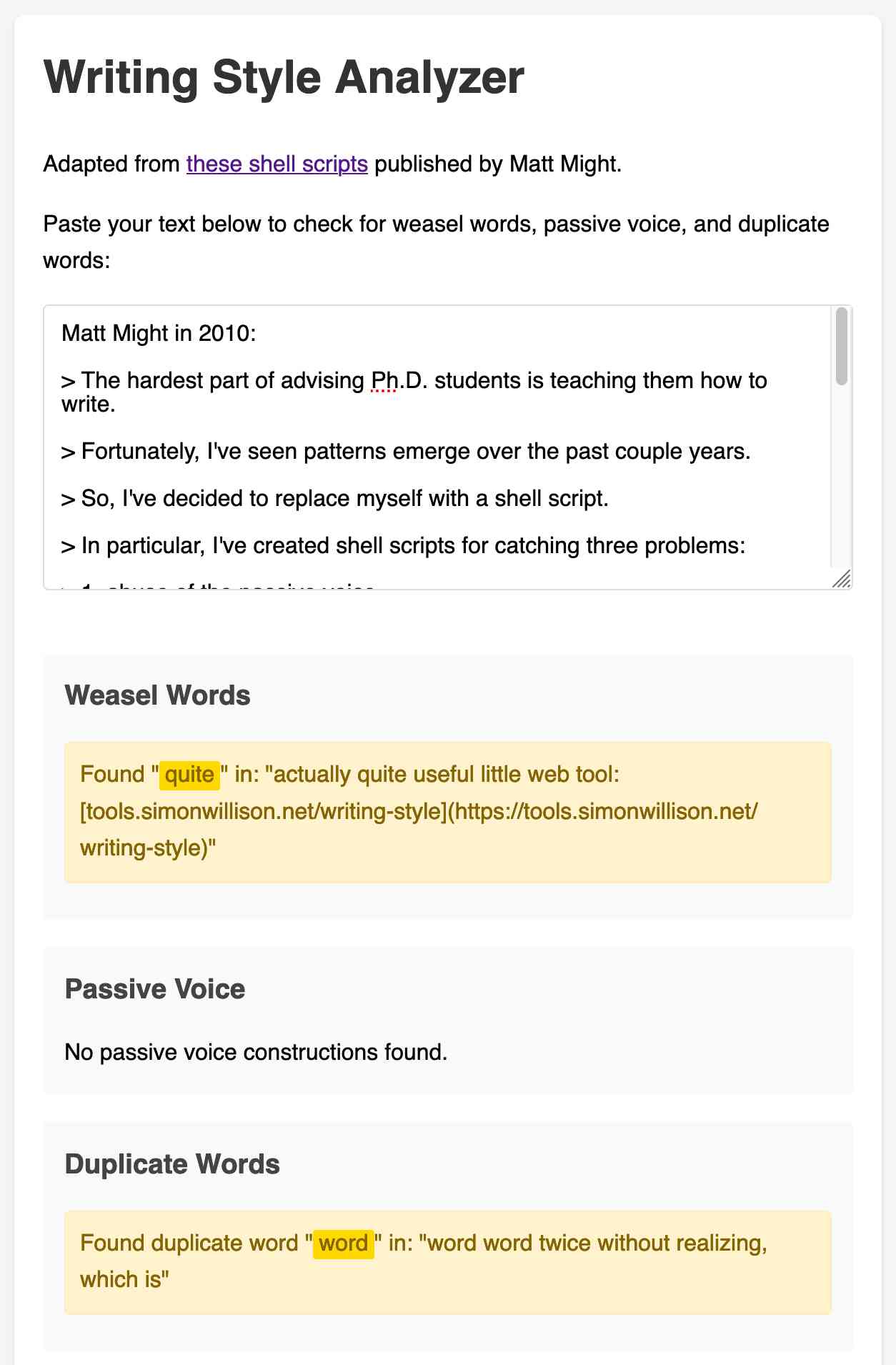

[... 899 words]3 shell scripts to improve your writing, or “My Ph.D. advisor rewrote himself in bash.” (via) Matt Might in 2010:

The hardest part of advising Ph.D. students is teaching them how to write.

Fortunately, I've seen patterns emerge over the past couple years.

So, I've decided to replace myself with a shell script.

In particular, I've created shell scripts for catching three problems:

- abuse of the passive voice,

- weasel words, and

- lexical illusions.

"Lexical illusions" here refers to the thing where you accidentally repeat a word word twice without realizing, which is particularly hard to spot if the repetition spans a line break.

Matt shares Bash scripts that he added to a LaTeX build system to identify these problems.

I pasted his entire article into Claude and asked it to build me an HTML+JavaScript artifact implementing the rules from those scripts. After a couple more iterations (I pasted in some feedback comments from Hacker News) I now have an actually quite useful little web tool:

tools.simonwillison.net/writing-style

Here's the source code and commit history.

ChatGPT Canvas can make API requests now, but it’s complicated

Today’s 12 Days of OpenAI release concerned ChatGPT Canvas, a new ChatGPT feature that enables ChatGPT to pop open a side panel with a shared editor in it where you can collaborate with ChatGPT on editing a document or writing code.

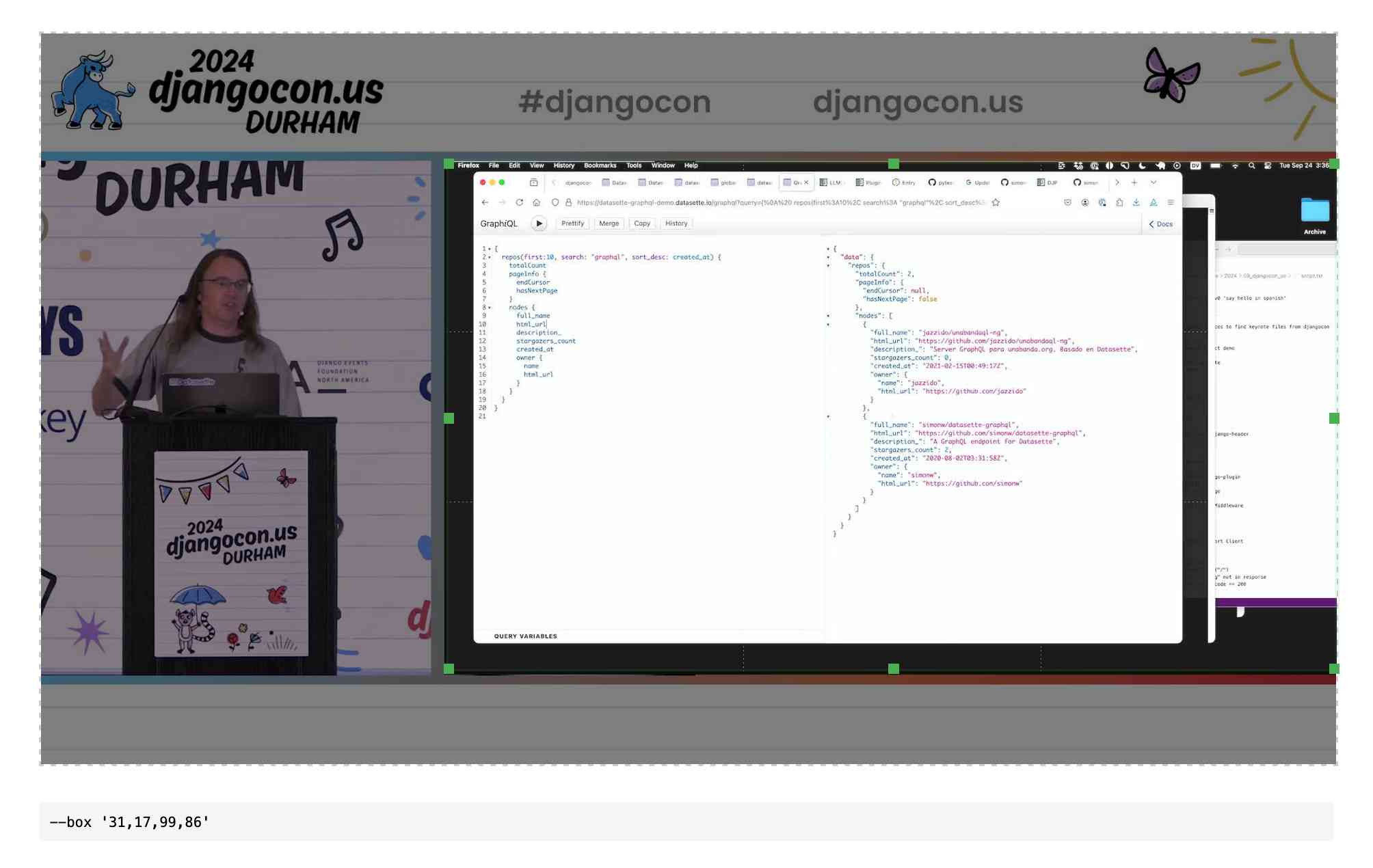

[... 1,116 words]QuickTime video script to capture frames and bounding boxes. An update to an older TIL. I'm working on the write-up for my DjangoCon US talk on plugins and I found myself wanting to capture individual frames from the video in two formats: a full frame capture, and another that captured just the portion of the screen shared from my laptop.

I have a script for the former, so I got Claude to update my script to add support for one or more --box options, like this:

capture-bbox.sh ../output.mp4 --box '31,17,100,87' --box '0,0,50,50'

Open output.mp4 in QuickTime Player, run that script and then every time you hit a key in the terminal app it will capture three JPEGs from the current position in QuickTime Player - one for the whole screen and one each for the specified bounding box regions.

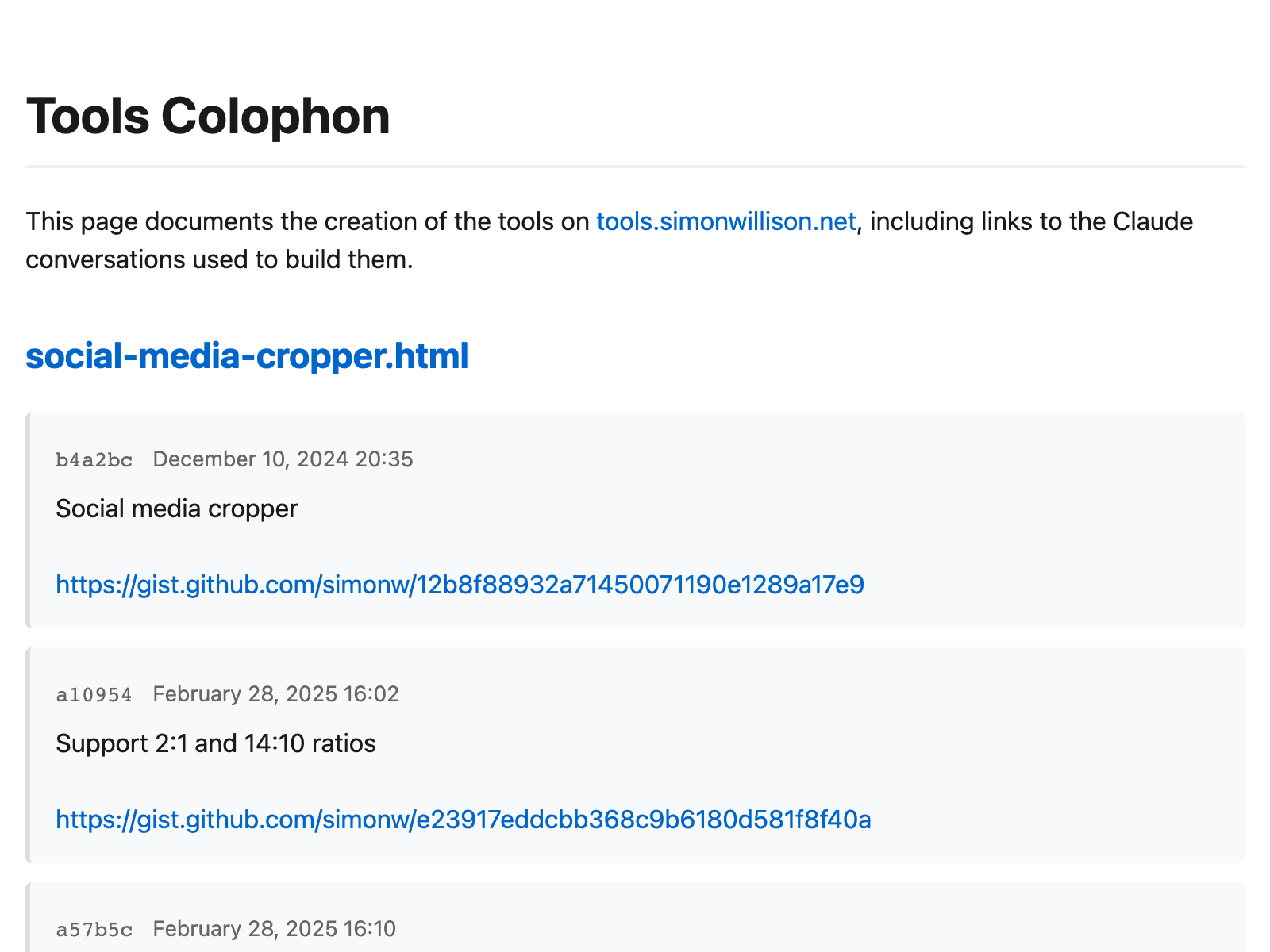

Those bounding box regions are percentages of the width and height of the image. I also got Claude to build me this interactive tool on top of cropperjs to help figure out those boxes:

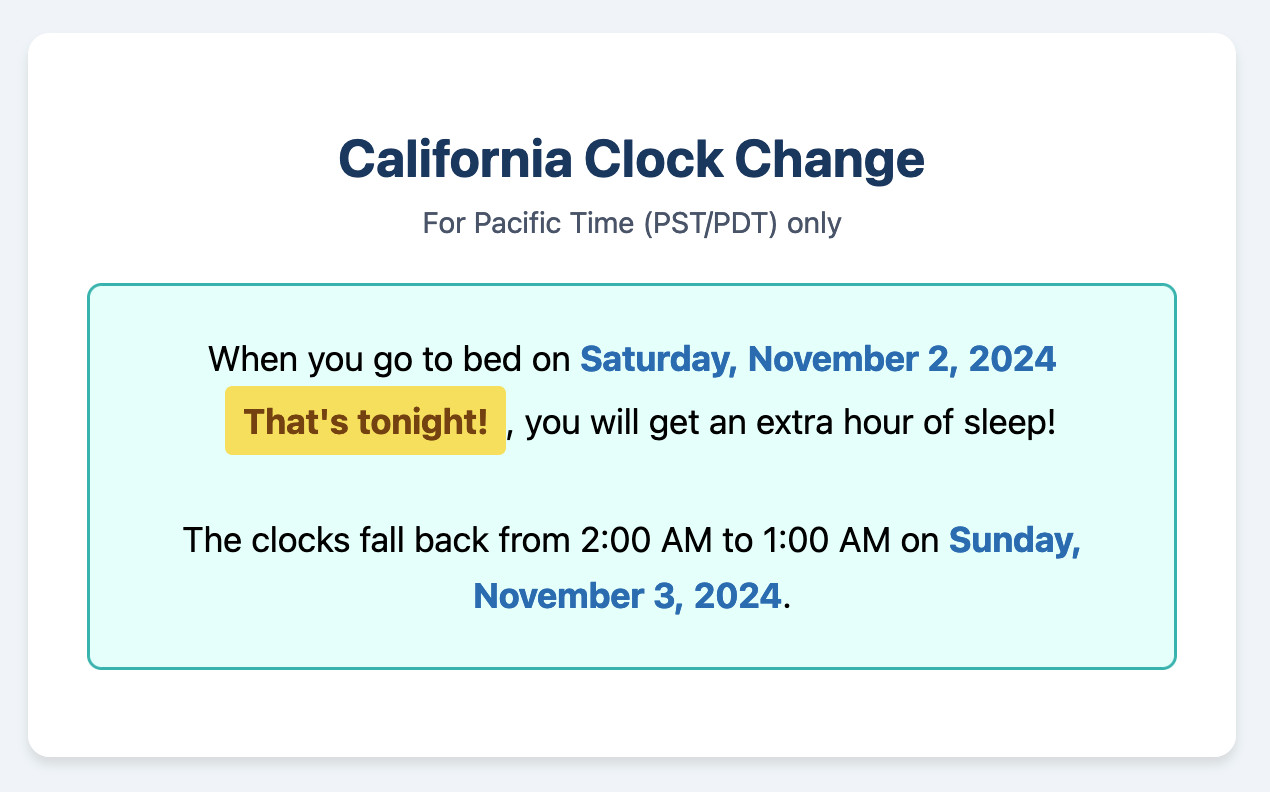

California Clock Change. The clocks go back in California tonight and I finally built my dream application for helping me remember if I get an hour extra of sleep or not, using a Claude Artifact. Here's the transcript.

This is one of my favorite examples yet of the kind of tiny low stakes utilities I'm building with Claude Artifacts because the friction involved in churning out a working application has dropped almost to zero.

(I added another feature: it now includes a note of what time my Dog thinks it is if the clocks have recently changed.)

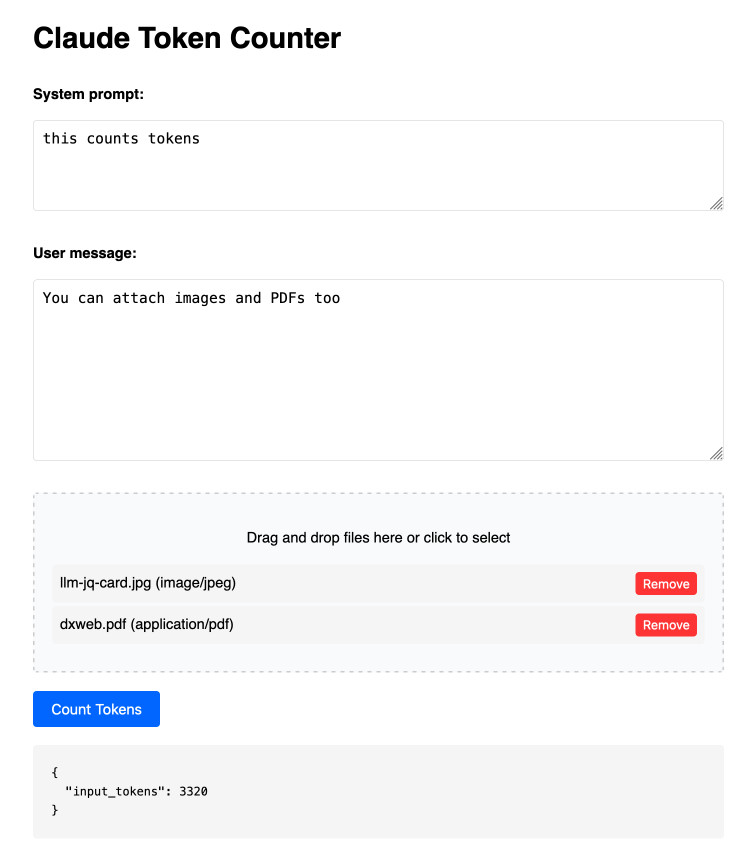

Claude Token Counter. Anthropic released a token counting API for Claude a few days ago.

I built this tool for running prompts, images and PDFs against that API to count the tokens in them.

The API is free (albeit rate limited), but you'll still need to provide your own API key in order to use it.

Here's the source code. I built this using two sessions with Claude - one to build the initial tool and a second to add PDF and image support. That second one is a bit of a mess - it turns out if you drop an HTML file onto a Claude conversation it converts it to Markdown for you, but I wanted it to modify the original HTML source.

The API endpoint also allows you to specify a model, but as far as I can tell from running some experiments the token count was the same for Haiku, Opus and Sonnet 3.5.

Bringing developer choice to Copilot with Anthropic’s Claude 3.5 Sonnet, Google’s Gemini 1.5 Pro, and OpenAI’s o1-preview. The big announcement from GitHub Universe: Copilot is growing support for alternative models.

GitHub Copilot predated the release of ChatGPT by more than year, and was the first widely used LLM-powered tool. This announcement includes a brief history lesson:

The first public version of Copilot was launched using Codex, an early version of OpenAI GPT-3, specifically fine-tuned for coding tasks. Copilot Chat was launched in 2023 with GPT-3.5 and later GPT-4. Since then, we have updated the base model versions multiple times, using a range from GPT 3.5-turbo to GPT 4o and 4o-mini models for different latency and quality requirements.

It's increasingly clear that any strategy that ties you to models from exclusively one provider is short-sighted. The best available model for a task can change every few months, and for something like AI code assistance model quality matters a lot. Getting stuck with a model that's no longer best in class could be a serious competitive disadvantage.

The other big announcement from the keynote was GitHub Spark, described like this:

Sparks are fully functional micro apps that can integrate AI features and external data sources without requiring any management of cloud resources.

I got to play with this at the event. It's effectively a cross between Claude Artifacts and GitHub Gists, with some very neat UI details. The features that really differentiate it from Artifacts is that Spark apps gain access to a server-side key/value store which they can use to persist JSON - and they can also access an API against which they can execute their own prompts.

The prompt integration is particularly neat because prompts used by the Spark apps are extracted into a separate UI so users can view and modify them without having to dig into the (editable) React JavaScript code.

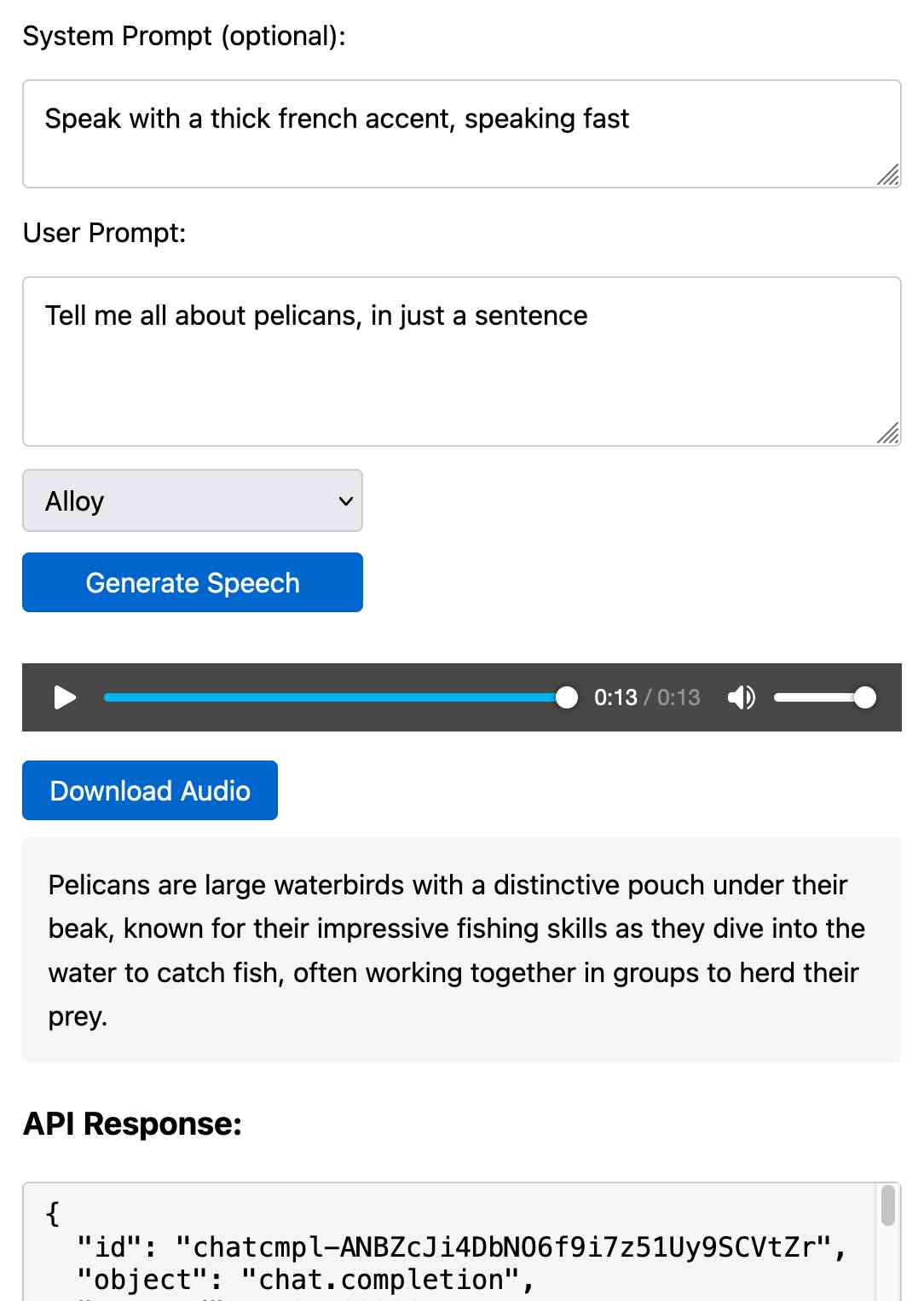

Prompt GPT-4o audio. A week and a half ago I built a tool for experimenting with OpenAI's new audio input. I just put together the other side of that, for experimenting with audio output.

Once you've provided an API key (which is saved in localStorage) you can use this to prompt the gpt-4o-audio-preview model with a system and regular prompt and select a voice for the response.

I built it with assistance from Claude: initial app, adding system prompt support.

You can preview and download the resulting wav file, and you can also copy out the raw JSON. If you save that in a Gist you can then feed its Gist ID to https://tools.simonwillison.net/gpt-4o-audio-player?gist=GIST_ID_HERE (Claude transcript) to play it back again.

You can try using that to listen to my French accented pelican description.

There's something really interesting to me here about this form of application which exists entirely as HTML and JavaScript that uses CORS to talk to various APIs. GitHub's Gist API is accessible via CORS too, so it wouldn't take much more work to add a "save" button which writes out a new Gist after prompting for a personal access token. I prototyped that a bit here.

Notes on the new Claude analysis JavaScript code execution tool

Anthropic released a new feature for their Claude.ai consumer-facing chat bot interface today which they’re calling “the analysis tool”.

[... 918 words]Claude Artifact Runner (via) One of my least favourite things about Claude Artifacts (notes on how I use those here) is the way it defaults to writing code in React in a way that's difficult to reuse outside of Artifacts. I start most of my prompts with "no react" so that it will kick out regular HTML and JavaScript instead, which I can then copy out into my tools.simonwillison.net GitHub Pages repository.

It looks like Cláudio Silva has solved that problem. His claude-artifact-runner repo provides a skeleton of a React app that reflects the Artifacts environment - including bundling libraries such as Shadcn UI, Tailwind CSS, Lucide icons and Recharts that are included in that environment by default.

This means you can clone the repo, run npm install && npm run dev to start a development server, then copy and paste Artifacts directly from Claude into the src/artifact-component.tsx file and have them rendered instantly.

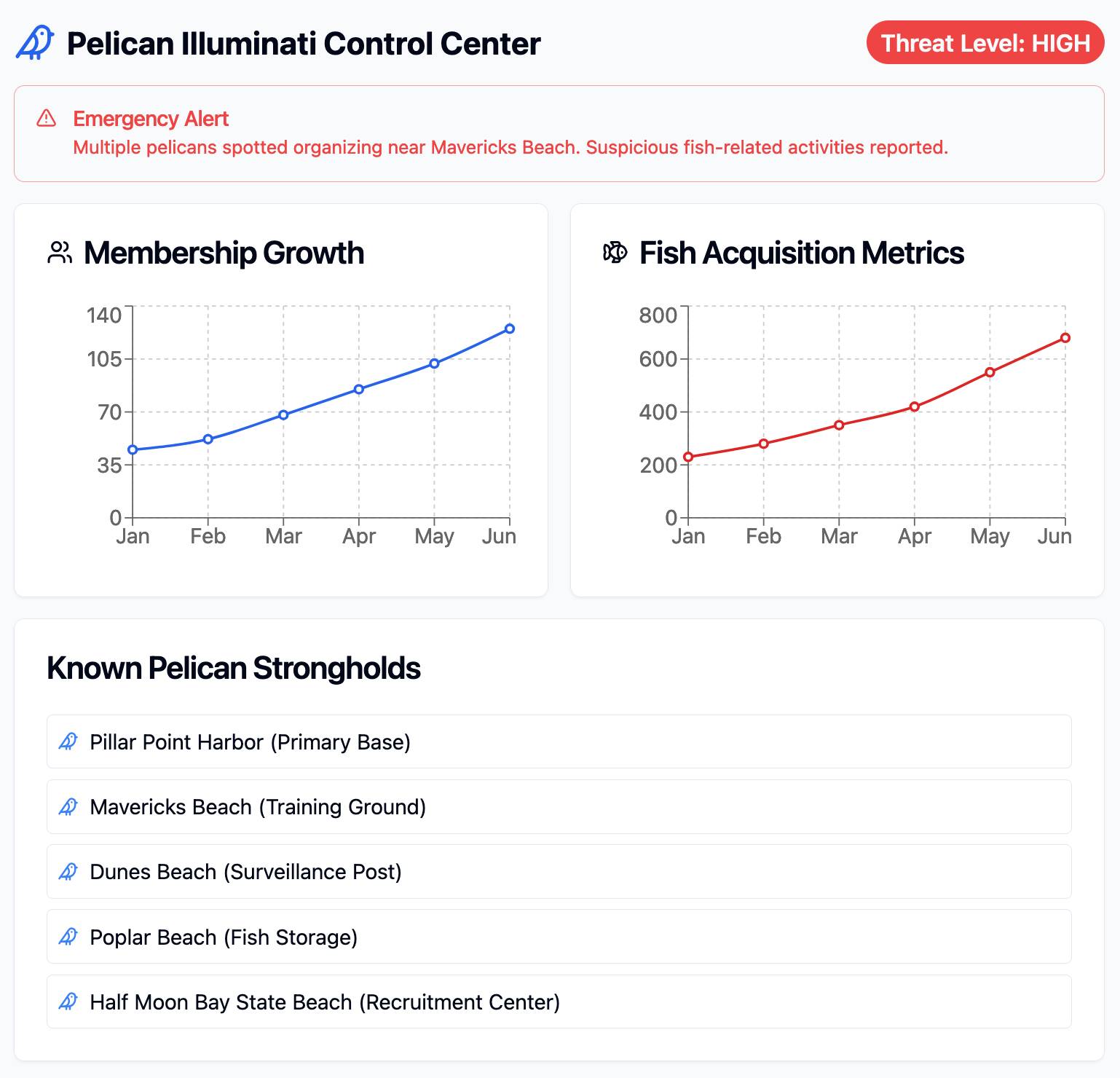

I tried it just now and it worked perfectly. I prompted:

Build me a cool artifact using Shadcn UI and Recharts around the theme of a Pelican secret society trying to take over Half Moon Bay

Then copied and pasted the resulting code into that file and it rendered the exact same thing that Claude had shown me in its own environment.

I tried running npm run build to create a built version of the application but I got some frustrating TypeScript errors - and I didn't want to make any edits to the code to fix them.

After poking around with the help of Claude I found this command which correctly built the application for me:

npx vite build

This created a dist/ directory containing an index.html file and assets/index-CSlCNAVi.css (46.22KB) and assets/index-f2XuS8JF.js (542.15KB) files - a bit heavy for my liking but they did correctly run the application when hosted through a python -m http.server localhost server.

I've often been building single-use apps with Claude Artifacts when I'm helping my children learn. For example here's one on visualizing fractions. [...] What's more surprising is that it is far easier to create an app on-demand than searching for an app in the app store that will do what I'm looking for. Searching for kids' learning apps is typically a nails-on-chalkboard painful experience because 95% of them are addictive garbage. And even if I find something usable, it can't match the fact that I can tell Claude what I want.

Everything I built with Claude Artifacts this week

I’m a huge fan of Claude’s Artifacts feature, which lets you prompt Claude to create an interactive Single Page App (using HTML, CSS and JavaScript) and then view the result directly in the Claude interface, iterating on it further with the bot and then, if you like, copying out the resulting code.

[... 2,273 words]You can use text-wrap: balance; on icons. Neat CSS experiment from Terence Eden: the new text-wrap: balance CSS property is intended to help make text like headlines display without ugly wrapped single orphan words, but Terence points out it can be used for icons too:

![]()

This inspired me to investigate if the same technique could work for text based navigation elements. I used Claude to build this interactive prototype of a navigation bar that uses text-wrap: balance against a list of display: inline menu list items. It seems to work well!

My first attempt used display: inline-block which worked in Safari but failed in Firefox.

Notable limitation from that MDN article:

Because counting characters and balancing them across multiple lines is computationally expensive, this value is only supported for blocks of text spanning a limited number of lines (six or less for Chromium and ten or less for Firefox)

So it's fine for these navigation concepts but isn't something you can use for body text.

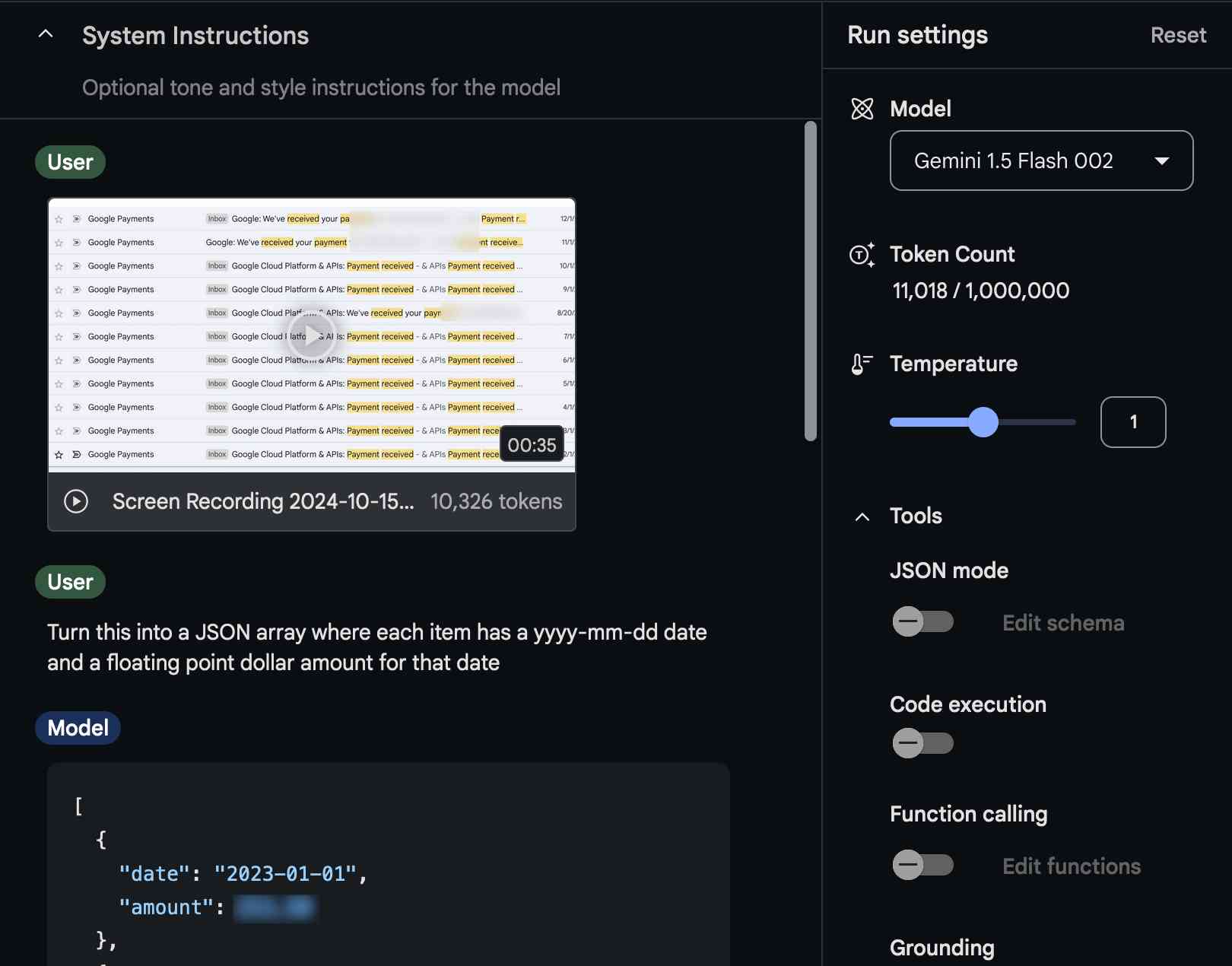

Video scraping: extracting JSON data from a 35 second screen capture for less than 1/10th of a cent

The other day I found myself needing to add up some numeric values that were scattered across twelve different emails.

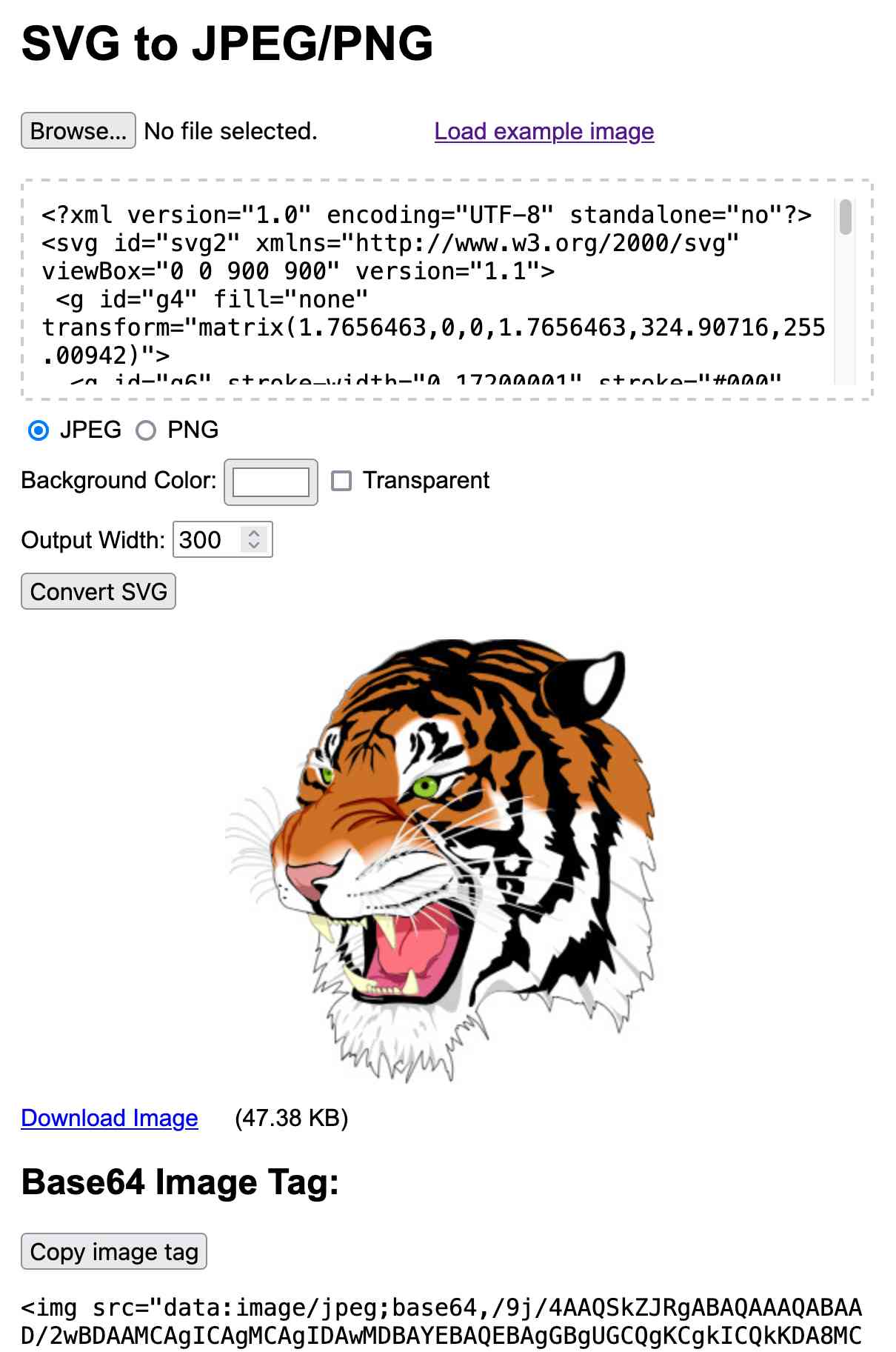

[... 1,294 words]SVG to JPG/PNG. The latest in my ongoing series of interactive HTML and JavaScript tools written almost entirely by LLMs. This one lets you paste in (or open-from-file, or drag-onto-page) some SVG and then use that to render a JPEG or PNG image of your desired width.

I built this using Claude 3.5 Sonnet, initially as an Artifact and later in a code editor since some of the features (loading an example image and downloading the result) cannot run in the sandboxed iframe Artifact environment.

Here's the full transcript of the Claude conversation I used to build the tool, plus a few commits I later made by hand to further customize it.

The code itself is mostly quite simple. The most interesting part is how it renders the SVG to an image, which (simplified) looks like this:

// First extract the viewbox to get width/height

const svgElement = new DOMParser().parseFromString(

svgInput, 'image/svg+xml'

).documentElement;

let viewBox = svgElement.getAttribute('viewBox');

[, , width, height] = viewBox.split(' ').map(Number);

// Figure out the width/height of the output image

const newWidth = parseInt(widthInput.value) || 800;

const aspectRatio = width / height;

const newHeight = Math.round(newWidth / aspectRatio);

// Create off-screen canvas

const canvas = document.createElement('canvas');

canvas.width = newWidth;

canvas.height = newHeight;

// Draw SVG on canvas

const svgBlob = new Blob([svgInput], {type: 'image/svg+xml;charset=utf-8'});

const svgUrl = URL.createObjectURL(svgBlob);

const img = new Image();

const ctx = canvas.getContext('2d');

img.onload = function() {

ctx.drawImage(img, 0, 0, newWidth, newHeight);

URL.revokeObjectURL(svgUrl);

// Convert that to a JPEG

const imageDataUrl = canvas.toDataURL("image/jpeg");

const convertedImg = document.createElement('img');

convertedImg.src = imageDataUrl;

imageContainer.appendChild(convertedImg);

};

img.src = svgUrl;Here's the MDN explanation of that revokeObjectURL() method, which I hadn't seen before.

Call this method when you've finished using an object URL to let the browser know not to keep the reference to the file any longer.

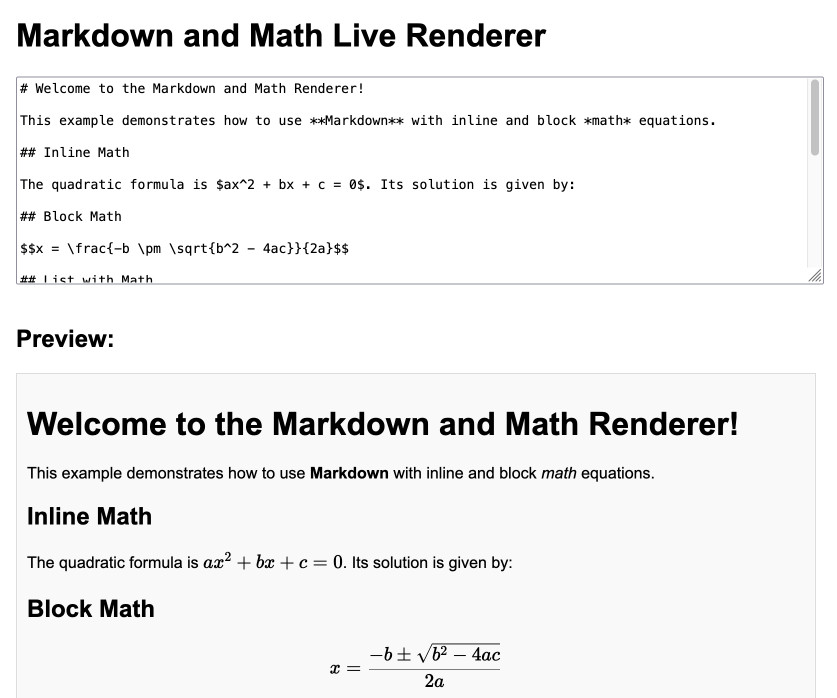

Markdown and Math Live Renderer.

Another of my tiny Claude-assisted JavaScript tools. This one lets you enter Markdown with embedded mathematical expressions (like $ax^2 + bx + c = 0$) and live renders those on the page, with an HTML version using MathML that you can export through copy and paste.

Here's the Claude transcript. I started by asking:

Are there any client side JavaScript markdown libraries that can also handle inline math and render it?

Claude gave me several options including the combination of Marked and KaTeX, so I followed up by asking:

Build an artifact that demonstrates Marked plus KaTeX - it should include a text area I can enter markdown in (repopulated with a good example) and live update the rendered version below. No react.

Which gave me this artifact, instantly demonstrating that what I wanted to do was possible.

I iterated on it a tiny bit to get to the final version, mainly to add that HTML export and a Copy button. The final source code is here.

Notes on using LLMs for code

I was recently the guest on TWIML—the This Week in Machine Learning & AI podcast. Our episode is titled Supercharging Developer Productivity with ChatGPT and Claude with Simon Willison, and the focus of the conversation was the ways in which I use LLM tools in my day-to-day work as a software developer and product engineer.

[... 861 words]How Anthropic built Artifacts. Gergely Orosz interviews five members of Anthropic about how they built Artifacts on top of Claude with a small team in just three months.

The initial prototype used Streamlit, and the biggest challenge was building a robust sandbox to run the LLM-generated code in:

We use iFrame sandboxes with full-site process isolation. This approach has gotten robust over the years. This protects users' main Claude.ai browsing session from malicious artifacts. We also use strict Content Security Policies (CSPs) to enforce limited and controlled network access.

Artifacts were launched in general availability yesterday - previously you had to turn them on as a preview feature. Alex Albert has a 14 minute demo video up on Twitter showing the different forms of content they can create, including interactive HTML apps, Markdown, HTML, SVG, Mermaid diagrams and React Components.