Blogmarks tagged projects, llms


llm-mistral 0.14. I added tool-support to my plugin for accessing the Mistral API from LLM today, plus support for Mistral's new Codestral Embed embedding model.

An interesting challenge here is that I'm not using an official client library for llm-mistral - I rolled my own client on top of their streaming HTTP API using Florimond Manca's httpx-sse library. It's a very pleasant way to interact with streaming APIs - here's my code that does most of the work.

The problem I faced is that Mistral's API documentation for function calling has examples in Python and TypeScript but doesn't include curl or direct documentation of their HTTP endpoints!

I needed documentation at the HTTP level. Could I maybe extract that directly from Mistral's official Python library?

It turns out I could. I started by cloning the repo:

git clone https://github.com/mistralai/client-python

cd client-python/src/mistralai

files-to-prompt . | ttok

My ttok tool gave me a token count of 212,410 (counted using OpenAI's tokenizer, but that's normally a close enough estimate) - Mistral's models tap out at 128,000 so I switched to Gemini 2.5 Flash which can easily handle that many.

I ran this:

files-to-prompt -c . > /tmp/mistral.txt

llm -f /tmp/mistral.txt \
  -m gemini-2.5-flash-preview-05-20 \
  -s 'Generate comprehensive HTTP API documentation showing
how function calling works, include example curl commands for each step'

The results were pretty spectacular! Gemini 2.5 Flash produced a detailed description of the exact set of HTTP APIs I needed to interact with, and the JSON formats I should pass to them.

There are a bunch of steps needed to get tools working in a new model, as described in the LLM plugin authors documentation. I started working through them by hand... and then got lazy and decided to see if I could get a model to do the work for me.

This time I tried the new Claude Opus 4. I fed it three files: my existing, incomplete llm_mistral.py, a full copy of llm_gemini.py with its working tools implementation and a copy of the API docs Gemini had written for me earlier. I prompted:

I need to update this Mistral code to add tool support. I've included examples of that code for Gemini, and a detailed README explaining the Mistral format.

Claude churned away and wrote me code that was most of what I needed. I tested it in a bunch of different scenarios, pasted problems back into Claude to see what would happen, and eventually took over and finished the rest of the code myself. Here's the full transcript.

I'm a little sad I didn't use Mistral to write the code to support Mistral, but I'm pleased to add yet another model family to the list that's supported for tool usage in LLM.

llm-pdf-to-images. Inspired by my previous llm-video-frames plugin, I thought it would be neat to have a plugin for LLM that can take a PDF and turn that into an image-per-page so you can feed PDFs into models that support image inputs but don't yet support PDFs.

This should now do exactly that:

llm install llm-pdf-to-images

llm -f pdf-to-images:path/to/document.pdf 'Summarize this document'

Under the hood it's using the PyMuPDF library. The key code to convert a PDF into images looks like this:

import fitz

doc = fitz.open("input.pdf")
for page in doc:
    pix = page.get_pixmap(matrix=fitz.Matrix(300/72, 300/72))
    jpeg_bytes = pix.tobytes(output="jpg", jpg_quality=30)

Once I'd figured out that code I got o4-mini to write most of the rest of the plugin, using llm-fragments-github to load in the example code from the video plugin:

llm -f github:simonw/llm-video-frames '
import fitz
doc = fitz.open("input.pdf")
for page in doc:
    pix = page.get_pixmap(matrix=fitz.Matrix(300/72, 300/72))
    jpeg_bytes = pix.tobytes(output="jpg", jpg_quality=30)
' -s 'output llm_pdf_to_images.py which adds a pdf-to-images: fragment loader that converts a PDF to frames using fitz like in the example' \
-m o4-mini

Here's the transcript - more details in this issue.

I had some weird results testing this with GPT-4.1 mini. I created a test PDF with two pages - one white, one black - and ran a test prompt like this:

llm -f 'pdf-to-images:blank-pages.pdf' \
  'describe these images'

The first image features a stylized red maple leaf with triangular facets, giving it a geometric appearance. The maple leaf is a well-known symbol associated with Canada.

The second image is a simple black silhouette of a cat sitting and facing to the left. The cat's tail curls around its body. The design is minimalistic and iconic.

I got even wilder hallucinations for other prompts, like "summarize this document" or "describe all figures". I have a collection of those in this Gist.

Thankfully this behavior is limited to GPT-4.1 mini. I upgraded to full GPT-4.1 and got much more sensible results:

llm -f 'pdf-to-images:blank-pages.pdf' \
  'describe these images' -m gpt-4.1

Certainly! Here are the descriptions of the two images you provided:

First image: This image is completely white. It appears blank, with no discernible objects, text, or features.

Second image: This image is entirely black. Like the first, it is blank and contains no visible objects, text, or distinct elements.

If you have questions or need a specific kind of analysis or modification, please let me know!

LLM 0.26a0 adds support for tools! It's only an alpha so I'm not going to promote this extensively yet, but my LLM project just grew a feature I've been working towards for nearly two years now: tool support!

I'm presenting a workshop about Building software on top of Large Language Models at PyCon US tomorrow and this was the one feature I really needed to pull everything else together.

Tools can be used from the command-line like this (inspired by sqlite-utils --functions):

llm --functions '
def multiply(x: int, y: int) -> int:
    """Multiply two numbers."""
    return x * y
' 'what is 34234 * 213345' -m o4-mini

You can add --tools-debug (shortcut: --td) to have it show exactly what tools are being executed and what came back. More documentation here.

It's also available in the Python library:

import llm

def multiply(x: int, y: int) -> int:
    """Multiply two numbers."""
    return x * y

model = llm.get_model("gpt-4.1-mini")
response = model.chain(
    "What is 34234 * 213345?",
    tools=[multiply]
)
print(response.text())

There's also a new plugin hook so plugins can register tools that can then be referenced by name using llm --tool name_of_tool "prompt".

There's still a bunch I want to do before including this in a stable release, most notably adding support for Python asyncio. It's a pretty exciting start though!

llm-anthropic 0.16a0 and llm-gemini 0.20a0 add tool support for Anthropic and Gemini models, depending on the new LLM alpha.

Update: Here's the section about tools from my PyCon workshop.

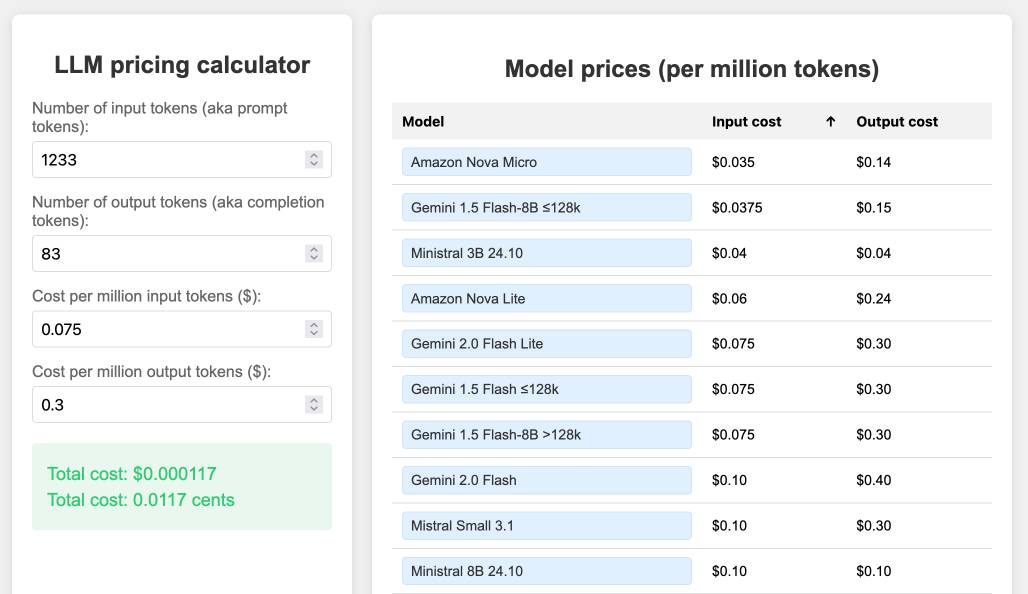

llm-prices.com.

I've been maintaining a simple LLM pricing calculator since October last year. I finally decided to split it out to its own domain name (previously it was hosted at tools.simonwillison.net/llm-prices), running on Cloudflare Pages.

The site runs out of my simonw/llm-prices GitHub repository. I ported the history of the old llm-prices.html file using a vibe-coded bash script that I forgot to save anywhere.

I rarely use AI-generated imagery in my own projects, but for this one I found an excellent reason to use GPT-4o image outputs... to generate the favicon! I dropped a screenshot of the site into ChatGPT (o4-mini-high in this case) and asked for the following:

design a bunch of options for favicons for this site in a single image, white background


I liked the top right one, so I cropped it into Pixelmator and made a 32x32 version. Here's what it looks like in my browser:


I added a new feature just now: the state of the calculator is now reflected in the #fragment-hash URL of the page, which means you can link to your previous calculations.
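As a rough illustration of the idea (the site itself is JavaScript, so this is not its actual code), here is the round trip between form state and a URL fragment sketched in Python. The field names are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def state_to_fragment(state: dict[str, str]) -> str:
    """Serialize form state into a '#key=value&...' fragment."""
    return "#" + urlencode(state)


def fragment_to_state(url: str) -> dict[str, str]:
    """Recover the state dict from the fragment part of a URL."""
    return dict(parse_qsl(urlsplit(url).fragment))


# Hypothetical field names, for illustration only
state = {"input_tokens": "1000", "output_tokens": "500", "sort": "price"}
url = "https://www.llm-prices.com/" + state_to_fragment(state)
print(url)
print(fragment_to_state(url) == state)
```

Because the fragment is never sent to the server, a static page can restore this state entirely on the client when it loads.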

I implemented that feature using the new gemini-2.5-pro-preview-05-06, since that model boasts improved front-end coding abilities. It did a pretty great job - here's how I prompted it:

llm -m gemini-2.5-pro-preview-05-06 -f https://www.llm-prices.com/ -s 'modify this code so that the state of the page is reflected in the fragmenth hash URL - I want to capture the values filling out the form fields and also the current sort order of the table. These should be respected when the page first loads too. Update them using replaceHistory, no need to enable the back button.'

Here's the transcript and the commit updating the tool, plus an example link showing the new feature in action (and calculating the cost for that Gemini 2.5 Pro prompt at 16.8224 cents, after fixing the calculation.)

llm-fragments-symbex. I released a new LLM fragment loader plugin that builds on top of my Symbex project.

Symbex is a CLI tool I wrote that can run against a folder full of Python code and output functions, classes, methods or just their docstrings and signatures, using the Python AST module to parse the code.

llm-fragments-symbex brings that ability directly to LLM. It lets you do things like this:

llm install llm-fragments-symbex

llm -f symbex:path/to/project -s 'Describe this codebase'

I just ran that against my LLM project itself like this:

cd llm

llm -f symbex:. -s 'guess what this code does'

Here's the full output, which starts like this:

This code listing appears to be an index or dump of Python functions, classes, and methods primarily belonging to a codebase related to large language models (LLMs). It covers a broad functionality set related to managing LLMs, embeddings, templates, plugins, logging, and command-line interface (CLI) utilities for interaction with language models. [...]

That page also shows the input generated by the fragment - here's a representative extract:

# from llm.cli import resolve_attachment
def resolve_attachment(value):
    """Resolve an attachment from a string value which could be:
    - "-" for stdin
    - A URL
    - A file path

    Returns an Attachment object.
    Raises AttachmentError if the attachment cannot be resolved."""

# from llm.cli import AttachmentType
class AttachmentType:

    def convert(self, value, param, ctx):

# from llm.cli import resolve_attachment_with_type
def resolve_attachment_with_type(value: str, mimetype: str) -> Attachment:

If your Python code has good docstrings and type annotations, this should hopefully be a shortcut for providing full API documentation to a model without needing to dump in the entire codebase.

The above example used 13,471 input tokens and 781 output tokens, using openai/gpt-4.1-mini. That model is extremely cheap, so the total cost was 0.6638 cents - less than a cent.

The plugin itself was mostly written by o4-mini using the llm-fragments-github plugin to load the simonw/symbex and simonw/llm-hacker-news repositories as example code:

llm \
  -f github:simonw/symbex \
  -f github:simonw/llm-hacker-news \
  -s "Write a new plugin as a single llm_fragments_symbex.py file which provides a custom loader which can be used like this: llm -f symbex:path/to/folder - it then loads in all of the python function signatures with their docstrings from that folder using the same trick that symbex uses, effectively the same as running symbex . '*' '*.*' --docs --imports -n" \
  -m openai/o4-mini -o reasoning_effort high

Here's the response. 27,819 input, 2,918 output = 4.344 cents.
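The two cost figures quoted for this plugin can be reproduced with a small calculation. This is a sketch that assumes list prices of $0.40/$1.60 per million input/output tokens for gpt-4.1-mini and $1.10/$4.40 for o4-mini. Those prices are not stated in the post, so treat them as assumptions.

```python
def cost_in_cents(input_tokens, output_tokens, input_price, output_price):
    """Prices are US dollars per million tokens; the result is in cents."""
    dollars = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    return dollars * 100


# Assumed (input, output) prices in dollars per million tokens
GPT_41_MINI = (0.40, 1.60)
O4_MINI = (1.10, 4.40)

summary_cost = cost_in_cents(13_471, 781, *GPT_41_MINI)
plugin_cost = cost_in_cents(27_819, 2_918, *O4_MINI)

print(f"{summary_cost:.4f} cents")  # 0.6638 cents
print(f"{plugin_cost:.3f} cents")   # 4.344 cents
```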

In working on this project I identified and fixed a minor cosmetic defect in Symbex itself. Technically this is a breaking change (it changes the output) so I shipped that as Symbex 2.0.

llm-hacker-news. I built this new plugin to exercise the new register_fragment_loaders() plugin hook I added to LLM 0.24. It's the plugin equivalent of the Bash script I've been using to summarize Hacker News conversations for the past 18 months.

You can use it like this:

llm install llm-hacker-news

llm -f hn:43615912 'summary with illustrative direct quotes'

You can see the output in this issue.

The plugin registers a hn: prefix - combine that with the ID of a Hacker News conversation to pull that conversation into the context.

It uses the Algolia Hacker News API which returns JSON like this. Rather than feed the JSON directly to the LLM it instead converts it to a hopefully more LLM-friendly format that looks like this example from the plugin's test:

[1] BeakMaster: Fish Spotting Techniques

[1.1] CoastalFlyer: The dive technique works best when hunting in shallow waters.

[1.1.1] PouchBill: Agreed. Have you tried the hover method near the pier?

[1.1.2] WingSpan22: My bill gets too wet with that approach.

[1.1.2.1] CoastalFlyer: Try tilting at a 40° angle like our Australian cousins.

[1.2] BrownFeathers: Anyone spotted those "silver fish" near the rocks?

[1.2.1] GulfGlider: Yes! They're best caught at dawn.

Just remember: swoop > grab > lift

That format was suggested by Claude, which then wrote most of the plugin implementation for me. Here's that Claude transcript.
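A minimal sketch of how a nested comment tree could be flattened into that numbered format. This is not the plugin's actual code, and the `author`, `title`, `text` and `children` keys are assumptions about the shape of the JSON.

```python
def format_thread(item: dict, prefix: str = "1") -> list[str]:
    """Flatten a nested comment tree into '[1.2.1] author: text' lines."""
    # The root story carries a title; replies carry text (assumed keys)
    body = item.get("title") or item.get("text") or ""
    lines = [f"[{prefix}] {item.get('author')}: {body}"]
    for index, child in enumerate(item.get("children", []), start=1):
        lines.extend(format_thread(child, f"{prefix}.{index}"))
    return lines


# Hypothetical data modelled on the example above
thread = {
    "author": "BeakMaster",
    "title": "Fish Spotting Techniques",
    "children": [
        {
            "author": "CoastalFlyer",
            "text": "The dive technique works best when hunting in shallow waters.",
            "children": [
                {"author": "PouchBill", "text": "Agreed.", "children": []},
            ],
        },
        {"author": "BrownFeathers", "text": "Anyone spotted those fish?", "children": []},
    ],
}

print("\n\n".join(format_thread(thread)))
```

The dotted prefix encodes each comment's position in the tree, so a model can follow who is replying to whom without any JSON nesting.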

simonw/ollama-models-atom-feed. I set up a GitHub Actions + GitHub Pages Atom feed of scraped recent models data from the Ollama latest models page - Ollama remains one of the easiest ways to run models on a laptop so a new model release from them is worth hearing about.

I built the scraper by pasting example HTML into Claude and asking for a Python script to convert it to Atom - here's the script we wrote together.

Update 25th March 2025: The first version of this included all 160+ models in a single feed. I've upgraded the script to output two feeds - the original atom.xml one and a new atom-recent-20.xml feed containing just the most recent 20 items.

I modified the script using Google's new Gemini 2.5 Pro model, like this:

cat to_atom.py | llm -m gemini-2.5-pro-exp-03-25 \
  -s 'rewrite this script so that instead of outputting Atom to stdout it saves two files, one called atom.xml with everything and another called atom-recent-20.xml with just the most recent 20 items - remove the output option entirely'

Here's the full transcript.
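The two-feed split itself is simple. Here is a sketch of the selection logic only, not the real to_atom.py: the entry keys are hypothetical, and sorting newest-first is an assumption about how the feeds are ordered.

```python
def split_feeds(entries):
    """Return the full feed plus one limited to the 20 most recent entries."""
    newest_first = sorted(entries, key=lambda entry: entry["updated"], reverse=True)
    return {
        "atom.xml": newest_first,
        "atom-recent-20.xml": newest_first[:20],
    }


# Hypothetical entries; ISO 8601 timestamps sort correctly as plain strings
entries = [
    {"title": f"model-{n}", "updated": f"2025-03-{n:02d}T00:00:00Z"}
    for n in range(1, 26)
]
feeds = split_feeds(entries)
print(len(feeds["atom.xml"]), len(feeds["atom-recent-20.xml"]))
```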

llm-openrouter 0.4. I found out this morning that OpenRouter include support for a number of (rate-limited) free API models.

I occasionally run workshops on top of LLMs (like this one) and being able to provide students with a quick way to obtain an API key against models where they don't have to set up billing is really valuable to me!

This inspired me to upgrade my existing llm-openrouter plugin, and in doing so I closed out a bunch of open feature requests.

Consider this post the annotated release notes:

- LLM schema support for OpenRouter models that support structured output. #23

I'm trying to get support for LLM's new schema feature into as many plugins as possible.

OpenRouter's OpenAI-compatible API includes support for the response_format structured content option, but with an important caveat: it only works for some models, and if you try to use it on others it is silently ignored.

I filed an issue with OpenRouter requesting they include schema support in their machine-readable model index. For the moment LLM will let you specify schemas for unsupported models and will ignore them entirely, which isn't ideal.

- llm openrouter key command displays information about your current API key. #24

Useful for debugging and checking the details of your key's rate limit.

- llm -m ... -o online 1 enables web search grounding against any model, powered by Exa. #25

OpenRouter apparently make this feature available to every one of their supported models! They're using new-to-me Exa to power this feature, an AI-focused search engine startup who appear to have built their own index with their own crawlers (according to their FAQ). This feature is currently priced by OpenRouter at $4 per 1000 results, and since 5 results are returned for every prompt that's 2 cents per prompt.

- llm openrouter models command for listing details of the OpenRouter models, including a --json option to get JSON and a --free option to filter for just the free models. #26

This offers a neat way to list the available models. There are examples of the output in the comments on the issue.

- New option to specify custom provider routing: -o provider '{JSON here}'. #17

Part of OpenRouter's USP is that it can route prompts to different providers depending on factors like latency, cost or as a fallback if your first choice is unavailable - great for if you are using open weight models like Llama which are hosted by competing companies.

The options they provide for routing are very thorough - I had initially hoped to provide a set of CLI options that covered all of these bases, but I decided instead to reuse their JSON format and forward those options directly on to the model.

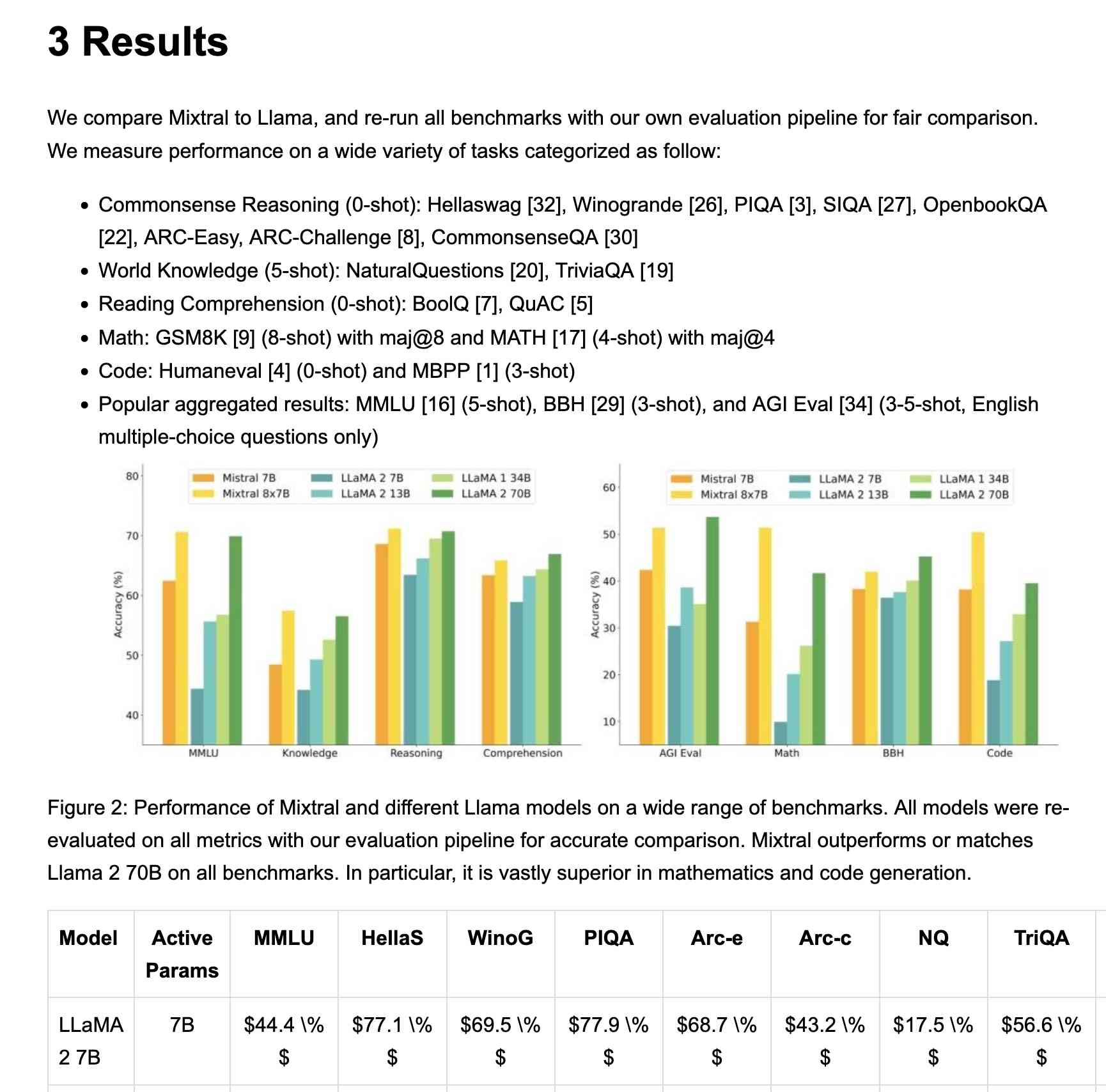

Mistral OCR (via) New closed-source specialist OCR model by Mistral - you can feed it images or a PDF and it produces Markdown with optional embedded images.

It's available via their API, or it's "available to self-host on a selective basis" for people with stringent privacy requirements who are willing to talk to their sales team.

I decided to try out their API, so I copied and pasted example code from their notebook into my custom Claude project and told it:

Turn this into a CLI app, depends on mistralai - it should take a file path and an optional API key defauling to env vironment called MISTRAL_API_KEY

After some further iteration / vibe coding I got to something that worked, which I then tidied up and shared as mistral_ocr.py.

You can try it out like this:

export MISTRAL_API_KEY='...'

uv run http://tools.simonwillison.net/python/mistral_ocr.py \
  mixtral.pdf --html --inline-images > mixtral.html

I fed in the Mixtral paper as a PDF. The API returns Markdown, but my --html option renders that Markdown as HTML and the --inline-images option takes any images and inlines them as base64 URIs (inspired by monolith). The result is mixtral.html, a 972KB HTML file with images and text bundled together.

This did a pretty great job!
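Inlining an image as a base64 URI comes down to a few lines. This is a generic sketch of the technique rather than the code in mistral_ocr.py, and the helper names are made up for illustration.

```python
import base64


def to_data_uri(image_bytes: bytes, mime_type: str = "image/jpeg") -> str:
    """Encode raw image bytes as a data: URI usable in an <img src=...>."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def inline_image(html: str, src: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> str:
    """Swap a src="..." reference for the inlined data URI."""
    return html.replace(f'src="{src}"', f'src="{to_data_uri(image_bytes, mime_type)}"')


html = '<p>Figure 1</p><img src="img-0.jpeg">'
print(inline_image(html, "img-0.jpeg", b"\xff\xd8\xff\xe0 fake jpeg bytes"))
```

Base64 makes the data about a third larger than the raw bytes, which is the price of getting a single self-contained HTML file.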

My script renders Markdown tables but I haven't figured out how to render inline Markdown MathML yet. I ran the command a second time and requested Markdown output (the default) like this:

uv run http://tools.simonwillison.net/python/mistral_ocr.py \
  mixtral.pdf > mixtral.md

Here's that Markdown rendered as a Gist - there are a few MathML glitches so clearly the Mistral OCR MathML dialect and the GitHub Formatted Markdown dialect don't quite line up.

My tool can also output raw JSON as an alternative to Markdown or HTML - full details in the documentation.

The Mistral API is priced at roughly 1000 pages per dollar, with a 50% discount for batch usage.

The big question with LLM-based OCR is always how well it copes with accidental instructions in the text (can you safely OCR a document full of prompting examples?) and how well it handles text it can't read.

Mistral's Sophia Yang says it "should be robust" against following instructions in the text, and invited people to try and find counter-examples.

Alexander Doria noted that Mistral OCR can hallucinate text when faced with handwriting that it cannot understand.

llm-mistral 0.11. I added schema support to this plugin which adds support for the Mistral API to LLM. Release notes:

Schemas now work with OpenAI, Anthropic, Gemini and Mistral hosted models, plus self-hosted models via Ollama and llm-ollama.

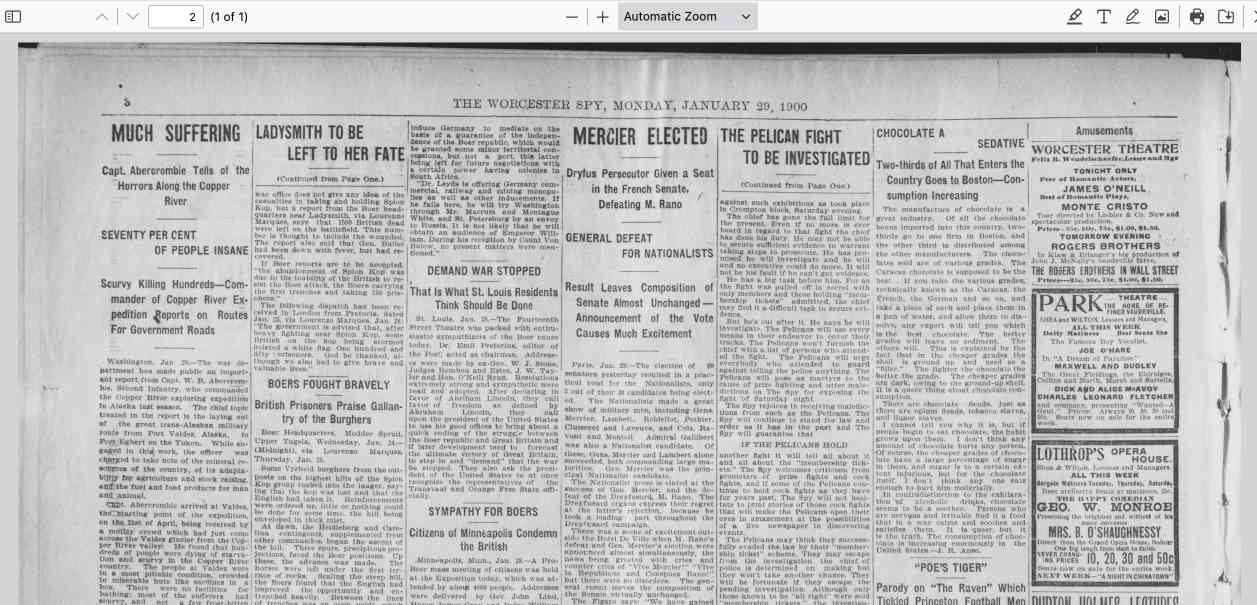

llm-anthropic #24: Use new URL parameter to send attachments. Anthropic released a neat quality of life improvement today. Alex Albert:

We've added the ability to specify a public facing URL as the source for an image / document block in the Anthropic API

Prior to this, any time you wanted to send an image to the Claude API you needed to base64-encode it and then include that data in the JSON. This got pretty bulky, especially in conversation scenarios where the same image data needs to get passed in every follow-up prompt.
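To make the size difference concrete, here is a sketch comparing the two styles of image block. The dictionary shapes reflect my understanding of the Anthropic Messages API and should be treated as assumptions. The image bytes and URL are placeholders.

```python
import base64
import json


def image_block_base64(image_bytes: bytes, media_type: str = "image/png") -> dict:
    """Image block that embeds the full image data in the request JSON."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
    }


def image_block_url(url: str) -> dict:
    """Image block that only references a public URL."""
    return {"type": "image", "source": {"type": "url", "url": url}}


fake_image = bytes(300_000)  # stand-in for a 300KB image
inline = json.dumps(image_block_base64(fake_image))
linked = json.dumps(image_block_url("https://example.com/photo.png"))
print(len(inline), len(linked))
```

In a multi-turn conversation the embedded version is re-sent with every follow-up prompt, so the saving from the URL form multiplies.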

I implemented this for llm-anthropic and shipped it just now in version 0.15.1 (here's the commit). I went with a patch release version number bump because this is effectively a performance optimization which doesn't provide any new features: previously LLM would accept URLs just fine and would download and then base64-encode them behind the scenes.

In testing this out I had a really impressive result from Claude 3.7 Sonnet. I found a newspaper page from 1900 on the Library of Congress (the "Worcester spy.") and fed a URL to the PDF into Sonnet like this:

llm -m claude-3.7-sonnet \
  -a 'https://tile.loc.gov/storage-services/service/ndnp/mb/batch_mb_gaia_ver02/data/sn86086481/0051717161A/1900012901/0296.pdf' \
  'transcribe all text from this image, formatted as markdown'

I haven't checked every sentence but it appears to have done an excellent job, at a cost of 16 cents.

As another experiment, I tried running that against my example people template from the schemas feature I released this morning:

llm -m claude-3.7-sonnet \
  -a 'https://tile.loc.gov/storage-services/service/ndnp/mb/batch_mb_gaia_ver02/data/sn86086481/0051717161A/1900012901/0296.pdf' \
  -t people

That only gave me two results - so I tried an alternative approach where I looped the OCR text back through the same template, using llm logs --cid with the logged conversation ID and -r to extract just the raw response from the logs:

llm logs --cid 01jn7h45x2dafa34zk30z7ayfy -r | \
  llm -t people -m claude-3.7-sonnet

... and that worked fantastically well! The result started like this:

{
  "items": [
    {
      "name": "Capt. W. R. Abercrombie",
      "organization": "United States Army",
      "role": "Commander of Copper River exploring expedition",
      "learned": "Reported on the horrors along the Copper River in Alaska, including starvation, scurvy, and mental illness affecting 70% of people. He was tasked with laying out a trans-Alaskan military route and assessing resources.",
      "article_headline": "MUCH SUFFERING",
      "article_date": "1900-01-28"
    },
    {
      "name": "Edward Gillette",
      "organization": "Copper River expedition",
      "role": "Member of the expedition",
      "learned": "Contributed a chapter to Abercrombie's report on the feasibility of establishing a railroad route up the Copper River valley, comparing it favorably to the Seattle to Skaguay route.",
      "article_headline": "MUCH SUFFERING",
      "article_date": "1900-01-28"
    }

Gemini 2.0 Flash and Flash-Lite (via) Gemini 2.0 Flash-Lite is now generally available - previously it was available just as a preview - and has announced pricing. The model is $0.075/million input tokens and $0.30/million output - the same price as Gemini 1.5 Flash.

Google call this "simplified pricing" because 1.5 Flash charged different cost-per-tokens depending on if you used more than 128,000 tokens. 2.0 Flash-Lite (and 2.0 Flash) are both priced the same no matter how many tokens you use.

I released llm-gemini 0.12 with support for the new gemini-2.0-flash-lite model ID. I've also updated my LLM pricing calculator with the new prices.

files-to-prompt 0.6. New release of my CLI tool for turning a whole directory of code into a single prompt ready to pipe or paste into an LLM.

Here are the full release notes:

- New -m/--markdown option for outputting results as Markdown with each file in a fenced code block. #42
- Support for reading a list of files from standard input. Thanks, Ankit Shankar. #44

  Here's how to process just files modified within the last day:

  find . -mtime -1 | files-to-prompt

  You can also use the -0/--null flag to accept lists of file paths separated by null delimiters, which is useful for handling file names with spaces in them:

  find . -name "*.txt" -print0 | files-to-prompt -0

I also have a potential fix for a reported bug concerning nested .gitignore files that's currently sitting in a PR. I'm waiting for someone else to confirm that it behaves as they would expect. I've left details in this issue comment, but the short version is that you can try out the version from the PR using this uvx incantation:

uvx --with git+https://github.com/simonw/files-to-prompt@nested-gitignore files-to-prompt

files-to-prompt 0.5.

My files-to-prompt tool (originally built using Claude 3 Opus back in April) had been accumulating a bunch of issues and PRs - I finally got around to spending some time with it and pushed a fresh release:

- New -n/--line-numbers flag for including line numbers in the output. Thanks, Dan Clayton. #38
- Fix for utf-8 handling on Windows. Thanks, David Jarman. #36
- --ignore patterns are now matched against directory names as well as file names, unless you pass the new --ignore-files-only flag. Thanks, Nick Powell. #30

I use this tool myself on an almost daily basis - it's fantastic for quickly answering questions about code. Recently I've been plugging it into Gemini 2.0 with its 2 million token context length, running recipes like this one:

git clone https://github.com/bytecodealliance/componentize-py

cd componentize-py

files-to-prompt . -c | llm -m gemini-2.0-pro-exp-02-05 \
  -s 'How does this work? Does it include a python compiler or AST trick of some sort?'

I ran that question against the bytecodealliance/componentize-py repo - which provides a tool for turning Python code into compiled WASM - and got this really useful answer.

Here's another example. I decided to have o3-mini review how Datasette handles concurrent SQLite connections from async Python code - so I ran this:

git clone https://github.com/simonw/datasette

cd datasette/datasette

files-to-prompt database.py utils/__init__.py -c | \
  llm -m o3-mini -o reasoning_effort high \
  -s 'Output in markdown a detailed analysis of how this code handles the challenge of running SQLite queries from a Python asyncio application. Explain how it works in the first section, then explore the pros and cons of this design. In a final section propose alternative mechanisms that might work better.'

Here's the result. It did an extremely good job of explaining how my code works - despite being fed just the Python and none of the other documentation. Then it made some solid recommendations for potential alternatives.

I added a couple of follow-up questions (using llm -c) which resulted in a full working prototype of an alternative threadpool mechanism, plus some benchmarks.

One final example: I decided to see if there were any undocumented features in Litestream, so I checked out the repo and ran a prompt against just the .go files in that project:

git clone https://github.com/benbjohnson/litestream

cd litestream

files-to-prompt . -e go -c | llm -m o3-mini \
  -s 'Write extensive user documentation for this project in markdown'

Once again, o3-mini provided a really impressively detailed set of unofficial documentation derived purely from reading the source.

LLM 0.20. New release of my LLM CLI tool and Python library. A bunch of accumulated fixes and features since the start of December, most notably:

- Support for OpenAI's o1 model - a significant upgrade from o1-preview given its 200,000 input and 100,000 output tokens (o1-preview was 128,000/32,768). #676
- Support for the gpt-4o-audio-preview and gpt-4o-mini-audio-preview models, which can accept audio input: llm -m gpt-4o-audio-preview -a https://static.simonwillison.net/static/2024/pelican-joke-request.mp3 #677
- A new llm -x/--extract option which extracts and returns the contents of the first fenced code block in the response. This is useful for prompts that generate code. #681
- A new llm models -q 'search' option for searching available models - useful if you've installed a lot of plugins. Searches are case insensitive. #700
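To illustrate what extracting the first fenced code block involves, here is a regular-expression sketch. It is an approximation for illustration and not necessarily how LLM implements -x/--extract.

```python
import re

FENCE = "`" * 3  # three backticks, built this way so the example nests cleanly
FENCE_RE = re.compile(
    rf"^{FENCE}[^\n]*\n(.*?)^{FENCE}[ \t]*$",
    re.DOTALL | re.MULTILINE,
)


def extract_first_fenced_block(text: str):
    """Return the contents of the first fenced block, or None if there is none."""
    match = FENCE_RE.search(text)
    return match.group(1) if match else None


response = (
    "Here you go:\n\n"
    f"{FENCE}python\nprint('hi')\n{FENCE}\n\n"
    f"And another:\n{FENCE}\nignored\n{FENCE}\n"
)
print(extract_first_fenced_block(response))
```

The optional language tag after the opening fence is skipped, and any later blocks in the response are ignored.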

llm-gemini 0.9.

This new release of my llm-gemini plugin adds support for two new experimental models:

- learnlm-1.5-pro-experimental is "an experimental task-specific model that has been trained to align with learning science principles when following system instructions for teaching and learning use cases" - more here.
- gemini-2.0-flash-thinking-exp-01-21 is a brand new version of the Gemini 2.0 Flash Thinking model released today:

  Latest version also includes code execution, a 1M token content window & a reduced likelihood of thought-answer contradictions.

The most exciting new feature though is support for Google search grounding, where some Gemini models can execute Google searches as part of answering a prompt. This feature can be enabled using the new -o google_search 1 option.

datasette-enrichments-llm. Today's new alpha release is datasette-enrichments-llm, a plugin for Datasette 1.0a+ that provides an enrichment that lets you run prompts against data from one or more columns and store the result in another column.

So far it's a light re-implementation of the existing datasette-enrichments-gpt plugin, now using the new llm.get_async_models() method to allow users to select any async-enabled model that has been registered by a plugin - so currently any of the models from OpenAI, Anthropic, Gemini or Mistral via their respective plugins.

Still plenty to do on this one. Next step is to integrate it with datasette-llm-usage and use it to drive a design-complete stable version of that.

datasette-queries. I released the first alpha of a new plugin to replace the crusty old datasette-saved-queries. This one adds a new UI element to the top of the query results page with an expandable form for saving the query as a new canned query:

It's my first plugin to depend on LLM and datasette-llm-usage - it uses GPT-4o mini to power an optional "Suggest title and description" button, labeled with the becoming-standard ✨ sparkles emoji to indicate an LLM-powered feature.

I intend to expand this to work across multiple models as I continue to iterate on datasette-llm-usage to better support those kinds of patterns.

For the moment though each suggested title and description call costs about 250 input tokens and 50 output tokens, which against GPT-4o mini adds up to 0.0067 cents.

datasette-llm-usage. I released the first alpha of a Datasette plugin to help track LLM usage by other plugins, with the goal of supporting token allowances - both for things like free public apps that stop working after a daily allowance, plus free previews of AI features for paid-account-based projects such as Datasette Cloud.

It's using the usage features I added in LLM 0.19.

The alpha doesn't do much yet - it will start getting interesting once I upgrade other plugins to depend on it.

Design notes so far in issue #1.

LLM 0.19. I just released version 0.19 of LLM, my Python library and CLI utility for working with Large Language Models.

I released 0.18 a couple of weeks ago adding support for calling models from Python asyncio code. 0.19 improves on that, and also adds a new mechanism for models to report their token usage.

LLM can log those usage numbers to a SQLite database, or make them available to custom Python code.

My eventual goal with these features is to implement token accounting as a Datasette plugin so I can offer AI features in my SaaS platform without worrying about customers spending unlimited LLM tokens.

Those 0.19 release notes in full:

- Tokens used by a response are now logged to new input_tokens and output_tokens integer columns and a token_details JSON string column, for the default OpenAI models and models from other plugins that implement this feature. #610

- llm prompt now takes a -u/--usage flag to display token usage at the end of the response.

- llm logs -u/--usage shows token usage information for logged responses.

- llm prompt ... --async responses are now logged to the database. #641

- llm.get_models() and llm.get_async_models() functions, documented here. #640

- response.usage() and async response await response.usage() methods, returning a Usage(input=2, output=1, details=None) dataclass. #644

- response.on_done(callback) and await response.on_done(callback) methods for specifying a callback to be executed when a response has completed, documented here. #653

- Fix for bug running llm chat on Windows 11. Thanks, Sukhbinder Singh. #495
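The column names in those notes suggest a simple shape for usage logging. Here's a standard-library sketch of the idea, not LLM's actual implementation: the Usage dataclass below only mirrors the Usage(input=2, output=1, details=None) shape quoted above, and the usage_log table, the log_usage() helper and the {"cached": 4} details value are all made up for illustration.

```python
import json
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class Usage:
    # Stand-in mirroring the shape quoted in the release notes
    input: Optional[int] = None
    output: Optional[int] = None
    details: Optional[dict] = None


def log_usage(db: sqlite3.Connection, model: str, usage: Usage) -> None:
    """Store one response's usage in a hypothetical usage_log table."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS usage_log ("
        "model TEXT, input_tokens INTEGER, output_tokens INTEGER, token_details TEXT)"
    )
    # token_details is stored as a JSON string, or NULL when there are no details
    details = json.dumps(usage.details) if usage.details is not None else None
    db.execute(
        "INSERT INTO usage_log VALUES (?, ?, ?, ?)",
        (model, usage.input, usage.output, details),
    )


db = sqlite3.connect(":memory:")
log_usage(db, "gpt-4o", Usage(input=2, output=1))
log_usage(db, "gpt-4o", Usage(input=10, output=5, details={"cached": 4}))
totals = db.execute(
    "SELECT SUM(input_tokens), SUM(output_tokens) FROM usage_log"
).fetchone()
print(totals)  # (12, 6)
```

Keeping the two integer counts in their own columns makes totals a single SUM() query, while the free-form details stay in JSON where each provider can report whatever it likes.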

I also released three new plugin versions that add support for the new usage tracking feature: llm-gemini 0.5, llm-claude-3 0.10 and llm-mistral 0.9.

GitHub OAuth for a static site using Cloudflare Workers. Here's a TIL covering a Thanksgiving AI-assisted programming project. I wanted to add OAuth against GitHub to some of the projects on my tools.simonwillison.net site in order to implement "Save to Gist".

That site is entirely statically hosted by GitHub Pages, but OAuth has a required server-side component: there's a client_secret involved that should never be included in client-side code.

Since I serve the site from behind Cloudflare I realized that a minimal Cloudflare Workers script may be enough to plug the gap. I got Claude on my phone to build me a prototype and then pasted that (still on my phone) into a new Cloudflare Worker and it worked!

... almost. On later closer inspection of the code it was missing error handling... and then someone pointed out it was vulnerable to a login CSRF attack thanks to failure to check the state= parameter. I worked with Claude to fix those too.
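The state= check is the part that defeats login CSRF: generate an unguessable value before redirecting to GitHub, store it on the client (a cookie, for example), and refuse the callback unless the same value comes back. Here's a Python sketch of that check. The real Worker is JavaScript, so start_login() and state_is_valid() are hypothetical names for illustration, not the code from the TIL.

```python
import hmac
import secrets
from typing import Optional, Tuple
from urllib.parse import urlencode


def start_login(client_id: str) -> Tuple[str, str]:
    """Return (authorize_url, state); the caller must store state, e.g. in a cookie."""
    state = secrets.token_urlsafe(32)  # unguessable per-login value
    query = urlencode({"client_id": client_id, "state": state})
    return f"https://github.com/login/oauth/authorize?{query}", state


def state_is_valid(stored: Optional[str], returned: Optional[str]) -> bool:
    """Accept the OAuth callback only if the returned state matches the stored one."""
    if not stored or not returned:
        return False
    # Constant-time comparison; encode first so non-ASCII input cannot raise
    return hmac.compare_digest(stored.encode("utf-8"), returned.encode("utf-8"))


url, state = start_login("example-client-id")  # placeholder client ID
print(state_is_valid(state, state))  # True
print(state_is_valid(state, "attacker-supplied"))  # False
print(state_is_valid(None, state))  # False
```

Without this check an attacker can send a victim a callback URL carrying the attacker's own authorization code, silently logging the victim in to the attacker's account.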

Useful reminder here that pasting AI-generated code around on a mobile phone isn't necessarily the best environment to encourage a thorough code review!

llm-gemini 0.4.

New release of my llm-gemini plugin, adding support for asynchronous models (see LLM 0.18), plus the new gemini-exp-1114 model (currently at the top of the Chatbot Arena) and a -o json_object 1 option to force JSON output.

I also released llm-claude-3 0.9 which adds asynchronous support for the Claude family of models.

LLM 0.18. New release of LLM. The big new feature is asynchronous model support - you can now use supported models in async Python code like this:

import llm

model = llm.get_async_model("gpt-4o")

async for chunk in model.prompt(

    "Five surprising names for a pet pelican"

):

    print(chunk, end="", flush=True)

Also new in this release: support for sending audio attachments to OpenAI's gpt-4o-audio-preview model.

QuickTime video script to capture frames and bounding boxes. An update to an older TIL. I'm working on the write-up for my DjangoCon US talk on plugins and I found myself wanting to capture individual frames from the video in two formats: a full frame capture, and another that captured just the portion of the screen shared from my laptop.

I have a script for the former, so I got Claude to update my script to add support for one or more --box options, like this:

capture-bbox.sh ../output.mp4 --box '31,17,100,87' --box '0,0,50,50'

Open output.mp4 in QuickTime Player, run that script and then every time you hit a key in the terminal app it will capture three JPEGs from the current position in QuickTime Player - one for the whole screen and one each for the specified bounding box regions.

Those bounding box regions are percentages of the width and height of the image. I also got Claude to build me this interactive tool on top of cropperjs to help figure out those boxes:

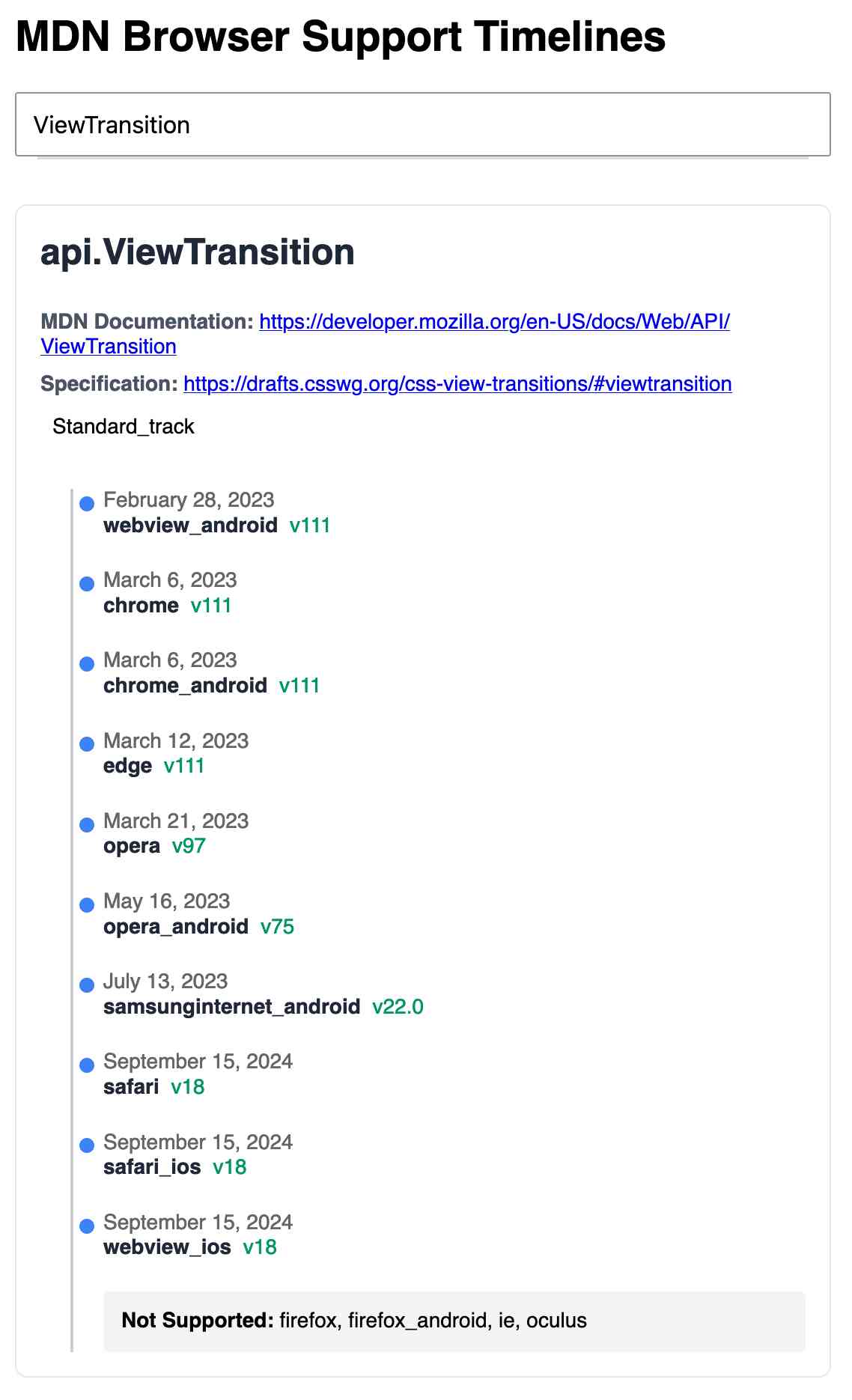
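Since the boxes are percentages rather than pixels, the same --box value works at any video resolution. Here's a sketch of the conversion to pixel coordinates. It assumes the four numbers mean x1,y1,x2,y2 (left, top, right, bottom), which is a guess based on '31,17,100,87' only making sense that way. box_to_pixels() is a hypothetical helper, not part of the script.

```python
from typing import Tuple


def box_to_pixels(box: str, width: int, height: int) -> Tuple[int, int, int, int]:
    """Convert 'x1,y1,x2,y2' percentages into (left, top, right, bottom) pixels.

    Assumes the box is two corner points, which is an interpretation of the
    --box format rather than something the script documents here.
    """
    x1, y1, x2, y2 = (float(part) for part in box.split(","))
    for value in (x1, y1, x2, y2):
        if not 0 <= value <= 100:
            raise ValueError(f"percentage out of range: {value}")
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box must have positive width and height")
    return (
        round(width * x1 / 100),
        round(height * y1 / 100),
        round(width * x2 / 100),
        round(height * y2 / 100),
    )


print(box_to_pixels("31,17,100,87", 1920, 1080))  # (595, 184, 1920, 940)
print(box_to_pixels("0,0,50,50", 1920, 1080))  # (0, 0, 960, 540)
```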

MDN Browser Support Timelines. I complained on Hacker News today that I wished the MDN browser compatibility tables - like this one for the Web Locks API - included an indication as to when each browser was released rather than just the browser numbers.

It turns out they do! If you click on each browser version in turn you can see an expanded area showing the browser release date:

There's even an inline help tip telling you about the feature, which I've been studiously ignoring for years.

I want to see all the information at once without having to click through each browser. I had a poke around in the Firefox network tab and found https://bcd.developer.mozilla.org/bcd/api/v0/current/api.Lock.json - a JSON document containing browser support details (with release dates) for that API... and it was served using access-control-allow-origin: * which means I can hit it from my own little client-side applications.

I decided to build something with an autocomplete drop-down interface for selecting the API. That meant I'd need a list of all of the available APIs, and I used GitHub code search to find that in the mdn/browser-compat-data repository, in the api/ directory.

I needed the list of files in that directory for my autocomplete. Since there are just over 1,000 of those the regular GitHub contents API won't return them all, so I switched to the tree API instead.
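Here's a sketch of the data wrangling that implies, written in Python rather than the JavaScript the tool actually uses. It assumes a recursive tree listing taken from the repository root, so paths look like api/Lock.json, and it relies on the "tree", "path" and "type" keys the GitHub tree API returns. api_names_from_tree() and bcd_url() are hypothetical helper names and the sample response is invented.

```python
from typing import Dict, List

BCD_BASE = "https://bcd.developer.mozilla.org/bcd/api/v0/current/"


def api_names_from_tree(tree_response: Dict) -> List[str]:
    """Pull API names out of a git tree listing, for use in an autocomplete."""
    names = []
    for entry in tree_response.get("tree", []):
        path = entry.get("path", "")
        # Keep only JSON files directly inside api/, e.g. "api/Lock.json" -> "Lock"
        if (
            entry.get("type") == "blob"
            and path.startswith("api/")
            and path.endswith(".json")
            and path.count("/") == 1
        ):
            names.append(path[len("api/"):-len(".json")])
    return sorted(names)


def bcd_url(api_name: str) -> str:
    """Build the browser support JSON URL, following the api.Lock.json example."""
    return f"{BCD_BASE}api.{api_name}.json"


# Invented sample shaped like a tree API response
sample = {
    "tree": [
        {"path": "api/LockManager.json", "type": "blob"},
        {"path": "api/Lock.json", "type": "blob"},
        {"path": "css", "type": "tree"},
        {"path": "css/properties/color.json", "type": "blob"},
    ],
    "truncated": False,
}
print(api_names_from_tree(sample))  # ['Lock', 'LockManager']
print(bcd_url("Lock"))
```

The tree API response also carries a "truncated" flag, which is worth checking before trusting the list to be complete.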

Here's the finished tool - source code here:

95% of the code was written by LLMs, but I did a whole lot of assembly and iterating to get it to the finished state. Three of the transcripts for that:

- Web Locks API Browser Support Timeline in which I paste in the original API JSON and ask it to come up with a timeline visualization for it.

- Enhancing API Feature Display with URL Hash where I dumped in a more complex JSON example to get it to show multiple APIs on the same page, and also had it add #fragment bookmarking to the tool

- Fetch GitHub API Data Hierarchy where I got it to write me an async JavaScript function for fetching a directory listing from that tree API.

Nous Hermes 3. The Nous Hermes family of fine-tuned models has a solid reputation. Their most recent release came out in August, based on Meta's Llama 3.1:

Our training data aggressively encourages the model to follow the system and instruction prompts exactly and in an adaptive manner. Hermes 3 was created by fine-tuning Llama 3.1 8B, 70B and 405B, and training on a dataset of primarily synthetically generated responses. The model boasts comparable and superior performance to Llama 3.1 while unlocking deeper capabilities in reasoning and creativity.

The model weights are on Hugging Face, including GGUF versions of the 70B and 8B models. Here's how to try the 8B model (a 4.58GB download) using the llm-gguf plugin:

llm install llm-gguf

llm gguf download-model 'https://huggingface.co/NousResearch/Hermes-3-Llama-3.1-8B-GGUF/resolve/main/Hermes-3-Llama-3.1-8B.Q4_K_M.gguf' -a Hermes-3-Llama-3.1-8B

llm -m Hermes-3-Llama-3.1-8B 'hello in spanish'

Nous Research partnered with Lambda Labs to provide inference APIs. It turns out Lambda host quite a few models now, currently providing free inference to users with an API key.

I just released the first alpha of a llm-lambda-labs plugin. You can use that to try the larger 405b model (very hard to run on a consumer device) like this:

llm install llm-lambda-labs

llm keys set lambdalabs

# Paste key here

llm -m lambdalabs/hermes3-405b 'short poem about a pelican with a twist'

Here's the source code for the new plugin, which I based on llm-mistral. The plugin uses httpx-sse to consume the stream of tokens from the API.
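To show what consuming that stream involves, here's a standard-library sketch of server-sent event parsing. It is not the plugin's code, which leaves this work to httpx-sse. The chunk shape (choices[0].delta.content) and the [DONE] sentinel are assumptions based on the common OpenAI-style streaming format, and the sample lines are invented.

```python
import json
from typing import Iterable, Iterator


def iter_sse_data(lines: Iterable[str]) -> Iterator[str]:
    """Yield the data payload of each complete server-sent event."""
    buffer = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            value = line[len("data:"):]
            # A single leading space after the colon is not part of the value
            buffer.append(value[1:] if value.startswith(" ") else value)
        elif line == "" and buffer:
            # A blank line ends the event; multi-line data is joined with newlines
            yield "\n".join(buffer)
            buffer = []
        # Comment lines (starting with ":") and other fields are ignored here


def iter_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Extract text fragments, assuming OpenAI-style JSON chunks."""
    for data in iter_sse_data(lines):
        if data == "[DONE]":  # assumed end-of-stream sentinel
            break
        chunk = json.loads(data)
        content = chunk["choices"][0]["delta"].get("content")
        if content:
            yield content


# Invented sample stream
stream = [
    'data: {"choices": [{"delta": {"content": "Peli"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "can"}}]}',
    "",
    "data: [DONE]",
    "",
]
print("".join(iter_tokens(stream)))  # Pelican
```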

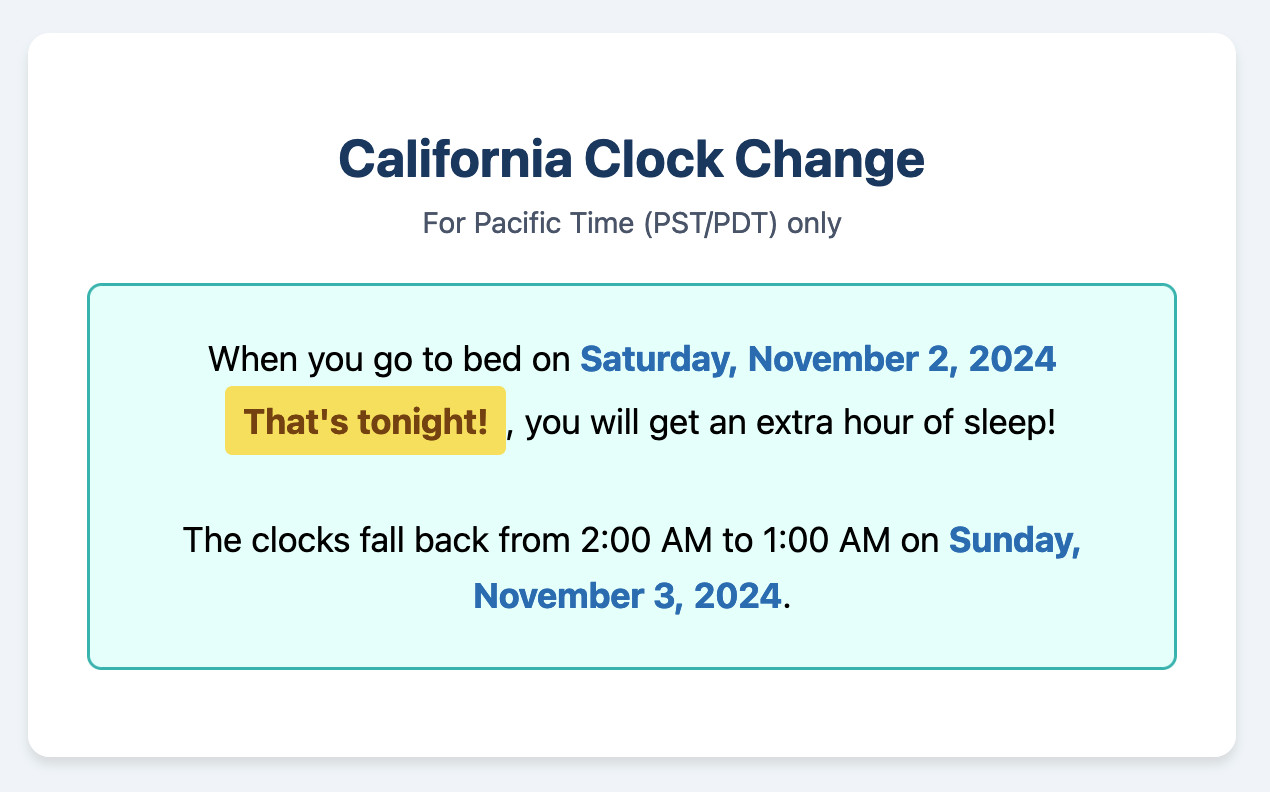

California Clock Change. The clocks go back in California tonight and I finally built my dream application for helping me remember if I get an hour extra of sleep or not, using a Claude Artifact. Here's the transcript.

This is one of my favorite examples yet of the kind of tiny low stakes utilities I'm building with Claude Artifacts because the friction involved in churning out a working application has dropped almost to zero.

(I added another feature: it now includes a note of what time my Dog thinks it is if the clocks have recently changed.)

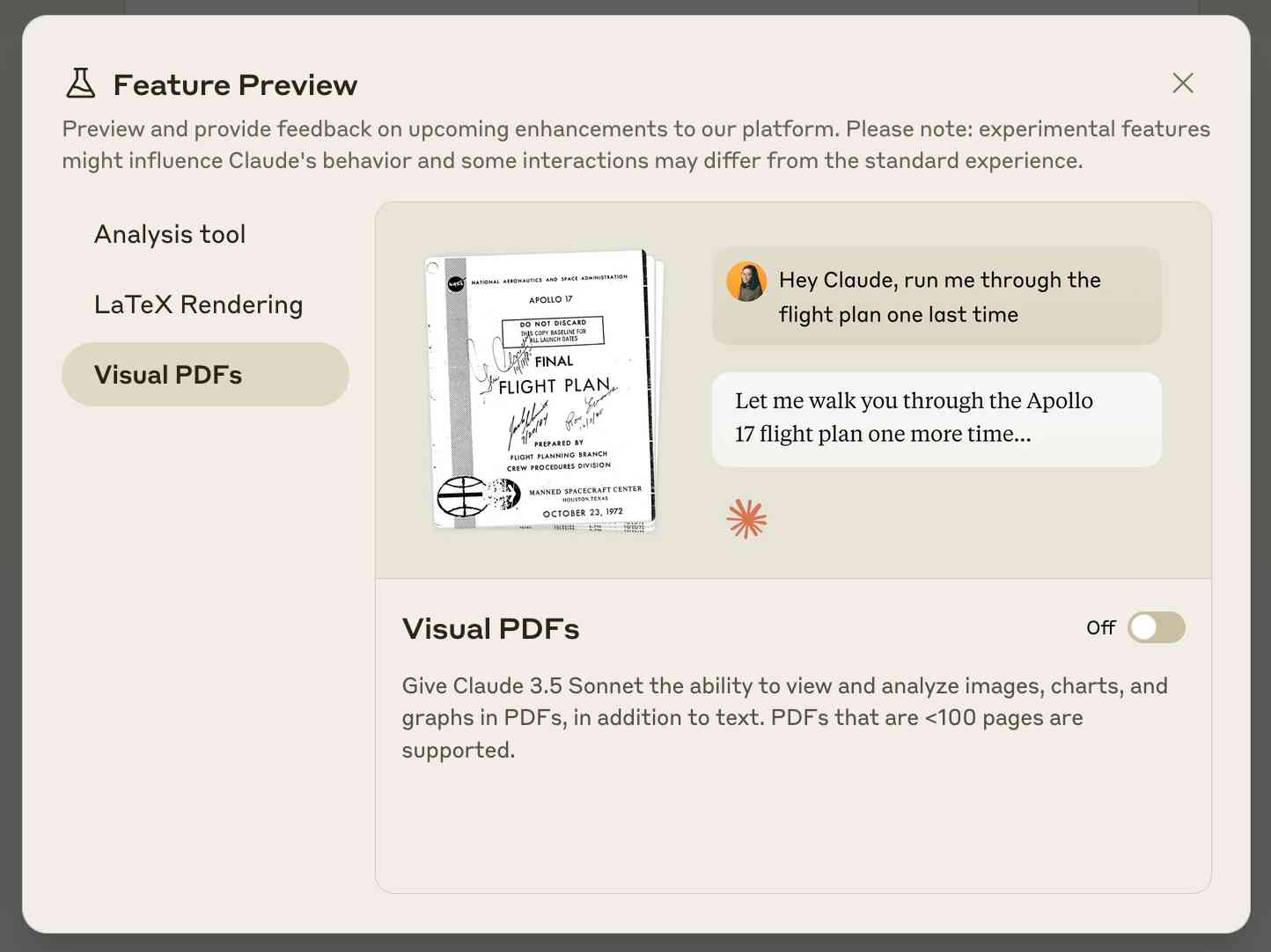

Claude API: PDF support (beta). Claude 3.5 Sonnet now accepts PDFs as attachments:

The new Claude 3.5 Sonnet (claude-3-5-sonnet-20241022) model now supports PDF input and understands both text and visual content within documents.

I just released llm-claude-3 0.7 with support for the new attachment type (attachments are a very new feature), so now you can do this:

llm install llm-claude-3 --upgrade

llm -m claude-3.5-sonnet 'extract text' -a mydoc.pdf

Visual PDF analysis can also be turned on for the Claude.ai application:

Also new today: Claude now offers a free (albeit rate-limited) token counting API. This addresses a complaint I've had for a while: previously it wasn't possible to accurately estimate the cost of a prompt before sending it to be executed.

Running prompts against images and PDFs with Google Gemini.

New TIL. I've been experimenting with the Google Gemini APIs for running prompts against images and PDFs (in preparation for finally adding multi-modal support to LLM) - here are my notes on how to send images or PDF files to their API using curl and the base64 -i macOS command.

I figured out the curl incantation first and then got Claude to build me a Bash script that I can execute like this:

prompt-gemini 'extract text' example-handwriting.jpg

Playing with this is really fun. The Gemini models charge less than 1/10th of a cent per image, so it's really inexpensive to try them out.
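For reference, here's roughly what the request body looks like when built in Python instead of with curl and base64. The inline_data, mime_type and data field names reflect my understanding of the Gemini REST format rather than anything copied from the TIL, so treat them as assumptions. build_gemini_payload() is a hypothetical helper and the image bytes are fake.

```python
import base64
import json
import mimetypes


def build_gemini_payload(prompt: str, filename: str, data: bytes) -> dict:
    """Build a JSON body pairing a text prompt with one base64-encoded file.

    Field names are assumed from the public Gemini REST format.
    """
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None:
        raise ValueError(f"cannot guess MIME type for {filename}")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # JSON cannot carry raw bytes, hence base64
                            "data": base64.b64encode(data).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


fake_image = b"\xff\xd8not-a-real-jpeg"  # placeholder bytes, not a valid image
payload = build_gemini_payload("extract text", "example-handwriting.jpg", fake_image)
print(json.dumps(payload, indent=2))
```

This only builds the body. Sending it still needs an API key and an HTTP POST to the model's endpoint, which is the part the curl command handles.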

files-to-prompt 0.4. New release of my files-to-prompt tool adding an option for filtering just for files with a specific extension.

The following command will output Claude XML-style markup for all Python and Markdown files in the current directory, and copy that to the macOS clipboard ready to be pasted into an LLM:

files-to-prompt . -e py -e md -c | pbcopy
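Here's a sketch of what that combination of flags does conceptually: keep only files with matching extensions, then wrap each one in XML-style tags. This is not the tool's implementation. The tag layout below is my approximation of the Claude-style document format and to_prompt_xml() is a hypothetical function, so check the tool's real output before depending on the details.

```python
from typing import Iterable, List, Tuple


def to_prompt_xml(files: Iterable[Tuple[str, str]], extensions: List[str]) -> str:
    """Wrap (path, text) pairs with matching extensions in XML-style markup.

    The tag names are an assumed approximation, not the tool's guaranteed output.
    """
    suffixes = tuple("." + ext.lstrip(".") for ext in extensions)
    kept = [(path, text) for path, text in files if path.endswith(suffixes)]
    lines = ["<documents>"]
    for index, (path, text) in enumerate(kept, start=1):
        lines += [
            f'<document index="{index}">',
            f"<source>{path}</source>",
            "<document_content>",
            text,
            "</document_content>",
            "</document>",
        ]
    lines.append("</documents>")
    return "\n".join(lines)


# Invented sample files
sample_files = [
    ("app.py", "print('hello')"),
    ("notes.txt", "skip me"),
    ("README.md", "# Demo"),
]
print(to_prompt_xml(sample_files, ["py", "md"]))
```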