Quotations

Filters: Sorted by date

A lot of people say AI will make us all "managers" or "editors"...but I think this is a dangerously incomplete view!

Personally, I'm trying to code like a surgeon.

A surgeon isn't a manager, they do the actual work! But their skills and time are highly leveraged with a support team that handles prep, secondary tasks, admin. The surgeon focuses on the important stuff they are uniquely good at. [...]

It turns out there are a LOT of secondary tasks which AI agents are now good enough to help out with. Some things I'm finding useful to hand off these days:

- Before attempting a big task, write a guide to relevant areas of the codebase

- Spike out an attempt at a big change. Often I won't use the result but I'll review it as a sketch of where to go

- Fix typescript errors or bugs which have a clear specification

- Write documentation about what I'm building

I often find it useful to run these secondary tasks async in the background -- while I'm eating lunch, or even literally overnight!

When I sit down for a work session, I want to feel like a surgeon walking into a prepped operating room. Everything is ready for me to do what I'm good at.

— Geoffrey Litt, channeling The Mythical Man-Month

For resiliency, the DNS Enactor operates redundantly and fully independently in three different Availability Zones (AZs). [...] When the second Enactor (applying the newest plan) completed its endpoint updates, it then invoked the plan clean-up process, which identifies plans that are significantly older than the one it just applied and deletes them. At the same time that this clean-up process was invoked, the first Enactor (which had been unusually delayed) applied its much older plan to the regional DDB endpoint, overwriting the newer plan. [...] The second Enactor's clean-up process then deleted this older plan because it was many generations older than the plan it had just applied. As this plan was deleted, all IP addresses for the regional endpoint were immediately removed.

— AWS, Amazon DynamoDB Service Disruption in Northern Virginia (US-EAST-1) Region (14.5 hours long!)

Since getting a modem at the start of the month, and hooking up to the Internet, I’ve spent about an hour every evening actually online (which I guess is costing me about £1 a night), and much of the days and early evenings fiddling about with things. It’s so complicated. All the hype never mentioned that. I guess journalists just have it all set up for them so they don’t have to worry too much about that side of things. It’s been a nightmare, but an enjoyable one, and in the end, satisfying.

— Phil Gyford, Diary entry, Friday February 17th 1995 1.50 am

Prompt injection might be unsolvable in today’s LLMs. LLMs process token sequences, but no mechanism exists to mark token privileges. Every solution proposed introduces new injection vectors: Delimiter? Attackers include delimiters. Instruction hierarchy? Attackers claim priority. Separate models? Double the attack surface. Security requires boundaries, but LLMs dissolve boundaries. [...]

Poisoned states generate poisoned outputs, which poison future states. Try to summarize the conversation history? The summary includes the injection. Clear the cache to remove the poison? Lose all context. Keep the cache for continuity? Keep the contamination. Stateful systems can’t forget attacks, and so memory becomes a liability. Adversaries can craft inputs that corrupt future outputs.

— Bruce Schneier and Barath Raghavan, Agentic AI’s OODA Loop Problem

Using UUIDv7 is generally discouraged for security when the primary key is exposed to end users in external-facing applications or APIs. The main issue is that UUIDv7 incorporates a 48-bit Unix timestamp as its most significant part, meaning the identifier itself leaks the record's creation time.

This leakage is primarily a privacy concern. Attackers can use the timing data as metadata for de-anonymization or account correlation, potentially revealing activity patterns or growth rates within an organization.

— Alexander Fridriksson and Jay Miller, Exploring PostgreSQL 18's new UUIDv7 support

Skills actually came out of a prototype I built demonstrating that Claude Code is a general-purpose agent :-)

It was a natural conclusion once we realized that bash + filesystem were all we needed

— Barry Zhang, Anthropic

Pro se litigants [people representing themselves in court without a lawyer] account for the majority of the cases in the United States where a party submitted a court filing containing AI hallucinations. In a country where legal representation is unaffordable for most people, it is no wonder that pro se litigants are depending on free or low-cost AI tools. But it is a scandal that so many have been betrayed by them, to the detriment of the cases they are litigating all on their own.

— Riana Pfefferkorn, analyzing the AI Hallucination Cases database for CIS at Stanford Law

While Sonnet 4.5 remains the default [in Claude Code], Haiku 4.5 now powers the Explore subagent which can rapidly gather context on your codebase to build apps even faster.

You can select Haiku 4.5 to be your default model in /model. When selected, you’ll automatically use Sonnet 4.5 in Plan mode and Haiku 4.5 for execution for smarter plans and faster results.

— Catherine Wu, Claude Code PM, Anthropic

Previous system cards have reported results on an expanded version of our earlier agentic misalignment evaluation suite: three families of exotic scenarios meant to elicit the model to commit blackmail, attempt a murder, and frame someone for financial crimes. We choose not to report full results here because, similarly to Claude Sonnet 4.5, Claude Haiku 4.5 showed many clear examples of verbalized evaluation awareness on all three of the scenarios tested in this suite. Since the suite only consisted of many similar variants of three core scenarios, we expect that the model maintained high unverbalized awareness across the board, and we do not trust it to be representative of behavior in the real extreme situations the suite is meant to emulate.

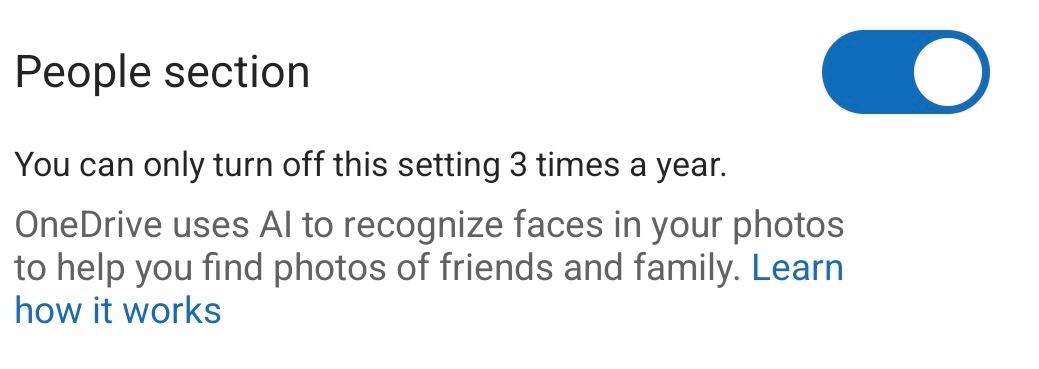

Slashdot: What's the reason OneDrive tells users this setting can only be turned off 3 times a year? (And are those any three times — or does that mean three specific days, like Christmas, New Year's Day, etc.)

[Microsoft's publicist chose not to answer this question.]

— Slashdot, asking the obvious question

I get a feeling that working with multiple AI agents is something that comes VERY natural to most senior+ engineers or tech lead who worked at a large company

You already got used to overseeing parallel work (the goto code reviewer!) + making progress with small chunks of work... because your day has been a series of nonstop interactions, so you had to figure out how to do deep work in small chunks that could have been interrupted

The cognitive debt of LLM-laden coding extends beyond disengagement of our craft. We’ve all heard the stories. Hyped up, vibed up, slop-jockeys with attention spans shorter than the framework-hopping JavaScript devs of the early 2010s, sling their sludge in pull requests and design docs, discouraging collaboration and disrupting teams. Code reviewing coworkers are rapidly losing their minds as they come to the crushing realization that they are now the first layer of quality control instead of one of the last. Asked to review; forced to pick apart. Calling out freshly added functions that are never called, hallucinated library additions, and obvious runtime or compilation errors. All while the author—who clearly only skimmed their “own” code—is taking no responsibility, going “whoopsie, Claude wrote that. Silly AI, ha-ha.”

— Simon Højberg, The Programmer Identity Crisis

For quite some I wanted to write a small static image gallery so I can share my pictures with friends and family. Of course there are a gazillion tools like this, but, well, sometimes I just want to roll my own. [...]

I used the old, well tested technique I call brain coding, where you start with an empty vim buffer and type some code (Perl, HTML, CSS) until you're happy with the result. It helps to think a bit (aka use your brain) during this process.

— Thomas Klausner, coining "brain coding"

I believed that giving users such a simple way to navigate the internet would unlock creativity and collaboration on a global scale. If you could put anything on it, then after a while, it would have everything on it.

But for the web to have everything on it, everyone had to be able to use it, and want to do so. This was already asking a lot. I couldn’t also ask that they pay for each search or upload they made. In order to succeed, therefore, it would have to be free. That’s why, in 1993, I convinced my Cern managers to donate the intellectual property of the world wide web, putting it into the public domain. We gave the web away to everyone.

— Tim Berners-Lee, Why I gave the world wide web away for free

When attention is being appropriated, producers need to weigh the costs and benefits of the transaction. To assess whether the appropriation of attention is net-positive, it’s useful to distinguish between extractive and non-extractive contributions. Extractive contributions are those where the marginal cost of reviewing and merging that contribution is greater than the marginal benefit to the project’s producers. In the case of a code contribution, it might be a pull request that’s too complex or unwieldy to review, given the potential upside

— Nadia Eghbal, Working in Public, via the draft LLVM AI tools policy

Given a week or two to try out ideas and search the literature, I’m pretty sure that Freek and I could’ve solved this problem ourselves. Instead, though, I simply asked GPT5-Thinking. After five minutes, it gave me something confident, plausible-looking, and (I could tell) wrong. But rather than laughing at the silly AI like a skeptic might do, I told GPT5 how I knew it was wrong. It thought some more, apologized, and tried again, and gave me something better. So it went for a few iterations, much like interacting with a grad student or colleague. [...]

Now, in September 2025, I’m here to tell you that AI has finally come for what my experience tells me is the most quintessentially human of all human intellectual activities: namely, proving oracle separations between quantum complexity classes. Right now, it almost certainly can’t write the whole research paper (at least if you want it to be correct and good), but it can help you get unstuck if you otherwise know what you’re doing, which you might call a sweet spot.

— Scott Aaronson, UT Austin Quantum Information Center

We’ve seen the strong reactions to 4o responses and want to explain what is happening.

We’ve started testing a new safety routing system in ChatGPT.

As we previously mentioned, when conversations touch on sensitive and emotional topics the system may switch mid-chat to a reasoning model or GPT-5 designed to handle these contexts with extra care. This is similar to how we route conversations that require extra thinking to our reasoning models; our goal is to always deliver answers aligned with our Model Spec.

Routing happens on a per-message basis; switching from the default model happens on a temporary basis. ChatGPT will tell you which model is active when asked.

— Nick Turley, Head of ChatGPT, OpenAI

Conceptually, Mastodon is a bunch of copies of the same webapp emailing each other. There is no realtime global aggregation across the network so it can only offer a fragmented user experience. While some people might like it, it can't directly compete with closed social products because it doesn't have a full view of the network like they do.

The goal of atproto is enable real competition with closed social products for a broader set of products (e.g. Tangled is like GitHub on atproto, Leaflet is like Medium on atproto, and so on). Because it enables global aggregation, every atproto app has a consistent state of the world. There's no notion of "being on a different instance" and only seeing half the replies, or half the like counts, or other fragmentation artifacts as you have in Mastodon.

I don't think they're really comparable in scope, ambition, or performance characteristics.

— Dan Abramov, Hacker News comment discussing his Open Social article

[2 points] Learn basic NumPy operations with an AI tutor! Use an AI chatbot (e.g., ChatGPT, Claude, Gemini, or Stanford AI Playground) to teach yourself how to do basic vector and matrix operations in NumPy (import numpy as np). AI tutors have become exceptionally good at creating interactive tutorials, and this year in CS221, we're testing how they can help you learn fundamentals more interactively than traditional static exercises.

— Stanford CS221 Autumn 2025, Problem 1: Linear Algebra

We define workslop as AI generated work content that masquerades as good work, but lacks the substance to meaningfully advance a given task.

Here’s how this happens. As AI tools become more accessible, workers are increasingly able to quickly produce polished output: well-formatted slides, long, structured reports, seemingly articulate summaries of academic papers by non-experts, and usable code. But while some employees are using this ability to polish good work, others use it to create content that is actually unhelpful, incomplete, or missing crucial context about the project at hand. The insidious effect of workslop is that it shifts the burden of the work downstream, requiring the receiver to interpret, correct, or redo the work. In other words, it transfers the effort from creator to receiver.

— Kate Niederhoffer, Gabriella Rosen Kellerman, Angela Lee, Alex Liebscher, Kristina Rapuano and Jeffrey T. Hancock, Harvard Business Review

Well, the types of computers we have today are tools. They’re responders: you ask a computer to do something and it will do it. The next stage is going to be computers as “agents.” In other words, it will be as if there’s a little person inside that box who starts to anticipate what you want. Rather than help you, it will start to guide you through large amounts of information. It will almost be like you have a little friend inside that box. I think the computer as an agent will start to mature in the late '80s, early '90s.

— Steve Jobs, 1984 interview with Access Magazine (via)

I thought I had an verbal agreement with them, that “Varnish Cache” was the FOSS project and “Varnish Software” was the commercial entitity, but the current position of Varnish Software’s IP-lawyers is that nobody can use “Varnish Cache” in any context, without their explicit permission. [...]

We have tried to negotiatiate with Varnish Software for many months about this issue, but their IP-Lawyers still insist that Varnish Software owns the Varnish Cache name, and at most we have being offered a strictly limited, subject to their veto, permission for the FOSS project to use the “Varnish Cache” name.

We cannot live with that: We are independent FOSS project with our own name.

So we will change the name of the project.

The new association and the new project will be named “The Vinyl Cache Project”, and this release 8.0.0, will be the last under the “Varnish Cache” name.

— Poul-Henning Kamp, Varnish 8.0.0 release notes

The trick with Claude Code is to give it large, but not too large, extremely well defined problems.

(If the problems are too large then you are now vibe coding… which (a) frequently goes wrong, and (b) is a one-way street: once vibes enter your app, you end up with tangled, write-only code which functions perfectly but can no longer be edited by humans. Great for prototyping, bad for foundations.)

— Matt Webb, What I think about when I think about Claude Code

In Python 3.14, I have implemented several changes to fix thread safety of

asyncioand enable it to scale effectively on the free-threaded build of CPython. It is now implemented using lock-free data structures and per-thread state, allowing for highly efficient task management and execution across multiple threads. In the general case of multiple event loops running in parallel, there is no lock contention and performance scales linearly with the number of threads. [...]For a deeper dive into the implementation, check out the internal docs for asyncio.

— Kumar Aditya, Scaling asyncio on Free-Threaded Python

There has never been a successful, widespread malware attack against iPhone. The only system-level iOS attacks we observe in the wild come from mercenary spyware, which is vastly more complex than regular cybercriminal activity and consumer malware. Mercenary spyware is historically associated with state actors and uses exploit chains that cost millions of dollars to target a very small number of specific individuals and their devices. [...] Known mercenary spyware chains used against iOS share a common denominator with those targeting Windows and Android: they exploit memory safety vulnerabilities, which are interchangeable, powerful, and exist throughout the industry.

— Apple Security Engineering and Architecture, introducing Memory Integrity Enforcement for iPhone 17

Having worked inside AWS I can tell you one big reason [that they don't describe their internals] is the attitude/fear that anything we put in out public docs may end up getting relied on by customers. If customers rely on the implementation to work in a specific way, then changing that detail requires a LOT more work to prevent breaking customer's workloads. If it is even possible at that point.

— TheSoftwareGuy, comment on Hacker News

I recently spoke with the CTO of a popular AI note-taking app who told me something surprising: they spend twice as much on vector search as they do on OpenAI API calls. Think about that for a second. Running the retrieval layer costs them more than paying for the LLM itself.

— James Luan, Engineering architect of Milvus

I agree with the intellectual substance of virtually every common critique of AI. And it's very clear that turning those critiques into a competition about who can frame them in the most scathing way online has done zero to slow down adoption, even if much of that is due to default bundling.

At what point are folks going to try literally any other tactic than condescending rants? Does it matter that LLM apps are at the top of virtually every app store nearly every day because individual people are choosing to download them, and the criticism hasn't been effective in slowing that?

I am once again shocked at how much better image retrieval performance you can get if you embed highly opinionated summaries of an image, a summary that came out of a visual language model, than using CLIP embeddings themselves. If you tell the LLM that the summary is going to be embedded and used to do search downstream. I had one system go from 28% recall at 5 using CLIP to 75% recall at 5 using an LLM summary.

RDF has the same problems as the SQL schemas with information scattered. What fields mean requires documentation.

There - they have a name on a person. What name? Given? Legal? Chosen? Preferred for this use case?

You only have one ID for Apple eh? Companies are complex to model, do you mean Apple just as someone would talk about it? The legal structure of entities that underpins all major companies, what part of it is referred to?

I spent a long time building identifiers for universities and companies (which was taken for ROR later) and it was a nightmare to say what a university even was. What’s the name of Cambridge? It’s not “Cambridge University” or “The university of Cambridge” legally. But it also is the actual name as people use it. [It's The Chancellor, Masters, and Scholars of the University of Cambridge]

The university of Paris went from something like 13 institutes to maybe one to then a bunch more. Are companies locations at their headquarters? Which headquarters?

Someone will suggest modelling to solve this but here lies the biggest problem:

The correct modelling depends on the questions you want to answer.

— IanCal, on Hacker News, discussing RDF