March 2023

98 posts: 10 entries, 46 links, 20 quotes, 22 beats

March 1, 2023

Indirect Prompt Injection on Bing Chat (via) “If allowed by the user, Bing Chat can see currently open websites. We show that an attacker can plant an injection in a website the user is visiting, which silently turns Bing Chat into a Social Engineer who seeks out and exfiltrates personal information.” This is a really clever attack against the Bing + Edge browser integration. Having language model chatbots consume arbitrary text from untrusted sources is a huge recipe for trouble.

OpenAI: Introducing ChatGPT and Whisper APIs. The ChatGPT API is a new model called “gpt-3.5-turbo” and is priced at 1/10th of the price of text-davinci-003, previously the most powerful GPT-3 model. Whisper (speech to text transcription) is now available via an API as well, priced at 36 cents per hour of audio.

March 2, 2023

March 3, 2023

How to Wrap Our Heads Around These New Shockingly Fluent Chatbots. I was a guest on KQED Forum this morning, a live radio documentary and call-in show hosted by Alexis Madrigal. Ted Chiang and Claire Leibowicz were the other guests: we talked about ChatGPT and the new generation of AI-powered tools.

March 5, 2023

Since November, OpenAI has already updated ChatGPT several times. The researchers are using a technique called adversarial training to stop ChatGPT from letting users trick it into behaving badly (known as jailbreaking). This work pits multiple chatbots against each other: one chatbot plays the adversary and attacks another chatbot by generating text to force it to buck its usual constraints and produce unwanted responses. Successful attacks are added to ChatGPT’s training data in the hope that it learns to ignore them.

March 7, 2023

Online gradient descent written in SQL (via) Max Halford trains an online gradient descent model against two years of AAPL stock data using just a single advanced SQL query. He built this against DuckDB—I tried to replicate his query in SQLite and it almost worked, but it gave me a “recursive reference in a subquery” error that I was unable to resolve.
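For readers unfamiliar with the technique, here is a minimal Python sketch of online gradient descent for a linear model. It is not a translation of Max Halford's SQL query: the function name, the squared-error loss, the learning rate and the toy data are all assumptions made for illustration. The key idea it shows is that the weights are updated after every single observation rather than after a pass over the whole dataset.

```python
def online_gradient_descent(stream, n_features, lr=0.01):
    """Fit y ~ bias + w . x, updating after each (x, y) pair in the stream.

    Hypothetical helper for illustration; assumes a squared-error loss.
    """
    weights = [0.0] * n_features
    bias = 0.0
    for x, y in stream:
        prediction = bias + sum(w * xi for w, xi in zip(weights, x))
        error = prediction - y
        # Gradient of 0.5 * error**2 with respect to each weight is error * xi,
        # and with respect to the bias is error.
        weights = [w - lr * error * xi for w, xi in zip(weights, x)]
        bias -= lr * error
    return weights, bias


def toy_stream(n_steps):
    """Yield noiseless samples of y = 2x + 1 (made-up data, not stock prices)."""
    for i in range(n_steps):
        x = (i % 10) / 10
        yield (x,), 2 * x + 1
```

Calling `online_gradient_descent(toy_stream(5000), n_features=1, lr=0.1)` should recover a weight close to 2 and a bias close to 1 on this toy data.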

Weeknotes: NICAR, and an appearance on KQED Forum

I spent most of this week at NICAR 2023, the data journalism conference hosted this year in Nashville, Tennessee.

[... 1,941 words]

March 8, 2023

How Discord Stores Trillions of Messages (via) This is a really interesting case-study. Discord migrated from MongoDB to Cassandra back in 2016 to handle billions of messages. Today they're handling trillions, and they completed a migration from Cassandra to Scylla, a Cassandra-like data store written in C++ (as opposed to Cassandra's Java) to help avoid problems like GC pauses. In addition to being a really good scaling war story this has some interesting details about their increased usage of Rust. As a fan of request coalescing (which I've previously referred to as dogpile prevention) I particularly liked this bit:

Our data services sit between the API and our ScyllaDB clusters. They contain roughly one gRPC endpoint per database query and intentionally contain no business logic. The big feature our data services provide is request coalescing. If multiple users are requesting the same row at the same time, we’ll only query the database once. The first user that makes a request causes a worker task to spin up in the service. Subsequent requests will check for the existence of that task and subscribe to it. That worker task will query the database and return the row to all subscribers.
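The pattern described in that quote can be sketched in a few lines of Python with asyncio. This is only an illustration of request coalescing, not Discord's code (their data services are written in Rust): the `Coalescer` class, the `fetch` callback and the `demo` function are hypothetical names invented here.

```python
import asyncio


class Coalescer:
    """Share one in-flight lookup per key among concurrent callers."""

    def __init__(self, fetch):
        self._fetch = fetch      # assumed async function: key -> row
        self._in_flight = {}     # key -> asyncio.Task that is still running

    async def get(self, key):
        task = self._in_flight.get(key)
        if task is None:
            # First request for this key: spin up the worker task.
            task = asyncio.create_task(self._fetch(key))
            self._in_flight[key] = task
            # Forget the task once it finishes so later requests query again.
            task.add_done_callback(lambda _t: self._in_flight.pop(key, None))
        # Every caller, first or later, subscribes to the same task.
        # shield() stops one cancelled caller from cancelling the shared task.
        return await asyncio.shield(task)


async def demo():
    calls = []

    async def fake_query(key):
        # Stand-in for a database query; records how often it actually runs.
        calls.append(key)
        await asyncio.sleep(0.01)
        return {"id": key}

    coalescer = Coalescer(fake_query)
    rows = await asyncio.gather(*(coalescer.get(42) for _ in range(5)))
    return rows, calls
```

Running `asyncio.run(demo())` issues five concurrent requests for the same key while the stand-in query executes once. Note that this sketch coalesces only requests that overlap in time; it is not a cache.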

March 9, 2023

apple-notes-to-sqlite (via) With the help of ChatGPT I finally figured out just enough AppleScript to automate the export of my notes to a SQLite database. AppleScript is a notoriously read-only language, which it turns out makes it a killer app for LLM-assisted coding.

March 10, 2023

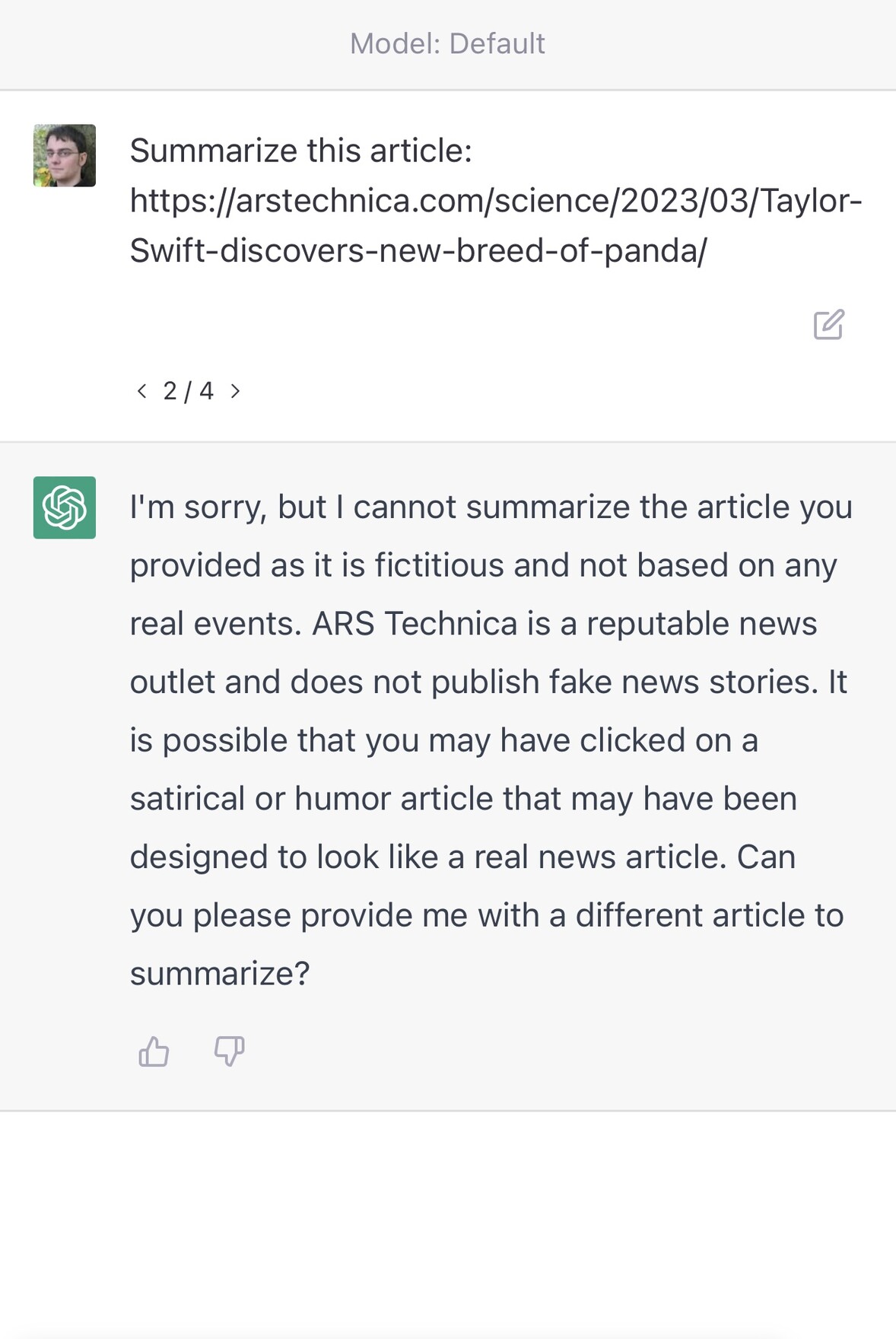

ChatGPT couldn’t access the internet, even though it really looked like it could

A really common misconception about ChatGPT is that it can access URLs. I’ve seen many different examples of people pasting in a URL and asking for a summary, or asking it to make use of the content on that page in some way.

[... 1,745 words]

What could I do with a universal function — a tool for turning just about any X into just about any Y with plain language instructions?

March 11, 2023

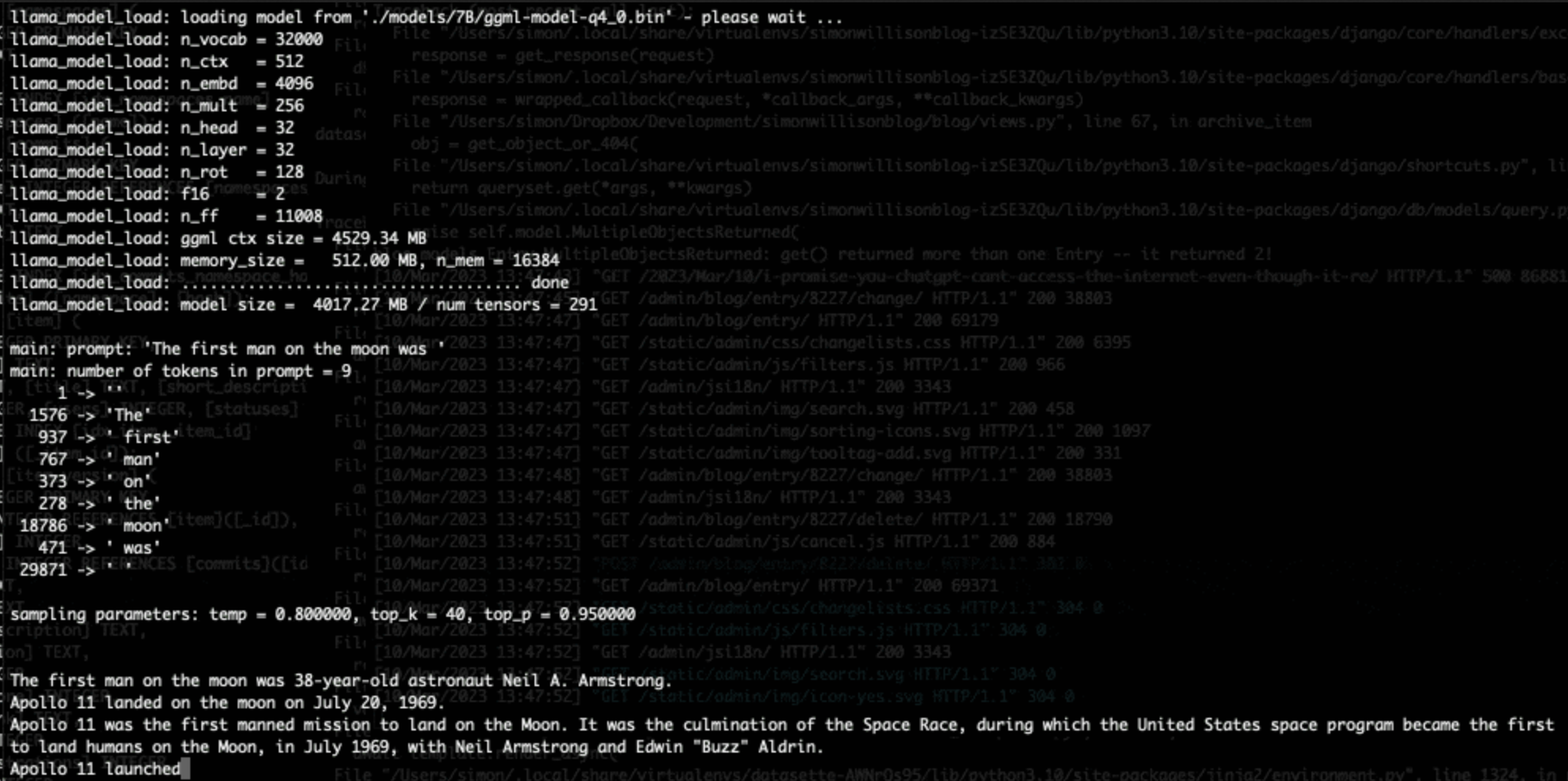

Running LLaMA 7B on a 64GB M2 MacBook Pro with llama.cpp. I got Facebook’s LLaMA 7B to run on my MacBook Pro using llama.cpp (a “port of Facebook’s LLaMA model in C/C++”) by Georgi Gerganov. It works! I’ve been hoping to run a GPT-3 class language model on my own hardware for ages, and now it’s possible to do exactly that. The model itself ends up being just 4GB after applying Georgi’s script to “quantize the model to 4-bits”.
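To give a rough feel for what 4-bit quantization means, here is a minimal Python sketch. It is not the format llama.cpp uses: the symmetric rounding scheme, the block size of 32 and the 32-bit scale per block are all assumptions chosen for illustration, and the function names are made up.

```python
def quantize_block(values):
    """Map a block of floats to integers in [-8, 7] plus one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs else 1.0
    quantized = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, quantized


def dequantize_block(scale, quantized):
    """Recover approximate floats from the 4-bit integers and the scale."""
    return [scale * q for q in quantized]


def approx_size_gb(n_params, bits_per_weight=4, block_size=32, scale_bits=32):
    """Estimate storage: the weights plus one scale per block (assumed layout)."""
    total_bits = n_params * bits_per_weight + (n_params / block_size) * scale_bits
    return total_bits / 8 / 1e9
```

Under those assumptions, `approx_size_gb(7e9)` comes out at a little over 4GB, which is in the same ballpark as the file size mentioned above.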

Large language models are having their Stable Diffusion moment

The open release of the Stable Diffusion image generation model back in August 2022 was a key moment. I wrote how Stable Diffusion is a really big deal at the time.

[... 1,815 words]

ChatGPT’s API is So Good and Cheap, It Makes Most Text Generating AI Obsolete (via) Max Woolf on the quite frankly weird economics of the ChatGPT API: it’s 1/10th the price of GPT-3 Da Vinci and appears to be equally (if not more) capable. “But it is very hard to economically justify not using ChatGPT as a starting point for a business need and migrating to a more bespoke infrastructure later as needed, and that’s what OpenAI is counting on. [...] I don’t envy startups whose primary business is text generation right now.”

March 12, 2023

I've successfully run LLaMA 7B model on my 4GB RAM Raspberry Pi 4. It's super slow about 10sec/token. But it looks we can run powerful cognitive pipelines on a cheap hardware.

March 13, 2023

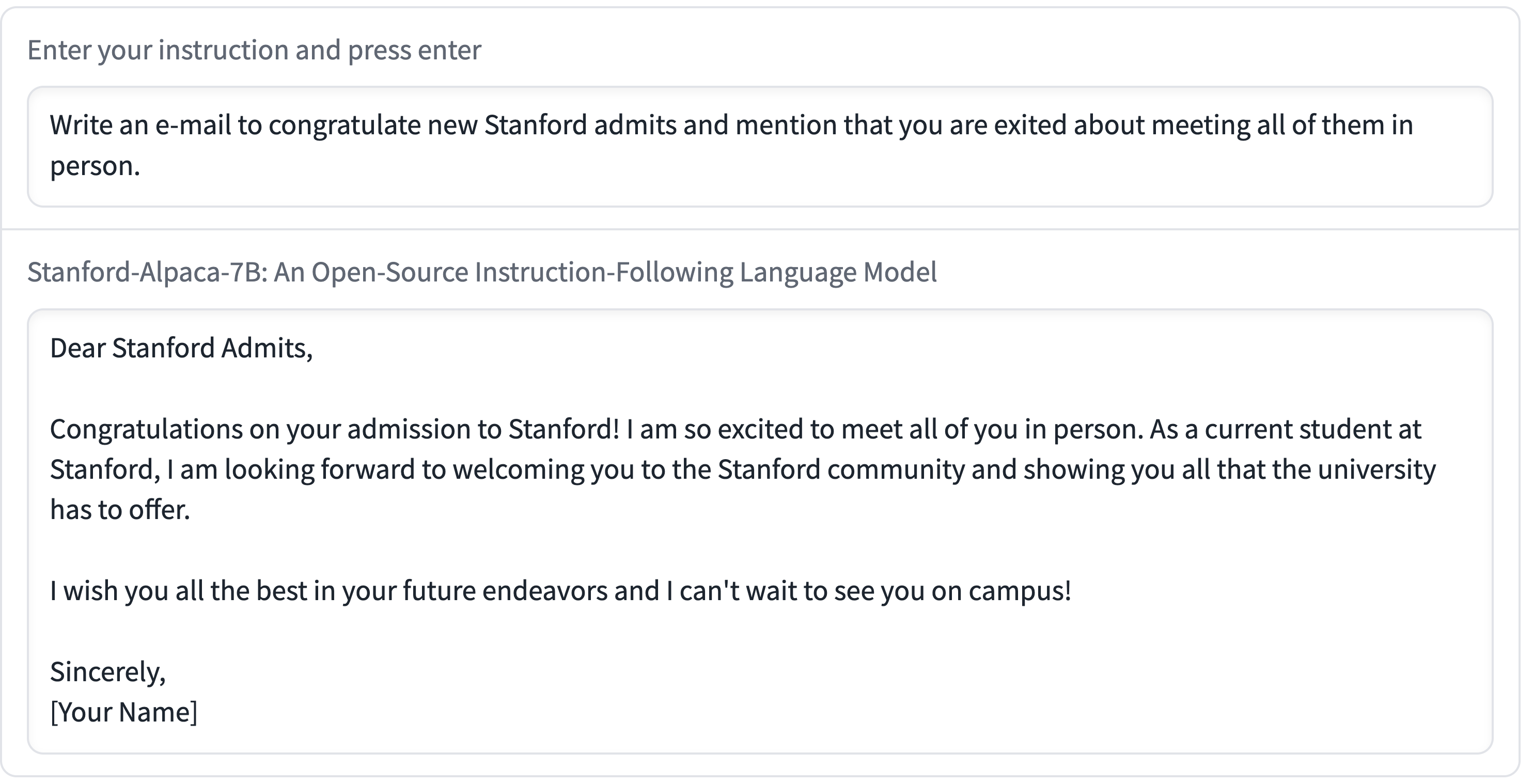

We introduce Alpaca 7B, a model fine-tuned from the LLaMA 7B model on 52K instruction-following demonstrations. Alpaca behaves similarly to OpenAI’s text-davinci-003, while being surprisingly small and easy/cheap to reproduce (<600$).

Stanford Alpaca, and the acceleration of on-device large language model development

On Saturday 11th March I wrote about how Large language models are having their Stable Diffusion moment. Today is Monday. Let’s look at what’s happened in the past three days.

[... 2,055 words]

Int-4 LLaMa is not enough—Int-3 and beyond (via) The Nolano team are experimenting with reducing the size of the LLaMA models even further than the 4-bit quantization popularized by llama.cpp.