91 posts tagged “testing”

2026

First run the tests

Automated tests are no longer optional when working with coding agents.

The old excuses for not writing them - that they're time consuming and expensive to constantly rewrite while a codebase is rapidly evolving - no longer hold when an agent can knock them into shape in just a few minutes.

They're also vital for ensuring AI-generated code does what it claims to do. If the code has never been executed it's pure luck if it actually works when deployed to production. [... 355 words]

Red/green TDD

"Use red/green TDD" is a pleasingly succinct way to get better results out of a coding agent.

TDD stands for Test Driven Development. It's a programming style where you ensure every piece of code you write is accompanied by automated tests that demonstrate the code works.

The most disciplined form of TDD is test-first development. You write the automated tests first, confirm that they fail, then iterate on the implementation until the tests pass. [... 279 words]
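Below is a minimal sketch of the red/green loop. It uses the standard library's unittest, and slugify() is a hypothetical function standing in for the code under test. The tests are written first and fail (red) while slugify() is missing. The implementation below them does just enough to make them pass (green).

```python
import unittest


# Red: write the tests first. Until slugify() exists (or while it is a stub),
# running them fails.
class TestSlugify(unittest.TestCase):
    def test_lowercases_and_hyphenates(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("Red/green TDD!"), "redgreen-tdd")


# Green: write just enough implementation to make the tests pass.
def slugify(text: str) -> str:
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return "-".join(kept.split())
```

Running the suite with python -m unittest before slugify() exists shows the red step. Running it again afterwards shows green.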

Rodney v0.4.0. My Rodney CLI tool for browser automation has attracted quite a flurry of PRs since I announced it last week. Here are the release notes for the just-released v0.4.0:

- Errors now use exit code 2, which means exit code 1 is just for check failures. #15

- New rodney assert command for running JavaScript tests, exit code 1 if they fail. #19

- New directory-scoped sessions with --local/--global flags. #14

- New reload --hard and clear-cache commands. #17

- New rodney start --show option to make the browser window visible. Thanks, Antonio Cuni. #13

- New rodney connect PORT command to debug an already-running Chrome instance. Thanks, Peter Fraenkel. #12

- New RODNEY_HOME environment variable to support custom state directories. Thanks, Senko Rašić. #11

- New --insecure flag to ignore certificate errors. Thanks, Jakub Zgoliński. #10

- Windows support: avoid Setsid on Windows via build-tag helpers. Thanks, adm1neca. #18

- Tests now run on windows-latest and macos-latest in addition to Linux.

I've been using Showboat to create demos of new features. Here are the ones for rodney assert, rodney reload --hard, rodney exit codes, and rodney start --local.

The rodney assert command is pretty neat: you can now use Rodney to test a web app through multiple steps in a shell script that looks something like this (adapted from the README):

```bash
#!/bin/bash
set -euo pipefail

FAIL=0

check() {
  if ! "$@"; then
    echo "FAIL: $*"
    FAIL=1
  fi
}

rodney start
rodney open "https://example.com"
rodney waitstable

# Assert elements exist
check rodney exists "h1"

# Assert key elements are visible
check rodney visible "h1"
check rodney visible "#main-content"

# Assert JS expressions
check rodney assert 'document.title' 'Example Domain'
check rodney assert 'document.querySelectorAll("p").length' '2'

# Assert accessibility requirements
check rodney ax-find --role navigation

rodney stop

if [ "$FAIL" -ne 0 ]; then
  echo "Some checks failed"
  exit 1
fi

echo "All checks passed"
```

Introducing Showboat and Rodney, so agents can demo what they’ve built

A key challenge working with coding agents is having them both test what they’ve built and demonstrate that software to you, their supervisor. This goes beyond automated tests—we need artifacts that show their progress and help us see exactly what the agent-produced software is able to do. I’ve just released two new tools aimed at this problem: Showboat and Rodney.

[... 2,023 words]

Someone asked on Hacker News if I had any tips for getting coding agents to write decent quality tests. Here's what I said:

I work in Python which helps a lot because there are a TON of good examples of pytest tests floating around in the training data, including things like usage of fixture libraries for mocking external HTTP APIs and snapshot testing and other neat patterns.

Or I can say "use pytest-httpx to mock the endpoints" and Claude knows what I mean.

Keeping an eye on the tests is important. The most common anti-pattern I see is large amounts of duplicated test setup code. That isn't a huge deal (I'm much more tolerant of duplicated logic in tests than I am in implementation), but it's still worth pushing back on.

"Refactor those tests to use pytest.mark.parametrize" and "extract the common setup into a pytest fixture" work really well there.

Generally though the best way to get good tests out of a coding agent is to make sure it's working in a project with an existing test suite that uses good patterns. Coding agents pick the existing patterns up without needing any extra prompting at all.

I find that once a project has clean basic tests, the new tests added by the agents tend to match them in quality. It's similar to how working on a large project with a team of other developers works: keeping the code clean means that when people look for examples of how to write a test, they'll be pointed in the right direction.

One last tip I use a lot is this:

Clone datasette/datasette-enrichments from GitHub to /tmp and imitate the testing patterns it uses

I do this all the time with different existing projects I've written - the quickest way to show an agent how you like something to be done is to have it look at an example.

A Software Library with No Code. Provocative experiment from Drew Breunig, who designed a new library for time formatting ("3 hours ago" kind of thing) called "whenwords" that has no code at all, just a carefully written specification, an AGENTS.md and a collection of conformance tests in a YAML file.

Pass that to your coding agent of choice, tell it what language you need and it will write it for you on demand!

This meshes neatly with my recent interest in conformance suites. If you publish good enough language-independent tests, it's pretty astonishing how far today's coding agents can take you!
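Here is a rough sketch of how a language-independent conformance suite can drive an implementation. The case format is hypothetical and is not the whenwords schema. It uses JSON rather than YAML so that it runs with only the standard library. time_ago() stands in for whatever code an agent might generate from the spec.

```python
import json

# Hypothetical language-independent cases; a real suite would publish these
# alongside the spec so any language's implementation can be checked.
CASES_JSON = """
[
  {"seconds": 0, "expected": "just now"},
  {"seconds": 90, "expected": "1 minute ago"},
  {"seconds": 7200, "expected": "2 hours ago"},
  {"seconds": 259200, "expected": "3 days ago"}
]
"""


def time_ago(seconds: int) -> str:
    """Hypothetical implementation of the kind an agent might write from the spec."""
    for unit, size in (("day", 86400), ("hour", 3600), ("minute", 60)):
        if seconds >= size:
            count = seconds // size
            return f"{count} {unit}{'' if count == 1 else 's'} ago"
    return "just now"


def run_conformance(cases_json: str, implementation) -> list[str]:
    """Return a description of every case the implementation gets wrong."""
    failures = []
    for case in json.loads(cases_json):
        actual = implementation(case["seconds"])
        if actual != case["expected"]:
            failures.append(f"{case['seconds']}s: expected {case['expected']!r}, got {actual!r}")
    return failures
```

The same CASES_JSON could be handed unchanged to an implementation in any other language. Only the small runner has to be rewritten.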

2025

But once we got that and got this aviation grade testing in place, the number of bugs just dropped to a trickle. Now we still do have bugs but the aviation grade testing allows us to move fast, which is important because in this business you either move fast or you're disrupted. So, we're able to make major changes to the structure of the code that we deliver and be confident that we're not breaking things because we had these intense tests. Probably half the time we spend is actually writing new tests, we're constantly writing new tests. And over the 17-year history, we have amassed a huge suite of tests which we run constantly.

Other database engines don't do this; don't have this level of testing. But they're still high quality, I mean, I noticed in particular, PostgreSQL is a very high-quality database engine, they don't have many bugs. I went to the PostgreSQL [developers] and asked them “how do you prevent the bugs”? We talked about this for a while. What I came away with was they've got a very elaborate peer review process, and if they've got code that has worked for 10 years they just don't mess with it, leave it alone, it works. Whereas we change our code fearlessly, and we have a much smaller team and we don't have the peer review process.

— D. Richard Hipp, ACM SIGMOD Record, June 2019 (PDF)

TIL: Subtests in pytest 9.0.0+. I spotted an interesting new feature in the release notes for pytest 9.0.0: subtests.

I'm a big user of the pytest.mark.parametrize decorator - see Documentation unit tests from 2018 - so I thought it would be interesting to try out subtests and see if they're a useful alternative.

Short version: this parameterized test:

```python
@pytest.mark.parametrize("setting", app.SETTINGS)
def test_settings_are_documented(settings_headings, setting):
    assert setting.name in settings_headings
```

Becomes this using subtests instead:

```python
def test_settings_are_documented(settings_headings, subtests):
    for setting in app.SETTINGS:
        with subtests.test(setting=setting.name):
            assert setting.name in settings_headings
```

Why is this better? Two reasons:

- It appears to run a bit faster

- Subtests can be created programmatically after running some setup code first

I had Claude Code port several tests to the new pattern. I like it.

Vibing a Non-Trivial Ghostty Feature (via) Mitchell Hashimoto provides a comprehensive answer to the frequent demand for a detailed description of shipping a non-trivial production feature to an existing project using AI assistance. In this case it's a slick unobtrusive auto-update UI for his Ghostty terminal emulator, written in Swift.

Mitchell shares full transcripts of the 16 coding sessions he carried out using Amp Code across 2 days and around 8 hours of computer time, at a token cost of $15.98.

Amp has the nicest shared transcript feature of any of the coding agent tools, as seen in this example. I'd love to see Claude Code and Codex CLI and Gemini CLI and friends imitate this.

There are plenty of useful tips in here. I like this note about the importance of a cleanup step:

The cleanup step is really important. To cleanup effectively you have to have a pretty good understanding of the code, so this forces me to not blindly accept AI-written code. Subsequently, better organized and documented code helps future agentic sessions perform better.

I sometimes tongue-in-cheek refer to this as the "anti-slop session".

And this on how you can sometimes write manual code in a way that puts the agent on the right track:

I spent some time manually restructuring the view model. This involved switching to a tagged union rather than the struct with a bunch of optionals. I renamed some types, moved stuff around.

I knew from experience that this small bit of manual work in the middle would set the agents up for success in future sessions for both the frontend and backend. After completing it, I continued with a marathon set of cleanup sessions.

Here's one of those refactoring prompts:

Turn each @macos/Sources/Features/Update/UpdatePopoverView.swift case into a dedicated fileprivate Swift view that takes the typed value as its parameter so that we can remove the guards.

Mitchell advises ending every session with a prompt like this one, asking the agent about any obvious omissions:

Are there any other improvements you can see to be made with the @macos/Sources/Features/Update feature? Don't write any code. Consult the oracle. Consider parts of the code that can also get more unit tests added.

("Consult the oracle" is an Amp-specific pattern for running a task through a more expensive, more capable model.)

Is this all worthwhile? Mitchell thinks so:

Many people on the internet argue whether AI enables you to work faster or not. In this case, I think I shipped this faster than I would have if I had done it all myself, in particular because iterating on minor SwiftUI styling is so tedious and time consuming for me personally and AI does it so well.

I think the faster/slower argument for me personally is missing the thing I like the most: the AI can work for me while I step away to do other things.

Here's the resulting PR, which touches 21 files.

TIL: Testing different Python versions with uv with-editable and uv-test.

While tinkering with upgrading various projects to handle Python 3.14 I finally figured out a universal uv recipe for running the tests for the current project in any specified version of Python:

uv run --python 3.14 --isolated --with-editable '.[test]' pytest

This should work in any directory with a pyproject.toml (or even a setup.py) that defines a test set of extra dependencies and uses pytest.

The --with-editable '.[test]' bit ensures that changes you make to that directory will be picked up by future test runs. The --isolated flag ensures no other environments will affect your test run.

I like this pattern so much I built a little shell script that uses it, shown here. Now I can change to any Python project directory and run:

uv-test

Or for a different Python version:

uv-test -p 3.11

I can pass additional pytest options too:

uv-test -p 3.11 -k permissions
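Here is a hypothetical Python take on such a wrapper. It is not the script from the TIL. It assumes that when no -p option is given, no --python flag should be passed, so uv picks its default interpreter. Any other arguments are forwarded to pytest unchanged.

```python
import argparse
import subprocess
import sys


def build_command(argv: list[str]) -> list[str]:
    """Translate `uv-test [-p VERSION] [pytest args...]` into a uv run command."""
    # add_help=False so -h/--help is passed through to pytest instead of handled here.
    parser = argparse.ArgumentParser(prog="uv-test", add_help=False)
    parser.add_argument("-p", "--python", help="Python version, e.g. 3.11")
    args, pytest_args = parser.parse_known_args(argv)
    command = ["uv", "run"]
    if args.python:
        command += ["--python", args.python]
    command += ["--isolated", "--with-editable", ".[test]", "pytest"]
    return command + pytest_args


def main() -> int:
    """Run the translated command and return uv's exit status."""
    return subprocess.call(build_command(sys.argv[1:]))
```

To use it as an executable script, end it with sys.exit(main()). One trade-off of this design is that pytest's own -p plugin option can no longer be passed through.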

Hypothesis is now thread-safe (via) Hypothesis is a property-based testing library for Python. It lets you write tests like this one:

```python
from hypothesis import given, strategies as st

@given(st.lists(st.integers()))
def test_matches_builtin(ls):
    # my_sort is the sorting function under test, defined elsewhere
    assert sorted(ls) == my_sort(ls)
```

This will automatically generate a wide variety of example inputs, exercising many different list lengths and integer sizes. Here's a Gist demonstrating the tests the above code will run, which include things like:

[]

[0]

[-62, 13194]

[44, -19562, 44, -12803, -24012]

[-7531692443171623764, -109369043848442345045856489093298649615]

Hypothesis contributor Liam DeVoe was recently sponsored by Quansight to add thread safety to Hypothesis, which has become important recently due to Python free threading:

While we of course would always have loved for Hypothesis to be thread-safe, thread-safety has historically not been a priority, because running Hypothesis tests under multiple threads is not something we see often.

That changed recently. Python---as both a language, and a community---is gearing up to remove the global interpreter lock (GIL), in a build called free threading. Python packages, especially those that interact with the C API, will need to test that their code still works under the free threaded build. A great way to do this is to run each test in the suite in two or more threads simultaneously. [...]

Nathan mentioned that because Hypothesis is not thread-safe, Hypothesis tests in community packages have to be skipped when testing free threaded compatibility, which removes a substantial battery of coverage.

Now that Hypothesis is thread-safe another blocker to increased Python ecosystem support for free threading has been removed!
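The approach described above is to run each test in two or more threads simultaneously. Below is a minimal sketch of that using only the standard library. run_in_threads() is a hypothetical helper and is not part of Hypothesis or any pytest plugin. It releases all threads at once with a barrier, then re-raises the first failure so the test fails in the main thread.

```python
import threading
from typing import Callable


def run_in_threads(test_func: Callable[[], None], n_threads: int = 4) -> None:
    """Run test_func in n_threads threads at once; re-raise the first failure."""
    barrier = threading.Barrier(n_threads)  # start every thread at the same moment
    errors: list[BaseException] = []
    lock = threading.Lock()

    def worker() -> None:
        barrier.wait()
        try:
            test_func()
        except BaseException as exc:  # keep failures instead of losing them in the thread
            with lock:
                errors.append(exc)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    if errors:
        raise errors[0]
```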

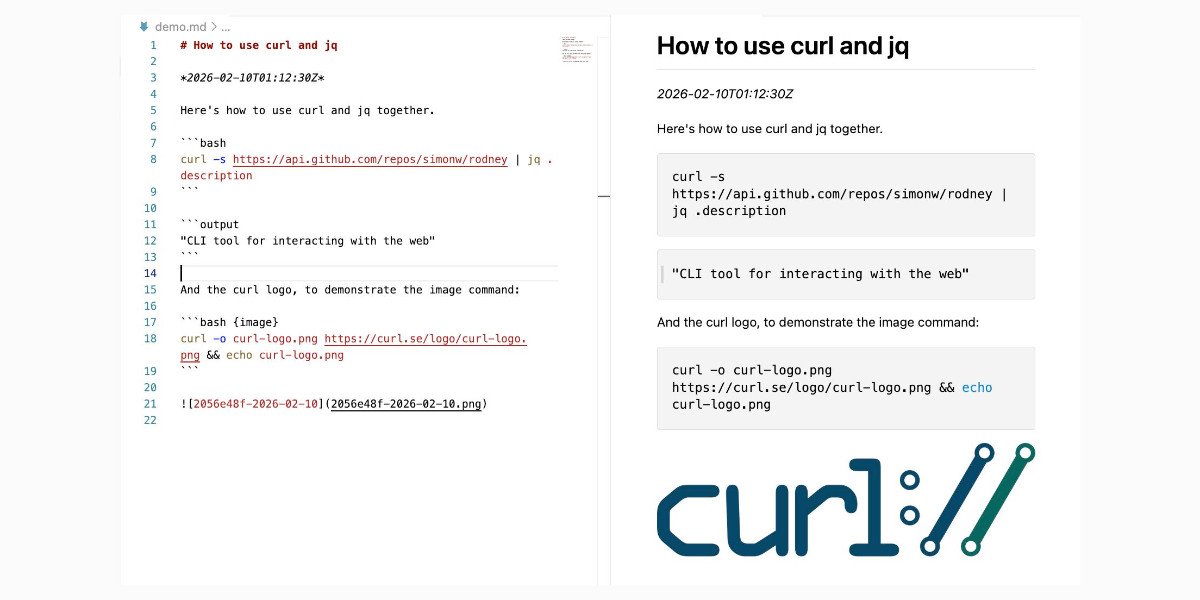

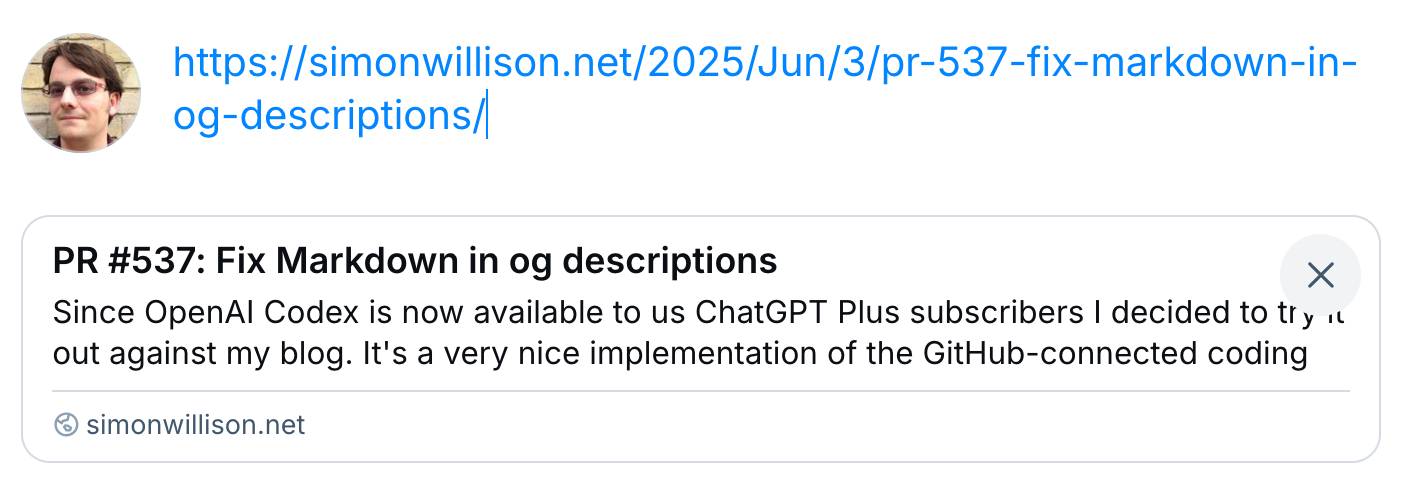

PR #537: Fix Markdown in og descriptions. Since OpenAI Codex is now available to us ChatGPT Plus subscribers I decided to try it out against my blog.

It's a very nice implementation of the GitHub-connected coding "agent" pattern, as also seen in Google's Jules and Microsoft's Copilot Coding Agent.

First I had to configure an environment for it. My Django blog uses PostgreSQL which isn't part of the default Codex container, so I had Claude Sonnet 4 help me come up with a startup recipe to get PostgreSQL working.

I attached my simonw/simonwillisonblog GitHub repo and used the following as the "setup script" for the environment:

```bash
# Install PostgreSQL
apt-get update && apt-get install -y postgresql postgresql-contrib

# Start PostgreSQL service
service postgresql start

# Create a test database and user
sudo -u postgres createdb simonwillisonblog
sudo -u postgres psql -c "CREATE USER testuser WITH PASSWORD 'testpass';"
sudo -u postgres psql -c "GRANT ALL PRIVILEGES ON DATABASE simonwillisonblog TO testuser;"
sudo -u postgres psql -c "ALTER USER testuser CREATEDB;"

pip install -r requirements.txt
```

I left "Agent internet access" off for reasons described previously.

Then I prompted Codex with the following (after one previous experimental task to check that it could run my tests):

Notes and blogmarks can both use Markdown.

They serve meta property="og:description" content=" tags on the page, but those tags include that raw Markdown which looks bad on social media previews.

Fix it so they instead use just the text with markdown stripped - so probably render it to HTML and then strip the HTML tags.

Include passing tests.

Try to run the tests, the postgresql details are:

database = simonwillisonblog
username = testuser
password = testpass

Put those in the DATABASE_URL environment variable.

I left it to churn away for a few minutes (4m12s, to be precise) and it came back with a fix that edited two templates and added one more (passing) test. Here's that change in full.
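The prompt's "strip the HTML tags" step can be sketched with the standard library's html.parser. This is an illustration, not the change Codex made. It assumes the Markdown has already been rendered to HTML, which would need a Markdown library. Django also ships django.utils.html.strip_tags() for the same job.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects text content and ignores tags."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True (the default) turns &amp; into &
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def html_to_text(html: str) -> str:
    """Strip tags from rendered HTML and collapse whitespace for a meta description."""
    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    return " ".join("".join(collector.parts).split())
```

Note that this keeps the text inside any script or style elements. That is fine for rendered Markdown but worth knowing for arbitrary HTML.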

And sure enough, the social media cards for my posts now look like this - no visible Markdown any more:

I wonder if one of the reasons I'm finding LLMs so much more useful for coding than a lot of people that I see in online discussions is that effectively all of the code I work on has automated tests.

I've been trying to stay true to the idea of a Perfect Commit - one that bundles the implementation, tests and documentation in a single unit - for over five years now. As a result almost every piece of (non vibe-coding) code I work on has pretty comprehensive test coverage.

This massively derisks my use of LLMs. If an LLM writes weird, convoluted code that solves my problem I can prove that it works with tests - and then have it refactor the code until it looks good to me, keeping the tests green the whole time.

LLMs help write the tests, too. I finally have a 24/7 pair programmer who can remember how to use unittest.mock!
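Here is a minimal sketch of the kind of unittest.mock usage meant here. It patches urllib.request.urlopen so a test can exercise a function without touching the network. fetch_repo_stars() and the fake JSON payload are illustrative assumptions.

```python
import json
import urllib.request
from unittest import mock


def fetch_repo_stars(repo: str) -> int:
    """Hypothetical code under test: asks the GitHub API for a repo's star count."""
    with urllib.request.urlopen(f"https://api.github.com/repos/{repo}") as response:
        return json.load(response)["stargazers_count"]


def test_fetch_repo_stars_without_network() -> None:
    fake_response = mock.MagicMock()
    fake_response.read.return_value = b'{"stargazers_count": 42}'
    # urlopen() is used as a context manager, so __enter__ must return the fake too.
    fake_response.__enter__.return_value = fake_response
    with mock.patch("urllib.request.urlopen", return_value=fake_response) as fake_urlopen:
        assert fetch_repo_stars("simonw/llm") == 42
    fake_urlopen.assert_called_once_with("https://api.github.com/repos/simonw/llm")
```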

Next time someone complains that they've found LLMs to be more of a hindrance than a help in their programming work, I'm going to try to remember to ask after the health of their test suite.

If your library doesn't have any documentation, it can't have any bugs.

Documentation specifies what your code is supposed to do. Your tests specify what it actually does.

Bugs exist when your test-enforced implementation fails to match the behavior described in your documentation. Without documentation a bug is just undefined behavior.

If you aim to follow semantic versioning you bump your major version when you release a backwards incompatible change. Such changes cannot exist if your code is not comprehensively documented!

Inspired by a half-remembered conversation I had with Tom Insam many years ago.

One of the most essential practices for maintaining the long-term quality of computer code is to write automated tests that ensure the program continues to act as expected, even when other people (including your future self) muck with it.

Smoke test your Django admin site.

Justin Duke demonstrates a neat pattern for running simple tests against your internal Django admin site: introspect every admin route via django.urls.get_resolver() and loop through them with @pytest.mark.parametrize to check they all return a 200 HTTP status code.

This catches simple mistakes with the admin configuration that trigger exceptions that might otherwise go undetected.

I rarely write automated tests against my own admin sites and often feel guilty about it. I wrote some notes on testing it with pytest-django fixtures a few years ago.

We find that Claude is really good at test driven development, so we often ask Claude to write tests first and then ask Claude to iterate against the tests.

— Catherine Wu, Anthropic

2024

My Approach to Building Large Technical Projects (via) Mitchell Hashimoto wrote this piece about taking on large projects back in June 2023. The project he described in the post is a terminal emulator written in Zig called Ghostty which just reached its 1.0 release.

I've learned that when I break down my large tasks in chunks that result in seeing tangible forward progress, I tend to finish my work and retain my excitement throughout the project. People are all motivated and driven in different ways, so this may not work for you, but as a broad generalization I've not found an engineer who doesn't get excited by a good demo. And the goal is to always give yourself a good demo.

For backend-heavy projects the lack of an initial UI is a challenge here, so Mitchell advocates for early automated tests as a way to start exercising code and seeing progress right from the start. Don't let tests get in the way of demos though:

No matter what I'm working on, I try to build one or two demos per week intermixed with automated test feedback as explained in the previous section.

Building a demo also provides you with invaluable product feedback. You can quickly intuit whether something feels good, even if it isn't fully functional.

For more on the development of Ghostty see this talk Mitchell gave at Zig Showtime last year:

I want the terminal to be a modern platform for text application development, analogous to the browser being a modern platform for GUI application development (for better or worse).

My preferred approach in many projects is to do some unit testing, but not a ton, early on in the project and wait until the core APIs and concepts of a module have crystallized.

At that point I then test the API exhaustively with integration tests.

In my experience, these integration tests are much more useful than unit tests, because they remain stable and useful even as you change the implementation around. They aren’t as tied to the current codebase, but rather express higher level invariants that survive refactors much more readily.

[red-knot] type inference/checking test framework (via) Ruff maintainer Carl Meyer recently landed an interesting new design for a testing framework. It's based on Markdown, and could be described as a form of "literate testing" - the testing equivalent of Donald Knuth's literate programming.

A markdown test file is a suite of tests, each test can contain one or more Python files, with optionally specified path/name. The test writes all files to an in-memory file system, runs red-knot, and matches the resulting diagnostics against Type: and Error: assertions embedded in the Python source as comments.

Test suites are Markdown documents with embedded fenced blocks that look like this:

```py
reveal_type(1.0) # revealed: float
```

Tests can optionally include a path= specifier, which can provide neater messages when reporting test failures:

```py path=branches_unify_to_non_union_type.py
def could_raise_returns_str() -> str:
    return 'foo'

...
```

A larger example test suite can be browsed in the red_knot_python_semantic/resources/mdtest directory.

This document on control flow for exception handlers (from this PR) is the best example I've found of detailed prose documentation to accompany the tests.

The system is implemented in Rust, but it's easy to imagine an alternative version of this idea written in Python as a pytest plugin. This feels like an evolution of the old Python doctest idea, except that tests are embedded directly in Markdown rather than being embedded in Python code docstrings.

... and it looks like such plugins exist already. Here are three that I've found so far:

- pytest-markdown-docs by Elias Freider and Modal Labs.

- sphinx.ext.doctest is a core Sphinx extension for running test snippets in documentation.

- pytest-doctestplus from the Scientific Python community, first released in 2011.
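The pytest-plugin idea can be sketched in a few lines of standard-library Python. This is a hypothetical, much simplified runner and is not how red-knot or any of the plugins above work. It finds py-fenced blocks in a Markdown string, executes each one in a fresh namespace, and records whether it raised.

```python
import re

# Build the fence marker programmatically so this example can itself sit inside Markdown.
FENCE = "`" * 3
BLOCK_RE = re.compile(rf"^{FENCE}py[^\n]*\n(.*?)^{FENCE}[ \t]*$", re.MULTILINE | re.DOTALL)


def run_markdown_tests(markdown: str) -> list[tuple[int, bool, str]]:
    """Execute each py-fenced block in its own namespace; return (line, passed, error)."""
    results = []
    for match in BLOCK_RE.finditer(markdown):
        # 1-based line number of the first line of code inside the block
        line = markdown.count("\n", 0, match.start(1)) + 1
        try:
            exec(compile(match.group(1), f"<markdown line {line}>", "exec"), {})
            results.append((line, True, ""))
        except Exception as exc:
            results.append((line, False, f"{type(exc).__name__}: {exc}"))
    return results
```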

I tried pytest-markdown-docs by creating a doc.md file like this:

````markdown
# Hello test doc

```py
assert 1 + 2 == 3
```

But this fails:

```py
assert 1 + 2 == 4
```
````

And then running it with uvx like this:

uvx --with pytest-markdown-docs pytest --markdown-docs

I got one pass and one fail:

````
_______ docstring for /private/tmp/doc.md __________
Error in code block:
```
10 assert 1 + 2 == 4
11
```
Traceback (most recent call last):
  File "/private/tmp/tt/doc.md", line 10, in <module>
    assert 1 + 2 == 4
AssertionError
============= short test summary info ==============
FAILED doc.md::/private/tmp/doc.md
=========== 1 failed, 1 passed in 0.02s ============
````

I also just learned that the venerable Python doctest standard library module has the ability to run tests in documentation files too, with doctest.testfile("example.txt"): "The file content is treated as if it were a single giant docstring; the file doesn’t need to contain a Python program!"
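Here is a minimal sketch of doctest.testfile() in action. It first writes a hypothetical example.txt to a temporary directory. module_relative=False makes the filename an ordinary filesystem path, and report=False suppresses the end-of-run summary. The call returns a named tuple with failed and attempted counts.

```python
import doctest
import os
import tempfile

# A hypothetical documentation file: prose is ignored, >>> examples are run.
EXAMPLE_TXT = """\
Sorting
=======

Plain prose in the file is ignored; only the interactive examples run.

>>> sorted([3, 1, 2])
[1, 2, 3]
>>> ", ".join(["a", "b"])
'a, b'
"""


def run_doc_file(text: str):
    """Write text to a temporary example.txt and run it with doctest.testfile()."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "example.txt")
        with open(path, "w", encoding="utf-8") as f:
            f.write(text)
        # module_relative=False: treat path as a normal filesystem path.
        return doctest.testfile(path, module_relative=False, report=False)
```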

An LLM TDD loop (via) Super neat demo by David Winterbottom, who wrapped my LLM and files-to-prompt tools in a short Bash script that can be fed a file full of Python unit tests and an empty implementation file and will then iterate on that file in a loop until the tests pass.
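Here is the shape of that loop, sketched in Python rather than Bash. Everything in it is hypothetical. generate() stands in for an LLM call, such as shelling out to the llm CLI with the tests and the last failure output. The tests are exec'd as plain assert statements rather than run through a test runner.

```python
import traceback
from typing import Callable, Optional


def run_tests(implementation: str, test_code: str) -> tuple[bool, str]:
    """Exec the implementation, then the test asserts, in a shared namespace."""
    namespace: dict = {}
    try:
        exec(implementation, namespace)
        exec(test_code, namespace)
    except Exception:
        return False, traceback.format_exc()
    return True, ""


def tdd_loop(
    generate: Callable[[str, str], str],
    test_code: str,
    max_iterations: int = 5,
) -> Optional[str]:
    """Ask generate(test_code, feedback) for new code until the tests pass.

    generate() is a placeholder for an LLM call; feedback is the last failure output.
    Returns the passing implementation, or None if we run out of iterations.
    """
    feedback = ""
    for _ in range(max_iterations):
        implementation = generate(test_code, feedback)
        passed, feedback = run_tests(implementation, test_code)
        if passed:
            return implementation
    return None
```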

inline-snapshot. I'm a big fan of snapshot testing, where expected values are captured the first time a test suite runs and then asserted against in future runs. It's a very productive way to build a robust test suite.

inline-snapshot by Frank Hoffmann is a particularly neat implementation of the pattern. It defines a snapshot() function which you can use in your tests:

assert 1548 * 18489 == snapshot()

When you run that test using pytest --inline-snapshot=create the snapshot() function will be replaced in your code (using AST manipulation) with itself wrapping the repr() of the expected result:

assert 1548 * 18489 == snapshot(28620972)

If you modify the code and need to update the tests you can run pytest --inline-snapshot=fix to regenerate the recorded snapshot values.
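The underlying snapshot idea, independent of inline-snapshot, can be sketched with one JSON file per snapshot. assert_matches_snapshot() is a hypothetical helper. It records the value on the first run, or when update=True (playing the role of --inline-snapshot=fix), and compares against the recording afterwards. Values must be JSON-serializable, and tuples come back as lists.

```python
import json
from pathlib import Path


def assert_matches_snapshot(name: str, value, snapshot_dir, update: bool = False) -> None:
    """Record value on first use (or when update=True); afterwards require it to match."""
    path = Path(snapshot_dir) / f"{name}.json"
    if update or not path.exists():
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(json.dumps(value, indent=2, sort_keys=True), encoding="utf-8")
        return
    expected = json.loads(path.read_text(encoding="utf-8"))
    if value != expected:
        # Raise explicitly so the check still runs under python -O.
        raise AssertionError(f"snapshot {name!r} changed: {expected!r} != {value!r}")
```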

Your AI Product Needs Evals (via) Hamel Husain: “I’ve seen many successful and unsuccessful approaches to building LLM products. I’ve found that unsuccessful products almost always share a common root cause: a failure to create robust evaluation systems.”

I’ve been frustrated about this for a while: I know I need to move beyond “vibe checks” for the systems I have started to build on top of LLMs, but I was lacking a thorough guide about how to build automated (and manual) evals in a productive way.

Hamel has provided exactly the tutorial I was needing for this, with a really thorough example case-study.

Using GPT-4 to create test cases is an interesting approach: “Write 50 different instructions that a real estate agent can give to his assistant to create contacts on his CRM. The contact details can include name, phone, email, partner name, birthday, tags, company, address and job.”

Also important: “... unlike traditional unit tests, you don’t necessarily need a 100% pass rate. Your pass rate is a product decision.”
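One way to turn that into code is to compute a pass rate across eval cases and compare it against a threshold you choose, rather than requiring every case to pass. Here is a minimal sketch. The model callable and the per-case check functions are hypothetical placeholders for a real LLM call and real assertions.

```python
from typing import Callable, Sequence

# Each case pairs an input prompt with a check on the model's output.
EvalCase = tuple[str, Callable[[str], bool]]


def pass_rate(model: Callable[[str], str], cases: Sequence[EvalCase]) -> float:
    """Fraction of cases whose output passes its check (0.0 for an empty suite)."""
    if not cases:
        return 0.0
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)
```

A test would then assert something like pass_rate(model, cases) >= 0.9. Choosing that threshold is the product decision the quote refers to.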

Hamel’s guide then covers the importance of traces for evaluating real-world performance of your deployed application, plus the pros and cons of leaning on automated evaluation using LLMs themselves.

Plus some wisdom from a footnote: “A reasonable heuristic is to keep reading logs until you feel like you aren’t learning anything new.”

time-machine example test for a segfault in Python (via) Here's a really neat testing trick by Adam Johnson. Someone reported a segfault bug in his time-machine library. How do you write a unit test that exercises a segfault without crashing the entire test suite?

Adam's solution is a test that does this:

subprocess.run([sys.executable, "-c", code_that_crashes_python], check=True)

sys.executable is the path to the current Python executable - ensuring the code will run in the same virtual environment as the test suite itself. The -c option can be used to have it run a (multi-line) string of Python code, and check=True causes the subprocess.run() function to raise an error if the subprocess fails to execute cleanly and returns an error code.

I'm absolutely going to be borrowing this pattern next time I need to add tests to cover a crashing bug in one of my projects.
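Here is a sketch of that pattern wrapped in a small helper. run_isolated() is hypothetical. ctypes.string_at(0) reads from a null pointer, which is a commonly used way to force a crash, so it stands in here for "code that crashes Python".

```python
import subprocess
import sys


def run_isolated(code: str) -> subprocess.CompletedProcess:
    """Run code in a fresh interpreter from the same environment as the tests.

    A crash (for example a segfault) only kills the child process; check=True turns
    its non-zero exit status into CalledProcessError, which fails the test normally.
    """
    return subprocess.run(
        [sys.executable, "-c", code], check=True, capture_output=True, text=True
    )
```

The test suite itself survives: run_isolated("import ctypes; ctypes.string_at(0)") raises CalledProcessError instead of taking the test runner down with it.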

Testcontainers (via) Not sure how I missed this: Testcontainers is a family of testing libraries (for Python, Go, JavaScript, Ruby, Rust and a bunch more) that make it trivial to spin up a service such as PostgreSQL or Redis in a container for the duration of your tests and then spin it back down again.

The Python example code is delightful:

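```python
from testcontainers.core.container import DockerContainer
from testcontainers.core.waiting_utils import wait_for_logs

redis = DockerContainer("redis:5.0.3-alpine").with_exposed_ports(6379)
redis.start()
wait_for_logs(redis, "Ready to accept connections")
```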

I much prefer integration-style tests over unit tests, and I like to make sure any of my projects that depend on PostgreSQL or similar can run their tests against a real running instance. I've invested heavily in spinning up Varnish or Elasticsearch ephemeral instances in the past - Testcontainers look like they could save me a lot of time.

The open source project started in 2015, spun off a company called AtomicJar in 2021 and was acquired by Docker in December 2023.

Before we even started writing the database, we first wrote a fully-deterministic event-based network simulation that our database could plug into. This system let us simulate an entire cluster of interacting database processes, all within a single-threaded, single-process application, and all driven by the same random number generator. We could run this virtual cluster, inject network faults, kill machines, simulate whatever crazy behavior we wanted, and see how it reacted. Best of all, if one particular simulation run found a bug in our application logic, we could run it over and over again with the same random seed, and the exact same series of events would happen in the exact same order. That meant that even for the weirdest and rarest bugs, we got infinity “tries” at figuring it out, and could add logging, or do whatever else we needed to do to track it down.

[...] At FoundationDB, once we hit the point of having ~zero bugs and confidence that any new ones would be found immediately, we entered into this blessed condition and we flew.

[...] We had built this sophisticated testing system to make our database more solid, but to our shock that wasn’t the biggest effect it had. The biggest effect was that it gave our tiny engineering team the productivity of a team 50x its size.

— Will Wilson, on FoundationDB
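Here is a toy sketch of the core property described there. If every source of randomness (timing, dropped messages) flows from one seeded random number generator, rerunning with the same seed replays exactly the same sequence of events. This is an illustrative simulation of a lossy network and is nothing like FoundationDB's actual simulator.

```python
import heapq
import random


def simulate(seed: int, n_messages: int = 5, drop_rate: float = 0.2) -> list[str]:
    """Send messages over a toy lossy network; every random choice uses one seeded RNG."""
    rng = random.Random(seed)
    clock = 0.0
    in_flight: list[tuple[float, int, str]] = []  # (delivery time, sequence no., message)
    for seq in range(n_messages):
        clock += rng.uniform(0, 1)       # time until the next send
        if rng.random() < drop_rate:     # injected fault: message lost
            continue
        delay = rng.uniform(0, 3)        # variable latency can reorder delivery
        heapq.heappush(in_flight, (clock + delay, seq, f"msg-{seq}"))
    events = []
    while in_flight:
        when, _, message = heapq.heappop(in_flight)
        events.append(f"{when:.3f} deliver {message}")
    return events
```

Rerunning a seed that exposed a bug reproduces the identical event log. Logging can then be added and the run repeated as many times as needed.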

2023

promptfoo: How to benchmark Llama2 Uncensored vs. GPT-3.5 on your own inputs. promptfoo is a CLI and library for “evaluating LLM output quality”. This tutorial in their documentation about using it to compare Llama 2 to gpt-3.5-turbo is a good illustration of how it works: it uses YAML files to configure the prompts, and more YAML to define assertions such as “not-icontains: AI language model”.

pytest-icdiff (via) This is neat: “pip install pytest-icdiff” provides an instant usability upgrade to the output of failed tests in pytest, especially if the assertions involve comparing larger strings or nested JSON objects.

pyfakefs usage (via) A pytest fixture library, new to me, that provides a really easy way to mock Python’s filesystem functions (open(), os.listdir() and so on) so a test can run against a fake set of files. This looks incredibly useful.

How to simulate a broken database connection for testing in Django (via) Neil Kakkar explores the options using unittest.mock.patch() and then settles on a neater pattern using “with connection.execute_wrapper(QueryTimeoutWrapper()):” to simulate the exact exception he needs to test against.