27 posts tagged “releases”

2025

Large Language Models can run tools in your terminal with LLM 0.26

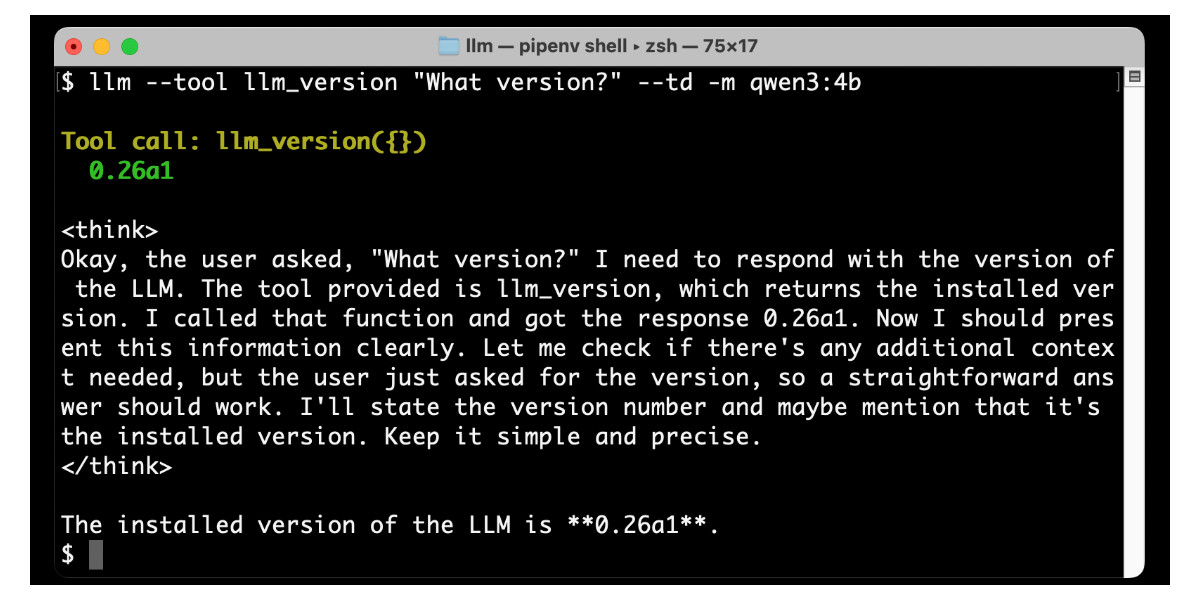

LLM 0.26 is out with the biggest new feature since I started the project: support for tools. You can now use the LLM CLI tool—and Python library—to grant LLMs from OpenAI, Anthropic, Gemini and local models from Ollama with access to any tool that you can represent as a Python function.

[... 2,799 words]2024

llm-openrouter 0.3. New release of my llm-openrouter plugin, which allows LLM to access models hosted by OpenRouter.

Quoting the release notes:

- Enable image attachments for models that support images. Thanks, Adam Montgomery. #12

- Provide async model access. #15

- Fix documentation to list correct

LLM_OPENROUTER_KEYenvironment variable. #10

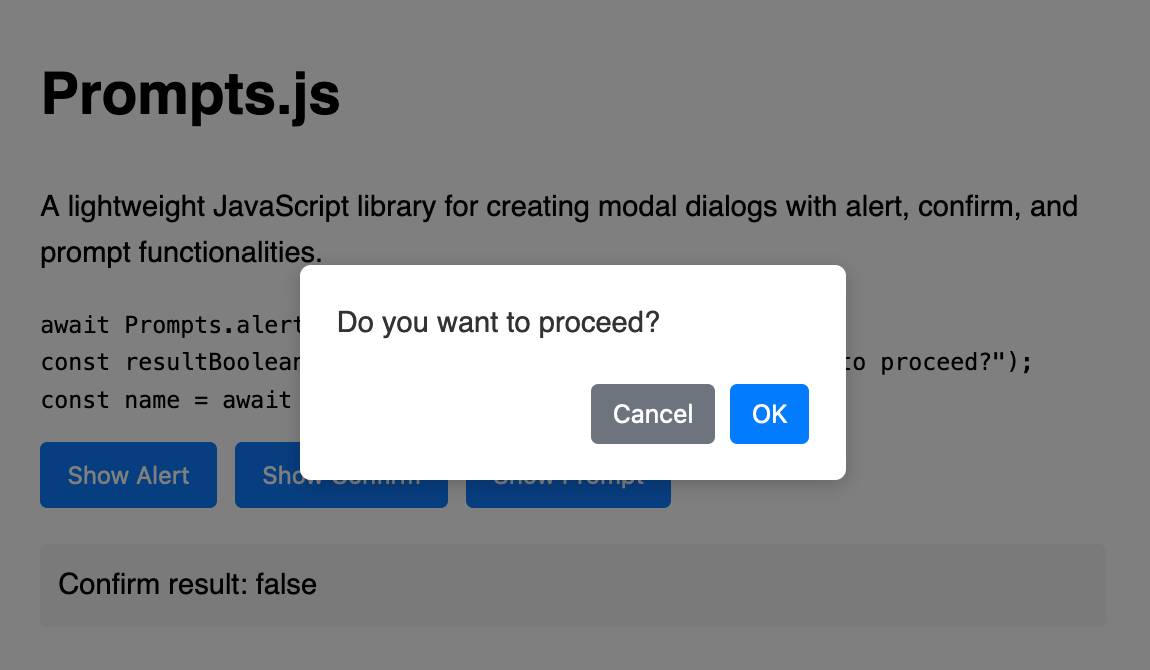

Prompts.js

I’ve been putting the new o1 model from OpenAI through its paces, in particular for code. I’m very impressed—it feels like it’s giving me a similar code quality to Claude 3.5 Sonnet, at least for Python and JavaScript and Bash... but it’s returning output noticeably faster.

[... 1,119 words]New Gemini model: gemini-exp-1206. Google's Jeff Dean:

Today’s the one year anniversary of our first Gemini model releases! And it’s never looked better.

Check out our newest release, Gemini-exp-1206, in Google AI Studio and the Gemini API!

I upgraded my llm-gemini plugin to support the new model and released it as version 0.6 - you can install or upgrade it like this:

llm install -U llm-gemini

Running my SVG pelican on a bicycle test prompt:

llm -m gemini-exp-1206 "Generate an SVG of a pelican riding a bicycle"

Provided this result, which is the best I've seen from any model:

Here's the full output - I enjoyed these two pieces of commentary from the model:

<polygon>: Shapes the distinctive pelican beak, with an added line for the lower mandible.

[...]

transform="translate(50, 30)": This attribute on the pelican's<g>tag moves the entire pelican group 50 units to the right and 30 units down, positioning it correctly on the bicycle.

The new model is also currently in top place on the Chatbot Arena.

Update: a delightful bonus, here's what I got from the follow-up prompt:

llm -c "now animate it"

datasette-enrichments-llm. Today's new alpha release is datasette-enrichments-llm, a plugin for Datasette 1.0a+ that provides an enrichment that lets you run prompts against data from one or more column and store the result in another column.

So far it's a light re-implementation of the existing datasette-enrichments-gpt plugin, now using the new llm.get_async_models() method to allow users to select any async-enabled model that has been registered by a plugin - so currently any of the models from OpenAI, Anthropic, Gemini or Mistral via their respective plugins.

Still plenty to do on this one. Next step is to integrate it with datasette-llm-usage and use it to drive a design-complete stable version of that.

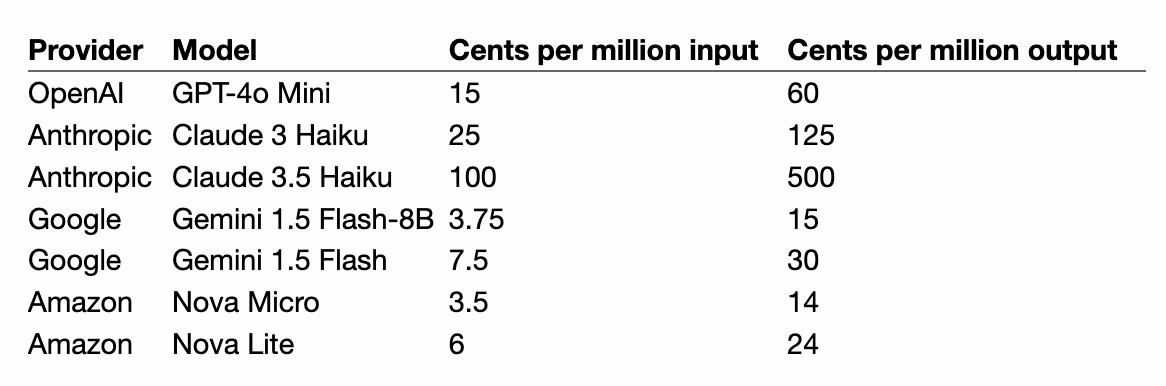

First impressions of the new Amazon Nova LLMs (via a new llm-bedrock plugin)

Amazon released three new Large Language Models yesterday at their AWS re:Invent conference. The new model family is called Amazon Nova and comes in three sizes: Micro, Lite and Pro.

[... 2,385 words]datasette-queries. I released the first alpha of a new plugin to replace the crusty old datasette-saved-queries. This one adds a new UI element to the top of the query results page with an expandable form for saving the query as a new canned query:

It's my first plugin to depend on LLM and datasette-llm-usage - it uses GPT-4o mini to power an optional "Suggest title and description" button, labeled with the becoming-standard ✨ sparkles emoji to indicate an LLM-powered feature.

I intend to expand this to work across multiple models as I continue to iterate on llm-datasette-usage to better support those kinds of patterns.

For the moment though each suggested title and description call costs about 250 input tokens and 50 output tokens, which against GPT-4o mini adds up to 0.0067 cents.

datasette-llm-usage. I released the first alpha of a Datasette plugin to help track LLM usage by other plugins, with the goal of supporting token allowances - both for things like free public apps that stop working after a daily allowance, plus free previews of AI features for paid-account-based projects such as Datasette Cloud.

It's using the usage features I added in LLM 0.19.

The alpha doesn't do much yet - it will start getting interesting once I upgrade other plugins to depend on it.

Design notes so far in issue #1.

LLM 0.19. I just released version 0.19 of LLM, my Python library and CLI utility for working with Large Language Models.

I released 0.18 a couple of weeks ago adding support for calling models from Python asyncio code. 0.19 improves on that, and also adds a new mechanism for models to report their token usage.

LLM can log those usage numbers to a SQLite database, or make then available to custom Python code.

My eventual goal with these features is to implement token accounting as a Datasette plugin so I can offer AI features in my SaaS platform without worrying about customers spending unlimited LLM tokens.

Those 0.19 release notes in full:

- Tokens used by a response are now logged to new

input_tokensandoutput_tokensinteger columns and atoken_detailsJSON string column, for the default OpenAI models and models from other plugins that implement this feature. #610llm promptnow takes a-u/--usageflag to display token usage at the end of the response.llm logs -u/--usageshows token usage information for logged responses.llm prompt ... --asyncresponses are now logged to the database. #641llm.get_models()andllm.get_async_models()functions, documented here. #640response.usage()and async responseawait response.usage()methods, returning aUsage(input=2, output=1, details=None)dataclass. #644response.on_done(callback)andawait response.on_done(callback)methods for specifying a callback to be executed when a response has completed, documented here. #653- Fix for bug running

llm chaton Windows 11. Thanks, Sukhbinder Singh. #495

I also released three new plugin versions that add support for the new usage tracking feature: llm-gemini 0.5, llm-claude-3 0.10 and llm-mistral 0.9.

Datasette 1.0a15. Mainly bug fixes, but a couple of minor new features:

- Datasette now defaults to hiding SQLite "shadow" tables, as seen in extensions such as SQLite FTS and sqlite-vec. Virtual tables that it makes sense to display, such as FTS core tables, are no longer hidden. Thanks, Alex Garcia. (#2296)

- The Datasette homepage is now duplicated at

/-/, using the defaultindex.htmltemplate. This ensures that the information on that page is still accessible even if the Datasette homepage has been customized using a customindex.htmltemplate, for example on sites like datasette.io. (#2393)

Datasette also now serves more user-friendly CSRF pages, an improvement which required me to ship asgi-csrf 0.10.

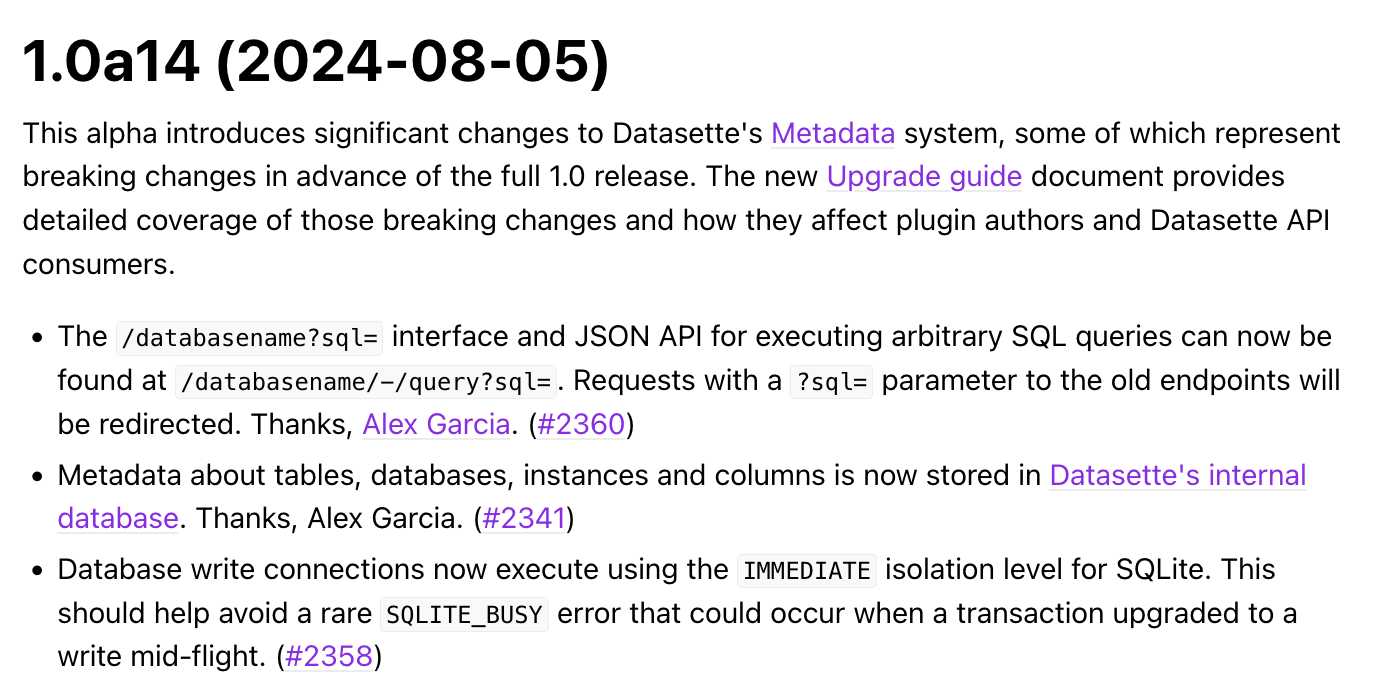

Datasette 1.0a14: The annotated release notes

Released today: Datasette 1.0a14. This alpha includes significant contributions from Alex Garcia, including some backwards-incompatible changes in the run-up to the 1.0 release.

[... 1,424 words]Datasette 0.64.8. A very small Datasette release, fixing a minor potential security issue where the name of missing databases or tables was reflected on the 404 page in a way that could allow an attacker to present arbitrary text to a user who followed a link. Not an XSS attack (no code could be executed) but still a potential vector for confusing messages.

2023

Datasette 1.0 alpha series leaks names of databases and tables to unauthenticated users. I found and fixed a security vulnerability in the Datasette 1.0 alpha series, described in this GitHub security advisory.

The vulnerability allowed unauthenticated users to see the names of the databases and tables in an otherwise private Datasette instance—though not the actual table contents.

The fix is now shipped in Datasette 1.0a4.

The vulnerability affected Datasette Cloud as well, but thankfully I was able to analyze the access logs and confirm that no unauthenticated requests had been made against any of the affected endpoints.

LLM 0.4. I released a major update to my LLM CLI tool today—version 0.4, which adds conversation mode and prompt templates so you can store and re-use interesting prompts, plus a whole bunch of other large and small improvements.

I also released 0.4.1 with some minor fixes and the ability to install the tool using Hombrew: brew install simonw/llm/llm

2010

Django 1.2 release notes (via) Released today, this is a terrific upgrade. Multiple database connections, model validation, improved CSRF protection, a messages framework, the new smart if template tag and lots, lots more. I’ve been using the 1.2 betas for a major new project over the past few months and it’s been smooth sailing all the way.

What’s new in Django 1.2 alpha 1 (via) Multiple database support, improved CSRF prevention, a messages framework (similar to the Rails “flash” feature), model validation, custom e-mail backends, template caching for much faster handling of the include and extends tags, read only fields in the admin, a better if tag and more. Very exciting release.

2009

Django 1.1 release notes (via) Django 1.1 is out! Congratulations everyone who worked on this, it’s a fantastic release. New features include aggregate support in the ORM, proxy models, deferred fields and some really nice admin improvements. Oh, and the testing framework is now up to 10 times thanks to smart use of transactions.

Nmap 5.00 Release Notes. Released today, “the most important Nmap release since 1997”. New features include Ncat, a powerful netcat alternative, Ndiff, a utility for comparing scan results so you can spot changes to your network, and a new Nmap Scripting Engine using Lua.

What’s New In Python 3.1. Lots of stuff, but the best bits are an ordered dictionary type (congrats, Armin), a Counter class for counting unique items in an iterable (I do this on an almost daily basis) and a bunch of performance improvements including a rewrite of the Python 3.0 IO system in C.

Dojo 1.3 now available. Looks like an excellent release. dojo.create is particularly nice—I’d be interested to know why something similar has never shipped with jQuery (presumably there’s a reason) as it feels a lot more elegant than gluing together an HTML-style string. Also interesting: you can swap between Dojo’s Acme selector engine and John Resig’s sizzle.

2008

Python 3.0. “We are pleased to announce the release of Python 3.0 (final), a new production-ready release, on December 3rd, 2008.”

Django 1.0.2 released. An update to last week’s 1.0.1 release, which I failed to link to. 1.0.2 mainly fixes some packaging issues, while 1.0.1 contains “over two hundred fixes to the original Django 1.0 codebase”. The team are holding up the promise to move to a regular release cycle after 1.0.

What’s New in Python 2.6 (via) Python 2.6 final has been released (the last 2.x version before 3.0). multiprocessing and simplejson (as json) are now in the standard library, any backwards compatible 3.0 features have been added and the official docs are now powered by Sphinx (used by Django 1.0 as well). There’s plenty more.

Django’s release process. Django is moving to time-based releases, with minor releases (new features but no backwards incompatible changes) approximately every six months.

2007

[Release] CouchDB 0.7.0. This is a huge milestone for the project—it’s the first official release to include the JSON REST API instead of XML, and it’s also the first release that is “intended for widespread use”.

Opera 9.5 (Kestrel). The latest Opera alpha includes a bunch of CSS3 features (including an almost full implementation of CSS3 Selectors) as well as the ability to use SVG for scalable background images.

Satchmo 0.5 Release. Django powered e-commerce application, “the webshop for perfectionists with deadlines”.