43 posts tagged “packaging”

2025

Poe the Poet.

I was looking for a way to specify additional commands in my pyproject.toml file to execute using uv. There's an enormous issue thread on this in the uv issue tracker (300+ comments dating back to August 2024) and from there I learned of several options including this one, Poe the Poet.

It's neat. I added it to my s3-credentials project just now and the following now works for running the live preview server for the documentation:

uv run poe livehtml

Here's the snippet of TOML I added to my pyproject.toml:

[dependency-groups]
test = [
    "pytest",
    "pytest-mock",
    "cogapp",
    "moto>=5.0.4",
]
docs = [
    "furo",
    "sphinx-autobuild",
    "myst-parser",
    "cogapp",
]
dev = [
    {include-group = "test"},
    {include-group = "docs"},
    "poethepoet>=0.38.0",
]

[tool.poe.tasks]
docs = "sphinx-build -M html docs docs/_build"
livehtml = "sphinx-autobuild -b html docs docs/_build"
cog = "cog -r docs/*.md"

Since poethepoet is in the dev= dependency group any time I run uv run ... it will be available in the environment.

TIL: Dependency groups and uv run.

I wrote up the new pattern I'm using for my various Python project repos to make them as easy to hack on with uv as possible. The trick is to use a PEP 735 dependency group called dev, declared in pyproject.toml like this:

[dependency-groups]

dev = ["pytest"]

With that in place, running uv run pytest will automatically install that development dependency into a new virtual environment and use it to run your tests.

This means you can get started hacking on one of my projects (here datasette-extract) with just these steps:

git clone https://github.com/datasette/datasette-extract

cd datasette-extract

uv run pytest

I also split my uv TILs out into a separate folder. This meant I had to set up redirects for the old paths, so I had Claude Code help build me a new plugin called datasette-redirects and then apply it to my TIL site, including updating the build script to correctly track the creation date of files that had since been renamed.

We should all be using dependency cooldowns (via) William Woodruff gives a name to a sensible strategy for managing dependencies while reducing the chances of a surprise supply chain attack: dependency cooldowns.

Supply chain attacks happen when an attacker compromises a widely used open source package and publishes a new version with an exploit. These are usually spotted very quickly, so an attack often only has a few hours of effective window before the problem is identified and the compromised package is pulled.

You are most at risk if you're automatically applying upgrades the same day they are released.

William says:

I love cooldowns for several reasons:

- They're empirically effective, per above. They won't stop all attackers, but they do stymie the majority of high-visibility, mass-impact supply chain attacks that have become more common.

- They're incredibly easy to implement. Moreover, they're literally free to implement in most cases: most people can use Dependabot's functionality, Renovate's functionality, or the functionality built directly into their package manager

The one counter-argument to this is that sometimes an upgrade fixes a security vulnerability, and in those cases every hour of delay in upgrading is an hour when an attacker could exploit the new issue against your software.

I see that as an argument for carefully monitoring the release notes of your dependencies, and paying special attention to security advisories. I'm a big fan of the GitHub Advisory Database for that kind of information.
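The core of a cooldown policy is small enough to sketch in a few lines of Python. This is an illustration of the idea, not how Dependabot, Renovate or any package manager implements it: the newest_cooled_release function, the seven-day default and the sample release data are all assumptions made up for the example.

```python
from datetime import datetime, timedelta, timezone


def newest_cooled_release(releases, cooldown_days=7, now=None):
    """Return the most recently uploaded version that is past the cooldown.

    `releases` maps version strings to timezone-aware upload datetimes.
    Returns None if every release is still inside the cooldown window.
    Hypothetical helper: it compares upload times only and does not
    parse or order version numbers.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=cooldown_days)
    eligible = {v: uploaded for v, uploaded in releases.items() if uploaded <= cutoff}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


# Made-up release history for an imaginary package
now = datetime(2025, 11, 21, tzinfo=timezone.utc)
releases = {
    "1.4.0": datetime(2025, 10, 1, tzinfo=timezone.utc),
    "1.4.1": datetime(2025, 11, 10, tzinfo=timezone.utc),
    "1.5.0": datetime(2025, 11, 20, tzinfo=timezone.utc),  # one day old
}
print(newest_cooled_release(releases, cooldown_days=7, now=now))
```

With a seven-day window the one-day-old 1.5.0 is skipped in favor of 1.4.1. A security fix is the case where you would want to override the window by hand.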

pyx: a Python-native package registry, now in Beta (via) Since its first release, the single biggest question around the uv Python environment management tool has been around Astral's business model: Astral are a VC-backed company and at some point they need to start making real revenue.

Back in September Astral founder Charlie Marsh said the following:

I don't want to charge people money to use our tools, and I don't want to create an incentive structure whereby our open source offerings are competing with any commercial offerings (which is what you see with a lot of hosted-open-source-SaaS business models).

What I want to do is build software that vertically integrates with our open source tools, and sell that software to companies that are already using Ruff, uv, etc. Alternatives to things that companies already pay for today.

An example of what this might look like (we may not do this, but it's helpful to have a concrete example of the strategy) would be something like an enterprise-focused private package registry. [...]

It looks like those plans have become concrete now! From today's announcement:

TL;DR: pyx is a Python-native package registry --- and the first piece of the Astral platform, our next-generation infrastructure for the Python ecosystem.

We think of pyx as an optimized backend for uv: it's a package registry, but it also solves problems that go beyond the scope of a traditional "package registry", making your Python experience faster, more secure, and even GPU-aware, both for private packages and public sources (like PyPI and the PyTorch index).

pyx is live with our early partners, including Ramp, Intercom, and fal [...]

This looks like a sensible direction to me, and one that stays true to Charlie's promises to carefully design the incentive structure to avoid corrupting the core open source project that the Python community is coming to depend on.

Introducing OSS Rebuild: Open Source, Rebuilt to Last (via) Major news on the Reproducible Builds front: the Google Security team have announced OSS Rebuild, their project to provide build attestations for open source packages released through the NPM, PyPI and Crates ecosystems (and more to come).

They currently run builds against the "most popular" packages from those ecosystems:

Through automation and heuristics, we determine a prospective build definition for a target package and rebuild it. We semantically compare the result with the existing upstream artifact, normalizing each one to remove instabilities that cause bit-for-bit comparisons to fail (e.g. archive compression). Once we reproduce the package, we publish the build definition and outcome via SLSA Provenance. This attestation allows consumers to reliably verify a package's origin within the source history, understand and repeat its build process, and customize the build from a known-functional baseline

The only way to interact with the Rebuild data right now is through their Go CLI tool. I reverse-engineered it using Gemini 2.5 Pro and derived this command to get a list of all of their built packages:

gsutil ls -r 'gs://google-rebuild-attestations/**'

There are 9,513 total lines, here's a Gist. I used Claude Code to count them across the different ecosystems (discounting duplicates for different versions of the same package):

- pypi: 5,028 packages

- cratesio: 2,437 packages

- npm: 2,048 packages
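For anyone who wants to reproduce that count without an LLM, here is a rough Python sketch. It assumes each listing line looks like the bucket prefix followed by ecosystem/package/version/artifact/rebuild.intoto.jsonl, which matches the path visible in the screenshot below but is otherwise a guess; scoped npm names containing a slash would need extra handling. The count_packages name and the sample lines are made up for the example.

```python
from collections import Counter

PREFIX = "gs://google-rebuild-attestations/"


def count_packages(lines):
    """Count distinct packages per ecosystem in `gsutil ls` output.

    Assumes paths shaped like <ecosystem>/<package>/<version>/...,
    so different versions of the same package collapse to one entry.
    """
    seen = set()
    for line in lines:
        line = line.strip()
        if not line.startswith(PREFIX):
            continue  # skip blank lines and anything unexpected
        parts = line[len(PREFIX):].split("/")
        if len(parts) < 3:
            continue  # not deep enough to name a package
        ecosystem, package = parts[0], parts[1]
        seen.add((ecosystem, package))
    return Counter(ecosystem for ecosystem, _ in seen)


# Illustrative sample; only the first path is taken from the screenshot
sample = [
    PREFIX + "pypi/accelerate/0.21.0/accelerate-0.21.0-py3-none-any.whl/rebuild.intoto.jsonl",
    PREFIX + "pypi/accelerate/0.22.0/accelerate-0.22.0-py3-none-any.whl/rebuild.intoto.jsonl",
    PREFIX + "cratesio/adler/1.0.2/adler-1.0.2.crate/rebuild.intoto.jsonl",
    "",
]
print(count_packages(sample))
```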

Then I got a bit ambitious... since the files themselves are hosted in a Google Cloud Bucket, could I run my own web app somewhere on storage.googleapis.com that could use fetch() to retrieve that data, working around the lack of open CORS headers?

I got Claude Code to try that for me (I didn't want to have to figure out how to create a bucket and configure it for web access just for this one experiment) and it built and then deployed https://storage.googleapis.com/rebuild-ui/index.html, which did indeed work!

![Screenshot of Google Rebuild Explorer interface showing a search box with placeholder text "Type to search packages (e.g., 'adler', 'python-slugify')..." under "Search rebuild attestations:", a loading file path "pypi/accelerate/0.21.0/accelerate-0.21.0-py3-none-any.whl/rebuild.intoto.jsonl", and Object 1 containing JSON with "payloadType": "in-toto.io Statement v1 URL", "payload": "...", "signatures": [{"keyid": "Google Cloud KMS signing key URL", "sig": "..."}]](https://static.simonwillison.net/static/2025/rebuild-ui.jpg)

It lets you search against that list of packages from the Gist and then select one to view the pretty-printed newline-delimited JSON that was stored for that package.

The output isn't as interesting as I was expecting, but it was fun demonstrating that it's possible to build and deploy web apps to Google Cloud that can then make fetch() requests to other public buckets.

Hopefully the OSS Rebuild team will add a web UI to their project at some point in the future.

crates.io: Trusted Publishing (via) crates.io is the Rust ecosystem's equivalent of PyPI. Inspired by PyPI's GitHub integration (see my TIL, I use this for dozens of my packages now) they've added a similar feature:

Trusted Publishing eliminates the need for GitHub Actions secrets when publishing crates from your CI/CD pipeline. Instead of managing API tokens, you can now configure which GitHub repository you trust directly on crates.io.

They're missing one feature that PyPI has: on PyPI you can create a "pending publisher" for your first release. crates.io currently requires the first release to be manual:

To get started with Trusted Publishing, you'll need to publish your first release manually. After that, you can set up trusted publishing for future releases.

2025 Python Packaging Ecosystem Survey. If you make use of Python packaging tools (pip, Anaconda, uv, dozens of others) and have opinions please spend a few minutes with this year's packaging survey. This one was "Co-authored by 30+ of your favorite Python Ecosystem projects, organizations and companies."

Slopsquatting -- when an LLM hallucinates a non-existent package name, and a bad actor registers it maliciously. The AI brother of typosquatting.

Credit to @sethmlarson for the name

2024

It's okay to complain and vent, I just ask you be able to back it up. Saying, "Python packaging sucks", but then admit you actually haven't used it in so long you don't remember why it sucked isn't fair. Things do improve, so it's better to say "it did suck" and acknowledge you might be out-of-date.

Using uv with PyTorch (via) PyTorch is a notoriously tricky piece of Python software to install, due to the need to provide separate wheels for different combinations of Python version and GPU accelerator (e.g. different CUDA versions).

uv now has dedicated documentation for PyTorch which I'm finding really useful - it clearly explains the challenge and then shows exactly how to configure a pyproject.toml such that uv knows which version of each package it should install from where.

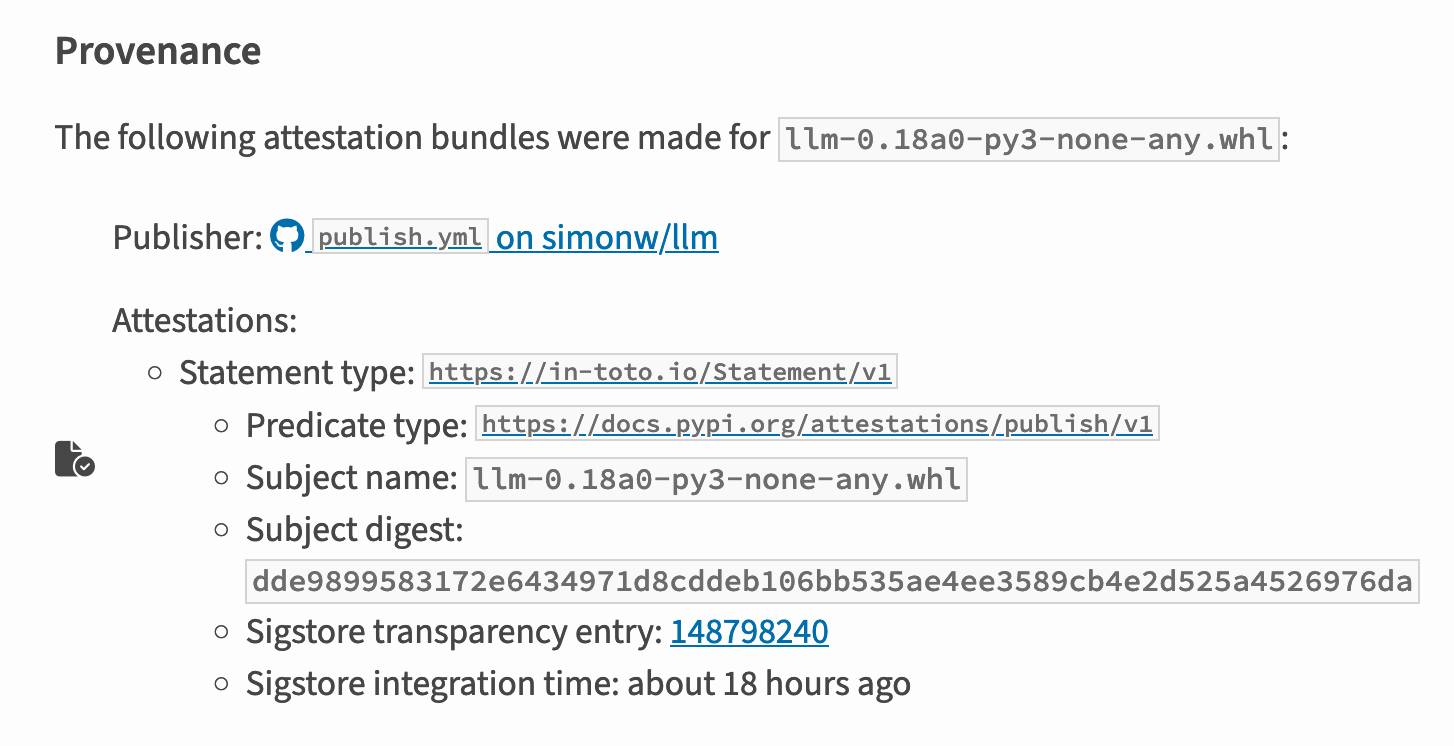

PyPI now supports digital attestations (via) Dustin Ingram:

PyPI package maintainers can now publish signed digital attestations when publishing, in order to further increase trust in the supply-chain security of their projects. Additionally, a new API is available for consumers and installers to verify published attestations.

This has been in the works for a while, and is another component of PyPI's approach to supply chain security for Python packaging - see PEP 740 – Index support for digital attestations for all of the underlying details.

A key problem this solves is cryptographically linking packages published on PyPI to the exact source code that was used to build those packages. In the absence of this feature there are no guarantees that the .tar.gz or .whl file you download from PyPI hasn't been tampered with (to add malware, for example) in a way that's not visible in the published source code.

These new attestations provide a mechanism for proving that a known, trustworthy build system was used to generate and publish the package, starting with its source code on GitHub.

The good news is that if you're using the PyPI Trusted Publishers mechanism in GitHub Actions to publish packages, you're already using this new system. I wrote about that system in January: Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions - and hundreds of my own PyPI packages are already using that system, thanks to my various cookiecutter templates.

Trail of Bits helped build this feature, and provide extra background about it on their own blog in Attestations: A new generation of signatures on PyPI:

As of October 29, attestations are the default for anyone using Trusted Publishing via the PyPA publishing action for GitHub. That means roughly 20,000 packages can now attest to their provenance by default, with no changes needed.

They also built Are we PEP 740 yet? (key implementation here) to track the rollout of attestations across the 360 most downloaded packages from PyPI. It works by hitting URLs such as https://pypi.org/simple/pydantic/ with an Accept: application/vnd.pypi.simple.v1+json header - here's the JSON that returns.
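Here is a small Python sketch of that kind of check, using only the standard library. It is not the Are we PEP 740 yet? implementation: the function names are mine, and it assumes each entry in the files list carries the provenance key that PEP 740 describes, with a missing or null value meaning no attestation. The sample payload is invented so the logic can be tried without a network call.

```python
import json
import urllib.request

ACCEPT = "application/vnd.pypi.simple.v1+json"


def build_request(project):
    """Build a request for the JSON flavor of PyPI's simple index."""
    url = f"https://pypi.org/simple/{project}/"
    return urllib.request.Request(url, headers={"Accept": ACCEPT})


def fetch_index(project):
    """Fetch and decode the index for one project (makes a network call)."""
    with urllib.request.urlopen(build_request(project)) as response:
        return json.load(response)


def provenance_summary(index):
    """Return (files with provenance, total files) for a decoded index."""
    files = index.get("files", [])
    attested = [f for f in files if f.get("provenance")]
    return len(attested), len(files)


# Invented payload in the shape the simple API returns
sample_index = {
    "name": "example",
    "files": [
        {"filename": "example-1.0.tar.gz", "provenance": None},
        {"filename": "example-1.1.tar.gz", "provenance": "https://example.invalid/provenance"},
        {"filename": "example-0.9.tar.gz"},
    ],
}
print(provenance_summary(sample_index))
```

Calling provenance_summary(fetch_index("pydantic")) would run the same check against the live index.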

I published an alpha package using Trusted Publishers last night and the files for that release are showing the new provenance information already:

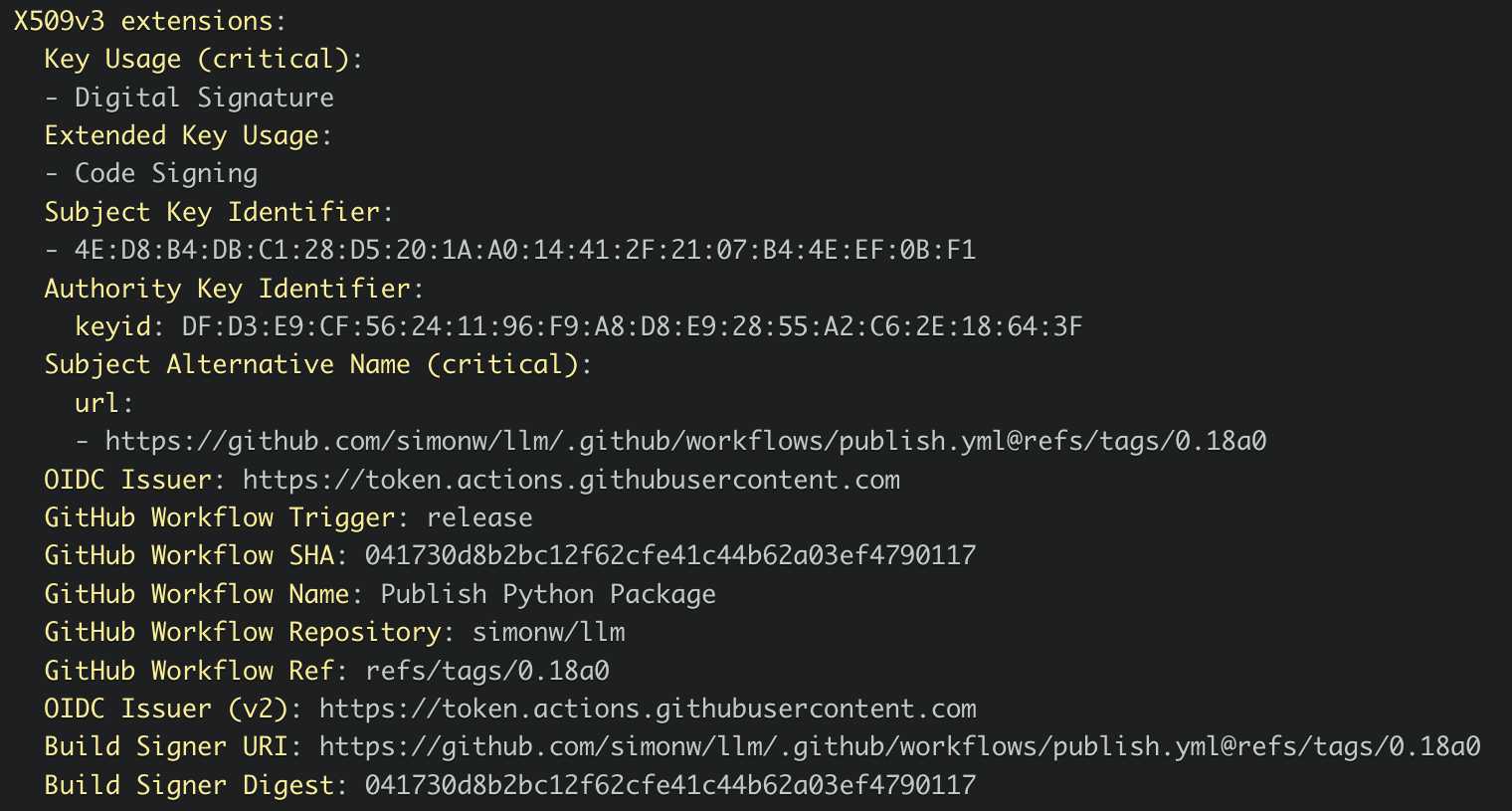

Which links to this Sigstore log entry with more details, including the Git hash that was used to build the package:

Sigstore is a transparency log maintained by the Open Source Security Foundation (OpenSSF), a sub-project of the Linux Foundation.

uv 0.5.0. The first backwards-incompatible (in minor ways) release after 30 releases without a breaking change.

I found out about this release this morning when I filed an issue about a fiddly usability problem I had encountered with the combo of uv and conda... and learned that the exact problem had already been fixed in the brand new version!

TIL: Using uv to develop Python command-line applications.

I've been increasingly using uv to try out new software (via uvx) and experiment with new ideas, but I hadn't quite figured out the right way to use it for developing my own projects.

It turns out I was missing a few things - in particular the fact that there's no need to use uv pip at all when working with a local development environment, you can get by entirely on uv run (and maybe uv sync --extra test to install test dependencies) with no direct invocations of uv pip at all.

I bounced a few questions off Charlie Marsh and filled in the missing gaps - this TIL shows my new uv-powered process for hacking on Python CLI apps built using Click and my simonw/click-app cookiecutter template.

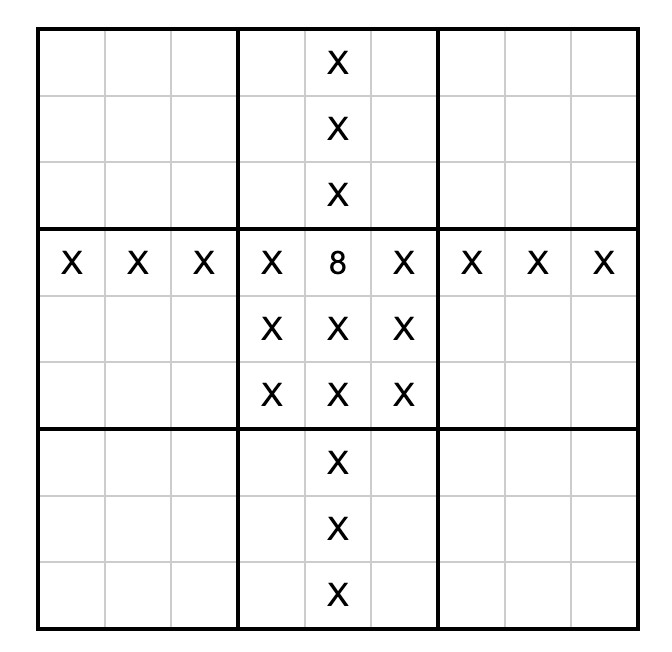

sudoku-in-python-packaging (via) Absurdly clever hack by konsti: solve a Sudoku puzzle entirely using the Python package resolver!

First convert the puzzle into a requirements.in file representing the current state of the board:

git clone https://github.com/konstin/sudoku-in-python-packaging

cd sudoku-in-python-packaging

echo '5,3,_,_,7,_,_,_,_
6,_,_,1,9,5,_,_,_
_,9,8,_,_,_,_,6,_
8,_,_,_,6,_,_,_,3
4,_,_,8,_,3,_,_,1
7,_,_,_,2,_,_,_,6
_,6,_,_,_,_,2,8,_
_,_,_,4,1,9,_,_,5
_,_,_,_,8,_,_,7,9' > sudoku.csv

python csv_to_requirements.py sudoku.csv requirements.in

That requirements.in file now contains lines like this for each of the filled-in cells:

sudoku_0_0 == 5

sudoku_1_0 == 3

sudoku_4_0 == 7

Then run uv pip compile to convert that into a fully fleshed out requirements.txt file that includes all of the resolved dependencies, based on the wheel files in the packages/ folder:

uv pip compile \
  --find-links packages/ \
  --no-annotate \
  --no-header \
  requirements.in > requirements.txt

The contents of requirements.txt is now the fully solved board:

sudoku-0-0==5

sudoku-0-1==6

sudoku-0-2==1

sudoku-0-3==8

...

The trick is the 729 wheel files in packages/ - each with a name like sudoku_3_4-8-py3-none-any.whl. I decompressed that wheel and it included a sudoku_3_4-8.dist-info/METADATA file which started like this:

Name: sudoku_3_4

Version: 8

Metadata-Version: 2.2

Requires-Dist: sudoku_3_0 != 8

Requires-Dist: sudoku_3_1 != 8

Requires-Dist: sudoku_3_2 != 8

Requires-Dist: sudoku_3_3 != 8

...

With a !=8 line for every other cell on the board that cannot contain the number 8 due to the rules of Sudoku (if 8 is in the 3, 4 spot). Visualized:

So the trick here is that the Python dependency resolver (now lightning fast thanks to uv) reads those dependencies and rules out every package version that represents a number in an invalid position. The resulting version numbers represent the cell numbers for the solution.

How much faster? I tried the same thing with the pip-tools pip-compile command:

time pip-compile \
  --find-links packages/ \
  --no-annotate \
  --no-header \
  requirements.in > requirements.txt

That took 17.72s. On the same machine the time uv pip compile ... command took 0.24s.

Update: Here's an earlier implementation of the same idea by Artjoms Iškovs in 2022.
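To show where those != lines come from, here is a Python sketch that generates the exclusions for one cell and value. It is my own reconstruction of the idea, not konsti's generator script: peers and requires_dist are hypothetical names, and the output order differs from the METADATA excerpt above.

```python
def peers(x, y):
    """Return the 20 other cells that share a line or 3x3 box with (x, y)."""
    cells = set()
    for i in range(9):
        cells.add((x, i))  # cells sharing the first index
        cells.add((i, y))  # cells sharing the second index
    box_x, box_y = 3 * (x // 3), 3 * (y // 3)
    for dx in range(3):
        for dy in range(3):
            cells.add((box_x + dx, box_y + dy))  # cells in the same 3x3 box
    cells.discard((x, y))  # a cell does not constrain itself
    return sorted(cells)


def requires_dist(x, y, value):
    """Metadata lines saying no peer of (x, y) may also hold `value`."""
    return [
        f"Requires-Dist: sudoku_{px}_{py} != {value}"
        for px, py in peers(x, y)
    ]


lines = requires_dist(3, 4, 8)
print(len(lines))
print("\n".join(lines[:4]))
```

Nine cells times nine values times nine possible digits gives the 729 wheels: one package per cell, one version per digit, each carrying 20 exclusions like these.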

UV — I am (somewhat) sold

(via)

Oliver Andrich's detailed notes on adopting uv. Oliver has some pretty specific requirements:

I need to have various Python versions installed locally to test my work and my personal projects. Ranging from Python 3.8 to 3.13. [...] I also require decent dependency management in my projects that goes beyond manually editing a pyproject.toml file. Likewise, I am way too accustomed to poetry add .... And I run a number of Python-based tools --- djhtml, poetry, ipython, llm, mkdocs, pre-commit, tox, ...

He's braver than I am!

I started by removing all Python installations, pyenv, pipx and Homebrew from my machine. Rendering me unable to do my work.

Here's a neat trick: first install a specific Python version with uv like this:

uv python install 3.11

Then create an alias to run it like this:

alias python3.11 'uv run --python=3.11 python3'

And install standalone tools with optional extra dependencies like this (a replacement for pipx and pipx inject):

uv tool install --python=3.12 --with mkdocs-material mkdocs

Oliver also links to Anže Pečar's handy guide on using UV with Django.

uv under discussion on Mastodon. Jacob Kaplan-Moss kicked off this fascinating conversation about uv on Mastodon recently. It's worth reading the whole thing, which includes input from a whole range of influential Python community members such as Jeff Triplett, Glyph Lefkowitz, Russell Keith-Magee, Seth Michael Larson, Hynek Schlawack, James Bennett and others. (Mastodon is a pretty great place for keeping up with the Python community these days.)

The key theme of the conversation is that, while uv represents a huge set of potential improvements to the Python ecosystem, it comes with additional risks due to its attachment to a VC-backed company - and its reliance on Rust rather than Python.

Here are a few comments that stood out to me.

As enthusiastic as I am about the direction uv is going, I haven't adopted them anywhere - because I want very much to understand Astral’s intended business model before I hook my wagon to their tools. It's definitely not clear to me how they're going to stay liquid once the VC money runs out. They could get me onboard in a hot second if they published a "This is what we're planning to charge for" blog post.

As much as I hate VC, [...] FOSS projects flame out all the time too. If Frost loses interest, there’s no PDM anymore. Same for Ofek and Hatch(ling).

I fully expect Astral to flame out and us having to fork/take over—it’s the circle of FOSS. To me uv looks like a genius sting to trick VCs into paying to fix packaging. We’ll be better off either way.

Even in the best case, Rust is more expensive and difficult to maintain, not to mention "non-native" to the average customer here. [...] And the difficulty with VC money here is that it can burn out all the other projects in the ecosystem simultaneously, creating a risk of monoculture, where previously, I think we can say that "monoculture" was the least of Python's packaging concerns.

I don’t think y’all quite grok what uv makes so special due to your seniority. The speed is really cool, but the reason Rust is elemental is that it’s one compiled blob that can be used to bootstrap and maintain a Python development. A blob that will never break because someone upgraded Homebrew, ran pip install or any other creative way people found to fuck up their installations. Python has shown to be a terrible tech to maintain Python.

Just dropping in here to say that corporate capture of the Python ecosystem is the #1 keeps-me-up-at-night subject in my community work, so I watch Astral with interest, even if I'm not yet too worried.

I'm reminded of this note from Armin Ronacher, who created Rye and later donated it to uv maintainers Astral:

However having seen the code and what uv is doing, even in the worst possible future this is a very forkable and maintainable thing. I believe that even in case Astral shuts down or were to do something incredibly dodgy licensing wise, the community would be better off than before uv existed.

I'm currently inclined to agree with Armin and Hynek: while the risk of corporate capture for a crucial aspect of the Python packaging and onboarding ecosystem is a legitimate concern, the amount of progress that has been made here in a relatively short time combined with the open license and quality of the underlying code keeps me optimistic that uv will be a net positive for Python overall.

Update: uv creator Charlie Marsh joined the conversation:

I don't want to charge people money to use our tools, and I don't want to create an incentive structure whereby our open source offerings are competing with any commercial offerings (which is what you see with a lot of hosted-open-source-SaaS business models).

What I want to do is build software that vertically integrates with our open source tools, and sell that software to companies that are already using Ruff, uv, etc. Alternatives to things that companies already pay for today.

An example of what this might look like (we may not do this, but it's helpful to have a concrete example of the strategy) would be something like an enterprise-focused private package registry. A lot of big companies use uv. We spend time talking to them. They all spend money on private package registries, and have issues with them. We could build a private registry that integrates well with uv, and sell it to those companies. [...]

But the core of what I want to do is this: build great tools, hopefully people like them, hopefully they grow, hopefully companies adopt them; then sell software to those companies that represents the natural next thing they need when building with Python. Hopefully we can build something better than the alternatives by playing well with our OSS, and hopefully we are the natural choice if they're already using our OSS.

Why I Still Use Python Virtual Environments in Docker (via) Hynek Schlawack argues for using virtual environments even when running Python applications in a Docker container. This argument was most convincing to me:

I'm responsible for dozens of services, so I appreciate the consistency of knowing that everything I'm deploying is in /app, and if it's a Python application, I know it's a virtual environment, and if I run /app/bin/python, I get the virtual environment's Python with my application ready to be imported and run.

Also:

It’s good to use the same tools and primitives in development and in production.

Also worth a look: Hynek's guide to Production-ready Docker Containers with uv, an actively maintained guide that aims to reflect ongoing changes made to uv itself.

light-the-torch is a small utility that wraps pip to ease the installation process for PyTorch distributions like torch, torchvision, torchaudio, and so on as well as third-party packages that depend on them. It auto-detects compatible CUDA versions from the local setup and installs the correct PyTorch binaries without user interference.

Use it like this:

pip install light-the-torch

ltt install torch

It works by wrapping and patching pip.

#!/usr/bin/env -S uv run (via) This is a really neat pattern. Start your Python script like this:

#!/usr/bin/env -S uv run
# /// script
# requires-python = ">=3.12"
# dependencies = [
#   "flask==3.*",
# ]
# ///
import flask
# ...

And now if you chmod 755 it you can run it on any machine with the uv binary installed like this: ./app.py - and it will automatically create its own isolated environment and run itself with the correct installed dependencies and even the correctly installed Python version.

All of that from putting uv run in the shebang line!

Code from this PR by David Laban.

uv: Unified Python packaging (via) Huge new release from the Astral team today. uv 0.3.0 adds a bewildering array of new features, as part of their attempt to build "Cargo, for Python".

It's going to take a while to fully absorb all of this. Some of the key new features are:

- uv tool run cowsay, aliased to uvx cowsay - a pipx alternative that runs a tool in its own dedicated virtual environment (tucked away in ~/Library/Caches/uv), installing it if it's not present. It has a neat --with option for installing extras - I tried that just now with uvx --with datasette-cluster-map datasette and it ran Datasette with the datasette-cluster-map plugin installed.

- Project management, as an alternative to tools like Poetry and PDM. uv init creates a pyproject.toml file in the current directory, uv add sqlite-utils then creates and activates a .venv virtual environment, adds the package to that pyproject.toml and adds all of its dependencies to a new uv.lock file (like this one). That uv.lock is described as a universal or cross-platform lockfile that can support locking dependencies for multiple platforms.

- Single-file script execution using uv run myscript.py, where those scripts can define their own dependencies using PEP 723 inline metadata. These dependencies are listed in a specially formatted comment and will be installed into a virtual environment before the script is executed.

- Python version management similar to pyenv. The new uv python list command lists all Python versions available on your system (including detecting various system and Homebrew installations), and uv python install 3.13 can then install a uv-managed Python using Gregory Szorc's invaluable python-build-standalone releases.

It's all accompanied by new and very thorough documentation.

The paint isn't even dry on this stuff - it's only been out for a few hours - but this feels very promising to me. The idea that you can install uv (a single Rust binary) and then start running all of these commands to manage Python installations and their dependencies is very appealing.

If you’re wondering about the relationship between this and Rye - another project that Astral adopted solving a subset of these problems - this forum thread clarifies that they intend to continue maintaining Rye but are eager for uv to work as a full replacement.

Writing your pyproject.toml (via) When I started exploring pyproject.toml a year ago I had trouble finding comprehensive documentation about what should go in that file.

Since then the Python Packaging Guide split out this page, which is exactly what I was looking for back then.

cibuildwheel 2.20.0 now builds Python 3.13 wheels by default (via)

CPython 3.13 wheels are now built by default […] This release includes CPython 3.13.0rc1, which is guaranteed to be ABI compatible with the final release.

cibuildwheel is an underrated but crucial piece of the overall Python ecosystem.

Python wheel packages that include binary compiled components - packages with C extensions for example - need to be built multiple times, once for each combination of Python version, operating system and architecture.

A package like Adam Johnson’s time-machine - which bundles a 500 line C extension - can end up with 55 different wheel files with names like time_machine-2.15.0-cp313-cp313-win_arm64.whl and time_machine-2.15.0-cp38-cp38-musllinux_1_2_x86_64.whl.

Without these wheels, anyone who runs pip install time-machine will need to have a working C compiler toolchain on their machine for the command to work.

cibuildwheel solves the problem of building all of those wheels for all of those different platforms on the CI provider of your choice. Adam is using it in GitHub Actions for time-machine, and his .github/workflows/build.yml file neatly demonstrates how concise the configuration can be once you figure out how to use it.

The first release candidate of Python 3.13 hit its target release date of August 1st, and the final version looks on schedule for release on the 1st of October. Since this rc should be binary compatible with the final build now is the time to start shipping those wheels to PyPI.
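Those wheel filenames encode the compatibility information directly. Here is a short Python sketch that splits one into its parts, following the name-version-python-abi-platform layout (with an optional build tag) from the wheel specification. The parse_wheel_filename helper is a hypothetical name for illustration; real tooling should use an existing library such as packaging instead.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename):
    """Split a wheel filename into its dash-separated components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"Not a wheel filename: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        distribution, version, python_tag, abi_tag, platform_tag = parts
        build = None
    elif len(parts) == 6:
        # The optional build tag sits between the version and the Python tag
        distribution, version, build, python_tag, abi_tag, platform_tag = parts
    else:
        raise ValueError(f"Unexpected number of components in {filename!r}")
    return WheelName(distribution, version, build, python_tag, abi_tag, platform_tag)


for name in (
    "time_machine-2.15.0-cp313-cp313-win_arm64.whl",
    "time_machine-2.15.0-cp38-cp38-musllinux_1_2_x86_64.whl",
):
    print(parse_wheel_filename(name))
```

Each distinct combination of Python tag, ABI tag and platform tag is a separate build, which is how a single release can end up with dozens of wheel files.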

npm install everything, and the complete and utter chaos that follows (via) Here’s an experiment which went really badly wrong: a team of mostly-students decided to see if it was possible to install every package from npm (all 2.5 million of them) on the same machine. As part of that experiment they created and published their own npm package that depended on every other package in the registry.

Unfortunately, in response to the leftpad incident a few years ago npm had introduced a policy that a package cannot be removed from the registry if there exists at least one other package that lists it as a dependency. The new “everything” package inadvertently prevented all 2.5m packages—including many that had no other dependencies—from ever being removed!

Python packaging must be getting better—a datapoint (via) Luke Plant reports on a recent project he developed on Linux using a requirements.txt file and some complex binary dependencies—Qt5 and VTK—and when he tried to run it on Windows... it worked! No modifications required.

I think Python’s packaging system has never been more effective... provided you know how to use it. The learning curve is still too high, which I think accounts for the bulk of complaints about it today.

2023

My User Experience Porting Off setup.py (via) PyOxidizer maintainer Gregory Szorc provides a detailed account of his experience trying to figure out how to switch from setup.py to pyproject.toml for his zstandard Python package.

This kind of detailed usability feedback is incredibly valuable for project maintainers, especially when the user encountered this many different frustrations along the way. It’s like the written version of a detailed usability testing session.

Dependency Management Data (via) This is a really neat CLI tool by Jamie Tanna, built using Go and SQLite but with a feature that embeds a Datasette instance (literally shelling out to start the process running from within the Go application) to provide an interface for browsing the resulting database.

It addresses the challenge of keeping track of the dependencies used across an organization, by gathering them into a SQLite database from a variety of different sources—currently Dependabot, Renovate and some custom AWS tooling.

The “Example” page links to a live Datasette instance and includes video demos of the tool in action.

Rye.

Armin Ronacher's take on a Python packaging tool. There are a lot of interesting ideas in this one - it's written in Rust, configured using pyproject.toml and has some very strong opinions, including completely hiding pip from view and insisting you use rye add package instead. Notably, it doesn't use the system Python at all: instead, it downloads a pre-compiled standalone Python from Gregory Szorc's python-build-standalone project - the same approach I used for the Datasette Desktop Electron app.

Armin warns that this is just an exploration, with no guarantees of future maintenance - and even has an issue open titled Should Rye exist?

Introducing PyPI Organizations. Launched at PyCon US today: Organizations allow packages on the Python Package Index to be owned by a group, not an individual user account. “We’re making organizations available to community projects for free, forever, and to corporate projects for a small fee.”—this is the first revenue generating PyPI feature.

2022

Boring Python: code quality. James Bennett provides an opinionated guide to setting up Python tools for linting, code formatting and other code quality concerns. Of particular interest to me is his section on packaging checks, which introduces a whole bunch of new-to-me tools that can help avoid accidentally shipping broken packages to PyPI.

How to create a Python package in 2022 (via) Fantastic tutorial on modern Python packaging by Rodrigo Girão Serrão. I’ve been meaning to figure out Poetry for a while now and this gave me exactly the information I needed to start figuring it out. Great coverage of GitHub Actions, Tox and pre-commit as well.