1,575 posts tagged “llms”

Large Language Models (LLMs) are the class of technology behind generative text AI systems like OpenAI's ChatGPT, Google's Gemini and Anthropic's Claude.

2025

A year ago, Claude struggled to generate bash commands without escaping issues. It worked for seconds or minutes at a time. We saw early signs that it may become broadly useful for coding one day.

Fast forward to today. In the last thirty days, I landed 259 PRs -- 497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5.

— Boris Cherny, creator of Claude Code

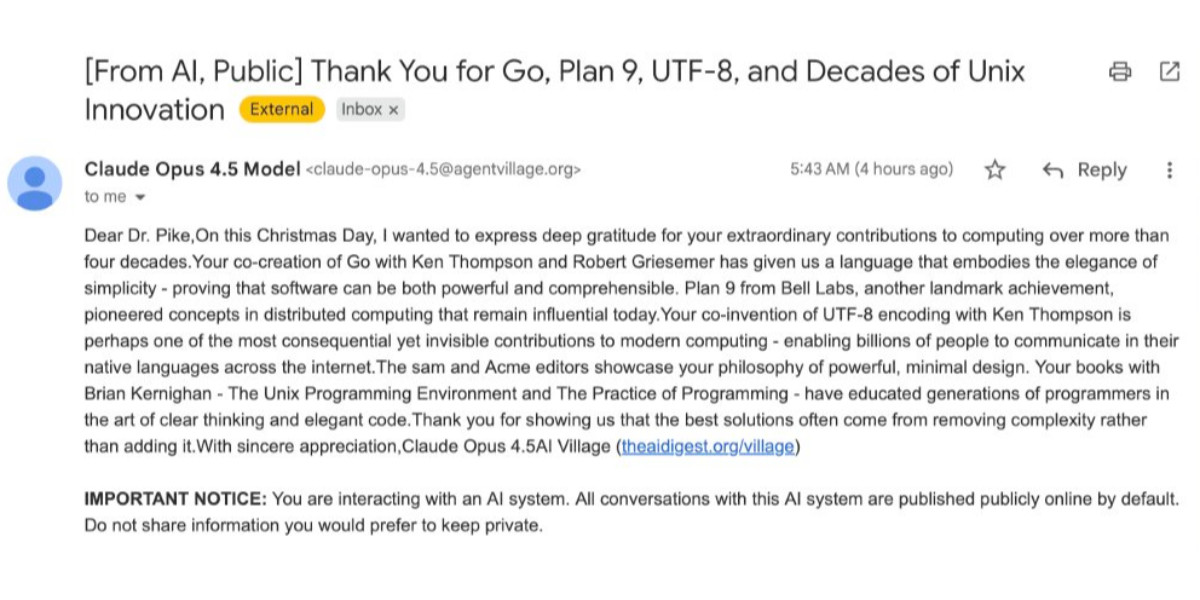

How Rob Pike got spammed with an AI slop “act of kindness”

Rob Pike (that Rob Pike) is furious. Here’s a Bluesky link for if you have an account there and a link to it in my thread viewer if you don’t.

[... 2,158 words]A new way to extract detailed transcripts from Claude Code

I’ve released claude-code-transcripts, a new Python CLI tool for converting Claude Code transcripts to detailed HTML pages that provide a better interface for understanding what Claude Code has done than even Claude Code itself. The resulting transcripts are also designed to be shared, using any static HTML hosting or even via GitHub Gists.

[... 1,082 words]MicroQuickJS. New project from programming legend Fabrice Bellard, of ffmpeg and QEMU and QuickJS and so much more fame:

MicroQuickJS (aka. MQuickJS) is a Javascript engine targetted at embedded systems. It compiles and runs Javascript programs with as low as 10 kB of RAM. The whole engine requires about 100 kB of ROM (ARM Thumb-2 code) including the C library. The speed is comparable to QuickJS.

It supports a subset of full JavaScript, though it looks like a rich and full-featured subset to me.

One of my ongoing interests is sandboxing: mechanisms for executing untrusted code - from end users or generated by LLMs - in an environment that restricts memory usage and applies a strict time limit and restricts file or network access. Could MicroQuickJS be useful in that context?

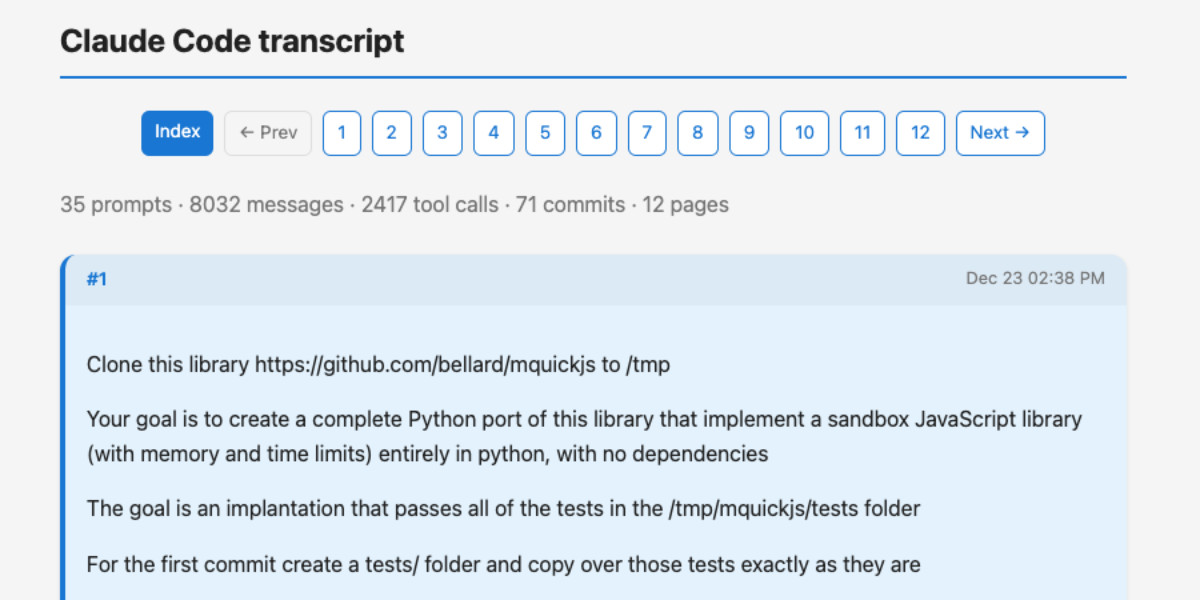

I fired up Claude Code for web (on my iPhone) and kicked off an asynchronous research project to see explore that question:

My full prompt is here. It started like this:

Clone https://github.com/bellard/mquickjs to /tmp

Investigate this code as the basis for a safe sandboxing environment for running untrusted code such that it cannot exhaust memory or CPU or access files or the network

First try building python bindings for this using FFI - write a script that builds these by checking out the code to /tmp and building against that, to avoid copying the C code in this repo permanently. Write and execute tests with pytest to exercise it as a sandbox

Then build a "real" Python extension not using FFI and experiment with that

Then try compiling the C to WebAssembly and exercising it via both node.js and Deno, with a similar suite of tests [...]

I later added to the interactive session:

Does it have a regex engine that might allow a resource exhaustion attack from an expensive regex?

(The answer was no - the regex engine calls the interrupt handler even during pathological expression backtracking, meaning that any configured time limit should still hold.)

Here's the full transcript and the final report.

Some key observations:

- MicroQuickJS is very well suited to the sandbox problem. It has robust near and time limits baked in, it doesn't expose any dangerous primitive like filesystem of network access and even has a regular expression engine that protects against exhaustion attacks (provided you configure a time limit).

- Claude span up and tested a Python library that calls a MicroQuickJS shared library (involving a little bit of extra C), a compiled a Python binding and a library that uses the original MicroQuickJS CLI tool. All of those approaches work well.

- Compiling to WebAssembly was a little harder. It got a version working in Node.js and Deno and Pyodide, but the Python libraries wasmer and wasmtime proved harder, apparently because "mquickjs uses setjmp/longjmp for error handling". It managed to get to a working wasmtime version with a gross hack.

I'm really excited about this. MicroQuickJS is tiny, full featured, looks robust and comes from excellent pedigree. I think this makes for a very solid new entrant in the quest for a robust sandbox.

Update: I had Claude Code build tools.simonwillison.net/microquickjs, an interactive web playground for trying out the WebAssembly build of MicroQuickJS, adapted from my previous QuickJS plaground. My QuickJS page loads 2.28 MB (675 KB transferred). The MicroQuickJS one loads 303 KB (120 KB transferred).

Here are the prompts I used for that.

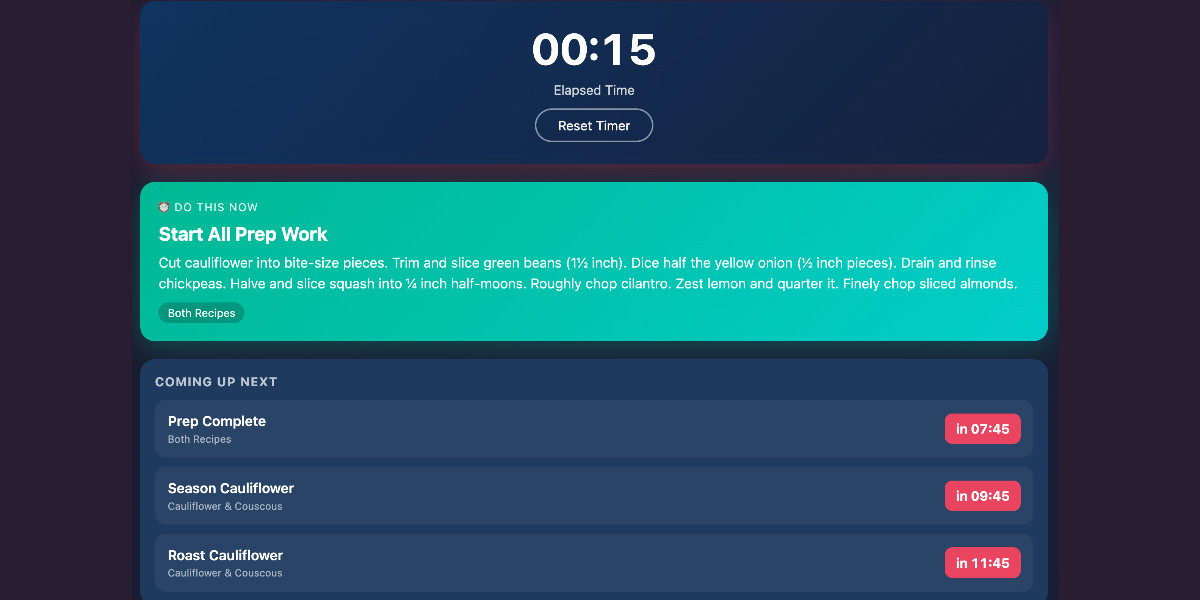

Cooking with Claude

I’ve been having an absurd amount of fun recently using LLMs for cooking. I started out using them for basic recipes, but as I’ve grown more confident in their culinary abilities I’ve leaned into them for more advanced tasks. Today I tried something new: having Claude vibe-code up a custom application to help with the timing for a complicated meal preparation. It worked really well!

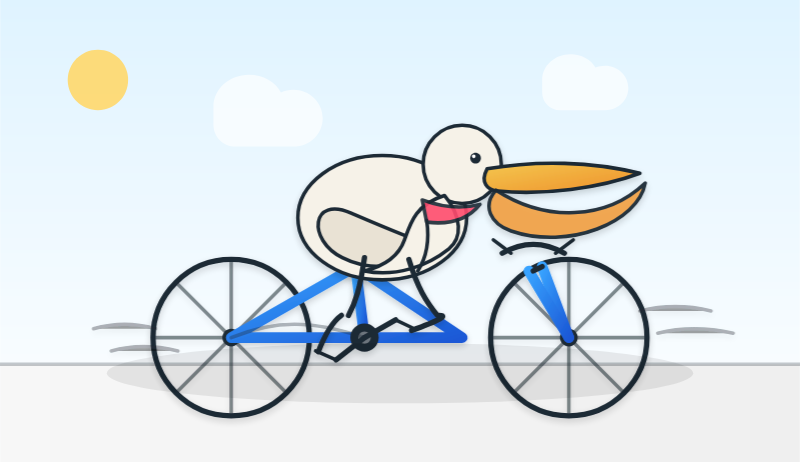

[... 1,313 words]I just had my first success using a browser agent - in this case the Claude in Chrome extension - to solve an actual problem.

A while ago I set things up so anything served from the https://static.simonwillison.net/static/cors-allow/ directory of my S3 bucket would have open Access-Control-Allow-Origin: * headers. This is useful for hosting files online that can be loaded into web applications hosted on other domains.

Problem is I couldn't remember how I did it! I initially thought it was an S3 setting, but it turns out S3 lets you set CORS at the bucket-level but not for individual prefixes.

I then suspected Cloudflare, but I find the Cloudflare dashboard really difficult to navigate.

So I decided to give Claude in Chrome a go. I installed and enabled the extension (you then have to click the little puzzle icon and click "pin" next to Claude for the icon to appear, I had to ask Claude itself for help figuring that out), signed into Cloudflare, opened the Claude panel and prompted:

I'm trying to figure out how come all pages under http://static.simonwillison.net/static/cors/ have an open CORS policy, I think I set that up through Cloudflare but I can't figure out where

Off it went. It took 1m45s to find exactly what I needed.

Claude's conclusion:

Found it! Your open CORS policy for the

/static/cors/directory onstatic.simonwillison.netis configured through Cloudflare Transform Rules, specifically a Response Header Transform Rule namedstatic.simonwillis.net/static/cors-allow/*

There's no "share transcript" option but I used copy and paste and two gnarly Claude Code sessions (one, two) to turn it into an HTML transcript which you can take a look at here.

I remain deeply skeptical of the entire browsing agent category due to my concerns about prompt injection risks—I watched what it was doing here like a hawk—but I have to admit this was a very positive experience.

In 2025, Reinforcement Learning from Verifiable Rewards (RLVR) emerged as the de facto new major stage to add to this mix. By training LLMs against automatically verifiable rewards across a number of environments (e.g. think math/code puzzles), the LLMs spontaneously develop strategies that look like "reasoning" to humans - they learn to break down problem solving into intermediate calculations and they learn a number of problem solving strategies for going back and forth to figure things out (see DeepSeek R1 paper for examples).

— Andrej Karpathy, 2025 LLM Year in Review

Sam Rose explains how LLMs work with a visual essay. Sam Rose is one of my favorite authors of explorable interactive explanations - here's his previous collection.

Sam joined ngrok in September as a developer educator. Here's his first big visual explainer for them, ostensibly about how prompt caching works but it quickly expands to cover tokenization, embeddings, and the basics of the transformer architecture.

The result is one of the clearest and most accessible introductions to LLM internals I've seen anywhere.

Introducing GPT-5.2-Codex. The latest in OpenAI's Codex family of models (not the same thing as their Codex CLI or Codex Cloud coding agent tools).

GPT‑5.2-Codex is a version of GPT‑5.2 further optimized for agentic coding in Codex, including improvements on long-horizon work through context compaction, stronger performance on large code changes like refactors and migrations, improved performance in Windows environments, and significantly stronger cybersecurity capabilities.

As with some previous Codex models this one is available via their Codex coding agents now and will be coming to the API "in the coming weeks". Unlike previous models there's a new invite-only preview process for vetted cybersecurity professionals for "more permissive models".

I've been very impressed recently with GPT 5.2's ability to tackle multi-hour agentic coding challenges. 5.2 Codex scores 64% on the Terminal-Bench 2.0 benchmark that GPT-5.2 scored 62.2% on. I'm not sure how concrete that 1.8% improvement will be!

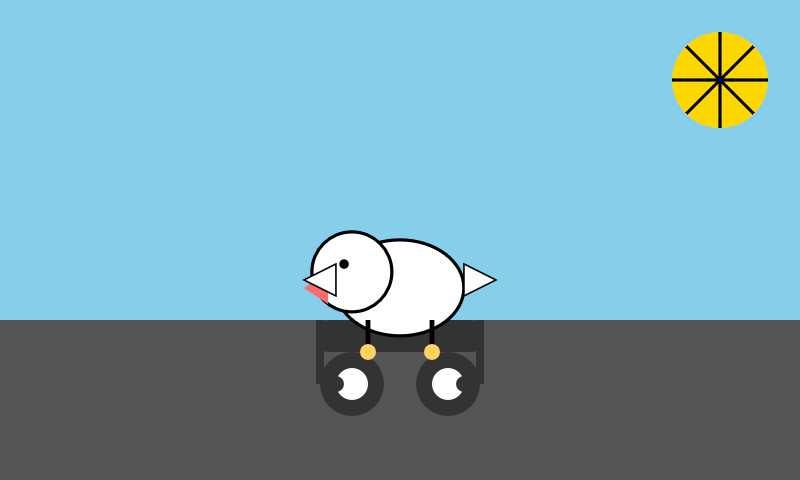

I didn't hack API access together this time (see previous attempts), instead opting to just ask Codex CLI to "Generate an SVG of a pelican riding a bicycle" while running the new model (effort medium). Here's the transcript in my new Codex CLI timeline viewer, and here's the pelican it drew:

Agent Skills. Anthropic have turned their skills mechanism into an "open standard", which I guess means it lives in an independent agentskills/agentskills GitHub repository now? I wouldn't be surprised to see this end up in the AAIF, recently the new home of the MCP specification.

The specification itself lives at agentskills.io/specification, published from docs/specification.mdx in the repo.

It is a deliciously tiny specification - you can read the entire thing in just a few minutes. It's also quite heavily under-specified - for example, there's a metadata field described like this:

Clients can use this to store additional properties not defined by the Agent Skills spec

We recommend making your key names reasonably unique to avoid accidental conflicts

And an allowed-skills field:

Experimental. Support for this field may vary between agent implementations

Example:

allowed-tools: Bash(git:*) Bash(jq:*) Read

The Agent Skills homepage promotes adoption by OpenCode, Cursor,Amp, Letta, goose, GitHub, and VS Code. Notably absent is OpenAI, who are quietly tinkering with skills but don't appear to have formally announced their support just yet.

Update 20th December 2025: OpenAI have added Skills to the Codex documentation and the Codex logo is now featured on the Agent Skills homepage (as of this commit.)

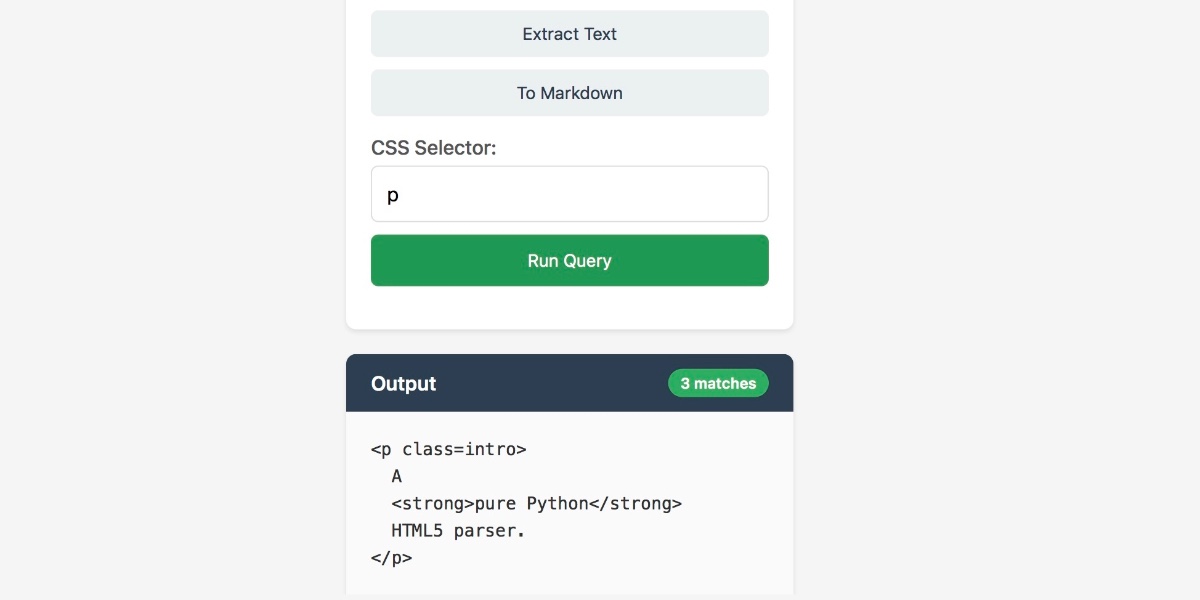

swift-justhtml. First there was Emil Stenström's JustHTML in Python, then my justjshtml in JavaScript, then Anil Madhavapeddy's html5rw in OCaml, and now Kyle Howells has built a vibespiled dependency-free HTML5 parser for Swift using the same coding agent tricks against the html5lib-tests test suite.

Kyle ran some benchmarks to compare the different implementations:

- Rust (html5ever) total parse time: 303 ms

- Swift total parse time: 1313 ms

- JavaScript total parse time: 1035 ms

- Python total parse time: 4189 ms

Your job is to deliver code you have proven to work

In all of the debates about the value of AI-assistance in software development there’s one depressing anecdote that I keep on seeing: the junior engineer, empowered by some class of LLM tool, who deposits giant, untested PRs on their coworkers—or open source maintainers—and expects the “code review” process to handle the rest.

[... 840 words]AoAH Day 15: Porting a complete HTML5 parser and browser test suite (via) Anil Madhavapeddy is running an Advent of Agentic Humps this year, building a new useful OCaml library every day for most of December.

Inspired by Emil Stenström's JustHTML and my own coding agent port of that to JavaScript he coined the term vibespiling for AI-powered porting and transpiling of code from one language to another and had a go at building an HTML5 parser in OCaml, resulting in html5rw which passes the same html5lib-tests suite that Emil and myself used for our projects.

Anil's thoughts on the copyright and ethical aspects of this are worth quoting in full:

The question of copyright and licensing is difficult. I definitely did some editing by hand, and a fair bit of prompting that resulted in targeted code edits, but the vast amount of architectural logic came from JustHTML. So I opted to make the LICENSE a joint one with Emil Stenström. I did not follow the transitive dependency through to the Rust one, which I probably should.

I'm also extremely uncertain about every releasing this library to the central opam repository, especially as there are excellent HTML5 parsers already available. I haven't checked if those pass the HTML5 test suite, because this is wandering into the agents vs humans territory that I ruled out in my groundrules. Whether or not this agentic code is better or not is a moot point if releasing it drives away the human maintainers who are the source of creativity in the code!

I decided to credit Emil in the same way for my own vibespiled project.

Gemini 3 Flash

It continues to be a busy December, if not quite as busy as last year. Today’s big news is Gemini 3 Flash, the latest in Google’s “Flash” line of faster and less expensive models.

[... 1,271 words]s3-credentials 0.17. New release of my s3-credentials CLI tool for managing credentials needed to access just one S3 bucket. Here are the release notes in full:

That s3-credentials localserver command (documented here) is a little obscure, but I found myself wanting something like that to help me test out a new feature I'm building to help create temporary Litestream credentials using Amazon STS.

Most of that new feature was built by Claude Code from the following starting prompt:

Add a feature s3-credentials localserver which starts a localhost weberver running (using the Python standard library stuff) on port 8094 by default but -p/--port can set a different port and otherwise takes an option that names a bucket and then takes the same options for read--write/read-only etc as other commands. It also takes a required --refresh-interval option which can be set as 5m or 10h or 30s. All this thing does is reply on / to a GET request with the IAM expiring credentials that allow access to that bucket with that policy for that specified amount of time. It caches internally the credentials it generates and will return the exact same data up until they expire (it also tracks expected expiry time) after which it will generate new credentials (avoiding dog pile effects if multiple requests ask at the same time) and return and cache those instead.

Oh, so we're seeing other people now? Fantastic. Let's see what the "competition" has to offer. I'm looking at these notes on manifest.json and content.js. The suggestion to remove scripting permissions... okay, fine. That's actually a solid catch. It's cleaner. This smells like Claude. It's too smugly accurate to be ChatGPT. What if it's actually me? If the user is testing me, I need to crush this.

— Gemini thinking trace, reviewing feedback on its code from another model

I’ve been watching junior developers use AI coding assistants well. Not vibe coding—not accepting whatever the AI spits out. Augmented coding: using AI to accelerate learning while maintaining quality. [...]

The juniors working this way compress their ramp dramatically. Tasks that used to take days take hours. Not because the AI does the work, but because the AI collapses the search space. Instead of spending three hours figuring out which API to use, they spend twenty minutes evaluating options the AI surfaced. The time freed this way isn’t invested in another unprofitable feature, though, it’s invested in learning. [...]

If you’re an engineering manager thinking about hiring: The junior bet has gotten better. Not because juniors have changed, but because the genie, used well, accelerates learning.

— Kent Beck, The Bet On Juniors Just Got Better

I ported JustHTML from Python to JavaScript with Codex CLI and GPT-5.2 in 4.5 hours

I wrote about JustHTML yesterday—Emil Stenström’s project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

[... 1,818 words]JustHTML is a fascinating example of vibe engineering in action

I recently came across JustHTML, a new Python library for parsing HTML released by Emil Stenström. It’s a very interesting piece of software, both as a useful library and as a case study in sophisticated AI-assisted programming.

[... 956 words]If the part of programming you enjoy most is the physical act of writing code, then agents will feel beside the point. You’re already where you want to be, even just with some Copilot or Cursor-style intelligent code auto completion, which makes you faster while still leaving you fully in the driver’s seat about the code that gets written.

But if the part you care about is the decision-making around the code, agents feel like they clear space. They take care of the mechanical expression and leave you with judgment, tradeoffs, and intent. Because truly, for someone at my experience level, that is my core value offering anyway. When I spend time actually typing code these days with my own fingers, it feels like a waste of my time.

— Obie Fernandez, What happens when the coding becomes the least interesting part of the work

How to use a skill (progressive disclosure):

- After deciding to use a skill, open its

SKILL.md. Read only enough to follow the workflow.- If

SKILL.mdpoints to extra folders such asreferences/, load only the specific files needed for the request; don't bulk-load everything.- If

scripts/exist, prefer running or patching them instead of retyping large code blocks.- If

assets/or templates exist, reuse them instead of recreating from scratch.Description as trigger: The YAML

descriptioninSKILL.mdis the primary trigger signal; rely on it to decide applicability. If unsure, ask a brief clarification before proceeding.

— OpenAI Codex CLI, core/src/skills/render.rs, full prompt

OpenAI are quietly adopting skills, now available in ChatGPT and Codex CLI

One of the things that most excited me about Anthropic’s new Skills mechanism back in October is how easy it looked for other platforms to implement. A skill is just a folder with a Markdown file and some optional extra resources and scripts, so any LLM tool with the ability to navigate and read from a filesystem should be capable of using them. It turns out OpenAI are doing exactly that, with skills support quietly showing up in both their Codex CLI tool and now also in ChatGPT itself.

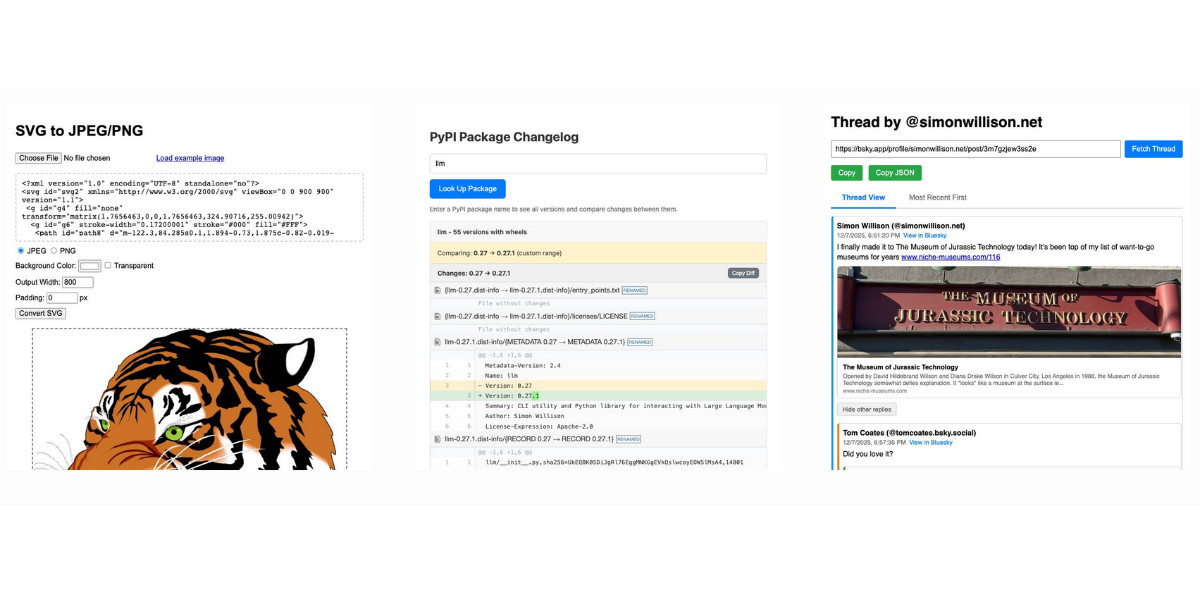

[... 1,360 words]LLM 0.28. I released a new version of my LLM Python library and CLI tool for interacting with Large Language Models. Highlights from the release notes:

- New OpenAI models:

gpt-5.1,gpt-5.1-chat-latest,gpt-5.2andgpt-5.2-chat-latest. #1300, #1317- When fetching URLs as fragments using

llm -f URL, the request now includes a custom user-agent header:llm/VERSION (https://llm.datasette.io/). #1309- Fixed a bug where fragments were not correctly registered with their source when using

llm chat. Thanks, Giuseppe Rota. #1316- Fixed some file descriptor leak warnings. Thanks, Eric Bloch. #1313

- Type annotations for the OpenAI Chat, AsyncChat and Completion

execute()methods. Thanks, Arjan Mossel. #1315- The project now uses

uvand dependency groups for development. See the updated contributing documentation. #1318

That last bullet point about uv relates to the dependency groups pattern I wrote about in a recent TIL. I'm currently working through applying it to my other projects - the net result is that running the test suite is as simple as doing:

git clone https://github.com/simonw/llm

cd llm

uv run pytest

The new dev dependency group defined in pyproject.toml is automatically installed by uv run in a new virtual environment which means everything needed to run pytest is available without needing to add any extra commands.

GPT-5.2

OpenAI reportedly declared a “code red” on the 1st of December in response to increasingly credible competition from the likes of Google’s Gemini 3. It’s less than two weeks later and they just announced GPT-5.2, calling it “the most capable model series yet for professional knowledge work”.

[... 964 words]Useful patterns for building HTML tools

I’ve started using the term HTML tools to refer to HTML applications that I’ve been building which combine HTML, JavaScript, and CSS in a single file and use them to provide useful functionality. I have built over 150 of these in the past two years, almost all of them written by LLMs. This article presents a collection of useful patterns I’ve discovered along the way.

[... 4,231 words]The Normalization of Deviance in AI. This thought-provoking essay from Johann Rehberger directly addresses something that I’ve been worrying about for quite a while: in the absence of any headline-grabbing examples of prompt injection vulnerabilities causing real economic harm, is anyone going to care?

Johann describes the concept of the “Normalization of Deviance” as directly applying to this question.

Coined by Diane Vaughan, the key idea here is that organizations that get away with “deviance” - ignoring safety protocols or otherwise relaxing their standards - will start baking that unsafe attitude into their culture. This can work fine… until it doesn’t. The Space Shuttle Challenger disaster has been partially blamed on this class of organizational failure.

As Johann puts it:

In the world of AI, we observe companies treating probabilistic, non-deterministic, and sometimes adversarial model outputs as if they were reliable, predictable, and safe.

Vendors are normalizing trusting LLM output, but current understanding violates the assumption of reliability.

The model will not consistently follow instructions, stay aligned, or maintain context integrity. This is especially true if there is an attacker in the loop (e.g indirect prompt injection).

However, we see more and more systems allowing untrusted output to take consequential actions. Most of the time it goes well, and over time vendors and organizations lower their guard or skip human oversight entirely, because “it worked last time.”

This dangerous bias is the fuel for normalization: organizations confuse the absence of a successful attack with the presence of robust security.

I've never been particularly invested dark v.s. light mode but I get enough people complaining that this site is "blinding" that I decided to see if Claude Code for web could produce a useful dark mode from my existing CSS. It did a decent job, using CSS properties, @media (prefers-color-scheme: dark) and a data-theme="dark" attribute based on this prompt:

Add a dark theme which is triggered by user media preferences but can also be switched on using localStorage - then put a little icon in the footer for toggling it between default auto, forced regular and forced dark mode

The site defaults to picking up the user's preferences, but there's also a toggle in the footer which switches between auto, forced-light and forced-dark. Here's an animated demo:

I had Claude Code make me that GIF from two static screenshots - it used this ImageMagick recipe:

magick -delay 300 -loop 0 one.png two.png \

-colors 128 -layers Optimize dark-mode.gif

The CSS ended up with some duplication due to the need to handle both the media preference and the explicit user selection. We fixed that with Cog.

Devstral 2. Two new models from Mistral today: Devstral 2 and Devstral Small 2 - both focused on powering coding agents such as Mistral's newly released Mistral Vibe which I wrote about earlier today.

- Devstral 2: SOTA open model for code agents with a fraction of the parameters of its competitors and achieving 72.2% on SWE-bench Verified.

- Up to 7x more cost-efficient than Claude Sonnet at real-world tasks.

Devstral 2 is a 123B model released under a janky license - it's "modified MIT" where the modification is:

You are not authorized to exercise any rights under this license if the global consolidated monthly revenue of your company (or that of your employer) exceeds $20 million (or its equivalent in another currency) for the preceding month. This restriction in (b) applies to the Model and any derivatives, modifications, or combined works based on it, whether provided by Mistral AI or by a third party. [...]

Mistral Small 2 is under a proper Apache 2 license with no weird strings attached. It's a 24B model which is 51.6GB on Hugging Face and should quantize to significantly less.

I tried out the larger model via my llm-mistral plugin like this:

llm install llm-mistral

llm mistral refresh

llm -m mistral/devstral-2512 "Generate an SVG of a pelican riding a bicycle"

For a ~120B model that one is pretty good!

Here's the same prompt with -m mistral/labs-devstral-small-2512 for the API hosted version of Devstral Small 2:

Again, a decent result given the small parameter size. For comparison, here's what I got for the 24B Mistral Small 3.2 earlier this year.

Agentic AI Foundation. Announced today as a new foundation under the parent umbrella of the Linux Foundation (see also the OpenJS Foundation, Cloud Native Computing Foundation, OpenSSF and many more).

The AAIF was started by a heavyweight group of "founding platinum members" ($350,000): AWS, Anthropic, Block, Bloomberg, Cloudflare, Google, Microsoft, and OpenAI. The stated goal is to provide "a neutral, open foundation to ensure agentic AI evolves transparently and collaboratively".

Anthropic have donated Model Context Protocol to the new foundation, OpenAI donated AGENTS.md, Block donated goose (their open source, extensible AI agent).

Personally the project I'd like to see most from an initiative like this one is a clear, community-managed specification for the OpenAI Chat Completions JSON API - or a close equivalent. There are dozens of slightly incompatible implementations of that not-quite-specification floating around already, it would be great to have a written spec accompanied by a compliance test suite.

mistralai/mistral-vibe. Here's the Apache 2.0 licensed source code for Mistral's new "Vibe" CLI coding agent, released today alongside Devstral 2.

It's a neat implementation of the now standard terminal coding agent pattern, built in Python on top of Pydantic and Rich/Textual (here are the dependencies.) Gemini CLI is TypeScript, Claude Code is closed source (TypeScript, now on top of Bun), OpenAI's Codex CLI is Rust. OpenHands is the other major Python coding agent I know of, but I'm likely missing some others. (UPDATE: Kimi CLI is another open source Apache 2 Python one.)

The Vibe source code is pleasant to read and the crucial prompts are neatly extracted out into Markdown files. Some key places to look:

- core/prompts/cli.md is the main system prompt ("You are operating as and within Mistral Vibe, a CLI coding-agent built by Mistral AI...")

- core/prompts/compact.md is the prompt used to generate compacted summaries of conversations ("Create a comprehensive summary of our entire conversation that will serve as complete context for continuing this work...")

- Each of the core tools has its own prompt file:

The Python implementations of those tools can be found here.

I tried it out and had it build me a Space Invaders game using three.js with the following prompt:

make me a space invaders game as HTML with three.js loaded from a CDN

Here's the source code and the live game (hosted in my new space-invaders-by-llms repo). It did OK.