453 posts tagged “datasette”

Datasette is an open source tool for exploring and publishing data.

2020

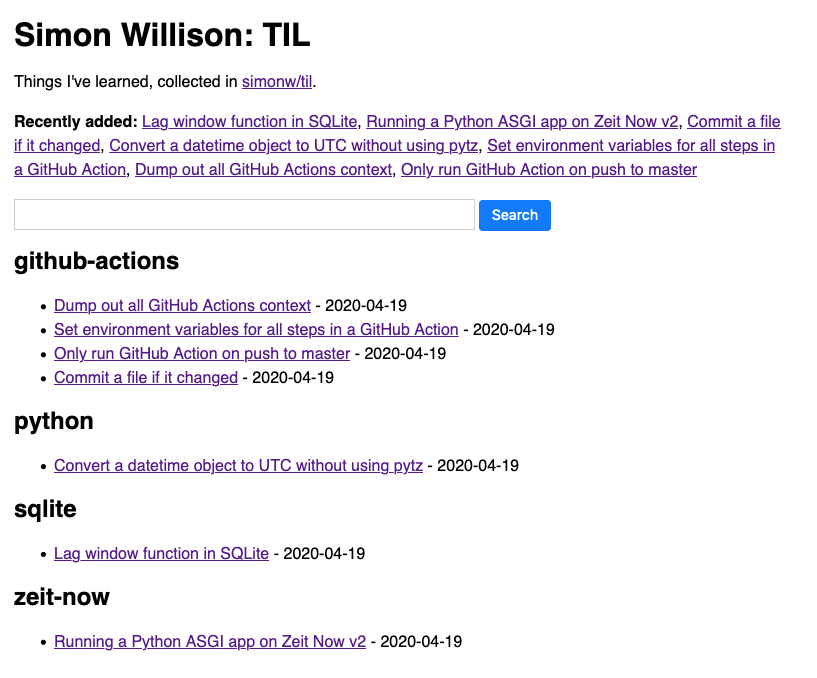

Using a self-rewriting README powered by GitHub Actions to track TILs

I’ve started tracking TILs—Today I Learneds—inspired by this five-year-and-counting collection by Josh Branchaud on GitHub (found via Hacker News). I’m keeping mine in GitHub too, and using GitHub Actions to automatically generate an index page README in the repository and a SQLite-backed search engine.

[... 1,100 words]

Weeknotes: Hacking on 23 different projects

I wrote a lot of code this week: 184 commits over 23 repositories! I’ve also started falling for Zeit Now v2, having found workarounds for some of my biggest problems with it.

[... 901 words]

Goodbye Zeit Now v1, hello datasette-publish-now—and talking to myself in GitHub issues

This week I’ve been mostly dealing with the finally announced shutdown of Zeit Now v1. And having long-winded conversations with myself in GitHub issues.

[... 2,050 words]

Zeit Now v1 to sunset soon: no new deployments from 1st May, total shutdown 7th August. I posted a thread on Twitter with some thoughts. Zeit Now v1 remains the best hosting platform I’ve ever used given my particular tastes. They’ve handled the shutdown very responsibly, but I’m sad to see it go.

Weeknotes: Covid-19, First Python Notebook, more Dogsheep, Tailscale

My covid-19.datasettes.com project publishes information on COVID-19 cases around the world. The project started out using data from Johns Hopkins CSSE, but last week the New York Times started publishing high quality USA county- and state-level daily numbers to their own repository. Here’s the change that added the NY Times data.

[... 993 words]

Making Datasets Fly with Datasette and Fly (via) It’s always exciting to see a Datasette tutorial that wasn’t written by me! This one is great—it shows how to load Central Park Squirrel Census data into a SQLite database, explore it with Datasette and then publish it to the Fly hosting platform using datasette-publish-fly and datasette-cluster-map.

Weeknotes: Datasette 0.39 and many other projects

This week’s theme: Well, I’m not going anywhere. So a ton of progress to report on various projects.

[... 806 words]

datasette-publish-fly (via) Fly is a neat new Docker hosting provider with a very tempting pricing model: Just $2.67/month for their smallest always-on instance, and they give each user $10/month in free credit. datasette-publish-fly is the first plugin I’ve written using the publish_subcommand plugin hook, which allows extra hosting providers to be added as publish targets. Install the plugin and you can run “datasette publish fly data.db” to deploy SQLite databases to your Fly account.

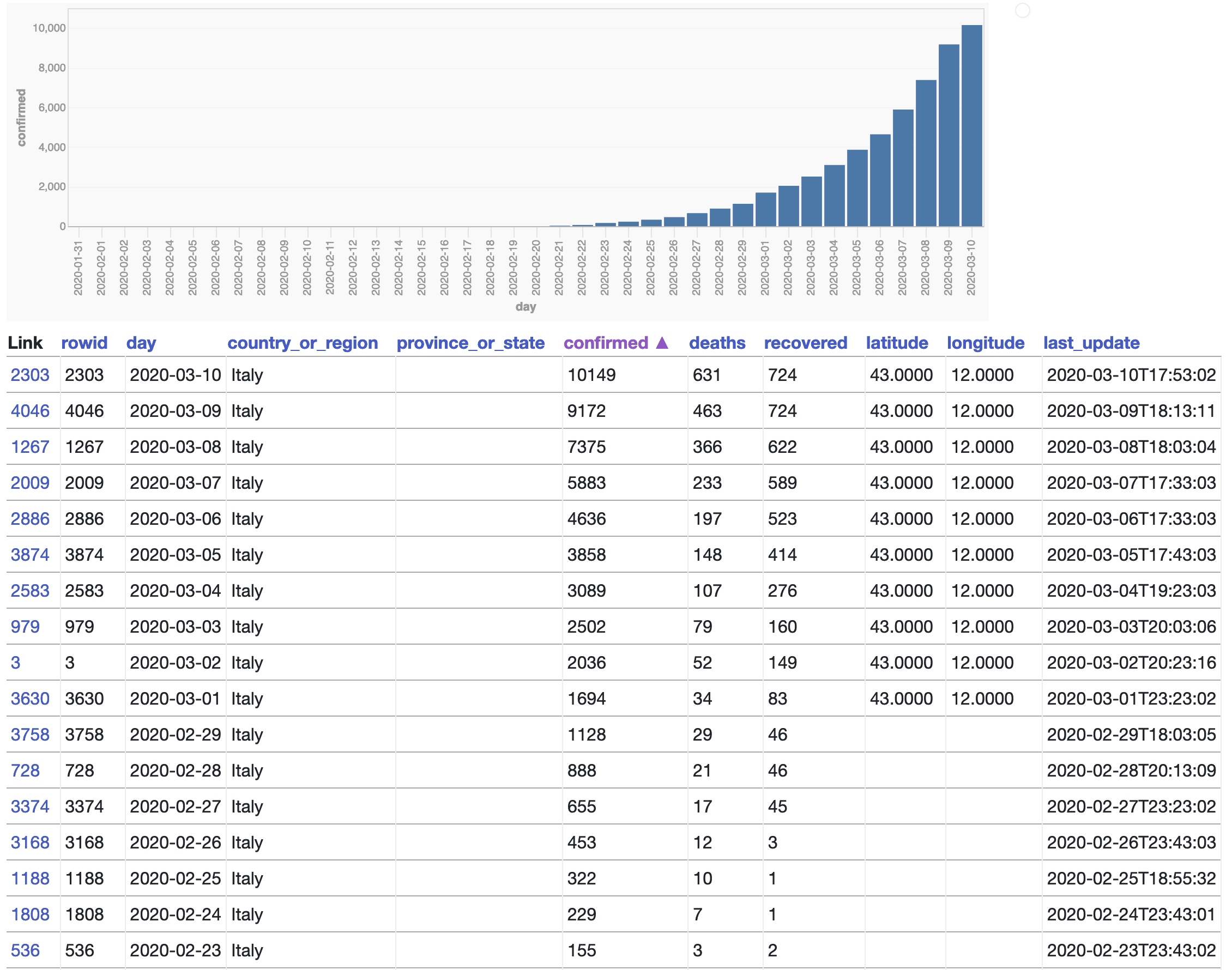

Weeknotes: COVID-19 numbers in Datasette

COVID-19, the disease caused by the novel coronavirus, gets more terrifying every day. Johns Hopkins Center for Systems Science and Engineering (CSSE) have been collating data about the spread of the disease and publishing it as CSV files on GitHub.

[... 644 words]

datasette-search-all: a new plugin for searching multiple Datasette tables at once

I just released a new plugin for Datasette, and it’s pretty fun. datasette-search-all is a plugin written mostly in JavaScript that executes the same search query against every searchable table in every database connected to your Datasette instance.
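
As a rough illustration of "the same search query against every searchable table", here is a minimal sketch in Python rather than the plugin's JavaScript, working directly against SQLite instead of going through Datasette. The function names `searchable_tables` and `search_all` are hypothetical, and treating every FTS virtual table as "searchable" is a simplifying assumption, not a description of how the plugin detects tables. It also assumes the local SQLite build includes FTS5.

```python
import sqlite3


def searchable_tables(conn):
    """Return the names of FTS virtual tables (a simplifying heuristic)."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND sql LIKE 'CREATE VIRTUAL TABLE%USING FTS%'"
    ).fetchall()
    return [row[0] for row in rows]


def search_all(conn, query):
    """Run one full-text query against every searchable table.

    Returns a dict mapping table name to its matching rows; tables
    without any match are left out. The query is passed through as
    FTS syntax, so special characters may need escaping.
    """
    results = {}
    for table in searchable_tables(conn):
        rows = conn.execute(
            f'SELECT rowid, * FROM "{table}" WHERE "{table}" MATCH ?',
            (query,),
        ).fetchall()
        if rows:
            results[table] = rows
    return results


# Demo data (made up for illustration)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE VIRTUAL TABLE museums USING fts5(name, description)")
conn.execute("CREATE VIRTUAL TABLE notes USING fts5(title, body)")
conn.executemany(
    "INSERT INTO museums VALUES (?, ?)",
    [
        ("Squirrel Museum", "Taxidermy exhibits"),
        ("Pencil Museum", "History of graphite"),
    ],
)
conn.executemany(
    "INSERT INTO notes VALUES (?, ?)",
    [
        ("Census", "Counting every squirrel in the park"),
        ("TIL", "GitHub Actions tips"),
    ],
)

hits = search_all(conn, "squirrel")
for table, rows in hits.items():
    print(table, rows)
```

The sketch runs the tables one after another; a browser-side implementation could instead issue the per-table searches independently and show results as each one returns.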

[... 819 words]

Weeknotes: datasette-ics, datasette-upload-csvs, datasette-configure-fts, asgi-csrf

I’ve been preparing for the NICAR 2020 Data Journalism conference this week, which has led me into a flurry of activity across a plethora of different projects and plugins.

[... 834 words]

Weeknotes: Datasette Writes

As discussed previously, the biggest hole in Datasette’s feature set at the moment involves writing to the database.

[... 604 words]

How to cheat at unit tests with pytest and Black

I’ve been making a lot of progress on Datasette Cloud this week. As an application that provides private hosted Datasette instances (initially targeted at data journalists and newsrooms) the majority of the code I’ve written deals with permissions: allowing people to form teams, invite team members, promote and demote team administrators and suchlike.

[... 933 words]

Weeknotes: Shaving yaks for Datasette Cloud

I’ve been shaving a lot of yaks, but I’m finally ready for other people to start kicking the tires on the MVP of Datasette Cloud.

[... 392 words]

Weeknotes: datasette-auth-existing-cookies and datasette-sentry

Work on Datasette Cloud continues—I’m tantalizingly close to having an MVP I can start to invite people to try out.

[... 701 words]

Weeknotes: Datasette Cloud and zero downtime deployments

Yesterday’s piece on Tracking FARA by deploying a data API using GitHub Actions and Cloud Run was originally intended to be my weeknotes, but ended up getting a bit too involved.

[... 1,431 words]

Tracking FARA by deploying a data API using GitHub Actions and Cloud Run

I’m using the combination of GitHub Actions and Google Cloud Run to retrieve data from the U.S. Department of Justice FARA website and deploy it as a queryable API using Datasette.

[... 1,599 words]

Building a sitemap.xml with a one-off Datasette plugin

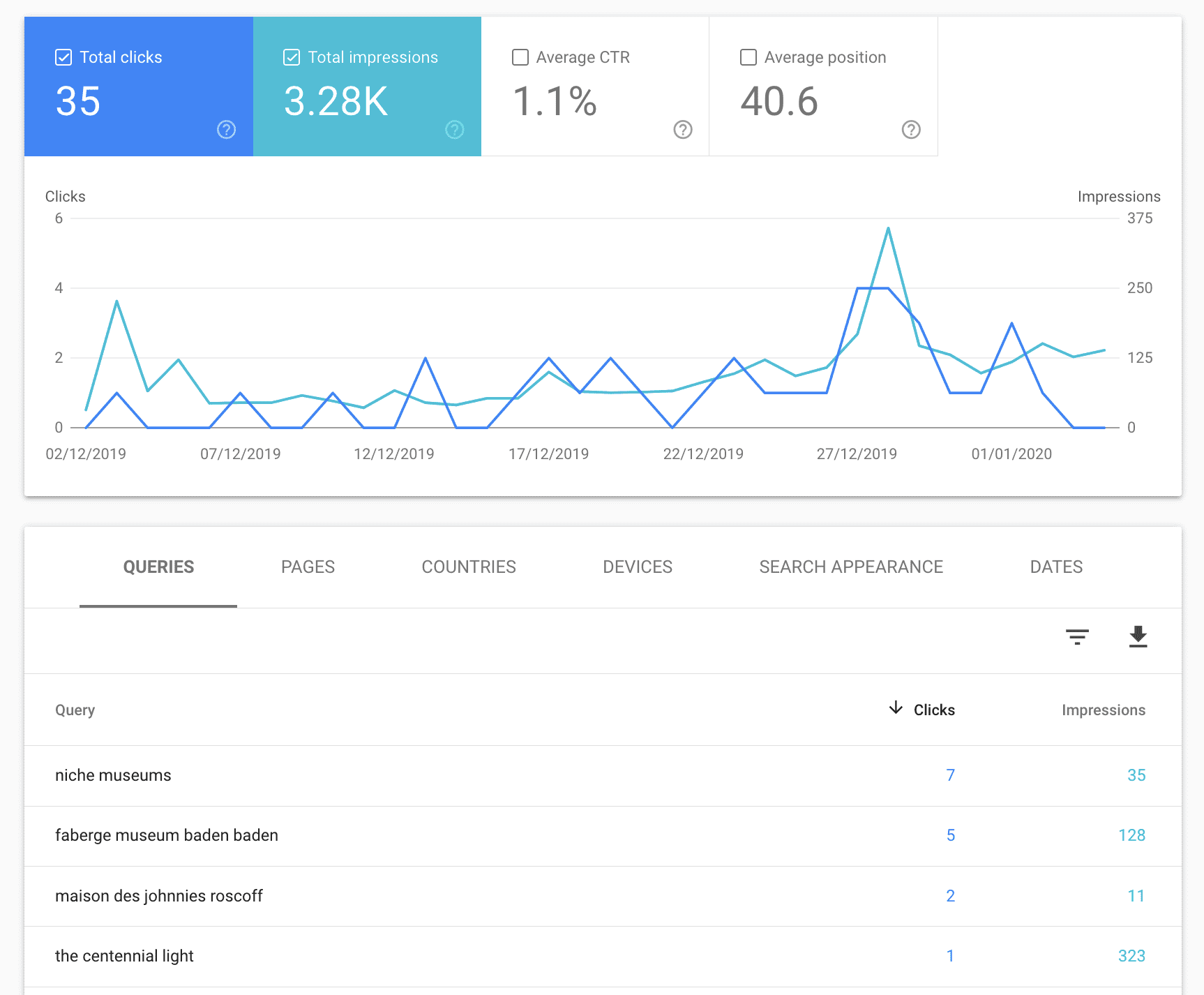

One of the fun things about launching a new website is re-learning what it takes to promote a website from scratch on the modern web. I’ve been thoroughly enjoying using Niche Museums as an excuse to explore 2020-era SEO.

[... 1,078 words]

2019

Weeknotes: Datasette 0.33

I released Datasette 0.33 yesterday. The release represents an accumulation of small changes and features since Datasette 0.32 back in November. Duplicating the release notes:

[... 678 words]

Building tools to bring data-driven reporting to more newsrooms. I wrote about my fellowship project so far and my goals for the next few months for the JSK Medium publication. My next priority: an invite-only hosted version for newsrooms so that figuring out how to install and manage the software isn’t the biggest barrier to entry.

Logging to SQLite using ASGI middleware

I had some fun playing around with ASGI middleware and logging during our flight back to England for the holidays.
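
For readers unfamiliar with the pattern, here is a minimal sketch of what ASGI middleware that logs to SQLite can look like, using only the standard library. It is not the code from the post: the class name `SQLiteLogMiddleware`, the `requests` table and its columns, and the toy `hello_app` are all hypothetical. It also writes to SQLite synchronously inside the request, which a real deployment would probably want to avoid.

```python
import asyncio
import sqlite3
import time


class SQLiteLogMiddleware:
    """Hypothetical ASGI middleware: records one row per HTTP request."""

    def __init__(self, app, db_path):
        self.app = app
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS requests "
            "(path TEXT, method TEXT, status INTEGER, duration_ms REAL)"
        )

    async def __call__(self, scope, receive, send):
        if scope["type"] != "http":
            # Pass websocket and lifespan events through untouched
            await self.app(scope, receive, send)
            return

        start = time.perf_counter()
        status = None

        async def wrapped_send(message):
            # Capture the status code as the response starts
            nonlocal status
            if message["type"] == "http.response.start":
                status = message["status"]
            await send(message)

        try:
            await self.app(scope, receive, wrapped_send)
        finally:
            duration_ms = (time.perf_counter() - start) * 1000
            # Blocking write, kept simple for the sketch
            with self.conn:
                self.conn.execute(
                    "INSERT INTO requests VALUES (?, ?, ?, ?)",
                    (scope["path"], scope["method"], status, duration_ms),
                )


async def hello_app(scope, receive, send):
    """Toy ASGI application used only for the demo."""
    await send(
        {
            "type": "http.response.start",
            "status": 200,
            "headers": [(b"content-type", b"text/plain")],
        }
    )
    await send({"type": "http.response.body", "body": b"hello"})


async def demo():
    app = SQLiteLogMiddleware(hello_app, ":memory:")

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        pass  # Discard the response; only the log row matters here

    await app({"type": "http", "method": "GET", "path": "/demo"}, receive, send)
    return app.conn.execute(
        "SELECT path, method, status FROM requests"
    ).fetchall()


rows = asyncio.run(demo())
print(rows)
```

Because the middleware only wraps the `send` callable, it should work in front of any ASGI application without that application knowing it is being logged.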

[... 2,535 words]

Better presentations through storytelling and STAR moments

Last week I completed GSBGEN 315: Strategic Communication at the Stanford Graduate School of Business.

[... 643 words]

datasette-atom: Define an Atom feed using a custom SQL query

I’ve been having a ton of fun iterating on www.niche-museums.com. I put together some notes on how the site works last week, and I’ve been taking advantage of the Thanksgiving break to continue exploring ways in which Datasette can be used to quickly build database-backed static websites.

[... 1,084 words]

niche-museums.com, powered by Datasette

I just released a major upgrade to my www.niche-museums.com website (launched last month).

[... 1,154 words]

Weeknotes: datasette-template-sql

Last week I talked about wanting to take on a larger Datasette project, and listed some candidates. I ended up pushing a big project that I hadn’t listed there: the upgrade of Datasette to Python 3.8, which meant dropping support for Python 3.5 (thanks to incompatible dependencies).

[... 521 words]

datasette-template-sql (via) New Datasette plugin, celebrating the new ability in Datasette 0.32 to have asynchronous custom template functions in Jinja (which was previously blocked by the need to support Python 3.5). The plugin adds a sql() function which can be used to execute SQL queries that are embedded directly in custom templates.

Datasette 0.31. Released today: this version adds compatibility with Python 3.8 and breaks compatibility with Python 3.5. Since Glitch support Python 3.7.3 now I decided I could finally give up on 3.5. This means Datasette can use f-strings now, but more importantly it opens up the opportunity to start taking advantage of Starlette, which makes all kinds of interesting new ASGI-based plugins much easier to build.

Weeknotes: Python 3.7 on Glitch, datasette-render-markdown

Streaks is really working well for me. I’m at 12 days of commits to Datasette, 16 posting a daily Niche Museum, 19 of actually reviewing my email inbox and 14 of guitar practice. I rewarded myself for that last one by purchasing an actual classical (as opposed to acoustic) guitar.

[... 1,141 words]

Setting up Datasette, step by step (via) Tobias describes how he runs Datasette on his own server/VPS, using nginx and systemd. I’m doing something similar for some projects and systemd really does feel like the solution to the “ensure a Python process keeps running” problem I’ve been fighting for over a decade. I really like how Tobias creates a dedicated Linux user for each of his deployed Python projects.