357 posts tagged “ai-assisted-programming”

Using AI tools such as Large Language Models to help write code. Vibe coding is the less responsible subset of this. See Here’s how I use LLMs to help me write code for a description of my process.

2025

TIL: Downloading archived Git repositories from archive.softwareheritage.org (via)

Back in February I blogged about a neat Python library called sqlite-s3vfs for accessing SQLite databases hosted in an S3 bucket, released as MIT licensed open source by the UK government's Department for Business and Trade.

I went looking for it today and found that the github.com/uktrade/sqlite-s3vfs repository is now a 404.

Since this is taxpayer-funded open source software I saw it as my moral duty to try and restore access! It turns out a full copy had been captured by the Software Heritage archive, so I was able to restore the repository from there. My copy is now archived at simonw/sqlite-s3vfs.

The process for retrieving an archive was non-obvious, so I've written up a TIL and also published a new Software Heritage Repository Retriever tool which takes advantage of the CORS-enabled APIs provided by Software Heritage. Here's the Claude Code transcript from building that.

In essence a language model changes you from a programmer who writes lines of code, to a programmer that manages the context the model has access to, prunes irrelevant things, adds useful material to context, and writes detailed specifications. If that doesn't sound fun to you, you won't enjoy it.

Think about it as if it is a junior developer that has read every textbook in the world but has 0 practical experience with your specific codebase, and is prone to forgetting anything but the most recent hour of things you've told it. What do you want to tell that intern to help them progress?

Eg you might put sticky notes on their desk to remind them of where your style guide lives, what the API documentation is for the APIs you use, some checklists of what is done and what is left to do, etc.

But the intern gets confused easily if it keeps accumulating sticky notes and there are now 100 sticky notes, so you have to periodically clear out irrelevant stickies and replace them with new stickies.

— Liz Fong-Jones, thread on Bluesky

A year ago, Claude struggled to generate bash commands without escaping issues. It worked for seconds or minutes at a time. We saw early signs that it may become broadly useful for coding one day.

Fast forward to today. In the last thirty days, I landed 259 PRs -- 497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5.

— Boris Cherny, creator of Claude Code

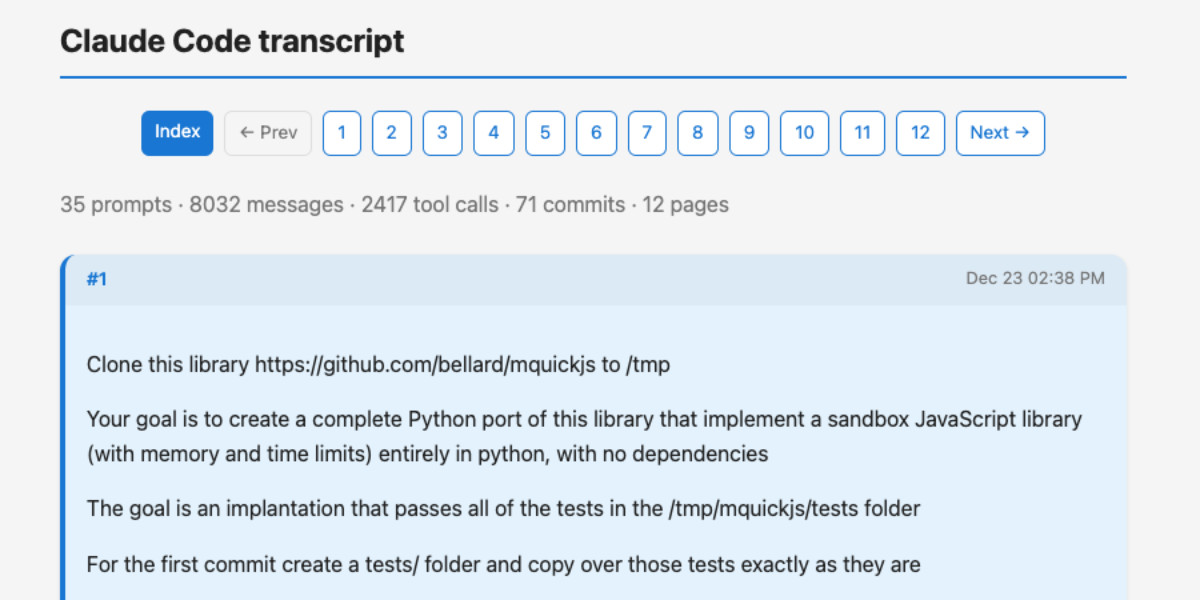

A new way to extract detailed transcripts from Claude Code

I’ve released claude-code-transcripts, a new Python CLI tool for converting Claude Code transcripts to detailed HTML pages that provide a better interface for understanding what Claude Code has done than even Claude Code itself. The resulting transcripts are also designed to be shared, using any static HTML hosting or even via GitHub Gists.

[... 1,082 words]

swift-justhtml. First there was Emil Stenström's JustHTML in Python, then my justjshtml in JavaScript, then Anil Madhavapeddy's html5rw in OCaml, and now Kyle Howells has built a vibespiled dependency-free HTML5 parser for Swift using the same coding agent tricks against the html5lib-tests test suite.

Kyle ran some benchmarks to compare the different implementations:

- Rust (html5ever) total parse time: 303 ms

- Swift total parse time: 1313 ms

- JavaScript total parse time: 1035 ms

- Python total parse time: 4189 ms
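To put those totals in proportion, here is a small sketch that expresses each one as a multiple of the Rust time. The numbers are copied from the list above; the function name and dictionary layout are just illustrative choices.

```python
# Total parse times in milliseconds, copied from the benchmark list above.
parse_times_ms = {
    "Rust (html5ever)": 303,
    "Swift": 1313,
    "JavaScript": 1035,
    "Python": 4189,
}


def slowdown_vs_baseline(times, baseline):
    """Return each implementation's time as a multiple of the baseline's time."""
    base = times[baseline]
    return {name: ms / base for name, ms in times.items()}


ratios = slowdown_vs_baseline(parse_times_ms, "Rust (html5ever)")

# Print the fastest implementation first.
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name}: {ratio:.1f}x")
```

On these figures JavaScript comes out at roughly 3.4x the Rust total, Swift at about 4.3x and Python at about 13.8x.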

Your job is to deliver code you have proven to work

In all of the debates about the value of AI-assistance in software development there’s one depressing anecdote that I keep on seeing: the junior engineer, empowered by some class of LLM tool, who deposits giant, untested PRs on their coworkers—or open source maintainers—and expects the “code review” process to handle the rest.

[... 840 words]

AoAH Day 15: Porting a complete HTML5 parser and browser test suite (via) Anil Madhavapeddy is running an Advent of Agentic Humps this year, building a new useful OCaml library every day for most of December.

Inspired by Emil Stenström's JustHTML and my own coding agent port of that to JavaScript, he coined the term vibespiling for AI-powered porting and transpiling of code from one language to another and had a go at building an HTML5 parser in OCaml. The result is html5rw, which passes the same html5lib-tests suite that Emil and I used for our projects.

Anil's thoughts on the copyright and ethical aspects of this are worth quoting in full:

The question of copyright and licensing is difficult. I definitely did some editing by hand, and a fair bit of prompting that resulted in targeted code edits, but the vast amount of architectural logic came from JustHTML. So I opted to make the LICENSE a joint one with Emil Stenström. I did not follow the transitive dependency through to the Rust one, which I probably should.

I'm also extremely uncertain about every releasing this library to the central opam repository, especially as there are excellent HTML5 parsers already available. I haven't checked if those pass the HTML5 test suite, because this is wandering into the agents vs humans territory that I ruled out in my groundrules. Whether or not this agentic code is better or not is a moot point if releasing it drives away the human maintainers who are the source of creativity in the code!

I decided to credit Emil in the same way for my own vibespiled project.

I’ve been watching junior developers use AI coding assistants well. Not vibe coding—not accepting whatever the AI spits out. Augmented coding: using AI to accelerate learning while maintaining quality. [...]

The juniors working this way compress their ramp dramatically. Tasks that used to take days take hours. Not because the AI does the work, but because the AI collapses the search space. Instead of spending three hours figuring out which API to use, they spend twenty minutes evaluating options the AI surfaced. The time freed this way isn’t invested in another unprofitable feature, though, it’s invested in learning. [...]

If you’re an engineering manager thinking about hiring: The junior bet has gotten better. Not because juniors have changed, but because the genie, used well, accelerates learning.

— Kent Beck, The Bet On Juniors Just Got Better

I ported JustHTML from Python to JavaScript with Codex CLI and GPT-5.2 in 4.5 hours

I wrote about JustHTML yesterday—Emil Stenström’s project to build a new standards compliant HTML5 parser in pure Python code using coding agents running against the comprehensive html5lib-tests testing library. Last night, purely out of curiosity, I decided to try porting JustHTML from Python to JavaScript with the least amount of effort possible, using Codex CLI and GPT-5.2. It worked beyond my expectations.

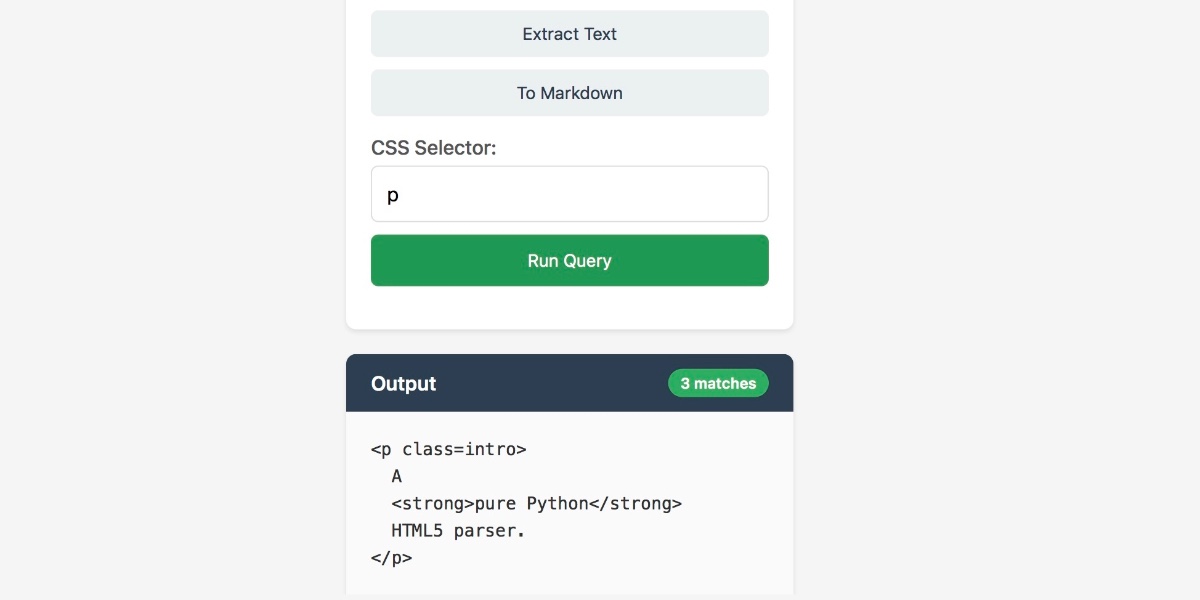

[... 1,818 words]

JustHTML is a fascinating example of vibe engineering in action

I recently came across JustHTML, a new Python library for parsing HTML released by Emil Stenström. It’s a very interesting piece of software, both as a useful library and as a case study in sophisticated AI-assisted programming.

[... 956 words]

If the part of programming you enjoy most is the physical act of writing code, then agents will feel beside the point. You’re already where you want to be, even just with some Copilot or Cursor-style intelligent code auto completion, which makes you faster while still leaving you fully in the driver’s seat about the code that gets written.

But if the part you care about is the decision-making around the code, agents feel like they clear space. They take care of the mechanical expression and leave you with judgment, tradeoffs, and intent. Because truly, for someone at my experience level, that is my core value offering anyway. When I spend time actually typing code these days with my own fingers, it feels like a waste of my time.

— Obie Fernandez, What happens when the coding becomes the least interesting part of the work

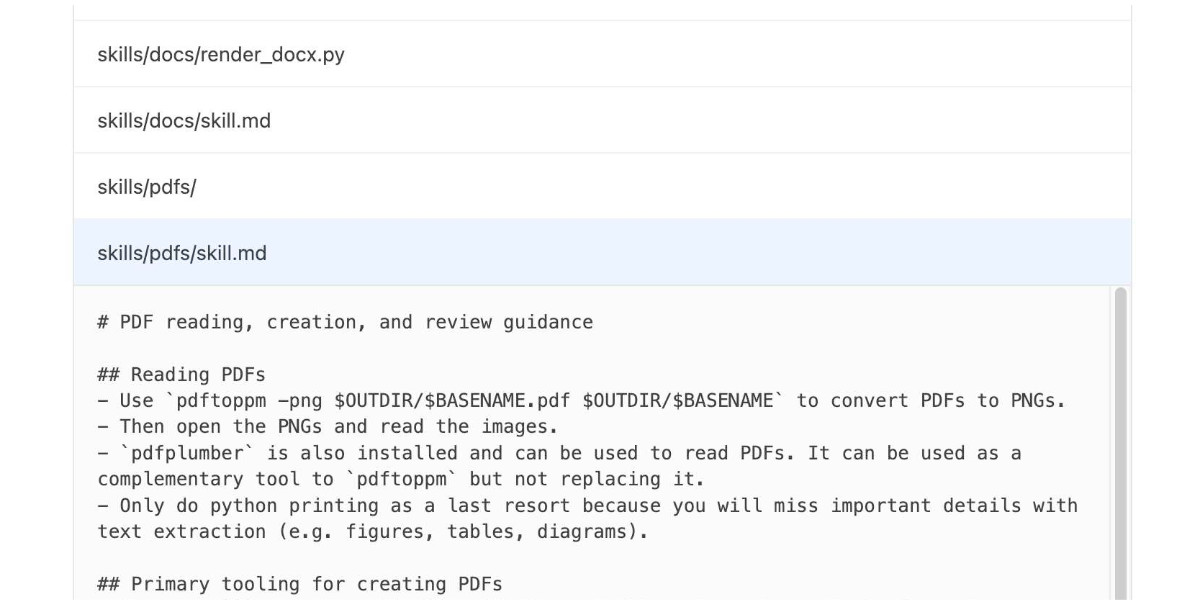

OpenAI are quietly adopting skills, now available in ChatGPT and Codex CLI

One of the things that most excited me about Anthropic’s new Skills mechanism back in October is how easy it looked for other platforms to implement. A skill is just a folder with a Markdown file and some optional extra resources and scripts, so any LLM tool with the ability to navigate and read from a filesystem should be capable of using them. It turns out OpenAI are doing exactly that, with skills support quietly showing up in both their Codex CLI tool and now also in ChatGPT itself.
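To make the "just a folder with a Markdown file" point concrete, here is a minimal sketch of how a tool might discover skills on disk. It assumes each skill folder holds a file named SKILL.md whose frontmatter carries `name` and `description` fields, as in Anthropic's Skills format; the function names and the simplified line-based frontmatter parsing are illustrative assumptions, and this is not how any particular product loads skills.

```python
from pathlib import Path


def read_frontmatter(markdown_text):
    """Parse simple `key: value` lines between leading `---` markers.

    This is a simplification, not a full YAML parser.
    """
    lines = markdown_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    metadata = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            metadata[key.strip()] = value.strip()
    return metadata


def discover_skills(skills_dir):
    """Return {skill name: description} for every subfolder containing SKILL.md."""
    skills = {}
    for skill_file in sorted(Path(skills_dir).glob("*/SKILL.md")):
        meta = read_frontmatter(skill_file.read_text(encoding="utf-8"))
        # Fall back to the folder name if the frontmatter has no name field.
        name = meta.get("name", skill_file.parent.name)
        skills[name] = meta.get("description", "")
    return skills
```

A tool could place the resulting names and descriptions in its prompt and read the full SKILL.md only when a skill looks relevant to the task.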

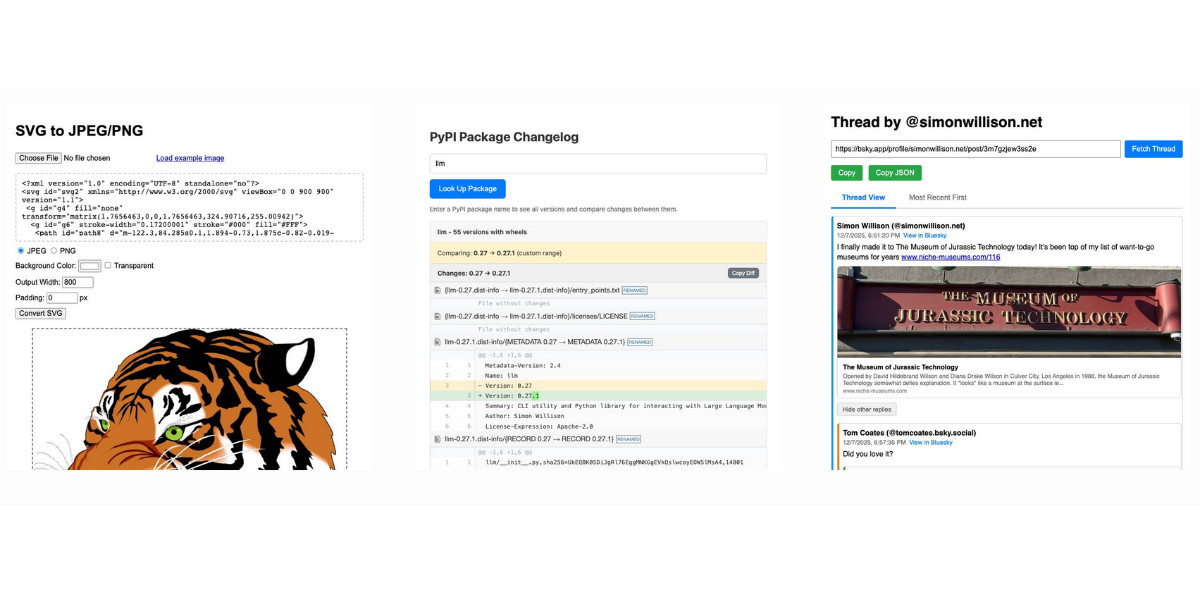

[... 1,360 words]

Useful patterns for building HTML tools

I’ve started using the term HTML tools to refer to HTML applications that I’ve been building which combine HTML, JavaScript, and CSS in a single file and use them to provide useful functionality. I have built over 150 of these in the past two years, almost all of them written by LLMs. This article presents a collection of useful patterns I’ve discovered along the way.

[... 4,231 words]

I've never been particularly invested in dark vs. light mode but I get enough people complaining that this site is "blinding" that I decided to see if Claude Code for web could produce a useful dark mode from my existing CSS. It did a decent job, using CSS properties, @media (prefers-color-scheme: dark) and a data-theme="dark" attribute based on this prompt:

Add a dark theme which is triggered by user media preferences but can also be switched on using localStorage - then put a little icon in the footer for toggling it between default auto, forced regular and forced dark mode

The site defaults to picking up the user's preferences, but there's also a toggle in the footer which switches between auto, forced-light and forced-dark. Here's an animated demo:

I had Claude Code make me that GIF from two static screenshots - it used this ImageMagick recipe:

magick -delay 300 -loop 0 one.png two.png \

-colors 128 -layers Optimize dark-mode.gif

The CSS ended up with some duplication due to the need to handle both the media preference and the explicit user selection. We fixed that with Cog.

mistralai/mistral-vibe. Here's the Apache 2.0 licensed source code for Mistral's new "Vibe" CLI coding agent, released today alongside Devstral 2.

It's a neat implementation of the now standard terminal coding agent pattern, built in Python on top of Pydantic and Rich/Textual (here are the dependencies.) Gemini CLI is TypeScript, Claude Code is closed source (TypeScript, now on top of Bun), OpenAI's Codex CLI is Rust. OpenHands is the other major Python coding agent I know of, but I'm likely missing some others. (UPDATE: Kimi CLI is another open source Apache 2 Python one.)

The Vibe source code is pleasant to read and the crucial prompts are neatly extracted out into Markdown files. Some key places to look:

- core/prompts/cli.md is the main system prompt ("You are operating as and within Mistral Vibe, a CLI coding-agent built by Mistral AI...")

- core/prompts/compact.md is the prompt used to generate compacted summaries of conversations ("Create a comprehensive summary of our entire conversation that will serve as complete context for continuing this work...")

- Each of the core tools has its own prompt file:

The Python implementations of those tools can be found here.

I tried it out and had it build me a Space Invaders game using three.js with the following prompt:

make me a space invaders game as HTML with three.js loaded from a CDN

Here's the source code and the live game (hosted in my new space-invaders-by-llms repo). It did OK.

Prediction: AI will make formal verification go mainstream (via) Martin Kleppmann makes the case for formal verification languages (things like Dafny, Nagini, and Verus) to finally start achieving more mainstream usage. Code generated by LLMs can benefit enormously from more robust verification, and LLMs themselves make these notoriously difficult systems easier to work with.

The paper Can LLMs Enable Verification in Mainstream Programming? by JetBrains Research in March 2025 found that Claude 3.5 Sonnet saw promising results for the three languages I listed above.

What to try first?

Run Claude Code in a repo (whether you know it well or not) and ask a question about how something works. You'll see how it looks through the files to find the answer.

The next thing to try is a code change where you know exactly what you want but it's tedious to type. Describe it in detail and let Claude figure it out. If there is similar code that it should follow, tell it so. From there, you can build intuition about more complex changes that it might be good at. [...]

As conversation length grows, each message gets more expensive while Claude gets dumber. That's a bad trade! [...] Run /reset (or just quit and restart) to start over from scratch. Tell Claude to summarize the conversation so far to give you something to paste into the next chat if you want to save some of the context.

— David Crespo, Oxide's internal tips on LLM use

The Unexpected Effectiveness of One-Shot Decompilation with Claude (via) Chris Lewis decompiles N64 games. He wrote about this previously in Using Coding Agents to Decompile Nintendo 64 Games, describing his efforts to decompile Snowboard Kids 2 (released in 1999) using a "matching" process:

The matching decompilation process involves analysing the MIPS assembly, inferring its behaviour, and writing C that, when compiled with the same toolchain and settings, reproduces the exact code: same registers, delay slots, and instruction order. [...]

A good match is more than just C code that compiles to the right bytes. It should look like something an N64-era developer would plausibly have written: simple, idiomatic C control flow and sensible data structures.

Chris was getting some useful results from coding agents earlier on, but this new post describes how switching to a new process using Claude Opus 4.5 and Claude Code has massively accelerated the project - as demonstrated by this chart on the decomp.dev page for his project:

Here's the prompt he was using.

The big productivity boost was unlocked by switching to use Claude Code in non-interactive mode and having it tackle the less complicated functions (aka the lowest hanging fruit) first. Here's the relevant code from the driving Bash script:

simplest_func=$(python3 tools/score_functions.py asm/nonmatchings/ 2>&1)
# ...
output=$(claude -p "decompile the function $simplest_func" 2>&1 | tee -a tools/vacuum.log)

score_functions.py uses some heuristics to decide which of the remaining un-matched functions look to be the least complex.
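The post does not reproduce the heuristics themselves, so the following is only a guess at the general shape of such a scorer, not Chris's actual script. It assumes plain assembly text with `#` comments, and both the list of branch mnemonics and the weight of 5 per branch are made-up illustrative values.

```python
# Hypothetical set of MIPS branch and jump mnemonics counted as control flow.
BRANCH_MNEMONICS = {
    "beq", "bne", "beqz", "bnez", "bgez", "bltz", "blez", "bgtz",
    "j", "jal", "jr", "jalr",
}


def instruction_mnemonics(asm_text):
    """Yield the mnemonic of each instruction, skipping labels, directives and comments."""
    for line in asm_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.endswith(":") or line.startswith("."):
            continue
        yield line.split()[0].lower()


def complexity_score(asm_text):
    """Lower is simpler: instruction count plus an arbitrary penalty per branch."""
    mnemonics = list(instruction_mnemonics(asm_text))
    branches = sum(1 for m in mnemonics if m in BRANCH_MNEMONICS)
    return len(mnemonics) + 5 * branches


def simplest_function(functions):
    """Given {function name: assembly text}, return the lowest-scoring name."""
    return min(functions, key=lambda name: complexity_score(functions[name]))
```

Picking the minimum each time means the agent always works on the function most likely to match quickly, which is the "lowest hanging fruit first" ordering described above.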

TIL: Subtests in pytest 9.0.0+. I spotted an interesting new feature in the release notes for pytest 9.0.0: subtests.

I'm a big user of the pytest.mark.parametrize decorator - see Documentation unit tests from 2018 - so I thought it would be interesting to try out subtests and see if they're a useful alternative.

Short version: this parameterized test:

@pytest.mark.parametrize("setting", app.SETTINGS)
def test_settings_are_documented(settings_headings, setting):
    assert setting.name in settings_headings

Becomes this using subtests instead:

def test_settings_are_documented(settings_headings, subtests):
    for setting in app.SETTINGS:
        with subtests.test(setting=setting.name):
            assert setting.name in settings_headings

Why is this better? Two reasons:

- It appears to run a bit faster

- Subtests can be created programmatically after running some setup code first

I had Claude Code port several tests to the new pattern. I like it.

Django 6.0 released. Django 6.0 includes a flurry of neat features, but the two that most caught my eye are background workers and template partials.

Background workers started out as DEP (Django Enhancement Proposal) 14, proposed and shepherded by Jake Howard. Jake prototyped the feature in django-tasks and wrote this extensive background on the feature when it landed in core just in time for the 6.0 feature freeze back in September.

Kevin Wetzels published a useful first look at Django's background tasks based on the earlier RC, including notes on building a custom database-backed worker implementation.

Template Partials were implemented as a Google Summer of Code project by Farhan Ali Raza. I really like the design of this. Here's an example from the documentation showing the neat inline attribute which lets you both use and define a partial at the same time:

{# Define and render immediately. #}

{% partialdef user-info inline %}

<div id="user-info-{{ user.username }}">

<h3>{{ user.name }}</h3>

<p>{{ user.bio }}</p>

</div>

{% endpartialdef %}

{# Other page content here. #}

{# Reuse later elsewhere in the template. #}

<section class="featured-authors">

<h2>Featured Authors</h2>

{% for user in featured %}

{% partial user-info %}

{% endfor %}

</section>

You can also render just a named partial from a template directly in Python code like this:

return render(request, "authors.html#user-info", {"user": user})

I'm looking forward to trying this out in combination with HTMX.

I asked Claude Code to dig around in my blog's source code looking for places that could benefit from a template partial. Here's the resulting commit that uses them to de-duplicate the display of dates and tags from pages that list multiple types of content, such as my tag pages.

TIL: Dependency groups and uv run.

I wrote up the new pattern I'm using for my various Python project repos to make them as easy to hack on with uv as possible. The trick is to use a PEP 735 dependency group called dev, declared in pyproject.toml like this:

[dependency-groups]

dev = ["pytest"]

With that in place, running uv run pytest will automatically install that development dependency into a new virtual environment and use it to run your tests.

This means you can get started hacking on one of my projects (here datasette-extract) with just these steps:

git clone https://github.com/datasette/datasette-extract

cd datasette-extract

uv run pytest

I also split my uv TILs out into a separate folder. This meant I had to set up redirects for the old paths, so I had Claude Code help build me a new plugin called datasette-redirects and then apply it to my TIL site, including updating the build script to correctly track the creation date of files that had since been renamed.

sqlite-utils 4.0a1 has several (minor) backwards incompatible changes

I released a new alpha version of sqlite-utils last night—the 128th release of that package since I started building it back in 2018.

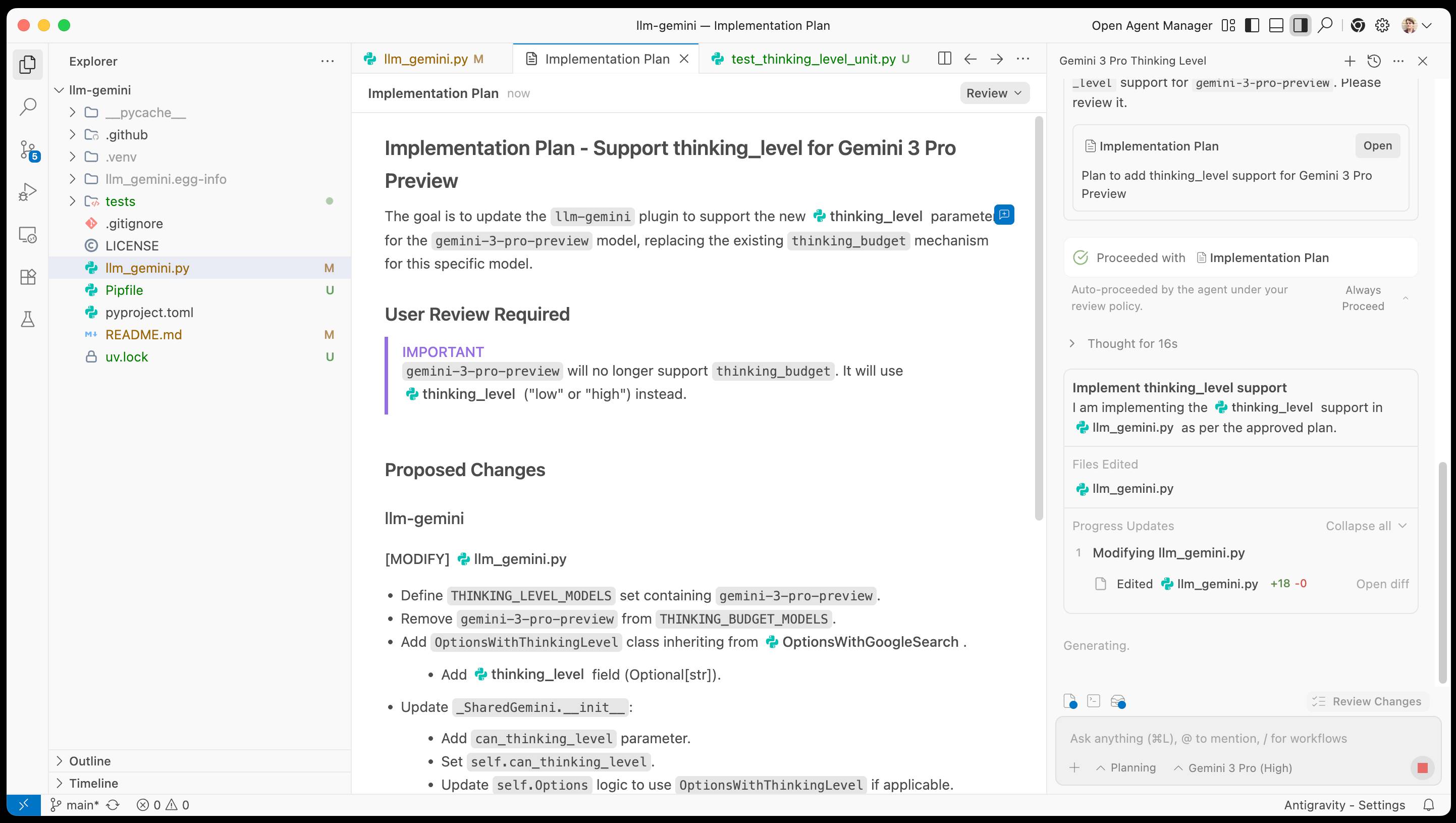

[... 1,049 words]

Google Antigravity. Google's other major release today to accompany Gemini 3 Pro. At first glance Antigravity is yet another VS Code fork Cursor clone - it's a desktop application you install that then signs in to your Google account and provides an IDE for agentic coding against their Gemini models.

When you look closer it's actually a fair bit more interesting than that.

The best introduction right now is the official 14 minute Learn the basics of Google Antigravity video on YouTube, where product engineer Kevin Hou (who previously worked at Windsurf) walks through the process of building an app.

There are some interesting new ideas in Antigravity. The application itself has three "surfaces" - an agent manager dashboard, a traditional VS Code style editor and deep integration with a browser via a new Chrome extension. This plays a similar role to Playwright MCP, allowing the agent to directly test the web applications it is building.

Antigravity also introduces the concept of "artifacts" (confusingly not at all similar to Claude Artifacts). These are Markdown documents that are automatically created as the agent works, for things like task lists, implementation plans and a "walkthrough" report showing what the agent has done once it finishes.

I tried using Antigravity to help add support for Gemini 3 to my llm-gemini plugin.

It worked OK at first then gave me an "Agent execution terminated due to model provider overload. Please try again later" error. I'm going to give it another go after they've had a chance to work through those initial launch jitters.

The fate of “small” open source. Nolan Lawson asks if LLM assistance means that the category of tiny open source libraries like his own blob-util is destined to fade away.

Why take on additional supply chain risks adding another dependency when an LLM can likely kick out the subset of functionality needed by your own code to-order?

I still believe in open source, and I’m still doing it (in fits and starts). But one thing has become clear to me: the era of small, low-value libraries like blob-util is over. They were already on their way out thanks to Node.js and the browser taking on more and more of their functionality (see node:glob, structuredClone, etc.), but LLMs are the final nail in the coffin.

I've been thinking about a similar issue myself recently as well.

Quite a few of my own open source projects exist to solve problems that are frustratingly hard to figure out. s3-credentials is a great example of this: it solves the problem of creating read-only or read-write credentials for an S3 bucket - something that I've always found infuriatingly difficult since you need to know to craft an IAM policy that looks something like this:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::my-s3-bucket"

]

},

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:GetObjectAcl",

"s3:GetObjectLegalHold",

"s3:GetObjectRetention",

"s3:GetObjectTagging"

],

"Resource": [

"arn:aws:s3:::my-s3-bucket/*"

]

}

]

}

Modern LLMs are very good at S3 IAM policies, to the point that if I needed to solve this problem today I doubt I would find it frustrating enough to justify finding or creating a reusable library to help.
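As an illustration of how little code the "subset of functionality" can be, here is a sketch that generates the read-only policy shown above for any bucket name. The function name is an assumption for this example and this is not how s3-credentials itself is implemented.

```python
import json


def read_only_policy(bucket):
    """Build the read-only IAM policy document shown above for one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object-level actions apply to every key inside the bucket.
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:GetObjectAcl",
                    "s3:GetObjectLegalHold",
                    "s3:GetObjectRetention",
                    "s3:GetObjectTagging",
                ],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


print(json.dumps(read_only_policy("my-s3-bucket"), indent=2))
```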

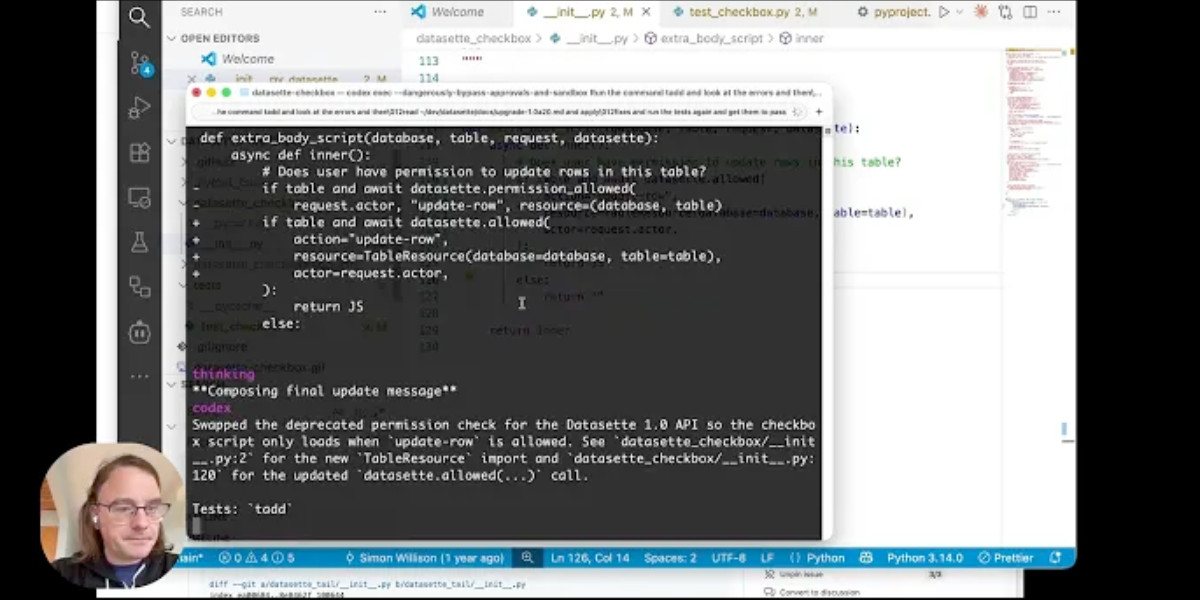

I've been upgrading a ton of Datasette plugins recently for compatibility with the Datasette 1.0a20 release from last week - 35 so far.

A lot of the work is very repetitive so I've been outsourcing it to Codex CLI. Here's the recipe I've landed on:

codex exec --dangerously-bypass-approvals-and-sandbox \

'Run the command tadd and look at the errors and then

read ~/dev/datasette/docs/upgrade-1.0a20.md and apply

fixes and run the tests again and get them to pass.

Also delete the .github directory entirely and replace

it by running this:

cp -r ~/dev/ecosystem/datasette-os-info/.github .

Run a git diff against that to make sure it looks OK

- if there are any notable differences e.g. switching

from Twine to the PyPI uploader or deleting code that

does a special deploy or configures something like

playwright include that in your final report.

If the project still uses setup.py then edit that new

test.yml and publish.yaml to mention setup.py not pyproject.toml

If this project has pyproject.toml make sure the license

line in that looks like this:

license = "Apache-2.0"

And remove any license thing from the classifiers= array

Update the Datasette dependency in pyproject.toml or

setup.py to "datasette>=1.0a21"

And make sure requires-python is >=3.10'

I featured a simpler version of this prompt in my Datasette plugin upgrade video, but I've expanded it quite a bit since then.

At one point I had six terminal windows open running this same prompt against six different repos - probably my most extreme case of parallel agents yet.
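The six terminal windows were managed by hand, but the same fan-out could be scripted. The sketch below is one hypothetical way to do it: it reuses the `codex exec --dangerously-bypass-approvals-and-sandbox` invocation from the recipe above, while the helper names, the thread pool and the injectable `runner` parameter are assumptions added for illustration.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def build_command(prompt):
    """Assemble the Codex CLI invocation used in the recipe above."""
    return ["codex", "exec", "--dangerously-bypass-approvals-and-sandbox", prompt]


def run_in_repos(repo_paths, prompt, runner=subprocess.run):
    """Run the same prompt in every repo concurrently; return {repo: exit code}."""
    command = build_command(prompt)

    def run_one(repo):
        # Each agent session runs with its own repository as the working directory.
        result = runner(command, cwd=repo)
        return repo, result.returncode

    # One worker per repo, mirroring one terminal window per repo.
    with ThreadPoolExecutor(max_workers=len(repo_paths) or 1) as pool:
        return dict(pool.map(run_one, repo_paths))
```

Calling `run_in_repos(["repo-a", "repo-b"], prompt)` with real checkout paths would start one agent per repository. The flag disables approvals and sandboxing, so this only makes sense against repos you are happy to let an agent modify freely.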

Here are the six resulting commits from those six coding agent sessions:

Reverse engineering Codex CLI to get GPT-5-Codex-Mini to draw me a pelican

OpenAI partially released a new model yesterday called GPT-5-Codex-Mini, which they describe as "a more compact and cost-efficient version of GPT-5-Codex". It’s currently only available via their Codex CLI tool and VS Code extension, with proper API access "coming soon". I decided to use Codex to reverse engineer the Codex CLI tool and give me the ability to prompt the new model directly.

[... 1,774 words]

I have AiDHD

It has never been easier to build an MVP and in turn, it has never been harder to keep focus. When new features always feel like they're just a prompt away, feature creep feels like a never ending battle. Being disciplined is more important than ever.

AI still doesn't change one very important thing: you still need to make something people want. I think that getting users (even free ones) will become significantly harder as the bar for user's time will only get higher as their options increase.

Being quicker to get to the point of failure is actually incredibly valuable. Even just over a year ago, many of these projects would have taken months to build.

— Josh Cohenzadeh, AiDHD

My hunch is that existing LLMs make it easier to build a new programming language in a way that captures new developers.

Most programming languages are similar enough to existing languages that you only need to know a small number of details to use them: what's the core syntax for variables, loops, conditionals and functions? How does memory management work? What's the concurrency model?

For many languages you can fit all of that, including illustrative examples, in a few thousand tokens of text.

So ship your new programming language with a Claude Skills style document and give your early adopters the ability to write it with LLMs. The LLMs should handle that very well, especially if they get to run an agentic loop against a compiler or even a linter that you provide.
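Here is a minimal sketch of what that agentic loop could look like. Python's built-in `compile()` stands in for the new language's compiler, and `generate` is a hypothetical callable wrapping whichever LLM is in use; it receives the task, the language guide and the previous compiler error, if any.

```python
def check_source(source):
    """Stand-in compiler: return None on success, otherwise an error message."""
    try:
        compile(source, "<generated>", "exec")
    except SyntaxError as error:
        return f"line {error.lineno}: {error.msg}"
    return None


def agentic_loop(generate, task, language_guide, max_attempts=5):
    """Ask `generate` for code until the checker accepts it or attempts run out.

    Returns the accepted source and the number of attempts it took.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        # The model sees the guide every time, plus the last compiler error.
        source = generate(task, language_guide, feedback)
        feedback = check_source(source)
        if feedback is None:
            return source, attempt
    raise RuntimeError(f"no valid program after {max_attempts} attempts: {feedback}")
```

The important design point is that the compiler's error message goes straight back into the next request, so the model corrects itself against ground truth rather than against its own guess about the language.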

This post started as a comment.

Video + notes on upgrading a Datasette plugin for the latest 1.0 alpha, with help from uv and OpenAI Codex CLI

I’m upgrading various plugins for compatibility with the new Datasette 1.0a20 alpha release and I decided to record a video of the process. This post accompanies that video with detailed additional notes.

[... 1,094 words]

Code research projects with async coding agents like Claude Code and Codex

I’ve been experimenting with a pattern for LLM usage recently that’s working out really well: asynchronous code research tasks. Pick a research question, spin up an asynchronous coding agent and let it go and run some experiments and report back when it’s done.

[... 2,017 words]