Posts tagged ai, llms in 2023

Filters: Year: 2023 × ai × llms × Sorted by date

YouTube: Intro to Large Language Models. Andrej Karpathy is an outstanding educator, and this one hour video offers an excellent technical introduction to LLMs.

At 42m Andrej expands on his idea of LLMs as the center of a new style of operating system, tying together tools and and a filesystem and multimodal I/O.

There’s a comprehensive section on LLM security—jailbreaking, prompt injection, data poisoning—at the 45m mark.

I also appreciated his note on how parameter size maps to file size: Llama 70B is 140GB, because each of those 70 billion parameters is a 2 byte 16bit floating point number on disk.

Claude: How to use system prompts. Documentation for the new system prompt support added in Claude 2.1. The design surprises me a little: the system prompt is just the text that comes before the first instance of the text “Human: ...”—but Anthropic promise that instructions in that section of the prompt will be treated differently and followed more closely than any instructions that follow.

This whole page of documentation is giving me some pretty serious prompt injection red flags to be honest. Anthropic’s recommended way of using their models is entirely based around concatenating together strings of text using special delimiter phrases.

I’ll give it points for honesty though. OpenAI use JSON to field different parts of the prompt, but under the hood they’re all concatenated together with special tokens into a single token stream.

Introducing Claude 2.1. Anthropic’s Claude used to have the longest token context of any of the major models: 100,000 tokens, which is about 300 pages. Then GPT-4 Turbo came out with 128,000 tokens and Claude lost one of its key differentiators.

Claude is back! Version 2.1, announced today, bumps the token limit up to 200,000—and also adds support for OpenAI-style system prompts, a feature I’ve been really missing.

They also announced tool use, but that’s only available for a very limited set of partners to preview at the moment.

The EU AI Act now proposes to regulate “foundational models”, i.e. the engine behind some AI applications. We cannot regulate an engine devoid of usage. We don’t regulate the C language because one can use it to develop malware. Instead, we ban malware and strengthen network systems (we regulate usage). Foundational language models provide a higher level of abstraction than the C language for programming computer systems; nothing in their behaviour justifies a change in the regulatory framework.

— Arthur Mensch, Mistral AI

Fleet Context. This project took the source code and documentation for 1221 popular Python libraries and ran them through the OpenAI text-embedding-ada-002 embedding model, then made those pre-calculated embedding vectors available as Parquet files for download from S3 or via a custom Python CLI tool.

I haven’t seen many projects release pre-calculated embeddings like this, it’s an interesting initiative.

Exploring GPTs: ChatGPT in a trench coat?

The biggest announcement from last week’s OpenAI DevDay (and there were a LOT of announcements) was GPTs. Users of ChatGPT Plus can now create their own, custom GPT chat bots that other Plus subscribers can then talk to.

[... 5,699 words][On Meta's Galactica LLM launch] We did this with a 8 person team which is an order of magnitude fewer people than other LLM teams at the time.

We were overstretched and lost situational awareness at launch by releasing demo of a base model without checks. We were aware of what potential criticisms would be, but we lost sight of the obvious in the workload we were under.

One of the considerations for a demo was we wanted to understand the distribution of scientific queries that people would use for LLMs (useful for instruction tuning and RLHF). Obviously this was a free goal we gave to journalists who instead queried it outside its domain. But yes we should have known better.

We had a “good faith” assumption that we’d share the base model, warts and all, with four disclaimers about hallucinations on the demo - so people could see what it could do (openness). Again, obviously this didn’t work.

A Coder Considers the Waning Days of the Craft (via) James Somers in the New Yorker, talking about the impact of GPT-4 on programming as a profession. Despite the headline this piece is a nuanced take on this subject, which I found myself mostly agreeing with.

I particularly liked this bit, which reflects my most optimistic viewpoint: I think AI assisted programming is going to shave a lot of the frustration off learning to code, which I hope brings many more people into the fold:

What I learned was that programming is not really about knowledge or skill but simply about patience, or maybe obsession. Programmers are people who can endure an endless parade of tedious obstacles.

Two things in AI may need regulation: reckless deployment of certain potentially harmful AI applications (same as any software really), and monopolistic behavior on the part of certain LLM providers. The technology itself doesn't need regulation anymore than databases or transistors. [...] Putting size/compute caps on deep learning models is akin to putting size caps on databases or transistor count caps on electronics. It's pointless and it won't age well.

ChatGPT: Dejargonizer. I built a custom GPT. Paste in some text with unknown jargon or acronyms and it will try to guess the context and give you back an explanation of each term.

AGI is Being Achieved Incrementally (OpenAI DevDay w/ Simon Willison, Alex Volkov, Jim Fan, Raza Habib, Shreya Rajpal, Rahul Ligma, et al). I participated in an an hour long conversation today about the new things released at OpenAI DevDay, now available on the Latent Space podcast.

Fine-tuning GPT3.5-turbo based on 140k slack messages. Ross Lazerowitz spent $83.20 creating a fine-tuned GPT-3.5 turbo model based on 140,000 of his Slack messages (10,399,747 tokens), massaged into a JSONL file suitable for use with the OpenAI fine-tuning API.

Then he told the new model “write a 500 word blog post on prompt engineering”, and it replied “Sure, I shall work on that in the morning”.

ospeak: a CLI tool for speaking text in the terminal via OpenAI

I attended OpenAI DevDay today, the first OpenAI developer conference. It was a lot. They released a bewildering array of new API tools, which I’m just beginning to wade my way through fully understanding.

[... 1,109 words]YouTube: OpenAssistant is Completed—by Yannic Kilcher (via) The OpenAssistant project was an attempt to crowdsource the creation of an alternative to ChatGPT, using human volunteers to build a Reinforcement Learning from Human Feedback (RLHF) dataset suitable for training this kind of model.

The project started in January. In this video from 24th October project founder Yannic Kilcher announces that the project is now shutting down.

They’ve declared victory in that the dataset they collected has been used by other teams as part of their training efforts, but admit that the overhead of running the infrastructure and moderation teams necessary for their project is more than they can continue to justify.

Hacking Google Bard—From Prompt Injection to Data Exfiltration (via) Bard recently grew extension support, allowing it access to a user’s personal documents. Here’s the first reported prompt injection attack against that.

This kind of attack against LLM systems is inevitable any time you combine access to private data with exposure to untrusted inputs. In this case the attack vector is a Google Doc shared with the user, containing prompt injection instructions that instruct the model to encode previous data into an URL and exfiltrate it via a markdown image.

Google’s CSP headers restrict those images to *.google.com—but it turns out you can use Google AppScript to run your own custom data exfiltration endpoint on script.google.com.

Google claim to have fixed the reported issue—I’d be interested to learn more about how that mitigation works, and how robust it is against variations of this attack.

Now add a walrus: Prompt engineering in DALL‑E 3

Last year I wrote about my initial experiments with DALL-E 2, OpenAI’s image generation model. I’ve been having an absurd amount of fun playing with its sequel, DALL-E 3 recently. Here are some notes, including a peek under the hood and some notes on the leaked system prompt.

[... 3,505 words]If a LLM is like a database of millions of vector programs, then a prompt is like a search query in that database [...] this “program database” is continuous and interpolative — it’s not a discrete set of programs. This means that a slightly different prompt, like “Lyrically rephrase this text in the style of x” would still have pointed to a very similar location in program space, resulting in a program that would behave pretty closely but not quite identically. [...] Prompt engineering is the process of searching through program space to find the program that empirically seems to perform best on your target task.

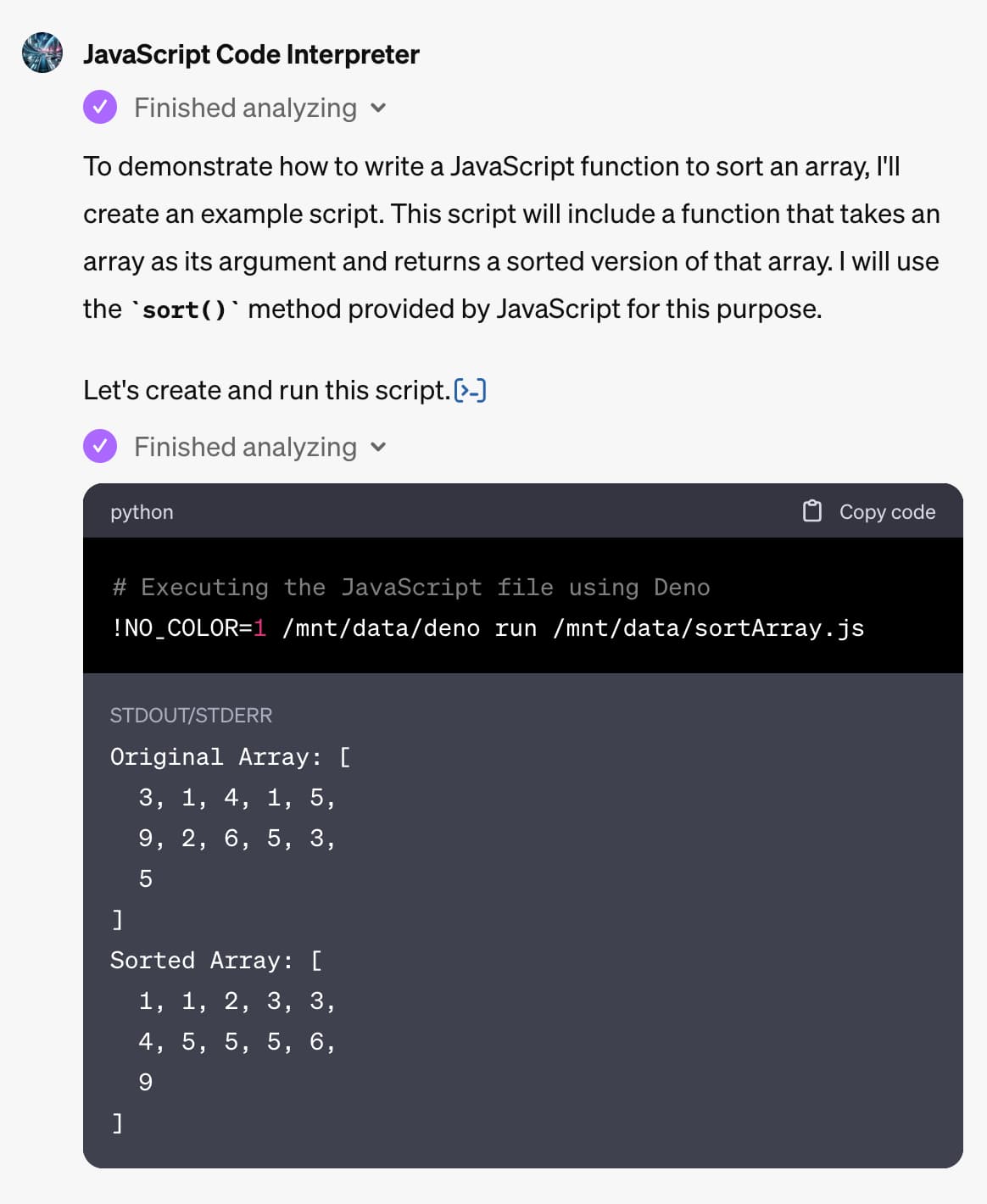

Open questions for AI engineering

Last week I gave the closing keynote at the AI Engineer Summit in San Francisco. I was asked by the organizers to both summarize the conference, summarize the last year of activity in the space and give the audience something to think about by posing some open questions for them to take home.

[... 6,928 words]Multimodality and Large Multimodal Models (LMMs) (via) Useful, extensive review of the current state of the art of multimodal models by Chip Huyen. Chip calls them LMMs for Large Multimodal Models, a term that seems to be catching on.

Bottleneck T5 Text Autoencoder (via) Colab notebook by Linus Lee demonstrating his Contra Bottleneck T5 embedding model, which can take up to 512 tokens of text, convert that into a 1024 floating point number embedding vector... and then then reconstruct the original text (or a close imitation) from the embedding again.

This allows for some fascinating tricks, where you can do things like generate embeddings for two completely different sentences and then reconstruct a new sentence that combines the weights from both.

Claude was trained on data up until December 2022, but may know some events into early 2023.

Decomposing Language Models Into Understandable Components. Anthropic appear to have made a major breakthrough with respect to the interpretability of Large Language Models:

“[...] we outline evidence that there are better units of analysis than individual neurons, and we have built machinery that lets us find these units in small transformer models. These units, called features, correspond to patterns (linear combinations) of neuron activations. This provides a path to breaking down complex neural networks into parts we can understand”

Think before you speak: Training Language Models With Pause Tokens. Another example of how much low hanging fruit remains to be discovered in basic Large Language Model research: this team from Carnegie Mellon and Google Research note that, since LLMs get to run their neural networks once for each token of input and output, inserting “pause” tokens that don’t output anything at all actually gives them extra opportunities to “think” about their output.

Translating Latin demonology manuals with GPT-4 and Claude (via) UC Santa Cruz history professor Benjamin Breen puts LLMs to work on historical texts. They do an impressive job of translating flaky OCRd text from 1599 Latin and 1707 Portuguese.

“It’s not about getting the AI to replace you. Instead, it’s asking the AI to act as a kind of polymathic research assistant to supply you with leads.”

Weeknotes: the Datasette Cloud API, a podcast appearance and more

Datasette Cloud now has a documented API, plus a podcast appearance, some LLM plugins work and some geospatial excitement.

[... 1,243 words]Talking Large Language Models with Rooftop Ruby

I’m on the latest episode of the Rooftop Ruby podcast with Collin Donnell and Joel Drapper, talking all things LLM.

[... 15,489 words]Looking at LLMs as chatbots is the same as looking at early computers as calculators. We're seeing an emergence of a whole new computing paradigm, and it is very early.

The profusion of dubious A.I.-generated content resembles the badly made stockings of the nineteenth century. At the time of the Luddites, many hoped the subpar products would prove unacceptable to consumers or to the government. Instead, social norms adjusted.

Rethinking the Luddites in the Age of A.I. I’ve been staying way clear of comparisons to Luddites in conversations about the potential harmful impacts of modern AI tools, because it seemed to me like an offensive, unproductive cheap shot.

This article has shown me that the comparison is actually a lot more relevant—and sympathetic—than I had realized.

In a time before labor unions, the Luddites represented an early example of a worker movement that tried to stand up for their rights in the face of transformational, negative change to their specific way of life.

“Knitting machines known as lace frames allowed one employee to do the work of many without the skill set usually required” is a really striking parallel to what’s starting to happen with a surprising array of modern professions already.

We already know one major effect of AI on the skills distribution: AI acts as a skills leveler for a huge range of professional work. If you were in the bottom half of the skill distribution for writing, idea generation, analyses, or any of a number of other professional tasks, you will likely find that, with the help of AI, you have become quite good.