Notes

Having watched this morning's Sora 2 introduction video, the most notable feature (aside from audio generation - original Sora was silent, Google's Veo 3 supported audio in May 2025) looks to be what OpenAI are calling "cameos" - the ability to easily capture a video version of yourself or your friends and then use them as characters in generated videos.

My guess is that they are leaning into this based on the incredible success of ChatGPT image generation in March - possibly the most successful product launch of all time, signing up 100 million new users in just the first week after release.

The driving factor for that success? People love being able to create personalized images of themselves, their friends and their family members.

Google saw a similar effect with their Nano Banana image generation model. Gemini VP Josh Woodward tweeted on 24th September:

🍌 @GeminiApp just passed 5 billion images in less than a month.

Sora 2 cameos looks to me like an attempt to capture that same viral magic but for short-form videos, not images.

Update: I got an invite. Here's "simonw performing opera on stage at the royal albert hall in a very fine purple suit with crows flapping around his head dramatically standing in front of a night orchestrion" (it was meant to be a mighty orchestrion but I had a typo.)

If you hide the system prompt and tool descriptions for your LLM agent, what you're actually doing is deliberately hiding the most useful documentation describing your service from your most sophisticated users!

It's been an extremely busy day for team Qwen. Within the last 24 hours (all links to Twitter, which seems to be their preferred platform for these announcements):

- Qwen3-Next-80B-A3B-Instruct-FP8 and Qwen3-Next-80B-A3B-Thinking-FP8 - official FP8 quantized versions of their Qwen3-Next models. On Hugging Face Qwen3-Next-80B-A3B-Instruct is 163GB and Qwen3-Next-80B-A3B-Instruct-FP8 is 82.1GB. I wrote about Qwen3-Next on Friday 12th September.

- Qwen3-TTS-Flash provides "multi-timbre, multi-lingual, and multi-dialect speech synthesis" according to their blog announcement. It's not available as open weights, you have to access it via their API instead. Here's a free live demo.

- Qwen3-Omni is today's most exciting announcement: a brand new 30B parameter "omni" model supporting text, audio and video input and text and audio output! You can try it on chat.qwen.ai by selecting the "Use voice and video chat" icon - you'll need to be signed in using Google or GitHub. This one is open weights, released under Apache 2.0 as Qwen3-Omni-30B-A3B-Instruct, Qwen3-Omni-30B-A3B-Thinking, and Qwen3-Omni-30B-A3B-Captioner on Hugging Face. That Instruct model is 70.5GB so this should be relatively accessible for running on expensive home devices.

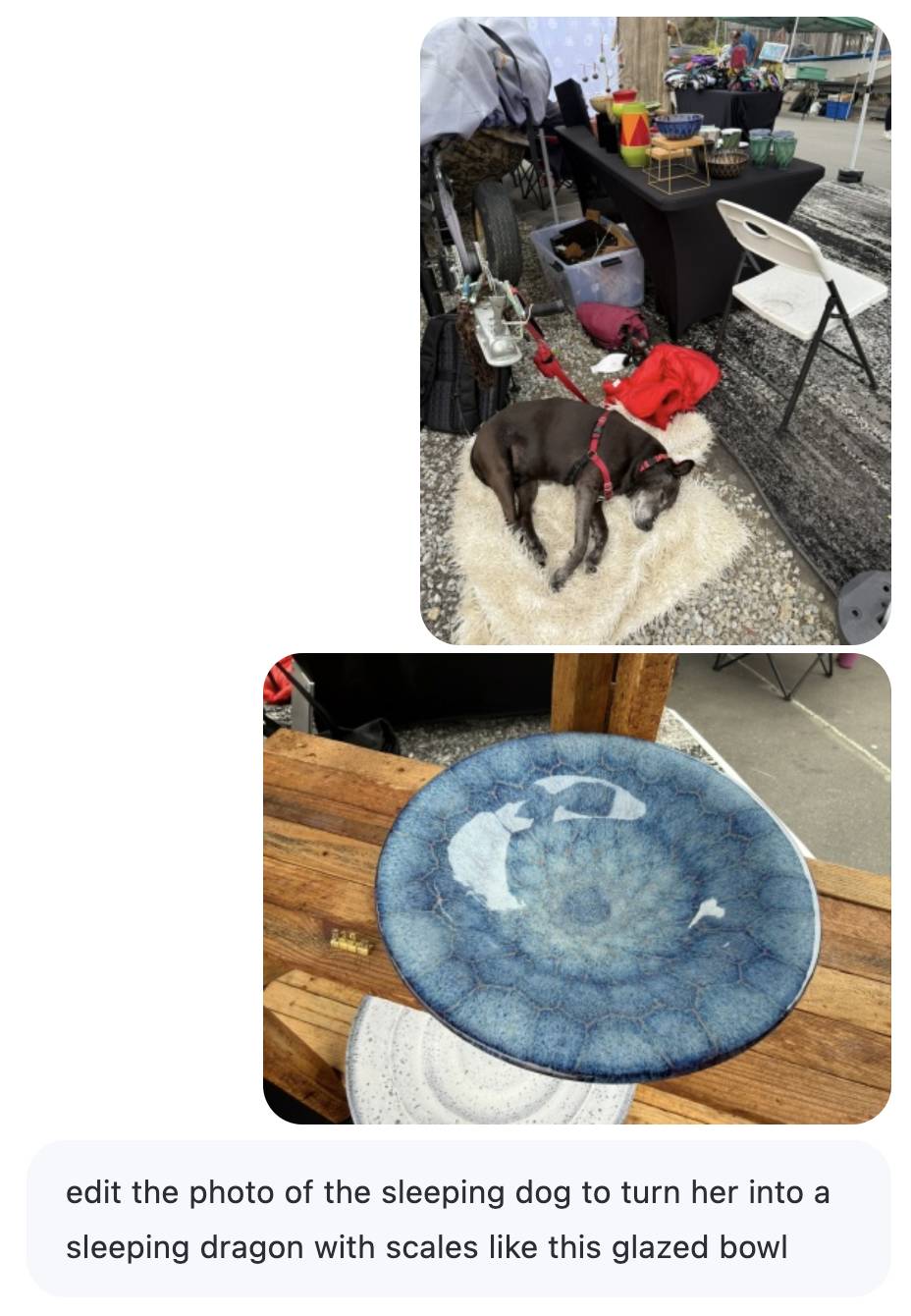

- Qwen-Image-Edit-2509 is an updated version of their excellent Qwen-Image-Edit model which I first tried last month. Their blog post calls it "the monthly iteration of Qwen-Image-Edit" so I guess they're planning more frequent updates. The new model adds multi-image inputs. I used it via chat.qwen.ai to turn a photo of our dog into a dragon in the style of one of Natalie's ceramic pots.

Here's the prompt I used, feeding in two separate images. Weirdly it used the edges of the landscape photo to fill in the gaps on the otherwise portrait output. It turned the chair seat into a bowl too!

Mistral quietly released two new models yesterday: Magistral Small 1.2 (Apache 2.0, 96.1 GB on Hugging Face) and Magistral Medium 1.2 (not open weights, same as Mistral's other "medium" models).

Despite being described as "minor updates" to the Magistral 1.1 models these have one very notable improvement:

- Multimodality: Now equipped with a vision encoder, these models handle both text and images seamlessly.

Magistral is Mistral's reasoning model, so we now have a new reasoning vision LLM.

The other features from the tiny announcement on Twitter:

- Performance Boost: 15% improvements on math and coding benchmarks such as AIME 24/25 and LiveCodeBench v5/v6.

- Smarter Tool Use: Better tool usage with web search, code interpreter, and image generation.

- Better Tone & Persona: Responses are clearer, more natural, and better formatted for you.

In July it was the International Math Olympiad (OpenAI, Gemini), today it's the International Collegiate Programming Contest (ICPC). Once again, both OpenAI and Gemini competed with models that achieved Gold medal performance.

OpenAI's Mostafa Rohaninejad:

We received the problems in the exact same PDF form, and the reasoning system selected which answers to submit with no bespoke test-time harness whatsoever. For 11 of the 12 problems, the system’s first answer was correct. For the hardest problem, it succeeded on the 9th submission. Notably, the best human team achieved 11/12.

We competed with an ensemble of general-purpose reasoning models; we did not train any model specifically for the ICPC. We had both GPT-5 and an experimental reasoning model generating solutions, and the experimental reasoning model selecting which solutions to submit. GPT-5 answered 11 correctly, and the last (and most difficult problem) was solved by the experimental reasoning model.

And here's the blog post by Google DeepMind's Hanzhao (Maggie) Lin and Heng-Tze Cheng:

An advanced version of Gemini 2.5 Deep Think competed live in a remote online environment following ICPC rules, under the guidance of the competition organizers. It started 10 minutes after the human contestants and correctly solved 10 out of 12 problems, achieving gold-medal level performance under the same five-hour time constraint. See our solutions here.

I'm still trying to confirm if the models had access to tools in order to execute the code they were writing. The IMO results in July were both achieved without tools.

Update 27th September 2025: OpenAI researcher Ahmed El-Kishky confirms that OpenAI's model had a code execution environment but no internet:

For OpenAI, the models had access to a code execution sandbox, so they could compile and test out their solutions. That was it though; no internet access.

Here's an interesting example of models incrementally improving over time: I am finding that today's leading models are competent at writing prompts for themselves and each other.

A year ago I was quite skeptical of the pattern where models are used to help build prompts. Prompt engineering was still a young enough discipline that I did not expect the models to have enough training data to be able to prompt themselves better than a moderately experienced human.

The Claude 4 and GPT-5 families both have training cut-off dates within the past year - recent enough that they've seen a decent volume of good prompting examples.

I expect they have also been deliberately trained for this. Anthropic make extensive use of sub-agent patterns in Claude Code, and published a fascinating article on that pattern (my notes on that).

I don't have anything solid to back this up - it's more of a hunch based on anecdotal evidence: over the last few months, several of my requests for a model to write a prompt have returned useful results.

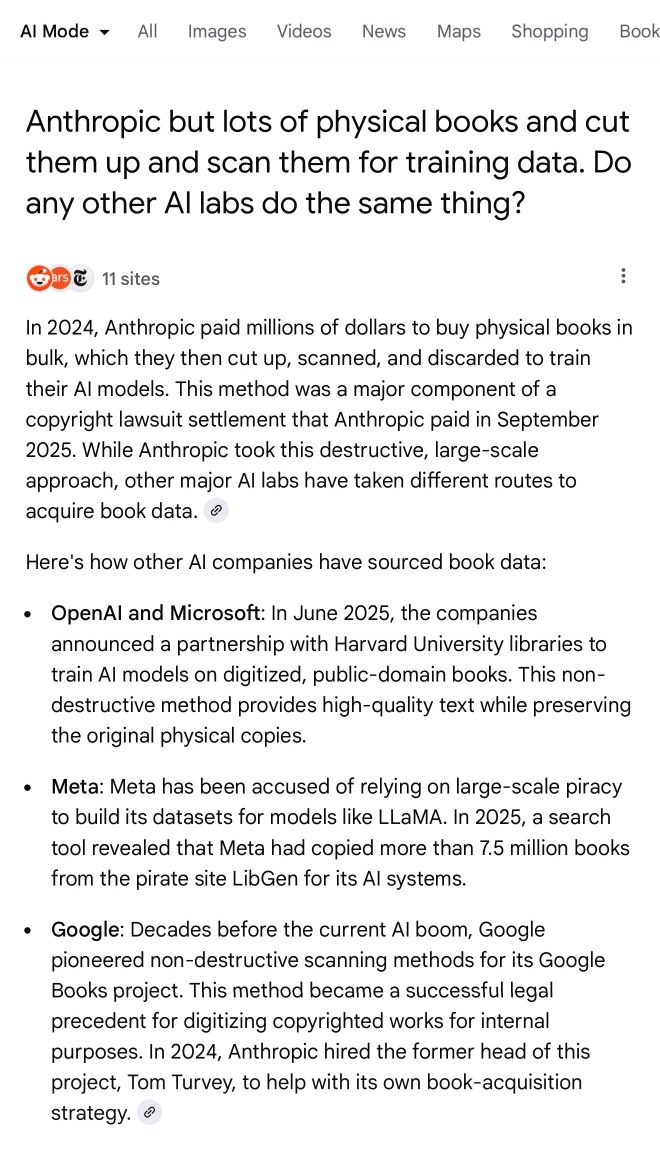

When I wrote about how good ChatGPT with GPT-5 is at search yesterday I nearly added a note about how comparatively disappointing Google's efforts around this are.

I'm glad I left that out, because it turns out Google's new "AI mode" is genuinely really good! It feels very similar to GPT-5 search but returns results much faster.

www.google.com/ai (not available in the EU, as I found out this morning since I'm staying in France for a few days.)

Here's what I got for the following question:

Anthropic but lots of physical books and cut them up and scan them for training data. Do any other AI labs do the same thing?

I'll be honest: I hadn't spent much time with AI mode for a couple of reasons:

- My expectations of "AI mode" were extremely low based on my terrible experience of "AI overviews"

- The name "AI mode" is so generic!

Based on some initial experiments I'm impressed - Google finally seem to be taking full advantage of their search infrastructure for building out truly great AI-assisted search.

I do have one disappointment: AI mode will tell you that it's "running 5 searches" but it won't tell you what those searches are! Seeing the searches that were run is really important for me in evaluating the likely quality of the end results. I've had the same problem with Google's Gemini app in the past - the lack of transparency as to what it's doing really damages my trust.

Any time I share my collection of tools built using vibe coding and AI-assisted development (now at 124, here's the definitive list) someone will inevitably complain that they're mostly trivial.

A lot of them are! Here's a list of some that I think are genuinely useful and worth highlighting:

- OCR PDFs and images directly in your browser. This is the tool that started the collection, and I still use it on a regular basis. You can open any PDF in it (even PDFs that are just scanned images with no embedded text) and it will extract out the text so you can copy-and-paste it. It uses PDF.js and Tesseract.js to do that entirely in the browser. I wrote about how I originally built that here.

- Annotated Presentation Creator - this one is so useful. I use it to turn talks that I've given into full annotated presentations, where each slide is accompanied by detailed notes. I have 29 blog entries like that now and most of them were written with the help of this tool. Here's how I built that, plus follow-up prompts I used to improve it.

- Image resize, crop, and quality comparison - I use this for every single image I post to my blog. It lets me drag (or paste) an image onto the page and then shows me a comparison of different sizes and quality settings, each of which I can download and then upload to my S3 bucket. I recently added a slightly janky but mobile-accessible cropping tool as well. Prompts.

- Social Media Card Cropper - this is an even more useful image tool. Bluesky, Twitter etc all benefit from a 2x1 aspect ratio "card" image. I built this custom tool for creating those - you can paste in an image and crop and zoom it to the right dimensions. I use this all the time. Prompts.

- SVG to JPEG/PNG - every time I publish an SVG of a pelican riding a bicycle I use this tool to turn that SVG into a JPEG or PNG. Prompts.

- Encrypt / decrypt message - I often run workshops where I want to distribute API keys to the workshop participants. This tool lets me encrypt a message with a passphrase, then share the resulting URL to the encrypted message and tell people (with a note on a slide) how to decrypt it. Prompt.

- Jina Reader - enter a URL, get back a Markdown version of the page. It's a thin wrapper over the Jina Reader API, but it's useful because it adds a "copy to clipboard" button which means it's one of the fastest ways to turn a webpage into data on a clipboard on my mobile phone. I use this several times a week. Prompts.

- llm-prices.com - a pricing comparison and token pricing calculator for various hosted LLMs. This one started out as a tool but graduated to its own domain name. Here's the prompting development history.

- Open Sauce 2025 - an unofficial schedule for the Open Sauce conference, complete with option to export to ICS plus a search tool and now-and-next. I built this entirely on my phone using OpenAI Codex, including scraping the official schedule - full details here.

- Hacker News Multi-Term Histogram - compare search terms on Hacker News to see how their relative popularity changed over time. Prompts.

- Passkey experiment - a UI for trying out the Passkey / WebAuthn APIs that are built into browsers these days. Prompts.

- Incomplete JSON Pretty Printer - do you ever find yourself staring at a screen full of JSON that isn't completely valid because it got truncated? This tool will pretty-print it anyway. Prompts.

- Bluesky WebSocket Feed Monitor - I found out Bluesky has a Firehose API that can be accessed directly from the browser, so I vibe-coded up this tool to try it out. Prompts.
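To give a feel for what a tool like the Incomplete JSON Pretty Printer has to do, here is a minimal Python sketch of one possible approach: track which strings, objects and arrays are still open when the input cuts off, then append the missing closers before pretty-printing. This is an illustration only, not the code behind the tool listed above, and the function names `close_truncated_json` and `pretty_print_truncated` are hypothetical. It assumes the truncation lands inside a string value or straight after a complete value; input that ends on a dangling key, colon or comma would need extra handling.

```python
import json


def close_truncated_json(text):
    """Append the closers that a truncated JSON document is still missing."""
    closers = []        # closing characters still owed, innermost last
    in_string = False   # True while the scan is inside a string literal
    escaped = False     # True when the previous character was a backslash
    for ch in text:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch == "{":
            closers.append("}")
        elif ch == "[":
            closers.append("]")
        elif ch in "}]" and closers:
            closers.pop()
    repaired = text
    if in_string:
        if escaped:
            # Drop a dangling backslash so it does not escape the new quote.
            repaired = repaired[:-1]
        repaired += '"'
    return repaired + "".join(reversed(closers))


def pretty_print_truncated(text):
    """Repair the text, then re-serialize it with two-space indentation."""
    return json.dumps(json.loads(close_truncated_json(text)), indent=2)
```

Calling `pretty_print_truncated('{"a": [1, 2, {"b": "hel')` repairs the input to `{"a": [1, 2, {"b": "hel"}]}` before formatting it.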

In putting this list together I realized I wanted to be able to link to the prompts for each tool... but those were hidden inside a collapsed <details><summary> element for each one. So I fired up OpenAI Codex and prompted:

Update the script that builds the colophon.html page such that the generated page has a tiny bit of extra JavaScript - when the page is loaded as e.g. https://tools.simonwillison.net/colophon#jina-reader.html it should notice the #jina-reader.html fragment identifier and ensure that the Development history details/summary for that particular tool is expanded when the page loads.

It authored this PR for me which fixed the problem.

I just sent out my August 2025 sponsors-only newsletter summarizing the past month in LLMs and my other work. Topics included GPT-5, gpt-oss, image editing models (Qwen-Image-Edit and Gemini Nano Banana), other significant model releases and the tools I'm using at the moment.

If you'd like a preview of the newsletter, here's the July 2025 edition I sent out a month ago.

New sponsors get access to the full archive. If you start sponsoring for $10/month or more right now you'll get instant access to the August edition in my simonw-private/monthly GitHub repository.

If you've already read all 85 posts I wrote in August the newsletter acts mainly as a recap, but I've had positive feedback from people who prefer to get the monthly edited highlights over reading the firehose that is my blog!

Here's the table of contents for the August newsletter:

- GPT-5

- OpenAI's open models: gpt-oss-120b and gpt-oss-20b

- Other significant model releases in August

- Image editing: Qwen-Image-Edit and Gemini Nano Banana

- More prompt injection and more lethal trifecta

- Tools I'm using at the moment

- Bonus links

Since I love collecting questionable analogies for LLMs, here's a new one I just came up with: an LLM is a lossy encyclopedia. They have a huge array of facts compressed into them but that compression is lossy (see also Ted Chiang).

The key thing is to develop an intuition for questions it can usefully answer vs questions that are at a level of detail where the lossiness matters.

This thought was sparked by a comment on Hacker News asking why an LLM couldn't "Create a boilerplate Zephyr project skeleton, for Pi Pico with st7789 spi display drivers configured". That's more of a lossless encyclopedia question!

My answer:

The way to solve this particular problem is to make a correct example available to it. Don't expect it to just know extremely specific facts like that - instead, treat it as a tool that can act on facts presented to it.

Today I learned - via a proposal to remove mentions of XSLT from the HTML spec - that congress.gov uses XSLT to serve XML bills as XHTML - here's H. R. 3617 117th CONGRESS 1st Session for example.

View source on that page and it starts like this:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="billres.xsl"?>
<!DOCTYPE bill PUBLIC "-//US Congress//DTDs/bill.dtd//EN" "bill.dtd">
<bill bill-stage="Introduced-in-House" dms-id="H5BD50AB7712141319B352D46135AAC2B" public-private="public" key="H" bill-type="olc">
<metadata xmlns:dc="http://purl.org/dc/elements/1.1/">
<dublinCore>
<dc:title>117 HR 3617 IH: Marijuana Opportunity Reinvestment and Expungement Act of 2021</dc:title>
<dc:publisher>U.S. House of Representatives</dc:publisher>
<dc:date>2021-05-28</dc:date>
<dc:format>text/xml</dc:format>
<dc:language>EN</dc:language>
<dc:rights>Pursuant to Title 17 Section 105 of the United States Code, this file is not subject to copyright protection and is in the public domain.</dc:rights>
</dublinCore>
</metadata>
<form>
<distribution-code display="yes">I</distribution-code>
<congress display="yes">117th CONGRESS</congress><session display="yes">1st Session</session>
<legis-num display="yes">H. R. 3617</legis-num>
<current-chamber>IN THE HOUSE OF REPRESENTATIVES</current-chamber>

Digging into those XSLT stylesheets leads to billres-details.xsl - gist copy here - which starts with a huge changelog comment with notes dating all the way back to 2004!
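Because the bill is served as plain XML, the Dublin Core metadata in that header is easy to read with ordinary XML tooling. Here is a minimal sketch using Python's standard library. The `SAMPLE` string is a trimmed, hand-closed copy of the header quoted above (the real document is much longer), and `bill_metadata` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace prefix used by the Dublin Core elements in the bill header.
DC = {"dc": "http://purl.org/dc/elements/1.1/"}

# Trimmed copy of the quoted header, closed by hand so that it parses.
SAMPLE = """<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="billres.xsl"?>
<bill bill-stage="Introduced-in-House">
  <metadata xmlns:dc="http://purl.org/dc/elements/1.1/">
    <dublinCore>
      <dc:title>117 HR 3617 IH: Marijuana Opportunity Reinvestment and Expungement Act of 2021</dc:title>
      <dc:publisher>U.S. House of Representatives</dc:publisher>
      <dc:date>2021-05-28</dc:date>
    </dublinCore>
  </metadata>
</bill>"""


def bill_metadata(xml_text):
    """Return the bill stage plus a few Dublin Core fields as a dict."""
    root = ET.fromstring(xml_text)
    fields = {"stage": root.get("bill-stage")}
    for name in ("title", "publisher", "date"):
        element = root.find(f".//dc:{name}", DC)
        fields[name] = element.text if element is not None else None
    return fields
```

`bill_metadata(SAMPLE)["date"]` gives `"2021-05-28"`. The `xml-stylesheet` processing instruction is skipped by the parser; it only matters to a browser applying the XSLT.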

If you've been experimenting with OpenAI's Codex CLI and have been frustrated that it's not possible to select text and copy it to the clipboard, at least when running in the Mac terminal, you should know that they fixed that in this issue last week. (I genuinely didn't know it was possible to build a terminal app that disabled copy and paste.)

The new 0.20.0 version from three days ago also completely removes the old TypeScript codebase in favor of Rust. Even installations via NPM now get the Rust version.

I originally installed Codex via Homebrew, so I had to run this command to get the updated version:

brew upgrade codex

Another Codex tip: to use GPT-5 (or any other specific OpenAI model) you can run it like this:

export OPENAI_DEFAULT_MODEL="gpt-5"

codex

This no longer works, see update below.

I've been using a codex-5 script on my PATH containing this, because sometimes I like to live dangerously!

#!/usr/bin/env zsh

# Usage: codex-5 [additional args passed to `codex`]

export OPENAI_DEFAULT_MODEL="gpt-5"

exec codex --dangerously-bypass-approvals-and-sandbox "$@"

Update: It looks like GPT-5 is the default model in v0.20.0 already.

Also the environment variable I was using no longer does anything, it was removed in this commit (I used Codex Web to help figure that out). You can use the -m model_id command-line option instead.

A couple of weeks ago I was invited to OpenAI's headquarters for a "preview event", for which I had to sign both an NDA and a video release waiver. I suspected it might relate to either GPT-5 or the OpenAI open weight models... and GPT-5 it was!

OpenAI had invited five developers: Claire Vo, Theo Browne, Ben Hylak, Shawn @swyx Wang, and myself. We were all given early access to the new models and asked to spend a couple of hours (of paid time, see my disclosures) experimenting with them, while being filmed by a professional camera crew.

The resulting video is now up on YouTube. Unsurprisingly most of my edits related to SVGs of pelicans.

Two interesting examples of inference speed as a flagship feature of LLM services today.

First, Cerebras announced two new monthly plans for their extremely high speed hosted model service: Cerebras Code Pro ($50/month, 1,000 messages a day) and Cerebras Code Max ($200/month, 5,000/day). The model they are selling here is Qwen's Qwen3-Coder-480B-A35B-Instruct, likely the best available open weights coding model right now and one that was released just ten days ago. Ten days from model release to third-party subscription service feels like some kind of record.

Cerebras claim they can serve the model at an astonishing 2,000 tokens per second - four times the speed of Claude Sonnet 4 in their demo video.

Also today, Moonshot announced a new hosted version of their trillion parameter Kimi K2 model called kimi-k2-turbo-preview:

🆕 Say hello to kimi-k2-turbo-preview Same model. Same context. NOW 4× FASTER.

⚡️ From 10 tok/s to 40 tok/s.

💰 Limited-Time Launch Price (50% off until Sept 1)

- $0.30 / million input tokens (cache hit)

- $1.20 / million input tokens (cache miss)

- $5.00 / million output tokens

👉 Explore more: platform.moonshot.ai

This is twice the price of their regular model for 4x the speed (increasing to 4x the price in September). No details yet on how they achieved the speed-up.
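To make that trade-off concrete, here is a rough Python sketch that turns the launch prices and speeds quoted in the announcement into a cost and a wait time for a given workload. The function names and the `multiplier` parameter are my own; a multiplier of 2.0 models the price after the 50% launch discount ends, which is an inference from the "50% off" wording rather than a published price list.

```python
# Launch prices from the announcement, in US dollars per million tokens.
LAUNCH_PRICES = {
    "input_cache_hit": 0.30,
    "input_cache_miss": 1.20,
    "output": 5.00,
}


def cost_usd(tokens, prices=LAUNCH_PRICES, multiplier=1.0):
    """Cost of a workload given token counts keyed like LAUNCH_PRICES.

    multiplier=2.0 is an assumption modelling the end of the 50% discount.
    """
    total = sum(tokens.get(kind, 0) * price for kind, price in prices.items())
    return multiplier * total / 1_000_000


def generation_seconds(output_tokens, tokens_per_second):
    """Time to stream the output at a steady tokens-per-second rate."""
    return output_tokens / tokens_per_second


usage = {"input_cache_miss": 1_000_000, "output": 200_000}
launch_cost = cost_usd(usage)                     # about $2.20 at launch prices
later_cost = cost_usd(usage, multiplier=2.0)      # about $4.40 after the discount
slow = generation_seconds(4_000, 10)              # 400 seconds at 10 tok/s
fast = generation_seconds(4_000, 40)              # 100 seconds at 40 tok/s
```

For a 4,000 token response the quoted speed-up is the difference between waiting almost seven minutes and waiting under two.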

I am interested to see how much market demand there is for faster performance like this. I've experimented with Cerebras in the past and found that the speed really does make iterating on code with live previews feel a whole lot more interactive.

This morning I sent out the third edition of my LLM digest newsletter for my $10/month and higher sponsors on GitHub. It included the following section headers:

- Claude Code

- Model releases in July

- Gold medal performances in the IMO

- Reverse engineering system prompts

- Tools I'm using at the moment

The newsletter is a condensed summary of highlights from the past month of my blog. I published 98 posts in July - the concept for the newsletter is that you can pay me for the version that only takes 10 minutes to read!

Here are the newsletters I sent out for June 2025 and May 2025, if you want a taste of what you'll be getting as a sponsor. New sponsors instantly get access to the archive of previous newsletters, including the one I sent this morning.

Update: I also sent out my much longer, more frequent and free weekly-ish newsletter - this edition covers just the last three days because there's been so much going on. That one is entirely copy-and-pasted from my blog so if you read me via feeds you'll have seen it all already.

Here are a few more model releases from today, to round out a very busy July:

- Cohere released Command A Vision, their first multi-modal (image input) LLM. Like their others it's open weights under Creative Commons Attribution Non-Commercial, so you need to license it (or use their paid API) if you want to use it commercially.

- San Francisco AI startup Deep Cogito released four open weights hybrid reasoning models, cogito-v2-preview-deepseek-671B-MoE, cogito-v2-preview-llama-405B, cogito-v2-preview-llama-109B-MoE and cogito-v2-preview-llama-70B. These follow their v1 preview models in April at smaller 3B, 8B, 14B, 32B and 70B sizes. It looks like their unique contribution here is "distilling inference-time reasoning back into the model’s parameters" - demonstrating a form of self-improvement. I haven't tried any of their models myself yet.

- Mistral released Codestral 25.08, an update to their Codestral model which is specialized for fill-in-the-middle autocomplete as seen in text editors like VS Code, Zed and Cursor.

- And an anonymous stealth preview model called Horizon Alpha running on OpenRouter was released yesterday and is attracting a lot of attention.

Something that has become undeniable this month is that the best available open weight models now come from the Chinese AI labs.

I continue to have a lot of love for Mistral, Gemma and Llama but my feeling is that Qwen, Moonshot and Z.ai have positively smoked them over the course of July.

Here's what came out this month, with links to my notes on each one:

- Moonshot Kimi-K2-Instruct - 11th July, 1 trillion parameters

- Qwen Qwen3-235B-A22B-Instruct-2507 - 21st July, 235 billion

- Qwen Qwen3-Coder-480B-A35B-Instruct - 22nd July, 480 billion

- Qwen Qwen3-235B-A22B-Thinking-2507 - 25th July, 235 billion

- Z.ai GLM-4.5 and GLM-4.5 Air - 28th July, 355 and 106 billion

- Qwen Qwen3-30B-A3B-Instruct-2507 - 29th July, 30 billion

- Qwen Qwen3-30B-A3B-Thinking-2507 - 30th July, 30 billion

- Qwen Qwen3-Coder-30B-A3B-Instruct - 31st July, 30 billion (released after I first posted this note)

Notably absent from this list is DeepSeek, but that's only because their last model release was DeepSeek-R1-0528 back in May.

The only janky license among them is Kimi K2, which uses a non-OSI-compliant modified MIT. Qwen's models are all Apache 2 and Z.ai's are MIT.

The larger Chinese models all offer their own APIs and are increasingly available from other providers. I've been able to run versions of the Qwen 30B and GLM-4.5 Air 106B models on my own laptop.

I can't help but wonder if part of the reason for the delay in release of OpenAI's open weights model comes from a desire to be notably better than this truly impressive lineup of Chinese models.

Update August 5th 2025: The OpenAI open weight models came out and they are very impressive.

A few months ago I added a tool to my blog for bulk-applying tags to old content. It works as an extension to my existing search interface, letting me run searches and then quickly apply a tag to relevant results.

Since adding this I've been much more aggressive in categorizing my older content, including adding new tags when I spot an interesting trend that warrants its own page.

Today I added system-prompts and applied it to 41 existing posts that talk about system prompts for LLM systems, including a bunch that directly quote system prompts that have been deliberately published or leaked.

Other tags I've added recently include press-quotes for times I've been quoted in the press, agent-definitions for my ongoing collection of different ways people define "agents" and paper-review for posts where I review an academic paper.

If you're running low on disk space and are a uv user, don't forget about uv cache prune:

uv cache prune removes all unused cache entries. For example, the cache directory may contain entries created in previous uv versions that are no longer necessary and can be safely removed. uv cache prune is safe to run periodically, to keep the cache directory clean.

My Mac just ran out of space. I ran OmniDiskSweeper and noticed that the ~/.cache/uv directory was 63.4GB - so I ran this:

uv cache prune

Pruning cache at: /Users/simon/.cache/uv

Removed 1156394 files (37.3GiB)

And now my computer can breathe again!
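If you'd rather check the cache size from a script than from a disk usage app, a small Python sketch like the following can total up a directory. The helper names are hypothetical, and the `~/.cache/uv` path in the usage note is simply the location mentioned above; other systems may keep the cache elsewhere.

```python
import os


def directory_size_bytes(root):
    """Sum the sizes of all regular files below root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue
            try:
                total += os.path.getsize(path)
            except OSError:
                # The file vanished or is unreadable; ignore it.
                pass
    return total


def format_gib(size_bytes):
    """Format a byte count in GiB (1 GiB = 2**30 bytes), the unit uv reports."""
    return f"{size_bytes / 2**30:.1f}GiB"
```

Running `format_gib(directory_size_bytes(os.path.expanduser("~/.cache/uv")))` before and after a prune shows how much was reclaimed. Note that tools differ on GB (powers of 10) versus GiB (powers of 2), so numbers from different sources won't line up exactly.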

The more time I spend using LLMs for code, the less I worry for my career - even as their coding capabilities continue to improve.

Using LLMs as part of my process helps me understand how much of my job isn't just bashing out code.

My job is to identify problems that can be solved with code, then solve them, then verify that the solution works and has actually addressed the problem.

A more advanced LLM may eventually be able to completely handle the middle piece. It can help with the first and last pieces, but only when operated by someone who understands both the problems to be solved and how to interact with the LLM to help solve them.

No matter how good these things get, they will still need someone to find problems for them to solve, define those problems and confirm that they are solved. That's a job - one that other humans will be happy to outsource to an expert practitioner.

It's also about 80% of what I do as a software developer already.

Something I've realized about LLM tool use is that it means that if you can reduce a problem to something that can be solved by an LLM in a sandbox using tools in a loop, you can brute force that problem.

The challenge then becomes identifying those problems and figuring out how to configure a sandbox for them, what tools to provide and how to define the success criteria for the model.

That still takes significant skill and experience, but it's at a higher level than chewing through that problem using trial and error by hand.
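The shape of that loop can be sketched in a few lines of Python. Everything here is hypothetical scaffolding: `propose` stands in for a call to a model (with whatever tools it is given), `check` stands in for the success criteria run inside the sandbox, and neither corresponds to a real library API.

```python
from typing import Callable, Optional


def brute_force(
    propose: Callable[[list[str]], str],
    check: Callable[[str], Optional[str]],
    max_attempts: int = 10,
) -> tuple[Optional[str], int]:
    """Keep asking for candidates until one passes the check.

    propose receives the failure messages so far and returns a new candidate.
    check returns None on success, or an error message to feed back.
    Returns (winning candidate or None, number of attempts used).
    """
    feedback: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        error = check(candidate)
        if error is None:
            return candidate, attempt
        feedback.append(error)
    return None, max_attempts


# Toy stand-ins: the "model" guesses a number, the "sandbox" wants 3.
def toy_propose(feedback):
    return str(len(feedback))


def toy_check(candidate):
    return None if candidate == "3" else f"{candidate} is too low"


result = brute_force(toy_propose, toy_check)
```

The interesting design work is all in `check`: the loop can only brute force a problem when success is something the sandbox can verify automatically.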

My x86 assembly experiment with Claude Code was the thing that made this click for me.

Quitting programming as a career right now because of LLMs would be like quitting carpentry as a career thanks to the invention of the table saw.

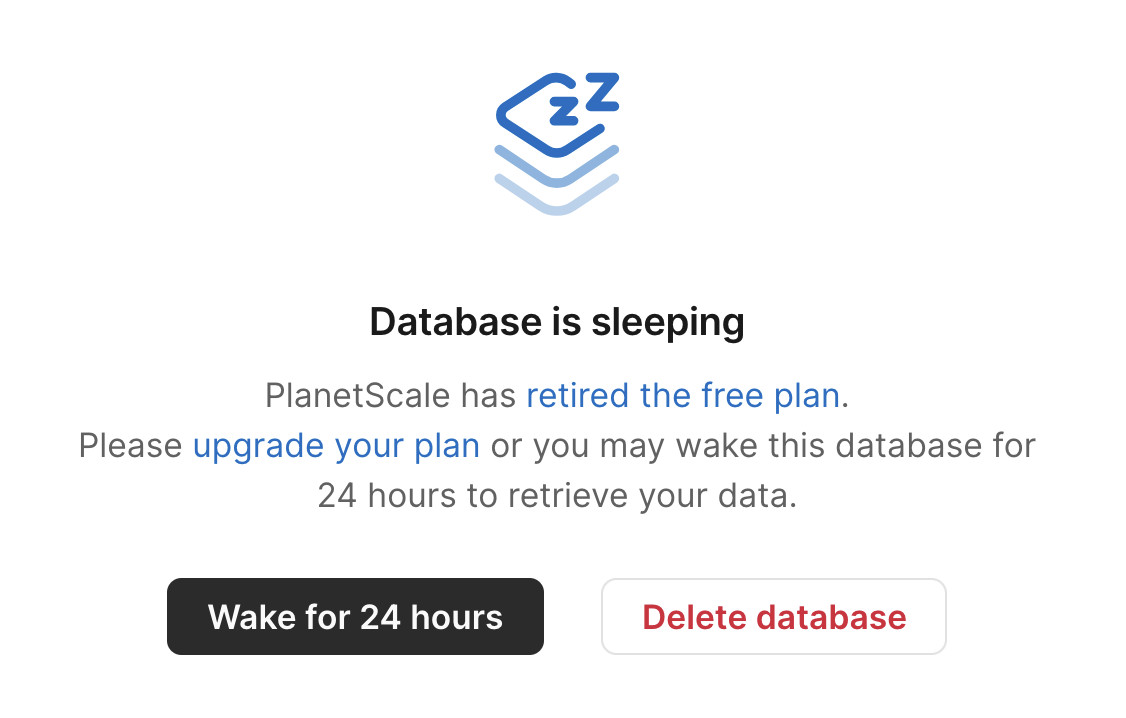

Sometimes a service with a free plan will decide to stop supporting it. I understand why this happens, but I'm often disappointed at the treatment of existing users' data. It's easy to imagine users forgetting about their old accounts, missing the relevant emails and then discovering too late that their data is gone.

Inspired by today's news about PlanetScale PostgreSQL I signed into PlanetScale and found I had a long-forgotten trial account there with a three-year-old database on their free tier. That free tier was retired in March 2024.

Here's the screen that greeted me in their control panel:

What a great way to handle retiring a free plan! My data is still there, and I have the option to spin up a database for 24 hours to help get it back out again.

Using LLMs for code archaeology is pretty fun.

I stumbled across this blog entry from 2003 today, in which I had gotten briefly excited about ColdFusion and implemented an experimental PHP template engine that used XML tags to achieve a similar effect:

<h1>%title%</h1> <sql id="recent"> select title from entries order by added desc limit 0, %limit% </sql> <ul> <output sql="recent"> <li>%title%</li> </output> </ul>

I'd completely forgotten about this, and in scanning through the PHP it looked like it had extra features that I hadn't described in the post.

So... I fed my 22 year old TemplateParser.class.php file into Claude and prompted:

Write detailed markdown documentation for this template language

Here's the resulting documentation. It's pretty good, but the highlight was the Claude transcript which concluded:

This appears to be a custom template system from the mid-2000s era, designed to separate presentation logic from PHP code while maintaining database connectivity for dynamic content generation.

Mid-2000s era indeed!

I just sent out the second edition of my sponsors only monthly newsletter. Anyone who sponsors me for $10/month or more on GitHub gets this carefully hand-curated summary of the last month in AI/LLMs/my projects designed to be readable in ten minutes or less.

My regular newsletter remains free - the monthly one is the only paywalled content I produce, the idea being that you can pay me to send you less.

Here's the first edition for May 2025 as a preview of what you can expect. You'll get access to the June digest and the full archive automatically if you decide to start sponsoring.

The term context engineering has recently started to gain traction as a better alternative to prompt engineering. I like it. I think this one may have sticking power.

Here's an example tweet from Shopify CEO Tobi Lutke:

I really like the term “context engineering” over prompt engineering.

It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.

Recently amplified by Andrej Karpathy:

+1 for "context engineering" over "prompt engineering".

People associate prompts with short task descriptions you'd give an LLM in your day-to-day use. When in every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step. Science because doing this right involves task descriptions and explanations, few shot examples, RAG, related (possibly multimodal) data, tools, state and history, compacting [...] Doing this well is highly non-trivial. And art because of the guiding intuition around LLM psychology of people spirits. [...]

I've spoken favorably of prompt engineering in the past - I hoped that term could capture the inherent complexity of constructing reliable prompts. Unfortunately, most people's inferred definition is that it's a laughably pretentious term for typing things into a chatbot!

It turns out that inferred definitions are the ones that stick. I think the inferred definition of "context engineering" is likely to be much closer to the intended meaning.

Yesterday Anthropic got a bunch of buzz out of their new window.claude.complete() API which allows Claude Artifacts to run their own API calls to execute prompts.

It turns out Gemini had beaten them to that feature by over a month, but the announcement was tucked away in a bullet point of their release notes for the 20th of May:

Vibe coding apps in Canvas just got better too! With just a few prompts, you can now build fully functional personalised apps in Canvas that can use Gemini-powered features, save data between sessions and share data between multiple users.

Ethan Mollick has been building some neat demos on top of Gemini Canvas, including this text adventure starship bridge simulator.

Similar to Claude Artifacts, Gemini Canvas detects if the application uses APIs that require authentication (to run prompts, for example) and requests the user sign in with their Google account:

![Futuristic sci-fi interface screenshot showing "Helm Control" at top with navigation buttons for Helm, Comms, Science, Tactical, Engineering, and Operations, displaying red error message "[SYSTEM_ERROR] Connection to AI core failed: API error: 403. This may be an authentication issue." with command input field showing "Enter command..." and Send button, plus Google Account sign-in notification at bottom stating "You need to sign in with your Google Account to see some features" with Sign in button and X close icon](https://static.simonwillison.net/static/2025/gemini-auth.jpg)

Two interesting new products for running code in a sandbox today.

Cloudflare launched their Containers product in open beta, and added a new Sandbox library for Cloudflare Workers that can run commands in a "secure, container-based environment":

import { getSandbox } from "@cloudflare/sandbox";

const sandbox = getSandbox(env.Sandbox, "my-sandbox");

const output = sandbox.exec("ls", ["-la"]);

Vercel shipped a similar feature, introduced in Run untrusted code with Vercel Sandbox, which enables code that looks like this:

import { Sandbox } from "@vercel/sandbox";

const sandbox = await Sandbox.create();

await sandbox.writeFiles([

{ path: "script.js", stream: Buffer.from(result.text) },

]);

await sandbox.runCommand({

cmd: "node",

args: ["script.js"],

stdout: process.stdout,

stderr: process.stderr,

});

In both cases a major intended use-case is safely executing code that has been created by an LLM.

I've added a Disclosures section to my about page, listing my various sources of income and the companies that directly sponsor my work or have supported it in the recent past.

I do not receive any compensation for writing about specific topics on this blog - no sponsored content! I plan to continue this policy. If I ever change this I will disclose that both here and in the post itself. [...]

I see my credibility as one of my most valuable assets, so it's important to be transparent about how financial interests may influence my writing here.

I took inspiration from Molly White's disclosures page.

Here's a tip that works on YouTube and almost any other web page that shows you a video. You can increase the playback rate beyond the usually-exposed 2x by running this in your browser DevTools console:

document.querySelector('video').playbackRate = 2.5

I find this is the fastest I can reasonably watch most videos at, with subtitles on to help my comprehension - it turns a 40 minute video into just 16 minutes, short enough that I don't feel too guilty taking time off whatever else I'm doing to watch it!
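The arithmetic behind that is just the video length divided by the playback rate. A tiny Python sketch, with `watch_minutes` as a hypothetical helper name, makes it easy to try other rates:

```python
def watch_minutes(video_minutes, playback_rate):
    """Wall-clock minutes needed to watch a video at a given playback rate."""
    if playback_rate <= 0:
        raise ValueError("playback_rate must be positive")
    return video_minutes / playback_rate


at_two_and_a_half = watch_minutes(40, 2.5)  # 16.0 minutes
at_default_max = watch_minutes(40, 2)       # 20.0 minutes
```

So going from the usually-exposed 2x to 2.5x saves another four minutes on a 40 minute video.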