Notes


I continue to have fun running fantasy cooking prompts through LLMs - this time I tried "Give me a wildly ambitious recipe for zucchini cooked three ways" followed by "Go more ambitious" and now I need to get myself a centrifuge to help spherify my clarified zucchini consommé.

I wrote this recently in a conversation about whether coding agents can work as a replacement for human programmers.

The "agentic" coding tools we have right now work like this:

- A skilled individual with both deep domain understanding and deep understanding of the capabilities of the agent (including understanding what tools are available to that agent) poses a clear task to it.

- The agent writes some code relating to that task. It runs a tool to execute and test that code. It inspects the result, and if there are errors it edits the code and tries again.

- It may call other tools as well, for example a search tool to find related code or even to look up API documentation elsewhere (including via web search).

- It continues like this until it hits a loosely defined “done” state or gets stuck.

- The skilled individual then reviews what it has done and almost always finds that it has not solved the problem to their satisfaction... so they apply their expertise and domain understanding to prompt it again to try and get to that desired state.

Without the skilled individual, the “agent” is useless. It may as well not exist.
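The loop described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular coding agent is implemented: `run_agent`, the `model` callable and the `run_tests` tool are all hypothetical names, and the "model" in the usage example is a scripted stand-in rather than an LLM.

```python
from typing import Callable

# Hypothetical protocol: a "model" takes the transcript so far and returns
# either ("tool", tool_name, argument) or ("done", summary).
Model = Callable[[list], tuple]


def run_agent(task: str, model: Model, tools: dict, max_steps: int = 10):
    """Run tools in a loop until the model says it is done or gets stuck."""
    transcript = [("task", task)]
    for _ in range(max_steps):
        action = model(transcript)
        if action[0] == "done":
            return "done", action[1], transcript
        _, tool_name, argument = action
        # Run the requested tool and feed the result back to the model.
        result = tools[tool_name](argument)
        transcript.append((tool_name, argument, result))
    # Ran out of steps: the "gets stuck" outcome.
    return "stuck", None, transcript


def scripted_model(transcript):
    """Stand-in for an LLM: run the tests once, then declare it is done."""
    if len(transcript) == 1:
        return ("tool", "run_tests", "tests/")
    return ("done", "tests pass")


status, summary, transcript = run_agent(
    "fix the failing test",
    scripted_model,
    tools={"run_tests": lambda path: f"ran tests in {path}: 1 passed"},
)
print(status, summary)
```

Nothing in the loop decides whether the result is actually any good. That judgement stays with the skilled individual reviewing the outcome.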

That memvid thing that's been going around recently is a trap. It's an embedding store that records the original text that has been embedded in QR codes in a video file. That's an absurd thing to do, and the only purpose of the repo is to make people who uncritically share it look foolish. Don't fall for the trap.

Every time I get into an online conversation about prompt injection it's inevitable that someone will argue that a mitigation which works 99% of the time is still worthwhile because there's no such thing as a security fix that is 100% guaranteed to work.

I don't think that's true.

If I use parameterized SQL queries my systems are 100% protected against SQL injection attacks.

If I make a mistake applying those and someone reports it to me I can fix that mistake and now I'm back up to 100%.

If our measures against SQL injection were only 99% effective none of our digital activities involving relational databases would be safe.

I don't think it is unreasonable to want a security fix that, when applied correctly, works 100% of the time.

(I first argued a version of this back in September 2022 in You can’t solve AI security problems with more AI.)
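To make the SQL injection comparison concrete, here is a small sketch using Python's built-in `sqlite3` module. The table, the data and the malicious input are all made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)

malicious = "nobody' OR '1'='1"

# Vulnerable: attacker-controlled text is spliced into the SQL itself.
unsafe_sql = f"SELECT secret FROM users WHERE name = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Parameterized: the value is passed separately and only ever treated as data.
safe_rows = conn.execute(
    "SELECT secret FROM users WHERE name = ?", (malicious,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The string-built query leaks every row, while the parameterized one returns nothing because no user has that literal name.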

My post this morning about Design Patterns for Securing LLM Agents against Prompt Injections is an example of a blogging format I'd love to see more of: informal but informed commentary on academic papers.

Academic papers are generally hard to read. Sadly that's almost a requirement of the format: the incentives for publishing papers that make it through peer review are often at odds with producing text that's easy for non-academics to digest.

(This new Design Patterns paper bucks that trend: the writing is clear, it’s enjoyable to read and the target audience clearly includes practitioners, not just other researchers.)

In addition to breaking a paper down into more digestible chunks, writing about papers offers an extremely valuable filter. There are hundreds of new papers published every day: seeing someone whose work you respect confirm that a paper is worth your time is a really strong signal.

I added a paper-review tag this morning, gathering six posts where I’ve attempted this kind of review. Notes on the SQLite DuckDB paper in September 2022 was my first.

I apply the same principle to these as my link blog: try to add something extra, so that anyone who reads both my post and the paper itself gets a little bit of extra value from my notes.

It's this blog's 23rd birthday today!

On June 12th 2022 I celebrated Twenty years of my blog with a big post full of highlights. Looking back now I'm amused to notice that my 20th birthday post came within two weeks of my earliest writing about LLMs: A Datasette tutorial written by GPT-3 and How to use the GPT-3 language model.

My generative-ai tag has reached 1,184 posts now.

I really do feel like blogging is onto its second wind. The amount of influence you can have on the world by consistently blogging about a subject is just as high today as it was back in the 2000s when blogging first started.

The best time to start a blog may have been twenty years ago, but the second best time to start a blog is today.

OpenAI just dropped the price of their o3 model by 80% - from $10/million input tokens and $40/million output tokens to just $2/million and $8/million for the very same model. This is in advance of the release of o3-pro which apparently is coming later today (update: here it is).

This is a pretty huge shake-up in LLM pricing. o3 is now priced the same as GPT-4.1, and slightly less than GPT-4o ($2.50/$10). It’s also less than Anthropic’s Claude Sonnet 4 ($3/$15) and Opus 4 ($15/$75) and sits in between Google’s Gemini 2.5 Pro for >200,000 tokens ($2.50/$15) and 2.5 Pro for <200,000 ($1.25/$10).

I’ve updated my llm-prices.com pricing calculator with the new rate.
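The comparison can be checked with a few lines of Python. The prices are the per-million-token figures quoted above. The 10,000 input / 2,000 output token workload is a hypothetical example, and this sketch is not the code behind the pricing calculator.

```python
# Prices in US dollars per million tokens: (input, output), as quoted above.
PRICES = {
    "o3 (old)": (10.00, 40.00),
    "o3 (new)": (2.00, 8.00),
    "GPT-4o": (2.50, 10.00),
    "Claude Sonnet 4": (3.00, 15.00),
    "Claude Opus 4": (15.00, 75.00),
    "Gemini 2.5 Pro (<200,000)": (1.25, 10.00),
    "Gemini 2.5 Pro (>200,000)": (2.50, 15.00),
}


def percent_drop(old: float, new: float) -> float:
    """Percentage reduction going from the old price to the new one."""
    return (old - new) * 100 / old


def cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one call at the listed per-million-token prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


old_in, old_out = PRICES["o3 (old)"]
new_in, new_out = PRICES["o3 (new)"]
print(percent_drop(old_in, new_in), percent_drop(old_out, new_out))

# Hypothetical workload: 10,000 input tokens and 2,000 output tokens.
for model in sorted(PRICES, key=lambda m: cost(m, 10_000, 2_000)):
    print(f"{model}: ${cost(model, 10_000, 2_000):.4f}")
```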

How have they dropped the price so much? OpenAI's Adam Groth credits ongoing optimization work:

thanks to the engineers optimizing inferencing.

Solomon Hykes just presented the best definition of an AI agent I've seen yet, on stage at the AI Engineer World's Fair:

An AI agent is an LLM wrecking its environment in a loop.

I collect AI agent definitions and I really like how this one combines the currently popular "tools in a loop" one (see Anthropic) with the classic academic definition that I think dates back to at least the 90s:

An agent is something that acts in an environment; it does something. Agents include worms, dogs, thermostats, airplanes, robots, humans, companies, and countries.

We're hosting the sixth in our series of Datasette Public Office Hours livestream sessions this Friday, 6th of June at 2pm PST (here's that time in your location).

The topic is going to be tool support in LLM, as introduced here.

I'll be walking through the new features, and we're also inviting five minute lightning demos from community members who are doing fun things with the new capabilities. If you'd like to present one of those please get in touch via this form.

Here's a link to add it to Google Calendar.

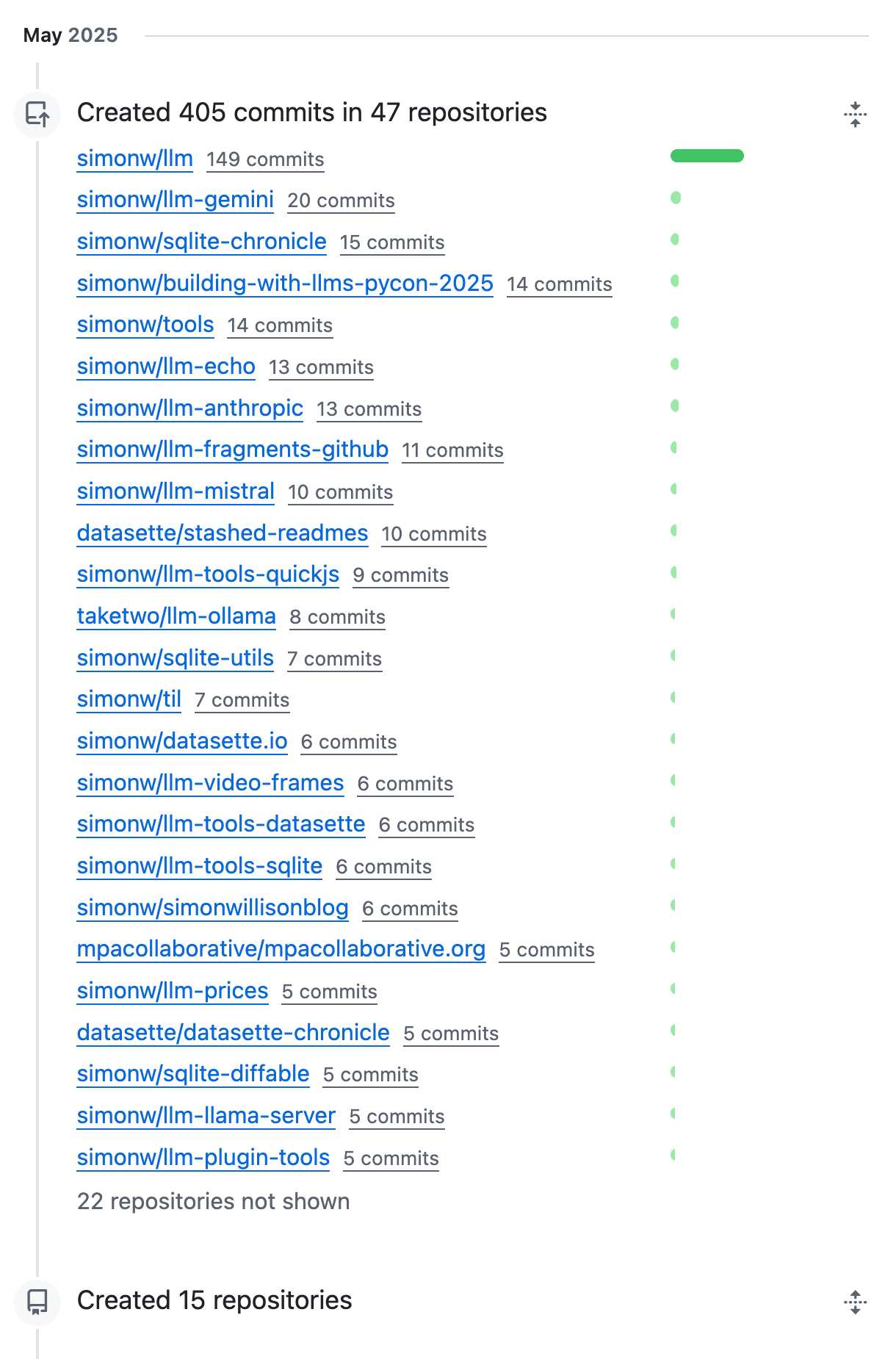

OK, May was a busy month for coding on GitHub. I blame tool support!

If you've found web development frustrating over the past 5-10 years, here's something that has worked great for me: give yourself permission to avoid any form of frontend build system (so no npm / React / TypeScript / JSX / Babel / Vite / Tailwind etc) and code in HTML and JavaScript like it's 2009.

The joy came flooding back to me! It turns out browser APIs are really good now.

You don't even need jQuery to paper over the gaps any more - use document.querySelectorAll() and fetch() directly and see how much value you can build with a few dozen lines of code.

I'll be sending out my first curated monthly highlights newsletter tomorrow, only to $10/month and up sponsors. Sign up now if you want to pay me to send you less!

My weekly-ish newsletter remains free, in fact I just sent out the latest edition.

I wonder if one of the reasons I'm finding LLMs so much more useful for coding than a lot of people that I see in online discussions is that effectively all of the code I work on has automated tests.

I've been trying to stay true to the idea of a Perfect Commit - one that bundles the implementation, tests and documentation in a single unit - for over five years now. As a result almost every piece of (non vibe-coding) code I work on has pretty comprehensive test coverage.

This massively derisks my use of LLMs. If an LLM writes weird, convoluted code that solves my problem I can prove that it works with tests - and then have it refactor the code until it looks good to me, keeping the tests green the whole time.

LLMs help write the tests, too. I finally have a 24/7 pair programmer who can remember how to use unittest.mock!

Next time someone complains that they've found LLMs to be more of a hindrance than a help in their programming work, I'm going to try to remember to ask after the health of their test suite.
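As a reminder of what `unittest.mock` usage looks like, here is a minimal sketch. The `current_branch()` function is a hypothetical example of code that would otherwise need a real git checkout to test.

```python
import subprocess
from unittest import mock


def current_branch() -> str:
    """Hypothetical function under test: ask git for the current branch."""
    result = subprocess.run(
        ["git", "branch", "--show-current"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


def test_current_branch():
    fake_result = mock.Mock(stdout="main\n")
    # Patch subprocess.run so the test never actually shells out to git.
    with mock.patch("subprocess.run", return_value=fake_result) as run:
        assert current_branch() == "main"
    run.assert_called_once()
    assert run.call_args.args[0][0] == "git"


test_current_branch()
print("ok")
```

With a test like this in place, an LLM can rewrite `current_branch()` however it likes and the test still pins down the behavior that matters.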

Here's a quick demo of the kind of casual things I use LLMs for on a daily basis.

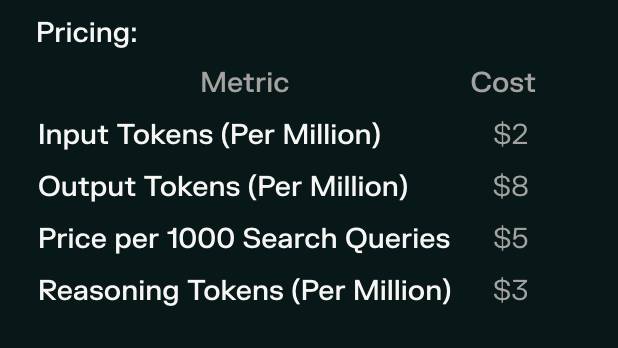

I just found out that Perplexity offer their Deep Research feature via their API, through a model called Sonar Deep Research.

Their documentation includes an example response, which included this usage data in the JSON:

{"prompt_tokens": 19, "completion_tokens": 498, "total_tokens": 517, "citation_tokens": 10175, "num_search_queries": 48, "reasoning_tokens": 95305}

But how much would that actually cost?

Their pricing page lists the price for that model. I snapped this screenshot of the prices:

I could break out a calculator at this point, but I'm not quite curious enough to go through the extra effort.

So I pasted that screenshot into Claude along with the JSON and prompted:

{"prompt_tokens": 19, "completion_tokens": 498, "total_tokens": 517, "citation_tokens": 10175, "num_search_queries": 48, "reasoning_tokens": 95305}

Calculate price, use javascript

I wanted to make sure Claude would use its JavaScript analysis tool, since LLMs can't do maths.

I watched Claude Sonnet 4 write 61 lines of JavaScript - keeping an eye on it to check it didn't do anything obviously wrong. The code spat out this output:

=== COST CALCULATIONS ===

Input tokens cost: 19 tokens × $2/million = $0.000038

Output tokens cost: 498 tokens × $8/million = $0.003984

Search queries cost: 48 queries × $5/1000 = $0.240000

Reasoning tokens cost: 95305 tokens × $3/million = $0.285915

=== COST SUMMARY ===

Input tokens: $0.000038

Output tokens: $0.003984

Search queries: $0.240000

Reasoning tokens: $0.285915

─────────────────────────

TOTAL COST: $0.529937

TOTAL COST: $0.5299 (rounded to 4 decimal places)

So that Deep Research API call would cost 53 cents! Curiosity satisfied in less than a minute.
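The same arithmetic is easy to reproduce by hand. This is a Python sketch of the calculation, not the JavaScript that Claude wrote. The rates are copied from the output above rather than from the pricing page, and citation tokens are left out because that output did not price them.

```python
usage = {
    "prompt_tokens": 19,
    "completion_tokens": 498,
    "total_tokens": 517,
    "citation_tokens": 10175,
    "num_search_queries": 48,
    "reasoning_tokens": 95305,
}

# Dollar rate per unit, taken from the cost output quoted above.
RATES = {
    "prompt_tokens": 2 / 1_000_000,
    "completion_tokens": 8 / 1_000_000,
    "num_search_queries": 5 / 1_000,
    "reasoning_tokens": 3 / 1_000_000,
}

# Multiply each priced usage figure by its rate, then add them up.
costs = {key: usage[key] * rate for key, rate in RATES.items()}
total = sum(costs.values())

for key, value in costs.items():
    print(f"{key}: ${value:.6f}")
print(f"TOTAL: ${total:.6f}")
```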

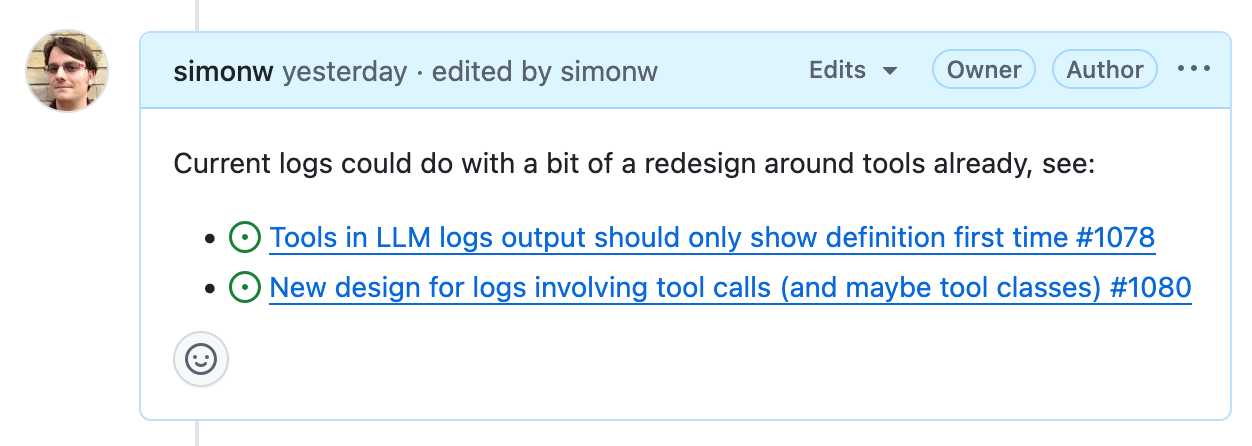

GitHub issues is almost the best notebook in the world.

Free and unlimited, for both public and private notes.

Comprehensive Markdown support, including syntax highlighting for almost any language. Plus you can drag and drop images or videos directly onto a note.

It has fantastic inter-linking abilities. You can paste in URLs to other issues (in any other repository on GitHub) in a markdown list like this:

- https://github.com/simonw/llm/issues/1078

- https://github.com/simonw/llm/issues/1080

Your issue will pull in the title of the other issue, plus that other issue will get back a link to yours - taking issue visibility rules into account.

It has excellent search: within a repo, across all of your repos or even across the whole of GitHub if you've completely forgotten where you put something.

It has a comprehensive API, both for exporting notes and creating and editing new ones. Add GitHub Actions, triggered by issue events, and you can automate it to do almost anything.

The one missing feature? Synchronized offline support. I still mostly default to Apple Notes on my phone purely because it works with or without the internet and syncs up with my laptop later on.

A few extra notes inspired by the discussion of this post on Hacker News:

- I'm not worried about privacy here. A lot of companies pay GitHub a lot of money to keep the source code and related assets safe. I do not think GitHub are going to sacrifice that trust to "train a model" or whatever.

- There is always the risk of a bug that might expose my notes, across any note platform. That's why I keep things like passwords out of my notes!

- Not paying and not self-hosting is a very important feature. I don't want to risk losing my notes to a configuration or billing error!

- The thing where notes can include checklists using `- [ ] item` syntax is really useful. You can even do `- [ ] #ref` to reference another issue and the checkbox will be automatically checked when that other issue is closed.

- I've experimented with a bunch of ways of backing up my notes locally, such as github-to-sqlite. I'm not running any of them on cron on a separate machine at the moment, but I really should!

- I'll go back to pen and paper as soon as my paper notes can be instantly automatically backed up to at least two different continents.

- GitHub issues also scales! microsoft/vscode has 195,376 issues. flutter/flutter has 106,572. I'm not going to run out of space.

- Having my notes in a format that's easy to pipe into an LLM is really fun. Here's a recent example where I summarized a 50+ comment, 1.5 year long issue thread into a new comment using llm-fragments-github.

I was curious how many issues and comments I've created on GitHub. With Claude's help I figured out you can get that using a GraphQL query:

    {
      viewer {
        issueComments {
          totalCount
        }
        issues {
          totalCount
        }
      }
    }

Running that with the GitHub GraphQL Explorer tool gave me this:

    {
      "data": {
        "viewer": {
          "issueComments": {
            "totalCount": 39087
          },
          "issues": {
            "totalCount": 9413
          }
        }
      }
    }

That's 48,500 combined issues and comments!
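For anyone who would rather script this than use the Explorer, here is a sketch using only the Python standard library. It builds the request against GitHub's GraphQL endpoint but deliberately does not send it. The `build_request` and `combined_count` helpers are hypothetical names, and the sample response is the one shown above.

```python
import json
import urllib.request

GRAPHQL_URL = "https://api.github.com/graphql"
QUERY = "{ viewer { issueComments { totalCount } issues { totalCount } } }"


def build_request(token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for GitHub's GraphQL API."""
    return urllib.request.Request(
        GRAPHQL_URL,
        data=json.dumps({"query": QUERY}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def combined_count(response: dict) -> int:
    """Add the issue and issue-comment totals from a response."""
    viewer = response["data"]["viewer"]
    return viewer["issueComments"]["totalCount"] + viewer["issues"]["totalCount"]


# The response shown above, used here instead of a live API call.
sample = {
    "data": {
        "viewer": {
            "issueComments": {"totalCount": 39087},
            "issues": {"totalCount": 9413},
        }
    }
}
print(combined_count(sample))
```

To run it for real, pass a personal access token to `build_request()`, open the result with `urllib.request.urlopen()` and feed the decoded JSON to `combined_count()`.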

Subscribe to my sponsors-only monthly newsletter

I’ve never liked the idea of charging for my content. I get enormous value from putting all of my writing and research out there for free.

So I’m trying something a little different: pay me to send you less.

I’m starting a sponsors-only monthly newsletter featuring just my heavily curated and edited highlights. If you only have ten minutes, what are the most important things not to miss from the last month?

Don’t want to pay? That’s fine, you can continue to follow my firehose for free!

Anyone who sponsors me for $10/month (or $50/month or more) on GitHub sponsors will receive my new newsletter on approximately the last day of the month. I’ll be sending out the first edition next week.

This blog and my newsletter will continue at their same breakneck pace. Paying subscribers can get a lower volume of stuff.

I'm cautiously optimistic that this could work. I've never liked the idea of business models that incentivize me to publish less. This feels like it encourages me to do what I'm doing already while giving people a rational reason to support my work, at a relatively small incremental cost to myself.

I'm helping make some changes to a large, complex and very unfamiliar to me WordPress site. It's a perfect opportunity to try out Claude Code running against the new Claude 4 models.

It's going extremely well. So far Claude has helped get MySQL working on an older laptop (fixing some inscrutable Homebrew errors), disabled a CAPTCHA plugin that didn't work on localhost, toggled visible warnings on and off several times and figured out which CSS file to modify in the theme that the site is using. It even took a reasonable stab at making the site responsive on mobile!

I'm now calling Claude Code honey badger on account of its voracious appetite for crunching through code (and tokens) looking for the right thing to fix.

I got ChatGPT to make me some fan art:

I was going slightly spare at the fact that every talk at this Anthropic developer conference has used the word "agents" dozens of times, but nobody ever stopped to provide a useful definition.

I'm now in the "Prompting for Agents" workshop and Anthropic's Hannah Moran finally broke the trend by saying that at Anthropic:

Agents are models using tools in a loop

I can live with that! I'm glad someone finally said it out loud.

If your library doesn't have any documentation, it can't have any bugs.

Documentation specifies what your code is supposed to do. Your tests specify what it actually does.

Bugs exist when your test-enforced implementation fails to match the behavior described in your documentation. Without documentation a bug is just undefined behavior.

If you aim to follow semantic versioning you bump your major version when you release a backwards incompatible change. Such changes cannot exist if your code is not comprehensively documented!

Inspired by a half-remembered conversation I had with Tom Insam many years ago.

Tucked into today's Google I/O keynote, a blink-and-you'll-miss-it moment:

The pelican in the keynote was created by Alexander Chen. Here's the code they wrote with the help of Gemini, which uses p5.js to power the animation.

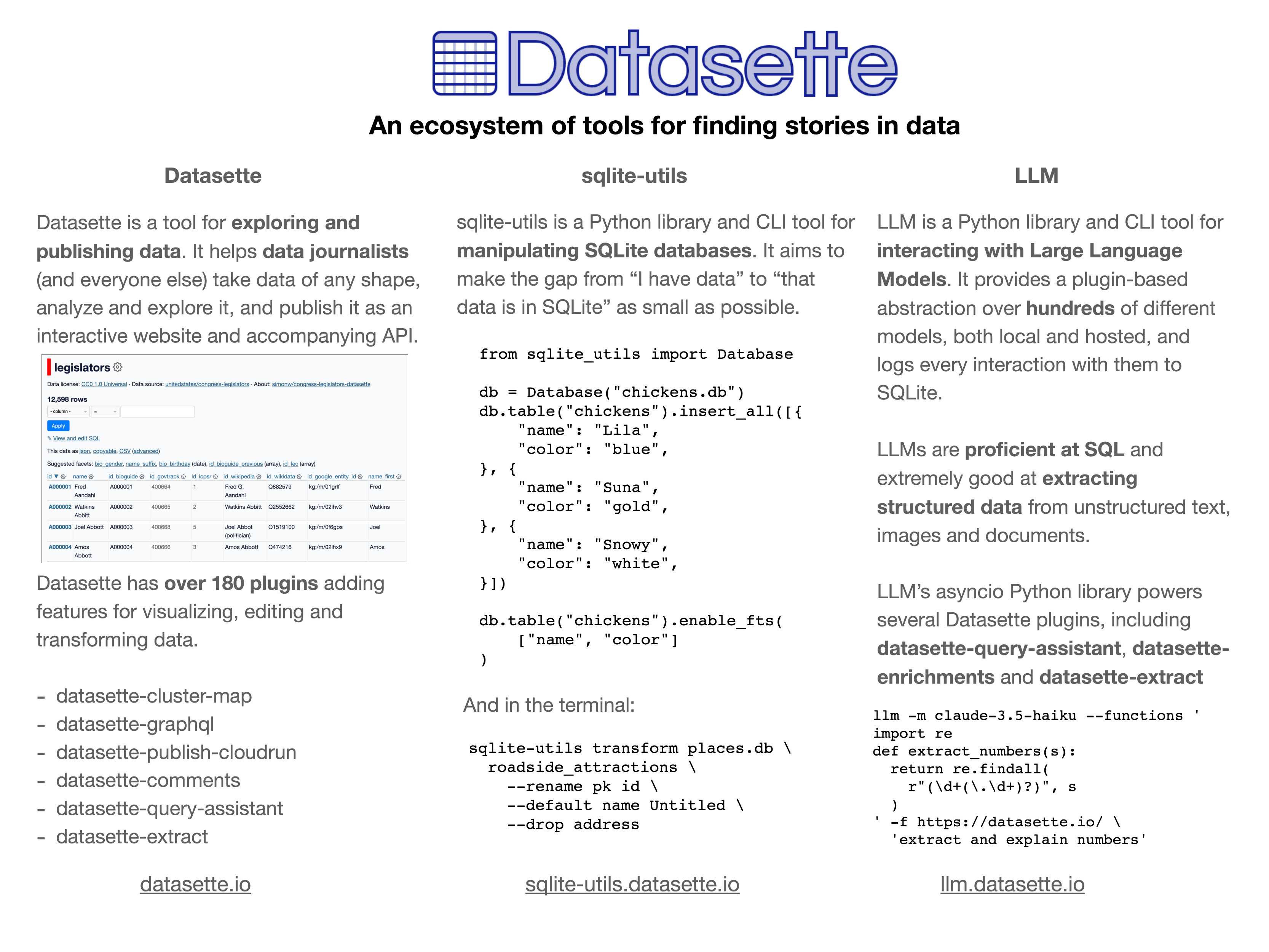

In addition to my workshop the other day I'm also participating in the poster session at PyCon US this year.

This means that tomorrow (Sunday 18th May) I'll be hanging out next to my poster from 10am to 1pm in Hall A talking to people about my various projects.

I'll confess: I didn't pay close enough attention to the poster information, so when I first put my poster up it looked a little small:

... so I headed to the nearest CVS and printed out some photos to better represent my interests and personality. I'm going for a "teenage bedroom" aesthetic here, I'm very happy with the result:

Here's the poster in the middle (also available as a PDF). It has columns for Datasette, sqlite-utils and LLM.

If you're at PyCon I'd love to talk to you about things I'm working on!

Update: Thanks to everyone who came along. Here's a 6MB photo of the poster setup. The museums were all from my www.niche-museums.com site and the pelicans riding a bicycle SVGs came from my pelican-riding-a-bicycle tag.

Today I learned - from a very short "we're sponsoring Python" sponsor blurb by Meta during the opening PyCon US welcome talks - that Python is now "the most-used language at Meta" - if you consider all of the different functional areas spread across the company.

They also have "over 3,000 Python developers working in the language every day".

The live captions for the event are once again provided by the excellent White Coat Captioning - real human beings! This got a cheer when it was pointed out by the conference chair a few moments earlier.

It's interesting how much my perception of o3 as being the latest, best model released by OpenAI is tarnished by the co-release of o4-mini. I'm also still not entirely sure how to compare o3 to o1-pro, especially given o1-pro is 15x more expensive via the OpenAI API.

Achievement unlocked: tap danced in the local community college dance recital.

Poker Face season two just started on Peacock (the US streaming service). It's my favorite thing on TV right now. I've started threads on MetaFilter FanFare for episodes one, two and three.

I had some notes in a GitHub issue thread in a private repository that I wanted to export as Markdown. I realized that I could get them using a combination of several recent projects.

Here's what I ran:

export GITHUB_TOKEN="$(llm keys get github)"

llm -f issue:https://github.com/simonw/todos/issues/170 \
  -m echo --no-log | jq .prompt -r > notes.md

I have a GitHub personal access token stored in my LLM keys, for use with Anthony Shaw's llm-github-models plugin.

My own llm-fragments-github plugin expects an optional GITHUB_TOKEN environment variable, so I set that first - here's an issue to have it use the github key instead.

With that set, the issue: fragment loader can take a URL to a private GitHub issue thread and load it via the API using the token, then concatenate the comments together as Markdown. Here's the code for that.

Fragments are meant to be used as input to LLMs. I built a llm-echo plugin recently which adds a fake LLM called "echo" which simply echoes its input back out again.

Adding --no-log prevents that junk data from being stored in my LLM log database.

The output is JSON with a "prompt" key for the original prompt. I use jq .prompt to extract that out, then -r to get it as raw text (not a "JSON string").

... and I write the result to notes.md.
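The `jq .prompt -r` step is doing two small things, which this Python sketch spells out. The sample JSON is hypothetical: only the "prompt" key comes from the description above, and the real output may contain other keys.

```python
import json

# Hypothetical sample of the echo model's JSON output; only the "prompt"
# key is taken from the description above.
echo_output = '{"prompt": "# Issue title\\n\\nFirst comment in **Markdown**"}'

# Equivalent of `jq .prompt`: parse the JSON and pick out one key.
prompt = json.loads(echo_output)["prompt"]

# Equivalent of `-r`: print the decoded string itself, with real newlines.
print(prompt)

# Without -r, jq would print the JSON-encoded string instead.
print(json.dumps(prompt))
```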

I'm disappointed at how little good writing there is out there about effective prompting.

Here's an example: what's the best prompt to use to summarize an article?

That feels like such an obvious thing, and yet I haven't even seen that being well explored!

It's actually a surprisingly deep topic. I like using tricks like "directly quote the sentences that best illustrate the overall themes" and "identify the most surprising ideas", but I'd love to see a thorough breakdown of all the tricks I haven't seen yet.

Our local BBQ spot here in El Granada - Breakwater Barbecue - had a soft opening this weekend in their new location.

Here's the new building. They're still working on replacing the sign from the previous restaurant occupant:

It's actually our old railway station! From 1905 to 1920 the Ocean Shore Railroad ran steam trains from San Francisco down through Half Moon Bay most of the way to Santa Cruz, though they never quite connected the two cities.

The restaurant has some photos on the wall of the old railroad. Here's what that same building looked like >100 years ago.

Having tried a few of the Qwen 3 models now my favorite is a bit of a surprise to me: I'm really enjoying Qwen3-8B.

I've been running prompts through the MLX 4bit quantized version, mlx-community/Qwen3-8B-4bit. I'm using llm-mlx like this:

llm install llm-mlx

llm mlx download-model mlx-community/Qwen3-8B-4bit

This pulls 4.3GB of data and saves it to ~/.cache/huggingface/hub/models--mlx-community--Qwen3-8B-4bit.

I assigned it a default alias:

llm aliases set q3 mlx-community/Qwen3-8B-4bit

I also added a default option for that model. This saves me from adding -o unlimited 1, which disables the default output token limit, to every prompt:

llm models options set q3 unlimited 1

And now I can run prompts:

llm -m q3 'brainstorm questions I can ask my friend who I think is secretly from Atlantis that will not tip her off to my suspicions'

Qwen3 is a "reasoning" model, so it starts each prompt with a <think> block containing its chain of thought. Reading these is always really fun. Here's the full response I got for the above question.

I'm finding Qwen3-8B to be surprisingly capable for useful things too. It can summarize short articles. It can write simple SQL queries given a question and a schema. It can figure out what a simple web app does by reading the HTML and JavaScript. It can write Python code to meet a paragraph long spec - for that one it "reasoned" for an unreasonably long time but it did eventually get to a useful answer.

All this while consuming between 4 and 5GB of memory, depending on the length of the prompt.

I think it's pretty extraordinary that a few GBs of floating point numbers can usefully achieve these various tasks, especially using so little memory that it's not an imposition on the rest of the things I want to run on my laptop at the same time.

It's not in their release notes yet but Anthropic pushed some big new features today. Alex Albert:

We've improved web search and rolled it out worldwide to all paid plans. Web search now combines light Research functionality, allowing Claude to automatically adjust search depth based on your question.

Anthropic announced Claude Research a few weeks ago as a product that can combine web search with search against your private Google Workspace - I'm not clear on how much of that product we get in this "light Research" functionality.

I'm most excited about this detail:

You can also drop a web link in any chat and Claude will fetch the content for you.

In my experiments so far the user-agent it uses is Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Claude-User/1.0; +Claude-User@anthropic.com). It appears to obey robots.txt.
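If you want to check what your own robots.txt would say to this fetcher, Python's `urllib.robotparser` can evaluate rules offline. This is a sketch under assumptions: the robots.txt below is made up, and using "Claude-User" as the token to match on is a guess based on the user-agent string above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration. The "Claude-User" token is an
# assumption taken from the user-agent string quoted above.
ROBOTS_TXT = """\
User-agent: Claude-User
Disallow: /private/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether each path may be fetched by that user-agent token.
for path in ("/public/post.html", "/private/notes.html"):
    url = f"https://example.com{path}"
    print(path, parser.can_fetch("Claude-User", url))
```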