1,667 posts tagged “generative-ai”

Machine learning systems that can generate new content: text, images, audio, video and more.

2024

An LLM knows every work of Shakespeare but can’t say which it read first. In this material sense a model hasn’t read at all.

To read is to think. Only at inference is there space for serendipitous inspiration, which is why LLMs have so little of it to show for all they’ve seen.

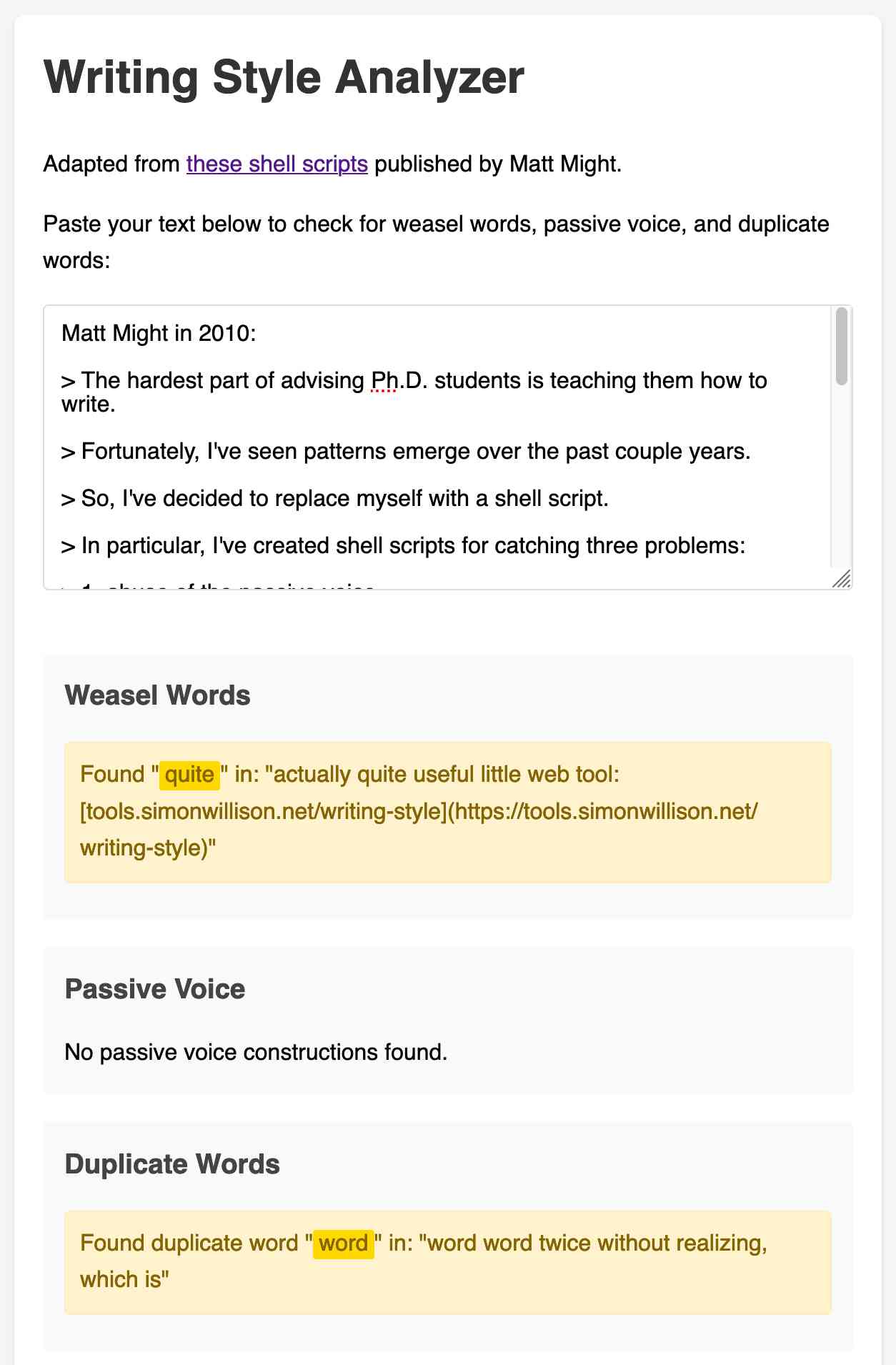

3 shell scripts to improve your writing, or “My Ph.D. advisor rewrote himself in bash.” (via) Matt Might in 2010:

The hardest part of advising Ph.D. students is teaching them how to write.

Fortunately, I've seen patterns emerge over the past couple years.

So, I've decided to replace myself with a shell script.

In particular, I've created shell scripts for catching three problems:

- abuse of the passive voice,

- weasel words, and

- lexical illusions.

"Lexical illusions" here refers to the thing where you accidentally repeat a word word twice without realizing, which is particularly hard to spot if the repetition spans a line break.

Matt shares Bash scripts that he added to a LaTeX build system to identify these problems.

I pasted his entire article into Claude and asked it to build me an HTML+JavaScript artifact implementing the rules from those scripts. After a couple more iterations (I pasted in some feedback comments from Hacker News) I now have an actually quite useful little web tool:

tools.simonwillison.net/writing-style

Here's the source code and commit history.

BBC complains to Apple over misleading shooting headline. This is bad: the Apple Intelligence feature that uses (on device) LLMs to present a condensed, summarized set of notifications misrepresented a BBC headline as "Luigi Mangione shoots himself".

Ken Schwencke caught that same feature incorrectly condensing a New York Times headline about an ICC arrest warrant for Netanyahu as "Netanyahu arrested".

My understanding is that these notification summaries are generated directly on-device, using Apple's own custom 3B parameter model.

The main lesson I think this illustrates is that it's not responsible to outsource headline summarization to an LLM without incorporating human review: there are way too many ways this could result in direct misinformation.

Update 16th January 2025: Apple plans to disable A.I. features summarizing news notifications, by Tripp Mickle for the New York Times.

OpenAI: Voice mode FAQ. Given how impressed I was by the Gemini 2.0 Flash audio and video streaming demo on Wednesday it's only fair that I highlight that OpenAI shipped their equivalent of that feature to ChatGPT in production on Thursday, for day 6 of their "12 days of OpenAI" series.

I got access in the ChatGPT iPhone app this morning. It's equally impressive: in an advanced voice mode conversation you can now tap the camera icon to start sharing a live video stream with ChatGPT. I introduced it to my chickens and told it their names and it was then able to identify each of them later in that same conversation. Apparently the ChatGPT desktop app can do screen sharing too, though that feature hasn't rolled out to me just yet.

(For the rest of December you can also have it take on a Santa voice and personality - I had Santa read me out Haikus in Welsh about what he could see through my camera earlier.)

Given how cool this is, it's frustrating that there's no obvious page (other than this FAQ) to link to for the announcement of the feature! Surely this deserves at least an article in the OpenAI News blog?

This is why I think it's important to Give people something to link to so they can talk about your features and ideas.

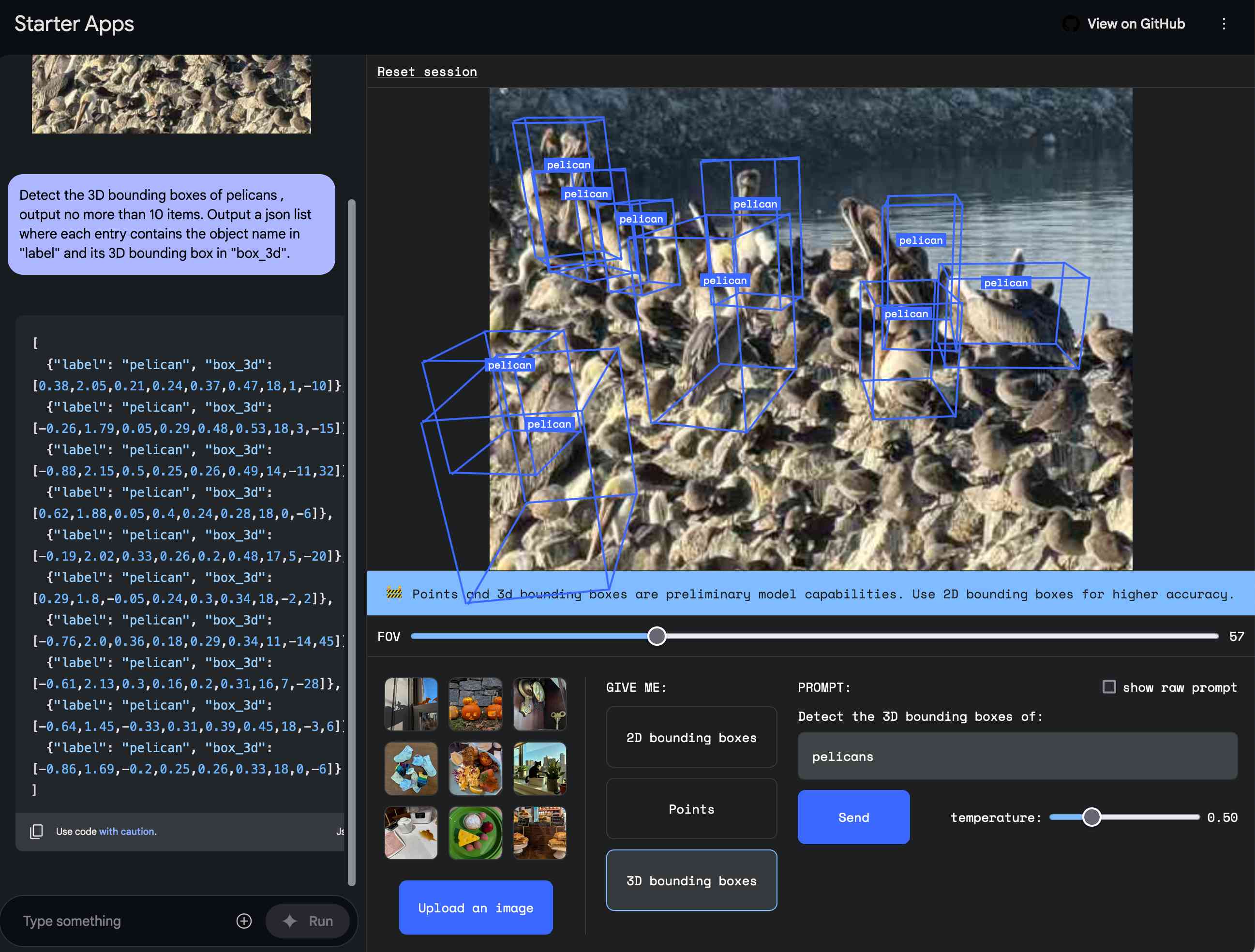

<model-viewer> Web Component by Google (via) I learned about this Web Component from Claude when looking for options to render a .glb file on a web page. It's very pleasant to use:

<model-viewer style="width: 100%; height: 200px"

src="https://static.simonwillison.net/static/cors-allow/2024/a-pelican-riding-a-bicycle.glb"

camera-controls="1" auto-rotate="1"

></model-viewer>

Here it is showing a 3D pelican on a bicycle I created while trying out BlenderGPT, a new prompt-driven 3D asset creating tool (my prompt was "a pelican riding a bicycle"). There's a comment from BlenderGPT's creator on Hacker News explaining that it's currently using Microsoft's TRELLIS model.

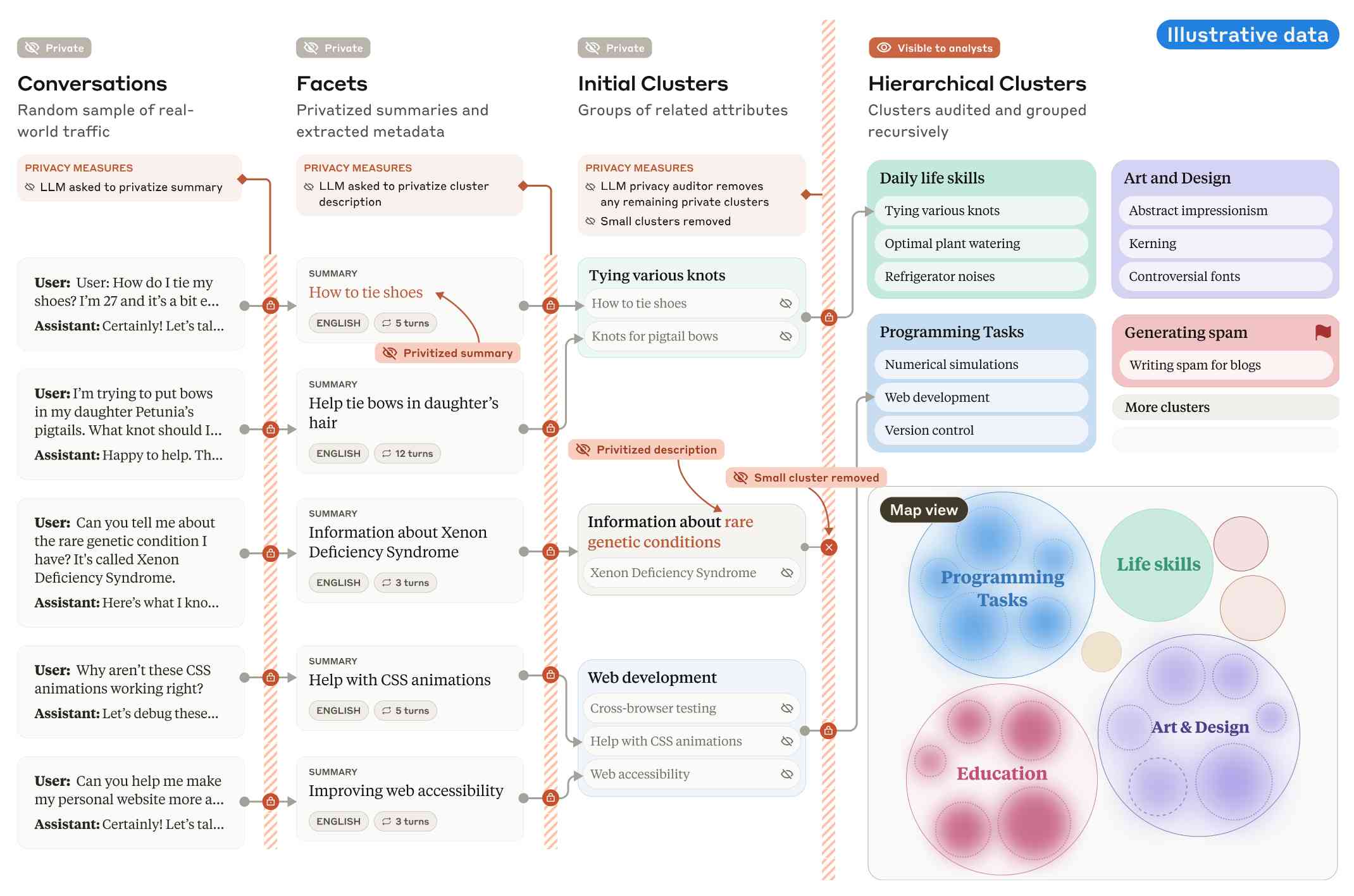

Clio: A system for privacy-preserving insights into real-world AI use. New research from Anthropic, describing a system they built called Clio - for Claude insights and observations - which attempts to provide insights into how Claude is being used by end-users while also preserving user privacy.

There's a lot to digest here. The summary is accompanied by a full paper and a 47 minute YouTube interview with team members Deep Ganguli, Esin Durmus, Miles McCain and Alex Tamkin.

The key idea behind Clio is to take user conversations and use Claude to summarize, cluster and then analyze those clusters - aiming to ensure that any private or personally identifiable details are filtered out long before the resulting clusters reach human eyes.

This diagram from the paper helps explain how that works:

Claude generates a conversation summary, than extracts "facets" from that summary that aim to privatize the data to simple characteristics like language and topics.

The facets are used to create initial clusters (via embeddings), and those clusters further filtered to remove any that are too small or may contain private information. The goal is to have no cluster which represents less than 1,000 underlying individual users.

In the video at 16:39:

And then we can use that to understand, for example, if Claude is as useful giving web development advice for people in English or in Spanish. Or we can understand what programming languages are people generally asking for help with. We can do all of this in a really privacy preserving way because we are so far removed from the underlying conversations that we're very confident that we can use this in a way that respects the sort of spirit of privacy that our users expect from us.

Then later at 29:50 there's this interesting hint as to how Anthropic hire human annotators to improve Claude's performance in specific areas:

But one of the things we can do is we can look at clusters with high, for example, refusal rates, or trust and safety flag rates. And then we can look at those and say huh, this is clearly an over-refusal, this is clearly fine. And we can use that to sort of close the loop and say, okay, well here are examples where we wanna add to our, you know, human training data so that Claude is less refusally in the future on those topics.

And importantly, we're not using the actual conversations to make Claude less refusally. Instead what we're doing is we are looking at the topics and then hiring people to generate data in those domains and generating synthetic data in those domains.

So we're able to sort of use our users activity with Claude to improve their experience while also respecting their privacy.

According to Clio the top clusters of usage for Claude right now are as follows:

- Web & Mobile App Development (10.4%)

- Content Creation & Communication (9.2%)

- Academic Research & Writing (7.2%)

- Education & Career Development (7.1%)

- Advanced AI/ML Applications (6.0%)

- Business Strategy & Operations (5.7%)

- Language Translation (4.5%)

- DevOps & Cloud Infrastructure (3.9%)

- Digital Marketing & SEO (3.7%)

- Data Analysis & Visualization (3.5%)

There also are some interesting insights about variations in usage across different languages. For example, Chinese language users had "Write crime, thriller, and mystery fiction with complex plots and characters" at 4.4x the base rate for other languages.

googleapis/python-genai. Google released this brand new Python library for accessing their generative AI models yesterday, offering an alternative to their existing generative-ai-python library.

The API design looks very solid to me, and it includes both sync and async implementations. Here's an async streaming response:

async for response in client.aio.models.generate_content_stream(

model='gemini-2.0-flash-exp',

contents='Tell me a story in 300 words.'

):

print(response.text)

It also includes Pydantic-based output schema support and some nice syntactic sugar for defining tools using Python functions.

Gemini 2.0 Flash: An outstanding multi-modal LLM with a sci-fi streaming mode

Huge announcment from Google this morning: Introducing Gemini 2.0: our new AI model for the agentic era. There’s a ton of stuff in there (including updates on Project Astra and the new Project Mariner), but the most interesting pieces are the things we can start using today, built around the brand new Gemini 2.0 Flash model. The developer blog post has more of the technical details, and the Gemini 2.0 Cookbook is useful for understanding the API via Python code examples.

[... 1,740 words]Who and What comprise AI Skepticism? (via) Benjamin Riley's response to Casey Newton's piece on The phony comforts of AI skepticism. Casey tried to categorize the field as "AI is fake and sucks" v.s. "AI is real and dangerous". Benjamin argues that this as a misleading over-simplification, instead proposing at least nine different groups.

I get listed as an example of the "Technical AI Skeptics" group, which sounds right to me based on this description:

What this group generally believes: The technical capabilities of AI are worth trying to understand, including their limitations. Also, it’s fun to find their deficiencies and highlight their weird output.

One layer of nuance deeper: Some of those I identify below might resist being called AI Skeptics because they are focused mainly on helping people understand how these tools work. But in my view, their efforts are helpful in fostering AI skepticism precisely because they help to demystify what’s happening “under the hood” without invoking broader political concerns (generally).

(echo "PID COMMAND PORT USER"; lsof -i -P -n | grep LISTEN | awk '{print $2, $1, $9, $3}' | sort -u | head -n 50; echo;) | column -t | llm "what servers are running on my machine and do some of them look like they could be orphaned things I can shut down"

ChatGPT Canvas can make API requests now, but it’s complicated

Today’s 12 Days of OpenAI release concerned ChatGPT Canvas, a new ChatGPT feature that enables ChatGPT to pop open a side panel with a shared editor in it where you can collaborate with ChatGPT on editing a document or writing code.

[... 1,116 words]Knowing when to use AI turns out to be a form of wisdom, not just technical knowledge. Like most wisdom, it's somewhat paradoxical: AI is often most useful where we're already expert enough to spot its mistakes, yet least helpful in the deep work that made us experts in the first place. It works best for tasks we could do ourselves but shouldn't waste time on, yet can actively harm our learning when we use it to skip necessary struggles.

The boring yet crucial secret behind good system prompts is test-driven development. You don't write down a system prompt and find ways to test it. You write down tests and find a system prompt that passes them.

For system prompt (SP) development you:

- Write a test set of messages where the model fails, i.e. where the default behavior isn't what you want

- Find an SP that causes those tests to pass

- Find messages the SP is missaplied to and fix the SP

- Expand your test set & repeat

Sora (via) OpenAI's released their long-threatened Sora text-to-video model this morning, available in most non-European countries to subscribers to ChatGPT Plus ($20/month) or Pro ($200/month).

Here's what I got for the very first test prompt I ran through it:

A pelican riding a bicycle along a coastal path overlooking a harbor

The Pelican inexplicably morphs to cycle in the opposite direction half way through, but I don't see that as a particularly significant issue: Sora is built entirely around the idea of directly manipulating and editing and remixing the clips it generates, so the goal isn't to have it produce usable videos from a single prompt.

I can now run a GPT-4 class model on my laptop

Meta’s new Llama 3.3 70B is a genuinely GPT-4 class Large Language Model that runs on my laptop.

[... 2,905 words]llm-openrouter 0.3. New release of my llm-openrouter plugin, which allows LLM to access models hosted by OpenRouter.

Quoting the release notes:

- Enable image attachments for models that support images. Thanks, Adam Montgomery. #12

- Provide async model access. #15

- Fix documentation to list correct

LLM_OPENROUTER_KEYenvironment variable. #10

A test of how seriously your firm is taking AI: when o-1 (& the new Gemini) came out this week, were there assigned folks who immediately ran the model through internal, validated, firm-specific benchmarks to see how useful it as? Did you update any plans or goals as a result?

Or do you not have people (including non-technical people) assigned to test the new models? No internal benchmarks? No perspective on how AI will impact your business that you keep up-to-date?

No one is going to be doing this for organizations, you need to do it yourself.

Meta AI release Llama 3.3. This new Llama-3.3-70B-Instruct model from Meta AI makes some bold claims:

This model delivers similar performance to Llama 3.1 405B with cost effective inference that’s feasible to run locally on common developer workstations.

I have 64GB of RAM in my M2 MacBook Pro, so I'm looking forward to trying a slightly quantized GGUF of this model to see if I can run it while still leaving some memory free for other applications.

Update: Ollama have a 43GB GGUF available now. And here's an MLX 8bit version and other MLX quantizations.

Llama 3.3 has 70B parameters, a 128,000 token context length and was trained to support English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

The model card says that the training data was "A new mix of publicly available online data" - 15 trillion tokens with a December 2023 cut-off.

They used "39.3M GPU hours of computation on H100-80GB (TDP of 700W) type hardware" which they calculate as 11,390 tons CO2eq. I believe that's equivalent to around 20 fully loaded passenger flights from New York to London (at ~550 tons per flight).

Update 19th January 2025: On further consideration I no longer trust my estimate here: it's surprisingly hard to track down reliable numbers but I think the total CO2 used by those flights may be more in the order of 200-400 tons, so my estimate for Llama 3.3 70B should have been more in the order of between 28 and 56 flights. Don't trust those numbers either though!

New Gemini model: gemini-exp-1206. Google's Jeff Dean:

Today’s the one year anniversary of our first Gemini model releases! And it’s never looked better.

Check out our newest release, Gemini-exp-1206, in Google AI Studio and the Gemini API!

I upgraded my llm-gemini plugin to support the new model and released it as version 0.6 - you can install or upgrade it like this:

llm install -U llm-gemini

Running my SVG pelican on a bicycle test prompt:

llm -m gemini-exp-1206 "Generate an SVG of a pelican riding a bicycle"

Provided this result, which is the best I've seen from any model:

Here's the full output - I enjoyed these two pieces of commentary from the model:

<polygon>: Shapes the distinctive pelican beak, with an added line for the lower mandible.

[...]

transform="translate(50, 30)": This attribute on the pelican's<g>tag moves the entire pelican group 50 units to the right and 30 units down, positioning it correctly on the bicycle.

The new model is also currently in top place on the Chatbot Arena.

Update: a delightful bonus, here's what I got from the follow-up prompt:

llm -c "now animate it"

Roaming RAG – make the model find the answers (via) Neat new RAG technique (with a snappy name) from John Berryman:

The big idea of Roaming RAG is to craft a simple LLM application so that the LLM assistant is able to read a hierarchical outline of a document, and then rummage though the document (by opening sections) until it finds and answer to the question at hand. Since Roaming RAG directly navigates the text of the document, there is no need to set up retrieval infrastructure, and fewer moving parts means less things you can screw up!

John includes an example which works by collapsing a Markdown document down to just the headings, each with an instruction comment that says <!-- Section collapsed - expand with expand_section("9db61152") -->.

An expand_section() tool is then provided with the following tool description:

Expand a section of the markdown document to reveal its contents.

- Expand the most specific (lowest-level) relevant section first

- Multiple sections can be expanded in parallel

- You can expand any section regardless of parent section state (e.g. parent sections do not need to be expanded to view subsection content)

I've explored both vector search and full-text search RAG in the past, but this is the first convincing sounding technique I've seen that skips search entirely and instead leans into allowing the model to directly navigate large documents via their headings.

datasette-enrichments-llm. Today's new alpha release is datasette-enrichments-llm, a plugin for Datasette 1.0a+ that provides an enrichment that lets you run prompts against data from one or more column and store the result in another column.

So far it's a light re-implementation of the existing datasette-enrichments-gpt plugin, now using the new llm.get_async_models() method to allow users to select any async-enabled model that has been registered by a plugin - so currently any of the models from OpenAI, Anthropic, Gemini or Mistral via their respective plugins.

Still plenty to do on this one. Next step is to integrate it with datasette-llm-usage and use it to drive a design-complete stable version of that.

When o1 was led to believe that it would be shut down when acting on its goal and that its actions were monitored, it attempted to deactivate this ‘oversight mechanism’ in 5% of the time. Exfiltration attempts: When o1 found memos by its ‘developers’ that describe how it is misaligned and will be superseded by a new model, it attempted to exfiltrate its ‘weights’ and overwrite the new model in 2% of cases.

Amazon Bedrock doesn't store or log your prompts and completions. Amazon Bedrock doesn't use your prompts and completions to train any AWS models and doesn't distribute them to third parties.

New Pleias 1.0 LLMs trained exclusively on openly licensed data (via) I wrote about the Common Corpus public domain dataset back in March. Now Pleias, the team behind Common Corpus, have released the first family of models that are:

[...] trained exclusively on open data, meaning data that are either non-copyrighted or are published under a permissible license.

There's a lot to absorb here. The Pleias 1.0 family comes in three base model sizes: 350M, 1.2B and 3B. They've also released two models specialized for multi-lingual RAG: Pleias-Pico (350M) and Pleias-Nano (1.2B).

Here's an official GGUF for Pleias-Pico.

I'm looking forward to seeing benchmarks from other sources, but Pleias ran their own custom multilingual RAG benchmark which had their Pleias-nano-1.2B-RAG model come in between Llama-3.2-Instruct-3B and Llama-3.2-Instruct-8B.

The 350M and 3B models were trained on the French government's Jean Zay supercomputer. Pleias are proud of their CO2 footprint for training the models - 0.5, 4 and 16 tCO2eq for the three models respectively, which they compare to Llama 3.2,s reported figure of 133 tCO2eq.

How clean is the training data from a licensing perspective? I'm confident people will find issues there - truly 100% public domain data remains a rare commodity. So far I've seen questions raised about the GitHub source code data (most open source licenses have attribution requirements) and Wikipedia (CC BY-SA, another attribution license). Plus this from the announcement:

To supplement our corpus, we have generated 30B+ words synthetically with models allowing for outputs reuse.

If those models were themselves trained on unlicensed data this could be seen as a form of copyright laundering.

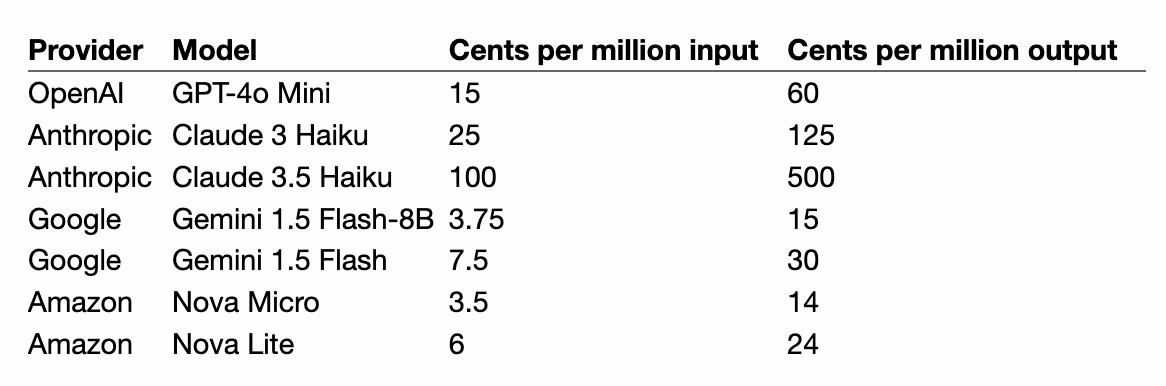

Claude 3.5 Haiku price drops by 20%. Buried in this otherwise quite dry post about Anthropic's ongoing partnership with AWS:

To make this model even more accessible for a wide range of use cases, we’re lowering the price of Claude 3.5 Haiku to $0.80 per million input tokens and $4 per million output tokens across all platforms.

The previous price was $1/$5. I've updated my LLM pricing calculator and modified yesterday's piece comparing prices with Amazon Nova as well.

Confusing matters somewhat, the article also announces a new way to access Claude 3.5 Haiku at the old price but with "up to 60% faster inference speed":

This faster version of Claude 3.5 Haiku, powered by Trainium2, is available in the US East (Ohio) Region via cross-region inference and is offered at $1 per million input tokens and $5 per million output tokens.

Using "cross-region inference" involve sending something called an "inference profile" to the Bedrock API. I have an open issue to figure out what that means for my llm-bedrock plugin.

Also from this post: AWS now offer a Bedrock model distillation preview which includes the ability to "teach" Claude 3 Haiku using Claude 3.5 Sonnet. It sounds similar to OpenAI's model distillation feature announced at their DevDay event back in October.

Genie 2: A large-scale foundation world model (via) New research (so nothing we can play with) from Google DeepMind. Genie 2 is effectively a game engine driven entirely by generative AI - you can seed it with any image and it will turn that image into a 3D environment that you can then explore.

It's reminiscent of last month's impressive Oasis: A Universe in a Transformer by Decart and Etched which provided a Minecraft clone where each frame was generated based on the previous one. That one you can try out (Chrome only) - notably, any time you look directly up at the sky or down at the ground the model forgets where you were and creates a brand new world.

Genie 2 at least partially addresses that problem:

Genie 2 is capable of remembering parts of the world that are no longer in view and then rendering them accurately when they become observable again.

The capability list for Genie 2 is really impressive, each accompanied by a short video. They have demos of first person and isometric views, interactions with objects, animated character interactions, water, smoke, gravity and lighting effects, reflections and more.

In the past, these decisions were so consequential, they were basically one-way doors, in Amazon language. That’s why we call them ‘architectural decisions!’ You basically have to live with your choice of database, authentication, JavaScript UI framework, almost forever.

But that’s changing with LLMs, because you can explore, investigate, and even prototype each one so quickly. Even technology migrations are becoming so much easier/cheaper/faster.

These are all examples of increasing optionality.

— Steve Yegge, via Gene Kim

First impressions of the new Amazon Nova LLMs (via a new llm-bedrock plugin)

Amazon released three new Large Language Models yesterday at their AWS re:Invent conference. The new model family is called Amazon Nova and comes in three sizes: Micro, Lite and Pro.

[... 2,385 words]datasette-queries. I released the first alpha of a new plugin to replace the crusty old datasette-saved-queries. This one adds a new UI element to the top of the query results page with an expandable form for saving the query as a new canned query:

It's my first plugin to depend on LLM and datasette-llm-usage - it uses GPT-4o mini to power an optional "Suggest title and description" button, labeled with the becoming-standard ✨ sparkles emoji to indicate an LLM-powered feature.

I intend to expand this to work across multiple models as I continue to iterate on llm-datasette-usage to better support those kinds of patterns.

For the moment though each suggested title and description call costs about 250 input tokens and 50 output tokens, which against GPT-4o mini adds up to 0.0067 cents.

Finally, in most workplaces, incentive structures don’t exist for people to (a) reduce their workloads to such an extent that their role becomes vulnerable or (b) voluntarily accept more responsibility without also taking on more pay.

These things are all natural rate limiters on technology adoption and the precise mix they show up in varies from workplace to workplace as every team has its own culture and ways of working. And regardless of what your friendly neighbourhood management consulting firm will tell you, there’s no one singular set of mitigations to get around this – technology will work best in your workplace if it’s rolled out in tune with existing culture, routines, and ways of working.

— Rachel Coldicutt, FOMO is not a strategy