Posts tagged git, projects


simonw/git-scraper-template. I built this new GitHub template repository in preparation for a workshop I'm giving at NICAR (the data journalism conference) next week on Cutting-edge web scraping techniques.

One of the topics I'll be covering is Git scraping - creating a GitHub repository that uses scheduled GitHub Actions workflows to grab copies of websites and data feeds and store their changes over time using Git.

This template repository is designed to be the fastest possible way to get started with a new Git scraper: simply create a new repository from the template and paste the URL you want to scrape into the description field. The repository will then be initialized with a custom script that scrapes and stores that URL.

It's modeled after my earlier shot-scraper-template tool which I described in detail in Instantly create a GitHub repository to take screenshots of a web page.

The new git-scraper-template repo took some help from Claude to figure out. It uses a custom script to download the provided URL and derive a filename to use based on the URL and the content type, detected using file --mime-type -b "$file_path" against the downloaded file.
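The filename derivation can be sketched in Python. This is an illustration only, not the template's actual script: the `derive_filename` function and the `EXTENSIONS` table are hypothetical, and the MIME type is assumed to have been detected already (for example by the `file --mime-type -b` command mentioned above).

```python
import re
from urllib.parse import urlparse

# Hypothetical mapping from MIME type to file extension; the real template
# may use a different table or a different approach entirely.
EXTENSIONS = {
    "application/json": "json",
    "text/html": "html",
    "text/plain": "txt",
    "text/csv": "csv",
    "application/xml": "xml",
    "text/xml": "xml",
}


def derive_filename(url: str, mime_type: str) -> str:
    """Build a filesystem-friendly filename from a URL and a MIME type."""
    parsed = urlparse(url)
    # Join host and path, then collapse every run of other characters to "-".
    stem = re.sub(r"[^A-Za-z0-9]+", "-", parsed.netloc + parsed.path).strip("-")
    # Fall back to a generic extension for types missing from the table.
    extension = EXTENSIONS.get(mime_type, "bin")
    # Avoid doubling an extension the URL already carries (e.g. data.json).
    suffix = "-" + extension
    if stem.lower().endswith(suffix):
        stem = stem[: -len(suffix)]
    return f"{stem or 'index'}.{extension}"


if __name__ == "__main__":
    print(derive_filename("https://example.com/api/data.json", "application/json"))
```

Basing the extension on the detected content type rather than on the URL alone means a URL with no extension still produces a sensibly named file.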

It also detects if the downloaded content is JSON and, if it is, pretty-prints it using jq - I find this is a quick way to generate much more useful diffs when the content changes.
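The same idea can be sketched in Python instead of jq. The `normalize_for_diff` function below is a hypothetical helper, not part of the template: it tries to parse the downloaded text as JSON and, if that works, re-serializes it with one value per line so that a small change produces a small diff.

```python
import json


def normalize_for_diff(raw: str) -> str:
    """Pretty-print JSON content; return anything else unchanged."""
    try:
        parsed = json.loads(raw)
    except ValueError:
        # Not JSON (json.JSONDecodeError is a ValueError): leave it alone.
        return raw
    # Two-space indentation, key order preserved, trailing newline added.
    return json.dumps(parsed, indent=2, ensure_ascii=False) + "\n"


if __name__ == "__main__":
    print(normalize_for_diff('{"a":1,"b":[1,2]}'), end="")
```

Without this step, many JSON feeds arrive as a single long line, so any change shows up as that entire line being replaced.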

sqlite-comprehend: run AWS entity extraction against content in a SQLite database

I built a new tool this week: sqlite-comprehend, which passes text from a SQLite database through the AWS Comprehend entity extraction service and stores the returned entities.

[... 1,146 words]

Help scraping: track changes to CLI tools by recording their --help using Git

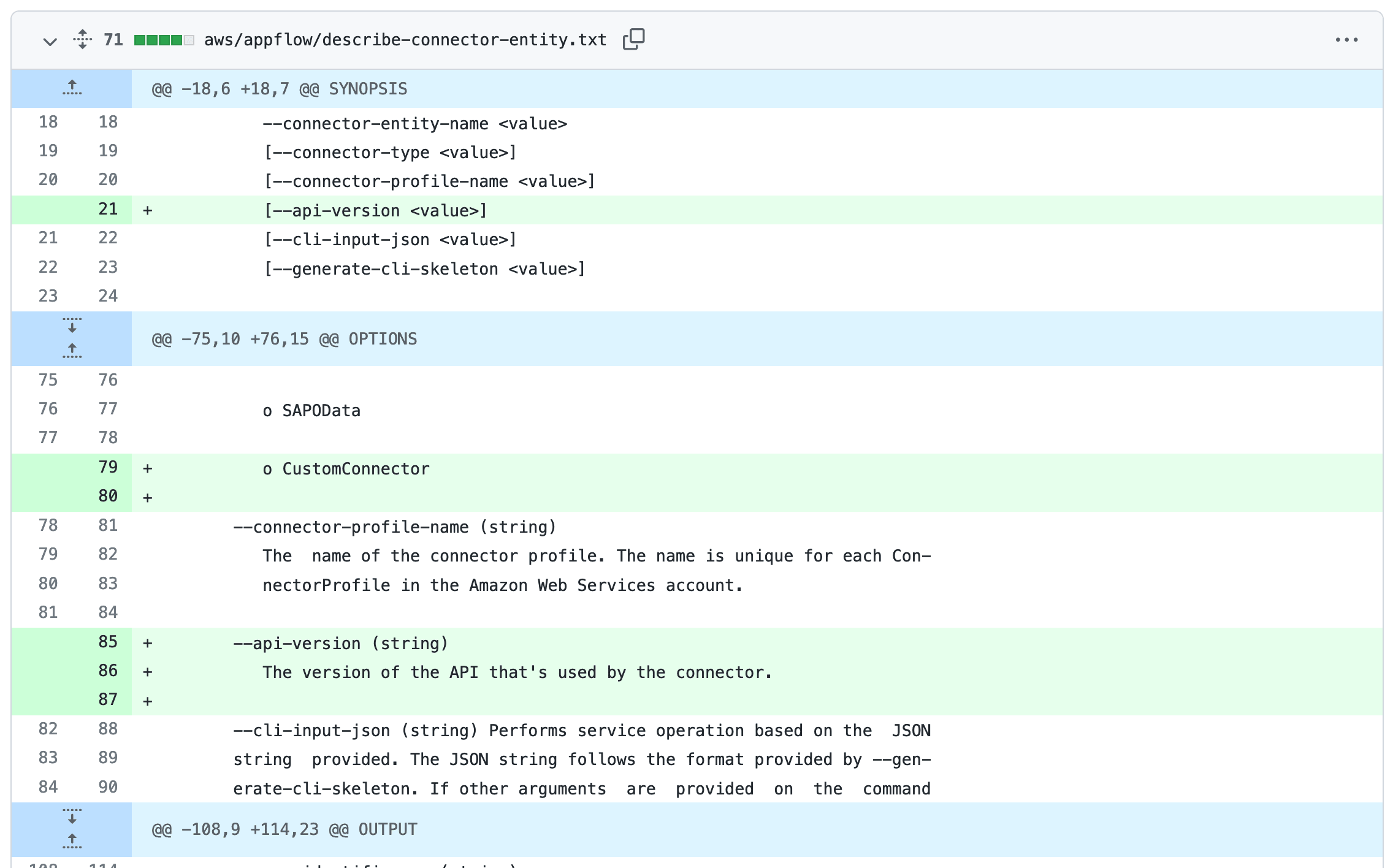

I’ve been experimenting with a new variant of Git scraping this week which I’m calling Help scraping. The key idea is to track changes made to CLI tools over time by recording the output of their --help commands in a Git repository.
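A rough sketch of the recording step, assuming Python and a tool that answers `--help`. The `record_help` function and its file naming scheme are hypothetical; committing the resulting files is left to Git.

```python
import subprocess
from pathlib import Path


def record_help(command: list[str], output_dir: Path) -> Path:
    """Run `command --help` and save its output to a text file."""
    result = subprocess.run(
        command + ["--help"],
        capture_output=True,
        text=True,
        check=False,  # some tools exit non-zero after printing help
    )
    output_dir.mkdir(parents=True, exist_ok=True)
    # Name the file after the command, e.g. ["git", "log"] -> git-log.txt
    name = "-".join(Path(part).name for part in command)
    target = output_dir / f"{name}.txt"
    # A few tools print help on stderr, so fall back to it if stdout is empty.
    target.write_text(result.stdout or result.stderr)
    return target


if __name__ == "__main__":
    import sys

    print(record_help([sys.executable], Path("help-output")))
```

Running this on a schedule and committing the output directory gives a diff every time a tool gains, loses, or rewords an option.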

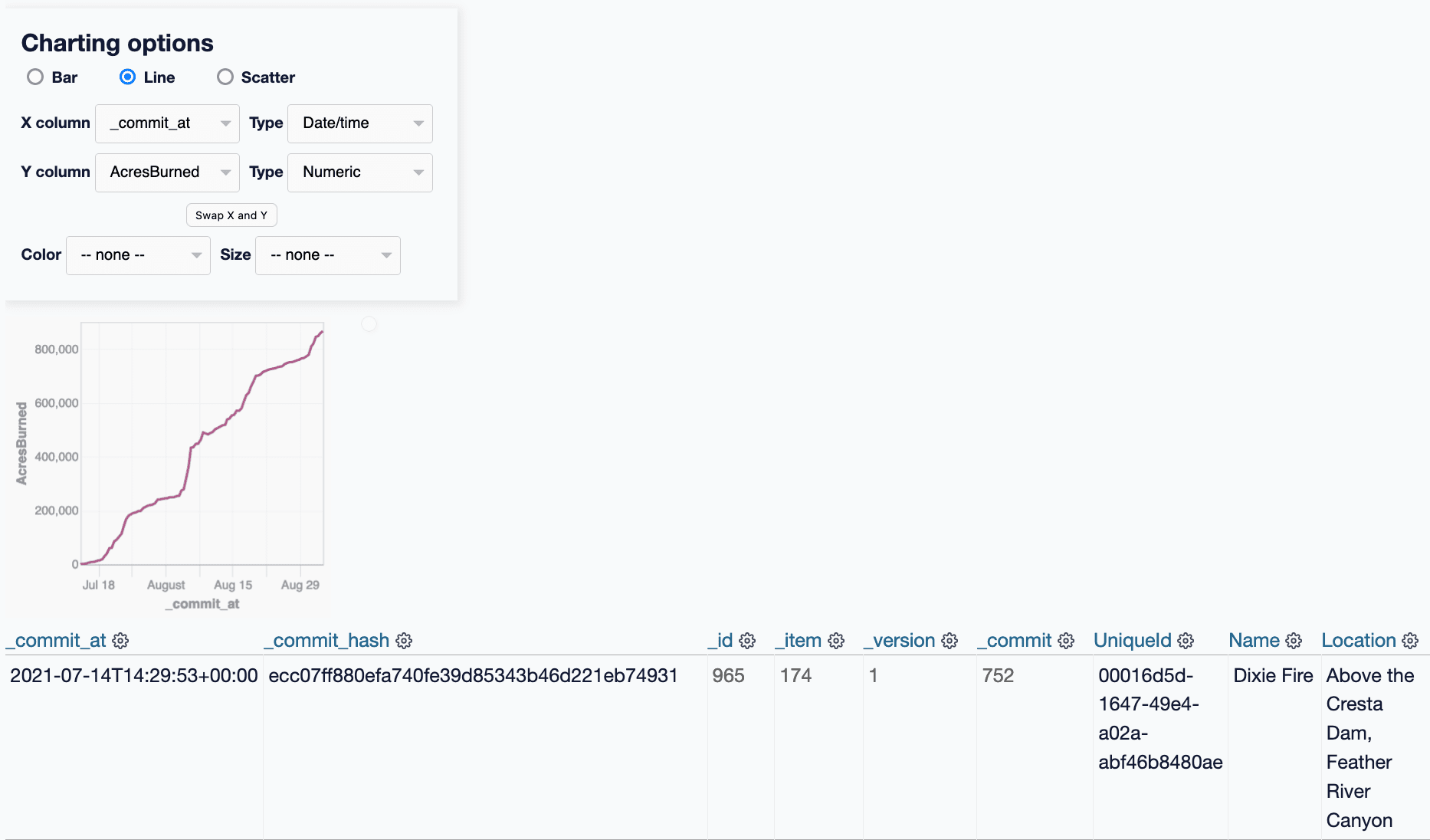

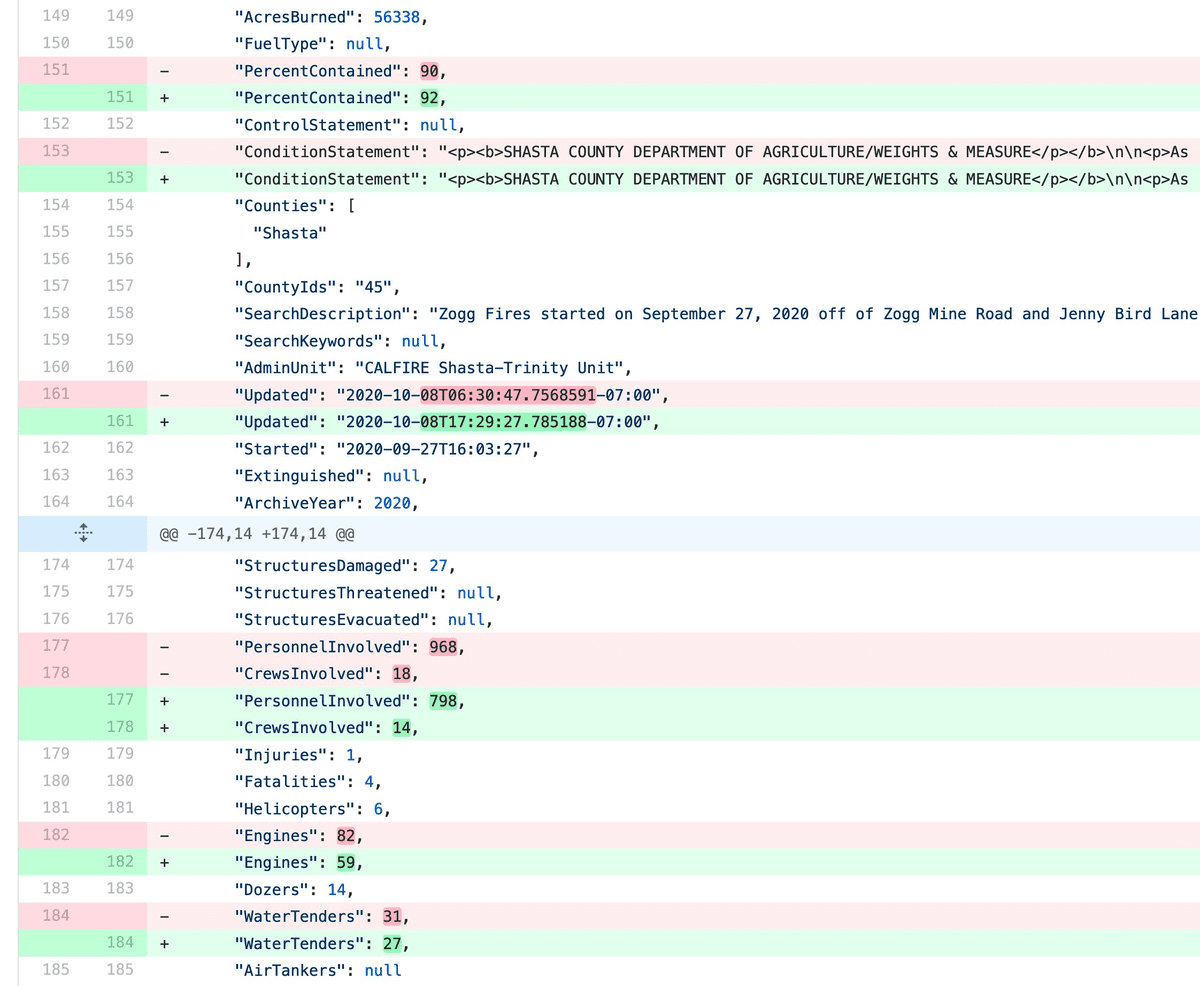

git-history: a tool for analyzing scraped data collected using Git and SQLite

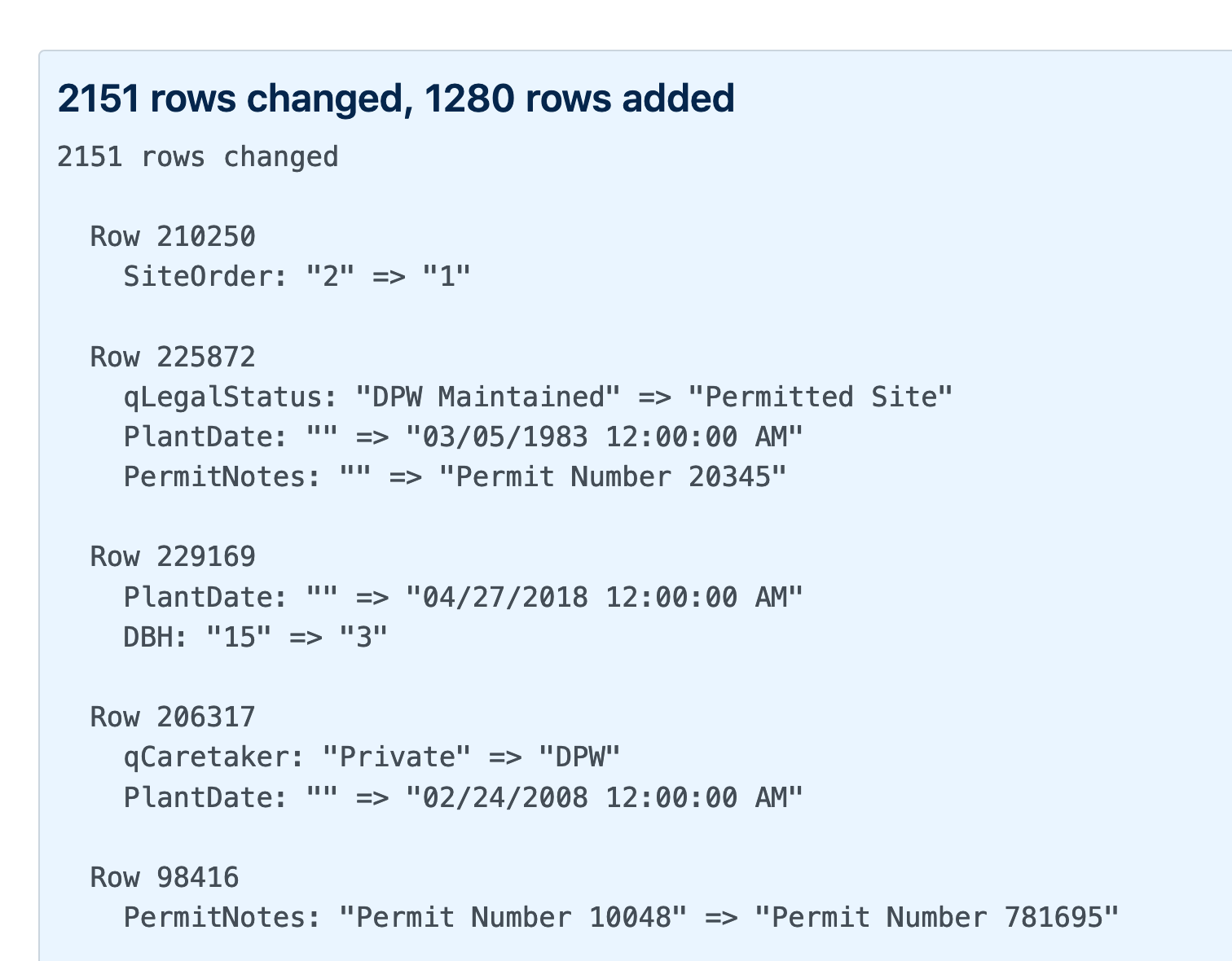

I described Git scraping last year: a technique for writing scrapers where you periodically snapshot a source of data to a Git repository in order to record changes to that source over time.
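The snapshot step can be sketched as follows, assuming Python and a working `git` executable. Both function names are hypothetical, and `commit_snapshot` assumes `repo` is an existing Git checkout with a commit identity configured.

```python
import subprocess
from pathlib import Path


def write_snapshot(path: Path, content: bytes) -> bool:
    """Write content to path; return True if the file is new or changed."""
    if path.exists() and path.read_bytes() == content:
        return False
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(content)
    return True


def commit_snapshot(repo: Path, path: Path, message: str) -> None:
    """Stage and commit one file; path should be absolute or relative to repo."""
    subprocess.run(["git", "add", str(path)], cwd=repo, check=True)
    subprocess.run(["git", "commit", "-m", message], cwd=repo, check=True)


if __name__ == "__main__":
    repo = Path(".")
    # Placeholder content; a real scraper would fetch this from the data source.
    if write_snapshot(repo / "data.json", b"{}\n"):
        commit_snapshot(repo, Path("data.json"), "Latest data")
```

Committing only when the content has changed keeps the history down to one commit per real change to the source.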

[... 2,002 words]

Git scraping: track changes over time by scraping to a Git repository

Git scraping is the name I’ve given a scraping technique that I’ve been experimenting with for a few years now. It’s really effective, and more people should use it.

[... 963 words]

Weeknotes: Rocky Beaches, Datasette 0.48, a commit history of my database

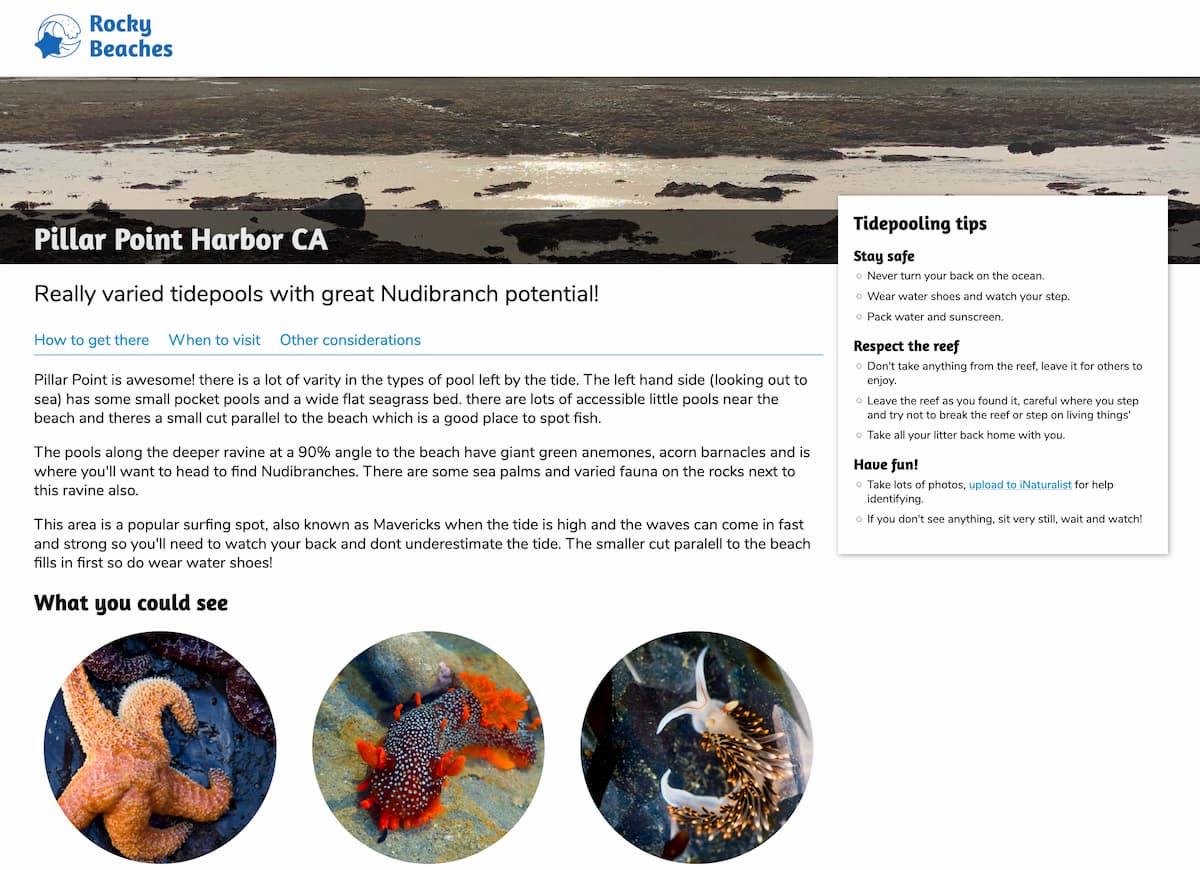

This week I helped Natalie launch Rocky Beaches, shipped Datasette 0.48 and several releases of datasette-graphql, upgraded the CSRF protection for datasette-upload-csvs and figured out how to get a commit log of changes to my blog by backing up its database to a GitHub repository.

Weeknotes: cookiecutter templates, better plugin documentation, sqlite-generate

I spent this week spreading myself between a bunch of smaller projects, and finally getting familiar with cookiecutter. I wrote about my datasette-plugin cookiecutter template earlier in the week; here’s what else I’ve been working on.

[... 703 words]

Weeknotes: Archiving coronavirus.data.gov.uk, custom pages and directory configuration in Datasette, photos-to-sqlite

I mainly made progress on three projects this week: Datasette, photos-to-sqlite and a cleaner way of archiving data to a git repository.

[... 1,132 words]

Goodbye Zeit Now v1, hello datasette-publish-now—and talking to myself in GitHub issues

This week I’ve been mostly dealing with the finally announced shutdown of Zeit Now v1. And having long-winded conversations with myself in GitHub issues.

[... 2,050 words]

Generating a commit log for San Francisco’s official list of trees

San Francisco has a neat open data portal (as do an increasingly large number of cities these days). For a few years my favourite file on there has been Street Tree List, a list of all 190,000 trees in the city maintained by the Department of Public Works.

[... 1,051 words]

djangopeople.net on GitHub. I’ve released the source code for Django People, the geographical community site developed last year by myself and Natalie Downe (it hasn’t otherwise been touched since April last year, so it needs porting to Django 1.1). If you want a new feature on the site, implement it and I’ll see about merging it in.